Monitoring breathing is important for a plethora of applications, including, but not limited to, baby monitoring, sleep monitoring, and elderly care. This paper presents a way to fuse vision-based and RF-based modalities for the task of estimating the breathing rate of a human. The modalities used are the Intel RealSense F200 RGB and depth (RGBD) sensor and an ultra-wideband (UWB) radar. RGB image-based features and their corresponding image coordinates are detected on the human body and tracked using the well-known Lucas-Kanade optical flow algorithm. The depth at these coordinates is also tracked. The signal received by the synchronized radar is processed to extract the breathing pattern. All of these signals are then passed to a harmonic signal detector based on a generalized likelihood ratio test (GLRT). Finally, a spectral estimation algorithm based on the reformed Pisarenko algorithm tracks the fundamental breathing frequencies in real time, and these are fused into a single optimal breathing rate in a maximum likelihood fashion. We tested this multimodal setup on 14 human subjects and report a maximum error of $0.5$ breaths per minute (BPM) compared with the true breathing rate.
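The last two processing steps (frequency tracking and fusion) can be sketched as follows, under clearly stated assumptions. The paper uses the *reformed* Pisarenko algorithm. The code below instead implements the classic Pisarenko harmonic decomposition for a single real tone, whose closed form $\cos\hat\omega = \big(r_2 + \sqrt{r_2^2 + 8r_1^2}\big)/(4r_1)$ needs only the lag-1 and lag-2 autocorrelations $r_1, r_2$. It therefore tracks one fundamental and ignores harmonics. The fusion step assumes that each modality yields an unbiased, independent, Gaussian rate estimate with a known variance; under that assumption, the maximum likelihood estimate is the inverse-variance weighted mean. The function names, the 2 Hz sample rate, the noise levels, and the per-modality variances are all hypothetical.

```python
import math
import random


def autocorr(x, lag):
    """Sample autocorrelation r(lag): average of x[n] * x[n + lag]."""
    n = len(x) - lag
    return sum(x[i] * x[i + lag] for i in range(n)) / n


def pisarenko_single_tone(x, fs):
    """Estimate the frequency (Hz) of ONE real sinusoid in white noise.

    Classic Pisarenko harmonic decomposition for a single real tone, in the
    closed form cos(w) = (r2 + sqrt(r2**2 + 8 * r1**2)) / (4 * r1). The lag-1
    and lag-2 autocorrelations are not biased by white noise.
    (Hypothetical helper; not the reformed variant used in the paper.)
    """
    mean = sum(x) / len(x)
    xc = [v - mean for v in x]            # remove the DC level (e.g. depth offset)
    r1, r2 = autocorr(xc, 1), autocorr(xc, 2)
    if abs(r1) < 1e-12:                   # cos(w) = 0, i.e. w = pi/2
        return fs / 4.0
    cos_w = (r2 + math.sqrt(r2 * r2 + 8.0 * r1 * r1)) / (4.0 * r1)
    cos_w = max(-1.0, min(1.0, cos_w))    # guard against noise pushing |cos| > 1
    return math.acos(cos_w) * fs / (2.0 * math.pi)


def ml_fuse(estimates, variances):
    """Maximum likelihood fusion of independent Gaussian estimates.

    Each estimate is assumed unbiased with a known variance; the ML estimate
    is then the inverse-variance weighted mean. Returns (fused, fused_variance).
    """
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    fused = sum(w * e for w, e in zip(weights, estimates)) / total
    return fused, 1.0 / total


if __name__ == "__main__":
    fs, true_hz = 2.0, 0.3                # hypothetical rate (Hz); 0.3 Hz = 18 BPM
    random.seed(1)
    noise = {"rgb": 0.05, "depth": 0.1, "radar": 0.02}       # hypothetical levels
    variances = {"rgb": 0.2, "depth": 0.4, "radar": 0.1}     # hypothetical, BPM^2
    bpm = {}
    for name, sigma in noise.items():
        x = [math.cos(2 * math.pi * true_hz * n / fs) + random.gauss(0.0, sigma)
             for n in range(120)]                             # 60 s window
        bpm[name] = 60.0 * pisarenko_single_tone(x, fs)
    fused, var = ml_fuse([bpm[k] for k in noise], [variances[k] for k in noise])
    print(bpm, round(fused, 2), round(var, 3))
```

In practice, the per-modality variances would have to be estimated, for example from the spread of recent estimates. The fused variance $1/\sum_i \sigma_i^{-2}$ is never larger than the smallest individual variance. Under these assumptions, adding a noisier modality therefore cannot make the fused estimate worse.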

Introduction

Vital-sign extraction has been a research topic in both the computer vision and the radar research communities. Computer vision-based algorithms approach this problem from two different angles. The first comprises color-based algorithms [1,2,3,4], which capture the minute color variations of human skin during a heartbeat [5]. Color-based algorithms are primarily used for heart rate estimation, which is beyond the scope of this paper. The second is known as motion magnification [6], which magnifies minute movements in a video. It is used mainly for heart rate estimation but can also be used for breathing rate estimation.

Intel’s RealSense camera was used in [7] to estimate the heart rate of a human subject. The infrared (IR) channel was used to estimate the heart rate, and the depth channel was used to estimate the pose of the head. The use of optical flow for breathing estimation was presented in [8]; however, neither the detailed algorithm nor its performance was reported. Finally, in [9], we describe in detail an algorithm that reliably extracts breathing from RGB video alone, in real time.
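Optical-flow breathing estimation rests on tracking small image windows between frames. As an illustration only (not the algorithm of [8] or [9]), the sketch below estimates the displacement of one window with the basic Lucas-Kanade least-squares step. It assumes brightness constancy and a small shift, accumulates the 2×2 gradient matrix over the window, and solves for the displacement $(u, v)$. The sketch is single-level and single-iteration; practical trackers such as OpenCV's `cv2.calcOpticalFlowPyrLK` add image pyramids and iterative refinement. The function name and the synthetic test pattern are hypothetical.

```python
import math


def lucas_kanade_window(prev, curr, cx, cy, half=7):
    """Single-level, single-iteration Lucas-Kanade flow for one feature window.

    prev, curr: grayscale frames as lists of rows (img[y][x]).
    (cx, cy): window centre; it must lie at least half + 1 px inside the frame.
    Returns (u, v), the estimated displacement in pixels along x and y.
    (Hypothetical helper for illustration.)
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(cy - half, cy + half + 1):
        for x in range(cx - half, cx + half + 1):
            ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0   # spatial gradients
            iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0   # (central differences)
            it = curr[y][x] - prev[y][x]                   # temporal difference
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        raise ValueError("window lacks texture in two directions (aperture problem)")
    # Least-squares solution of [sxx sxy; sxy syy] [u, v]^T = -[sxt, syt]^T.
    u = (sxy * syt - syy * sxt) / det
    v = (sxy * sxt - sxx * syt) / det
    return u, v


if __name__ == "__main__":
    # Synthetic textured frame, then the same pattern shifted by (0.5, -0.3) px.
    def frame(dx, dy):
        return [[math.sin(0.3 * (x - dx)) + math.cos(0.2 * (y - dy))
                 for x in range(80)] for y in range(80)]

    u, v = lucas_kanade_window(frame(0.0, 0.0), frame(0.5, -0.3), 40, 40, half=15)
    print(f"u = {u:.3f} px, v = {v:.3f} px")
```

One possible breathing waveform is obtained by accumulating the vertical component $v$ of windows on the chest over successive frames.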

Recently, the monitoring of biological signals using micro-Doppler (µDoppler) has attracted the attention of the research community. Respiration rate extraction with a pulse-Doppler architecture is presented in [10]. The wavelet transform was used in [11] to overcome the limited frequency resolution of the discrete Fourier transform (DFT), and in [12], the chirp Z transform was applied to impulse-radio UWB (IR-UWB) radar echoes to extract the respiration rate. The same transform was used in [13], together with an analytical model, for the remote extraction of both respiration and heart rate. Moreover, the authors of [13] verified a model in which the thorax and the heart are treated as vibrating scatterers. In this model, the total µDoppler return is a superposition of two sinusoids with different frequencies and amplitudes: the breathing frequency is lower than the heart rate, and the breathing amplitude is much larger.
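The two-sinusoid model and the DFT resolution issue can be illustrated together. The sketch below uses hypothetical values: a 20 Hz slow-time rate, a 20 s window, breathing at 0.27 Hz with amplitude 1.0, and a heartbeat at 1.2 Hz with amplitude 0.1. With $N = 400$ samples, the DFT bins are $f_s/N = 0.05$ Hz (3 BPM) apart. The sketch evaluates the same spectrum on a 0.005 Hz grid between 0.1 and 2.0 Hz, which is what a chirp Z transform computes on that arc of the unit circle, and so locates each peak on a ten times finer grid. For clarity, the points are computed directly rather than with Bluestein's algorithm; SciPy offers a fast implementation as `scipy.signal.czt` and `scipy.signal.zoom_fft`. The function names are hypothetical.

```python
import cmath
import math


def zoom_spectrum(x, fs, f_lo, f_hi, m):
    """|DTFT| of x at m equally spaced frequencies from f_lo to f_hi (Hz).

    These are the points a chirp Z transform evaluates on that arc of the
    unit circle; here they are computed directly in O(len(x) * m) operations
    (a real CZT obtains them faster with Bluestein's FFT-based algorithm).
    """
    step = (f_hi - f_lo) / (m - 1)
    freqs = [f_lo + k * step for k in range(m)]
    mags = []
    for f in freqs:
        mags.append(abs(sum(v * cmath.exp(-2j * math.pi * f * n / fs)
                            for n, v in enumerate(x))))
    return freqs, mags


def peak_in_band(freqs, mags, f_lo, f_hi):
    """Frequency (Hz) of the largest spectral magnitude inside [f_lo, f_hi]."""
    return max((m, f) for f, m in zip(freqs, mags) if f_lo <= f <= f_hi)[1]


if __name__ == "__main__":
    fs, n = 20.0, 400                      # hypothetical slow-time rate, 20 s window
    # Two-sinusoid model: large, slow breathing term plus small, faster heartbeat.
    x = [1.0 * math.cos(2 * math.pi * 0.27 * k / fs)
         + 0.1 * math.cos(2 * math.pi * 1.2 * k / fs + 0.5) for k in range(n)]
    freqs, mags = zoom_spectrum(x, fs, 0.1, 2.0, 381)   # 0.005 Hz grid
    print("DFT bin spacing:", fs / n, "Hz")
    print("breathing peak:", peak_in_band(freqs, mags, 0.1, 0.7), "Hz")
    print("heartbeat peak:", peak_in_band(freqs, mags, 0.8, 2.0), "Hz")
```

The finer grid reduces the picket-fence error in locating a peak. It does not narrow the main lobe, whose width is still set by the 20 s observation window.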

Radar Measurement Setup

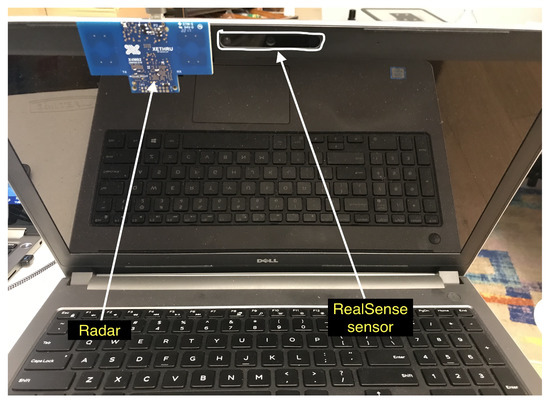

The radar measurement setup is shown in Figure 1 and Figure 2. We use a XeThru X4 IR-UWB impulse radar module that transmits toward a human subject (hereinafter, the “subject”). The raw data are collected on a PC through a USB interface and fed to the algorithm, which runs in real time. The radar is synchronized with the Intel® RealSense™ F200 RGBD sensor. The radar operating parameters are given in Table 1. This setup costs approximately USD 400; the cost can be reduced substantially through dedicated system design and volume discounts.
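This section states only that raw frames reach the PC over USB and that the radar is synchronized with the RealSense sensor; it does not say how either step is done. The following is a hedged sketch under several assumptions. First, each raw frame has already been reduced to a list of per-range-bin amplitudes (hypothetical preprocessing; no XeThru driver calls are shown). Second, the subject's chest lies in the range bin whose amplitude varies most over slow time (a common heuristic, not necessarily the paper's). Third, both streams carry host timestamps, so the radar slow-time signal can be linearly interpolated onto the camera frame times. The function names `breathing_bin` and `resample_linear`, and all rates, are illustrative.

```python
import bisect
import math


def breathing_bin(frames):
    """Index of the range bin whose amplitude varies most across slow time.

    frames: list of radar frames, each a list of per-range-bin amplitudes.
    (Hypothetical helper; max-variance bin selection is an assumed heuristic.)
    """
    def slow_time_variance(b):
        col = [frame[b] for frame in frames]
        mean = sum(col) / len(col)
        return sum((c - mean) ** 2 for c in col) / len(col)

    return max(range(len(frames[0])), key=slow_time_variance)


def resample_linear(t_src, y_src, t_dst):
    """Linearly interpolate the series (t_src, y_src) at the times t_dst.

    t_src must be strictly increasing; times outside its span are clamped to
    the first/last sample. (Hypothetical timestamp-based alignment.)
    """
    out = []
    for t in t_dst:
        if t <= t_src[0]:
            out.append(y_src[0])
        elif t >= t_src[-1]:
            out.append(y_src[-1])
        else:
            j = bisect.bisect_right(t_src, t) - 1        # t_src[j] <= t < t_src[j+1]
            a = (t - t_src[j]) / (t_src[j + 1] - t_src[j])
            out.append((1.0 - a) * y_src[j] + a * y_src[j + 1])
    return out


if __name__ == "__main__":
    radar_fps, cam_fps = 17.0, 30.0                        # hypothetical rates (Hz)
    radar_t = [k / radar_fps for k in range(340)]          # 20 s of radar frames
    # Hypothetical frames: 50 range bins, chest at bin 12 moving at 0.25 Hz.
    frames = [[1.0 + (0.5 * math.sin(2 * math.pi * 0.25 * t) if b == 12
                      else 0.01 * math.cos(b + k))
               for b in range(50)] for k, t in enumerate(radar_t)]
    b = breathing_bin(frames)
    slow_time = [frame[b] for frame in frames]
    cam_t = [k / cam_fps for k in range(600)]              # camera timestamps
    radar_on_cam = resample_linear(radar_t, slow_time, cam_t)
    print("breathing range bin:", b, "| aligned samples:", len(radar_on_cam))
```

Interpolating onto the camera clock gives every modality a common time base, which the later detection and fusion steps need.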

Figure 1. XeThru X4 radar mounted on a laptop that has the RealSense sensor on it.

Figure 2. The subject under test sits on this chair in front of the radar and the RealSense sensor.

Table 1. Radar parameters.

This entry is adapted from the peer-reviewed paper 10.3390/s20041229