Spinal metastasis is the most common malignant disease of the spine, and its early diagnosis and treatment are important to prevent complications and improve quality of life. The main clinical applications of AI techniques include image processing, diagnosis, decision support, treatment assistance and prognosis prediction. In spinal oncology, artificial intelligence technologies have achieved relatively good performance and hold considerable potential to aid clinicians, including by enhancing work efficiency and reducing adverse events.

1. Introduction

Spinal metastasis is a malignant process along the spine that is up to 35 times more common than any other primary malignant disease along the spine [1], and the spine is the third most common location for metastases [2]. Spinal metastasis can severely impair quality of life through complications such as pain due to fractures, spinal cord compression, neurological deficits [3,4], reduced mobility, bone marrow aplasia and hypercalcemia, the last of which leads to symptoms such as constipation, polyuria, polydipsia, fatigue and even cardiac arrhythmias and acute renal failure [5,6]. Therefore, the timely detection, diagnosis and optimal treatment of spinal metastases are essential to reduce complications and to improve patients’ quality of life [7].

Radiological investigations play a central role in the diagnosis and treatment planning of spinal metastases. Plain radiographs are a quick and inexpensive first-line investigation, although advanced modalities such as computed tomography (CT), magnetic resonance imaging (MRI), positron emission tomography (PET) and bone scintigraphy are all superior for the detection and classification of spinal metastases [8]. Each imaging modality has its own advantages in the assessment of spinal metastasis. CT, with a sensitivity and specificity of 79.2% and 92.3%, respectively, for the detection of spinal metastases [9], can be used to guide interventional procedures and also provides systemic staging [10]. Compared to CT, MRI has a higher sensitivity and specificity of 94.1% and 94.2%, respectively, for spinal metastasis detection [9], and is radiation-free. MRI is the modality of choice for assessing metastatic spread to the bone marrow and associated epidural soft tissue extension [11,12]. 18F-FDG (fluorodeoxyglucose) PET has a sensitivity and specificity of 89.8% and 63.3%, respectively, although sensitivity varies among different histologies due to their innate metabolic activity [9,13]. Bone scintigraphy has a sensitivity and specificity of 80.0% and 92.8%, respectively, and is the most widely available technique for the study of bone metastatic disease [8,9].
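The figures quoted above can be compared on a single scale by converting each sensitivity/specificity pair into likelihood ratios. The following is a minimal sketch, not part of the original review: the function names are illustrative, and the only inputs are the percentages cited in the paragraph above [9].

```python
# Sensitivity and specificity (as fractions) quoted in the text above [9].
REPORTED = {
    "CT": (0.792, 0.923),
    "MRI": (0.941, 0.942),
    "18F-FDG PET": (0.898, 0.633),
    "Bone scintigraphy": (0.800, 0.928),
}


def likelihood_ratios(sensitivity, specificity):
    """Return (LR+, LR-) for a test with the given sensitivity and specificity."""
    lr_positive = sensitivity / (1.0 - specificity)
    lr_negative = (1.0 - sensitivity) / specificity
    return lr_positive, lr_negative


def summarise(reported=REPORTED):
    """Map each modality name to its (LR+, LR-) pair."""
    return {name: likelihood_ratios(se, sp) for name, (se, sp) in reported.items()}


if __name__ == "__main__":
    for name, (lr_pos, lr_neg) in summarise().items():
        print(f"{name}: LR+ = {lr_pos:.1f}, LR- = {lr_neg:.2f}")
```

On these inputs, MRI yields the largest positive likelihood ratio and 18F-FDG PET the smallest, which mirrors the low PET specificity reported above. Likelihood ratios are only one possible summary; they do not account for availability, cost or radiation exposure.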

Recently, artificial intelligence (AI) techniques, although still preliminary, have demonstrated remarkable progress in medical imaging applications, especially in the field of oncology [14]. The two most popular machine learning techniques are radiomics-based feature analysis and convolutional neural networks (CNNs). Radiomics-based techniques require extraction of several handcrafted features, which are then selected to provide a training set for deep learning-based image classification [15]. One drawback of the technique is that the selected handcrafted features remain limited to the knowledge of the radiologist or clinician, which could reduce the accuracy of the developed algorithm [16]. In contrast, deep learning techniques can directly learn important imaging features for classification without the need for handcrafted feature selection. This typically involves convolutional neural networks, and these techniques have been shown in the literature to improve prediction accuracy for lesion detection, segmentation and treatment response in oncological imaging [17,18,19].

2. Artificial Intelligence Methods for Spinal Metastasis Imaging

2.1. Artificial Intelligence (AI)

Artificial Intelligence (AI)

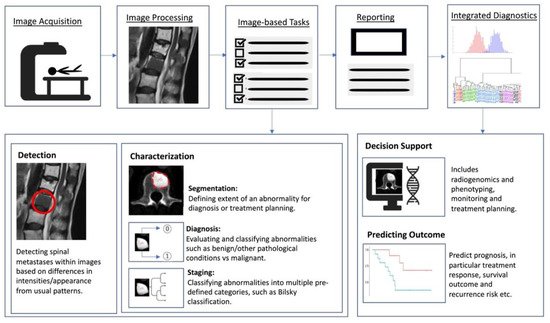

Artificial intelligence (AI) is a term referring to a machine’s computational ability to perform tasks that are comparable to those executed by humans. This is done by utilising unique inputs and then generating outputs with high added value [37]. With recent advances in medical imaging and ever-increasing amounts of digital image and report data, worldwide interest in AI for medical imaging continues to grow [38]. AI and computer-aided diagnostic (CAD) systems were initially intended to assist clinicians or radiologists in the detection of tumours or lesions, which in turn increases efficiency, improves detection and reduces error rates [39]. As a result, efforts are ongoing to enhance the diagnostic ability of AI and its efficiency so that it can be successfully translated into clinical practice [40]. With the advent of artificial neural networks, which are a class of architectures loosely based on how the human brain works [41], several computational learning models (mainly machine learning (ML) and deep learning (DL) algorithms) have been introduced and are largely responsible for the growth of AI in radiology. In general, the clinical applications of AI (Figure 2) in the oncology imaging workflow can be broadly grouped into three categories: (1) detection of abnormalities; (2) characterisation of abnormalities, which includes image processing steps such as segmentation, differentiation and classification; and (3) integrated diagnostics, which include decision support for treatment decisions and planning, treatment response and prognosis prediction.

Figure 2. Schematic outline showing where AI implementation can optimise the radiology workflow. The workflow comprises the following steps: image acquisition, image processing, image-based tasks, reporting, and integrated diagnostics. AI can add value to the image-based clinical tasks, including the detection of abnormalities; characterisation of objects in images using segmentation, diagnosis and staging; and integrated diagnostics including decision support for treatment planning and prognosis prediction.

Machine Learning (ML)

Machine learning is a field of AI in which models are trained for prediction using known datasets, from which the machine “learns”. The developed model then applies its knowledge to perform diagnostic tasks in unknown datasets [42]. The application of ML requires data inputs that have either been labelled by human experts (typically radiologists) or been extracted directly from the data using computational methods, and the main learning approaches are supervised and unsupervised learning. Supervised machine learning models rely on labelled input data to learn the relationship with output training data [43], and are often used to classify data or make predictions. On the other hand, unsupervised machine learning models use unlabelled raw training data to learn the relationships, patterns and inherent trends within the dataset [44,45]. Unsupervised models are mainly used to obtain an efficient representation of the initial dataset (e.g., densities, distances or clustering through the statistical properties of the dataset) and to better understand relationships or patterns within it [46,47]. Such a new representation can be an initial step prior to training a supervised model (e.g., identifying anomalies and outliers within the datasets), which could improve the performance of the supervised model [48,49,50].
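The distinction between the two learning modes can be illustrated with a small sketch. Everything here is an assumption made for illustration: the six two-dimensional points and their labels are synthetic, nearest-centroid classification stands in for a supervised model, and a basic k-means loop stands in for an unsupervised one. None of it is taken from the cited studies.

```python
def mean_point(points):
    """Component-wise mean of a list of equal-length tuples."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))


def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def fit_nearest_centroid(features, labels):
    """Supervised: use the expert labels to compute one centroid per class."""
    groups = {}
    for x, y in zip(features, labels):
        groups.setdefault(y, []).append(x)
    return {y: mean_point(pts) for y, pts in groups.items()}


def predict(centroids, x):
    """Assign x to the class whose centroid is closest."""
    return min(centroids, key=lambda y: sq_dist(centroids[y], x))


def kmeans(features, k=2, iterations=10):
    """Unsupervised: group unlabelled points into k clusters (Lloyd's algorithm)."""
    centres = list(features[:k])  # naive initialisation: the first k points
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for x in features:
            nearest = min(range(k), key=lambda j: sq_dist(centres[j], x))
            clusters[nearest].append(x)
        centres = [mean_point(c) if c else centres[j] for j, c in enumerate(clusters)]
    assignment = [min(range(k), key=lambda j: sq_dist(centres[j], x)) for x in features]
    return centres, assignment


# Synthetic, hypothetical feature vectors and labels (not real patient data).
FEATURES = [(1.0, 1.2), (4.0, 4.2), (0.8, 1.0), (4.1, 3.9), (1.1, 0.9), (3.8, 4.0)]
LABELS = ["non-metastatic", "metastatic"] * 3

if __name__ == "__main__":
    model = fit_nearest_centroid(FEATURES, LABELS)
    print(predict(model, (3.9, 4.1)))   # uses the labels seen during training
    print(kmeans(FEATURES, k=2)[1])     # cluster indices found without labels
```

The supervised model returns a named class because it was given labels, whereas the clustering step returns only anonymous group indices that a human or a downstream model must still interpret.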

Deep Learning (DL)

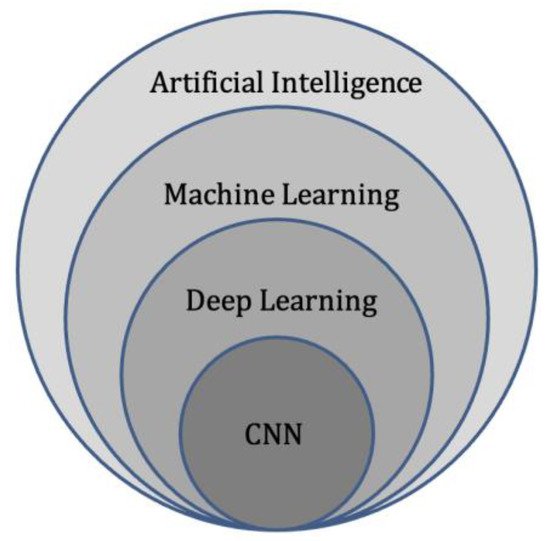

Deep learning represents a subdivision of machine learning (Figure 3), and is modelled on the neuronal architecture within the brain. The technique leverages artificial neural networks, which involve several layers, to solve complex medical imaging challenges [51]. The multi-layered structure enables the deep learning model or algorithm to learn from the imaging datasets and make predictions on unseen imaging data [52]. These deep learning techniques can provide accurate image classification (disease present/absent, or severity of disease), segmentation (pixel-based), and detection capability [53].

Figure 3. Diagram of artificial intelligence hierarchy. Machine learning lies within the field of artificial intelligence and is an area of study that enables computers to learn without explicit knowledge or programming. Within machine learning, deep learning is another area of study that enables computation of neural networks involving multiple layers. Finally, convolutional neural networks (CNN) are an important subset of deep learning, commonly applied to analyse medical images.

Radiomics

Radiomics is a relatively new branch of machine learning that involves converting medical images containing important information related to tumour features into measurable and quantifiable data [55]. This information can then aid clinicians in the assessment of tumours by providing additional data about tumour behaviour and pathophysiology beyond that of current subjective visual interpretation (inferable by human eyes) [56,57], such as tumour subtyping and grading [58]. Combined with clinical and qualitative imaging data, radiomics has been shown to guide and improve medical decision making [59], and can be used to aid disease prediction, provide prognostic information and support treatment response assessment [58]. In general, the workflow for deriving a radiomics model can be divided into several steps (Figure 4): data selection (input), radiological imaging evaluation and segmentation, image feature extraction in the regions of interest (ROIs) and exploratory analysis followed by modelling [55]. Depending on the type of imaging modality, the acquisition, technical specifications, software, segmentation of the ROIs, image feature extraction and structure of the predictive algorithm are all different and subject to several factors [60]. Machine learning methods, including random decision forest (an ensemble learning method for classifying data using decision trees), can then be used to validate and further evaluate the classification accuracy of the set of predictors [61]. These can then be applied in a clinical setting to potentially improve diagnostic accuracy and the prediction of survival post-treatment [62,63].

Figure 4. Diagram showing the general framework and main steps for radiomics, namely data selection (input), medical imaging evaluation and segmentation, feature extraction in the regions of interest (ROIs), exploratory analysis and modelling.

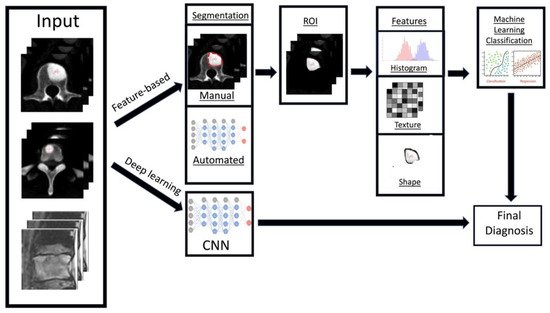

There are two key radiomics techniques, namely handcrafted-feature based and deep learning-based analysis [64]. Firstly, handcrafted-feature radiomics involves extraction of features from an area of interest (typically segmented). These features can be placed into groups based on shape [65], histogram criteria (first-order statistics) [66], textural-based criteria (second-order statistics) [39] and other higher-order statistical criteria [67]. Following this step, machine learning models can be developed to provide clinical predictions, including survival/prognostic information based on the handcrafted features [62,68,69]. The models are also assessed on validation datasets to review their efficiency and sensitivity.
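The first-order and second-order feature groups described above can be made concrete with a small sketch. The assumptions are as follows: the region of interest is a tiny 2D grid of already discretised integer grey levels, the co-occurrence counts use only horizontally adjacent pixel pairs in one direction, and the function names are illustrative. Dedicated radiomics toolkits compute many more features with standardised definitions.

```python
import math
from collections import Counter


def first_order_features(values):
    """Histogram-based (first-order) statistics of the grey levels in an ROI."""
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / n
    std = math.sqrt(variance)
    skewness = 0.0 if std == 0 else sum((v - mean) ** 3 for v in values) / (n * std ** 3)
    counts = Counter(values)
    entropy = -sum((c / n) * math.log2(c / n) for c in counts.values())
    return {"mean": mean, "variance": variance, "skewness": skewness, "entropy": entropy}


def glcm_contrast(image):
    """Texture (second-order) feature: contrast of a simplified, one-directional
    grey-level co-occurrence matrix built from horizontally adjacent pixels."""
    pairs = Counter()
    for row in image:
        for left, right in zip(row, row[1:]):
            pairs[(left, right)] += 1
    total = sum(pairs.values())
    return sum(((a - b) ** 2) * c for (a, b), c in pairs.items()) / total


if __name__ == "__main__":
    # Hypothetical 4x4 ROI with grey levels 0..3 (synthetic data).
    roi = [[0, 0, 1, 1], [0, 0, 1, 1], [0, 2, 2, 2], [2, 2, 3, 3]]
    flat = [v for row in roi for v in row]
    print(first_order_features(flat))
    print(glcm_contrast(roi))
```

First-order features ignore where pixels sit in the ROI, while the co-occurrence contrast depends on which grey levels are neighbours, which is why the two groups can carry complementary information.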

In contrast to handcrafted-feature radiomics, deep learning techniques rely on convolutional neural networks (CNNs) or other architectures [70] to identify the most pertinent radiological imaging features without relying on prior feature descriptions. CNNs provide automated extraction of the most important features from the radiological imaging data using a cascading process, which can then be used for pattern recognition and training [71]. The generated dominant imaging features can undergo further processing, or exit the neural network and be used for machine learning model generation using algorithms similar to the feature-based radiomics method before validation. The main drawback of deep learning-based radiomics is the requirement for much larger training datasets, since the features must be learned as part of the initial process, whereas in feature-based radiomics the features are manually selected for analysis [72]. With recent advances in AI, this limitation can be circumvented through transfer learning, which is a technique that uses neural networks that were pre-trained for a separate but closely related purpose [73]. By leveraging the network’s prior knowledge, transfer learning reduces the computational demand and the amount of training data required, but can still produce reliable performance.
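The basic operation behind the automated feature extraction in a CNN is a small kernel sliding over the image. The sketch below is an illustration under stated assumptions only: the 4x4 image is synthetic, the kernel weights are set by hand (a trained CNN learns them from data), and the operation is the cross-correlation form used by most deep learning libraries.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image without padding and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Element-wise rectified linear unit: negative responses are set to zero."""
    return [[max(0, v) for v in row] for row in feature_map]


# Synthetic image with a vertical intensity edge between columns 1 and 2.
IMAGE = [[0, 0, 1, 1] for _ in range(4)]
# Hand-set kernel that responds to dark-to-bright transitions from left to right.
VERTICAL_EDGE = [[-1, 1], [-1, 1]]

if __name__ == "__main__":
    for row in relu(conv2d_valid(IMAGE, VERTICAL_EDGE)):
        print(row)
```

The feature map is non-zero only where the kernel overlaps the edge. In a CNN, many such kernels are stacked in layers and their weights are learned; transfer learning, as described above, starts from weights learned on another task rather than from scratch.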

Radiomics techniques have transformed the outlook of quantitative medical imaging research. Radiomics could provide rapid, comprehensive characterisation of tumours at minimal cost, which would act as an initial screen to determine the need for further clinical or genomic testing [74].

2.2. Artificial Intelligence Methods for Spinal Metastasis Imaging

Detection of Spinal Metastases

Early detection and diagnosis of spinal metastases play a key role in clinical practice. They determine the stage of disease for the patient, and have the potential to alter the treatment regimen [105]. Metastatic spinal disease is associated with increased morbidity, and more than half of these patients will require radiotherapy or invasive intervention for complications, such as spinal cord or nerve root compression [106]. Hence, early diagnosis and treatment before permanent neurologic and functional deficits occur are essential for a favourable prognosis [107,108,109].

Manual detection of spinal metastasis through various imaging modalities is time-consuming, tedious and often challenging, because the imaging features overlap with those of many other pathologies. It is widely recognised that automated lesion detection could improve radiologist sensitivity for detecting osseous metastases, with computer-aided detection (CAD) software systems and artificial intelligence models proving to be as effective as, or even superior to, manual radiologist detection [91,92,93]. Computer-assisted detection of spinal metastases was first studied on CT by O’Connor et al. [93] in 2007 for the detection of osteolytic spinal metastases. This paved the way for further studies using CAD, focusing on other subtypes of spinal metastasis such as osteoblastic or mixed type lesions [90], and on other imaging modalities. Subsequently, with the recent advances in artificial intelligence in medical imaging [110,111,112], there were substantial improvements in the detection of spinal metastases with the aid of deep learning and convolutional neural networks. This has improved the accuracy of computer-assisted automated detection of spinal metastases across various imaging modalities, with a significant reduction in false positive and false negative rates [75,85,88,89].

Differentiating Spinal Metastases from Other Pathological Conditions

Machine learning has been applied in several studies to help distinguish between spinal metastases and other pathology. This was first done by identifying key radiomics features in vertebral metastases [77], and incorporating this information into various machine learning models. For example, Liu et al. [80] and Xiong X et al. [76] utilised MRI-based radiomics to differentiate between spinal metastases and multiple myeloma, based on conventional T1-weighted (T1W) and fat-suppressed T2-weighted (T2W) MR sequences. They built their radiomics models with various machine learning algorithms, such as Support-Vector Machine (SVM), Random Forest (RF), K-Nearest Neighbour (KNN), Naïve Bayes (NB) using 10-fold cross validation, Artificial Neural Networks (ANN) and a Logistic Regression Classifier, to predict the likelihood of spinal metastases. The radiomics model from Xiong X et al. used features from T2W images, and achieved accuracy, sensitivity and specificity of 81.5%, 87.9% and 79.0%, respectively, in their validation cohort. The model of Liu et al. with 10-EPV (events per independent variable) showed good performance in distinguishing multiple myeloma from spinal metastases, with an AUC of 0.85.
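The performance measures quoted for these models (accuracy, sensitivity, specificity and AUC) can be computed from predicted scores as sketched below. This is an illustration only: the labels and scores are synthetic, the 0.5 decision threshold is an arbitrary assumption, and the AUC is computed with the pairwise (Mann-Whitney) formulation rather than by any method confirmed in the cited studies.

```python
def confusion_metrics(y_true, y_pred):
    """Accuracy, sensitivity and specificity for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


def auc(y_true, scores):
    """Area under the ROC curve: the fraction of positive/negative pairs in which
    the positive case receives the higher score (ties count as one half)."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


if __name__ == "__main__":
    # Synthetic example: 1 = metastasis, 0 = other pathology (hypothetical data).
    y_true = [1, 1, 1, 0, 0, 0]
    scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
    y_pred = [1 if s >= 0.5 else 0 for s in scores]
    print(confusion_metrics(y_true, y_pred))
    print(auc(y_true, scores))
```

Unlike accuracy, sensitivity and specificity, the AUC does not depend on a chosen threshold, which is one reason studies often report it alongside the threshold-based measures.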

Pre-Treatment Evaluation

Prediction of prognosis is a paradigm in oncological treatment. In patients with vertebral metastases, the ability to predict treatment response may help clinicians provide the most appropriate treatment with the best clinical outcome for the patient, avoid delayed transition to another treatment and prevent exposing patients to unnecessary treatment-related side effects. Shi YJ et al. [101] studied the value of MRI-based radiomics in predicting the response to chemotherapy in a small group of breast cancer patients with vertebral metastases. Their radiomics model was effective in predicting progressive versus non-progressive disease, with an area under the curve (AUC) of up to 0.91. This method could be extrapolated in future studies to predict the treatment response of spinal metastases and other primary tumours.

Applications of deep learning models go beyond tumour detection and differentiation, as these models can automatically generate meaningful parameters from MRI and other modalities. Hallinan et al. [94] developed a deep learning model for automated classification of metastatic epidural disease and/or spinal cord compression on MRI using the Bilsky classification. The model showed almost perfect agreement when compared to specialist readers on internal and external datasets, with kappas of 0.92–0.98 (p < 0.001) and 0.94–0.95 (p < 0.001), respectively, for dichotomous Bilsky classification (low versus high grade). Accurate, reproducible classification of metastatic epidural spinal cord compression will enable clinicians to decide on initial radiotherapy versus surgical intervention [115].
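Agreement statistics such as the kappa values above correct the raw agreement between two raters for the agreement expected by chance. The sketch below assumes the unweighted Cohen's kappa for illustration; the text does not state which kappa variant the study used, and the low/high gradings shown are synthetic.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters grading the same cases."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    categories = set(count_a) | set(count_b)
    # Chance agreement: product of each rater's marginal proportions per category.
    expected = sum(count_a[c] * count_b[c] for c in categories) / (n * n)
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Hypothetical dichotomous gradings (low versus high grade) for eight studies.
    model = ["low", "low", "low", "low", "high", "high", "high", "high"]
    reader = ["low", "low", "low", "high", "high", "high", "high", "high"]
    print(cohens_kappa(model, reader))
```

In this synthetic example the two raters agree on seven of eight cases, yet the kappa is lower than the raw agreement because half of that agreement would be expected by chance alone.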

Segmentation refers to delineation or volume extraction of a lesion or organ based on image analysis. In clinical practice, manual or semi-manual segmentation techniques are being applied to provide further value to CT and MRI studies. However, these techniques are subjective, operator-dependent and very time-consuming, which limits their adoption. Automatic segmentation of spinal metastases using deep learning models has been shown to be as accurate as expert annotations in both MRI and CT [88]. Hille G et al. [96] showed that automated vertebral metastasis segmentation on MRI using deep convolutional neural networks (U-net like architecture) was almost as accurate as expert annotation. Their automated segmentation solution achieved a Dice–Sørensen coefficient (DSC) of up to 0.78 and mean sensitivity rates of up to 78.9%, on par with the inter-reader variability DSC of 0.79. Potentially, these models will not only reduce the need for time-consuming manual segmentation of spinal metastases, but also support stereotactic body radiotherapy planning, and improve the performance [117,118] and treatment outcome of minimally invasive interventions for spinal metastasis such as radiofrequency ablation [95]. With respect to radiotherapy, precise automated tumour contours will improve treatment planning, reduce segmentation times and reduce the radiation dose to the surrounding organs at risk, including the spinal cord. In recent years, various image segmentation techniques have been proposed, resulting in more accurate and efficient image segmentation for clinical diagnosis and treatment [119,120,121,122].
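The Dice–Sørensen coefficient used above to compare automated and expert segmentations measures the overlap between two binary masks. The following is a minimal sketch on synthetic 3x3 masks; the function names and the convention of returning 1.0 for two empty masks are assumptions made for illustration.

```python
def flatten(mask):
    """Flatten a 2D mask into a list of booleans (non-zero means lesion)."""
    return [bool(v) for row in mask for v in row]


def dice_coefficient(mask_a, mask_b):
    """DSC = 2 * |A and B| / (|A| + |B|) for two binary masks of equal shape."""
    a, b = flatten(mask_a), flatten(mask_b)
    intersection = sum(x and y for x, y in zip(a, b))
    size = sum(a) + sum(b)
    return 1.0 if size == 0 else 2.0 * intersection / size


def voxel_sensitivity(reference, predicted):
    """Fraction of reference (expert) lesion pixels recovered by the prediction."""
    ref, pred = flatten(reference), flatten(predicted)
    return sum(r and p for r, p in zip(ref, pred)) / sum(ref)


if __name__ == "__main__":
    # Synthetic masks: 1 marks pixels labelled as metastasis (hypothetical data).
    expert = [[0, 1, 1], [0, 1, 1], [0, 0, 0]]
    automated = [[0, 1, 1], [0, 1, 0], [0, 0, 0]]
    print(dice_coefficient(expert, automated))
    print(voxel_sensitivity(expert, automated))
```

A DSC of 1 indicates identical masks and 0 indicates no overlap, so comparing a model's DSC against the DSC between two human readers, as the study above does, shows whether the model disagrees with experts more than experts disagree with each other.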

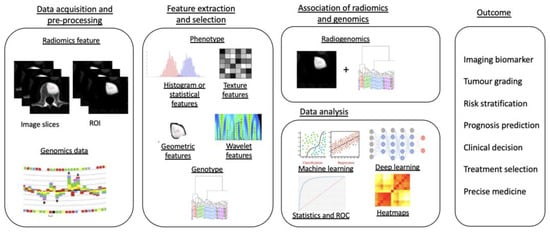

Radiogenomics, the combination of “Radiomics” and “Genomics”, refers to the use of imaging features or surrogates to determine genomic signatures and advanced biomarkers in tumours. These biomarkers can then be used for clinical management decisions, including prognostic, diagnostic and predictive precision of tumour subtypes [131]. The workflow of a radiogenomics study can be commonly classified into five different stages (Figure 5): (1) image acquisition and pre-processing, (2) feature extraction and selection from both the medical imaging and genotype, (3) association of radiomics and genomics features, (4) data analysis using machine learning models and (5) final radiogenomics outcome model [132].

Figure 5. Diagram showing a five-stage radiogenomics pipeline including data acquisition (radiological imaging) and pre-processing, feature extraction and selection, subsequent association of radiomics techniques and genomics, analysis of data and model development and, finally, radiogenomics outcomes.

Post-Treatment Evaluation

Zhong et al. [78] created an MRI-based radiomics nomogram that was shown to be clinically useful in discriminating between cervical spine osteoradionecrosis and metastases, with an AUC of 0.73 on the training set and 0.72 in the validation set.

Pseudo-progression is a post-treatment phenomenon involving an increase in the target tumour volume (usually without any worsening symptoms), which then demonstrates interval stability or reduction in volume on repeat imaging. It occurs in approximately 14 to 18% of those with vertebral metastases treated with stereotactic body radiotherapy [160,161]. The differentiation of pseudo-progression from true progression is challenging on imaging, even though many studies have suggested some differentiating factors [161,162], such as the location of involvement (e.g., purely vertebral body involvement in pseudo-progression compared to involvement of the epidural space in true progression). Artificial intelligence has already shown utility in aiding the differentiation of pseudo-progression from true progression in brain imaging.

This entry is adapted from the peer-reviewed paper 10.3390/cancers14164025