The evolution of malicious software, together with the rising use of digital services, has increased the likelihood of data corruption, information theft, and other cybercrimes carried out through malware attacks. Malicious software must therefore be detected before it affects a large number of computers. Malware analysis is organized into a taxonomy and linked to the data types used with each analysis approach. Malware detection is presented with a deeper taxonomy in which each known detection approach is divided into subcategories, and the relationship between each subcategory and the data types it uses is determined.

- malware detection and classification models

- malware analysis approaches

- malware detection approaches

1. Malware Analysis Approaches and Data Types

Data types covered: String, PE-Header, Opcode, API Calls, DLL, Machine Activities, Process Data, File Data, Registry Data, Network Data, and Derived Data (Entropy, Compression). Each ✓ marks one data type used by the study.

| Ref. | Date | Data types used | Accuracy |
|---|---|---|---|
| Static analysis | | | |
| [1] | 2015 | ✓ | 98.60% |
| [2] | 2015 | ✓ | 99.97% |
| [3] | 2016 | ✓ | 99.00% |
| [4] | 2018 | ✓ ✓ | NA |
| [5] | 2017 | ✓ | 96.09% |
| [6] | 2017 | ✓ | 98.90% |
| [7] | 2017 | ✓ | 81.07% |
| [8] | 2018 | ✓ | NA |
| [9] | 2019 | ✓ | 99.80% |
| [10] | 2019 | ✓ | 87.50% |
| [11] | 2019 | ✓ | 97.87% |
| [12] | 2020 | ✓ | NA |
| [13] | 2019 | ✓ | 91.43% |
| [14] | 2016 | ✓ ✓ | 89.44% |
| [15] | 2019 | ✓ | NA |
| [16] | 2019 | ✓ ✓ | NA |
| [17] | 2020 | ✓ ✓ ✓ | NA |
| [18] | 2016 | ✓ ✓ | 98.17% |
| Dynamic analysis | | | |
| [19] | 2015 | ✓ | 97.8% |
| [20] | 2016 | ✓ | 97.19% |
| [21] | 2016 | ✓ | 98.92% |
| [22] | 2016 | ✓ ✓ ✓ | 96.00% |
| [23] | 2016 | ✓ ✓ | NA |
| [24] | 2017 | ✓ | 98.54% |
| [25] | 2018 | ✓ ✓ | NA |
| [26] | 2019 | ✓ | 92.00% |
| [27] | 2019 | ✓ ✓ | 97.22% |
| [28] | 2019 | ✓ | 94.89% |
| [29] | 2020 | ✓ | 97.28% |
| [30] | 2019 | ✓ ✓ | 75.01% |
| [31] | 2020 | ✓ | 98.43% |
| [32] | 2020 | ✓ ✓ ✓ ✓ ✓ | 99.54% |
| [33] | 2017 | ✓ | NA |
| Hybrid analysis | | | |
| [34] | 2014 | ✓ ✓ | 98.71% |
| [35] | 2016 | ✓ ✓ | 99.99% |
| [36] | 2019 | ✓ ✓ | 99.70% |
| [37] | 2021 | ✓ ✓ | 94.70% |
| [38] | 2020 | ✓ ✓ ✓ | 93.92% |
| [39] | 2020 | ✓ ✓ ✓ ✓ | 96.30% |
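
The table lists entropy and compression as derived data types. This entry does not say how the cited studies compute them, so the following is only a minimal sketch under common assumptions: entropy is taken as the Shannon entropy of a file's byte distribution, and compression as the ratio of zlib-compressed size to original size. The function names `shannon_entropy` and `compression_ratio` are hypothetical and chosen for illustration.

```python
import math
import zlib
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy of the byte distribution, in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)  # occurrences of each byte value
    # Sum of p * log2(1/p) over the byte values that actually occur.
    return sum((n / total) * math.log2(total / n) for n in counts.values())


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (assumption: zlib, default level)."""
    if not data:
        return 0.0
    return len(zlib.compress(data)) / len(data)


if __name__ == "__main__":
    sample = bytes(range(256)) * 4  # illustrative input, not real malware data
    print(f"entropy: {shannon_entropy(sample):.2f} bits/byte")
    print(f"compression ratio: {compression_ratio(sample):.3f}")
```

Packed or encrypted content tends to show entropy close to 8 bits per byte and compresses poorly, which is one reason such derived values may be useful as static features; any thresholds would have to be tuned on real data.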

1.1. Static Analysis

1.2. Dynamic Analysis

1.3. Hybrid Analysis

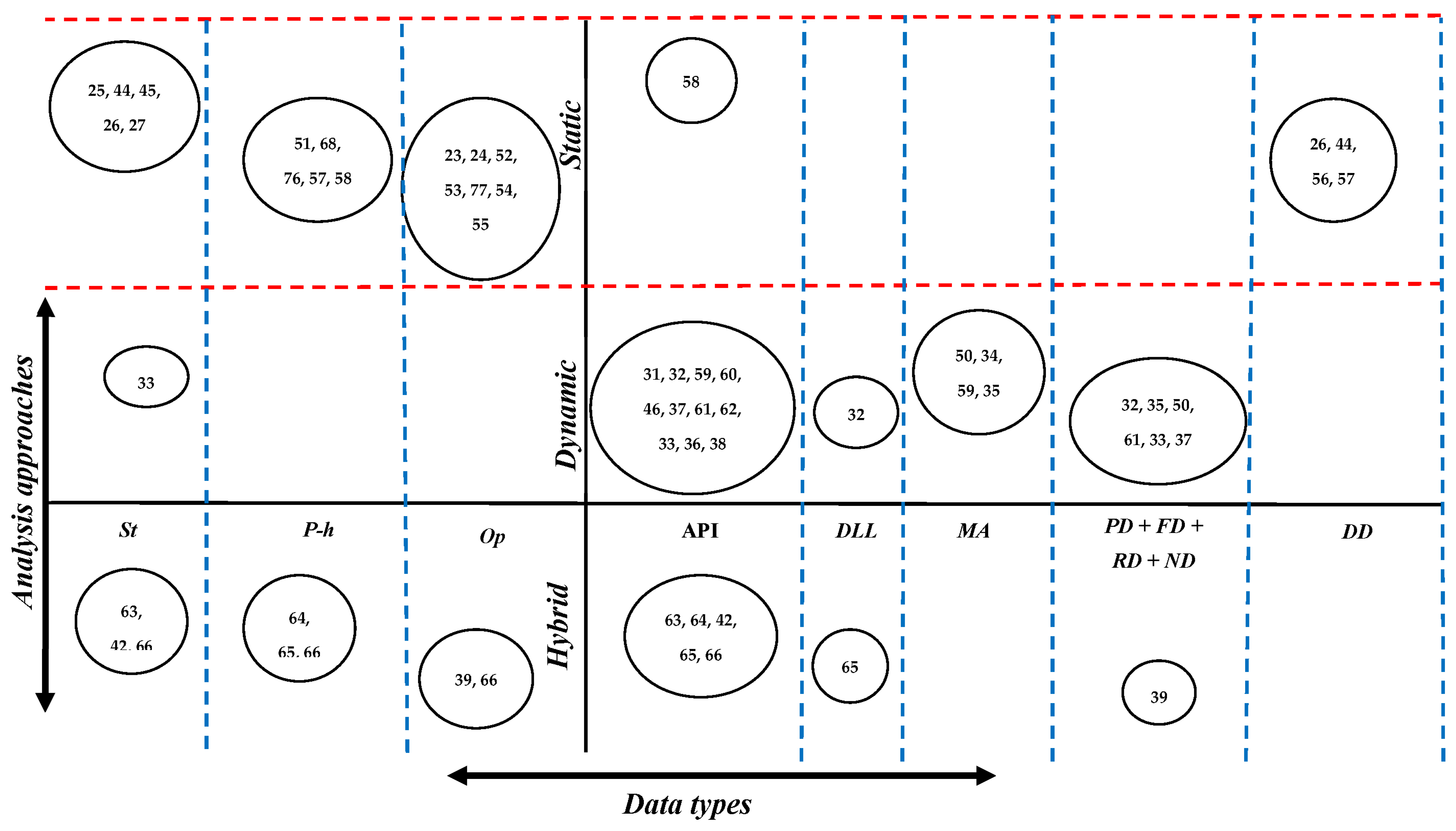

1.4. Malware Analysis and Data Types Discussion

2. Malware Detection Approaches

2.1. Signature-Based

2.2. Behavioral-Based

2.3. Heuristic-Based

2.4. Malware Detection Discussion

This entry is adapted from the peer-reviewed paper 10.3390/app12178482

References

- Khodamoradi, P.; Fazlali, M.; Mardukhi, F.; Nosrati, M. Heuristic metamorphic malware detection based on statistics of assembly instructions using classification algorithms. In Proceedings of the 2015 18th CSI International Symposium on Computer Architecture and Digital Systems (CADS), Tehran, Iran, 7–8 October 2015; pp. 1–6.

- Zakeri, M.; Daneshgar, F.F.; Abbaspour, M. A static heuristic approach to detecting malware targets. Secur. Commun. Netw. 2015, 8, 30.

- Kumar, R.; Vaishakh, A.R.E. Detection of Obfuscation in Java Malware. Procedia Comput. Sci. 2015, 78, 521–529.

- Wael, D.; Sayed, S.G.; AbdelBaki, N. Enhanced Approach to Detect Malicious VBScript Files Based on Data Mining Techniques. Procedia Comput. Sci. 2018, 141, 552–558.

- Hashemi, H.; Azmoodeh, A.; Hamzeh, A.; Hashemi, S. Graph embedding as a new approach for unknown malware detection. J. Comput. Virol. Hacking Tech. 2017, 13, 153–166.

- Liu, L.; Wang, B.; Yu, B.; Zhong, Q. Automatic malware classification and new malware detection using machine learning. Front. Inf. Technol. Electron. Eng. 2017, 18, 1336–1347.

- Fuyong, Z.; Tiezhu, Z. Malware Detection and Classification Based on N-Grams Attribute Similarity. In Proceedings of the 2017 IEEE International Conference on Computational Science and Engineering (CSE) and IEEE International Conference on Embedded and Ubiquitous Computing (EUC), Guangzhou, China, 21–24 July 2017; pp. 793–796.

- Khalilian, A.; Nourazar, A.; Vahidi-Asl, M.; Haghighi, H. G3MD: Mining frequent opcode sub-graphs for metamorphic malware detection of existing families. Expert Syst. Appl. 2018, 112, 15–33.

- Zelinka, I.; Amer, E. An Ensemble-Based Malware Detection Model Using Minimum Feature Set. Mendel 2019, 25, 1–10.

- Denzer, T.; Shalaginov, A.; Dyrkolbotn, G.O. Intelligent Windows Malware Type Detection based on Multiple Sources of Dynamic Characteristics. Nis. J. 2019, 12, 20.

- Lu, R. Malware Detection with LSTM using Opcode Language. arXiv 2019, arXiv:1906.04593.

- Li, X.; Qiu, K.; Qian, C.; Zhao, G. An Adversarial Machine Learning Method Based on OpCode N-grams Feature in Malware Detection. In Proceedings of the 2020 IEEE Fifth International Conference on Data Science in Cyberspace (DSC), Hong Kong, China, 27–30 July 2020; pp. 380–387.

- Zhang, H.; Xiao, X.; Mercaldo, F.; Ni, S.; Martinelli, F.; Sangaiah, A.K. Classification of ransomware families with machine learning based on N-gram of opcodes. Futur. Gener. Comput. Syst. 2019, 90, 211–221.

- Seshagiri, P.; Vazhayil, A.; Sriram, P. AMA: Static Code Analysis of Web Page for the Detection of Malicious Scripts. Procedia Comput. Sci. 2016, 93, 768–773.

- Ling, Y.T.; Sani, N.F.M.; Abdullah, M.T.; Hamid, N.A.W.A. Nonnegative matrix factorization and metamorphic malware detection. J. Comput. Virol. Hacking Tech. 2019, 15, 195–208.

- Kumar, A.; Kuppusamy, K.; Aghila, G. A learning model to detect maliciousness of portable executable using integrated feature set. J. King Saud Univ.-Comput. Inf. Sci. 2019, 31, 252–265.

- Euh, S.; Lee, H.; Kim, D.; Hwang, D. Comparative Analysis of Low-Dimensional Features and Tree-Based Ensembles for Malware Detection Systems. IEEE Access 2020, 8, 76796–76808.

- Belaoued, M.; Mazouzi, S. A chi-square-based decision for real-time malware detection using PE-file features. J. Inf. Process. Syst. 2016, 12, 644–660.

- Choudhary, S.; Vidyarthi, M.D. A Simple Method for Detection of Metamorphic Malware using Dynamic Analysis and Text Mining. Procedia Comput. Sci. 2015, 54, 265–270.

- Galal, H.S.; Mahdy, Y.B.; Atiea, M.A. Behavior-based features model for malware detection. J. Comput. Virol. Hacking Tech. 2016, 12, 59–67.

- Banin, S.; Shalaginov, A.; Franke, K. Memory access patterns for malware detection. Nor. Inf. 2016, 96, 107.

- Mosli, R.; Li, R.; Yuan, B.; Pan, Y. Automated malware detection using artifacts in forensic memory images. In Proceedings of the 2016 IEEE Symposium on Technologies for Homeland Security (HST), Waltham, MA, USA, 10–12 May 2016; pp. 1–6.

- Norouzi, M.; Souri, A.; Zamini, M.S. A Data Mining Classification Approach for Behavioral Malware Detection. J. Comput. Netw. Commun. 2016, 2016, 1–9.

- Burnap, P.; French, R.; Turner, F.; Jones, K. Malware classification using self organising feature maps and machine activity data. Comput. Secur. 2018, 73, 399–410.

- Jerlin, M.A.; Marimuthu, K. A New Malware Detection System Using Machine Learning Techniques for API Call Sequences. J. Appl. Secur. Res. 2018, 13, 45–62.

- Fasano, F.; Martinelli, F.; Mercaldo, F.; Santone, A. Energy Consumption Metrics for Mobile Device Dynamic Malware Detection. Procedia Comput. Sci. 2019, 159, 1045–1052.

- Belaoued, M.; Boukellal, A.; Koalal, M.A.; Derhab, A.; Mazouzi, S.; Khan, F.A. Combined dynamic multi-feature and rule-based behavior for accurate malware detection. Int. J. Distrib. Sens. Netw. 2019, 15, 155014771988990.

- Kim, H.; Kim, J.; Kim, Y.; Kim, I.; Kim, K.J.; Kim, H. Improvement of malware detection and classification using API call sequence alignment and visualization. Cluster Comput. 2019, 22, 921–929.

- Hwang, J.; Kim, J.; Lee, S.; Kim, K. Two-Stage Ransomware Detection Using Dynamic Analysis and Machine Learning Techniques. Wirel. Pers. Commun. 2020, 112, 2597–2609.

- Arabo, A.; Dijoux, R.; Poulain, T.; Chevalier, G. Detecting Ransomware Using Process Behavior Analysis. Procedia Comput. Sci. 2020, 168, 289–296.

- Ali, M.; Shiaeles, S.; Bendiab, G.; Ghita, B. MALGRA: Machine Learning and N-Gram Malware Feature Extraction and Detection System. Electronics 2020, 9, 1777.

- Singh, J.; Singh, J. Detection of malicious software by analyzing the behavioral artifacts using machine learning algorithms. Inf. Softw. Technol. 2020, 121, 106273.

- Vidal, J.M.; Orozco, A.L.S.; Villalba, L.J.G. Malware Detection in Mobile Devices by Analyzing Sequences of System Calls. Int. J. Comput. Electr. Autom. Control. Inf. Eng. 2017, 11, 588–592.

- Shijo, P.; Salim, A. Integrated Static and Dynamic Analysis for Malware Detection. Procedia Comput. Sci. 2015, 46, 804–811.

- Fraley, J.B.; Figueroa, M. Polymorphic malware detection using topological feature extraction with data mining. In Proceedings of the SoutheastCon 2016, Norfolk, VA, USA, 30 March–3 April 2016; pp. 1–7.

- Darshan, S.L.S.; Jaidhar, C.D. Windows malware detection system based on LSVC recommended hybrid features. J. Comput. Virol. Hacking Tech. 2019, 15, 127–146.

- Huang, X.; Ma, L.; Yang, W.; Zhong, Y. A Method for Windows Malware Detection Based on Deep Learning. J. Signal Process. Syst. 2021, 93, 265–273.

- Darshan, S.L.S.; Jaidhar, C.D. An empirical study to estimate the stability of random forest classifier on the hybrid features recommended by filter based feature selection technique. Int. J. Mach. Learn. Cybern. 2020, 11, 339–358.

- Kang, J.; Won, Y. A study on variant malware detection techniques using static and dynamic features. J. Inf. Process. Syst. 2020, 16, 882–895.

- Naz, S.; Singh, D.K. Review of Machine Learning Methods for Windows Malware Detection. In Proceedings of the 2019 10th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kanpur, India, 6–8 July 2019; pp. 1–6.

- Ahmed, Y.A.; Koçer, B.; Huda, S.; Al-Rimy, B.A.S.; Hassan, M.M. A system call refinement-based enhanced Minimum Redundancy Maximum Relevance method for ransomware early detection. J. Netw. Comput. Appl. 2020, 167, 102753.

- Ndibanje, B.; Kim, K.H.; Kang, Y.J.; Kim, H.H.; Kim, T.Y.; Lee, H.J. Cross-Method-Based Analysis and Classification of Malicious Behavior by API Calls Extraction. Appl. Sci. 2019, 9, 239.

- Zhong, W.; Gu, F. A multi-level deep learning system for malware detection. Expert Syst. Appl. 2019, 133, 151–162.

- Damodaran, A.; Di Troia, F.; Visaggio, C.A.; Austin, T.; Stamp, M. A comparison of static, dynamic, and hybrid analysis for malware detection. J. Comput. Virol. Hacking Tech. 2017, 13, 1–12.

- Wael, D.; Shosha, A.; Sayed, S.G. Malicious VBScript detection algorithm based on data-mining techniques. In Proceedings of the 2017 International Conference on Advanced Control Circuits Systems (ACCS) Systems & 2017 International Conference on New Paradigms in Electronics & Information Technology (PEIT), Alexandria, Egypt, 5–8 November 2017; pp. 112–116.

- Ki, Y.; Kim, E.; Kim, H.K. A Novel Approach to Detect Malware Based on API Call Sequence Analysis. Int. J. Distrib. Sens. Netw. 2015, 11, 659101.

- Sihwail, R.; Omar, K.; Ariffin, K.A.Z.; Al Afghani, S. Malware Detection Approach Based on Artifacts in Memory Image and Dynamic Analysis. Appl. Sci. 2019, 9, 3680.

- Saxena, S.; Mancoridis, S. Malware Detection using Behavioral Whitelisting of Computer Systems. In Proceedings of the 2019 IEEE International Symposium on Technologies for Homeland Security (HST), Greater Boston, MA, USA, 5–6 November 2019; pp. 1–6.

- Alieyan, K.; Almomani, A.; Anbar, M.; Alauthman, M.; Abdullah, R.; Gupta, B.B. DNS rule-based schema to botnet detection. Enterp. Inf. Syst. 2021, 15, 545–564.

- Kakisim, A.G.; Nar, M.; Sogukpinar, I. Metamorphic malware identification using engine-specific patterns based on co-opcode graphs. Comput. Stand. Interfaces 2019, 71, 103443.

- Du, D.; Sun, Y.; Ma, Y.; Xiao, F. A Novel Approach to Detect Malware Variants Based on Classified Behaviors. IEEE Access 2019, 7, 81770–81782.

- Catak, F.O.; Yazı, A.F.; Elezaj, O.; Ahmed, J. Deep learning based Sequential model for malware analysis using Windows exe API Calls. PeerJ Comput. Sci. 2020, 6, e285.