Fire tracking has become an attractive application of satellite remote sensing thanks to the characteristics of recent remote sensing images, such as high revisit frequency, wide coverage, and multispectral bands. High-resolution images also provide richer spatial detail and finer temporal sampling for forest fire monitoring, showing great potential for environmental monitoring. In recent years, many researchers have concentrated on active fire detection from single images, while only a few studies have tracked fire and smoke through multi-temporal or continuous detection. A vital component of fire tracking from remote sensors is the accurate estimation of the background temperature of an area in a fire’s absence, which helps identify and report fire activity.

- satellite video

- fire tracking

- deep learning

1. Introduction

Object tracking is a hot topic in computer vision and remote sensing. It typically employs a bounding box that locks onto the region of interest (ROI) when only an initial state of the target (in a video frame) is available [1][2]. Thanks to the development of satellite imaging technology, various satellites with advanced onboard cameras have been launched to obtain very high resolution (VHR) satellite videos for military and civilian applications. Compared to traditional target tracking methods, satellite video target tracking is more efficient in motion analysis and object surveillance. It has shown great potential in applications such as defence and security surveillance [3], monitoring and protecting sea ice [4], fighting wildfires [5], and monitoring city traffic [6], which lie beyond the reach of traditional target tracking.

| Target | Method | Ref. | Year | Description |
|---|---|---|---|---|
| Fire | Traditional | [7] | 2018 | A threshold algorithm with visual interpretation |
| | | [8] | 2019 | A multi-temporal method of temperature estimation |
| | | [9] | 2020 | Temperature dynamics by data assimilation |
| | | [10] | 2022 | Wildfire tracking via visible and infrared image series |
| | DL-based | [11] | 2019 | 3D CNN to capture spatial and spectral patterns |
| | | [12] | 2019 | Inception-v3 model with transfer learning |
| | | [13] | 2021 | Near-real-time fire smoke prediction |
| | | [14] | 2022 | Combines residual convolution and separable convolution to detect fire |
| | | [15] | 2022 | Multiple kernel learning for fire detection at various sizes |
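The traditional approaches listed in the table build on thresholds and on estimates of the fire-free background temperature. The sketch below illustrates that general idea only and does not reproduce any of the cited methods. It assumes a stack of earlier fire-free brightness-temperature frames (in kelvin) for the same scene, takes the per-pixel median as the background, and flags pixels whose excess over the background exceeds a robust threshold. The function names, the factor `k`, the floor `min_excess`, and the synthetic data are all hypothetical choices made for illustration.

```python
import numpy as np


def estimate_background(history):
    """Estimate per-pixel background temperature from fire-free frames.

    history: array of shape (T, H, W) holding brightness temperatures in kelvin.
    Returns the per-pixel median and a robust spread (scaled MAD).
    """
    background = np.median(history, axis=0)
    mad = np.median(np.abs(history - background), axis=0)
    # Scaling the MAD by 1.4826 approximates the standard deviation
    # when the noise is roughly Gaussian.
    spread = 1.4826 * mad
    return background, spread


def flag_fire_candidates(frame, background, spread, k=4.0, min_excess=10.0):
    """Flag pixels that are much hotter than their estimated background.

    k and min_excess are illustrative values, not calibrated thresholds.
    """
    excess = frame - background
    threshold = np.maximum(k * spread, min_excess)
    return excess > threshold


# Synthetic example: a 5x5 scene near 300 K with one artificially heated pixel.
rng = np.random.default_rng(0)
history = 300.0 + rng.normal(0.0, 1.0, size=(24, 5, 5))
frame = 300.0 + rng.normal(0.0, 1.0, size=(5, 5))
frame[2, 3] += 40.0  # simulated fire pixel

background, spread = estimate_background(history)
mask = flag_fire_candidates(frame, background, spread)
print(np.argwhere(mask))
```

The median and the scaled median absolute deviation are used here because they are less sensitive to occasional outliers, such as residual cloud or an undetected earlier fire, than the mean and standard deviation. An operational system would also need cloud masking and diurnal-cycle modelling, which this sketch omits.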

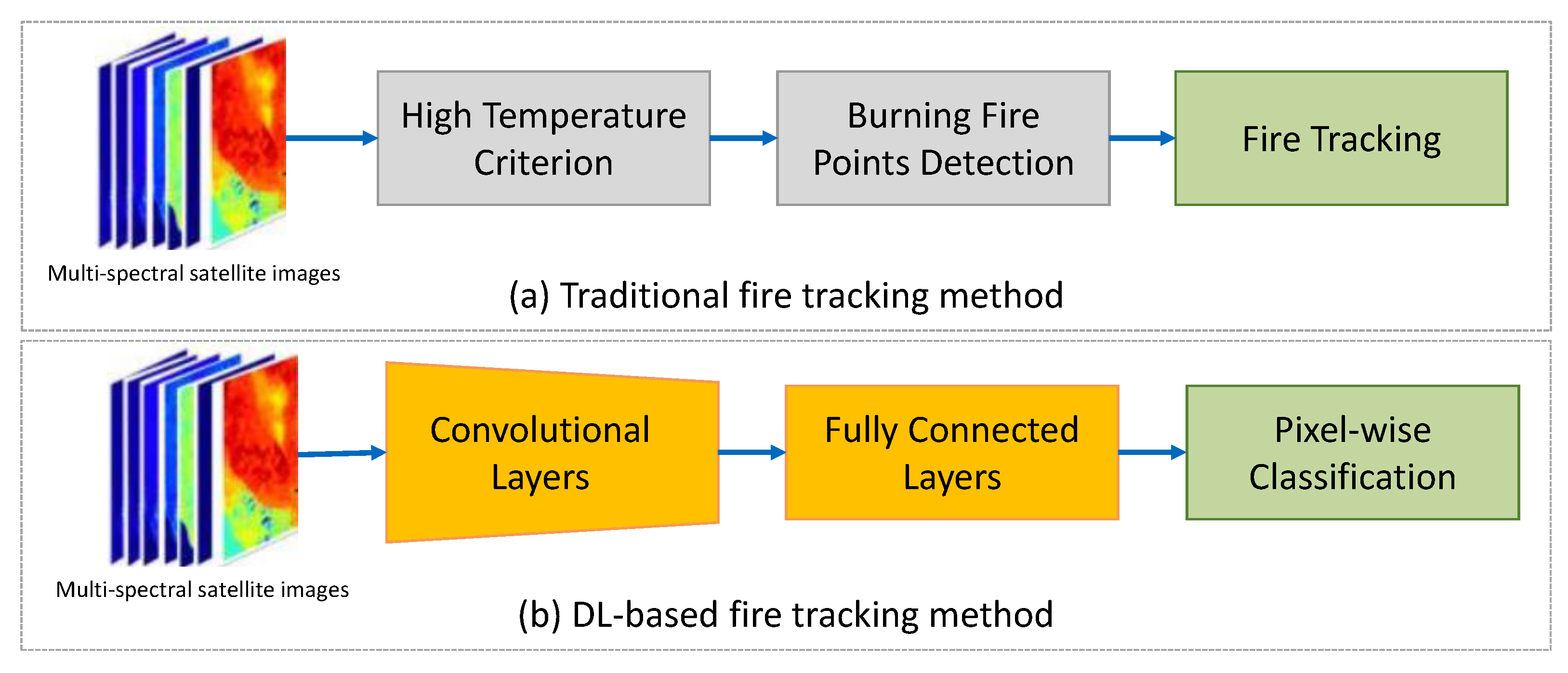

3. DL-Based Tracking Methods

This entry is adapted from the peer-reviewed paper 10.3390/rs14153674

References

- Yilmaz, A.; Javed, O.; Shah, M. Object tracking: A survey. ACM Comput. Surv. (CSUR) 2006, 38, 13.

- Jiao, L.; Zhang, R.; Liu, F.; Yang, S.; Hou, B.; Li, L.; Tang, X. New Generation Deep Learning for Video Object Detection: A Survey. IEEE Trans. Neural Netw. Learn. Syst. 2021, 1–21.

- Melillos, G.; Themistocleous, K.; Papadavid, G.; Agapiou, A.; Prodromou, M.; Michaelides, S.; Hadjimitsis, D.G. Integrated use of field spectroscopy and satellite remote sensing for defence and security applications in Cyprus. In Proceedings of the Fourth International Conference on Remote Sensing and Geoinformation of the Environment (RSCy2016), Paphos, Cyprus, 4–8 April 2016; Volume 9688, pp. 127–135.

- Xian, Y.; Petrou, Z.I.; Tian, Y.; Meier, W.N. Super-resolved fine-scale sea ice motion tracking. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5427–5439.

- Bailon-Ruiz, R.; Lacroix, S. Wildfire remote sensing with UAVs: A review from the autonomy point of view. In Proceedings of the 2020 International Conference on Unmanned Aircraft Systems (ICUAS), Athens, Greece, 1–4 September 2020; pp. 412–420.

- Du, B.; Sun, Y.; Cai, S.; Wu, C.; Du, Q. Object tracking in satellite videos by fusing the kernel correlation filter and the three-frame-difference algorithm. IEEE Geosci. Remote Sens. Lett. 2017, 15, 168–172.

- Na, L.; Zhang, J.; Bao, Y.; Bao, Y.; Na, R.; Tong, S.; Si, A. Himawari-8 satellite based dynamic monitoring of grassland fire in China-Mongolia border regions. Sensors 2018, 18, 276.

- Hally, B.; Wallace, L.; Reinke, K.; Jones, S.; Skidmore, A. Advances in active fire detection using a multi-temporal method for next-generation geostationary satellite data. Int. J. Digit. Earth 2019, 12, 1030–1045.

- Udahemuka, G.; van Wyk, B.J.; Hamam, Y. Characterization of Background Temperature Dynamics of a Multitemporal Satellite Scene through Data Assimilation for Wildfire Detection. Remote Sens. 2020, 12, 1661.

- Chen, Y.; Hantson, S.; Andela, N.; Coffield, S.R.; Graff, C.A.; Morton, D.C.; Ott, L.E.; Foufoula-Georgiou, E.; Smyth, P.; Goulden, M.L.; et al. California wildfire spread derived using VIIRS satellite observations and an object-based tracking system. Sci. Data 2022, 9, 249.

- Phan, T.C.; Nguyen, T.T. Remote Sensing Meets Deep Learning: Exploiting Spatio-Temporal-Spectral Satellite Images for Early Wildfire Detection. 2019. Available online: https://Infoscience.Epfl.Ch/Record/270339 (accessed on 31 May 2022).

- Vani, K. Deep learning based forest fire classification and detection in satellite images. In Proceedings of the 2019 11th International Conference on Advanced Computing (ICoAC), Chennai, India, 18–20 December 2019; pp. 61–65.

- Larsen, A.; Hanigan, I.; Reich, B.J.; Qin, Y.; Cope, M.; Morgan, G.; Rappold, A.G. A deep learning approach to identify smoke plumes in satellite imagery in near-real time for health risk communication. J. Expo. Sci. Environ. Epidemiol. 2021, 31, 170–176.

- Seydi, S.T.; Saeidi, V.; Kalantar, B.; Ueda, N.; Halin, A.A. Fire-Net: A deep learning framework for active forest fire detection. J. Sens. 2022, 2022, 8044390.

- Rostami, A.; Shah-Hosseini, R.; Asgari, S.; Zarei, A.; Aghdami-Nia, M.; Homayouni, S. Active Fire Detection from Landsat-8 Imagery Using Deep Multiple Kernel Learning. Remote Sens. 2022, 14, 992.

- Xu, G.; Zhong, X. Real-time wildfire detection and tracking in Australia using geostationary satellite: Himawari-8. Remote Sens. Lett. 2017, 8, 1052–1061.

- Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A review on early forest fire detection systems using optical remote sensing. Sensors 2020, 20, 6442.

- De Almeida Pereira, G.H.; Fusioka, A.M.; Nassu, B.T.; Minetto, R. Active fire detection in Landsat-8 imagery: A large-scale dataset and a deep-learning study. ISPRS J. Photogramm. Remote Sens. 2021, 178, 171–186.

- Zhang, Q.; Ge, L.; Zhang, R.; Metternicht, G.I.; Liu, C.; Du, Z. Towards a Deep-Learning-Based Framework of Sentinel-2 Imagery for Automated Active Fire Detection. Remote Sens. 2021, 13, 4790.

- Florath, J.; Keller, S. Supervised Machine Learning Approaches on Multispectral Remote Sensing Data for a Combined Detection of Fire and Burned Area. Remote Sens. 2022, 14, 657.