Subjects:

Transportation Science & Technology

Preceding vehicles have a significant impact on the safety of the ego-vehicle, whether or not they travel in the same direction as it. Reliable trajectory prediction of preceding vehicles is therefore crucial for safer planning.

- trajectory prediction

- preceding target vehicle

- multi-sensor fusion

1. Introduction

Automated vehicles and advanced driver assistance systems (ADAS) have received a surge of attention in recent years as they are considered to be an effective solution for traffic congestion and safety [1,2,3]. Reliable trajectory prediction of preceding vehicles is crucial for the planning and decision-making of automated vehicles. Compared with the surrounding vehicles considered in general trajectory prediction studies [4], preceding target vehicles (PTVs) pose a higher risk to the automated ego-vehicle (EV) and therefore deserve more attention. Based on the predicted future trajectory of PTVs, the EV can generate a more comfortable and safer path, avoiding or mitigating the risk of collision [5].

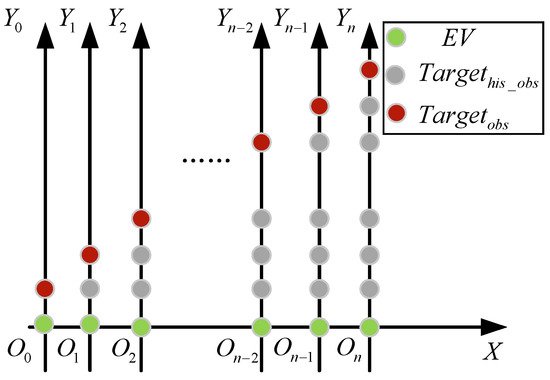

A major reason for the rapid growth of vehicle trajectory prediction algorithms is the availability of public datasets [6,7], which help researchers quickly validate their algorithms. Despite favorable results on public datasets, the evaluation of a vehicle trajectory prediction system should consider not only the accuracy of the prediction but also the generalization ability of the model; that is, whether the model can accurately predict vehicle trajectories in real road driving. Vehicle-to-vehicle (V2V) communication is another source of input for trajectory prediction. However, when V2V communication is unavailable, autonomous vehicles cannot receive accurate information from surrounding vehicles and have to infer the trajectories of other vehicles through various onboard sensors [8,9,10]. Many studies have captured PTVs using cameras, LIDAR, and other sensors in real road driving [10,11,12]. However, because the sensor is fixed to the EV, it moves with the EV, so successive PTV positions are not expressed in the same coordinate system. Figure 1 shows the PTV in a moving EV coordinate system and in a stationary EV coordinate system, respectively, where the green points represent the EV position, the gray points represent the observed PTV history positions, and the red points represent the real PTV positions. When the EV and the PTV travel at the same speed along the X-axis, the EV sensor observes the PTV as moving only along the Y-axis. For this reason, predicting vehicle trajectories on real roads requires not only a good prediction algorithm but also a method for obtaining the historical trajectories of PTVs.

Figure 1. Relative motion of the PTV.
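The relative-motion effect in Figure 1 can be illustrated with a small coordinate transform. The following is a minimal sketch, not the method of the source paper: it assumes planar motion, an EV frame with x forward and y to the left, and an EV pose `(x, y, yaw)` that is available from some external source such as odometry. The function name `ev_to_world` and the sample numbers are hypothetical.

```python
import math

def ev_to_world(rel_xy, ev_pose):
    """Map a PTV position observed in the EV frame into a fixed world frame.

    rel_xy  : (x, y) of the PTV relative to the EV (assumed: x forward, y left).
    ev_pose : (x, y, yaw) of the EV in the world frame, yaw in radians (assumed known).
    """
    rx, ry = rel_xy
    ex, ey, yaw = ev_pose
    c, s = math.cos(yaw), math.sin(yaw)
    # Rotate the relative vector by the EV heading, then translate by the EV position.
    return (ex + c * rx - s * ry, ey + s * rx + c * ry)

# EV and PTV both advance 1 m per step along X; the PTV also drifts 0.5 m per step in Y.
ev_poses = [(float(k), 0.0, 0.0) for k in range(4)]
# In the moving EV frame the PTV seems to move only along Y.
observed = [(10.0, 0.5 * k) for k in range(4)]

history = [ev_to_world(o, p) for o, p in zip(observed, ev_poses)]
print(history)  # -> [(10.0, 0.0), (11.0, 0.5), (12.0, 1.0), (13.0, 1.5)]
```

In the EV frame the observed X coordinate stays at 10 m, while the transformed history recovers the forward motion of the PTV along X.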

2. Trajectory Prediction of Preceding Target Vehicles

Vehicle driving is a continuous, time-varying, and dynamic process. Extensive research has been conducted on vehicle trajectory prediction. For PTV trajectory prediction, prior work can be divided into two categories: model-driven methods and data-driven methods [13].

The model-driven methods include the hidden Markov model (HMM), Gaussian mixture model (GMM), vehicle dynamics model (VDM), and polynomial model (PM). Ye et al. [14] proposed a vehicle trajectory prediction algorithm named double hidden Markov of trajectory prediction (DHMTP). The algorithm was based on a hidden Markov model with double hidden states and predicted the vehicle trajectory at multiple subsequent moments. Wiest et al. [15] built a variational probabilistic trajectory model based on a Gaussian–Bayesian mixture, in which Gaussian and Bayesian methods were jointly used to predict future vehicle coordinates. Validation on real-world trajectories showed that the proposed model could predict future vehicle trajectories within two seconds. A VDM is built from vehicle dynamics data (e.g., velocity, acceleration, steering angle, and yaw angle) and relevant mathematical methods. In [16], vehicle kinematic data and a maneuver identification model were combined into a trajectory prediction model, which was validated on real driving data. The results indicated that the model was effective in short-term prediction, but the accuracy of its long-term prediction was not stable. A PM is usually employed to fit non-linear curves. Guo et al. [17] fitted and predicted the longitudinal trajectory of a vehicle using a fifth-order polynomial. These approaches achieve favorable results only on short-term trajectory forecasts and do not show promising results when predicting long trajectories.
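The polynomial-model idea can be sketched with a least-squares fit. This is a minimal illustration under stated assumptions, not the implementation of Guo et al. [17]: the history is synthetic (constant acceleration), the time axis is in seconds, and the helper name `fit_and_extrapolate` is hypothetical. It uses `numpy.polyfit` and `numpy.polyval`.

```python
import numpy as np

def fit_and_extrapolate(t_hist, x_hist, t_future, order=5):
    """Fit a polynomial x(t) to the observed history and evaluate it at future times."""
    coeffs = np.polyfit(t_hist, x_hist, order)  # least-squares fit, highest power first
    return np.polyval(coeffs, t_future)

# Synthetic longitudinal history: 3 s sampled at 10 Hz,
# initial speed 15 m/s and constant acceleration 1.2 m/s^2 (assumed values).
t_hist = np.linspace(0.0, 3.0, 31)
x_hist = 15.0 * t_hist + 0.5 * 1.2 * t_hist**2

# Predict the longitudinal position 0.5 s and 1.0 s past the end of the history.
t_future = np.array([3.5, 4.0])
x_pred = fit_and_extrapolate(t_hist, x_hist, t_future)
print(x_pred)
```

On noise-free data like this the extrapolation stays close to the true motion. With noisy measurements, a high-order polynomial tends to diverge quickly outside the fitted interval, which is consistent with the short-term limitation noted above.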

Besides the traditional methods mentioned above, a large number of works have focused on vehicle trajectory prediction using recurrent neural networks (RNNs) and long short-term memory (LSTM) networks. LSTM variants in particular have received a lot of attention from researchers. Deo and Trivedi [18] used an LSTM encoder to encode the trajectory vectors of surrounding vehicles in order to predict future trajectories and validated the approach on the NGSIM dataset. In [19], a two-stream graph LSTM was adopted to predict trajectories and driving behavior in urban scenarios. The first stream used a conventional LSTM encoder-decoder network, while the second stream used a weighted dynamic geometric graph. The model was evaluated on the Argoverse, Lyft, Apolloscape, and NGSIM datasets. Although LSTM has achieved promising results in long-term trajectory prediction, it normally has difficulty modeling complex temporal dependencies [20].

Recently, Transformer networks have made ground-breaking progress in natural language processing (NLP) [21]. Transformers discard the sequential processing of language sequences and model temporal dependencies using only a powerful self-attention mechanism. The key advantage of the Transformer architecture is the significant improvement in temporal modeling compared to RNNs. Several studies have used Transformer networks for pedestrian trajectory prediction and achieved good results [22,23,24]. Although the Transformer is excellent at predicting pedestrian trajectories, vehicles travel at higher speeds than pedestrians. Moreover, these studies did not predict target trajectories from raw data, because the locations of the targets were already provided in the public datasets.
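The self-attention mechanism can be sketched in a few lines. The example below is a single-head scaled dot-product self-attention over a short sequence of 2D positions, written with NumPy. It is only an illustration: the weights are random rather than trained, positional encoding and multi-head projection are omitted, and the dimensions and names (`self_attention`, `w_q`, `w_k`, `w_v`) are assumptions rather than details from the cited works.

```python
import numpy as np

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x : (T, d_in) sequence of T time steps.
    Returns the attended sequence (T, d_k) and the (T, T) attention weights.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])               # pairwise similarity of time steps
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # row-wise softmax
    return weights @ v, weights

rng = np.random.default_rng(0)
T, d_in, d_k = 8, 2, 4                 # 8 history steps of (x, y) positions
x = rng.normal(size=(T, d_in))         # stand-in for a PTV history
w_q, w_k, w_v = (rng.normal(size=(d_in, d_k)) for _ in range(3))

out, weights = self_attention(x, w_q, w_k, w_v)
print(out.shape, weights.shape)  # -> (8, 4) (8, 8)
```

Every time step attends to every other time step in one matrix product, so no recurrence over the sequence is needed.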

3. Sensor Fusion and Historical Trajectory Generation

3.1. Detection

Detection and tracking are prerequisites for generating the PTV’s historical trajectory, and a good tracker depends largely on a good detector. You only look once (YOLO) algorithms can run faster than two-stage algorithms because they omit the coarse localization stage and allow the backbone network to be tuned. In particular, the YOLOv5 model is faster, more accurate, and has fewer model parameters than the YOLOv4 model [25]. Therefore, YOLOv5 is employed as the detector for the PTV.

3.2. Tracking

DeepSORT is an improved version of simple online and real-time tracking (SORT). It integrates a pre-trained neural network to generate feature vectors, which are used as a deep association metric. Specifically, it applies a convolutional neural network (CNN) trained on large-scale datasets to describe the appearance of detected objects. With this network integrated, DeepSORT overcomes the shortcomings of SORT while remaining easy to implement, effective, and suitable for real-time use [26]. DeepSORT is therefore applied as the tracker.
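The idea of an appearance-based association metric can be sketched as follows. This is a simplified illustration, not the DeepSORT implementation: the feature vectors are made up rather than produced by a CNN, only the appearance term is used, and a greedy matcher is swapped in for the assignment step for brevity. The names `cosine_distance`, `greedy_match`, and the threshold `max_distance` are hypothetical.

```python
import numpy as np

def cosine_distance(tracks, detections):
    """Pairwise cosine distance between track features (M, d) and detection features (N, d)."""
    a = tracks / np.linalg.norm(tracks, axis=1, keepdims=True)
    b = detections / np.linalg.norm(detections, axis=1, keepdims=True)
    return 1.0 - a @ b.T

def greedy_match(cost, max_distance=0.2):
    """Repeatedly pair the closest remaining track and detection (simplified matcher)."""
    cost = cost.copy()
    matches = []
    while cost.size and np.isfinite(cost).any():
        i, j = np.unravel_index(np.argmin(cost), cost.shape)
        if cost[i, j] > max_distance:
            break  # remaining pairs are too dissimilar to be the same object
        matches.append((int(i), int(j)))
        cost[i, :] = np.inf  # each track is matched at most once
        cost[:, j] = np.inf  # each detection is matched at most once
    return matches

# Made-up appearance features for two existing tracks and two new detections.
track_features = np.array([[1.0, 0.0, 0.0],
                           [0.0, 1.0, 0.0]])
detection_features = np.array([[0.0, 0.9, 0.1],
                               [0.95, 0.05, 0.0]])

cost = cosine_distance(track_features, detection_features)
matches = greedy_match(cost)
print(sorted(matches))  # -> [(0, 1), (1, 0)]
```

Each pair is `(track_index, detection_index)`; here track 0 is associated with detection 1 and track 1 with detection 0 because their feature vectors point in nearly the same direction.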

3.3. LIDAR–Camera Fusion

Sensor fusion can enhance sensing capabilities and reduce costs by exploiting the complementary properties of different sensors. LIDAR provides accurate PTV geometry information but has low resolution and a low frame rate. In contrast, monocular cameras have high frame rates and resolution but have difficulty perceiving 3D geometric information. Camera–LIDAR fusion has therefore received increasing attention in autonomous driving perception [27].

The process of LIDAR–camera fusion is as follows. First, the 3D point cloud is cropped according to the EV’s driving direction. Next, the YOLOv5 detector extracts the PTV’s bounding box from the image. Then, the point cloud is projected into the pixel coordinate system using the joint calibration parameters of the LIDAR and the camera, and the projected points are clustered. After that, the position of the PTV relative to the EV is extracted from the clustered point cloud. Finally, the temporal features of the PTV are associated using the DeepSORT tracker. Figure 2 shows the result of LIDAR–camera fusion.
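The projection and position-extraction steps can be sketched with NumPy. This is a minimal illustration under several assumptions, not the pipeline of the source paper: a pinhole camera with intrinsic matrix `K`, a LIDAR-to-camera rotation `R` and translation `t` taken from calibration, a bounding box given as `(x1, y1, x2, y2)` in pixels, and a median over the points inside the box swapped in for the clustering step. All names and numeric values are hypothetical.

```python
import numpy as np

def project_to_pixels(points_lidar, R, t, K):
    """Project (N, 3) LIDAR points into the image; also flag points in front of the camera."""
    pts_cam = points_lidar @ R.T + t          # LIDAR frame -> camera frame
    in_front = pts_cam[:, 2] > 0              # drop points behind the image plane
    uvw = pts_cam @ K.T                       # pinhole projection (homogeneous pixels)
    z = np.where(in_front, uvw[:, 2], 1.0)    # avoid dividing by non-positive depth
    return uvw[:, :2] / z[:, None], in_front

def ptv_position(points_lidar, bbox, R, t, K):
    """Estimate the PTV position as the median of LIDAR points that fall inside the box."""
    uv, in_front = project_to_pixels(points_lidar, R, t, K)
    x1, y1, x2, y2 = bbox
    inside = (in_front
              & (uv[:, 0] >= x1) & (uv[:, 0] <= x2)
              & (uv[:, 1] >= y1) & (uv[:, 1] <= y2))
    if not inside.any():
        return None
    return np.median(points_lidar[inside], axis=0)

# Assumed frames: LIDAR x forward, y left, z up; camera x right, y down, z forward.
R = np.array([[0.0, -1.0, 0.0],
              [0.0, 0.0, -1.0],
              [1.0, 0.0, 0.0]])
t = np.zeros(3)                               # sensors assumed co-located
K = np.array([[800.0, 0.0, 640.0],
              [0.0, 800.0, 360.0],
              [0.0, 0.0, 1.0]])

points = np.array([[10.0, 0.0, 0.0],          # three returns from the PTV
                   [10.0, 0.5, 0.2],
                   [10.0, -0.5, 0.0],
                   [20.0, 5.0, 0.0],          # roadside object outside the box
                   [-5.0, 0.0, 0.0]])         # point behind the EV
bbox = (580.0, 330.0, 700.0, 390.0)           # hypothetical detector output

position = ptv_position(points, bbox, R, t, K)
print(position)
```

The returned position is expressed in the LIDAR frame, that is, relative to the EV, so it still has to be accumulated over frames to form a historical trajectory.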

Figure 2. The effect of LIDAR–camera fusion.

This entry is adapted from the peer-reviewed paper 10.3390/s22134808
