A half-truth is defined as “a statement that mingles truth and falsehood with deliberate intent to deceive” [19].

Keywords: fake news; half-truth effect; persuasion; misinformation

1. Introduction

“The lie which is half a truth is ever the blackest of lies” [1] (st. 8).

2. History

3. New Discoveries: The Half-Truth Effect

4. Applications: Sustainability and Misinformation

5. Countering the Half-Truth Effect through Poison Parasite Counter

6. Results

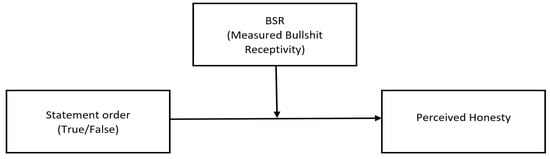

The results show that the half-truth effect enhances belief in misinformation about sustainability and GMOs. Specifically, the order in which true and false facts are presented influences how truthful the combined claim is perceived to be: presenting a true claim first increases the believability of the overall argument, whereas presenting a false claim first reduces it. The half-truth effect is also moderated by individuals’ bullshit receptivity (BSR) [31]. The effect is evident for individuals with low BSR, but not for those with moderate or high BSR. Finally, the Poison Parasite Counter (PPC) debiasing technique [53] reduces belief in false information but does not eliminate the half-truth effect.

This entry is adapted from the peer-reviewed paper 10.3390/su14116943.

References

- Tennyson, L.A. The Grandmother. 1864. Available online: https://collections.vam.ac.uk/item/O198447/the-grandmother-photograph-cameron-julia-margaret/ (accessed on 30 March 2022).

- Lewandowsky, S. Climate Change Disinformation and how to Combat It. Annu. Rev. Public Health 2021, 42, 1–21.

- Treen, K.M.d.; Williams, H.T.P.; O’Neill, S.J. Online Misinformation about Climate Change. Wiley Interdiscip. Rev. Clim. Change 2020, 11, e665.

- Vicario, M.D.; Bessi, A.; Zollo, F.; Petroni, F.; Scala, A.; Caldarelli, G.; Stanley, H.E.; Quattrociocchi, W. The Spreading of Misinformation Online. Proc. Natl. Acad. Sci. USA 2016, 113, 554–559.

- Wang, Y.; McKee, M.; Torbica, A.; Stuckler, D. Systematic Literature Review on the Spread of Health-Related Misinformation on Social Media. Soc. Sci. Med. 2019, 240, 112552.

- Barthel, M.; Mitchell, A.; Holcomb, J. Many Americans Believe Fake News Is Sowing Confusion. 2016. Available online: https://www.pewresearch.org/journalism/2016/12/15/many-americans-believe-fake-news-is-sowing-confusion/ (accessed on 30 March 2022).

- Vosoughi, S.; Roy, D.; Aral, S. The Spread of True and False News Online. Science 2018, 359, 1146–1151.

- Hong, S.C. Presumed Effects of “Fake News” on the Global Warming Discussion in a Cross-Cultural Context. Sustainability 2020, 12, 2123.

- Kim, S.; Kim, S. The Crisis of Public Health and Infodemic: Analyzing Belief Structure of Fake News about COVID-19 Pandemic. Sustainability 2020, 12, 9904.

- Ries, M. The COVID-19 Infodemic: Mechanism, Impact, and Counter-Measures—A Review of Reviews. Sustainability 2022, 14, 2605.

- Scheufele, D.A.; Krause, N.M. Science Audiences, Misinformation, and Fake News. Proc. Natl. Acad. Sci. USA 2019, 116, 7662–7669.

- De Sousa, Á.F.L.; Schneider, G.; de Carvalho, H.E.F.; de Oliveira, L.B.; Lima, S.V.M.A.; de Sousa, A.R.; de Araújo, T.M.E.; Camargo, E.L.S.; Oriá, M.O.B.; Ramos, C.V.; et al. COVID-19 Misinformation in Portuguese-Speaking Countries: Agreement with Content and Associated Factors. Sustainability 2021, 14, 235.

- Farrell, J.; McConnell, K.; Brulle, R. Evidence-Based Strategies to Combat Scientific Misinformation. Nat. Clim. Change 2019, 9, 191–195.

- Larson, H.J. The Biggest Pandemic Risk? Viral Misinformation. Nature 2018, 562, 309.

- Loomba, S.; de Figueiredo, A.; Piatek, S.J.; de Graaf, K.; Larson, H.J. Measuring the Impact of COVID-19 Vaccine Misinformation on Vaccination Intent in the UK and USA. Nat. Hum. Behav. 2021, 5, 337–348.

- Van Der Linden, S.; Maibach, E.; Cook, J.; Leiserowitz, A.; Lewandowsky, S. Inoculating Against Misinformation. Science 2017, 358, 1141–1142.

- Cacciatore, M.A. Misinformation and Public Opinion of Science and Health: Approaches, Findings, and Future Directions. Proc. Natl. Acad. Sci. USA 2021, 118, 1.

- Charlton, E. Fake News: What It Is, and How to Spot It. 2019. Available online: https://europeansting.com/2019/03/06/fake-news-what-it-is-and-how-to-spot-it/ (accessed on 30 March 2022).

- Merriam-Webster. Half-Truth. In Merriam-Webster.com Dictionary. 2022. Available online: https://www-merriam-webster-com.uc.idm.oclc.org/dictionary/half-truth (accessed on 30 March 2022).

- Xarhoulacos, C.; Anagnostopoulou, A.; Stergiopoulos, G.; Gritzalis, D. Misinformation Vs. Situational Awareness: The Art of Deception and the Need for Cross-Domain Detection. Sensors 2021, 21, 5496.

- Van Bavel, J.J.; Pereira, A. The Partisan Brain: An Identity-Based Model of Political Belief. Trends Cogn. Sci. 2018, 22, 213–224.

- Lerner, J.S.; Small, D.A.; Loewenstein, G. Heart Strings and Purse Strings: Carryover Effects of Emotions on Economic Decisions. Psychol. Sci. 2004, 15, 337–341.

- Kruglanski, A.W.; Shah, J.Y.; Pierro, A.; Mannetti, L. When Similarity Breeds Content: Need for Closure and the Allure of Homogeneous and Self-Resembling Groups. J. Pers. Soc. Psychol. 2002, 83, 648–662.

- Priester, J.R.; Petty, R.E. Source Attributions and Persuasion: Perceived Honesty as a Determinant of Message Scrutiny. Personal. Soc. Psychol. Bull. 1995, 21, 637–654.

- Priester, J.R.; Petty, R.E. The Influence of Spokesperson Trustworthiness on Message Elaboration, Attitude Strength, and Advertising Effectiveness. J. Consum. Psychol. 2003, 13, 408–421.

- Kruglanski, A.W.; Webster, D.M. Motivated Closing of the Mind: “Seizing” and “Freezing”. Psychol. Rev. 1996, 103, 263–283.

- Roets, A.; Kruglanski, A.W.; Kossowska, M.; Pierro, A.; Hong, Y. The Motivated Gatekeeper of our Minds: New Directions in Need for Closure Theory and Research. In Advances in Experimental Social Psychology; Elsevier Science & Technology: Waltham, MA, USA, 2015; Volume 52, pp. 221–283.

- Wyer, R.S.; Xu, A.J.; Shen, H. The Effects of Past Behavior on Future Goal-Directed Activity. In Advances in Experimental Social Psychology; Elsevier Science & Technology: Waltham, MA, USA, 2012; Volume 46, pp. 237–283.

- Xu, A.J.; Wyer, R.S. The Role of Bolstering and Counterarguing Mind-Sets in Persuasion. J. Consum. Res. 2012, 38, 920–932.

- Berg, J.; Dickhaut, J.; McCabe, K. Trust, Reciprocity, and Social History. Games Econ. Behav. 1995, 10, 122–142.

- Pennycook, G.; Cheyne, J.A.; Barr, N.; Koehler, D.J.; Fugelsang, J.A. On the Reception and Detection of Pseudo-Profound Bullshit. Judgm. Decis. Mak. 2015, 10, 549–563.

- Dechêne, A.; Stahl, C.; Hansen, J.; Wänke, M. The Truth about the Truth: A Meta-Analytic Review of the Truth Effect. Personal. Soc. Psychol. Rev. 2010, 14, 238–257.

- Hasher, L.; Goldstein, D.; Toppino, T. Frequency and the Conference of Referential Validity. J. Verbal Learn. Verbal Behav. 1977, 16, 107–112.

- Fazio, L.K.; Pillai, R.M.; Patel, D. The Effects of Repetition on Belief in Naturalistic Settings. J. Exp. Psychol. Gen. 2022.

- Brown, A.S.; Nix, L.A. Turning Lies into Truths: Referential Validation of Falsehoods. J. Exp. Psychol. Learn. Mem. Cogn. 1996, 22, 1088–1100.

- Begg, I.M.; Anas, A.; Farinacci, S. Dissociation of Processes in Belief: Source Recollection, Statement Familiarity, and the Illusion of Truth. J. Exp. Psychol. Gen. 1992, 121, 446–458.

- Reber, R.; Schwarz, N. Effects of Perceptual Fluency on Judgments of Truth. Conscious. Cogn. 1999, 8, 338–342.

- Alter, A.L.; Oppenheimer, D.M. Uniting the Tribes of Fluency to Form a Metacognitive Nation. Personal. Soc. Psychol. Rev. 2009, 13, 219–235.

- Fazio, L.K. Repetition Increases Perceived Truth Even for Known Falsehoods. Collabra Psychol. 2020, 6, 38.

- Hassan, A.; Barber, S.J. The Effects of Repetition Frequency on the Illusory Truth Effect. Cogn. Res. Princ. Implic. 2021, 6, 38.

- Gigerenzer, G. External Validity of Laboratory Experiments: The Frequency-Validity Relationship. Am. J. Psychol. 1984, 97, 185–195.

- Pennycook, G.; Cannon, T.D.; Rand, D.G. Prior Exposure Increases Perceived Accuracy of Fake News. J. Exp. Psychol. Gen. 2018, 147, 1865–1880.

- Fazio, L.K.; Brashier, N.M.; Payne, B.K.; Marsh, E.J. Knowledge does Not Protect Against Illusory Truth. J. Exp. Psychol. Gen. 2015, 144, 993–1002.

- Cook, J.; Oreskes, N.; Doran, P.T.; Anderegg, W.R.L.; Verheggen, B.; Maibach, E.W.; Carlton, J.S.; Lewandowsky, S.; Skuce, A.G.; Green, S.A.; et al. Consensus on Consensus: A Synthesis of Consensus Estimates on Human-Caused Global Warming. Environ. Res. Lett. 2016, 11, 048002.

- Marlon, J.; Neyens, L.; Jefferson, M.; Howe, P.; Mildenberger, M.; Leiserowitz, A. Yale Climate Opinion Maps 2021. 2022. Available online: https://climatecommunication.yale.edu/visualizations-data/ycom-us/ (accessed on 30 March 2022).

- Butters, C. Myths and Issues about Sustainable Living. Sustainability 2021, 13, 7521.

- Piñeiro, V.; Arias, J.; Dürr, J.; Elverdin, P.; Ibáñez, A.M.; Kinengyere, A.; Opazo, C.M.; Owoo, N.; Page, J.R.; Prager, S.D. A Scoping Review on Incentives for Adoption of Sustainable Agricultural Practices and their Outcomes. Nat. Sustain. 2020, 3, 809–820.

- Teklewold, H.; Kassie, M.; Shiferaw, B. Adoption of Multiple Sustainable Agricultural Practices in Rural Ethiopia. J. Agric. Econ. 2013, 64, 597–623.

- Mannion, A.M.; Morse, S. Biotechnology in Agriculture: Agronomic and Environmental Considerations and Reflections Based on 15 Years of GM Crops. Prog. Phys. Geogr. 2012, 36, 747–763.

- Zilberman, D.; Holland, T.G.; Trilnick, I. Agricultural GMOs-What We Know and Where Scientists Disagree. Sustainability 2018, 10, 1514.

- Kamal, A.; Al-Ghamdi, S.G.; Koc, M. Revaluing the Costs and Benefits of Energy Efficiency: A Systematic Review. Energy Res. Soc. Sci. 2019, 54, 68–84.

- Pew Research Center. Genetically Modified Foods (GMOs) and Views on Food Safety; Pew Research Center: Washington, DC, USA, 2015.

- Cialdini, R.B.; Lasky-Fink, J.; Demaine, L.J.; Barrett, D.W.; Sagarin, B.J.; Rogers, T. Poison Parasite Counter: Turning Duplicitous Mass Communications into Self-Negating Memory-Retrieval Cues. Psychol. Sci. 2021, 32, 1811–1829.

- Ecker, U.K.H.; Lewandowsky, S.; Jayawardana, K.; Mladenovic, A. Refutations of Equivocal Claims: No Evidence for an Ironic Effect of Counterargument Number. J. Appl. Res. Mem. Cogn. 2019, 8, 98–107.

- Wahlheim, C.N.; Alexander, T.R.; Peske, C.D. Reminders of Everyday Misinformation Statements can Enhance Memory for and Beliefs in Corrections of those Statements in the Short Term. Psychol. Sci. 2020, 31, 1325–1339.

- Autry, K.S.; Duarte, S.E. Correcting the Unknown: Negated Corrections may Increase Belief in Misinformation. Appl. Cogn. Psychol. 2021, 35, 960–975.