

When a natural disaster occurs, humanitarian organizations need to be prompt, effective, and efficient to support people whose security is threatened. Satellite imagery offers rich and reliable information to support expert decision-making, yet its annotation remains labour-intensive and tedious.

- damage assessment

- transfer learning

- deep learning

- convolutional neural networks

1. Introduction

For decades, humanitarian agencies have been developing robust processes to respond effectively when natural disasters occur. As soon as an event happens, processes are triggered and resources are deployed to assist and relieve the affected population. Nevertheless, from a hurricane in the Caribbean to a heavy flood in Africa, every catastrophe is different, requiring organizations to adapt as quickly as possible to support the affected population in the field. Hence, efficient yet flexible operations are essential to the success of humanitarian organizations.

Humanitarian agencies can leverage machine learning to automate traditionally labour-intensive tasks and speed up their crisis relief response. However, to assist decision-making in an emergency context, humans and machine learning models are no different: both need to adjust quickly to the new disaster. Climate conditions, construction types, and the types of damage caused by the event may differ from those encountered in the past. Nonetheless, the response must be sharp and attuned to the current situation. Hence, a model must learn from past disaster events what damaged buildings look like, but it should first and foremost adapt to the environment revealed by the new disaster.

Damage assessment is the preliminary evaluation of damage in the event of a natural disaster, intended to inform decision-makers about the impact of the incident [1]. This work focuses on building damage assessment. Damaged buildings are strong indicators of the humanitarian consequences of the hazard: they mark where people need immediate assistance. In this work, we address building damage assessment using machine learning techniques and remote sensing imagery. We train a neural network to automatically locate buildings in satellite images and assess any damage.

Given the emergency context, a model trained on images of past disaster events should be able to generalize to images from the current one; the difficulty lies in the distribution shift between these past disaster events and the current disaster. A distribution describes observation samples in a given space; here, it is influenced by many factors such as the location, nature, and strength of the natural hazard.

Neural networks are known to perform well when the training and testing samples are drawn from the same distribution; however, they fail to generalize under large distribution shifts [2]. Implementing machine learning solutions in real-world humanitarian applications is extremely challenging because of the domain gaps between different disasters. These gaps are caused by multiple factors such as the disaster’s location, the type of damage, the season, and the climate. In fact, we show that training a model with supervision on past disaster event images is not sufficient to guarantee good performance on a new disaster event, given the problem’s high variability. Moreover, given the urgency in which the model must operate, we limit the number of human-annotated labels produced for the new disaster. We thus suggest an approach where the model first learns generic features from many past disaster events and then adapts to features specific to the current disaster. This technique is known as transfer learning.
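The pre-train then fine-tune idea can be illustrated with a deliberately tiny sketch. It assumes a one-feature logistic classifier as a stand-in for the neural network and uses synthetic data: a hypothetical damage score `x` whose decision threshold is 0.3 in past events but shifts to 0.7 in the new one. None of these values come from the study.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sgd(w, b, samples, lr=0.5, epochs=200):
    """Stochastic gradient descent on the logistic (cross-entropy) loss."""
    for _ in range(epochs):
        for x, y in samples:
            err = sigmoid(w * x + b) - y  # derivative of the loss w.r.t. the logit
            w -= lr * err * x
            b -= lr * err
    return w, b


def accuracy(w, b, samples):
    return sum((w * x + b > 0) == bool(y) for x, y in samples) / len(samples)


# Hypothetical past disasters: many labels, "damaged" when x > 0.3.
past = [(i / 20, int(i / 20 > 0.3)) for i in range(21)]
# Hypothetical new disaster: only seven labels, and the boundary moved to 0.7.
new_train = [(x, int(x > 0.7)) for x in (0.1, 0.2, 0.4, 0.5, 0.6, 0.8, 0.9)]
# Held-out buildings of the new disaster, used only for evaluation.
new_test = [(i / 20, int(i / 20 > 0.7)) for i in range(21)]

w, b = sgd(0.0, 0.0, past)             # pre-training on past events
before = accuracy(w, b, new_test)      # direct transfer, no new labels
w_ft, b_ft = sgd(w, b, new_train)      # fine-tuning from the pre-trained weights
after = accuracy(w_ft, b_ft, new_test)
print(f"accuracy on the new event: {before:.2f} -> {after:.2f}")
```

Pre-training alone places the boundary where past events had it, so borderline buildings of the new event are misclassified; fine-tuning on a handful of new labels moves the boundary, which is what makes transfer learning attractive when annotation time is scarce.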

2. Damage Assessment

In this work, we study the damage assessment task from satellite imagery. Damage assessment may also be based on ground observations; however, satellite images offer a safe, scalable, and predictable alternative source of information.

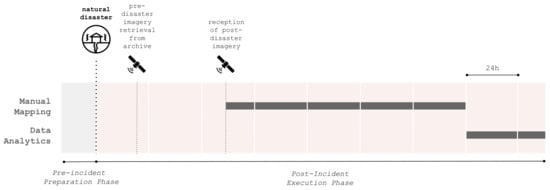

Damage assessment should be conducted as rapidly as possible after a natural disaster strikes. However, the process can only really begin once the post-disaster satellite images have been received. This critical delay typically ranges from a few hours to several days, the longer delays occurring when meteorological conditions prevent image capture. When conditions allow, post-disaster satellite images are quickly shared through strategic partnerships between Earth observation providers and humanitarian organizations. The delay to retrieve pre-disaster images is typically shorter: the data already exist and only need to be retrieved from archives and shared. Upon receipt of the satellite images, the goal is to produce an initial damage assessment report as rapidly as possible. This process includes two main steps: mapping and data analytics (Figure 1).

Figure 1. Building damage assessment incident workflow. The post-incident execution phase is triggered by a natural disaster but is only initiated upon retrieval of post-disaster satellite images from an imagery archive. Those images are then used to produce maps and analyzed to produce a damage assessment report. The duration of each task is approximate and depends upon many external factors.

Mapping is the backbone task of the damage assessment process. It consists of locating buildings in satellite imagery and tagging those that are damaged according to a predefined scale. Large devastated areas may need to be processed to find damaged structures. Maps can then be used as-is by field workers seeking precise information, or further analyzed to inform decision-making. They include critical information, such as the density of damaged buildings in a given area.
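The density of damaged buildings can be derived from building-level predictions with a simple grid aggregation. This is a minimal sketch, assuming each building is reduced to a projected centroid in metres, a hypothetical 100 m cell size, and xBD-style label strings in which every class other than `no-damage` counts as damaged.

```python
from collections import defaultdict

# Assumed xBD-style label strings; every class except "no-damage" counts as damaged.
DAMAGED = {"minor-damage", "major-damage", "destroyed"}


def damage_density(buildings, cell_size=100.0):
    """Aggregate building-level predictions into square grid cells.

    buildings: iterable of (x, y, label), where x and y are hypothetical
    projected centroid coordinates in metres.
    Returns {(col, row): (n_buildings, n_damaged, fraction_damaged)}.
    """
    counts = defaultdict(lambda: [0, 0])
    for x, y, label in buildings:
        cell = (int(x // cell_size), int(y // cell_size))
        counts[cell][0] += 1
        counts[cell][1] += label in DAMAGED  # True adds 1, False adds 0
    return {cell: (n, d, d / n) for cell, (n, d) in counts.items()}


grid = damage_density([
    (10, 20, "no-damage"),
    (50, 80, "destroyed"),
    (150, 30, "minor-damage"),
    (160, 40, "no-damage"),
    (170, 90, "major-damage"),
])
print(grid[(0, 0)])  # (2, 1, 0.5)
```

A per-cell fraction rather than a raw count keeps dense urban cells from dominating the map purely because they contain more buildings.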

The data analytics step combines the raw maps of damaged buildings with other sources of demographic information to inform decision-making. It takes into account the specific characteristics of the disaster event to organize an appropriate and dedicated response. For instance, demographic data may indicate whether the disaster affected a vulnerable population, in which case the need for food assistance is even greater.

3. Related Works

Satellite images contain highly valuable information about our planet. They can inform and support decision-making about global issues, from climate change [3] to water sustainability [4], food security [5], and urban planning [6]. Many applications, such as fire detection [7], land use monitoring [8], and disaster assistance [9], utilize remote sensing imagery.

The task of damage assessment can be decoupled into two separate tasks: building detection and damage classification. Many approaches to building detection have been presented recently; in the literature, the task is typically framed as semantic segmentation.
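One common way to combine the two sub-tasks into building-level outputs is to group the pixels of a predicted building mask into connected components and give each component the majority damage class of its pixels. The sketch below assumes both maps are already predicted as nested lists of integers, with a hypothetical encoding (0 = background, 1 = no damage up to 4 = destroyed); it illustrates this post-processing and is not the method of any cited work.

```python
from collections import Counter, deque


def label_buildings(mask):
    """Group building pixels (mask value 1) into 4-connected components."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    components = []
    for r in range(h):
        for c in range(w):
            if mask[r][c] and not seen[r][c]:
                seen[r][c] = True
                queue, pixels = deque([(r, c)]), []
                while queue:  # breadth-first flood fill
                    i, j = queue.popleft()
                    pixels.append((i, j))
                    for ni, nj in ((i + 1, j), (i - 1, j), (i, j + 1), (i, j - 1)):
                        if 0 <= ni < h and 0 <= nj < w and mask[ni][nj] and not seen[ni][nj]:
                            seen[ni][nj] = True
                            queue.append((ni, nj))
                components.append(pixels)
    return components


def classify_buildings(mask, damage):
    """Give each detected building the most frequent per-pixel damage class."""
    return [Counter(damage[i][j] for i, j in pixels).most_common(1)[0][0]
            for pixels in label_buildings(mask)]


mask = [[1, 1, 0, 1],
        [1, 1, 0, 1],
        [0, 0, 0, 1]]
damage = [[1, 1, 0, 4],   # hypothetical encoding: 1 = no damage ... 4 = destroyed
          [2, 1, 0, 4],
          [0, 0, 0, 3]]
print(classify_buildings(mask, damage))  # [1, 4]
```

Voting per building smooths out isolated pixel errors and guarantees a single damage label for each detected footprint.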

Refs. [10,11,12] all present variations of fully convolutional networks to detect buildings in aerial images; they differ mostly in the post-processing stages used to improve detection performance. More recently, refs. [13,14] proposed architectures that leverage multi-scale features. In the same direction, refs. [15,16] presented encoder–decoder architectures, an approach that has proven to predict edges more precisely. Finally, ref. [17] not only used a multi-scale encoder–decoder architecture but also introduced a morphological filter to better define building edges.

To help the model recognize buildings in different contexts, ref. [18] proposed a multi-task setup that extracts buildings and classifies the scene (rural, industrial, high-rise urban, etc.) in parallel. Finally, ref. [19] proposed a methodology to update building footprints, which rapidly become outdated in constantly evolving cities. They proposed using pre-change imagery and annotations and updating only 20 percent of the annotations to obtain a complete, up-to-date building footprint.

Data have limited the development of machine learning models for damage assessment, since few suitable public datasets exist. The first works were conducted as case studies, i.e., works that targeted one or a few disaster events to develop and evaluate machine learning approaches.

Cooner et al. [20] used the 2010 Haiti earthquake as a case study to apply machine learning techniques to the detection of damaged buildings in urban areas. Fujita et al. [21] went a step further by applying CNNs to damage assessment using pre- and post-disaster images from the 2011 Japan earthquake. They released the ABCD dataset as part of their work. Sublime and Kalinicheva [22] studied the same disaster by applying change detection techniques. Doshi et al. [23] leveraged two publicly available datasets for building and road detection, SpaceNet [24] and DeepGlobe [25], to develop a building damage detection model. Their approach relies on the relative changes between pre- and post-disaster building segmentation maps.
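A change-detection rule in the spirit of Doshi et al. [23] compares the building area found in the pre- and post-disaster segmentation maps. This sketch assumes binary masks for a single tile and a hypothetical 30 percent loss threshold; the original work’s exact aggregation and threshold may differ.

```python
def building_area(mask):
    """Number of building pixels in a binary segmentation mask."""
    return sum(sum(row) for row in mask)


def relative_change(pre_mask, post_mask):
    """Relative loss of building area between pre- and post-disaster masks."""
    pre = building_area(pre_mask)
    if pre == 0:
        return 0.0  # no building before the event, so nothing can be lost
    return (pre - building_area(post_mask)) / pre


def flag_damage(pre_mask, post_mask, threshold=0.3):
    """Flag a tile when more than `threshold` of its building area vanished.

    The 0.3 default is a hypothetical value chosen for illustration.
    """
    return relative_change(pre_mask, post_mask) > threshold


pre = [[0, 1, 1],
       [0, 1, 1],
       [0, 0, 0]]
post = [[0, 1, 0],
        [0, 1, 0],
        [0, 0, 0]]
print(relative_change(pre, post), flag_damage(pre, post))  # 0.5 True
```

Because only segmentation masks are compared, such a rule needs building-detection training data but no damage labels, which is what made SpaceNet and DeepGlobe usable for this purpose.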

Since then, Gupta et al. [26] have released the xBD dataset, a vast collection of satellite images annotated for building damage assessment. It consists of very-high-resolution (VHR) pre- and post-disaster images from 18 disaster events worldwide, covering a diversity of climates, building types, and disaster types. The dataset is annotated with building polygons classified according to a joint damage scale with four ordinal classes: No damage, Minor damage, Major damage, and Destroyed. A competition was organized along with the dataset release. First place in the challenge went to [27], who proposed a two-step modelling approach composed of a building detector and a damage classifier.
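The ordinal damage scale and the competition scoring can be sketched as follows. The 0.3/0.7 weighting of localization and damage F1, with the damage F1 taken as the harmonic mean of the four per-class F1 scores, is the xView2 scoring scheme as we understand it; treat the exact weights as an assumption to check against the official scoring code.

```python
from enum import IntEnum
from statistics import harmonic_mean


class Damage(IntEnum):
    """The four ordinal xBD damage classes (integer values chosen for illustration)."""
    NO_DAMAGE = 1
    MINOR_DAMAGE = 2
    MAJOR_DAMAGE = 3
    DESTROYED = 4


def f1(tp, fp, fn):
    """F1 score from true positive, false positive and false negative counts."""
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0


def xview2_style_score(localization_f1, per_class_f1):
    """Assumed weighting: 0.3 * localization F1 + 0.7 * damage F1.

    The damage F1 is the harmonic mean of the per-class F1 scores,
    listed in Damage order.
    """
    if len(per_class_f1) != len(Damage):
        raise ValueError("expected one F1 score per damage class")
    damage_f1 = harmonic_mean(per_class_f1)
    return 0.3 * localization_f1 + 0.7 * damage_f1


print(Damage.MINOR_DAMAGE < Damage.MAJOR_DAMAGE)  # True
print(round(xview2_style_score(0.85, [0.9, 0.5, 0.6, 0.8]), 3))  # 0.72
```

Because a harmonic mean collapses towards its smallest term, a model that ignores one rare damage class is heavily penalized; a zero F1 on any class drives the whole damage term to zero.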

The release of the xBD dataset sparked further research in the field. Shao et al. [28] investigated the use of pre- and post-disaster images as well as different loss functions to approach the task. Gupta and Shah [29] and Weber and Kané [30] proposed similar end-to-end per-pixel classification models with multi-temporal fusion. Hao et al. [31] introduced a self-attention mechanism to help the model capture long-range information. Shen et al. [32] studied sophisticated fusion of pre- and post-disaster feature maps, presenting a cross-directional fusion strategy. Finally, Boin et al. [33] proposed upsampling the challenging classes to mitigate the class imbalance of the xBD dataset.
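Upsampling challenging classes can be as simple as duplicating samples from minority classes until the class counts match. The sketch below is generic random oversampling, assuming a list of (item, label) pairs and a fixed seed; it is not necessarily the scheme used by Boin et al. [33].

```python
import random
from collections import Counter


def oversample(samples, seed=0):
    """Duplicate minority-class samples until every class matches the largest one.

    samples: list of (item, label) pairs.
    """
    rng = random.Random(seed)
    by_class = {}
    for item, label in samples:
        by_class.setdefault(label, []).append((item, label))
    target = max(len(group) for group in by_class.values())
    balanced = []
    for group in by_class.values():
        balanced.extend(group)  # keep every original sample once
        balanced.extend(rng.choices(group, k=target - len(group)))  # random duplicates
    rng.shuffle(balanced)
    return balanced


# Hypothetical tile labels with a skewed class distribution.
data = ([(f"tile{i}", "no-damage") for i in range(6)]
        + [("tile6", "minor-damage")]
        + [("tile7", "major-damage"), ("tile8", "major-damage")]
        + [(f"tile{i}", "destroyed") for i in range(9, 12)])
balanced = oversample(data)
print(Counter(label for _, label in balanced))  # every class now has 6 samples
```

Duplicating rare samples equalizes how often each class is seen during training, at the cost of a higher risk of overfitting those few examples; data augmentation is often applied on top to limit this.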

More recently, Khvedchenya and Gabruseva [34] proposed fully convolutional Siamese networks to solve the problem. They performed an ablation study over different architecture hyperparameters and loss functions but did not compare their performance with the state of the art. Xiao et al. [35] and Shen et al. [36] also presented innovative model architectures: the former used a dynamic cross-fusion mechanism (DCFNet) and the latter a multiscale convolutional network with cross-directional attention (BDANet). To our knowledge, DamFormer is the state of the art in terms of model performance on the original xBD test set and metric. It uses a transformer-based CNN architecture: the model learns non-local features from pre- and post-disaster images using a transformer encoder and fuses the information for the downstream dual tasks.
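The defining property of a Siamese network is that the pre- and post-disaster images pass through the same encoder, with shared weights, before their features are fused. In this sketch a single linear layer with ReLU stands in for a convolutional backbone, and fusing by concatenating both embeddings with their absolute difference is one common choice, assumed here rather than taken from [34].

```python
def encode(pixels, weights):
    """Shared encoder: one linear layer + ReLU, standing in for a CNN backbone."""
    return [max(0.0, sum(w * p for w, p in zip(row, pixels))) for row in weights]


def siamese_features(pre, post, weights):
    """Encode both dates with the *same* weights, then fuse the embeddings."""
    f_pre = encode(pre, weights)
    f_post = encode(post, weights)
    diff = [abs(a - b) for a, b in zip(f_pre, f_post)]
    return f_pre + f_post + diff  # concatenation: [pre | post | abs(post - pre)]


weights = [[0.5, -0.2, 0.1],
           [0.3, 0.3, 0.3]]   # hypothetical 2 x 3 weight matrix
pre_patch = [1.0, 0.0, 1.0]   # toy flattened pixel values before the event
post_patch = [0.0, 0.0, 1.0]  # and after the event
fused = siamese_features(pre_patch, post_patch, weights)
print(len(fused))  # 6
```

Sharing weights means both dates are mapped into the same feature space, so the difference term reflects change on the ground rather than differences between two independently trained encoders.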

All of these methods share the same training and testing sets, and hence they can easily be compared. However, we argue that this dataset split does not suit the emergency context well, since the training and test sets share the same distribution. Therefore, it does not show the ability of a model to generalize to an unseen disaster event. In this work, our main objective is to investigate a model’s ability to be trained on different disaster events so that it is ready when a new disaster unfolds.

Some studies focus on developing a specialized model. For example, ref. [37] studied the use of well-established convolutional neural networks and transfer learning techniques to predict building damages in the specific case of hurricane events.

The model’s ability to transfer to a future disaster was first studied by Xu et al. [38]. That work included a data generation pipeline to quantify the model’s ability to generalize to a new disaster event. The study was conducted before the release of xBD and was therefore limited to three disaster events.

Closely aligned with our work, Valentijn et al. [39] evaluated the applicability of CNNs under operational emergency conditions. Their in-depth study of per-disaster performance led them to propose a specialized model for each disaster type. Benson and Ecker [40] highlighted the unrealistic test setting in which damage assessment models were developed and proposed a new formulation based on out-of-domain distribution. They experimented with two domain adaptation techniques, multi-domain AdaBN [41] and stochastic weight averaging [42].
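AdaBN [41] adapts a network to a new domain by re-estimating batch-normalization statistics on target-domain activations while keeping the learned scale and shift. This sketch shows a single normalization step over a list of scalar activations; the multi-domain variant keeps separate statistics per domain, here hypothetically one per disaster event.

```python
from statistics import fmean, pvariance


def batch_norm(values, mean, var, gamma, beta, eps=1e-5):
    """Normalize with the given statistics, then apply the learned scale and shift."""
    return [gamma * (v - mean) / (var + eps) ** 0.5 + beta for v in values]


def adabn(target_activations, gamma, beta):
    """AdaBN: recompute mean and variance on target-domain activations,
    keeping gamma and beta learned on the source domain."""
    mean = fmean(target_activations)
    var = pvariance(target_activations)
    return batch_norm(target_activations, mean, var, gamma, beta)


def multi_domain_adabn(activations_by_domain, gamma, beta):
    """Keep separate statistics per domain (hypothetically, per disaster event)."""
    return {domain: adabn(acts, gamma, beta)
            for domain, acts in activations_by_domain.items()}


source_mean, source_var = 1.5, 1.25  # statistics stored during source training
target = [10.0, 12.0, 14.0, 16.0]    # activations shifted by the new domain
print(batch_norm(target, source_mean, source_var, 1.0, 0.0))  # far from zero mean
print(adabn(target, 1.0, 0.0))  # re-centred around beta = 0
```

No labels are needed for this adaptation: a forward pass over unlabelled target images is enough to collect the new statistics, which suits the emergency setting. In a full network, every batch-normalization layer is updated this way.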

The use of CNNs in the emergency context is also thoroughly discussed by Nex et al. [43], who evaluated the transferability and computational time needed to assess damage in an emergency context. This extensive study was conducted on heterogeneous sources of data, including both drone and satellite images.

To our knowledge, Lee et al. [44] were the first to successfully apply a semi-supervised technique that leverages unlabelled data to damage assessment. They compared fully supervised approaches with the MixMatch [45] and FixMatch [46] semi-supervised techniques. Their study, limited to three disaster events, showed promising results. Xia et al. [47] applied emerging self-positive-unlabelled learning techniques (known as self-PU learning). The approach proved effective when tested on the ABCD dataset and two selected disasters from the xBD dataset (Palu tsunami and Hurricane Michael).
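FixMatch [46] trains on unlabelled images by turning confident predictions on a weakly augmented view into hard pseudo-labels and enforcing them on a strongly augmented view of the same image. This sketch computes only that unlabelled loss term from already predicted class probabilities; the 0.95 confidence threshold is the default reported for FixMatch, and the settings used by Lee et al. [44] may differ.

```python
import math


def fixmatch_unlabelled_loss(weak_probs, strong_probs, threshold=0.95):
    """FixMatch-style loss on a batch of unlabelled images.

    weak_probs[i] / strong_probs[i]: predicted class probabilities for a weakly
    and a strongly augmented view of the same unlabelled image.
    """
    total = 0.0
    for p_weak, p_strong in zip(weak_probs, strong_probs):
        confidence = max(p_weak)
        if confidence >= threshold:  # only confident predictions become pseudo-labels
            pseudo_label = p_weak.index(confidence)
            total -= math.log(p_strong[pseudo_label])  # cross-entropy with the hard label
    return total / len(weak_probs)  # averaged over the whole unlabelled batch


# Four damage classes; only the first image is confident enough to be used.
weak = [[0.97, 0.01, 0.01, 0.01], [0.50, 0.30, 0.10, 0.10]]
strong = [[0.80, 0.10, 0.05, 0.05], [0.25, 0.25, 0.25, 0.25]]
print(fixmatch_unlabelled_loss(weak, strong))
```

The high threshold keeps uncertain images out of the loss early in training; as the model improves, more unlabelled images pass the threshold and contribute.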

Ismail and Awad [48] proposed a novel approach based on a graph convolutional network that incorporates knowledge of similar neighbouring buildings when the model makes a prediction. They introduced this technique to help cross-disaster generalization in the time-limited setting that follows a natural disaster.
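The intuition of sharing information between neighbouring buildings can be sketched with one mean-aggregation graph convolution over a k-nearest-neighbour graph of building centroids. This is a simplified graph convolution written for illustration; the graph construction and layers used by Ismail and Awad [48] are not reproduced here, and the features and weights are hypothetical.

```python
import math


def knn_graph(centroids, k=2):
    """Connect each building to its k nearest neighbours by centroid distance."""
    neighbours = []
    for i, (xi, yi) in enumerate(centroids):
        dists = sorted((math.hypot(xi - xj, yi - yj), j)
                       for j, (xj, yj) in enumerate(centroids) if j != i)
        neighbours.append([j for _, j in dists[:k]])
    return neighbours


def graph_conv(features, neighbours, weight):
    """One mean-aggregation layer: h_i' = ReLU(W @ mean(h_i, h_neighbours))."""
    out = []
    for i, nbrs in enumerate(neighbours):
        group = [features[i]] + [features[j] for j in nbrs]  # self-loop + neighbours
        mean = [sum(col) / len(group) for col in zip(*group)]
        out.append([max(0.0, sum(w * m for w, m in zip(row, mean))) for row in weight])
    return out


centroids = [(0.0, 0.0), (1.0, 0.0), (10.0, 10.0)]
features = [[1.0], [3.0], [5.0]]  # hypothetical one-dimensional building features
nbrs = knn_graph(centroids, k=1)
print(nbrs)  # [[1], [0], [1]]
print(graph_conv(features, nbrs, [[1.0]]))  # [[2.0], [2.0], [4.0]]
```

Each building’s representation is pulled towards its neighbours’, so an ambiguous building surrounded by clearly damaged ones inherits part of that evidence.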

The domain gap and the difficulty of gathering annotations were acknowledged by Kuzin et al. [49]. The study proposed using crowdsourced point labels instead of polygons to reduce annotation time. They also presented a methodology to aggregate inconsistent labels across crowdworkers.
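A simple baseline for aggregating inconsistent crowdsourced labels is a per-building majority vote. This sketch assumes labels are grouped by a hypothetical building identifier and drops buildings without a strict majority; Kuzin et al. [49] may use a more elaborate aggregation.

```python
from collections import Counter


def aggregate_point_labels(votes, min_agreement=0.5):
    """Majority vote over crowdworker labels for each building point.

    votes: {building_id: [label, ...]} collected from different crowdworkers.
    Returns {building_id: label}, omitting buildings whose winning label does
    not gather strictly more than `min_agreement` of the votes.
    """
    consensus = {}
    for building, labels in votes.items():
        label, count = Counter(labels).most_common(1)[0]
        if count / len(labels) > min_agreement:
            consensus[building] = label
    return consensus


votes = {
    "b1": ["destroyed", "destroyed", "major-damage"],
    "b2": ["no-damage", "minor-damage"],  # tie: no strict majority
    "b3": ["no-damage"],
}
print(aggregate_point_labels(votes))  # {'b1': 'destroyed', 'b3': 'no-damage'}
```

Buildings left without consensus can be routed back to annotators, which concentrates human effort where crowdworkers disagree.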

In parallel, Anand and Miura [50] proposed a model that predicts hazard damage before the event, allowing humanitarian organizations to prepare their resources and be ready to respond. They used building footprints and predicted the damage locations and severity for different hazard scenarios. Presa-Reyes and Chen [51] suggested that building footprint information is a noisy source of information. To alleviate the impact of this noise, they introduced a noise reduction mechanism that embeds this premise into training.

Finally, in this work, we focus on automatic damage assessment from satellite images, and more specifically on very-high-resolution imagery. Other works have instead investigated the use of drone images [52,53], multi-sensor satellite images [54], social media images [55], and a mix of multiple data sources [56]. Recently, Weber et al. [57] shared a new large-scale, open-source dataset of natural images from natural disasters and other incidents. Detecting damage in natural images has many applications, for instance when exploiting crowd-sourced information from social media.

It is clear that the xBD dataset [26] boosted research in the field of building damage assessment after natural disasters, and more specifically research using deep learning techniques. The dataset is undoubtedly important; before its creation, data were a major constraint on any research and development. It was introduced along with a traditional machine learning competition to find the best architecture. For the competition, the dataset, which contains images from 18 different disaster events, was randomly split into training and test sets. These training and test sets remain the leading procedure to compare models and define the state of the art.

However, we argue that this setting does not measure the capacity of a given model to replace humans and effectively assess damage in an emergency context. In fact, the training and test sets share the same distribution. This layout is not possible after a natural disaster: the distribution of images from the event that just happened is unknown and is therefore not guaranteed to match the training distribution. This domain gap can lead to generalization issues that should be quantified.

In this work, we propose to modify the dataset split. All images, except those associated with a single disaster event, are used for training. Testing is performed on the images of the set-apart disaster event. This procedure ensures that the test set remains unseen during training, so that the resulting score measures the model’s effective performance on the new distribution. We also extend this procedure to all 18 disaster events: one by one, each disaster event is set apart for testing. To our knowledge, no other work has run such an extensive study to quantify a model’s ability to generalize to a new disaster event.
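The proposed split is a leave-one-disaster-out scheme. A minimal sketch, assuming each image tile is tagged with the name of its disaster event (the tile identifiers and event names below are illustrative):

```python
def leave_one_disaster_out(tiles):
    """Yield (held_out_event, train_ids, test_ids) for every disaster event.

    tiles: list of (tile_id, event_name). For each event, its tiles form the
    test set and the tiles of all other events form the training set.
    """
    events = sorted({event for _, event in tiles})
    for held_out in events:
        train = [tile for tile, event in tiles if event != held_out]
        test = [tile for tile, event in tiles if event == held_out]
        yield held_out, train, test


tiles = [
    ("t1", "hurricane-michael"),
    ("t2", "palu-tsunami"),
    ("t3", "hurricane-michael"),
    ("t4", "santa-rosa-wildfire"),
]
for event, train, test in leave_one_disaster_out(tiles):
    print(event, train, test)
```

With the 18 xBD events, the generator yields 18 train/test pairs, and no tile of the held-out event ever appears in its own training set.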

This entry is adapted from the peer-reviewed paper 10.3390/rs14112532
