Emotions play a critical role in our daily lives, so the understanding and recognition of emotional responses are crucial for human research. Affective computing research has mostly used non-immersive two-dimensional (2D) images or videos to elicit emotional states. However, immersive virtual reality, which allows researchers to simulate environments in controlled laboratory conditions with high levels of sense of presence and interactivity, is becoming more popular in emotion research. Moreover, its synergy with implicit measurements and machine-learning techniques could have a cross-cutting impact on many research areas, opening new opportunities for the scientific community. This paper presents a systematic review of the emotion recognition research undertaken with physiological and behavioural measures using head-mounted displays as elicitation devices. The results highlight the evolution of the field, give a clear perspective using aggregated analysis, reveal the current open issues and provide guidelines for future research.

- emotion recognition

- virtual reality

- affective computing


Definition:

Emotions play a critical role in our daily lives, so the understanding and recognition of emotional responses are crucial for human research. Affective computing research has mostly used non-immersive two-dimensional (2D) images or videos to elicit emotional states. However, immersive virtual reality, which allows researchers to simulate environments in controlled laboratory conditions with high levels of sense of presence and interactivity, is becoming more popular in emotion research. Moreover, its synergy with implicit measurements and machine-learning techniques could have a cross-cutting impact on many research areas, opening new opportunities for the scientific community.

1. Introduction

Emotions play an essential role in rational decision-making, perception, learning and a variety of other functions that affect both human physiological and psychological status [1]. Therefore, understanding and recognising emotions are very important aspects of human behaviour research. To study human emotions, affective states need to be evoked in laboratory environments using elicitation methods such as images, audio, videos and, more recently, virtual reality (VR). VR has grown in popularity in recent years in both scientific and commercial contexts [2], with general applications in gaming, training, education, health and marketing. This growth has been driven by a new generation of low-cost headsets, which has democratised the purchase of head-mounted displays (HMDs) worldwide [3]. Nonetheless, VR has been used in research since the 1990s [4]. The scientific interest in VR stems from its ability to provide simulated experiences that create the sensation of being in the real world [5]. In particular, environmental simulations are representations of physical environments that allow researchers to analyse reactions to common concepts [6]. They are especially important when what they depict cannot be physically represented. VR makes it possible to study these scenarios under controlled laboratory conditions [7]. Moreover, VR allows variables to be isolated and modified in a time- and cost-effective way, which would be unfeasible in real space [8].

2. Emotions Analysed

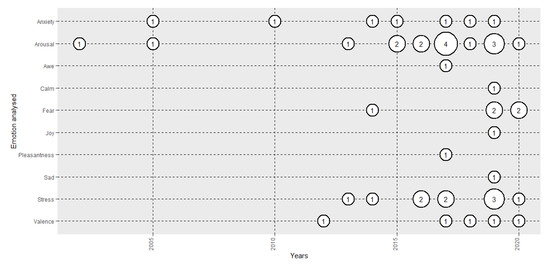

Figure 1 depicts the evolution in the number of papers analysed in the review based on the emotion under analysis. Until 2015, the majority of the papers analysed arousal-related emotions, mostly arousal, anxiety and stress. From that year, some experiments started to analyse valence-related emotions, such as valence, joy, pleasantness and sadness, but the analysis of arousal-related emotions still predominated. Around half of the studies used the dimensions of the circumplex model of affect (CMA) (arousal 38.1% [54] and valence 11.9% [125]), while the rest used basic or complex emotions (stress 23.8% [112], anxiety 16.7% [109], fear 11.9% [43], awe 2.4% [121], calmness 2.4% [135], joy 2.4% [135], pleasantness 2.4% [64] and sadness 2.4% [135]). Because a single study could analyse more than one emotion, these percentages do not sum to 100%.

Figure 1. Evolution of the number of papers published each year based on emotion analysed.

3. Implicit Techniques, Features Used and Participants

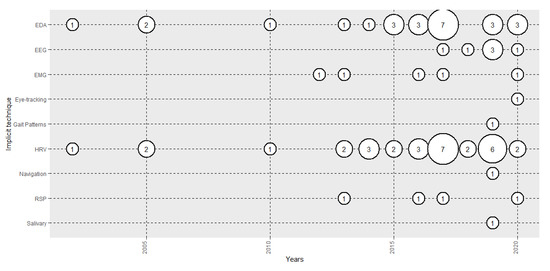

Figure 2 shows the evolution of the number of papers analysed in terms of the implicit measures used. The majority used heart rate variability (HRV) (73.8%) and electrodermal activity (EDA) (59.5%); therefore, most studies analysed emotions through the autonomic nervous system (ANS). However, most of the studies that used HRV extracted only a few time-domain features, such as heart rate (HR) [115,120]. Very few used frequency-domain features, such as high-frequency (HF) power, low-frequency (LF) power or the HF/LF ratio [119,126], and two used non-linear features, such as entropy and Poincaré plot measures [65,105]. Of the studies that used EDA, the majority used total skin conductance (SC) [116], while some used its tonic component (skin conductance level, SCL) [54] or phasic component (skin conductance responses, SCR) [124]. In recent years, the use of electroencephalography (EEG) has increased, with six papers published (14.3%), so central nervous system (CNS) measures have started to be combined with HMDs to recognise emotions. The EEG analyses used include event-related potentials (ERP) [138], power spectral density [140] and functional connectivity [65]. Electromyography (EMG) (11.9%) and respiration (RSP) (9.5%) were also used, mostly in combination with HRV. Other implicit measures included eye-tracking, gait patterns, navigation and salivary cortisol responses. The mean number of participants varied with the signal: 75.34 (σ = 73.57) for EDA, 68.58 (σ = 68.35) for HRV and 33.67 (σ = 21.80) for EEG.

Figure 2. Evolution of the number of papers published each year based on the implicit measure used.
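To make the HRV and EDA feature families above more concrete, here is a minimal Python sketch using only the standard library. It assumes a clean series of RR intervals in milliseconds and a uniformly sampled SC series. The function names `hrv_features` and `split_eda`, the moving-average window and the sample values are hypothetical and are not taken from the reviewed studies. The sketch computes mean HR, SDNN and RMSSD (time domain) and the Poincaré descriptors SD1/SD2 (non-linear). Frequency-domain LF and HF power are omitted because they require resampling and a spectral estimator. The EDA split is deliberately crude: a centred moving average stands in for the tonic (SCL-like) component and the residual for the phasic (SCR-like) component. Dedicated decomposition methods are more appropriate for real recordings.

```python
import math
from statistics import fmean, pstdev, stdev


def hrv_features(rr_ms):
    """Time-domain and Poincaré HRV features from RR intervals (ms)."""
    pairs = list(zip(rr_ms, rr_ms[1:]))          # successive (RR_n, RR_n+1)
    diffs = [b - a for a, b in pairs]
    # Poincaré plot: rotate each (RR_n, RR_n+1) point by 45 degrees.
    across = [(b - a) / math.sqrt(2) for a, b in pairs]
    along = [(b + a) / math.sqrt(2) for a, b in pairs]
    return {
        "mean_hr_bpm": 60000 / fmean(rr_ms),     # mean heart rate
        "sdnn_ms": stdev(rr_ms),                 # overall variability
        "rmssd_ms": math.sqrt(fmean([d * d for d in diffs])),  # beat-to-beat
        "sd1_ms": pstdev(across),                # short-term (Poincaré width)
        "sd2_ms": pstdev(along),                 # long-term (Poincaré length)
    }


def split_eda(sc, window):
    """Crude tonic/phasic split of a skin-conductance series.

    Tonic (SCL-like): centred moving average over `window` samples,
    truncated at the edges. Phasic (SCR-like): the residual.
    """
    half = window // 2
    tonic = [fmean(sc[max(0, i - half):i + half + 1]) for i in range(len(sc))]
    phasic = [s - t for s, t in zip(sc, tonic)]
    return tonic, phasic


if __name__ == "__main__":
    rr = [800, 810, 790, 805, 795]               # hypothetical RR intervals (ms)
    print(hrv_features(rr))
    sc = [2.0, 2.0, 2.1, 2.9, 2.6, 2.2, 2.1]     # hypothetical SC samples (µS)
    tonic, phasic = split_eda(sc, window=5)
    print([round(p, 2) for p in phasic])
```

Most of the reviewed HRV studies stopped at features like the first three. SD1 and SD2 are an example of the non-linear Poincaré descriptors that only a couple of studies reported.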

4. Data Analysis

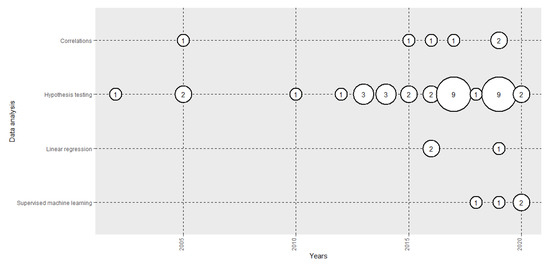

Figure 3 shows the evolution of the number of papers published in terms of the data analysis performed. The vast majority analysed the subjects' implicit responses in different emotional states using hypothesis testing (83.33%), correlations (14.29%) or linear regression (4.76%). However, in recent years, supervised machine-learning algorithms (11.90%), such as support vector machines (SVM) [105], Random Forest [139] and k-nearest neighbours (kNN) [140], have been introduced to build automatic emotion recognition models. They have been used in combination with EEG [65], HRV [105] and EDA [140].

Figure 3. Evolution of the number of papers published each year by data analysis method used.
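As an illustration of the shift from hypothesis testing to supervised recognition models, the sketch below implements kNN, one of the algorithms named above, from scratch in Python. It also adds two steps such models usually need: z-scoring the features so that HR (in bpm) and SC (in µS) contribute on comparable scales, and leave-one-out evaluation. The features, labels and values are hypothetical toy data invented for illustration, not results from any reviewed study, and the function names are likewise hypothetical. In practice, a library such as scikit-learn provides `KNeighborsClassifier`, `SVC` and `RandomForestClassifier`.

```python
import math
from collections import Counter
from statistics import fmean, pstdev


def fit_scaler(X):
    """Per-feature mean and population std (1.0 if a feature is constant)."""
    cols = list(zip(*X))
    return [fmean(c) for c in cols], [pstdev(c) or 1.0 for c in cols]


def scale(x, means, stds):
    """Z-score one feature vector with previously fitted statistics."""
    return [(v - m) / s for v, m, s in zip(x, means, stds)]


def knn_predict(train_X, train_y, x, k=3):
    """Majority vote among the k nearest training samples in z-scored space."""
    means, stds = fit_scaler(train_X)            # fitted on training data only
    z = scale(x, means, stds)
    ranked = sorted(
        zip(train_X, train_y),
        key=lambda pair: math.dist(scale(pair[0], means, stds), z),
    )
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


def leave_one_out_accuracy(X, y, k=3):
    """Hold out each sample in turn and predict it from the remaining ones."""
    hits = 0
    for i in range(len(X)):
        pred = knn_predict(X[:i] + X[i + 1:], y[:i] + y[i + 1:], X[i], k)
        hits += pred == y[i]
    return hits / len(X)


if __name__ == "__main__":
    # Hypothetical trials: [mean HR (bpm), mean SC (µS)] with an arousal label.
    X = [[68, 2.0], [70, 2.2], [66, 1.9], [88, 5.1], [92, 4.8], [85, 5.5]]
    y = ["low", "low", "low", "high", "high", "high"]
    print(knn_predict(X, y, [90, 5.0]))
    print(leave_one_out_accuracy(X, y))
```

The scaler is refitted inside every leave-one-out fold so that the held-out sample never influences the normalisation. With real data, how trials are split (for example, keeping each participant's trials together) matters as much as the choice of classifier.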

5. VR Set-Ups Used: HMDs and Formats

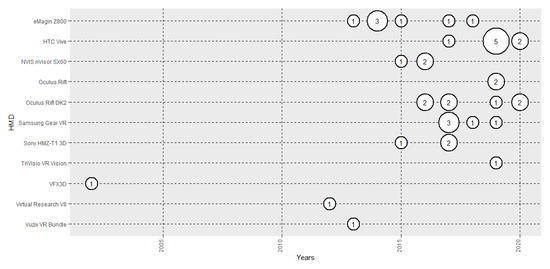

Figure 4 shows the evolution of the number of papers published based on the HMD used. In the early 2010s, the eMagin HMD was the most used. In more recent years, advances in HMD technology have made the HTC Vive the most used (19.05%). In terms of formats, 3D environments were by far the most used (85.71%) [138], with 360° panoramas far behind (16.67%) [142]. One study used both formats [64].

Figure 4. Evolution of the number of papers published each year based on head-mounted display (HMD) used.

6. Validation of VR

Table 1 shows the percentage of papers that presented analyses validating the use of VR in emotion research. In all, 83.33% of the papers did not present any type of validation. Three papers directly compared results obtained in VR environments with those obtained in the physical world [64,65,109], and three compared the emotional reactions evoked by 3D VR with those evoked by other formats: photos [109], 360° panoramas [64] and augmented reality [129]. Two papers [64,109] therefore appear under both types, so the rows of Table 1 sum to more than the total number of papers. Finally, one paper analysed the influence of immersion [121], another compared its VR results with previous datasets [108], and a third compared its results with a previous version of the study performed in the real world [132].

Table 1. Previous research that included analyses of the validation of virtual reality (VR).

| Type of Validation | % of Papers | Number of Papers |
|---|---|---|
| No validation | 83.33% | 35 |
| Real | 7.14% | 3 |
| Format | 7.14% | 3 |
| Immersivity | 2.38% | 1 |
| Previous datasets | 2.38% | 1 |
| Replication | 2.38% | 1 |
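As a quick arithmetic check on Table 1, the Python sketch below recomputes the percentages from the paper counts. It assumes a total of 42 reviewed papers. This figure is back-calculated from 35 papers = 83.33% and is not stated explicitly in this entry. The sketch also collects the references cited for each validation type in the text to confirm that they cover 42 − 35 = 7 distinct papers, even though the validation rows add up to 9.

```python
# Paper counts per validation type, copied from Table 1.
# ASSUMPTION: 42 papers in total, back-calculated from 35 papers = 83.33%.
TOTAL_PAPERS = 42
counts = {
    "No validation": 35,
    "Real": 3,
    "Format": 3,
    "Immersivity": 1,
    "Previous datasets": 1,
    "Replication": 1,
}
percentages = {name: round(100 * n / TOTAL_PAPERS, 2) for name, n in counts.items()}

# Reference numbers cited for each validation type in the text of Section 6.
cited = {
    "Real": {64, 65, 109},
    "Format": {109, 64, 129},
    "Immersivity": {121},
    "Previous datasets": {108},
    "Replication": {132},
}
validated = set().union(*cited.values())       # distinct papers with any validation
validation_rows = sum(n for name, n in counts.items() if name != "No validation")

# 9 row entries but only 7 distinct papers: [64] and [109] are counted twice.
assert len(validated) == TOTAL_PAPERS - counts["No validation"]

if __name__ == "__main__":
    print(percentages)
    print(validation_rows, len(validated))
```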

This entry is adapted from the peer-reviewed paper 10.3390/s20185163