Please note this is an old version of this entry, which may differ significantly from the current revision.

Subjects:

Health Care Sciences & Services

As people age, they are more likely to develop multiple chronic diseases and experience a decline in some of their physical and cognitive functions, leading to a decrease in their ability to live independently. Innovative technology-based interventions tailored to older adults’ functional levels and focused on healthy lifestyles are considered imperative.

- older adults

- healthy ageing

- assistive technology

- cognitive training

- physical training

- social robots

- voice assistant

- chatbot

- emotion recognition

1. Introduction

People throughout the world are ageing [1], and as they age, they are more prone to develop multiple chronic diseases and related impairments [2], as well as experience an inevitable decline in their physical and cognitive functions [3]. Over the years, biological ageing may affect older adults’ independent living, and, as a result, many of them have limited opportunities to participate in active living and struggle with communicating, concentrating, memorizing, talking, walking, or maintaining their balance. In addition, ageing-related cognitive decline may greatly impact older adults’ daily life [4], and this state of vulnerability can undermine the process of active ageing, as well as older adults’ wellbeing [5]. In this vein, effective interventional strategies and innovative solutions that strengthen older adults’ physical and cognitive function and promote their active and healthy ageing are considered essential, as they may have great benefits not only for older adults, but also for their caregivers and society as a whole [6].

Information and Communication Technology (ICT) and Assistive Technologies (ATs) aim to maintain and even enhance older adults’ cognitive and physical condition, sense of security and safety, as well as their overall quality of life [7]. The use of ICT and ATs in the health domain is progressively expanding [8], including a diverse array of devices, services, strategies, and practices that address the adversities older adults face in their everyday living [7]. Specifically, they are designed to simplify older adults’ lives and improve their ability to accomplish complex tasks effectively by compensating for acquired impairments that might emerge as a consequence of a wide variety of neurodegenerative diseases [9].

In particular, various studies exploring the effectiveness of technology-based interventions in providing cognitive, physical, and emotional support for older adults and their caregivers are reported in the literature [5][10][11][12][13]. Computerized Cognitive Training (CCT) programs have emerged as a promising strategy to promote healthy cognitive ageing by providing older adults access to gamified, attractive cognitive exercises anywhere and at any time [14]. Participation and systematic engagement in CCT interventions can potentially improve older adults’ overall function in areas including memory, attention, speed, executive functions, orientation, and cognitive skills [15][16][17], while a combination of physical activity with cognitive stimulation may delay cognitive decline in older adults with dementia [15]. In addition to CCT interventions, Natural Language Processing (NLP) is emerging as a means of text analysis, information extraction, and automatic summarization, helping older adults to manage and reduce the volume and complexity of the information they receive.

Furthermore, there is an increased awareness of the potential role of robots in supporting older adults in maintaining their independence and wellbeing. Healthcare, rehabilitation, and social robots are among the many types and applications available [18]. Following this perspective, social robots, i.e., robots that engage in social interactions with their human partners and often elicit social connections in return, have also been investigated, particularly in dementia care [19]. These types of robots act as companions for older adults and have the potential to benefit older adults’ mental and psychological wellbeing [20][21]. Robots have indeed been found to positively affect engagement and motivation in the context of cognitive training, offering personalized care and therapy effectiveness [22]. Robots should therefore be able to adapt to, develop, and recall a clear understanding of the means of human communication, thereby ensuring a high level of effective Human–Robot Interaction (HRI) [23]. This could be achieved through the integration of speech, face, and emotion recognition systems into the robot. In particular, speech recognition is enabled through the integration of Voice Assistants, which are interactive human–machine interfaces that allow natural and frictionless communication [24]. Due to their naturalness and ease of use, the interest in Voice Assistants in social and clinical domains has grown in recent years [25]. However, the development of Voice Assistants has emerged as a challenging task, in which a pipeline of highly specific technological modules is built to make the interaction with the user more efficient [26]. In parallel, face recognition is achieved through the deployment of modern Deep Neural Network (DNN)-based face recognition solutions [27]. Face recognition is considered to be an attractive feature for older adults, as user authentication is enabled without the repetitive input of usernames and passwords, while, at the same time, a one-time login provides them with access to a full Internet-of-Things (IoT) network of different devices and/or robots [28]. Furthermore, emotions are considered to play an integral role in human communication [29] and, thus, emotion recognition is highlighted as a key component of affective computing [23]. Emotion recognition has increasingly attracted researchers’ interest from diverse fields, and both the interpretation and understanding of human emotional states have been underlined as essential components of HRI [30].

This study presents a broad spectrum of digital technologies integrated into a healthcare technological ecosystem for active and healthy ageing, facilitating the provision of more integrated services and care to older adults and supporting the maintenance of a higher-quality standard of life.

2. Shaping the Framework of Active and Healthy Ageing

2.1. The SHAPES Project

The SHAPES project [31] aims to create the first European open ecosystem enabling the large-scale deployment of a broad range of digital solutions for supporting and extending healthy and independent living for older adults, who are facing permanently or temporarily reduced cognitive functionalities and physical capabilities. The development of a large-scale, EU-standardized open platform will provide a broad range of interoperable technologies to improve the health, wellbeing, and independence of older adults, while enhancing the long-term sustainability of health and care systems in Europe. The SHAPES Pan-European Piloting Campaign will potentially engage more than 2000 older adults in fifteen (15) pilot sites, including six (6) European Innovation Partnership on Active and Healthy Ageing (EIP on AHA) Reference Sites [32], and involving hundreds of key stakeholders, such as older adults, their families, caregivers, and care service providers.

2.2. SHAPES Methodology

2.2.1. Co-Design Process to Develop Personas, Scenarios, and Use Cases

Seven (7) Pilot Themes are being explored within the SHAPES Pilot Campaign reflecting a common framework to the different small-scale and large-scale pilot activities. Based on the pilot methodology, a human-centered co-design process was followed to design and develop the different pilot activities and persona-based use cases under each Pilot Theme. A three-stage methodology was adopted: Creation of personas; Development of persona-based use cases; and Refinement of specific use cases of Pilot Themes.

As a first step, the personas’ creation was actualized on the basis of mini-ethnographic studies and in-depth interviews with experts and focus groups designed and conducted by SHAPES partners. Through this process, the living context of older individuals was explored, leading to the design of the persona-based use cases under each Pilot Theme. As a second step, the persona-based use cases were generally envisioned as user stories, with storylines depicting users’ activities and decisions in a specific context, while the range and variety of the devices, applications, and technological solutions within the living environment of older adults were identified. The third step was the refinement of specific use cases of Pilot Themes designating the main criteria for the selection of effective personalized technological solutions contributing to older adults’ improved integrated care. Three main sources were used to determine the most suitable components and technological solutions for each of the use cases:

- Technological solutions included in available scientific literature;

- Technological solutions co-designed and co-executed by SHAPES partners with significant expertise in health and care settings;

- Third-party technological solutions from the Open Calls.

Conclusively, twenty-four (24) revised and improved use cases emerged based on the collaborative evaluation and agreement by the SHAPES partners and were allocated accordingly across the seven (7) Pilot Themes.

2.2.2. SHAPES Pilot Campaign

The SHAPES Pilot Campaign follows a stepwise incremental approach, including: (1) Design and Preparation and (2) Deployment and Execution. The first part comprises the description of pilot objectives, needs, and KPIs and includes the development of: Table-top Exercises to validate initial concepts and approaches; Mock-up or Prototype Validation to assess technology acceptance and feedback on user experience; and Hands-on Experiments to validate functional elements of SHAPES components and digital technologies. The second part encompasses deployment and co-experimentation cycles in real-life environments; small-scale demonstrations to experiment with SHAPES digital technologies in a controlled environment; and large-scale Pan-European piloting activities to test and validate the SHAPES technological platform.

Two use cases are discussed in the study: one from Pilot Theme 2 “Improving In-Home and Community-Based Care” and one from Pilot Theme 4 “Psycho-social and Cognitive Stimulation Promoting Wellbeing”. These use cases are currently undergoing the Hands-on Experiments validation, involving key stakeholders, to validate the digital technologies’ functionalities and provide feedback on user experience and other nonfunctional elements, including accessibility and inclusion features, necessary amendments, and improvements.

3. Deploying the Emerging Use Cases within Pilot Themes

3.1. Improving In-Home and Community-Based Care

The “Improving In-Home and Community-based Care Services” Pilot Theme is focused on providing an appropriate in-home setting for older adults mostly living on their own, who are in need of continuous healthcare provision because of permanently or temporarily reduced functions or capabilities resulting from chronic age-related illnesses or declines. The pilot activities will build a safe and caring environment at home that aims to promote older adults’ autonomy and support through technology, and to contribute to the long-term sustainability of health and care delivery for them. The Pilot Theme will be implemented in rural as well as urban areas, in particular at the pilot sites Gewi-Institut für Gesundheitswirtschaft e.V. in Germany and the Thessaloniki Active and Healthy Ageing Living Lab (Thess-AHALL) [33] in Greece.

This Pilot Theme encompasses four use cases that aim to build the multidimensional support of older adults through the exploitation of different digital technologies: remote monitoring of key health and wellbeing parameters, support interaction with the community, engagement in cognitive and physical training and provision of night surveillance rounds at the community care level in the home setting. The SHAPES digital technologies deployed in this Pilot Theme refer to an IoT-based living platform and web-based communication tool, monitoring devices, cognitive and physical training platforms and applications, as well as a social robot.

“LLM Care Health and Social Care Ecosystem for Cognitive and Physical training” is one of the use cases that are being deployed within this Pilot Theme and is, particularly, explored in this study. Through this use case, the integrated home-based social and health care service is provided by addressing the maintenance of cognitive and physical condition along with the promotion of independent living and healthy ageing of older adults. The target group of this use case is older adults with or without neurodegenerative diseases, mild cognitive impairment and mild dementia, chronic and mental disorders (e.g., schizophrenia), and difficulties in the production or comprehension of speech (e.g., tracheostomy, aphasia, stroke, and brain injuries). The Integrated Health and Social Care System Long Lasting Memories Care—LLM Care acts as an umbrella for the digital technologies of Talk & Play, Talk & Play Marketplace, and NewSum that are included in this use case and described below.

3.1.1. The Long-Lasting Memories Care—LLM Care

Long Lasting Memories Care (LLM Care) [34] is an integrated ICT platform that combines cognitive training exercises [35] with physical activity [36], providing evidence-based interventions in order to improve both cognitive functions and overall physical condition [37], as well as quality of life. The combination of cognitive and physical training provides effective protection against age-related cognitive decline, thus improving the overall quality of life through the enhancement of physical condition and mental health, while preventing deterioration and social exclusion [16]. LLM Care is considered a nonpharmaceutical intervention against cognitive deterioration that provides vital training to people belonging to vulnerable groups in order to improve their mental abilities while simultaneously boosting their physical wellbeing through daily monitoring. It has been recognized as an innovative ecosystem and was awarded a Transnational “Reference Point 2 *” within the EIP on AHA [37][38] due to its excellence in developing, adopting, and scaling up innovative practices on active and healthy ageing.

LLM Care incorporates two interoperable components, physical and cognitive training. The physical training component, webFitForAll, is an exergaming platform (Figure 1) that was developed by the research group of Medical Physics and Digital Innovation Laboratory of Aristotle University of Thessaloniki within the European project “Long-Lasting Memories (LLM)” [34]. webFitForAll is addressed to older adults as well as individuals belonging to other vulnerable groups with the aim to promote a healthier and more independent living. It is based on new technologies and offers essential physical training within an engaging game environment through the incorporation of different gaming exercises (exergames) including aerobic, muscle flexibility, endurance, and balance training. More specifically, physical training is based on exercise protocols that have been proven to strengthen the body and enhance aerobic capacity, flexibility, and balance [39], while the adjustment of the difficulty level according to individuals’ performance is provided aiming at achieving the optimum exercise performance.

Figure 1. LLM Care physical and cognitive training.

The cognitive training component (Figure 1) is the specialized software BrainHQ that was designed and developed by Posit Science [35], in order to support cognitive game-based exercises in a fully personalized and adaptable cognitive training environment. Provision of personalized training, where each exercise is automatically adjusted to the beneficiary’s level of competence, has been proven to accelerate and promote visual as well as auditory processing by improving memory, thinking, observation, and concentration [40]. BrainHQ is an online interactive environment that incorporates empowering cognitive techniques and includes six categories with more than 29 exercises with a hundred levels of difficulty, which focus on attention, memory, brain speed, people skills, navigation, and intelligence. It is addressed to older adults, as well as individuals belonging to other vulnerable groups aiming at a healthier and more independent living.
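Both training components are described as adjusting difficulty to the individual's performance. The actual adaptation rules of webFitForAll and BrainHQ are not given here, so the following is only a minimal sketch of one simple scheme, an up/down staircase. The function name `next_level` and the accuracy thresholds are hypothetical; only the upper bound of a hundred levels comes from the text.

```python
def next_level(level: int, accuracy: float,
               min_level: int = 1, max_level: int = 100,
               raise_at: float = 0.85, lower_at: float = 0.60) -> int:
    """Return the difficulty level for the next session.

    Hypothetical staircase rule: step up after a strong session,
    step down after a weak one, otherwise keep the same level.
    """
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    if accuracy >= raise_at:
        level += 1
    elif accuracy < lower_at:
        level -= 1
    # Clamp to the allowed range of levels.
    return max(min_level, min(max_level, level))
```

With these assumed thresholds, a user at level 10 who answers 90% of items correctly moves to level 11, while one who answers 50% correctly drops to level 9.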

3.1.2. Talk & Play Desktop-Based Application

Talk & Play is a desktop application addressed to people with difficulties in the production or comprehension of speech (e.g., tracheostomy, aphasia, stroke, and brain injuries) with the aim to ensure sufficient communication and enhanced independence during their leisure time, while offering in-home cognitive training with the support of their caregivers [41]. Three categories of activities are provided: (a) Communication, (b) Games, and (c) Entertainment (Figure 2). In the context of the SHAPES Pilot Campaign, Talk & Play will be exploited among older adults and will be available in Greek, English, and German.

Figure 2. Talk & Play application interface.

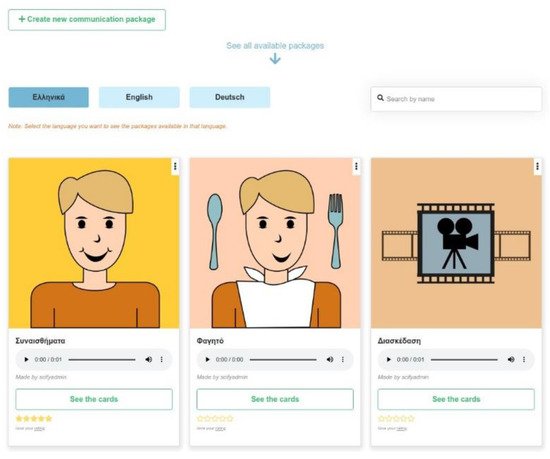

In addition to that, the Talk & Play Marketplace will be provided as an expansion of the Talk & Play app acting as an online platform addressed both to formal and informal caregivers, providing them the opportunity to access, create, share, and download material and resources used in the Talk & Play app (Figure 3). More specifically, free access to a variety of communication and game resources will be provided, based on the crowdsourcing paradigm that promotes the generation of information, co-production of services, and creation of new solutions and public policies [42]. Caregivers will be able to explore and evaluate the content of Communication and Games categories and resources, as well as download the existing material and upload their own.

Figure 3. Talk & Play Marketplace interface.

3.1.3. NewSum App

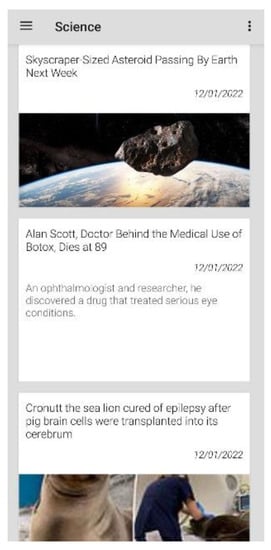

NewSum [43][44] is a mobile application that enables news summarization based on Natural Language Processing (NLP) and Artificial Intelligence (AI). The NewSum app exploits state-of-the-art, language-agnostic NLP methods to automatically generate news summaries from a variety of news sources. Essentially, articles that refer to the same news topic are grouped into specific categories and concise summaries are created without repeating information [45]. NewSum provides a simple User Interface (UI) design, and its use does not require a high level of digital skills or affinity to technology (Figure 4). Therefore, it is a news summarization mobile app appropriate for older adults with little to no experience with technology and mobile apps. In the context of the SHAPES Pilot Campaign, NewSum will be available in Greek and English.

Figure 4. NewSum application interface.
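The grouping and de-duplication idea described for NewSum can be illustrated with a small sketch. NewSum's actual methods are not detailed in this entry; the code below swaps in plain word-set (Jaccard) overlap as a stand-in similarity measure, and all function names and thresholds are assumptions made for illustration.

```python
import re

def tokens(text: str) -> frozenset:
    """Lowercased word set; a crude, language-agnostic representation."""
    return frozenset(re.findall(r"\w+", text.lower()))

def jaccard(a: frozenset, b: frozenset) -> float:
    """Overlap between two word sets, from 0 (disjoint) to 1 (identical)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def group_articles(articles: list[str], threshold: float = 0.3) -> list[list[str]]:
    """Greedy grouping: an article joins the first group whose first article it resembles."""
    groups: list[list[str]] = []
    for article in articles:
        for group in groups:
            if jaccard(tokens(article), tokens(group[0])) >= threshold:
                group.append(article)
                break
        else:
            groups.append([article])
    return groups

def summarize(group: list[str], dup_threshold: float = 0.6) -> list[str]:
    """Collect sentences from a group, skipping near-duplicates of ones already kept."""
    kept: list[str] = []
    for article in group:
        for sentence in re.split(r"(?<=[.!?])\s+", article.strip()):
            if not sentence:
                continue
            if all(jaccard(tokens(sentence), tokens(k)) < dup_threshold for k in kept):
                kept.append(sentence)
    return kept
```

Two articles that both report "The council approved the new park." would land in the same group, and that sentence would appear only once in the resulting summary.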

3.2. Psycho-Social and Cognitive Stimulation Promoting Wellbeing

The Psycho-social and Cognitive Stimulation Promoting Wellbeing Pilot Theme is dedicated to technology-based interventions in living space environments for the improvement of the psycho-social and cognitive wellbeing of older adults, including those living with permanent or temporary reduced functions or capabilities. This Pilot Theme aims at using SHAPES psycho-social and cognitive gaming applications to make a positive impact on the older adults’ healthy lifestyle and quality of life. It will be implemented in the pilot sites Clinica Humana (CH) in Spain, University College Cork (UCC) in Ireland, Thess-AHALL in Greece, and Italian Association for Spastic Care (AIAS) in Italy.

This Pilot Theme encompasses two use cases that aim to promote older people’s physical and cognitive functioning with a particular focus on their psycho-social functioning, as well as supporting people with early-stage dementia through personalized cognitive activities. The SHAPES digital technologies deployed in this Pilot Theme refer to physical and cognitive training software, motion detection devices, emotion and face recognition software, chatbots, and social robots.

“A robot assistant in cognitive activities for people with early-stage dementia” is one of the use cases that are being deployed within this Pilot Theme. The target group of this use case is people with early-stage dementia, who are considered independent or highly independent and are in need of frequent assistance and support from either formal or informal caregivers. Through this use case, individual cognitive activities will be promoted to older adults based on the interaction with a social humanoid robot, which, among other features, will offer different interaction modes (text, sound, touchscreen, and voice recognition) depending on users’ unique needs and preferences. The system will be connected to a panel where healthcare professionals and caregivers will be able to set-up the activities for older adults. As part of the SHAPES Pilot Campaign, a number of digital technologies will be integrated into the social robot ARI to increase its interaction capabilities: the DiAnoia and Memor-i cognitive activities; the Adilib chatbot, including speech interaction and reminders; the FACECOG face recognition module, providing encrypted authentication, and the emotion recognition module to detect user engagement.

3.2.1. ARI Robot

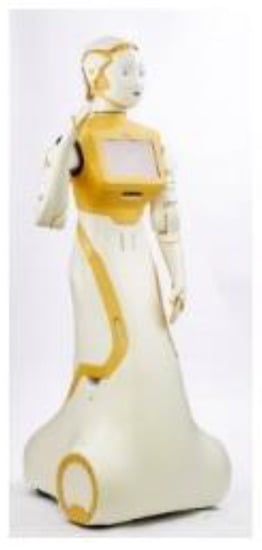

ARI, the social robot (Figure 5), developed by PAL Robotics [46][47], has a human-like shape, autonomous behavior, and advanced HRI that enables the efficient display of the robot’s intentions, the enhancement of human understanding, and the overall increase in users’ engagement and acceptability [48].

Figure 5. PAL Robotics ARI social robot.

ARI has a set of multi-modal behavior capabilities to increase users’ acceptability and enrich the level of interaction. It incorporates various features including speech interaction to support multiple languages (Acapela Group’s text-to-speech and Google Cloud API) and expressive animated eyes combined with head movement, so as to simulate gaze behavior. Default behavioral cues (waving, shaking hands, and nodding) and lifelike imitations (Alive module) motivate users to actively interact with ARI. Animated LED effects in both ears and the back torso may indicate both internal status of the robot (e.g., battery level) and interaction status (e.g., listening, waiting). ARI incorporates a touchscreen that allows the display of web-based content (images, videos, or HTML5 content) and includes both a temperature monitoring and alert system based on an input retrieved by the thermal camera located on the robot’s head (Figure 6).

Figure 6. Different interaction modalities for the ARI robot.

Nodes of interaction are depicted in the robot’s touchscreen, while text-to-speech and gestures are used to interpret them to users. In addition to the front touchscreen, an Android tablet located at the back of the robot can be used. Additional modifications include an RGB-D Intel RealSense camera on the head instead of the original RGB camera for more robust authentication, as well as a thermal camera for temperature monitoring.

Various features and functionalities will be incorporated in ARI, including autonomous navigation to specific rooms and the detection of pre-registered older adults in order to engage them in cognitive games (DiAnoia and Memor-i). Personalization in both cognitive games and the robot’s behavior will be achieved based on the recognized user, using face recognition (FACECOG) and user engagement (Emotion recognition module). While the robot’s multi-modal interaction capabilities will ensure the provision of feedback (e.g., speech, touchscreen displays, and LEDs) on users’ performance and speech recognition, the chatbot (Adilib Chatbot) will enable the efficient interaction between users and the robot.

3.2.2. DiAnoia and Memor-i

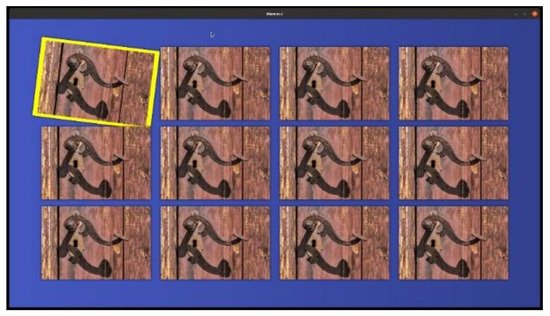

DiAnoia [49] is a mobile app that provides nonpharmaceutical cognitive training to people with mild dementia and/or cognitive impairments, focusing on attention, memory, thinking and communication, and executive functions. The app is primarily addressed to healthcare professionals and formal and informal caregivers of people with dementia, providing access to appropriate material for the cognitive training of their care recipients. In addition, Memor-i is a free online game, based on the “Memory Game” [50], that focuses on enhancing memory skills through pairing images with identical content (Figure 7). Memor-i is designed for people, including older adults, with visual impairments, who would prefer an audio-based game, as it offers binaural sound (3D audio) to ensure accessibility [51]. Players interact with Memor-i through a keyboard, a mouse, or a touchscreen in order to pair the identical images; as the game progresses, the difficulty level increases along with the number of cards on the grid. Memor-i will be available in Greek, English, and Spanish.

Figure 7. Memor-i desktop application.

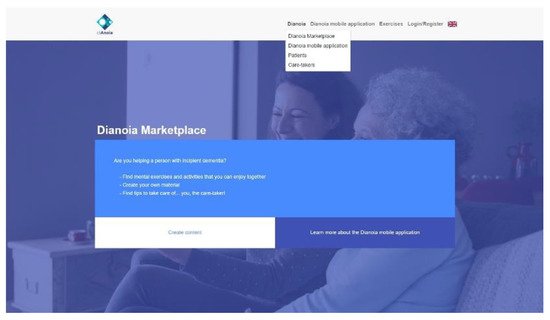

In addition, DiAnoia Marketplace and Memor-i Studio will be provided, acting as online free apps targeted to multiple stakeholders (healthcare professionals, caregivers, family members, etc.) who support people with mild dementia and/or cognitive impairments and visual impairments (Figure 8 and Figure 9). Following the crowdsourcing paradigm [42], both apps will provide users the opportunity to access various activities and games developed by related stakeholders, as well as create and download the content they develop. Specifically, users will be prompted to navigate through a simple UI and upload content, following the guidelines and requirements provided. Before publishing in the DiAnoia and Memor-i apps, each game and activity will be reviewed by the administrative team, in order to ensure that all necessary requirements have been thoroughly applied.

Figure 8. DiAnoia Marketplace.

Figure 9. Memor-i Studio web application.

3.2.3. FACECOG—Face Recognition Module

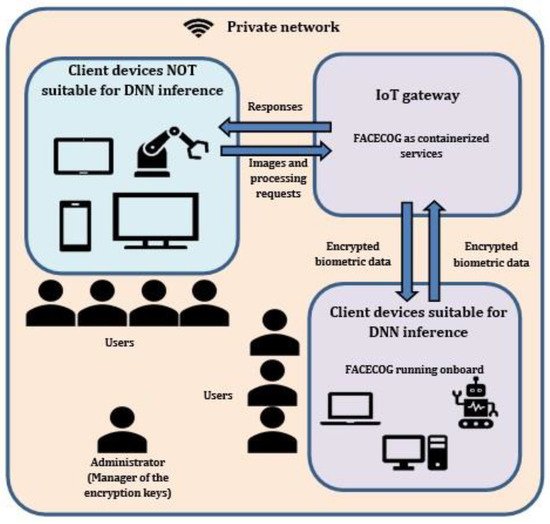

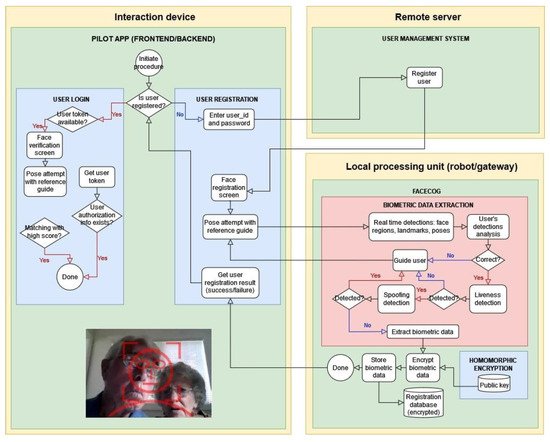

The SHAPES Pilot Campaign will be conducted through the use of a large-scale open technological platform consisting of a broad range of heterogeneous IoT platforms, digital technologies, and services. In light of this, older adults might be confronted with difficulties during their interaction with the technological platform and, therefore, need guidance and support from their caregivers. FACECOG (Face Recognition Solution for Heterogeneous IoT Platforms), a software module from Vicomtech’s Viulib library [52], provides and supports a password-free user authentication process, ensuring a friendly UI as well as the recognition of potential users at a distance (Figure 10).

Figure 10. Deployment diagram of FACECOG.

FACECOG can be directly deployed on devices and robots with sufficient computing capabilities, such as ARI, to reduce the latency as much as possible in all cases. To allow users to register in one device and be recognized by another, the biometric data should be shared among the devices where it is deployed. Even if the network is private, the data would be encrypted, and an administrator would manage the encryption keys to preserve privacy with a secure element. In order to avoid compromising the privacy of users, FACECOG includes an encryption mechanism, based on fully homomorphic encryption that allows maintaining the biometric data always encrypted, even during matching operations [53]. User registration and verification workflows are presented in Figure 11.

Figure 11. User registration workflow.

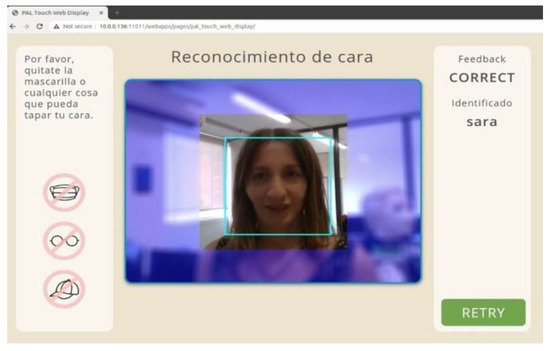

The high-quality extraction of biometric data is ensured based on the provision of visual feedback to the users. In particular, visual feedback is achieved through the notification of the tracked user and the subsequent provision of adequate guidance on the correct face position (“correct”, “look straight”, “move closer”, “move backwards”, “move to the right”, “move to the left”, “move downwards”, and “move upwards”). When a high-quality facial image is captured, it is automatically registered in the system and the recognition process is enabled (Figure 12).

Figure 12. Face registration interface.
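The guidance step described above can be sketched as a simple mapping from a tracked face to one of the listed messages. This is not FACECOG's implementation: the `FaceObservation` fields, the coordinate conventions, and every threshold below are hypothetical and serve only to illustrate the idea.

```python
from dataclasses import dataclass

@dataclass
class FaceObservation:
    """Hypothetical tracker output, normalized to the camera frame (0..1)."""
    cx: float       # face centre; x is assumed to grow toward the user's right
    cy: float       # face centre; y is assumed to grow downward
    height: float   # face height as a fraction of the frame height
    yaw_deg: float  # head rotation; 0 means facing the camera

def guidance(face: FaceObservation) -> str:
    """Map one observation to one of the guidance messages quoted in the text."""
    if face.height < 0.25:
        return "move closer"
    if face.height > 0.60:
        return "move backwards"
    if face.cx < 0.40:
        return "move to the right"
    if face.cx > 0.60:
        return "move to the left"
    if face.cy < 0.40:
        return "move downwards"
    if face.cy > 0.60:
        return "move upwards"
    if abs(face.yaw_deg) > 15:
        return "look straight"
    return "correct"
```

In such a scheme, an image would be captured for registration only once `guidance` returns "correct".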

3.2.4. Emotion Recognition Module

The emotion recognition module developed by TREE [54] focuses on the integration of a set of digital technologies within the field of computer vision, exploiting the deep learning paradigm. Through the exploitation of cameras embedded in the ARI robot, an initial determination of the different emotional states of older adults during the interaction with ARI is achieved, detecting up to eight different emotions: neutral, happy, sad, surprise, fear, disgust, anger, and contempt. Additional characteristics (eye ratio, gaze direction) are extracted to allow the calculation of a complex metric of the current user’s engagement with the tasks performed in front of the camera.

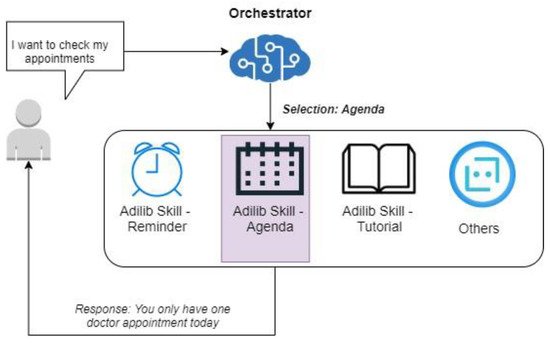

3.2.5. Adilib Chatbot

Building a separate Voice Assistant for each of the different use-case scenarios in the SHAPES project would be unfeasible and too costly. To this end, a skill-based architecture using Adilib is proposed. Adilib Dialogue Skills are templates that allow users to instantiate closed-logic interactions such as personal reminders or tutorials. In this skill-based setup, an Orchestrator based on Language Representation models [55] selects which skill should handle the user’s query, e.g., if the user says “I want to check my appointments”, the Orchestrator will route that interaction to the “Agenda” skill to give an appropriate response, as depicted in Figure 13.

Figure 13. Deployment diagram of Adilib Chatbot.
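The routing role of the Orchestrator can be sketched as follows. The real Orchestrator relies on Language Representation models [55]; this sketch substitutes a much simpler word-overlap score so that it stays self-contained. The skill handlers, example phrases, and the `route` function are hypothetical and are not part of the Adilib API.

```python
import re
from typing import Callable

Skill = Callable[[str], str]

def agenda_skill(query: str) -> str:
    return "Agenda: here are your upcoming appointments."

def reminder_skill(query: str) -> str:
    return "Reminder: I will remind you."

def fallback_skill(query: str) -> str:
    return "Sorry, I did not understand that."

# Hypothetical example phrases per skill. A model-based Orchestrator would
# compare learned sentence representations instead of raw word sets.
SKILLS: dict[str, tuple[Skill, list[str]]] = {
    "Agenda": (agenda_skill, ["check my appointments", "what is on my agenda"]),
    "Reminder": (reminder_skill, ["remind me to take my pills", "set a reminder"]),
}

def _words(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))

def route(query: str, min_score: float = 0.2) -> tuple[str, str]:
    """Pick the skill whose example phrase overlaps most with the query."""
    q = _words(query)
    best_name, best_score = "Fallback", 0.0
    for name, (_, examples) in SKILLS.items():
        for example in examples:
            e = _words(example)
            union = q | e
            score = len(q & e) / len(union) if union else 0.0
            if score > best_score:
                best_name, best_score = name, score
    if best_score < min_score:
        return "Fallback", fallback_skill(query)
    handler = SKILLS[best_name][0]
    return best_name, handler(query)
```

Calling `route("I want to check my appointments")` selects the "Agenda" skill, mirroring the example in the text; a query with no overlapping words falls through to the fallback handler.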

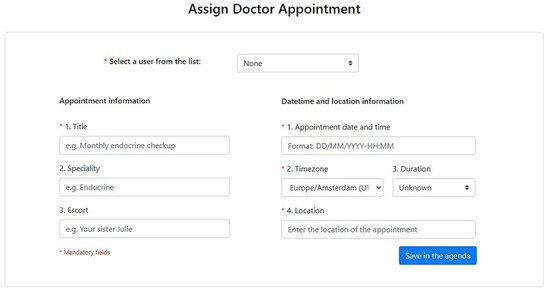

The content of each Dialogue Skill is personalized to the unique needs of older adults through a simple web UI, in which simple forms are filled in to adjust the dialogue template of the skill. The web form to add a doctor’s appointment to the “Agenda Skill” is presented in Figure 14. Based on the information filled in this form, the Voice Assistant acts differently when presenting this agenda event to the user, e.g., if the escort field is defined in the web form, the assistant will be able to give a proper response to the question “Is anyone accompanying me there?” With this skill-based architecture, the interactions with the Voice Assistant are easily tailored and personalized even by caregivers without specific training in the technical development of virtual assistants [56].

Figure 14. Agenda Skill interface.
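How a filled-in form can change the assistant's answers may be illustrated with a minimal sketch. The `AgendaEvent` fields and the response wording are assumptions; only the optional escort field and the question “Is anyone accompanying me there?” come from the text.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class AgendaEvent:
    """Hypothetical mirror of the web form; field names are assumptions."""
    title: str
    when: str
    place: str
    escort: Optional[str] = None  # left empty when nobody is accompanying

def announce(event: AgendaEvent) -> str:
    """Present the agenda event to the user."""
    return f"You have {event.title} on {event.when} at {event.place}."

def answer_escort_question(event: AgendaEvent) -> str:
    """Reply to 'Is anyone accompanying me there?' based on the escort field."""
    if event.escort:
        return f"Yes, {event.escort} is accompanying you."
    return "No escort has been recorded for this appointment."
```

A caregiver who fills in the escort field changes the assistant's reply without touching any dialogue logic, which is the point of the template-based design.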

This entry is adapted from the peer-reviewed paper 10.3390/technologies10010008

References

- World Health Organization. Global Health and Ageing. Available online: https://www.who.int/ageing/publications/global_health.pdf (accessed on 15 December 2021).

- Vogeli, C.; Shields, A.E.; Lee, T.A.; Gibson, T.B.; Marder, W.D.; Weiss, K.B.; Blumenthal, D. Multiple chronic conditions: Prevalence, health consequences, and implications for quality, care management, and costs. J. Gen. Intern. Med. 2007, 22, 391–395.

- Falck, R.S.; Davis, J.C.; Best, J.R.; Crockett, R.A.; Liu-Ambrose, T. Impact of exercise training on physical and cognitive function among older adults: A systematic review and meta-analysis. Neurobiol. Aging 2019, 79, 119–130.

- Gheysen, F.; Poppe, L.; DeSmet, A.; Swinnen, S.; Cardon, G.; De Bourdeaudhuij, I.; Chastin, S.; Fias, W. Physical activity to improve cognition in older adults: Can physical activity programs enriched with cognitive challenges enhance the effects? A systematic review and meta-analysis. Int. J. Behav. Nutr. Phys. Act. 2018, 15, 63.

- Romanopoulou, E.D.; Zilidou, V.I.; Gilou, S.; Dratsiou, I.; Varella, A.; Petronikolou, V.; Katsouli, A.M.; Karagianni, M.; Bamidis, P.D. Technology Enhanced Health and Social Care for Vulnerable People during the COVID-19 Outbreak. Front. Hum. Neurosci. 2021, 544.

- Zhang, Y.; Zhang, Y.; Du, S.; Wang, Q.; Xia, H.; Sun, R. Exercise interventions for improving physical function, daily living activities and quality of life in community-dwelling frail older adults: A systematic review and meta-analysis of randomized controlled trials. Geriatr. Nurs. 2020, 41, 261–273.

- Cook, A.; Polgar, J. Assistive Technologies: Principles and Practice; Elsevier Health Science: Amsterdam, The Netherlands, 2014.

- Robert, P.; Manera, V.; Derreumaux, A.; Montesino, M.F.Y.; Leone, E.; Fabre, R.; Bourgeois, J. Efficacy of a Web App for Cognitive Training (MeMo) Regarding Cognitive and Behavioral Performance in People with Neurocognitive Disorders: Randomized Controlled Trial. J. Med. Internet Res. 2020, 22, e17167.

- Siegel, C.; Hochgatterer, A.; Dorner, T.E. Contributions of ambient assisted living for health and quality of life in the elderly and care services—A qualitative analysis from the experts’ perspective of care service professionals. BMC Geriatr. 2021, 14, 112.

- Herrero, R.; Vara, M.; Miragall, M.; Botella, C.; Garcia Palacios, A.; Riper, H.; Kleiboer, A.; Baños, R.M. Working alliance inventory for online interventions-short form (Wai-tech-sf): The role of the therapeutic alliance between patient and online program in therapeutic outcomes. Int. J. Environ. Res. Public Health 2020, 17, 6169.

- Chen, Y.R.S. The effect of information communication technology interventions on reducing social isolation in the elderly: A systematic review. J. Med. Internet Res. 2016, 18, 4596.

- Boots, L.M.; de Vugt, M.E.; Van Knippenberg, R.J.; Kempen, G.I.; Verhey, F.R. A systematic review of the Internet-based supportive interventions for caregivers of patients with dementia. Int. J. Geriatr. Psychiatry 2014, 29, 331–344.

- Xavier, A.J.; D’Orsi, E.; De Oliveira, C.; Orrell, M.; Demakakos, P.; Biddulph, J.P.; Marmot, M.G. English Longitudinal Study of Aging: Can internet/e-mail use reduce cognitive decline? J. Gerontol. Ser. A Biol. Sci. Med. Sci. 2014, 69, 1117–1121.

- Barnes, D.E.; Yaffe, K.; Belfor, N.; Jagust, W.J.; DeCarli, C.; Reed, B.R.; Kramer, J.H. Computer-based cognitive training for mild cognitive impairment: Results from a pilot randomized, controlled trial. Alzheimer Dis. Assoc. Disord. 2009, 23, 205–210.

- Frantzidis, C.A.; Ladas, A.K.; Vivas, A.B.; Tsolaki, M.; Bamidis, P.D. Cognitive and physical training for the elderly: Evaluating outcome efficacy by means of neurophysiological synchronization. Int. J. Psychophysiol. 2014, 93, 1–11.

- Bamidis, P.D.; Fissler, P.; Papageorgiou, S.G.; Zilidou, V.; Konstantinidis, E.I.; Billis, A.S.; Romanopoulou, E.; Karagianni, M.; Beratis, I.; Tsapanou, A.; et al. Gains in cognition through combined cognitive and physical training: The role of training dosage and severity of neurocognitive disorder. Front. Aging Neurosci. 2015, 7, 152.

- Klados, M.A.; Styliadis, C.; Frantzidis, C.A.; Paraskevopoulos, E.; Bamidis, P.D. Beta-band functional connectivity is reorganized in mild cognitive impairment after combined computerized physical and cognitive training. Front. Neurosci. 2016, 29, 55.

- Shishehgar, M.; Kerr, D.; Blake, J. A systematic review of research into how robotic technology can help older people. Smart Health 2018, 7–8, 1–18.

- Moyle, W.; Arnautovska, U.; Ownsworth, T.; Jones, C. Potential of telepresence robots to enhance social connectedness in older adults with dementia: An integrative review of feasibility. Int. Psychogeriatr. 2017, 29, 1951–1964.

- Chen, K.; Lou, V.W.; Tan, K.C.; Wai, M.Y.; Chan, L.L. Effects of a humanoid companion robot on dementia symptoms and caregiver distress for residents in long-term care. J. Am. Med. Dir. Assoc. 2020, 21, 1724–1728.

- Robinson, H.; MacDonald, B.; Broadbent, E. Physiological effects of a companion robot on blood pressure of older people in residential care facility: A pilot study. Australas. J. Ageing 2015, 34, 27–32.

- Salichs, E.; Fernández-Rodicio, E.; Castillo, J.C.; Castro-González, Á.; Malfaz, M.; Salichs, M.Á. A social robot assisting in cognitive stimulation therapy. In Proceedings of the International Conference on Practical Applications of Agents and Multi-Agent Systems, Toledo, Spain, 20–22 June 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 344–347.

- Zhang, J.; Yin, Z.; Chen, P.; Nichele, S. Emotion recognition using multi-modal data and machine learning techniques: A tutorial and review. Inf. Fusion 2020, 59, 103–126.

- Firdaus, M.; Golchha, H.; Ekbal, A.; Bhattacharyya, P. A deep multi-task model for dialogue act classification, intent detection and slot filling. Cogn. Comput. 2021, 13, 626–645.

- Laranjo, L.; Dunn, A.G.; Tong, H.L.; Kocaballi, A.B.; Chen, J.; Bashir, R.; Surian, D.; Gallego, B.; Magrabi, F.; Lau, A.Y.; et al. Conversational agents in healthcare: A systematic review. J. Am. Med. Inform. Assoc. 2018, 25, 1248–1258.

- Chen, H.; Liu, X.; Yin, D.; Tang, J. A survey on dialogue systems: Recent advances and new frontiers. ACM Sigkdd Explor. Newsl. 2017, 19, 25–35.

- Maltoni, D.; Maio, D.; Jain, A.K.; Prabhakar, S. Handbook of Fingerprint Recognition; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2019.

- Elordi, U.; Bertelsen, A.; Unzueta, L.; Aranjuelo, N.; Goenetxea, J.; Arganda-Carreras, I. Optimal deployment of face recognition solutions in a heterogeneous IoT platform for secure elderly care applications. Procedia Comput. Sci. 2021, 192, 3204–3213.

- Lanciano, T.; Curci, A. Does emotions communication ability affect psychological well-being? A study with the Mayer-Salovey-Caruso Emotional Intelligence Test (MSCEIT). Health Commun. 2015, 30, 1112–1121.

- Chen, L.; Su, W.; Feng, Y.; Wu, M.; She, J.; Hirota, K. Two-layer fuzzy multiple random forest for speech emotion recognition in human-robot interaction. Inf. Sci. 2020, 509, 150–163.

- SHAPES Project. Available online: https://shapes2020.eu/ (accessed on 15 December 2021).

- The European Innovation Partnership on Active and Healthy Ageing (EIP on AHA). Available online: https://digital-strategy.ec.europa.eu/en/policies/eip-aha (accessed on 15 December 2021).

- Thessaloniki Active and Healthy Ageing Living Lab (Thess-AHALL). Available online: https://enoll.org/network/living-labs/?livinglab=thessaloniki-active-and-healthy-ageing-living-lab-thess-ahall (accessed on 15 December 2021).

- Long Lasting Memories—LLM Care. Available online: www.llmcare.gr/en (accessed on 15 December 2021).

- BrainHQ by Posit Science. Available online: www.brainhq.com (accessed on 15 December 2021).

- webFitForAll. Available online: www.fitforall.gr (accessed on 15 December 2021).

- Bousquet, J.; Illario, M.; Farrell, J.; Batey, N.; Carriazo, A.M.; Malva, J.; Hajjam, J.; Colgan, E.; Guldemond, N.; Perälä-Heape, M.; et al. The Reference Site Collaborative Network of the European Innovation Partnership on Active and Healthy Ageing. Transl. Med. UniSa 2019, 31, 66–81.

- Active and Healthy Living in the Digital World. Available online: https://ec.europa.eu/eip/ageing/news/77-regional-and-local-organisations-are-awarded-reference-site-status-results-2019-call_en.html (accessed on 15 December 2021).

- Zilidou, V.I.; Konstantinidis, E.I.; Romanopoulou, E.D.; Karagianni, M.; Kartsidis, P.; Bamidis, P.D. Investigating the effectiveness of physical training through exergames: Focus on balance and aerobic protocols. In Proceedings of the 1st International Conference on Technology and Innovation in Sports, Health and Wellbeing (TISHW), Vila Real, Portugal, 1–3 December 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–6.

- González-Palau, F.; Franco, M.; Bamidis, P.; Losada, R.; Parra, E.; Papageorgiou, S.G.; Vivas, A.B. The effects of a computer-based cognitive and physical training program in a healthy and mildly cognitive impaired aging sample. Aging Ment. Health 2014, 18, 838–846.

- Talk and Play. Available online: https://www.scify.gr/site/en/impact-areas-en/assistive-technologies/talk-play (accessed on 15 December 2021).

- Nam, T. Suggesting frameworks of citizen-sourcing via Government 2.0. Gov. Inf. Q. 2012, 29, 12–20.

- Giannakopoulos, G.; Kiomourtzis, G.; Karkaletsis, V. Newsum: “n-gram graph”-based summarization in the real world. In Innovative Document Summarization Techniques: Revolutionizing Knowledge Understanding; IGI Global: Hershey, PA, USA, 2014; pp. 205–230.

- Kiomourtzis, G.; Giannakopoulos, G.; Karkaletsis, V.; Kosmopoulos, A.; SciFY, P.N.P.C. A multi-lingually applicable journalist toolset for the big-data era. In Proceedings of the 2016 IJCAI Workshop on Natural Language Processing Meets Journalism, New York, NY, USA, 10 July 2016; pp. 41–46.

- Giannakopoulos, G.; Kiomourtzis, G.; Pittaras, N.; Karkaletsis, V. Scaling and Semantically-Enriching Language-Agnostic Summarization. In Trends and Applications of Text Summarization Techniques; IGI Global: Hershey, PA, USA, 2020; pp. 244–292.

- Cooper, S.; Di Fava, A.; Vivas, C.; Marchionni, L.; Ferro, F. ARI: The social assistive robot and companion. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Waterloo, ON, Canada, 31 August–4 September 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 745–751.

- Anzalone, S.M.; Boucenna, S.; Ivaldi, S.; Chetouani, M. Evaluating the engagement with social robots. Int. J. Soc. Robot. 2015, 7, 465–478.

- Cooper, S.; Di Fava, A.; Villacañas, Ó.; Silva, T.; Fernandez-Carbajales, V.; Unzueta, L.; Serras, M.; Marchionni, L.; Ferro, F. Social robotic application to support active and healthy ageing. In Proceedings of the 30th IEEE International Conference on Robot & Human Interactive Communication (RO-MAN), Waterloo, ON, Canada, 8–12 August 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1074–1080.

- DiAnoia App: A Handy Helper for Carers of People with Dementia. Available online: https://shapes2020.eu/2021/02/23/diania-app-a-handy-helper-for-carers-of-people-with-dementia (accessed on 15 December 2021).

- Zwick, U.; Paterson, M.S. The memory game. Theor. Comput. Sci. 1993, 110, 169–196.

- Giannakopoulos, G.; Tatlas, N.A.; Giannakopoulos, V.; Floros, A.; Katsoulis, P. Accessible electronic games for blind children and young people. Br. J. Educ. Technol. 2018, 49, 608–619.

- Vicomtech. Available online: http://www.viulib.org/ (accessed on 15 December 2021).

- Boddeti, V.N. Secure face matching using fully homomorphic encryption. In Proceedings of the 2018 IEEE 9th International Conference on Biometrics Theory, Redondo Beach, CA, USA, 22–25 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–10.

- Tree Technology. Available online: https://www.treelogic.com/en/Computer_Vision.html (accessed on 15 December 2021).

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Khasnabis, C.; Holloway, C.; MacLachlan, M. The Digital and Assistive Technologies for Ageing initiative: Learning from the GATE initiative. Lancet Healthy Longev. 2020, 1, 94–95.
