The development and application of emerging Industry 4.0 technologies enable the realization of digital twins (DTs), which facilitate the transformation of the manufacturing sector into a more agile and intelligent one. DTs are virtual constructs of physical systems that mirror the behavior and dynamics of such physical systems. A fully developed DT consists of physical components, virtual components, and information communications between the two. Integrated DTs are being applied in various process and product industries. Although the pharmaceutical industry has evolved recently to adopt Quality-by-Design (QbD) initiatives and is undergoing a paradigm shift of digitalization to embrace Industry 4.0, there has not been a full DT application in pharmaceutical manufacturing. Therefore, there is a critical need to examine the progress of the pharmaceutical industry towards implementing DT solutions. The aim of this entry is to give an overview of the current status of DT development and its application in pharmaceutical and biopharmaceutical manufacturing. State-of-the-art Process Analytical Technology (PAT) developments, process modeling approaches, and data integration studies are reviewed. Challenges and opportunities for future research in this field are also discussed.

- Digital Twin

- Industry 4.0

- Pharmaceutical Manufacturing

- Biopharmaceutical Manufacturing

- Process Modeling

1. Introduction

Competitive markets today demand the use of new digital technologies to promote innovation, improve productivity, and increase profitability[1]. The growing interest in digital technologies and their promotion in various aspects of economic activity[2] have led to a wave of applications of these technologies in manufacturing sectors. Over the years, advances in digital technologies have initiated different levels of change in manufacturing sectors, including but not limited to the replacement of paper processing with computers, the nurturing and promotion of the Internet and digital communication[1], the use of programmable logic controllers (PLCs) and information technology (IT) for automated production[3], and the current movement towards a fully digitalized manufacturing cycle[4]. These waves of digitalization have enabled a broad range of applications, from upstream supply chain management and shop floor control and management to post-manufacturing product tracing and tracking.

Among the new digital advancements, artificial intelligence (AI)[5], Internet of Things (IoT) devices[3][5], and digital twins (DTs) have received attention from governments, agencies, academic institutions, and industries[6]. The idea of Industry 4.0 has been put forward by the community of practice to achieve a higher level of automation for increased operational efficiency and productivity. Smart technologies under the umbrella of Industry 4.0, such as the IoT, big data analytics (BDA), cyber-physical systems (CPS), and cloud computing (CC), are playing critical roles in stimulating the transformation of current manufacturing to smart manufacturing[7][8][9][10]. As these Industry 4.0 technologies develop to assist data flow, a number of manufacturing activities such as remote sensing[11][12], real-time data acquisition and monitoring[13][14][15], process visualization (data, augmented reality, and virtual reality)[16][17], and control of all devices across a manufacturing network[18][19] are becoming more feasible. The implementation of Industry 4.0 standards encourages institutions and companies to build a more robust, integrated data framework that connects the physical components to the virtual environment[1]. This enables a more accurate representation of the physical parts in digitized space, leading to the realization and application of DTs.

1.1. Concept of “twins”

The concept of creating a “twin” of a process or a product can be traced back to the late 1960s, when NASA assembled two identical space vehicles for its Apollo project[20][21][22]. One of the two was used as a “twin” to mirror all the parts and conditions of the one that was sent into space. In this case, the “twin” was used to simulate the real-time behavior of its counterpart.

The first definition of a “digital twin” was given in 2002 by Michael Grieves in an industry presentation on product lifecycle management (PLM) at the University of Michigan[23][24][25]. As described by Grieves, the DT is a digital informational construct of a physical system, created as an entity on its own and linked with the physical system[24].

Since the first definition of DT, interpretations from different perspectives have been proposed. The most popular one, given by Glaessgen and Stargel, states that a DT is an integrated multiphysics, multiscale, probabilistic simulation of a complex product that uses the best available data, sensors, and models to mirror the life of its corresponding twin[26]. It is generally accepted that a complete DT consists of a physical component, a virtual component, and automated data communications between the physical and virtual components[2]. Ideally, the digital component should include all information about the system that could potentially be obtained from its physical counterpart. This ideal representation of the real physical system should be the ultimate goal of a DT, but in practice, simplified or partial DTs currently dominate in industry. These include the digital model, where a digital representation of a physical system exists without automated data communication in either direction, and the digital shadow, where a model exists with automated one-way data transfer from the physical to the virtual component[2].
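
The distinction between a digital model, a digital shadow, and a full DT comes down to which data flows are automated. The short sketch below encodes that three-way split; the class and function names are hypothetical, and treating a system with only an automated virtual-to-physical link as a digital model is an assumption made here for completeness.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Integration:
    """Describes which data flows between plant and model are automated."""
    physical_to_virtual_automated: bool
    virtual_to_physical_automated: bool


def classify(integration: Integration) -> str:
    """Return the integration level for the given data flows."""
    if integration.physical_to_virtual_automated and integration.virtual_to_physical_automated:
        return "digital twin"
    if integration.physical_to_virtual_automated:
        return "digital shadow"
    # Assumption: without an automated plant-to-model flow, the
    # representation is maintained manually and counts as a digital model.
    return "digital model"


print(classify(Integration(True, False)))
```

Under this reading, a flowsheet model that is refreshed by hand from batch records would be a digital model, while the same model fed automatically from plant sensors would be a digital shadow.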

1.2. Digitalization in Pharmaceutical Industry

In line with the US Food and Drug Administration (FDA)’s vision to develop a maximally efficient, agile, flexible pharmaceutical manufacturing sector that reliably produces high quality drugs without extensive regulatory oversight[27], the pharmaceutical industry is embracing the general digitalization trend. Industries, with the help of academic institutions and regulatory agencies, are starting to adopt Industry 4.0 and DT concepts and apply them to research and development, supply chain management, and manufacturing practice[9][28][29][30][31]. The digitalization move that combines Industry 4.0 with International Council for Harmonisation (ICH) guidelines to develop an integrated manufacturing control strategy and operating model is referred to as Pharma 4.0[32].

However, according to a recent survey conducted by Reinhardt et al.[33], the preparedness of the industry for this digitalization move is still unsatisfactory. Most pharmaceutical and biopharmaceutical processes currently rely on quality control checks, laboratory testing, in-process control checks, and standard batch records to assure product quality, whereas process data and models have a lower impact. Within pharmaceutical companies, there are gaps in knowledge of and familiarity with the new digitalization move, which creates a roadblock to implementing such technologies at both the strategic and shop floor levels.

2. Digital Twin Framework

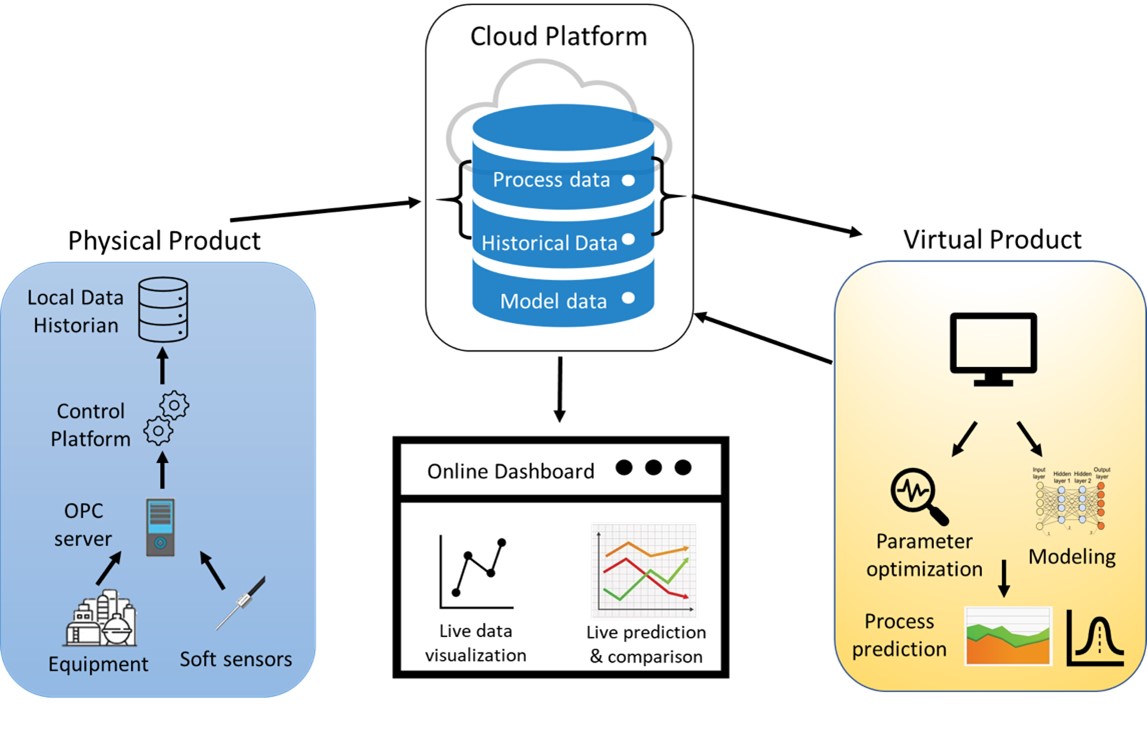

As mentioned in Section 1, a DT has a physical component, a virtual component, and automated data communication between them, which is realized through an integrated data management system. The synergy among the physical space, the virtual space, and the integrated data management platform is illustrated in Figure 1. The physical component consists of all manufacturing sources of data, including different sensors and network equipment (e.g., routers, workstations)[34]. The virtual component needs to be a comprehensive digital representation of the physical component in all aspects[8]. Its models are built on prior knowledge, historical data, and data collected in real time from the physical components, which continuously improve their predictions and keep the virtual space faithful to the physical space. The data management platform includes databases, data transmission protocols, operation data, and model data. The platform should also support data visualization tools in addition to process prediction, dynamic data analysis, and optimization[34].

Figure 1. Physical component, virtual component, and data management platform of a general digital twin (DT) framework.
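
The loop between the physical and virtual components can be sketched in a few lines. The example below is a deliberately minimal stand-in, not the framework of the cited works: the `VirtualComponent` class, the exponential-smoothing update, and the proportional correction are all assumptions chosen only to show data flowing in both directions.

```python
class VirtualComponent:
    """Keeps a running estimate of one process variable and proposes a corrective move."""

    def __init__(self, target, gain=0.5, smoothing=0.3):
        self.target = target
        self.gain = gain
        self.smoothing = smoothing
        self.estimate = None

    def ingest(self, measurement):
        """Update the estimate by exponential smoothing (a stand-in for model updating)."""
        if self.estimate is None:
            self.estimate = measurement
        else:
            self.estimate += self.smoothing * (measurement - self.estimate)
        return self.estimate

    def recommend_adjustment(self):
        """Proportional correction to send back toward the physical component."""
        return self.gain * (self.target - self.estimate)


twin = VirtualComponent(target=10.0)
for reading in [9.0, 9.2, 9.4]:  # synthetic sensor readings
    twin.ingest(reading)
adjustment = twin.recommend_adjustment()
print(round(twin.estimate, 3), round(adjustment, 3))
```

In a real deployment, the `ingest` step would be replaced by a process model update and the readings would arrive through the data management platform rather than a hard-coded list.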

3. Digital Twin in Pharmaceutical Manufacturing

In pharmaceutical manufacturing, the potential of using DTs to facilitate smart manufacturing can be seen in different phases of process development and production. In the process design stage, a DT can significantly accelerate the selection of a manufacturing route and its unit operations because it can represent physical parts with various models. DT simulations provide an understanding of process variations, which allows product quality, productivity, and process attributes to be predicted and reduces the time and cost of physical experiments[35]. In the operation phase, real-time process performance can be monitored and visualized at any time, and the DT can analyze the system continuously to provide control and optimization insights into the process[35]. The DT can also be used as a training platform for operators and engineers, since it supports real-time scenario simulation and on-the-job feedback. With regard to pre- and post-manufacturing tasks, the DT platform can assist with tasks including but not limited to material tracking, serialization, and quality assurance.

Some key requirements for achieving smart manufacturing with DT include real-time system monitoring and control using Process Analytical Technology (PAT), continuous data acquisition from equipment, intermediate and final products, and a continuous global modeling and data analysis platform[29]. The pharmaceutical industry has taken several steps in this direction by using techniques such as Quality-by-Design (QbD)[36], Continuous Manufacturing (CM)[36], flowsheet modeling[37], and PAT implementations[38]. Some of these tools have been investigated extensively, but the overall integration and development of DTs are still in their infancy.

3.1. PAT Methods

A key component in the development of a DT is data collection. In addition to readings from equipment, quality attributes, in particular critical quality attributes (CQAs), also need to be collected from the physical plant in a timely manner for use in the virtual component, because the models and analyses rely on good data. Several traditional technologies exist to determine CQAs, such as sieve analysis and High-Performance Liquid Chromatography (HPLC), but these cannot provide real-time data and are performed away from the production line rather than in-line or at-line. Thus, PAT tools have been explored and developed to address these issues[39].

PAT tools in the pharmaceutical industry have a wide range of applications, including measuring the particle size of crystals[40], monitoring blend uniformity[41], and testing tablet content uniformity[42]. Spectroscopy tools (Nuclear Magnetic Resonance (NMR), Ultraviolet (UV), Raman, near-infrared, mid-infrared, online mass spectrometry) constitute one of the major techniques used to measure the CQAs of pharmaceutical processes. Raman and Near-Infrared Spectroscopy (NIRS) are commonly used in the industry. Raman spectroscopy has been employed for the on-line monitoring of powder blending processes[43]. Since acquisition times for Raman can be longer, NIRS is preferred for real-time measurements. NIRS has been used for real-time monitoring of powder density[15] and blend uniformity of processes[41]. NIRS has also been integrated with control platforms for process monitoring and control[44]. Baranwal et al.[45] employed NIRS to replace HPLC methods to predict active pharmaceutical ingredient (API) concentration in bi-layer tablets. PAT tools have also been used by the pharmaceutical industry to determine the particle size distribution of the product[46]. Several available optical tools, such as Focused Beam Reflectance Measurement (FBRM)[47] and high-resolution camera systems[48], have also been employed in the industry for particle size analysis. Some studies have combined a network of PAT tools into a system that monitors and controls a unit process[39][49].

The US FDA has also taken steps to promote the use of PAT tools in pharmaceutical manufacturing with the goal of ensuring final product quality[50]. The pharmaceutical industry has adopted PAT in various applications throughout the drug-substance manufacturing process[51]. Although this has certainly led to an increase in the usage of PAT tools, their applications remain focused on research and development rather than full-scale manufacturing[38]. In the limited number of cases where they were employed in manufacturing, they have been successful in reducing manufacturing costs and improving the monitoring of product quality[52]. The development of different PAT methods, with their compelling application as an integral part of a monitoring and control strategy[53], has established a building block for gathering essential data from the physical component, enabling the further development of process models and DTs.

3.2. Process Modeling

DTs depend heavily on data and models, and in the pharmaceutical industry, there is a growing interest in the development and application of methods and tools that facilitate their use[54]. Different types of models have been developed for batch and continuous process simulations, material property identification and prediction, system analyses, and advanced control. Papadakis et al. recently proposed a framework for selecting efficient reaction pathways for pharmaceutical manufacturing[55], which includes a series of modeling workflows for reaction pathway identification, reaction and separation analysis, process simulation, evaluation, optimization, and operation[54]. The overall framework would yield an optimized reaction process with an identified design space and process analytical technology information. The models developed under this framework can all be used as the virtual component within a DT framework to provide further process understanding and control of the manufacturing plant.

Modeling approaches can be classified as mechanistic modeling, data-driven modeling, and hybrid modeling. For mechanistic modeling approaches in pharmaceutical manufacturing, the discrete-element method (DEM), finite-element method (FEM), and computational fluid dynamics (CFD) are often used[56]. DEM is a powerful tool for simulating the particle-level or bulk behavior of material flow in different pharmaceutical unit operations and has been applied widely[57][58][59], though its high computational cost limits its practical use when running locally. With high-performance computing (HPC) and cloud computing, it is possible to integrate DEM simulations with the overall process, resulting in a near-real-time model. To model fluid flow in pharmaceutical processes, including API drying and fluidized beds, CFD and FEM are widely implemented[56]. These two methods are also heavily utilized in biopharmaceutical manufacturing (see Section 4.2).

Data-driven modeling methods involve the collection and usage of a large amount of experimental data to generate models, and the resulting models are based on the provided datasets only. Commonly implemented approaches in pharmaceutical manufacturing include the artificial neural network (ANN)[60][61], multivariate statistical analysis, Monte Carlo[62], etc. These methods are less computationally intensive, but due to the lack of underlying physical understanding in the trained models, the prediction outside of the space of the dataset is often unsatisfactory.

There is also a recent trend of developing various types of hybrid modeling techniques to model complex pharmaceutical manufacturing processes while lowering the demands on computational cost and data availability. Population balance modeling (PBM), with a comparatively lower computational cost, has been extensively used to model blending and granulation processes[63][64], and a PBM–DEM hybrid model has also been used to improve model accuracy while maintaining reasonable computational costs[65]. Other semi-empirical hybrid models, such as those that incorporate material properties into process models[66] and those that investigate the effect of material properties on residence time distribution (RTD) and process parameters[58][67][68][69][70], have also been developed for different powder processing unit operations[71][72]. These models, when incorporated into a full DT framework, will facilitate overall product and process design and development, shortening the drug-to-market timeline.
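
As an illustration of what an RTD model looks like, the sketch below evaluates the tanks-in-series curve, a common textbook form. It is an assumption chosen for brevity rather than the model used in the cited studies, and the mean residence time, number of tanks, and time grid are made-up values. The code checks numerically that the curve integrates to one and that its first moment recovers the mean residence time.

```python
import math


def tanks_in_series_rtd(t, tau, n_tanks):
    """E(t) for n_tanks equal stirred tanks in series with total mean residence time tau."""
    tau_i = tau / n_tanks
    return (t ** (n_tanks - 1)) * math.exp(-t / tau_i) / (
        math.factorial(n_tanks - 1) * tau_i ** n_tanks
    )


def trapezoid(ys, dt):
    """Trapezoidal rule on a uniform grid."""
    return dt * (sum(ys) - 0.5 * (ys[0] + ys[-1]))


tau, n_tanks = 60.0, 5            # seconds and tank count (illustrative values)
dt, n_steps = 0.1, 6000           # integrate out to 10 * tau
times = [i * dt for i in range(n_steps + 1)]
e_curve = [tanks_in_series_rtd(t, tau, n_tanks) for t in times]

area = trapezoid(e_curve, dt)
mean_rt = trapezoid([t * e for t, e in zip(times, e_curve)], dt)
print(round(area, 4), round(mean_rt, 2))
```

Increasing `n_tanks` narrows the curve toward plug flow, while `n_tanks = 1` gives the exponential decay of a single well-mixed vessel.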

In addition to developing models for single pharmaceutical unit operations, a flowsheet model integrating the entire manufacturing process can be used to predict the process dynamics affected by material properties and operating conditions of different unit operations. More importantly, systematic process analyses, such as sensitivity analysis, design space identification, and optimization, can all be performed with the flowsheet model. This provides insight into the characteristics and bottlenecks of the process and thus facilitates the development of control strategies[37]. Over years of development, many researchers and pharmaceutical companies have established mature approaches for conducting these analyses offline during the process design phase[71][73][37][74][75]. Flowsheet models are needed for the development of DTs. However, current flowsheet models are stand-alone, so they cannot update automatically to adapt to the physical plant. In current research, there is limited communication between the flowsheet model and the plant, which is a challenge in the development of a DT.
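
A one-at-a-time sensitivity analysis on a flowsheet model can be sketched as follows. The `toy_flowsheet` function is a hypothetical algebraic stand-in for a real flowsheet model, with invented parameter names and an invented saturation term; only the finite-difference procedure is the point of the example.

```python
def toy_flowsheet(params):
    """Hypothetical steady-state map from operating conditions to a quality index."""
    api_feed = params["api_feed_kg_h"]
    excipient_feed = params["excipient_feed_kg_h"]
    blender_rpm = params["blender_rpm"]
    ideal_fraction = api_feed / (api_feed + excipient_feed)
    # Assumed factor that saturates with blender speed (illustrative only).
    mixing_factor = blender_rpm / (blender_rpm + 50.0)
    return ideal_fraction * mixing_factor


def normalized_sensitivities(model, base, rel_step=0.01):
    """One-at-a-time sensitivities (dy/y) / (dx/x) by forward finite difference."""
    y0 = model(base)
    result = {}
    for name, value in base.items():
        perturbed = dict(base)
        perturbed[name] = value * (1.0 + rel_step)
        result[name] = ((model(perturbed) - y0) / y0) / rel_step
    return result


base_case = {"api_feed_kg_h": 2.0, "excipient_feed_kg_h": 18.0, "blender_rpm": 200.0}
sens = normalized_sensitivities(toy_flowsheet, base_case)
for name, value in sens.items():
    print(f"{name}: {value:+.3f}")
```

Parameters with the largest absolute normalized sensitivity are natural candidates for tighter control, although a one-at-a-time scan does not capture interactions between parameters.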

3.3. Data Integration

The implementation of IoT devices in pharmaceutical manufacturing lines leads to the acquisition of vast amounts of data. This collection of process data and CQAs needs to be transmitted to the virtual component in real-time and in an efficient manner. In addition to these, several pharmaceutical process models also require material properties for accurate prediction. Thus, a central database location is required for access to all datasets for the virtual component[76]. In addition, the applications and databases should also be compliant with 21 CFR Part 11 data integrity requirements in accordance with US FDA’s guidance[77]. The database not only serves as a warehouse for real product data but can also be used to store results from simulations performed in the virtual component and optimized process parameters. It would also serve the purpose of relaying back these optimized process parameters to the real product.

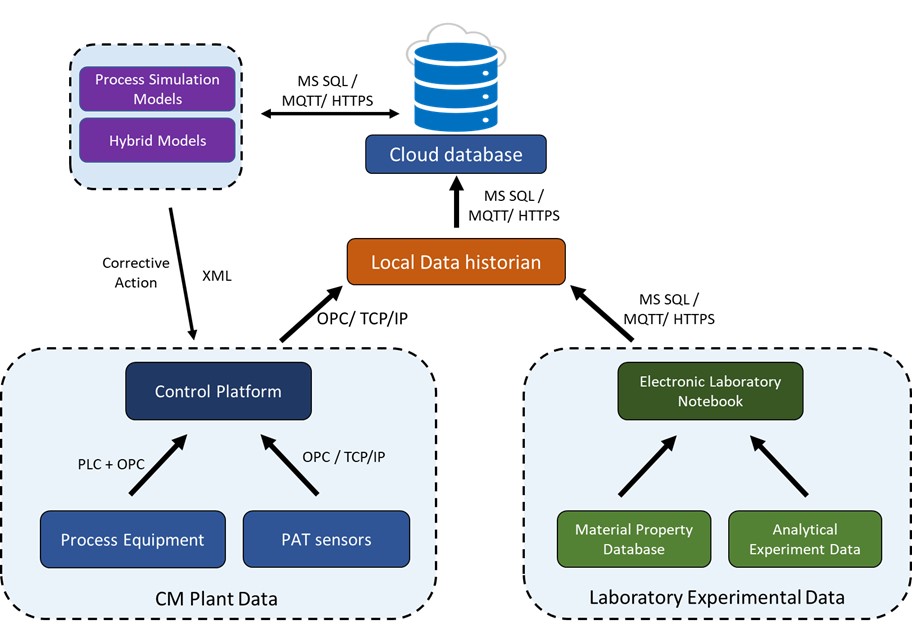

Several studies have attempted to achieve an integrated data framework in downstream pharmaceutical manufacturing[76][78][44][79][80][81][82]. Some of these studies focused on implementing a control system for the direct compression line[44][70][82]. Cao et al.[76] presented an ISA-88 compliant manufacturing execution system (MES) in which the batch data were stored in a cloud database as well as in a local data historian. The communications between the equipment and the control platform were performed in a similar manner in all the studies. The process control system (PCS) created a database based on the input recipe, and the database was replicated directly into the local data historian. The communication between the historian and the PCS can be achieved using TCP/IP and OPC, since each software package is hosted on a different computer system on the same network. The historian database can in turn be duplicated onto the cloud using network protocols such as MQTT and HTTPS. Some authors have also presented ontologies for efficient data flow for laboratory experiments performed during pharmaceutical manufacturing[83][84][85]. Cao et al.[76] also addressed the collection of laboratory data in an ISA-88 applicable recipe-based electronic laboratory notebook. Many of the presented studies focused primarily on integrating one component of a completely integrated data management system. Figure 2 illustrates a sample data integration framework, where data collected from the manufacturing plant as well as laboratory experiments are uploaded to a cloud database using the mentioned protocols. The data can then be used in the virtual component for simulations, and corrective actions can be sent back to the control platform.

Figure 2. Framework for dataflow in a continuous direct compaction tablet line. The text over the arrow indicates options for data transfer protocols.
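
Before a historian record can be duplicated to a cloud database over a protocol such as MQTT or HTTPS, it has to be serialized into a message. The sketch below shows one possible shape for such a message using only the Python standard library. The field names, the topic hierarchy, and the example identifiers are hypothetical and are not taken from the cited studies, and no particular broker or client library is implied.

```python
import json
from datetime import datetime, timezone


def build_historian_record(batch_id, unit, tag, value, timestamp):
    """Serialize one tagged measurement as a JSON message for upload."""
    record = {
        "batch_id": batch_id,
        "unit_operation": unit,
        "tag": tag,
        "value": value,
        "timestamp_utc": timestamp.astimezone(timezone.utc).isoformat(),
    }
    return json.dumps(record, sort_keys=True)


def topic_for(site, line, unit, tag):
    """Hypothetical hierarchical topic name of the kind a message broker might use."""
    return "/".join([site, line, unit, tag])


message = build_historian_record(
    "B-0001", "blender", "api_concentration_pct", 10.2,
    datetime(2020, 1, 1, 12, 0, 0, tzinfo=timezone.utc),
)
topic = topic_for("site-a", "dc-line-1", "blender", "api_concentration_pct")
print(topic)
print(message)
```

Carrying the batch identifier and a UTC timestamp in every record keeps plant and laboratory data joinable later, which matters when the same database also stores simulation results.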

4. Digital Twin in Biopharmaceutical Manufacturing

Biopharmaceutical manufacturing focuses on the production of large molecule-based products in heterogeneous mixtures, which can be used to treat cancer as well as inflammatory and microbiological diseases[86][87]. To fulfill FDA regulations and obtain safe products, biopharmaceutical operations should be strictly controlled and carried out in a sterile process environment.

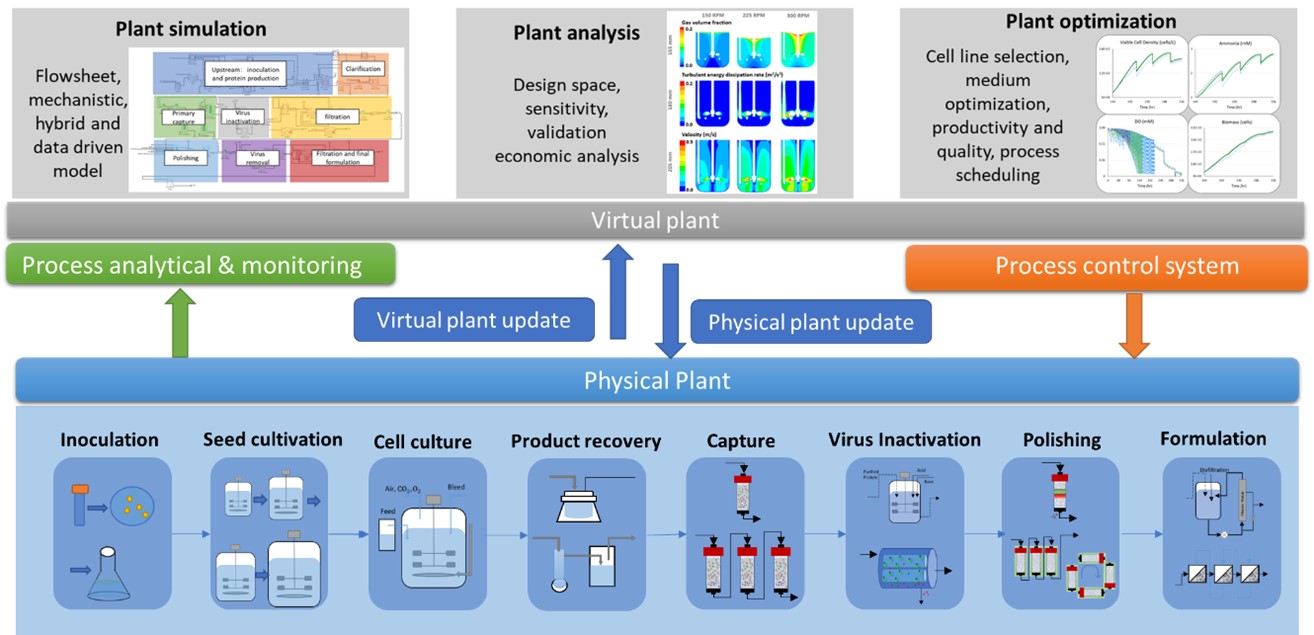

In recent years, there has been an increasing demand for biologic-based drugs, which drives the need for manufacturing efficiency and effectiveness[88]. Thus, many companies are transitioning from batch to continuous operation mode and employing smart manufacturing systems[87]. A DT integrates the physical plant, data collection, data analysis, and system control[4], and can assist biopharmaceutical manufacturing in product development, process prediction, decision making, and risk analysis, as shown in Figure 4. Monoclonal antibody (mAb) production is selected as an example to represent the physical plant, which includes cell inoculation, seed cultivation, production bioreactor, recovery, primary capture, virus inactivation, polishing, and final formulation. These operations produce and purify protein products. Quality attributes (mainly protein structure and composition) and impurities need to be monitored, and the measurements transferred to the virtual plant for analysis and virtual plant updates. The virtual plant includes plant simulation, analysis, and optimization, which guide diagnosis and updating of the physical plant with the help of the process control system. Integrated mAb production flowsheet modeling, bioreactor analysis and design space, and biomass optimization are the examples shown in the three sections of the figure. However, the capabilities of the virtual plant are not limited to the examples listed above. To understand the progress of DT development in biopharmaceutical manufacturing, this section reviews process monitoring, modeling, and data integration (communication between the virtual and physical plants) in the existing industry and analyzes the possibilities and gaps in achieving integrated biopharmaceutical DT manufacturing.

4.1. PAT Methods

Biological products are highly sensitive to cell line and operating conditions, while the fractions and structures of the product molecules are closely related to drug efficacy[89]. Thus, a real-time process diagnostic and control system is essential to maintain consistent product quality. However, process contamination needs to be strictly controlled in biopharmaceutical manufacturing; thus, the monitoring system should neither be affected by fouling nor interfere with the media, so that monitoring accuracy, sensitivity, stability, and reproducibility are maintained[90]. In general, both process parameters and quality attributes need to be captured across the different unit operations.

Biechele et al.[90] presented a review of sensing applied in bioprocess monitoring. In general, process monitoring includes physical, chemical, and biological variables. In the gas phase, the commonly used sensing system consists of semiconducting, electrochemical, and paramagnetic sensors, which can be applied to oxygen and carbon dioxide measurements[90][91]. In the liquid phase, dissolved oxygen, carbon dioxide, and pH values have been monitored by an in-line electrochemical sensor. However, media composition, protein production, and qualities such as glycan fractions are mostly measured by online or at-line HPLC or GC/MS[91][92]. The specific product quality monitoring methods are reviewed by Guerra et al.[93] and Pais et al.[94].

Recently, spectroscopy methods have been developed for accurate, real-time monitoring of both upstream and downstream operations. Industrial spectroscopy applications mainly focus on cell growth monitoring and quantification of culture fluid components[95]. UV/Vis and multiwavelength UV spectroscopy have been used for in-line real-time protein quantification[95]. NIR has been used for off-line raw material and final product testing[95]. Raman spectroscopy has been used for viable cell density, metabolite, and antibody concentration measurements[96][97]. In addition, spectroscopy methods can also be used for process CQA monitoring, such as host cell protein and protein post-translational modifications[92][98]. Research shows that in-line Raman spectroscopy and Mid-IR are capable of monitoring protein concentration, aggregation, host cell proteins (HCPs), and charge variants[99][100]. The spectroscopy methods are usually supported by chemometrics, which requires data pretreatments such as background correction and spectral smoothing, followed by multivariate analysis for quantitative and qualitative analysis of the attributes. Many different applications of spectroscopic sensing are reviewed in the literature[95][92][93][98].
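
Two of the pretreatment steps named above, background correction and spectral smoothing, can be sketched with very simple stand-ins. The endpoint-based linear baseline and the moving-average filter below are assumptions chosen for clarity; production chemometrics workflows typically use more refined methods, and the spectrum is synthetic.

```python
def subtract_linear_baseline(spectrum):
    """Remove the straight line joining the first and last points (a crude background correction)."""
    n = len(spectrum)
    first, last = spectrum[0], spectrum[-1]
    return [y - (first + (last - first) * i / (n - 1)) for i, y in enumerate(spectrum)]


def moving_average(spectrum, window=3):
    """Smooth with a centered moving average; edge points use the available neighbors only."""
    half = window // 2
    smoothed = []
    for i in range(len(spectrum)):
        lo, hi = max(0, i - half), min(len(spectrum), i + half + 1)
        smoothed.append(sum(spectrum[lo:hi]) / (hi - lo))
    return smoothed


raw = [1.0, 1.5, 2.0, 5.5, 3.0, 3.5, 4.0]  # synthetic: sloped background plus one peak
corrected = subtract_linear_baseline(raw)
smoothed = moving_average(corrected, window=3)
print(corrected)
print(smoothed)
```

The example also shows the usual trade-off: smoothing suppresses noise but lowers and broadens the peak, so the window size has to be chosen with the downstream multivariate model in mind.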

4.2. Process Modeling

The application of DT in biopharmaceutical manufacturing requires a complete virtual description of the physical plant within a simulation platform[4]. This means that the simulation should capture the important process dynamics in each unit operation within an integrated model. Previous reviews have focused on the process modeling methods for both upstream and downstream operations[88][101][102][103][104][105].

For the upstream bioreactor, extracellular fluid dynamics[106][107][108], system heterogeneities, and intracellular biochemical pathways[109][110][111][112][113][114][115][116][117][118][119][120] can be captured. Process modeling supports early-stage cell-line development, helps identify optimal media formulations, and enables prediction of the overall bioreactor performance, including cell activities, metabolite concentrations, productivity, and product quality under different process parameters[121][122]. The influence of various parameters such as temperature, pH, dissolved oxygen, feeding strategies, and amino acid concentrations can be captured and further used to optimize process operations[123][124][125][126][127].
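
To make the idea of predicting bioreactor performance concrete, the sketch below integrates Monod growth kinetics for a batch culture with an explicit Euler scheme. Monod kinetics is a generic textbook model used here as an assumption, not the model of any cited study, and every parameter value is invented for illustration.

```python
def simulate_batch(x0, s0, mu_max, k_s, yield_xs, dt, n_steps):
    """Explicit-Euler integration of Monod growth in a batch bioreactor."""
    x, s = x0, s0
    trajectory = [(0.0, x, s)]
    for step in range(1, n_steps + 1):
        mu = mu_max * s / (k_s + s)           # specific growth rate, 1/h
        growth = mu * x * dt                  # biomass formed in this step, g/L
        consumed = min(s, growth / yield_xs)  # substrate cannot go negative
        x += consumed * yield_xs
        s -= consumed
        trajectory.append((step * dt, x, s))
    return trajectory


traj = simulate_batch(x0=0.1, s0=10.0, mu_max=0.4, k_s=0.5,
                      yield_xs=0.5, dt=0.01, n_steps=5000)
t_end, x_end, s_end = traj[-1]
print(f"t = {t_end:.1f} h, biomass = {x_end:.3f} g/L, substrate = {s_end:.6f} g/L")
```

The quantity `x + yield_xs * s` is conserved at every step, which gives a quick sanity check on the integration. Extending the model with temperature or pH dependence of `mu_max` is one way the parameter effects listed above could be represented.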

For downstream operations, modeling strategies have focused on selecting design parameters and adjusting operating conditions and buffer usage to achieve high protein productivity and purity efficiently. The different operating conditions include (1) flowrate, buffer pH, and salt concentration for chromatography operation[127][128][129][130][131]; (2) residence time, buffer concentration, and pH for virus inactivation; and (3) feed protein concentration, flux, and retentate pressure for filtration[132]. Thus, the product concentration and various types of impurities can be predicted for each unit operation. The detailed modeling methods have been reviewed in the literature[133].

In recent years, biopharmaceutical companies have been shifting from batch to continuous operations. It remains an open question whether it is more feasible to start up a new, fully continuous process plant or to replace specific unit operations with continuous units. Integrated process modeling provides a virtual platform to test various operating strategies such as batch, continuous, and hybrid operating modes[134]. These different operating modes can be compared based on life cycle analysis and economic analysis for different target products under various operation scales[134][135][136][137][138].

For flowsheet modeling, two approaches are available in the literature: mechanistic and data-driven models. Due to the high computational cost, mechanistic modeling mostly focuses on the integration of a limited number of units, such as the combination of multiple chromatography operations[139]. Data-driven/empirical models are generally used to integrate all the unit operations in a computationally efficient way. A mechanistic model of a single unit can be integrated with data-driven models of the other units to optimize that specific unit within the integrated process[140]. Mass flow and RTD models[141] can be included in the model to examine different scenarios of adding and replacing unit operations and adjusting process parameters. Coupled with the control system, flowsheet modeling will be able to achieve real-time decision making and optimize the overall process operation automatically[142].

The data-driven models can be further integrated with Monte Carlo analysis or linear/nonlinear programming for risk assessment and process scheduling. Zahel et al.[143] applied Monte Carlo simulation to an end-to-end data-driven model, which can be used to estimate process capabilities and support risk-based decision making following a change in the manufacturing operations.
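
The general idea of propagating variability through an end-to-end model with Monte Carlo sampling can be sketched as follows. This is not the method of Zahel et al.; the three-step process, the input distributions, and the specification limit are all invented for illustration.

```python
import random


def simulate_final_output(rng):
    """Hypothetical end-to-end model: bioreactor titer times three downstream step yields."""
    titer = rng.gauss(5.0, 0.3)              # g/L, assumed distribution
    capture_yield = rng.uniform(0.90, 0.98)
    polishing_yield = rng.uniform(0.92, 0.99)
    formulation_yield = rng.uniform(0.95, 0.99)
    return titer * capture_yield * polishing_yield * formulation_yield


def out_of_spec_probability(n_runs, lower_spec, seed=0):
    """Fraction of simulated batches whose output falls below the lower specification."""
    rng = random.Random(seed)  # seeded so the estimate is reproducible
    failures = sum(simulate_final_output(rng) < lower_spec for _ in range(n_runs))
    return failures / n_runs


p_fail = out_of_spec_probability(n_runs=20000, lower_spec=3.8)
print(f"Estimated out-of-spec probability: {p_fail:.3f}")
```

Rerunning the estimate after changing one input distribution, for example a wider capture yield range, gives a simple picture of how a proposed manufacturing change would shift the risk of failing specification.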

4.3. Data Integration

Data obtained in the biopharmaceutical monitoring system are usually heterogeneous in data types and time scales. They can be collected from different sensors, production lines (laboratory or manufacturing), and at different time intervals. With the development of real-time PAT sensors, a large amount of data is obtained during biopharmaceutical manufacturing. Thus, data preprocessing is essential to handle missing data, perform data visualization, and reduce dimension[144]. Casola et al.[145] presented data mining-based algorithms to stem, classify, filter, and cluster historical real-time data in batch biopharmaceutical manufacturing. Lee et al.[146] applied data fusion to combine multiple spectroscopic techniques and predict the composition of raw materials. These preprocessing algorithms remove noise from the dataset and allow the data to be used in a virtual component directly.
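
One routine preprocessing task implied above is aligning measurements taken at different time intervals. The sketch below carries the last available value of a sparse at-line measurement forward onto the time grid of a fast in-line sensor. The function name and the sample data are hypothetical, and forward filling is only one of several possible strategies for handling missing values.

```python
def forward_fill_align(fast_times, slow_samples):
    """For each fast timestamp (ascending), return the latest slow value at or before it.

    Returns None for timestamps that precede the first slow sample.
    """
    slow_sorted = sorted(slow_samples)  # list of (time, value) pairs
    aligned, idx, last = [], 0, None
    for t in fast_times:
        while idx < len(slow_sorted) and slow_sorted[idx][0] <= t:
            last = slow_sorted[idx][1]
            idx += 1
        aligned.append(last)
    return aligned


sensor_times = [0, 1, 2, 3, 4, 5]           # in-line sensor, one reading per minute
assay_samples = [(2, 1.10), (4, 1.35)]      # sparse at-line assay results (time, value)
aligned_assay = forward_fill_align(sensor_times, assay_samples)
print(aligned_assay)
```

The leading `None` entries make the missing period explicit, so that a downstream model can decide whether to drop those rows or impute them differently.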

In DTs, virtual components and physical components should communicate frequently. Thus, the virtual platforms need the flexibility to adjust their model structure for different products and operating conditions. Herold and King[147] presented an algorithm that used biological phenomena to identify the structure of a fed-batch bioreactor process model automatically. Luna and Martinez[148] used experimental data to train an imperfect mathematical model and correct its prediction errors. Although there are no such applications for the integrated process yet, these works show that communication between physical and virtual components is achievable.
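
The idea of using plant data to correct an imperfect model can be illustrated with the simplest possible case, a linear correction fitted by ordinary least squares. This is a stand-in chosen for brevity and is not the method of the cited works; the prediction and measurement values are synthetic.

```python
def fit_linear_correction(predicted, measured):
    """Ordinary least squares fit of measured = slope * predicted + intercept."""
    n = len(predicted)
    mean_p = sum(predicted) / n
    mean_m = sum(measured) / n
    s_pp = sum((p - mean_p) ** 2 for p in predicted)
    s_pm = sum((p - mean_p) * (m - mean_m) for p, m in zip(predicted, measured))
    slope = s_pm / s_pp
    intercept = mean_m - slope * mean_p
    return slope, intercept


model_predictions = [1.0, 2.0, 3.0, 4.0]   # output of the imperfect model
plant_measurements = [1.3, 2.4, 3.5, 4.6]  # synthetic experimental data
slope, intercept = fit_linear_correction(model_predictions, plant_measurements)
corrected_prediction = slope * 5.0 + intercept
print(round(slope, 3), round(intercept, 3), round(corrected_prediction, 3))
```

Refitting the correction whenever new measurements arrive is one lightweight way for a virtual component to track the physical plant without rebuilding the underlying model.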

In biopharmaceutical manufacturing, the integrated database can guide process-wide automatic monitoring and control[149]. Fahey et al. applied Six Sigma and CRISP-DM methods and integrated data collection, data mining, and model predictions for upstream bioreactor operations. Although process optimization and control were not considered in this work, it still shows the capability to handle large amounts of data for predictive process modeling[150]. Feidl et al.[149] used a supervisory control and data acquisition (SCADA) system to collect and store data from different unit operations at each sample time and developed a monitoring and control system in MATLAB. The work shows the integration of supervisory control with a data acquisition system in a fully end-to-end biopharmaceutical plant. However, process modeling was not considered during the process operations, so the system cannot support process prediction and analysis.

5. Conclusions

DTs are a crucial development in the close integration of manufacturing information and physical resources, and they have attracted considerable attention across industries. The critical parts of a fully developed DT are the physical components, the virtual components, and the data communication channels that link them. Following the development of IoT technologies, DTs have been applied in many industries, but progress lags in pharmaceutical and biopharmaceutical manufacturing. This entry summarizes the current state of DT development in these two application scenarios, provides insights for stakeholders, and highlights possible challenges and solutions in implementing a fully integrated DT.

This entry is adapted from the peer-reviewed paper 10.3390/pr8091088

References

- Legner, C.; Eymann, T.; Hess, T.; Matt, C.; Böhmann, T.; Drews, P.; Mädche, A.; Urbach, N.; Ahlemann, F. Digitalization: Opportunity and Challenge for the Business and Information Systems Engineering Community. Bus. Inf. Syst. Eng. 2017, 59, 301–308.

- Kritzinger, W.; Karner, M.; Traar, G.; Henjes, J.; Sihn, W. Digital Twin in manufacturing: A categorical literature review and classification. IFAC-PapersOnLine 2018, 51, 1016–1022.

- Oztemel, E.; Gursev, S. Literature review of Industry 4.0 and related technologies. J. Intell. Manuf. 2018, 31, 127–182.

- Tao, F.; Cheng, J.; Qi, Q.; Zhang, M.; Zhang, H.; Sui, F. Digital twin-driven product design, manufacturing and service with big data. Int. J. Adv. Manuf. Technol. 2018, 94, 3563–3576.

- Venkatasubramanian, V. The promise of artificial intelligence in chemical engineering: Is it here, finally? AIChE J. 2019, 65, 466–478.

- Bao, J.; Guo, D.; Li, J.; Zhang, J. The modelling and operations for the digital twin in the context of manufacturing. Enterp. Inf. Syst. 2018, 13, 534–556.

- Tao, F.; Qi, Q.; Wang, L.; Nee, A.Y.C. Digital Twins and Cyber–Physical Systems toward Smart Manufacturing and Industry 4.0: Correlation and Comparison. Engineering 2019, 5, 653–661.

- Haag, S.; Anderl, R. Digital twin—Proof of concept. Manuf. Lett. 2018, 15, 64–66.

- Litster, J.; Bogle, I.D.L. Smart Process Manufacturing for Formulated Products. Engineering 2019, 5, 1003–1009.

- Tourlomousis, F.; Chang, R.C. Dimensional Metrology of Cell-matrix Interactions in 3D Microscale Fibrous Substrates. Procedia CIRP 2017, 65, 32–37.

- Khan, M.; Wu, X.; Xu, X.; Dou, W. Big data challenges and opportunities in the hype of Industry 4.0. In Proceedings of the 2017 IEEE International Conference on Communications (ICC), Paris, France, 21–25 May 2017.

- Li, X.; Li, D.; Wan, J.; Vasilakos, A.V.; Lai, C.-F.; Wang, S. A review of industrial wireless networks in the context of Industry 4.0. Wirel. Netw. 2015, 23, 23–41.

- Uhlemann, T.H.J.; Schock, C.; Lehmann, C.; Freiberger, S.; Steinhilper, R. The Digital Twin: Demonstrating the Potential of Real Time Data Acquisition in Production Systems. Procedia Manuf. 2017, 9, 113–120.

- Belanger, J.; Venne, P.; Paquin, J.-N. The What, Where and Why of Real-Time Simulation. Planet RT. 2010, 1, 37–49.

- Roman-Ospino, A.D.; Singh, R.; Ierapetritou, M.; Ramachandran, R.; Mendez, R.; Ortega-Zuniga, C.; Muzzio, F.J.; Romanach, R.J. Near infrared spectroscopic calibration models for real time monitoring of powder density. Int. J. Pharm. 2016, 512, 61–74.

- Damiani, L.; Demartini, M.; Guizzi, G.; Revetria, R.; Tonelli, F. Augmented and virtual reality applications in industrial systems: A qualitative review towards the industry 4.0 era. IFAC-PapersOnLine 2018, 51, 624–630.

- Zühlke, D.; Gorecky, D.; Schmitt, M.; Loskyll, M. Human-machine-interaction in the industry 4.0 era. In Proceedings of the 2014 12th IEEE International Conference on Industrial Informatics (INDIN), Porto Alegre, Brazil, 27–30 July 2014.

- Zhuang, C.; Liu, J.; Xiong, H. Digital twin-based smart production management and control framework for the complex product assembly shop-floor. Int. J. Adv. Manuf. Technol. 2018, 96, 1149–1163.

- Leng, J.; Zhang, H.; Yan, D.; Liu, Q.; Chen, X.; Zhang, D. Digital twin-driven manufacturing cyber-physical system for parallel controlling of smart workshop. J. Ambient Intell. Humaniz. Comput. 2018, 10, 1155–1166.

- Rosen, R.; von Wichert, G.; Lo, G.; Bettenhausen, K.D. About The Importance of Autonomy and Digital Twins for the Future of Manufacturing. IFAC-PapersOnLine 2015, 48, 567–572.

- Mayani, M.G.; Svendsen, M.; Oedegaard, S.I. Drilling Digital Twin Success Stories the Last 10 Years. In Proceedings of the SPE Norway One Day Seminar, Bergen, Norway, 18 April 2018.

- Schleich, B.; Anwer, N.; Mathieu, L.; Wartzack, S. Shaping the digital twin for design and production engineering. CIRP Ann. 2017, 66, 141–144.

- Grieves, M. Digital Twin: Manufacturing Excellence through Virtual Factory Replication. White Paper 2014, 1, 1–7.

- Grieves, M.; Vickers, J. Digital Twin: Mitigating Unpredictable, Undesirable Emergent Behavior in Complex Systems. In Transdisciplinary Perspectives on Complex Systems; Springer: Cham, Switzerland, 2017; pp. 85–113.

- Stark, R.; Fresemann, C.; Lindow, K. Development and operation of Digital Twins for technical systems and services. CIRP Ann. 2019, 68, 129–132.

- Glaessgen, E.H.; Stargel, D.S. The Digital Twin Paradigm for Future NASA and U.S. Air Force Vehicles. In Proceedings of the 53rd AIAA/ASME/ASCE/AHS/ASC Structures, Structural Dynamics and Materials Conference—Special Session on the Digital Twin, Honolulu, HI, USA, 23–26 April 2012.

- O’Connor, T.F.; Yu, L.X.; Lee, S.L. Emerging technology: A key enabler for modernizing pharmaceutical manufacturing and advancing product quality. Int. J. Pharm. 2016, 509, 492–498.

- Ding, B. Pharma Industry 4.0: Literature review and research opportunities in sustainable pharmaceutical supply chains. Process Saf. Environ. Prot. 2018, 119, 115–130.

- Barenji, R.V.; Akdag, Y.; Yet, B.; Oner, L. Cyber-physical-based PAT (CPbPAT) framework for Pharma 4.0. Int. J. Pharm. 2019, 567, 118445.

- Steinwandter, V.; Borchert, D.; Herwig, C. Data science tools and applications on the way to Pharma 4.0. Drug Discov. Today 2019, 24, 1795–1805.

- Lopes, M.R.; Costigliola, A.; Pinto, R.; Vieira, S.; Sousa, J.M.C. Pharmaceutical quality control laboratory digital twin—A novel governance model for resource planning and scheduling. Int. J. Prod. Res. 2019, 1–15. doi:10.1080/00207543.2019.1683250.

- Kumar, S.; Talasila, D.; Gowrav, M.; Gangadharappa, H. Adaptations of Pharma 4.0 from Industry 4.0. Drug Invent. Today 2020, 14, 405–415.

- Reinhardt, I.C.; Oliveira, D.J.C.; Ring, D.D.T. Current Perspectives on the Development of Industry 4.0 in the Pharmaceutical Sector. J. Ind. Inf. Integr. 2020, 18, 100131.

- Zhang, C.; Xu, W.; Liu, J.; Liu, Z.; Zhou, Z.; Pham, D.T. A Reconfigurable Modeling Approach for Digital Twin-based Manufacturing System. Procedia CIRP 2019, 83, 118–125.

- Barrasso, D. Developing and applying digital twins for Continuous Drug Product Manufacturing. In Proceedings of the PSE Advanced Process Modeling Forum, Tarrytown, NY, USA, 10–12 September 2019.

- Ierapetritou, M.; Muzzio, F.; Reklaitis, G. Perspectives on the continuous manufacturing of powder-based pharmaceutical processes. AIChE J. 2016, 62, 1846–1862.

- Boukouvala, F.; Niotis, V.; Ramachandran, R.; Muzzio, F.J.; Ierapetritou, M.G. An integrated approach for dynamic flowsheet modeling and sensitivity analysis of a continuous tablet manufacturing process. Comput. Chem. Eng. 2012, 42, 30–47.

- Kamble, R.; Sharma, S.; Varghese, V.; Mahadik, K. Process analytical technology (PAT) in pharmaceutical development and its application. Int. J. Pharm. Sci. Rev. Res. 2013, 23, 212–223.

- Simon, L.L.; Pataki, H.; Marosi, G.; Meemken, F.; Hungerbühler, K.; Baiker, A.; Tummala, S.; Glennon, B.; Kuentz, M.; Steele, G.; et al. Assessment of Recent Process Analytical Technology (PAT) Trends: A Multiauthor Review. Org. Process Res. Dev. 2015, 19, 3–62.

- Yu, Z.; Chew, J.; Chow, P.; Tan, R. Recent advances in crystallization control: An industrial perspective. Chem. Eng. Res. Des. 2007, 85, 893–905.

- Sierra-Vega, N.O.; Román-Ospino, A.; Scicolone, J.; Muzzio, F.J.; Romañach, R.J.; Méndez, R. Assessment of blend uniformity in a continuous tablet manufacturing process. Int. J. Pharm. 2019, 560, 322–333.

- Goodwin, D.J.; van den Ban, S.; Denham, M.; Barylski, I. Real time release testing of tablet content and content uniformity. Int. J. Pharm. 2018, 537, 183–192.

- De Beer, T.R.M.; Bodson, C.; Dejaegher, B.; Walczak, B.; Vercruysse, P.; Burggraeve, A.; Lemos, A.; Delattre, L.; Heyden, Y.V.; Remon, J.P.; et al. Raman spectroscopy as a process analytical technology (PAT) tool for the in-line monitoring and understanding of a powder blending process. J. Pharm. Biomed. Anal. 2008, 48, 772–779.

- Singh, R.; Sahay, A.; Muzzio, F.; Ierapetritou, M.; Ramachandran, R. A systematic framework for onsite design and implementation of a control system in a continuous tablet manufacturing process. Comput. Chem. Eng. 2014, 66, 186–200.

- Baranwal, Y.; Román-Ospino, A.D.; Keyvan, G.; Ha, J.M.; Hong, E.P.; Muzzio, F.J.; Ramachandran, R. Prediction of dissolution profiles by non-destructive NIR spectroscopy in bilayer tablets. Int. J. Pharm. 2019, 565, 419–436.

- Shekunov, B.Y.; Chattopadhyay, P.; Tong, H.H.; Chow, A.H. Particle size analysis in pharmaceutics: Principles, methods and applications. Pharm. Res. 2007, 24, 203–227.

- Wu, H.; White, M.; Khan, M. Quality-by-Design (QbD): An integrated process analytical technology (PAT) approach for a dynamic pharmaceutical co-precipitation process characterization and process design space development. Int. J. Pharm. 2011, 405, 63–78.

- Meng, W.; Román-Ospino, A.D.; Panikar, S.S.; O'Callaghan, C.; Gilliam, S.J.; Ramachandran, R.; Muzzio, F.J. Advanced process design and understanding of continuous twin-screw granulation via implementation of in-line process analytical technologies. Adv. Powder Technol. 2019, 30, 879–894.

- Ostergaard, I.; Szilagyi, B.; de Diego, H.L.; Qu, H.; Nagy, Z.K. Polymorphic Control and Scale-Up Strategy for Antisolvent Crystallization Using Direct Nucleation Control. Cryst. Growth Des. 2020, 20, 2683–2697.

- U.S. Department of Health and Human Services, F.D.A. PAT-A Framework for Innovative Pharmaceutical Development, Manufacturing and Quality Assurance; U.S. Department of Health and Human Services, F.D.A.: Rockville, MD, USA, 2004.

- Bakeev, K.A. Process Analytical Technology: Spectroscopic Tools and Implementation Strategies for the Chemical and Pharmaceutical Industries, 2nd ed.; John Wiley & Sons: Hoboken, NJ, USA, 2010.

- James, M.; Stanfield, C.F.; Bir, G. A Review of Process Analytical Technology (PAT) in the U.S. Pharmaceutical Industry. Curr. Pharm. Anal. 2006, 2, 405–414.

- Nagy, Z.K.; Fevotte, G.; Kramer, H.; Simon, L.L. Recent advances in the monitoring, modelling and control of crystallization systems. Chem. Eng. Res. Des. 2013, 91, 1903–1922.

- Simon, L.L.; Kiss, A.A.; Cornevin, J.; Gani, R. Process engineering advances in pharmaceutical and chemical industries: Digital process design, advanced rectification, and continuous filtration. Curr. Opin. Chem. Eng. 2019, 25, 114–121.

- Papadakis, E.; Woodley, J.M.; Gani, R. Perspective on PSE in pharmaceutical process development and innovation. In Process Systems Engineering for Pharmaceutical Manufacturing; Elsevier: Amsterdam, Netherlands, 2018; pp. 597–656.

- Pandey, P.; Bharadwaj, R.; Chen, X. Modeling of drug product manufacturing processes in the pharmaceutical industry. In Predictive Modeling of Pharmaceutical Unit Operations; Woodhead Publishing: Sawston, Cambridge, UK, 2017; pp. 1–13.

- Escotet-Espinoza, M.S.; Foster, C.J.; Ierapetritou, M. Discrete Element Modeling (DEM) for mixing of cohesive solids in rotating cylinders. Powder Technol. 2018, 335, 124–136.

- Toson, P.; Siegmann, E.; Trogrlic, M.; Kureck, H.; Khinast, J.; Jajcevic, D.; Doshi, P.; Blackwood, D.; Bonnassieux, A.; Daugherity, P.D.; et al. Detailed modeling and process design of an advanced continuous powder mixer. Int. J. Pharm. 2018, 552, 288–300.

- Bhalode, P.; Ierapetritou, M. Discrete element modeling for continuous powder feeding operation: Calibration and system analysis. Int. J. Pharm. 2020, 585, 119427.

- Rantanen, J.; Khinast, J. The Future of Pharmaceutical Manufacturing Sciences. J. Pharm. Sci. 2015, 104, 3612–3638.

- Sajjia, M.; Shirazian, S.; Kelly, C.B.; Albadarin, A.B.; Walker, G. ANN Analysis of a Roller Compaction Process in the Pharmaceutical Industry. Chem. Eng. Technol. 2017, 40, 487–492.

- Pandey, P.; Katakdaunde, M.; Turton, R. Modeling weight variability in a pan coating process using Monte Carlo simulations. AAPS PharmSciTech 2006, 7, E2–E11.

- Prakash, A.V.; Chaudhury, A.; Barrasso, D.; Ramachandran, R. Simulation of population balance model-based particulate processes via parallel and distributed computing. Chem. Eng. Res. Des. 2013, 91, 1259–1271.

- Metta, N.; Verstraeten, M.; Ghijs, M.; Kumar, A.; Schafer, E.; Singh, R.; De Beer, T.; Nopens, I.; Cappuyns, P.; Van Assche, I.; et al. Model development and prediction of particle size distribution, density and friability of a comilling operation in a continuous pharmaceutical manufacturing process. Int. J. Pharm. 2018, 549, 271–282.

- Barrasso, D.; Tamrakar, A.; Ramachandran, R. Model Order Reduction of a Multi-scale PBM-DEM Description of a Wet Granulation Process via ANN. Procedia Eng. 2015, 102, 1295–1304.

- Bostijn, N.; et al. A multivariate approach to predict the volumetric and gravimetric feeding behavior of a low feed rate feeder based on raw material properties. Int. J. Pharm. 2018, 557, 342–353.

- Van Snick, B.; Grymonpre, W.; Dhondt, J.; Pandelaere, K.; Di Pretoro, G.; Remon, J.P.; De Beer, T.; Vervaet, C.; Vanhoorne, V. Impact of blend properties on die filling during tableting. Int. J. Pharm. 2018, 549, 476–488.

- Escotet-Espinoza, M.S.; Moghtadernejad, S.; Oka, S.; Wang, Y.; Roman-Ospino, A.; Schäfer, E.; Cappuyns, P.; Van Assche, I.; Futran, M.; Ierapetritou, M.; et al. Effect of tracer material properties on the residence time distribution (RTD) of continuous powder blending operations. Part I of II: Experimental evaluation. Powder Technol. 2019, 342, 744–763.

- Escotet-Espinoza, M.S.; Moghtadernejad, S.; Oka, S.; Wang, Z.; Wang, Y.; Roman-Ospino, A.; Schäfer, E.; Cappuyns, P.; Van Assche, I.; Futran, M.; et al. Effect of material properties on the residence time distribution (RTD) characterization of powder blending unit operations. Part II of II: Application of models. Powder Technol. 2019, 344, 525–544.

- Escotet-Espinoza, M.S.; Vadodaria, S.; Singh, R.; Muzzio, F.J.; Ierapetritou, M.G. Modeling the effects of material properties on tablet compaction: A building block for controlling both batch and continuous pharmaceutical manufacturing processes. Int. J. Pharm. 2018, 543, 274–287.

- Wang, Z.; Escotet-Espinoza, M.S.; Ierapetritou, M. Process analysis and optimization of continuous pharmaceutical manufacturing using flowsheet models. Comput. Chem. Eng. 2017, 107, 77–91.

- Rogers, A.; Hashemi, A.; Ierapetritou, M. Modeling of Particulate Processes for the Continuous Manufacture of Solid-Based Pharmaceutical Dosage Forms. Processes 2013, 1, 67–127.

- Bhosekar, A.; Ierapetritou, M. Advances in surrogate based modeling, feasibility analysis, and optimization: A review. Comput. Chem. Eng. 2018, 108, 250–267.

- Metta, N.; Ghijs, M.; Schäfer, E.; Kumar, A.; Cappuyns, P.; Assche, I.V.; Singh, R.; Ramachandran, R.; Beer, T.D.; Ierapetritou, M.; et al. Dynamic Flowsheet Model Development and Sensitivity Analysis of a Continuous Pharmaceutical Tablet Manufacturing Process Using the Wet Granulation Route. Processes 2019, 7, 234.

- Wang, Z.; Escotet-Espinoza, M.S.; Singh, R.; Ierapetritou, M. Surrogate-based Optimization for Pharmaceutical Manufacturing Processes. In Computer Aided Chemical Engineering; Espuña, A., Graells, M., Puigjaner, L., Eds.; Elsevier: Amsterdam, Netherlands, 2017; pp. 2797–2802.

- Cao, H.; Mushnoori, S.; Higgins, B.; Kollipara, C.; Fermier, A.; Hausner, D.; Jha, S.; Singh, R.; Ierapetritou, M.; Ramachandran, R. A Systematic Framework for Data Management and Integration in a Continuous Pharmaceutical Manufacturing Processing Line. Processes 2018, 6, 53.

- U.S. Department of Health and Human Services, F.D.A. Data Integrity and Compliance with Drug CGMP; U.S. Department of Health and Human Services, F.D.A.: Silver Spring, MD, USA, 2018.

- Venkatasubramanian, V.; Zhao, C.; Joglekar, G.; Jain, A.; Hailemariam, L.; Suresh, P.; Akkisetty, P.; Morris, K.; Reklaitis, G.V. Ontological informatics infrastructure for pharmaceutical product development and manufacturing. Comput. Chem. Eng. 2006, 30, 1482–1496.

- Su, Q.; Bommireddy, Y.; Shah, Y.; Ganesh, S.; Moreno, M.; Liu, J.; Gonzalez, M.; Yazdanpanah, N.; O'Connor, T.; Reklaitis, G.V.; et al. Data reconciliation in the Quality-by-Design (QbD) implementation of pharmaceutical continuous tablet manufacturing. Int. J. Pharm. 2019, 563, 259–272.

- Ganesh, S.; Moreno, M.; Liu, J.; Gonzalez, M.; Nagy, Z.; Reklaitis, G. Sensor Network for Continuous Tablet Manufacturing. In 13th International Symposium on Process Systems Engineering (PSE 2018); Elsevier: Amsterdam, Netherlands, 2018; pp. 2149–2154.

- Sudarshan, G. Continuous Pharmaceutical Manufacturing: Systems Integration for Process Operations Management. Ph.D. Thesis, Purdue University Graduate School, West Lafayette, IN, USA, 2020.

- Singh, R.; Sahay, A.; Karry, K.M.; Muzzio, F.; Ierapetritou, M.; Ramachandran, R. Implementation of an advanced hybrid MPC–PID control system using PAT tools into a direct compaction continuous pharmaceutical tablet manufacturing pilot plant. Int. J. Pharm. 2014, 473, 38–54.

- Hailemariam, L.; Venkatasubramanian, V. Purdue Ontology for Pharmaceutical Engineering: Part I. Conceptual Framework. J. Pharm. Innov. 2010, 5, 88–99.

- Hailemariam, L.; Venkatasubramanian, V. Purdue Ontology for Pharmaceutical Engineering: Part II. Applications. J. Pharm. Innov. 2010, 5, 139–146.

- Zhao, C.; Jain, A.; Hailemariam, L.; Suresh, P.; Akkisetty, P.; Joglekar, G.; Venkatasubramanian, V.; Reklaitis, G.V.; Morris, K.; Basu, P. Toward intelligent decision support for pharmaceutical product development. J. Pharm. Innov. 2006, 1, 23–35.

- Badr, S.; Sugiyama, H. A PSE perspective for the efficient production of monoclonal antibodies: Integration of process, cell, and product design aspects. Curr. Opin. Chem. Eng. 2020, 27, 121–128.

- Lin-Gibson, S.; Srinivasan, V. Recent Industrial Roadmaps to Enable Smart Manufacturing of Biopharmaceuticals. IEEE Trans. Autom. Sci. Eng. 2019, 2019, 1–8.

- Narayanan, H.; Luna, M.F.; von Stosch, M.; Cruz Bournazou, M.N.; Polotti, G.; Morbidelli, M.; Butte, A.; Sokolov, M. Bioprocessing in the Digital Age: The Role of Process Models. Biotechnol. J. 2020, 15, e1900172.

- Read, E.K.; Park, J.T.; Shah, R.B.; Riley, B.S.; Brorson, K.A.; Rathore, A.S. Process analytical technology (PAT) for biopharmaceutical products: Part I. Concepts and applications. Biotechnol. Bioeng. 2010, 105, 276–284.

- Biechele, P.; Busse, C.; Solle, D.; Scheper, T.; Reardon, K. Sensor systems for bioprocess monitoring. Eng. Life Sci. 2015, 15, 469–488.

- Zhao, L.; Fu, H.-Y.; Zhou, W.; Hu, W.-S. Advances in process monitoring tools for cell culture bioprocesses. Eng. Life Sci. 2015, 15, 459–468.

- Roch, P.; Mandenius, C.-F. On-line monitoring of downstream bioprocesses. Curr. Opin. Chem. Eng. 2016, 14, 112–120.

- Guerra, A.; von Stosch, M.; Glassey, J. Toward biotherapeutic product real-time quality monitoring. Crit. Rev. Biotechnol. 2019, 39, 289–305.

- Pais, D.A.M.; Carrondo, M.J.T.; Alves, P.M.; Teixeira, A.P. Towards real-time monitoring of therapeutic protein quality in mammalian cell processes. Curr. Opin. Biotechnol. 2014, 30, 161–167.

- Classen, J.; Aupert, F.; Reardon, K.F.; Solle, D.; Scheper, T. Spectroscopic sensors for in-line bioprocess monitoring in research and pharmaceutical industrial application. Anal. Bioanal. Chem. 2017, 409, 651–666.

- Berry, B.N.; Dobrowsky, T.M.; Timson, R.C.; Kshirsagar, R.; Ryll, T.; Wiltberger, K. Quick generation of Raman spectroscopy based in-process glucose control to influence biopharmaceutical protein product quality during mammalian cell culture. Biotechnol. Prog. 2016, 32, 224–234.

- Mehdizadeh, H.; Lauri, D.; Karry, K.M.; Moshgbar, M.; Procopio-Melino, R.; Drapeau, D. Generic Raman-based calibration models enabling real-time monitoring of cell culture bioreactors. Biotechnol. Prog. 2015, 31, 1004–1013.

- Abu-Absi, N.R.; Martel, R.P.; Lanza, A.M.; Clements, S.J.; Borys, M.C.; Li, Z.J. Application of spectroscopic methods for monitoring of bioprocesses and the implications for the manufacture of biologics. Pharm. Bioprocess. 2014, 2, 267–284.

- Rathore, A.S.; Kateja, N.; Kumar, D. Process integration and control in continuous bioprocessing. Curr. Opin. Chem. Eng. 2018, 22, 18–25.

- Wasalathanthri, D.P.; Rehmann, M.S.; Song, Y.; Gu, Y.; Mi, L.; Shao, C.; Chemmalil, L.; Lee, J.; Ghose, S.; Borys, M.C.; et al. Technology outlook for real-time quality attribute and process parameter monitoring in biopharmaceutical development—A review. Biotechnol. Bioeng. 2020, 117. doi: 10.1002/bit.27461

- Tang, P.; et al. Kinetic modeling of Chinese hamster ovary cell culture: Factors and principles. Crit. Rev. Biotechnol. 2020, 40, 265–281.

- Farzan, P.; Mistry, B.; Ierapetritou, M.G. Review of the important challenges and opportunities related to modeling of mammalian cell bioreactors. AIChE J. 2017, 63, 398–408.

- Baumann, P.; Hubbuch, J. Downstream process development strategies for effective bioprocesses: Trends, progress, and combinatorial approaches. Eng. Life Sci. 2017, 17, 1142–1158.

- Smiatek, J.; Jung, A.; Bluhmki, E. Towards a Digital Bioprocess Replica: Computational Approaches in Biopharmaceutical Development and Manufacturing. Trends Biotechnol. 2020. doi:10.1016/j.tibtech.2020.05.008

- Olughu, W.; Deepika, G.; Hewitt, C.; Rielly, C. Insight into the large-scale upstream fermentation environment using scaled-down models. J. Chem. Technol. Biotechnol. 2019, 94, 647–657.

- Li, X.; Scott, K.; Kelly, W.J.; Huang, Z. Development of a Computational Fluid Dynamics Model for Scaling-up Ambr Bioreactors. Biotechnol. Bioprocess Eng. 2018, 23, 710–725.

- Farzan, P.; Ierapetritou, M.G. A Framework for the Development of Integrated and Computationally Feasible Models of Large-Scale Mammalian Cell Bioreactors. Processes 2018, 6, 82.

- Menshutina, N.V.; Guseva, E.V.; Safarov, R.R.; Boudrant, J. Modelling of hollow fiber membrane bioreactor for mammalian cell cultivation using computational hydrodynamics. Bioprocess Biosyst. Eng. 2020, 43, 549–567.

- Xu, J.; Tang, P.; Yongky, A.; Drew, B.; Borys, M.C.; Liu, S.; Li, Z.J. Systematic development of temperature shift strategies for Chinese hamster ovary cells based on short duration cultures and kinetic modeling. MAbs 2019, 11, 191–204.

- Sokolov, M.; Ritscher, J.; MacKinnon, N.; Souquet, J.; Broly, H.; Morbidelli, M.; Butte, A. Enhanced process understanding and multivariate prediction of the relationship between cell culture process and monoclonal antibody quality. Biotechnol. Prog. 2017, 33, 1368–1380.

- Villiger, T.K.; Scibona, E.; Stettler, M.; Broly, H.; Morbidelli, M.; Soos, M. Controlling the time evolution of mAb N-linked glycosylation—Part II: Model–based predictions. Biotechnol. Prog. 2016, 32, 1135–1148.

- Kotidis, P.; Jedrzejewski, P.; Sou, S.N.; Sellick, C.; Polizzi, K.; del Val, I.J.; Kontoravdi, C. Model–based optimization of antibody galactosylation in CHO cell culture. Biotechnol. Bioeng. 2019, 116, 1612–1626.

- Radhakrishnan, D.; Robinson, A.S.; Ogunnaike, B. Controlling the Glycosylation Profile in mAbs Using Time-Dependent Media Supplementation. Antibodies 2017, 7, 1.

- Karst, D.J.; Steinebach, F.; Soos, M.; Morbidelli, M. Process performance and product quality in an integrated continuous antibody production process. Biotechnol. Bioeng. 2017, 114, 298–307.

- Shirahata, H.; Diab, S.; Sugiyama, H.; Gerogiorgis, D.I. Dynamic modelling, simulation and economic evaluation of two CHO cell-based production modes towards developing biopharmaceutical manufacturing processes. Chem. Eng. Res. Des. 2019, 150, 218–233.

- Xing, Z.; Kenty, B.; Koyrakh, I.; Borys, M.; Pan, S.-H.; Li, Z.J. Optimizing amino acid composition of CHO cell culture media for a fusion protein production. Process Biochem. 2011, 46, 1423–1429.

- Spahn, P.N.; Hansen, A.H.; Hansen, H.G.; Arnsdorf, J.; Kildegaard, H.F.; Lewis, N.E. A Markov chain model for N-linked protein glycosylation–towards a low-parameter tool for model-driven glycoengineering. Metab. Eng. 2016, 33, 52–66.

- Hutter, S.; Villiger, T.K.; Brühlmann, D.; Stettler, M.; Broly, H.; Soos, M.; Gunawan, R. Glycosylation flux analysis reveals dynamic changes of intracellular glycosylation flux distribution in Chinese hamster ovary fed-batch cultures. Metab. Eng. 2017, 43, 9–20.

- Nolan, R.P.; Lee, K. Dynamic model of CHO cell metabolism. Metab. Eng. 2011, 13, 108–124.

- Bayrak, E.S.; Wang, T.; Cinar, A.; Undey, C. Computational Modeling of Fed-Batch Cell Culture Bioreactor: Hybrid Agent-Based Approach. IFAC-PapersOnLine 2015, 48, 1252–1257.

- Kiparissides, A.; Pistikopoulos, E.N.; Mantalaris, A. On the model-based optimization of secreting mammalian cell (GS-NS0) cultures. Biotechnol. Bioeng. 2015, 112, 536–548.

- Kotidis, P.; Demis, P.; Goey, C.H.; Correa, E.; McIntosh, C.; Trepekli, S.; Shah, N.; Klymenko, O.V.; Kontoravdi, C. Constrained global sensitivity analysis for bioprocess design space identification. Comput. Chem. Eng. 2019, 125, 558–568.

- Narayanan, H.; Sokolov, M.; Morbidelli, M.; Butte, A. A new generation of predictive models: The added value of hybrid models for manufacturing processes of therapeutic proteins. Biotechnol. Bioeng. 2019, 116, 2540–2549.

- Psichogios, D.C.; Ungar, L. A hybrid neural network-first principles approach to process modeling. AIChE J. 1992, 38, 1499–1511.

- Von Stosch, M.; Hamelink, J.-M.; Oliveira, R. Hybrid modeling as a QbD/PAT tool in process development: An industrial E. coli case study. Bioprocess Biosyst. Eng. 2016, 39, 773–784.

- Zalai, D.; Koczka, K.; Párta, L.; Wechselberger, P.; Klein, T.; Herwig, C. Combining mechanistic and data-driven approaches to gain process knowledge on the control of the metabolic shift to lactate uptake in a fed-batch CHO process. Biotechnol. Prog. 2015, 31, 1657–1668.

- Selvarasu, S.; Kim, D.Y.; Karimi, I.A.; Lee, D.-Y. Combined data preprocessing and multivariate statistical analysis characterizes fed-batch culture of mouse hybridoma cells for rational medium design. J. Biotechnol. 2010, 150, 94–100.

- Lienqueo, M.E.; Mahn, A.; Salgado, J.C.; Shene, C. Mathematical Modeling of Protein Chromatograms. Chem. Eng. Technol. 2012, 35, 46–57.

- Shi, C.; Gao, Z.-Y.; Zhang, Q.-L.; Yao, S.-J.; Slater, N.K.H.; Lin, D.-Q. Model-based process development of continuous chromatography for antibody capture: A case study with twin-column system. J. Chromatogr. A 2020, 1619, 460936.

- Wang, G.; Briskot, T.; Hahn, T.; Baumann, P.; Hubbuch, J. Estimation of adsorption isotherm and mass transfer parameters in protein chromatography using artificial neural networks. J. Chromatogr. A 2017, 1487, 211–217.

- Baur, D.; Angarita, M.; Müller-Späth, T.; Morbidelli, M. Optimal model-based design of the twin-column CaptureSMB process improves capacity utilization and productivity in protein A affinity capture. Biotechnol. J. 2016, 11, 135–145.

- Huter, J.M.; Strube, J. Model-Based Design and Process Optimization of Continuous Single Pass Tangential Flow Filtration Focusing on Continuous Bioprocessing. Processes 2019, 7, 317.

- Mandenius, C.-F.; Brundin, A. Bioprocess optimization using design-of-experiments methodology. Biotechnol. Prog. 2008, 24, 1191–1203.

- Pleitt, K.; Somasundaram, B.; Johnson, B.; Shave, E.; Lua, L.H.L. Evaluation of process simulation as a decisional tool for biopharmaceutical contract development and manufacturing organizations. Biochem. Eng. J. 2019, 150, 107252.

- Arnold, L.; Lee, K.; Rucker-Pezzini, J.; Lee, J.H. Implementation of Fully Integrated Continuous Antibody Processing: Effects on Productivity and COGm. Biotechnol. J. 2019, 14, 1800061.

- Pollock, J.; Coffman, J.; Ho, S.V.; Farid, S.S. Integrated continuous bioprocessing: Economic, operational, and environmental feasibility for clinical and commercial antibody manufacture. Biotechnol. Prog. 2017, 33, 854–866.

- Yang, O.; Prabhu, S.; Ierapetritou, M. Comparison between Batch and Continuous Monoclonal Antibody Production and Economic Analysis. Ind. Eng. Chem. Res. 2019, 58, 5851–5863.

- Walther, J.; Godawat, R.; Hwang, C.; Abe, Y.; Sinclair, A.; Konstantinov, K. The business impact of an integrated continuous biomanufacturing platform for recombinant protein production. J. Biotechnol. 2015, 213, 3–12.

- Pirrung, S.M.; van der Wielen, L.A.M.; van Beckhoven, R.F.W.C.; van de Sandt, E.J.A.X.; Eppink, M.H.M.; Ottens, M. Optimization of biopharmaceutical downstream processes supported by mechanistic models and artificial neural networks. Biotechnol. Prog. 2017, 33, 696–707.

- Zobel-Roos, S.; Schmidt, A.; Mestmäcker, F.; Mouellef, M.; Huter, M.; Uhlenbrock, L.; Kornecki, M.; Lohmann, L.; Ditz, R.; Strube, J. Accelerating Biologics Manufacturing by Modeling or: Is Approval under the QbD and PAT Approaches Demanded by Authorities Acceptable Without a Digital-Twin? Processes 2019, 7, 94.

- Sencar, J.; Hammerschmidt, N.; Jungbauer, A. Modeling the Residence Time Distribution of Integrated Continuous Bioprocesses. Biotechnol. J. 2020, e2000008. doi:10.1002/biot.202000008

- Gomis-Fons, J.; Schwarz, H.; Zhang, L.; Andersson, N.; Nilsson, B.; Castan, A.; Solbrand, A.; Stevenson, J.; Chotteau, V. Model–based design and control of a small-scale integrated continuous end-to-end mAb platform. Biotechnol. Prog. 2020, e2995. doi:10.1002/btpr.2995

- Zahel, T.; Hauer, S.; Mueller, E.M.; Murphy, P.; Abad, S.; Vasilieva, E.; Maurer, D.; Brocard, C.; Reinisch, D.; Sagmeister, P.; et al. Integrated Process Modeling-A Process Validation Life Cycle Companion. Bioengineering 2017, 4, 86.

- Gangadharan, N.; Turner, R.; Field, R.; Oliver, S.G.; Slater, N.; Dikicioglu, D. Metaheuristic approaches in biopharmaceutical process development data analysis. Bioprocess Biosyst. Eng. 2019, 42, 1399–1408.

- Casola, G.; Siegmund, C.; Mattern, M.; Sugiyama, H. Data mining algorithm for pre-processing biopharmaceutical drug product manufacturing records. Comput. Chem. Eng. 2019, 124, 253–269.

- Lee, H.W.; Christie, A.; Xu, J.; Yoon, S. Data fusion-based assessment of raw materials in mammalian cell culture. Biotechnol. Bioeng. 2012, 109, 2819–2828.

- Herold, S.; King, R. Automatic identification of structured process models based on biological phenomena detected in (fed-)batch experiments. Bioprocess Biosyst. Eng. 2014, 37, 1289–1304.

- Luna, M.F.; Martínez, E.C. Iterative modeling and optimization of biomass production using experimental feedback. Comput. Chem. Eng. 2017, 104, 151–163.

- Feidl, F.; Vogg, S.; Wolf, M.; Podobnik, M.; Ruggeri, C.; Ulmer, N.; Wälchli, R.; Souquet, J.; Broly, H.; Butté, A.; et al. Process–wide control and automation of an integrated continuous manufacturing platform for antibodies. Biotechnol. Bioeng. 2020, 117, 1367–1380.

- Fahey, W.; Jeffers, P.; Carroll, P. A business analytics approach to augment six sigma problem solving: A biopharmaceutical manufacturing case study. Comput. Ind. 2020, 116, 103153.