An NCS consists of control loops joined through communication networks in which both the control signal and the feedback signal are exchanged between the system and the controller.

- controller design analysis

- networked control systems (NCSs)

- network security

- delays

- sampling

1. Introduction

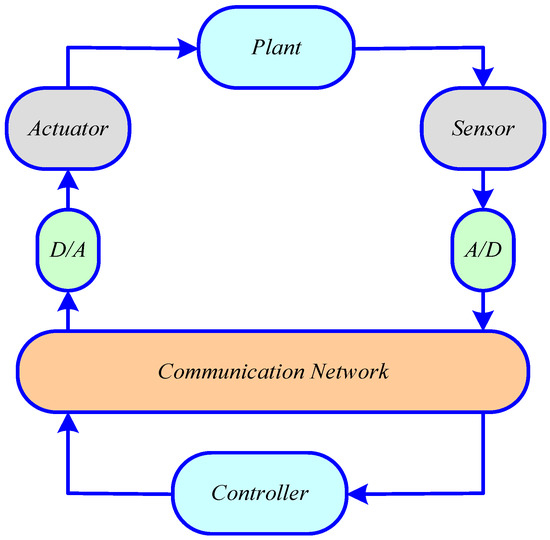

A networked control system (NCS) consists of control loops connected through communication networks, in which both the control signal and the feedback signal are exchanged between the system/plant and the controller. There are two approaches to the design of NCSs: the control-of-network approach and the control-over-network approach. Only NCSs based on the control-over-network approach are considered in this review. A simple block diagram of this type of networked system is shown in Figure 1.

Figure 1. A simple block representation of a networked control system (NCS).

In an NCS, the plant output is measured by sensors. The measurements are converted into digital signals by analog-to-digital (A/D) converters and transmitted to the controller via a communication network. The controller determines the control signal from the sensor output and transmits it back to the plant over the same communication channel. Before being fed to the actuator section of the plant, the control signal is converted from digital to analog form by a digital-to-analog (D/A) converter. In this manner, the plant dynamics can be controlled from a remote location.
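The signal path described above can be illustrated with a minimal simulation sketch. Everything specific here is an assumption made for illustration and does not come from the source: the scalar plant `x[k+1] = a*x[k] + b*u[k]`, the proportional gain, the uniform quantizer standing in for the A/D and D/A converters, and the fixed network delay on the controller-to-actuator link.

```python
from collections import deque


def quantize(value, step=0.01):
    """Model an A/D or D/A converter as a uniform quantizer (assumed step size)."""
    return round(value / step) * step


def simulate_ncs(steps=50, a=0.9, b=0.5, gain=0.8, delay=1, x0=1.0):
    """Simulate a hypothetical scalar plant controlled over a network.

    The control packet reaches the actuator `delay` sampling periods after
    it was computed; until the first packet arrives the actuator applies 0.
    """
    x = x0
    channel = deque([0.0] * delay)  # control packets currently in flight
    history = []
    for _ in range(steps):
        y = quantize(x)                    # sensor measurement after A/D conversion
        u_cmd = -gain * y                  # controller computes the control signal
        channel.append(quantize(u_cmd))    # controller sends the packet
        u = channel.popleft()              # actuator receives a (delayed) packet
        x = a * x + b * u                  # plant update
        history.append(x)
    return history


if __name__ == "__main__":
    trajectory = simulate_ncs()
    print("final state:", trajectory[-1])
```

With the assumed parameters the delayed loop remains stable; increasing `delay` or `gain` in this toy model is a quick way to see how network latency erodes the stability margin.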

2. Advantages and Disadvantages of NCS

An NCS, which has been termed the next-generation control system, offers several advantages. The key merits of NCSs are as follows:

- (i) Effective reduction of system complexity: The complexity of control systems can be reduced by interfacing them with a communication network. With the network, the data related to multiple plants can easily be stored on a single server.

- (ii) Efficient sharing of network data: Several parameters pertaining to the plant can be exchanged easily over the communication network, which helps in the design of control algorithms.

- (iii) Simple to make intelligent decisions based on the information: The shared information can be used to make intelligent decisions easily.

- (iv) Eliminates unnecessary wiring: Today, it is possible to transmit data wirelessly at very high speed, so the wiring for interfacing controllers and plants can be avoided. Wireless sensor networks are advanced enough to make wireless control a reality.

- (v) Simple to scale the networks by adding sensors, actuators, and controllers: Wireless sensors and actuators can be replaced easily, which reduces the maintenance cost of the NCS. Controllers can also be replaced more economically than traditional wired controllers, and the number of sensors, actuators, and controllers can be expanded easily.

- (vi) Cyber-physical interface for tele-execution of control: The NCS provides a platform for a cyber-physical interface and the tele-execution of control, which permits remote control of the plant.

- (vii) Wide range of applications: NCSs are applied in distributed power systems, robots, unmanned aerial vehicles (UAVs), automobiles, space exploration, terrestrial exploration, industrial unit automation, remote problem-solving and troubleshooting, hazardous environments, aircraft, production plant monitoring, and many more areas. New applications of NCSs continue to emerge.

The NCS is also plagued by several problems. The disadvantages of NCS are:

- (i) Loss of ability to determine the time of incoming data: The time at which the data arrives cannot be determined exactly, so uniform sampling cannot be assumed.

- (ii) Loss of data integrity: Data may be lost during transmission, so the received information may be incomplete.

- (iii) Communication latency: In remotely located NCSs, communication latency means that the control action may not take effect immediately.

- (iv) Complexity and congestion: As the number of nodes increases, so does the complexity of the communication system, causing congestion and time delays. Deployment in an industry with tens of thousands of sensors and actuators can therefore be a challenging task.
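One common way to cope with the lost, late, and out-of-order data listed above is to tag each packet with a sequence number and a timestamp and to hold the last valid value whenever a packet is unusable. The sketch below illustrates that idea only; the `Packet` fields, the `HoldLastReceiver` class, and the `max_age` threshold are hypothetical names and values chosen for this example, not part of the source.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Packet:
    seq: int         # sequence number assigned by the sender
    sent_at: float   # sender timestamp in seconds
    value: float     # measurement or control value carried by the packet


class HoldLastReceiver:
    """Keep the most recent valid value; ignore lost, stale, or reordered packets."""

    def __init__(self, initial: float = 0.0, max_age: float = 0.1):
        self.value = initial
        self.last_seq = -1
        self.max_age = max_age  # assumed latency budget in seconds

    def receive(self, packet: Optional[Packet], now: float) -> float:
        if packet is None:
            return self.value                      # packet lost: hold last value
        if packet.seq <= self.last_seq:
            return self.value                      # duplicate or out-of-order packet
        if now - packet.sent_at > self.max_age:
            return self.value                      # packet arrived too late to be useful
        self.last_seq = packet.seq
        self.value = packet.value
        return self.value


if __name__ == "__main__":
    rx = HoldLastReceiver()
    print(rx.receive(Packet(0, 0.00, 1.0), now=0.01))  # fresh packet is accepted
    print(rx.receive(None, now=0.02))                  # loss: previous value is held
```

Holding the last value is only one possible policy; applying a zero input or a model-based prediction on loss are alternatives with different stability properties.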

3. Designing of Control System from Continuous Domain to Networked Control Domain

In the beginning, control signals were generated using analog computers. Frequency analysis and Laplace transform were the primary tools for analysis. The main drawbacks of this system are its limited accuracy, limited bandwidth, drift, noise, and limited capabilities to manage nonlinearities. Known delays could be handled at the time of control synthesis using the well-known Smith predictor.
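For readers unfamiliar with the Smith predictor mentioned above, its standard textbook form can be stated briefly. The symbols are introduced here for illustration and assume a perfectly known model: the plant is $G(s)e^{-s\tau}$ with a known delay $\tau$, and $C(s)$ is a controller designed for the delay-free part $G(s)$.

```latex
% Equivalent controller realized by the Smith predictor (perfect model assumed)
C_{\mathrm{SP}}(s) = \frac{C(s)}{1 + C(s)\,G(s)\left(1 - e^{-s\tau}\right)}

% Resulting closed-loop transfer function from reference R to output Y
\frac{Y(s)}{R(s)} = \frac{C(s)\,G(s)}{1 + C(s)\,G(s)}\, e^{-s\tau}
```

The delay is thus moved outside the characteristic equation, so the loop can be tuned as if it were delay-free. This holds only when the delay is known and constant, which is exactly what a network with variable delays does not guarantee.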

Digital controllers replaced the analog technology with the advancement of processors. However, controlling an analog plant with a discrete electronic system inevitably introduces timing distortions. In particular, it becomes necessary to sample the sensor measurements and convert them to digital data, and to convert the computed control values back to analog signals. Sampling theory and the z-transform became the standard tools for the design and analysis of digital control systems. The z-transform assumes that the sampling is uniform; thus, periodic sampling became the standard for the design of digital controllers. Note that, in the infancy of digital control, computing power was poor and memory was expensive, so it was vital to minimize the complexity of the controllers and the operating power. It is not obvious that the periodic sampling assumption is always the best choice. For example, adaptive sampling was used in Reference [1], where the sampling frequency is changed based on the derivative of the error signal; it is far better than equidistant sampling in terms of the number of computed samples (but possibly not in terms of disturbance rejection [2]). A summary of these efforts is provided in References [3][4]. However, with decreasing computational costs, interest in adaptive sampling declined, and the linearity preservation property of equidistant sampling has helped it remain the undisputed standard.
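The idea of varying the sampling period with the derivative of the error signal can be sketched as follows. The specific law used here (the period shrinks in proportion to `1 + sensitivity * |de/dt|` and is clipped at `t_min`) is an illustrative assumption and is not claimed to be the law of Reference [1]; the function names and default values are likewise hypothetical.

```python
import math


def adaptive_period(error_rate, t_min=0.01, t_max=0.5, sensitivity=1.0):
    """Return a sampling period that shortens when the error changes quickly.

    Assumed law: T = t_max / (1 + sensitivity * |de/dt|), never below t_min.
    """
    period = t_max / (1.0 + sensitivity * abs(error_rate))
    return max(t_min, period)


def count_samples(horizon=5.0, **kwargs):
    """Count the samples taken over `horizon` seconds for the error e(t) = exp(-t)."""
    t, count = 0.0, 0
    while t < horizon:
        error_rate = -math.exp(-t)          # derivative of the assumed error signal
        t += adaptive_period(error_rate, **kwargs)
        count += 1
    return count


if __name__ == "__main__":
    print("adaptive samples over 5 s:", count_samples())
```

In this toy setting the sampler takes samples more densely during the initial transient and relaxes toward `t_max` as the error settles, which is the source of the savings in computed samples.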

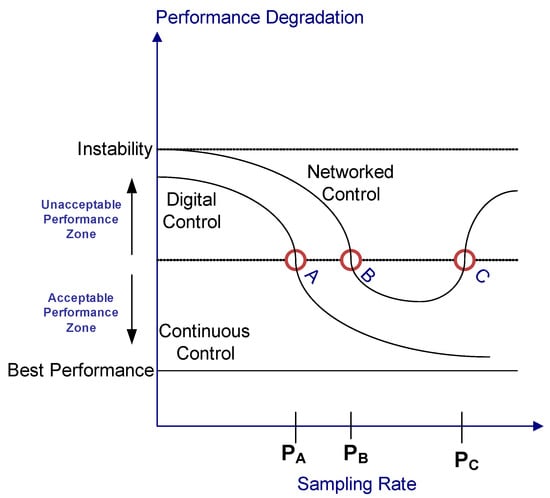

From the computing side, real-time scheduling modeling and analysis were introduced in Reference [5]. This scheduling was based on restrictive assumptions, one of them being the periodicity of the tasks. Even if more assumptions were progressively introduced to cope with the practical problems, the periodicity presumption remains popular [6]. Moreover, the topology of the network can vary with time, allowing the mobility of the control devices. Hence, the whole control system can be highly adaptive in a dynamic environment. In particular, wireless communications allow for the rapid deployment of networks for connecting remotely located devices. However, networking also has problems, such as variable delays, message de-sequencing, and periodic data loss. These timing uncertainties and disturbances are in addition to the problems introduced by the digital controllers. Figure 2 shows the trade-off between control performance and the sampling rate.

Figure 2. Trade-off between control performance and the sampling rate.

Figure 2 shows that the control performance is acceptable only for sampling rates between PB and PC. Increasing the sampling rate above PC increases the network traffic and hence the network-induced delays, which degrades the control performance.
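Returning to the periodic-task assumption behind the scheduling analysis of Reference [5], the classical rate-monotonic utilization bound gives a simple sufficient (not necessary) schedulability test for n independent periodic tasks: the total utilization must not exceed n(2^(1/n) − 1). The sketch below applies that test; the function names and the example task set, given as (worst-case execution time, period) pairs, are illustrative assumptions.

```python
def rm_utilization_bound(n):
    """Rate-monotonic utilization bound n * (2**(1/n) - 1) for n periodic tasks."""
    return n * (2 ** (1.0 / n) - 1)


def is_rm_schedulable(tasks):
    """Sufficient test: tasks is a list of (execution_time, period) pairs.

    Returns True if total utilization is within the bound. A False result
    is inconclusive, because the bound is sufficient but not necessary.
    """
    utilization = sum(c / t for c, t in tasks)
    return utilization <= rm_utilization_bound(len(tasks))


if __name__ == "__main__":
    control_tasks = [(1, 4), (1, 5), (2, 10)]   # hypothetical control loops
    print("bound for 3 tasks:", rm_utilization_bound(3))
    print("schedulable:", is_rm_schedulable(control_tasks))
```

Because each control loop's utilization grows with its sampling rate, this kind of test makes explicit how raising the sampling rate of one loop consumes the computing budget shared with the others.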

Based on the above discussion, the differences between conventional control systems and NCSs are summarized in Table 1.

Table 1. Differences between networked and conventional control systems.

| Parameter | Networked System | Conventional System |
|---|---|---|
| Vital Function | Control of physical equipment | Data processing and transfer |
| Applications | Manufacturing, processing and utility distribution | Corporate and home environments |
| Hierarchical Process | Deep, functionally separated hierarchies | Shallow, integrated hierarchies |
| Failure Mode and its effect | High | Low |
| Reliability Requirement | High | Moderate |
| Deterministic | High | Low |
| Data Composition | Small packets of periodic and aperiodic traffic | Large, aperiodic packets |
| Reliability | Required | Not required |
| Operating Environment | Hostile conditions, often featuring high levels of dust, heat, and vibration | Clean environments, often specifically intended for sensitive equipment |

4. Co-Design Approach for the NCSs

The design of an NCS integrates the domains of control system, communication, and real-time computing. The increasing complexity of the computer systems, and their networks, requires advanced methods specifically suited for the NCS. The main issue to be addressed is the achievement of the control objective (i.e., a combination of security, performance, and reliability requirements), despite the disturbances. For instance, sharing of common computing resources and communication bandwidths by competing control loops, alongside the other functions, introduces random delays and data losses. Moreover, the use of heterogeneous computers and communication systems increases the complexity of the NCS.

Control plays a significant role in the reliable performance of interconnected complex systems [7]. The interconnection of elements and sub-systems that come from different technologies and are subject to various constraints calls for a design that can reconcile conflicting constraints. Besides achieving the desired performance in normal situations, reliability and safety are of concern to system developers. A fundamental concept is dependability, a system property that covers attributes such as availability, reliability, safety, confidentiality, integrity, and maintainability [8]. When a system is confronted with faults, errors, and failures, its dependability can be achieved in several ways, e.g., fault prevention, fault tolerance, and fault forecasting [9].

Except when hardware or software components fail, most processes run with nominal behavior, but neither the process nor the execution resource parameters are completely known or modeled. One method is to allocate system resources conservatively, which wastes resources. From the control viewpoint, the specific inadequacies to be considered include poor timing, delays, and data loss. Control usually deals with modeling uncertainty, adaptation, and disturbance attenuation. More precisely, as shown by recent results on NCSs [10], control loops tend to be robust and can tolerate networking and computing disturbances up to a certain level. Therefore, timing deviations such as jitter or data loss, as long as they remain within bounds, may be viewed as nominal features of the system rather than as exceptions. Robustness allows execution resources to be provisioned for average needs rather than for the worst case, and system reconfiguration to be considered only once failures exceed the tolerance of the running controller.
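The statement that a control loop tolerates data loss "up to a certain level" can be made concrete in a deliberately simple setting. The sketch below assumes a noise-free scalar plant `x[k+1] = a*x[k] + b*u[k]`, a state-feedback gain, independent packet losses with a fixed probability, and an actuator that applies zero input when a packet is lost. Under those assumptions, E[x²] is multiplied at each step by `(1 - p)*(a - b*gain)**2 + p*a**2`, so the loop is mean-square stable exactly when that factor is below 1. The function names and numbers are illustrative and are not taken from Reference [10].

```python
def mean_square_factor(a, b, gain, loss_prob):
    """Per-step growth factor of E[x^2] under i.i.d. packet loss (zero input on loss)."""
    closed_loop = (a - b * gain) ** 2   # packet delivered: feedback is applied
    open_loop = a ** 2                  # packet lost: plant runs open loop
    return (1.0 - loss_prob) * closed_loop + loss_prob * open_loop


def max_tolerable_loss(a, b, gain):
    """Loss probability below which the loop stays mean-square stable."""
    closed_loop = (a - b * gain) ** 2
    open_loop = a ** 2
    if closed_loop >= 1.0:
        return 0.0                      # unstable even without any loss
    if open_loop <= 1.0:
        return 1.0                      # open-loop plant does not grow; any loss rate below 1 is fine
    return (1.0 - closed_loop) / (open_loop - closed_loop)


if __name__ == "__main__":
    a, b, gain = 1.2, 1.0, 1.0          # hypothetical unstable plant and gain
    print("tolerable loss probability:", max_tolerable_loss(a, b, gain))
    print("factor at 50% loss:", mean_square_factor(a, b, gain, 0.5))
```

A bound of this kind is what permits provisioning the network for average rather than worst-case conditions: as long as the observed loss rate stays below the threshold, dropped packets are part of nominal operation.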

An NCS is composed of a collection of heterogeneous devices and information sub-systems. In designing an NCS, many conflicting constraints must be resolved simultaneously before an implementable, satisfactory solution is reached. Issues related to networking, control tracking performance, robustness, redundancy, reconfigurability, energy consumption, cost effectiveness, etc., have to be addressed. Traditional control usually deals with a process that requires a single computer, and the limitations of the communication links and computing resources usually do not notably affect its performance. Existing tools for modeling and identification, robust control, fault diagnosis and isolation, fault-tolerant control, and flexible real-time scheduling need to be enhanced, adapted, and extended to handle the networked characteristics of the control system. Finally, the idea of a co-design approach has emerged, which allows control and communication to be integrated within the NCS design [10].

This entry is adapted from the peer-reviewed paper 10.3390/su13052962

References

1. Dorf, R.; Farren, M.; Phillips, C. Adaptive sampling frequency for sampled-data control systems. IEEE Trans. Autom. Control 1962, 7, 38–47.

2. Smith, M. An evaluation of adaptive sampling. IEEE Trans. Autom. Control 1971, 16, 282–284.

3. Hsia, T. Comparisons of adaptive sampling control laws. IEEE Trans. Autom. Control 1972, 17, 830–831.

4. Hsia, T. Analytic design of adaptive sampling control law in sampled data systems. IEEE Trans. Autom. Control 1974, 19, 39–42.

5. Liu, C.; Layland, J. Scheduling algorithms for multiprogramming in a hard real-time environment. J. ACM 1973, 20, 40–61.

6. Audsley, N.; Burns, A.; Davis, R.; Tindell, K.; Wellings, A. Fixed priority preemptive scheduling: An historical perspective. Real-Time Syst. 1995, 8, 173–198.

7. Murray, R.M.; Åström, K.J.; Boyd, S.P.; Brockett, R.W.; Stein, G. Future directions in control in an information-rich world. IEEE Control Syst. Mag. 2003, 23, 20–33.

8. Laprie, J.-C. (Ed.) Dependability: Basic Concepts and Terminology; Springer: New York, NY, USA, 1992.

9. Avižienis, A.; Laprie, J.C.; Randell, B. Fundamental Concepts of Dependability; Report 01145; LAAS: Toulouse, France, 2000.

10. Baillieul, J.; Antsaklis, P.J. Control and communication challenges in networked real-time systems. Proc. IEEE 2007, 95, 9–28.