Anticoagulant drugs are used to prevent and treat thrombosis, but they carry a risk of hemorrhage. Before these drugs are used clinically, it is therefore important to assess the patient's risks of bleeding and thrombosis. Older anticoagulants such as heparin and warfarin require dose adjustment because of their narrow therapeutic ranges. The established monitoring methods for heparin and warfarin are the activated partial thromboplastin time (APTT)/anti-Xa assay and the prothrombin time–international normalized ratio (PT-INR), respectively. Since 2008, a new generation of anticoagulants, the direct oral anticoagulants (DOACs), has been widely prescribed to prevent and treat several thromboembolic diseases. DOACs have been shown to be safe and effective without routine monitoring or frequent dose adjustment. However, measurement of their anticoagulant effects may be required in specific patients and clinical circumstances. Recently, anticoagulation therapy has also received attention in the treatment of patients with coronavirus disease 2019 (COVID-19).

- prothrombin time

- activated partial thromboplastin time

- heparin

- warfarin

- direct oral anticoagulants

1. Introduction

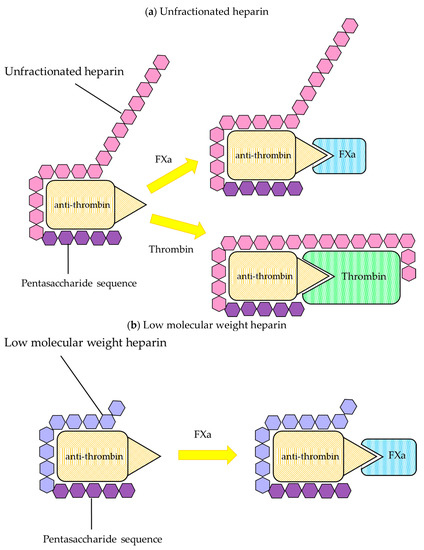

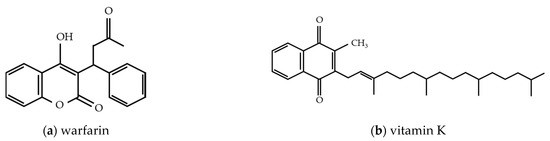

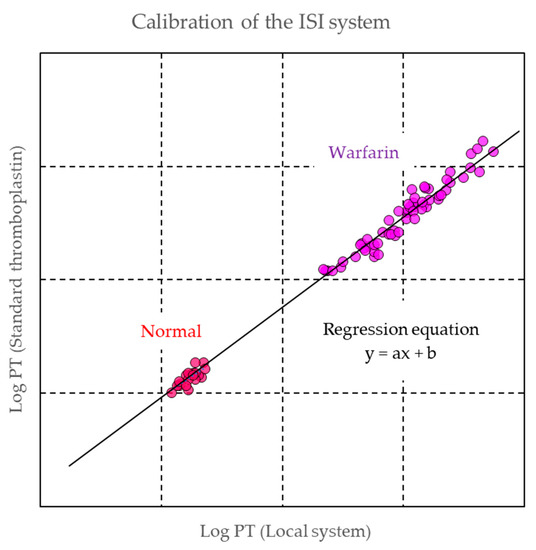

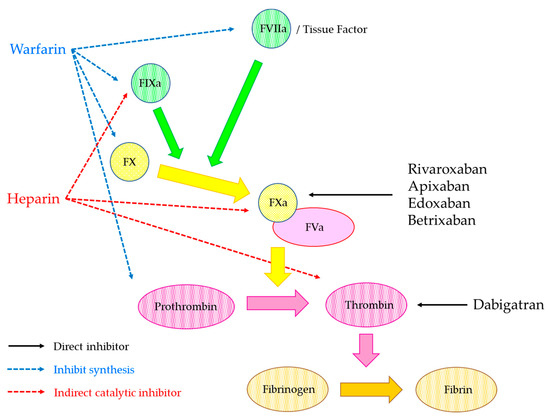

Thrombotic disorders require prompt treatment with anticoagulant drugs at therapeutic doses. These drugs are effective for the prevention and treatment of thrombosis, but they are associated with hemorrhage. Identifying patients at increased risk of bleeding is therefore clinically important for selecting the optimal anticoagulant and duration of therapy. Traditionally, heparin and related agents, namely unfractionated heparin (UFH), low molecular weight heparin (LMWH), and fondaparinux, have been widely used. Heparin binds to antithrombin via its pentasaccharide sequence, catalyzing the inactivation of thrombin and other clotting factors [1]. Warfarin has also long been used as an anticoagulant [1][2]. It is a vitamin K antagonist that inhibits the γ-carboxylation of vitamin K-dependent coagulation factors. Both drugs require laboratory monitoring.

In recent years, direct oral anticoagulants (DOACs) have been developed. These include a direct factor IIa (thrombin) inhibitor (dabigatran) and direct factor Xa inhibitors (e.g., rivaroxaban, apixaban, and edoxaban). These agents overcome several limitations of warfarin, such as food interactions and the need for frequent laboratory monitoring. They have also been reported to have a superior safety profile compared with warfarin in some thrombotic disorders [3][4][5][6][7][8][9][10]. Nevertheless, even with this new generation of anticoagulants, major hemorrhage remains the most relevant and frequent complication of anticoagulant treatment. It is associated with significant morbidity, mortality, and considerable costs [11][12][13]. Routine monitoring of DOACs is not required. However, assessing their anticoagulant effect may be desirable in special situations, and assessing individual bleeding risk may be relevant when selecting the appropriate anticoagulant and treatment duration [14].

In addition to the introduction of new anticoagulant drugs, anticoagulation therapy has received attention in the treatment of patients with coronavirus disease 2019 (COVID-19). Patients with COVID-19-associated pneumonia exhibit abnormal coagulation and organ dysfunction, and the resulting coagulopathy has been associated with a higher mortality rate [15][16]. Appropriate treatment of coagulopathy and thrombosis in these patients is therefore required.

This review covers the anticoagulant mechanisms of heparin, warfarin, and DOACs, as well as the methods used to measure these drugs. In addition, we provide the latest information on the mechanisms of thrombosis in COVID-19 from a biochemical perspective.

2. Anticoagulants

2.1. Heparin

2.1.1. Properties of Heparin

2.1.2. Biological Properties

Unfractionated Heparin

Low Molecular Weight Heparin

2.1.3. Monitoring of Heparin

2.2. Warfarin

2.2.1. Properties of Warfarin

2.2.2. Monitoring of Warfarin

2.3. Direct Oral Anticoagulants (DOACs)

2.3.1. Properties of DOACs

| | Dabigatran | Rivaroxaban | Apixaban | Edoxaban | Betrixaban |
|---|---|---|---|---|---|
| Target | Thrombin | Factor Xa | Factor Xa | Factor Xa | Factor Xa |
| Primary clearance | Renal | Renal | Fecal | Renal | Fecal |
| Tmax (time to peak plasma concentration) | 1.5–3 h | 2–3 h | 3–4 h | 1–2 h | 3–4 h |
| Half-life | 12–14 h | 5–13 h | 12 h | 10–14 h | 19–27 h |
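The half-lives in the table give a rough idea of how quickly DOAC levels fall between doses. The sketch below is illustrative only and is not a dosing or interpretation tool. It assumes a one-compartment model with first-order elimination, C(t) = C_peak · exp(−ln2 · t / t½), starting the clock at the peak (Tmax). It uses the midpoint of each tabulated half-life range. It ignores absorption, renal or hepatic impairment, and drug interactions. The dictionary and function names are hypothetical.

```python
import math

# Half-life ranges in hours, copied from the table above.
HALF_LIFE_RANGES_H = {
    "dabigatran": (12.0, 14.0),
    "rivaroxaban": (5.0, 13.0),
    "apixaban": (12.0, 12.0),
    "edoxaban": (10.0, 14.0),
    "betrixaban": (19.0, 27.0),
}


def midpoint_half_life(drug: str) -> float:
    """Midpoint of the tabulated half-life range (hours)."""
    low, high = HALF_LIFE_RANGES_H[drug]
    return (low + high) / 2


def fraction_remaining(hours_after_peak: float, half_life_h: float) -> float:
    """Fraction of the peak concentration left after first-order elimination.

    One-compartment assumption: C(t) = C_peak * exp(-ln(2) * t / t_half).
    Absorption, redistribution and changes in clearance are ignored.
    """
    if hours_after_peak < 0 or half_life_h <= 0:
        raise ValueError("time must be >= 0 and half-life must be > 0")
    return math.exp(-math.log(2) * hours_after_peak / half_life_h)


if __name__ == "__main__":
    for drug in HALF_LIFE_RANGES_H:
        t_half = midpoint_half_life(drug)
        print(f"{drug:<12} t1/2 = {t_half:4.1f} h | "
              f"left after 12 h: {fraction_remaining(12, t_half):.2f} | "
              f"after 24 h: {fraction_remaining(24, t_half):.2f}")
```

With a 12-hour half-life, for instance, about one quarter of the peak level is left 24 hours after the peak. This is why samples drawn at peak and at trough can differ severalfold.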

2.3.2. Measurement of DOACs

Using DOACs without routine monitoring or frequent dose adjustment has been shown to be safe and effective in most patients, which makes them more convenient than warfarin [70]. However, in some clinical circumstances, measuring the anticoagulant effect of a DOAC may be required in specific patients. The following situations have been discussed as indications for such measurements [62][71]:

- (i) Persistent bleeding or thrombosis
- (ii) Decreased drug clearance resulting from impaired kidney function or liver disease
- (iii) Identification of subtherapeutic or supratherapeutic levels in patients taking other drugs known to significantly affect pharmacokinetics
- (iv) Extreme body weight (<40 kg or >120 kg)
- (v) Perioperative management
- (vi) Reversal of anticoagulation
- (vii) Suspected overdose
- (viii) Assessment of adherence to treatment

Measurement assays have been developed to meet these requirements. The optimal laboratory assay depends on the clinical situation. The tests fall into two types: qualitative (presence or absence of the drug) and quantitative (drug concentration in ng/mL).

Qualitative Assays for DOACs

Quantitative Assays for DOACs

Liquid chromatography–tandem mass spectrometry (LC-MS/MS) is considered the gold standard for quantitative DOAC measurement because of its high specificity, sensitivity, selectivity, and reproducibility. It is often used in clinical development to evaluate DOAC pharmacokinetics [95][96][97]. The reported lower limits of detection (LoD) and quantitation (LoQ) of LC-MS/MS for DOACs range from 0.025 to 3 ng/mL, and the reported reportable range of quantitation is 5–500 ng/mL [98][99][100][101][102][103]. Although LC-MS/MS is considered the most accurate method for DOAC measurement, it is not widely used because of its limited availability and the complexity of the testing. In addition, LC-MS/MS can show high intra- and inter-assay coefficients of variation. It must also be calibrated with the purified drug, which contributes to between-run variation. Drug-calibrated clot-based and chromogenic methods have therefore been developed and adapted to automated coagulation analyzers.
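Figures such as the LoD, LoQ, and coefficient of variation (CV) quoted above come from method-validation data. The sketch below illustrates one common convention for deriving them, the ICH Q2-style estimates LoD = 3.3σ/S and LoQ = 10σ/S. Here σ is the residual standard deviation of a low-range calibration line and S is its slope. The sketch also computes CV% = SD/mean × 100. This convention is an assumption; the cited LC-MS/MS studies may have used other definitions. The function names and all calibrator and replicate values are hypothetical.

```python
import statistics
from typing import Sequence, Tuple


def linear_fit(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Least-squares line y = slope * x + intercept.

    Returns (slope, intercept, residual standard deviation with n - 2 df).
    """
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least three paired calibration points")
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("calibration concentrations must not all be equal")
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    residuals = [yi - (slope * xi + intercept) for xi, yi in zip(x, y)]
    sd_resid = (sum(r * r for r in residuals) / (n - 2)) ** 0.5
    return slope, intercept, sd_resid


def lod_loq(slope: float, sigma: float) -> Tuple[float, float]:
    """ICH Q2-style estimates: LoD = 3.3 * sigma / slope, LoQ = 10 * sigma / slope."""
    return 3.3 * sigma / slope, 10.0 * sigma / slope


def cv_percent(replicates: Sequence[float]) -> float:
    """Coefficient of variation in percent: sample SD / mean * 100."""
    return statistics.stdev(replicates) / statistics.fmean(replicates) * 100


if __name__ == "__main__":
    # Hypothetical low-range calibrators: ng/mL vs. analyte/internal-standard peak-area ratio.
    conc = [0.5, 1.0, 2.0, 5.0, 10.0]
    ratio = [0.0052, 0.0098, 0.0205, 0.0496, 0.1003]
    slope, intercept, sigma = linear_fit(conc, ratio)
    lod, loq = lod_loq(slope, sigma)
    print(f"slope = {slope:.5f} per ng/mL, LoD ~ {lod:.2f} ng/mL, LoQ ~ {loq:.2f} ng/mL")
    # Hypothetical within-run replicates of one control sample (ng/mL).
    print(f"intra-assay CV = {cv_percent([98.0, 102.0, 100.5, 99.1]):.1f} %")
```

The same CV function applies to inter-assay precision if the replicates come from different runs rather than a single run.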

One of the clot-based kits for dabigatran measurement is the Hemoclot thrombin inhibitor assay (Hyphen BioMed, Neuville-sur-Oise, France). In this assay, the diluted test plasma is mixed with normal pooled plasma. Clotting is then initiated by adding human thrombin, and the clotting time is measured on a coagulation analyzer. The clotting time is directly related to the dabigatran concentration, which is calculated from a calibration curve constructed with a dabigatran calibrator. Results of this clotting method correlated and agreed well with LC-MS/MS analysis [98]. Stangier et al. also reported that the kit allowed accurate assessment of dabigatran concentrations within the therapeutic range without inter-laboratory variability [104].

The correlation between the clotting method and qualitative tests such as the APTT and thrombin time (TT) has also been investigated. The APTT showed a modest correlation with the dabigatran concentration measured by the clotting method. However, this correlation became less reliable at higher dabigatran levels, and sensitivity differed among APTT reagents. The TT was highly sensitive to dabigatran, exceeding 300 s at concentrations above 60 ng/mL, which confirmed that the TT is too sensitive to quantify dabigatran levels [105]. Another study also found that the APTT and TT could not reliably assess the drug at very low and very high concentrations, respectively [106]. The calibrated clotting method is therefore considered more suitable for determining dabigatran concentrations. In addition, close correlations have been reported between dabigatran concentrations and the ecarin clotting time or ecarin chromogenic assay [74]. The upper measurement limit of these assays depends on the ecarin concentration (units) and reagent composition, but they have been reported to measure dabigatran up to 940 ng/mL.
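The calibration step of the Hemoclot principle described above, where clotting time rises with dabigatran concentration, can be sketched as a straight-line fit that is then read backwards for a patient sample. This is a minimal sketch under stated assumptions. It assumes the relationship is linear over the calibrated range, and it refuses to extrapolate beyond the lowest and highest calibrators. The class name and the calibrator concentrations and clotting times are hypothetical, not kit values. Real analyzers apply the manufacturer's own curve-fitting and reporting rules.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ClottingCalibration:
    """Linear calibration of clotting time (s) against dabigatran concentration (ng/mL)."""
    slope: float      # seconds per ng/mL
    intercept: float  # clotting time (s) with no drug
    min_conc: float   # lowest calibrator (ng/mL)
    max_conc: float   # highest calibrator (ng/mL)

    @classmethod
    def fit(cls, conc: Sequence[float], clot_time_s: Sequence[float]) -> "ClottingCalibration":
        """Least-squares line through the calibrator points."""
        n = len(conc)
        if n != len(clot_time_s) or n < 2:
            raise ValueError("need at least two paired calibrator points")
        mx = sum(conc) / n
        my = sum(clot_time_s) / n
        sxx = sum((x - mx) ** 2 for x in conc)
        if sxx == 0:
            raise ValueError("calibrator concentrations must differ")
        sxy = sum((x - mx) * (y - my) for x, y in zip(conc, clot_time_s))
        slope = sxy / sxx
        if slope <= 0:
            raise ValueError("clotting time should lengthen as concentration rises")
        return cls(slope, my - slope * mx, min(conc), max(conc))

    def concentration(self, clot_time_s: float) -> float:
        """Read a patient clotting time back through the line; no extrapolation."""
        estimate = (clot_time_s - self.intercept) / self.slope
        if not self.min_conc <= estimate <= self.max_conc:
            raise ValueError(f"{estimate:.0f} ng/mL lies outside the calibrated range")
        return estimate


if __name__ == "__main__":
    # Hypothetical calibrators (ng/mL) and clotting times (s); not kit values.
    cal = ClottingCalibration.fit([0, 50, 100, 200, 400], [30, 45, 60, 90, 150])
    print(f"{cal.concentration(75):.0f} ng/mL")
```

On this made-up curve, a clotting time of 75 s prints 150 ng/mL. A sample whose estimate falls above the highest calibrator raises an error rather than returning an extrapolated value. In practice, such samples are typically diluted and re-run.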

The chromogenic anti-Xa assay has been used in clinical laboratories for several decades to assess heparin concentration. It is also suitable for measuring the concentrations of direct factor Xa inhibitors. The assay is a kinetic method based on the inhibition, by the factor Xa inhibitor in the test sample, of factor Xa added at a constant concentration. The sample is mixed with an excess of factor Xa, and the factor Xa inhibitor binds part of it. The residual factor Xa is then measured through its amidolytic activity on a factor Xa-specific chromogenic substrate. The amount of cleaved substrate is inversely related to the concentration of factor Xa inhibitor in the sample. The concentration is calculated from a calibration curve constructed with the corresponding drug calibrator (rivaroxaban, apixaban, or edoxaban). Drug-calibrated anti-Xa assays have been shown to correlate with LC-MS/MS, and their variability was small [100][107][108].

In contrast, Douxfils et al. reported that the relationship between the PT and LC-MS/MS concentrations was not linear and that drug sensitivity differed among PT reagents [100]. Standardizing PT reagents to estimate drug concentration may therefore be difficult. Biophen DiXaI (Hyphen BioMed) is useful for quantifying drug concentrations because it is highly sensitive to these drugs and has been adapted to automated coagulation analyzers. Because DOACs have short half-lives, their plasma concentrations change with time, and peak concentrations differ considerably from trough concentrations. Knowing when the patient took the last dose is therefore important for interpreting measured drug concentrations.
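The inverse relationship described above means that a lower residual factor Xa signal corresponds to a higher drug concentration. This can be illustrated with a point-to-point calibration sketch that interpolates linearly between drug-specific calibrators. This is an assumption made for illustration, not how Biophen DiXaI or any particular analyzer computes results, since curve-fitting models are defined by the manufacturer and instrument. The function name, the signal unit (ΔA/min, i.e. the rate of chromogenic substrate cleavage), and the calibrator values are all hypothetical.

```python
from bisect import bisect_left
from typing import Sequence


def anti_xa_concentration(signal: float,
                          cal_conc: Sequence[float],
                          cal_signal: Sequence[float]) -> float:
    """Estimate an anti-Xa drug concentration (ng/mL) from a residual-FXa signal.

    Point-to-point linear interpolation between drug-specific calibrators.
    Assumes the signal (e.g. rate of chromogenic substrate cleavage) falls
    strictly as drug concentration rises, i.e. the inverse relationship.
    """
    if len(cal_conc) != len(cal_signal) or len(cal_conc) < 2:
        raise ValueError("need at least two paired calibrators")
    pairs = sorted(zip(cal_conc, cal_signal))  # ascending concentration
    conc = [c for c, _ in pairs]
    sig = [s for _, s in pairs]
    if any(later >= earlier for earlier, later in zip(sig, sig[1:])):
        raise ValueError("calibrator signal must fall strictly as concentration rises")
    if not sig[-1] <= signal <= sig[0]:
        raise ValueError("signal outside the calibrated range")
    sig_up = sig[::-1]      # ascending signal ...
    conc_down = conc[::-1]  # ... paired with descending concentration
    i = bisect_left(sig_up, signal)
    if sig_up[i] == signal:
        return conc_down[i]
    # signal lies strictly between sig_up[i - 1] and sig_up[i]
    s0, s1 = sig_up[i - 1], sig_up[i]
    c0, c1 = conc_down[i - 1], conc_down[i]
    return c0 + (signal - s0) * (c1 - c0) / (s1 - s0)


if __name__ == "__main__":
    # Hypothetical rivaroxaban calibrators: ng/mL vs. ΔA/min (not kit values).
    conc = [0, 50, 100, 200, 400]
    rate = [0.400, 0.300, 0.220, 0.100, 0.030]
    print(f"{anti_xa_concentration(0.26, conc, rate):.0f} ng/mL")
```

With these made-up calibrators, a signal of 0.26 ΔA/min lies halfway between the 50 and 100 ng/mL calibrators, so it maps to 75 ng/mL. Point-to-point interpolation was chosen here because it needs no assumption about the overall shape of the curve. A fitted model would smooth calibrator noise better.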

This entry is adapted from the peer-reviewed paper 10.3390/biomedicines9030264

References

- Hirsh, J.; Raschke, R. Heparin and low-molecular-weight heparin. Chest 2004, 126, 188–203.

- Dorgalaleh, A.; Favaloro, E.J.; Bahraini, M.; Rad, F. Standardization of Prothrombin time/international normalized ratio (PT/INR). Int. J. Lab. Hematol. 2020, in press.

- Schulman, S.; Kearon, C.; Kakkar, A.K.; Mismetti, P.; Schellong, S.; Eriksson, H.; Baanstra, D.; Schnee, J.; Goldhaber, S.Z. Dabigatran versus warfarin in the treatment of acute venous thromboembolism. N. Engl. J. Med. 2009, 361, 2342–2352.

- Bauersachs, R.; Berkowitz, S.D.; Brenner, B.; Buller, H.R.; Decousus, H.; Gallus, A.S.; Lensing, A.W.; Misselwitz, F.; Prins, M.H.; Raskob, G.E.; et al. Oral rivaroxaban for symptomatic venous thromboembolism. N. Engl. J. Med. 2010, 363, 2499–2510.

- Büller, H.R.; Prins, M.H.; Lensing, A.W.A.; Decousus, H.; Jacobson, B.F.; Minar, E.; Chlumsky, J.; Verhamme, P.; Wells, P.; Agnelli, G.; et al. Oral rivaroxaban for the treatment of symptomatic pulmonary embolism. N. Engl. J. Med. 2012, 366, 1287–1297.

- Agnelli, G.; Buller, H.R.; Cohen, A.; Curto, M.; Gallus, A.S.; Johnson, M.; Masiukiewicz, U.; Pak, R.; Thompson, J.; Raskob, G.E.; et al. Oral apixaban for the treatment of acute venous thromboembolism. N. Engl. J. Med. 2013, 369, 799–808.

- Büller, H.R.; Décousus, H.; Grosso, M.A.; Mercuri, M.; Middeldorp, S.; Prins, M.H.; Raskob, G.E.; Schellong, S.M.; Schwocho, L.; Segers, A.; et al. Edoxaban versus warfarin for the treatment of symptomatic venous thromboembolism. N. Engl. J. Med. 2013, 369, 1406–1415.

- Schulman, S.; Kakkar, A.K.; Goldhaber, S.Z.; Schellong, S.; Eriksson, H.; Mismetti, P.; Christiansen, A.V.; Friedman, J.; Le Maulf, F.; Peter, N.; et al. Treatment of acute venous thromboembolism with dabigatran or warfarin and pooled analysis. Circulation 2014, 129, 764–772.

- Schulman, S.; Kearon, C.; Kakkar, A.K.; Schellong, S.; Eriksson, H.; Baanstra, D.; Kvamme, A.M.; Friedman, J.; Mismetti, P.; Goldhaber, S.Z. Extended use of dabigatran, warfarin, or placebo in venous thromboembolism. N. Engl. J. Med. 2013, 368, 709–718.

- Agnelli, G.; Buller, H.R.; Cohen, A.; Curto, M.; Gallus, A.S.; Johnson, M.; Porcari, A.; Raskob, G.E.; Weitz, J.I. Apixaban for extended treatment of venous thromboembolism. N. Engl. J. Med. 2013, 368, 699–708.

- Lancaster, T.R.; Singer, D.E.; Sheehan, M.A.; Oertel, L.B.; Maraventano, S.W.; Hughes, R.A.; Kistler, J.P. The impact of long-term warfarin therapy on quality of life. Arch. Intern. Med. 1991, 151, 1944–1949.

- Ghate, S.R.; Biskupiak, J.; Ye, X.; Kwong, W.J.; Brixner, D.I. All-cause and bleeding-related health care costs in warfarin-treated patients with atrial fibrillation. J. Manag. Care Pharm. 2011, 17, 672–684.

- Carrier, M.; Le Gal, G.; Wells, P.S.; Rodger, M.A. Systematic review: Case-fatality rates of recurrent venous thromboembolism and major bleeding events among patients treated for venous thromboembolism. Ann. Intern. Med. 2010, 152, 578–589.

- Klok, F.A.; Kooiman, J.; Huisman, M.V.; Konstantinides, S.; Lankeit, M. Predicting anticoagulant-related bleeding in patients with venous thromboembolism: A clinically oriented review. Eur. Respir. J. 2014, 45, 201–210.

- Guan, W.J.; Ni, Z.Y.; Hu, Y.; Liang, W.H.; Ou, C.Q.; He, J.X.; Liu, L.; Shan, H.; Lei, C.L.; Hui, D.S.C.; et al. Clinical characteristics of coronavirus disease 2019 in China. N. Engl. J. Med. 2020, 382, 1708–1720.

- Tang, N.; Li, D.; Wang, X.; Sun, Z. Abnormal coagulation parameters are associated with poor prognosis in patients with novel coronavirus pneumonia. J. Thromb. Haemost. 2020, 18, 844–847.

- McLean, J. The thromboplastic action of cephalin. Am. J. Physiol. Content 1916, 41, 250–257.

- Rosenberg, R.D.; Lam, L. Correlation between structure and function of heparin. Proc. Natl. Acad. Sci. USA 1979, 76, 1218–1222.

- Turpie, A.G.; Gallus, A.S.; Hoek, J.A. A synthetic pentasaccharide for the prevention of deep-vein thrombosis after total hip replacement. N. Engl. J. Med. 2001, 344, 619–625.

- Herbert, J.M.; Herault, J.P.; Bernat, A.; van Amsterdam, G.M.; Lormeau, J.C.; Petitou, M.; van Boeckel, C.; Hoffmann, P.; Meuleman, D.G. Biochemical and pharmacological properties of SANORG 34006, a potent and long-acting synthetic pentasaccharide. Blood 1998, 91, 4197–4205.

- Eriksson, B.I.; Bauer, K.A.; Lassen, M.R.; Turpie, A.G. Fondaparinux compared with enoxaparin for the prevention of venous thromboembolism after hip-fracture surgery. N. Engl. J. Med. 2001, 345, 1298–1304.

- Lam, L.; Silbert, J.; Rosenberg, R. The separation of active and inactive forms of heparin. Biochem. Biophys. Res. Commun. 1976, 69, 570–577.

- Andersson, L.-O.; Barrowcliffe, T.; Holmer, E.; Johnson, E.; Sims, G. Anticoagulant properties of heparin fractionated by affinity chromatography on matrix-bound antithrombin III and by gel filtration. Thromb. Res. 1976, 9, 575–583.

- Bârzu, T.; Molho, P.; Tobelem, G.; Petitou, M.; Caen, J. Binding and endocytosis of heparin by human endothelial cells in culture. Biochim. Biophys. Acta Bioenergy 1985, 845, 196–203.

- Cook, B.W. Anticoagulation management. Semin. Interv. Radiol. 2010, 27, 360–367.

- de Swart, C.A.; Nijmeyer, B.; Roelofs, J.M.; Sixma, J.J. Kinetics of intravenously administered heparin in normal humans. Blood 1982, 60, 1251–1258.

- Bjornsson, T.D.; Wolfram, K.M.; Kitchell, B.B. Heparin kinetics determined by three assay methods. Clin. Pharmacol. Ther. 1982, 31, 104–113.

- Dawes, J.; Pepper, D.S. Catabolism of low-dose heparin in man. Thromb. Res. 1979, 14, 845–860.

- Leentjens, J.; Peters, M.; Esselink, A.C.; Smulders, Y.; Kramers, C. Initial anticoagulation in patients with pulmonary embolism: Thrombolysis, unfractionated heparin, LMWH, fondaparinux, or DOACs? Br. J. Clin. Pharmacol. 2017, 83, 2356–2366.

- Hirsh, J.; Levine, M.N. Low molecular weight heparin. Blood 1992, 79, 1–7.

- Casu, B.; Oreste, P.; Torri, G.; Zoppetti, G.; Choay, J.; Lormeau, J.C.; Petitou, M.; Sinaÿ, P. The structure of heparin oligosaccharide fragments with high anti-(factor Xa) activity containing the minimal antithrombin III-binding sequence. Chemical and 13C nuclear-magnetic-resonance studies. Biochem. J. 1981, 197, 599–609.

- Lindahl, U.; Thunberg, L.; Backstrom, G.; Riesenfeld, J.; Nordling, K.; Bjork, I. Extension and structural variability of the antithrombin-binding sequence in heparin. J. Biol. Chem. 1984, 259, 12368–12376.

- Gray, E.; Mulloy, B.; Barrowcliffe, T.W. Heparin and low-molecular-weight heparin. Thromb. Haemost. 2008, 99, 807–818.

- Basu, D.; Gallus, A.; Hirsh, J.; Cade, J. A Prospective study of the value of monitoring heparin treatment with the activated partial thromboplastin time. N. Engl. J. Med. 1972, 287, 324–327.

- Kitchen, S.; Cartwright, I.; Woods, T.A.; Jennings, I.; Preston, F.E. Lipid composition of seven APTT reagents in relation to heparin sensitivity. Br. J. Haematol. 1999, 106, 801–808.

- Smythe, M.A.; Priziola, J.; Dobesh, P.P.; Wirth, D.; Cuker, A.; Wittkowsky, A.K. Guidance for the practical management of the heparin anticoagulants in the treatment of venous thromboembolism. J. Thromb. Thrombolysis 2016, 41, 165–186.

- Garcia, D.A.; Baglin, T.P.; Weitz, J.I.; Samama, M.M. Parenteral anticoagulants: Antithrombotic therapy and prevention of thrombosis: 9th ed.: American college of chest physicians evidence-based clinical practice guidelines. Chest 2012, 141, 24–43.

- Vandiver, J.W.; Vondracek, T.G. Antifactor Xa levels versus activated partial thromboplastin time for monitoring unfractionated heparin. Pharmacother. J. Hum. Pharmacol. Drug Ther. 2012, 32, 546–558.

- Arachchillage, D.; Kamani, F.; Deplano, S.; Banya, W.; Laffan, M. Should we abandon the APTT for monitoring unfractionated heparin? Thromb. Res. 2017, 157, 157–161.

- Samama, M.M. Contemporary laboratory monitoring of low molecular weight heparins. Clin. Lab. Med. 1995, 15, 119–123.

- Abbate, R.; Gori, A.M.; Farsi, A.; Attanasio, M.; Pepe, G. Monitoring of low-molecular-weight heparins in cardiovascular disease. Am. J. Cardiol. 1998, 82, 33L–36L.

- Kessler, C.M. Low molecular weight heparins: Practical considerations. Semin. Hematol. 1997, 34, 35–42.

- Linkins, L.-A.; Julian, J.A.; Rischke, J.; Hirsh, J.; Weitz, J.I. In vitro comparison of the effect of heparin, enoxaparin and fondaparinux on tests of coagulation. Thromb. Res. 2002, 107, 241–244.

- Thomas, O.; Lybeck, E.; Strandberg, K.; Tynngård, N.; Schött, U. Monitoring low molecular weight heparins at therapeutic levels: Dose-responses of, and correlations and differences between aPTT, anti-factor Xa and Thrombin generation assays. PLoS ONE 2015, 10, e0116835.

- Newman, D.J.; Cragg, G.M. Natural products as sources of new drugs over the 30 years from 1981 to 2010. J. Nat. Prod. 2012, 75, 311–335.

- Lip, G.Y.H. Recommendations for thromboprophylaxis in the 2012 focused update of the ESC guidelines on atrial fibrillation: A commentary. J. Thromb. Haemost. 2013, 11, 615–626.

- Leite, P.M.; Martins, M.A.P.; Castilho, R.O. Review on mechanisms and interactions in concomitant use of herbs and warfarin therapy. Biomed. Pharmacother. 2016, 83, 14–21.

- Garcia, D.; Libby, E.; Crowther, M.A. The new oral anticoagulants. Blood 2010, 115, 15–20.

- Poller, L.; Ibrahim, S.; Keown, M.; Pattison, A.; Jespersen, J. The prothrombin time/international normalized ratio (PT/INR) Line: Derivation of local INR with commercial thromboplastins and coagulometers—Two independent studies. J. Thromb. Haemost. 2011, 9, 140–148.

- Poller, L. International Normalized Ratios (INR): The first 20 years. J. Thromb. Haemost. 2004, 2, 849–860.

- Meijer, P.; Kynde, K.; van den Besselaar, A.M.; Van Blerk, M.; Woods, T.A. International normalized ratio (INR) testing in Europe: Between-laboratory comparability of test results obtained by Quick and Owren reagents. Clin. Chem. Lab. Med. 2018, 56, 1698–1703.

- World Health Organization Expert Committee on Biological Standardization. Sixty-Second Report; No.979; WHO: Geneva, Switzerland, 2013.

- Favaloro, E.J. How to generate a more accurate laboratory-based international normalized ratio: Solutions to obtaining or verifying the mean normal prothrombin time and international sensitivity index. Semin. Thromb. Hemost. 2019, 45, 10–21.

- Van den Besselaar, A.M.H.P.; Barrowcliffe, T.W.; Houbouyan-Reveillard, L.L.; Jespersen, J.; Johnston, M.; Poller, L.; Tripodi, A. Subcommittee on Control of Anticoagulation of the Scientific and Standardization Committee of the ISTH. Guidelines on preparation, certification, and use of certified plasmas for ISI calibration and INR determination. J. Thromb. Haemost. 2004, 2, 1946–1953.

- Clinical and Laboratory Standards Institute. H57—A Protocol for the Evaluation, Validation, and Implementation of Coagulometers; Clinical and Laboratory Standards Institute: Wayne, PA, USA, 2008; Volume 28, pp. 1–33.

- Kearon, C.; Ginsberg, J.S.; Kovacs, M.J.; Anderson, D.R.; Wells, P.; Julian, J.A.; MacKinnon, B.; Weitz, J.I.; Crowther, M.A.; Dolan, S.; et al. Comparison of low-intensity warfarin therapy with conventional-intensity warfarin therapy for long-term prevention of recurrent venous thromboembolism. N. Engl. J. Med. 2003, 349, 631–639.

- Hylek, E.M.; Skates, S.J.; Sheehan, M.A.; Singer, D.E. An analysis of the lowest effective intensity of prophylactic anticoagulation for patients with nonrheumatic atrial fibrillation. N. Engl. J. Med. 1996, 335, 540–546.

- Hering, D.; Piper, C.; Bergemann, R.; Hillenbach, C.; Dahm, M.; Huth, C.; Horstkotte, D. Thromboembolic and bleeding complications following St. Jude Medical valve replacement: Results of the German Experience with low-intensity anticoagulation study. Chest 2005, 127, 53–59.

- Makris, M.; van Veen, J.J.; Maclean, R. Warfarin anticoagulation reversal: Management of the asymptomatic and bleeding patient. J. Thromb. Thrombolysis 2010, 29, 171–181.

- Ageno, W.; Gallus, A.S.; Wittkowsky, A.; Crowther, M.; Hylek, E.M.; Palareti, G. Oral anticoagulant therapy: Antithrombotic therapy and prevention of thrombosis, 9th ed.: American College of Chest Physicians Evidence-Based Clinical Practice Guidelines. Chest 2012, 141, 44S–88S.

- Holbrook, A.; Schulman, S.; Witt, D.M.; Vandvik, P.O.; Fish, J.; Kovacs, M.J.; Svensson, P.J.; Veenstra, D.L.; Crowther, M.; Guyatt, G.H. Evidence-based management of anticoagulant therapy: Antithrombotic Therapy and Prevention of Thrombosis, 9th ed.: American College of Chest Physicians Evidence-Based Clinical Practice Guidelines. Chest 2012, 141, 152S–184S.

- Baglin, T.; Hillarp, A.; Tripodi, A.; Elalamy, I.; Buller, H.; Ageno, W. Measuring oral direct inhibitors (ODIs) of thrombin and factor Xa: A recommendation from the Subcommittee on Control of Anticoagulation of the Scientific and Standardization Committee of the International Society on Thrombosis and Haemostasis. J. Thromb. Haemost. 2013, 11, 756–760.

- Barnes, G.D.; Ageno, W.; Ansell, J.; Kaatz, S. Recommendation on the nomenclature for oral anticoagulants: Communication from the SSC of the ISTH. J. Thromb. Haemost. 2015, 13, 1154–1156.

- Dale, B.J.; Chan, N.C.; Eikelboom, J.W. Laboratory measurement of the direct oral anticoagulants. Br. J. Haematol. 2016, 172, 315–336.

- Stangier, J. Clinical pharmacokinetics and pharmacodynamics of the oral direct thrombin inhibitor dabigatran etexilate. Clin. Pharmacokinet. 2008, 47, 285–295.

- Perzborn, E.; Strassburger, J.; Wilmen, A.; Pohlmann, J.; Roehrig, S.; Schlemmer, K.-H.; Straub, A. In vitro and in vivo studies of the novel antithrombotic agent BAY 59-7939-an oral, direct Factor Xa inhibitor. J. Thromb. Haemost. 2005, 3, 514–521.

- Ogata, K.; Mendell-Harary, J.; Tachibana, M.; Masumoto, H.; Oguma, T.; Kojima, M.; Kunitada, S. Clinical safety, tolerability, pharmacokinetics, and pharmacodynamics of the novel factor Xa inhibitor edoxaban in healthy volunteers. J. Clin. Pharmacol. 2010, 50, 743–753.

- Weitz, J.I.; Harenberg, J. New developments in anticoagulants: Past, present and future. Thromb. Haemost. 2017, 117, 1283–1288.

- Huisman, M.V.; Klok, F.A. Pharmacological properties of betrixaban. Eur. Heart J. Suppl. 2018, 20, E12–E15.

- Mueck, W.; Schwers, S.; Stampfuss, J. Rivaroxaban and other novel oral anticoagulants: Pharmacokinetics in healthy subjects, specific patient populations and relevance of coagulation monitoring. Thromb. J. 2013, 11, 10.

- Cuker, A.; Siegal, D. Monitoring and reversal of direct oral anticoagulants. Hematol. Am. Soc. Hematol. Educ. Program. 2015, 2015, 117–124.

- Gosselin, R.C.; Adcock, D.M. The laboratory’s 2015 perspective on direct oral anticoagulant testing. J. Thromb. Haemost. 2016, 14, 886–893.

- Douxfils, J.; Mullier, F.; Loosen, C.; Chatelain, C.; Chatelain, B.; Dogne, J.M. Assessment of the impact of rivaroxaban on coagulation assays: Laboratory recommendations for the monitoring of rivaroxaban and review of the literature. Thromb. Res. 2012, 130, 956–966.

- Robert, S.; Chatelain, C.; Douxfils, J.; Mullier, F.; Chatelain, B.; Dogné, J.-M. Impact of dabigatran on a large panel of routine or specific coagulation assays. Thromb. Haemost. 2012, 107, 985–997.

- Thom, I.; Cameron, G.; Robertson, D.; Watson, H.G. Measurement of rivaroxaban concentrations demonstrates lack of clinical utility of a PT, dPT and APTT test in estimating levels. Int. J. Lab. Hematol. 2018, 40, 493–499.

- Gosselin, R.; Grant, R.P.; Adcock, D.M. Comparison of the effect of the anti-Xa direct oral anticoagulants apixaban, edoxaban, and rivaroxaban on coagulation assays. Int. J. Lab. Hematol. 2016, 38, 505–513.

- Flaujac, C.; Delavenne, X.; Quenet, S.; Horellou, M.-H.; Laporte, S.; Siguret, V.; Lecompte, T.; Gouin-Thibault, I. Assessment of apixaban plasma levels by laboratory tests: Suitability of three anti-Xa assays. Thromb. Haemost. 2014, 111, 240–248.

- Chatelain, C.; Chatelain, B.; Douxfils, J.; Dogné, J.-M.; Mullier, F. Impact of apixaban on routine and specific coagulation assays: A practical laboratory guide. Thromb. Haemost. 2013, 110, 283–294.

- Zafar, M.U.; Vorchheimer, D.A.; Gaztanaga, J.; Velez, M.; Yadegar, D.; Moreno, P.R.; Kunitada, S.; Pagan, J.; Fuster, V.; Badimon, J.J. Antithrombotic effects of factor Xa inhibition with DU-176b: Phase-I study of an oral, direct factor Xa inhibitor using an ex-vivo flow chamber. Thromb. Haemost. 2007, 98, 883–888.

- Morishima, Y.; Kamisato, C. Laboratory measurements of the oral direct factor Xa inhibitor edoxaban: Comparison of prothrombin time, activated partial thromboplastin time, and thrombin generation assay. Am. J. Clin. Pathol. 2015, 143, 241–247.

- Helin, T.A.; Pakkanen, A.; Lassila, R.; Joutsi-Korhonen, L. Laboratory assessment of novel oral anticoagulants: Method suitability and variability between coagulation laboratories. Clin. Chem. 2013, 59, 807–814.

- Halbmayer, W.-M.; Weigel, G.; Quehenberger, P.; Tomasits, J.; Haushofer, A.C.; Aspoeck, G.; Loacker, L.; Schnapka-Koepf, M.; Goebel, G.; Griesmacher, A. Interference of the new oral anticoagulant dabigatran with frequently used coagulation tests. Clin. Chem. Lab. Med. 2012, 50, 1601–1605.

- Hillarp, A.; Baghaei, F.; Blixter, I.F.; Gustafsson, K.M.; Stigendal, L.; Sten-Linder, M.; Strandberg, K.; Lindahl, T.L. Effects of the oral, direct factor Xa inhibitor rivaroxaban on commonly used coagulation assays. J. Thromb. Haemost. 2011, 9, 133–139.

- Liesenfeld, K.-H.; Schäfer, H.G.; Trocóniz, I.F.; Tillmann, C.; Eriksson, B.I.; Stangier, J. Effects of the direct thrombin inhibitor dabigatran on ex vivo coagulation time in orthopaedic surgery patients: A population model analysis. Br. J. Clin. Pharmacol. 2006, 62, 527–537.

- Kher, A.; Dieri, R.A.; Hemker, H.C.; Beguin, S. Laboratory assessment of antithrombotic therapy: What tests and if so why? Pathophysiol. Haemost. Thromb. 1997, 27, 211–218.

- Conrad, K.A. Clinical pharmacology and drug safety: Lessons from hirudin. Clin. Pharmacol. Ther. 1995, 58, 123–126.

- Fox, I.; Dawson, A.; Loynds, P.; Eisner, J.; Findlen, K.; Levin, E.; Hanson, D.; Mant, T.; Wagner, J.; Maraganore, J. Anticoagulant activity of hirulog™, a direct thrombin inhibitor, in humans. Thromb. Haemost. 1993, 69, 157–163.

- Lessire, S.; Douxfils, J.; Baudar, J.; Bailly, N.; Dincq, A.-S.; Gourdin, M.; Dogné, J.-M.; Chatelain, B.; Mullier, F. Is thrombin time useful for the assessment of dabigatran concentrations? An in vitro and ex vivo study. Thromb. Res. 2015, 136, 693–696.

- Dager, W.E.; Gosselin, R.C.; Kitchen, S.; Dwyre, D. Dabigatran Effects on the international normalized ratio, activated partial thromboplastin time, thrombin time, and fibrinogen: A multicenter, in vitro study. Ann. Pharmacother. 2012, 46, 1627–1636.

- Martinoli, J.-L.; Leflem, L.; Guinet, C.; Plu-Bureau, G.; Depasse, F.; Perzborn, E.; Samama, M.M. Assessment of laboratory assays to measure rivaroxaban—An oral, direct factor Xa inhibitor. Thromb. Haemost. 2010, 103, 815–825.

- Ieko, M.; Ohmura, K.; Naito, S.; Yoshida, M.; Sakuma, I.; Ikeda, K.; Ono, S.; Suzuki, T.; Takahashi, N. Novel assay based on diluted prothrombin time reflects anticoagulant effects of direct oral factor Xa inhibitors: Results of multicenter study in Japan. Thromb. Res. 2020, 195, 158–164.

- Kumano, O.; Suzuki, S.; Yamazaki, M.; An, Y.; Yasaka, M.; Ieko, M. for the Japanese study group for the effect confirmation of direct oral anticoagulants. The basic evaluation of the newly developing modified diluted prothrombin time reagent for direct oral anticoagulants measurements. In Proceedings of the XXVIII Virtual Congress on International Society on Thrombosis and Haemostasis, Online. 12–14 July 2020.

- Kumano, O.; Suzuki, S.; Yamazaki, M.; An, Y.; Yasaka, M.; Ieko, M. for the Japanese study group for the effect confirmation of direct oral anticoagulants. New formula of “ratio of inhibited thrombin generation” based on modified diluted prothrombin time reagent predicts bleeding risk of patients with low coagulation activity in direct oral anticoagulant therapy. In Proceedings of the XXVIII Virtual Congress on International Society on Thrombosis and Haemostasis, Online. 12–14 July 2020.

- Letertre, L.R.; Gudmundsdottir, B.R.; Francis, C.W.; Gosselin, R.C.; Skeppholm, M.; Malmstrom, R.E.; Moll, S.; Hawes, E.; Francart, S.; Onundarson, P.T. A single test to assay warfarin, dabigatran, rivaroxaban, apixaban, unfractionated heparin, and enoxaparin in plasma. J. Thromb. Haemost. 2016, 14, 1043–1053.

- Rohde, G. Determination of rivaroxaban—A novel, oral, direct Factor Xa inhibitor—In human plasma by high-performance liquid chromatography–tandem mass spectrometry. J. Chromatogr. B 2008, 872, 43–50.

- Wang, J.; Song, Y.; Pursley, J.; Wastall, P.; Wright, R.; Lacreta, F.; Frost, C.; Barrett, Y.C. A randomised assessment of the pharmacokinetic, pharmacodynamic and safety interaction between apixaban and enoxaparin in healthy subjects. Thromb. Haemost. 2012, 107, 916–924.

- Bathala, M.S.; Masumoto, H.; Oguma, T.; He, L.; Lowrie, C.; Mendell, J. Pharmacokinetics, biotransformation, and mass balance of edoxaban, a selective, direct factor Xa Inhibitor, in humans. Drug Metab. Dispos. 2012, 40, 2250–2255.

- Schmitz, E.E.; Boonen, K.K.; van den Heuvel, D.J.A.; Van Dongen, J.J.; Schellings, M.W.M.; Emmen, J.J.; Van Der Graaf, F.; Brunsveld, L.L.; Van De Kerkhof, D.D. Determination of dabigatran, rivaroxaban and apixaban by ultra-performance liquid chromatography—Tandem mass spectrometry (UPLC-MS/MS) and coagulation assays for therapy monitoring of novel direct oral anticoagulants. J. Thromb. Haemost. 2014, 12, 1636–1646.

- Dogné, J.-M.; Mullier, F.; Chatelain, B.; Rönquist-Nii, Y.; Malmström, R.E.; Hjemdahl, P.; Douxfils, J. Comparison of calibrated dilute thrombin time and aPTT tests with LC-MS/MS for the therapeutic monitoring of patients treated with dabigatran etexilate. Thromb. Haemost. 2013, 110, 543–549.

- Douxfils, J.; Tamigniau, A.; Chatelain, B.; Chatelain, C.; Wallemacq, P.; Dogne, J.M.; Mullier, F. Comparison of calibrated chromogenic anti-Xa assay and PT tests with LC-MS/MS for the therapeutic monitoring of patients treated with rivaroxaban. Thromb. Haemost. 2013, 110, 723–731.

- Antovic, J.P.; Skeppholm, M.; Eintrei, J.; Bojia, E.E.; Soderblom, L.; Norberg, E.M.; Onelov, L.; Ronquist-Nii, Y.; Pohanka, A.; Beck, O.; et al. Evaluation of coagulation assays versus LC-MS/MS for determinations of dabigatran concentrations in plasma. Eur. J. Clin. Pharmacol. 2013, 69, 1875–1881.

- Skeppholm, M.; Hjemdahl, P.; Antovic, J.P.; Muhrbeck, J.; Eintrei, J.; Rönquist-Nii, Y.; Pohanka, A.; Beck, O.; Malmström, R.E. On the monitoring of dabigatran treatment in “real life” patients with atrial fibrillation. Thromb. Res. 2014, 134, 783–789.

- Skeppholm, M.; Al-Aieshy, F.; Berndtsson, M.; Al-Khalili, F.; Rönquist-Nii, Y.; Söderblom, L.; Östlund, A.Y.; Pohanka, A.; Antovic, J.; Malmström, R.E. Clinical evaluation of laboratory methods to monitor apixaban treatment in patients with atrial fibrillation. Thromb. Res. 2015, 136, 148–153.

- Stangier, J.; Feuring, M. Using the HEMOCLOT direct thrombin inhibitor assay to determine plasma concentrations of dabigatran. Blood Coagul. Fibrinolysis 2012, 23, 138–143.

- Butler, J.; Malan, E.; Chunilal, S.; Tran, H.; Hapgood, G. The effect of dabigatran on the activated partial thromboplastin time and thrombin time as determined by the Hemoclot thrombin inhibitor assay in patient plasma samples. Thromb. Haemost. 2013, 110, 308–315.

- Samoš, M.; Stančiaková, L.; Ivanková, J.; Staško, J.; Kovář, F.; Dobrotová, M.; Galajda, P.; Kubisz, P.; Mokáň, M. Monitoring of dabigatran therapy using hemoclot thrombin inhibitor assay in patients with atrial fibrillation. J. Thromb. Thrombolysis 2015, 39, 95–100.

- Studt, J.-D.; Alberio, L.; Angelillo-Scherrer, A.; Asmis, L.M.; Fontana, P.; Korte, W.; Mendez, A.; Schmid, P.; Stricker, H.; Tsakiris, D.A.; et al. Accuracy and consistency of anti-Xa activity measurement for determination of rivaroxaban plasma levels. J. Thromb. Haemost. 2017, 15, 1576–1583.

- Lessire, S.; Dincq, A.S.; Siriez, R.; Pochet, L.; Sennesael, A.L.; Vornicu, O.; Hardy, M.; Deceuninck, O.; Douxfils, J.; Mullier, F. Assessment of low plasma concentrations of apixaban in the periprocedural setting. Int. J. Lab. Hematol. 2020, 42, 394–402.