GNSS signals are vulnerable to external interference, which can cause positioning failure when unmanned aerial vehicles (UAVs) fly fully autonomously in complex environments such as high-rise parks and dense forests. This paper presents a pan-tilt based visual servoing (PBVS) method for obtaining world coordinate information. The system is equipped with an inertial measurement unit (IMU), an air pressure sensor, a magnetometer, and a pan-tilt-zoom (PTZ) camera. We explain the physical model and the application method of the PBVS system, which can be summarized as follows. A UAV carrying a camera tracks the operation target and outputs information about the UAV's position and the angle between the PTZ and the anchor point. Combining this information with the UAV's absolute altitude, collected by the height sensing unit, and the absolute geographic coordinates and altitude of the tracked target yields the current absolute position of the UAV. We have set up an actual UAV experimental environment. To meet the computational requirements, some sensor data are sent to the cloud over the network. Field tests show that the systematic deviation of the overall solution is smaller than the error of ordinary GNSS sensor equipment, and that the system can provide navigation coordinates for the UAV in complex environments. Compared with traditional visual navigation systems, our scheme obtains absolute, continuous, accurate, and efficient navigation information at short range (within 15 m of the target). The system can be used in scenarios that require autonomous cruise, such as self-powered UAV inspections and patrols in parks.

- unmanned aerial vehicle (UAV)

- pan-tilt based visual servoing (PBVS)

- navigation coordinate information

Multi-rotor UAVs are widely used because they are simple to operate and convenient to take off and land [1][2][3][4][5]. For example, the State Grid of China now uses UAVs to inspect transmission lines above 110 kV, with more than 800,000 inspections per year. Traditional multi-rotor UAVs are flown by manual remote control, whose main disadvantages are high labor costs and limited cruising distance. With the development of UAV control technology, multi-rotor UAV control is gradually shifting to autonomous flight. Autonomous flight is normally divided into two cases: cruising between positioning points in an open environment, and channel cruising in a disturbed environment. As one of the most important components of autonomous flight, the autonomous navigation and positioning algorithm of multi-rotor UAVs has become a hotspot of current research. In addition, the structure of a multi-rotor UAV greatly restricts its payload and computing power. It is therefore worthwhile to find a method that achieves autonomous and reliable positioning of the UAV with low resource usage.

In this paper, we propose PBVS, a method for obtaining the navigation coordinates of a UAV. The method navigates the UAV using the camera that the UAV already carries. This section explains the mathematical model by which the PBVS system obtains the navigation coordinates of the UAV.

1. Problem Description

The current autonomous cruise operation mode of a UAV can be described as follows. First, the control center selects cruise routes and targets based on the autonomous cruise requirements, and then obtains the locations and monitoring service times. This specifies the UAV cruise route. The UAV then takes off from the tarmac, finds the target along the designated route, takes pictures or videos, transmits them to the control center over a wireless link, and finally returns to the base. During this work, the UAV performs fixed-point image shooting, such as photographing power line insulators. This type of operation has the following characteristics: 1. The geographic location of the operation target is known. 2. The target posture in each UAV operation remains unchanged. 3. The UAV's camera tracks the target throughout the operation.

However, when the UAV collects images of the target during autonomous cruise, the GNSS signal is susceptible to interference in scenarios such as power line inspection and building exploration, so auxiliary sensing such as visual navigation is needed. Current visual navigation methods generally require the UAV to carry extra cameras. In practice, however, the limited load capacity of the UAV makes it difficult to work while carrying non-essential equipment. How to retrofit UAVs at the lowest cost and navigate with the camera the UAV already carries has therefore become an emerging issue.

The UAV needs to judge the scene with a single camera. During shooting, it is often necessary to obtain image information continuously in real time rather than from a single shot. At the same time, during navigation the camera needs to track the target in real time to obtain location information. Tracking poses two challenges: 1. ensuring that the target is not lost; 2. ensuring that the target is imaged clearly. First, to ensure that the camera can follow the target in real time while the UAV moves irregularly, the best approach is to keep the target at the center of the field of view, so that the PTZ has sufficient time to respond to camera motion in any direction. Second, an unclear image of the tracked target is itself an important cause of target loss. Although many theories have been proposed to eliminate these influences, they are not comprehensive. Cameras use one of two imaging methods. With a global shutter, an overly long exposure time smears the image. With a rolling shutter, an insufficient line-scan speed can produce artifacts such as "tilt", "wobble", or "partial exposure". The best way to overcome blur is to reduce the relative motion between the camera and the target. Therefore, the PBVS system uses the pan-tilt to steer the camera so that the target always stays on the camera's optical axis, which ensures the effectiveness of target tracking to the greatest extent.
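As an illustration of keeping the target on the optical axis, the following minimal sketch converts the target's pixel offset from the image center into pan and tilt corrections using a pinhole camera model. It is not the paper's controller: the function names, the proportional gain, the intrinsic parameters (`cx`, `cy`, `fx`, `fy`), and the sign conventions (pan positive to the right, tilt positive downward) are all assumptions made for this example.

```python
import math


def pixel_offset_to_angles(u, v, cx, cy, fx, fy):
    """Angular error (pan, tilt) in radians of a target seen at pixel (u, v).

    Assumes an ideal pinhole camera with principal point (cx, cy) and focal
    lengths (fx, fy) in pixels. Pan is positive to the right and tilt is
    positive downward, matching image axes (hypothetical convention).
    """
    pan_error = math.atan2(u - cx, fx)
    tilt_error = math.atan2(v - cy, fy)
    return pan_error, tilt_error


def servo_step(pan, tilt, u, v, cx, cy, fx, fy, gain=0.5):
    """One proportional update of the pan-tilt angles toward the target.

    gain is an illustrative value; a real gimbal loop would also need rate
    limits and filtering, which are omitted here.
    """
    pan_error, tilt_error = pixel_offset_to_angles(u, v, cx, cy, fx, fy)
    return pan + gain * pan_error, tilt + gain * tilt_error
```

Calling `servo_step` once per frame with the detected target pixel drives the angular error toward zero, so the target drifts back to the image center between detections.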

2. Model Establishment

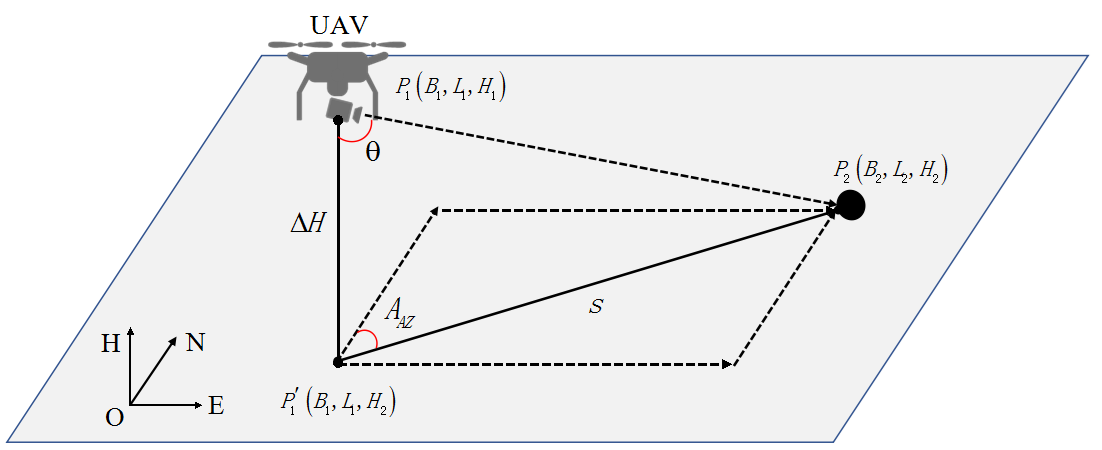

The real-time motion information of the camera, namely its acceleration and angular velocity, can be measured by the IMU and magnetometer carried by the camera. From these measurements, we can infer the current position of the UAV in the world geographic coordinate system. The method is shown in Fig. 1.

Fig. 1. Schematic diagram of the spatial geometric relationship between UAV and target object
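The spatial relationship in Fig. 1 can be sketched in code. The example below is a simplified illustration, not the paper's derivation. It assumes the optical axis points exactly at the target, that the camera's compass bearing and depression angle are already known (for example from the PTZ angles combined with the magnetometer and IMU), and that a local flat-earth approximation is acceptable at the short ranges the method targets. The function name, parameter names, and the use of the WGS-84 equatorial radius are assumptions for illustration.

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS-84 equatorial radius (assumed spherical Earth)


def uav_position(target_lat_deg, target_lon_deg, target_alt_m,
                 uav_alt_m, azimuth_deg, depression_deg):
    """Estimate the UAV latitude and longitude from a tracked target.

    azimuth_deg: compass bearing of the optical axis, from the UAV toward
        the target, measured clockwise from north.
    depression_deg: angle of the optical axis below the horizontal,
        in the range (0, 90].
    """
    if not 0.0 < depression_deg <= 90.0:
        raise ValueError("depression angle must be in (0, 90] degrees")
    height = uav_alt_m - target_alt_m
    if height <= 0.0:
        raise ValueError("this sketch assumes the UAV is above the target")

    # Horizontal distance from the UAV to the target.
    horizontal = height / math.tan(math.radians(depression_deg))

    # The target lies along the bearing, so the UAV lies the opposite way.
    azimuth = math.radians(azimuth_deg)
    north_m = -horizontal * math.cos(azimuth)
    east_m = -horizontal * math.sin(azimuth)

    # Convert the metric offset to degrees (flat-earth approximation).
    lat = target_lat_deg + math.degrees(north_m / EARTH_RADIUS_M)
    lon = target_lon_deg + math.degrees(
        east_m / (EARTH_RADIUS_M * math.cos(math.radians(target_lat_deg))))
    return lat, lon
```

For instance, a UAV 10 m above a target, looking due north at a 45° depression angle, is placed about 10 m south of the target. The flat-earth conversion is chosen because the stated operating range is within 15 m of the target; at longer ranges a proper geodetic conversion would be needed.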

3. Simulation and Experiment

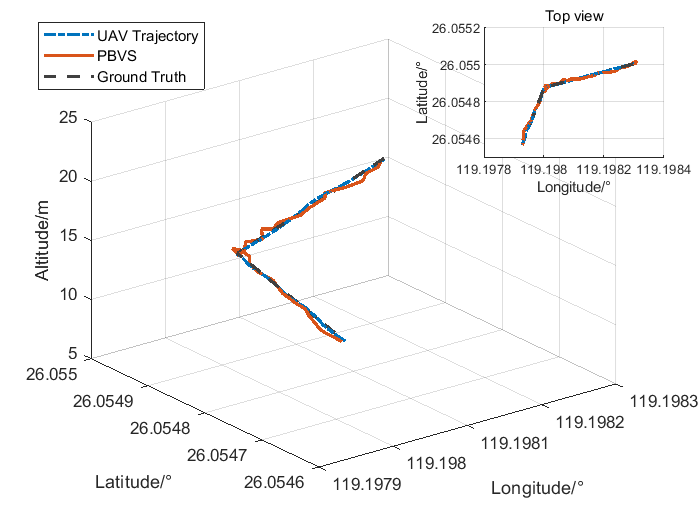

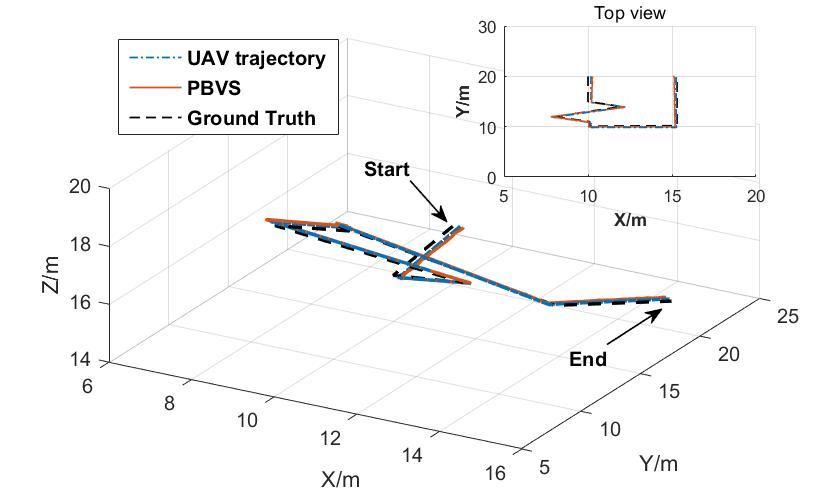

The simulation environment was set up to match the real experiment closely. The simulation results were analyzed qualitatively and quantitatively, and the performance of autonomous navigation was evaluated. For the actual experiment, we built a real experimental platform to test the performance of the proposed system and the accuracy of its autonomous navigation. We also compared PBVS with other methods and found that it performs better. The test results are shown in Fig. 2.


Fig. 2. Test results: (a) simulation; (b) real flight

4. Conclusion

In this paper, we propose a pan-tilt based visual servoing (PBVS) method for obtaining world coordinate information. We use the vision system carried by the UAV for target recognition and the PTZ control system to drive the servo so that the target moves to the center of the field of view. Once the height of the UAV and the angle of the PTZ are collected, the relative position between the UAV and the anchor point can be calculated geometrically. When the latitude and longitude of the anchor point are known, the current position of the UAV, including its latitude and longitude, can then be calculated in the world coordinate system. The innovation of this paper is that changes in the PTZ angle are used to calculate the relative position between the UAV and the target, and thereby to obtain the real-time world coordinates of the UAV during target tracking. Experimental verification shows that the system can accurately calculate the coordinates of the UAV from the coordinates of the target object. For environments where UAV positioning information is not available, this paper provides a novel approach to absolute positioning.

This entry is adapted from the peer-reviewed paper 10.3390/s20082241

References

- Liu Z, Du Y, Chen Y; Simulation and Experiment on the Safety Distance of Typical ±500 kV DC Transmission Lines and Towers for UAV Inspection. High Volt Eng 2019, 45(2), 426-432.

- Nan Zhao; Weidang Lu; Min Sheng; Yunfei Chen; Jie Tang; F. Richard Yu; Kai-Kit Wong; UAV-Assisted Emergency Networks in Disasters. IEEE Wireless Communications 2019, 26, 45-51, 10.1109/mwc.2018.1800160.

- Chong Shen; Xiaochen Liu; Huiliang Cao; Yuchen Zhou; Jun Liu; Jun Tang; Xiaoting Guo; Haoqian Huang; Xuemei Chen; Brain-Like Navigation Scheme based on MEMS-INS and Place Recognition. Applied Sciences 2019, 9, 1708, 10.3390/app9081708.

- Abdulla Al Kaff; David Martín; Fernando García; Arturo De La Escalera; José María Armingol; Survey of computer vision algorithms and applications for unmanned aerial vehicles. Expert Systems with Applications 2018, 92, 447-463, 10.1016/j.eswa.2017.09.033.

- Qingqing Wu; Yong Zeng; Rui Zhang; Joint Trajectory and Communication Design for Multi-UAV Enabled Wireless Networks. IEEE Transactions on Wireless Communications 2018, 17, 2109-2121, 10.1109/twc.2017.2789293.