As one of the most important tools of human–computer interaction, dynamic gesture recognition has attracted considerable attention and has been applied in various industries. Meanwhile, with the advent of high-precision depth vision sensors, reliable data can now be obtained for dynamic gesture recognition. However, research on dynamic gesture recognition still faces certain limitations and challenges. To explore further ways of improving the performance of dynamic gesture recognition, a recognition system based on the Leap Motion Controller (LMC) was proposed to recognize American Sign Language and some daily gestures.

- dynamic gesture recognition

- Long Short-Term Memory network

- Leap Motion Controller

1. Introduction

The main purpose of human–computer interaction is to allow users to freely control a device with a few simple operations [1]. Human–computer interaction techniques include face recognition, language recognition, text recognition, and so on. As one of the most important and powerful interaction methods, dynamic hand gesture recognition has attracted wide attention and has been used in various fields, such as the video game industry, the food industry, and the machinery industry [2][3][4].

To improve the performance of dynamic hand gesture recognition on American Sign Language (ASL) and handicraft gestures, we present a gesture recognition system that consists of the Leap Motion Controller (LMC) and a two-layer Bidirectional Recurrent Neural Network (BRNN). In the first stage, the proposed algorithm accurately determines the start and end of dynamic hand gestures by calculating the changes in hand rotation angle and palm speed between two adjacent frames, which ensures the validity of the features. Then, to obtain a better model, the features of single fingers and of adjacent fingers are introduced into the input vector of the model. In the second stage, we compare the effects of hyper-parameter changes on the accuracy of the classifier and improve the performance of the model. In the third stage, validation and comparison are performed on the proposed system.
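To make the idea of single-finger and adjacent-finger features more concrete, here is a small sketch of how one frame might be turned into an input vector. The feature definitions (fingertip-to-palm distance for each finger, fingertip-to-fingertip distance for each adjacent pair) and all function names are assumptions chosen for illustration; this entry does not specify the exact features used in the system.

```python
import math

def distance(p, q):
    """Euclidean distance between two 3D points."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

def frame_features(palm, fingertips):
    """Build a per-frame feature vector (hypothetical layout).

    palm: (x, y, z) palm position.
    fingertips: list of (x, y, z) positions ordered thumb to pinky.
    """
    # Single-finger features: how far each fingertip is from the palm.
    single = [distance(tip, palm) for tip in fingertips]
    # Adjacent-finger features: spacing between neighbouring fingertips.
    adjacent = [
        distance(fingertips[i], fingertips[i + 1])
        for i in range(len(fingertips) - 1)
    ]
    return single + adjacent
```

For a hand with five tracked fingertips, this layout yields nine values per frame (five single-finger and four adjacent-finger features), and a gesture becomes a sequence of such vectors.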

2. Method

Dynamic gesture recognition relies on gesture tracking. The LMC uses binocular RGB high-definition cameras to improve gesture positioning accuracy and to reduce the problems caused by occlusion between fingers. An infrared camera is used to filter the images, which greatly reduces the influence of the background environment. Finally, a convolutional neural network performs multi-layer convolution filtering on the images to extract feature data and provide them to the user. In terms of real-time performance and accuracy, the LMC provides gesture data stably and accurately, offering a reliable data source for applications in several fields.

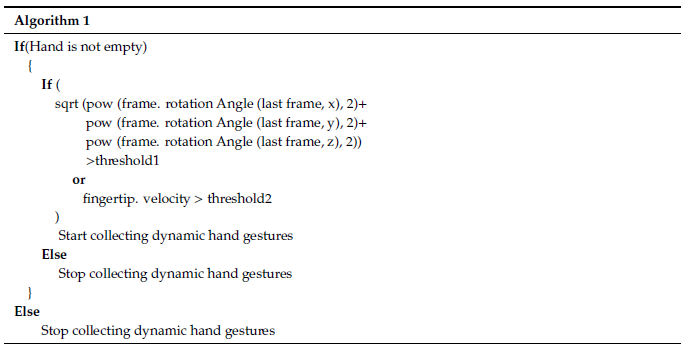

However, a challenge in dynamic hand gesture data collection is determining the start and end of a dynamic hand gesture. When the LMC acquires gestures, it produces time-based dynamic sequences. Therefore, during gesture collection, thresholds are needed to decide when gesture execution starts and stops. This work uses a palm rotation threshold in a three-dimensional coordinate system and a finger speed threshold to determine the start and end points of a dynamic hand gesture. The palm rotation is obtained by comparing the current frame with historical frames, and the change in finger speed can be obtained through the library functions that come with the LMC. The source paper presents this procedure as Algorithm 1.
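The thresholding idea described above can be sketched as follows. This is an illustrative sketch only and is not the paper's Algorithm 1: the frame layout (a palm normal vector and a speed value per frame), the threshold values, the `min_still` parameter, and the function names are all assumptions, and no Leap Motion SDK calls are used.

```python
import math

def angle_between(u, v):
    """Angle in radians between two 3D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0.0:
        return 0.0
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, dot / norm)))

def segment_gesture(frames, angle_thresh=0.05, speed_thresh=50.0, min_still=3):
    """Return (start, end) frame indices of the first gesture, or None.

    frames: list of dicts with hypothetical keys
        "palm_normal": (x, y, z) and "speed": float.
    A frame counts as moving if the palm rotation relative to the previous
    frame or the speed exceeds its (assumed) threshold. The gesture ends
    once min_still consecutive still frames have been seen.
    """
    start = None
    still = 0
    for i in range(1, len(frames)):
        rot = angle_between(frames[i - 1]["palm_normal"], frames[i]["palm_normal"])
        moving = rot > angle_thresh or frames[i]["speed"] > speed_thresh
        if start is None:
            if moving:
                start = i  # first frame that crosses a threshold
        elif moving:
            still = 0
        else:
            still += 1
            if still >= min_still:
                return start, i - min_still  # last moving frame
    if start is not None:
        # Sequence ended before enough still frames were observed.
        return start, len(frames) - 1 - still
    return None
```

Requiring several consecutive still frames before closing a gesture is a design choice made here to avoid cutting a gesture at a brief pause; the thresholds would need tuning against real sensor data.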

3. Model

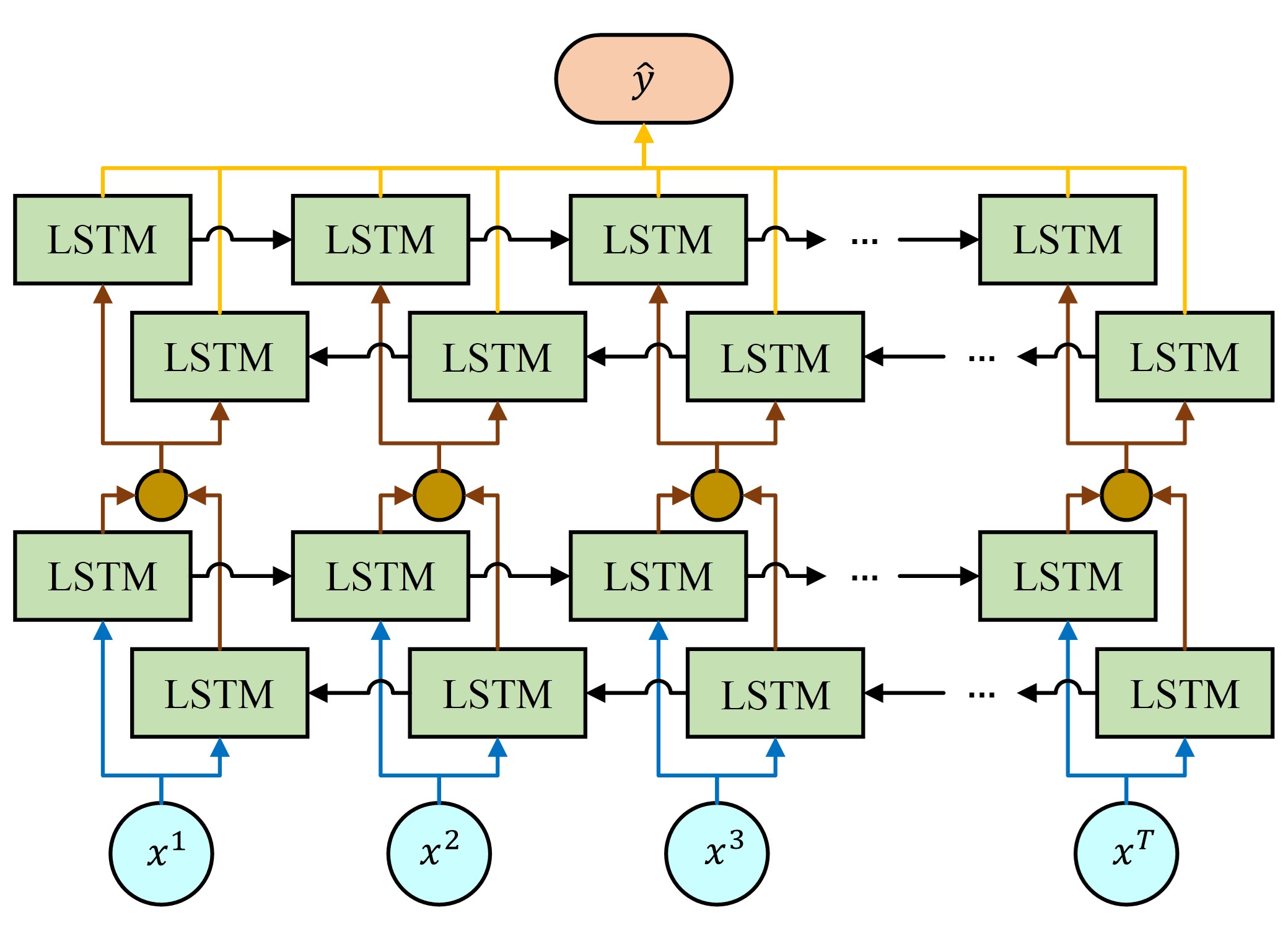

A common Recurrent Neural Network (RNN) provides a useful way to handle time-based sequences in which closely linked elements are correlated. However, in a one-way (unidirectional) RNN, the current unit can only produce its output from the information of previous units. In some problems, the output of the current unit depends not only on previous units but also on future units. In this case, two separate recurrent neural networks can be used and their outputs merged. In a single-layer Bidirectional Recurrent Neural Network, one RNN runs in the forward direction and the other runs in the backward direction. At each time point, the input is provided to two independent Long Short-Term Memory (LSTM) units running in opposite directions, and their outcomes are combined based on the hidden state. In our work, we adopt this structure to build a two-layer BRNN and combine the outcomes of the final LSTM unit of both networks.
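The structure described above can be illustrated with a small forward-pass sketch in plain Python. It is not the trained model from the paper: the weights are random, the layer sizes and all class and function names are hypothetical, and the way the final states are combined (concatenating the forward network's state at the last time step with the backward network's state at the first time step) is one plausible reading of the description, stated here as an assumption.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

class LSTMCell:
    """A standard LSTM cell with randomly initialised weights."""

    def __init__(self, n_in, n_hidden, rng):
        self.n_hidden = n_hidden
        # Four gate blocks (input, forget, candidate, output); each row
        # covers the concatenated [x, h] vector plus one bias term.
        width = n_in + n_hidden + 1
        self.w = [[rng.uniform(-0.1, 0.1) for _ in range(width)]
                  for _ in range(4 * n_hidden)]

    def step(self, x, h, c):
        z = list(x) + list(h) + [1.0]
        pre = [sum(wi * zi for wi, zi in zip(row, z)) for row in self.w]
        n = self.n_hidden
        i = [sigmoid(v) for v in pre[0:n]]
        f = [sigmoid(v) for v in pre[n:2 * n]]
        g = [math.tanh(v) for v in pre[2 * n:3 * n]]
        o = [sigmoid(v) for v in pre[3 * n:4 * n]]
        c_new = [f[k] * c[k] + i[k] * g[k] for k in range(n)]
        h_new = [o[k] * math.tanh(c_new[k]) for k in range(n)]
        return h_new, c_new

def run_direction(cell, seq, reverse=False):
    """Run one LSTM over seq; hidden states are returned in input time order."""
    h = [0.0] * cell.n_hidden
    c = [0.0] * cell.n_hidden
    outs = []
    for x in (reversed(seq) if reverse else seq):
        h, c = cell.step(x, h, c)
        outs.append(h)
    return outs[::-1] if reverse else outs

class BiLSTMLayer:
    """Two independent LSTMs reading the sequence in opposite directions."""

    def __init__(self, n_in, n_hidden, rng):
        self.fwd = LSTMCell(n_in, n_hidden, rng)
        self.bwd = LSTMCell(n_in, n_hidden, rng)

    def forward(self, seq):
        f = run_direction(self.fwd, seq)
        b = run_direction(self.bwd, seq, reverse=True)
        # Concatenate forward and backward hidden states per time step.
        return [hf + hb for hf, hb in zip(f, b)]

def two_layer_brnn(seq, n_in, n_hidden, seed=0):
    """Return the combined final states of a two-layer bidirectional LSTM."""
    rng = random.Random(seed)
    layer1 = BiLSTMLayer(n_in, n_hidden, rng)
    layer2 = BiLSTMLayer(2 * n_hidden, n_hidden, rng)
    out = layer2.forward(layer1.forward(seq))
    # Forward net finishes at the last step, backward net at the first.
    return out[-1][:n_hidden] + out[0][n_hidden:]
```

In a full classifier, the returned vector would typically be passed to a dense layer with a softmax over the gesture classes; in practice a deep learning framework would replace this hand-written cell.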

This entry is adapted from the peer-reviewed paper 10.3390/s20072106

References

- Parimalam, A.; Shanmugam, A.; Raj, A.S.; Murali, N.; Murty, S.A.V.S. Convenient and elegant HCI features of PFBR operator consoles for safe operation. In Proceedings of the 4th International Conference on Intelligent Human Computer Interaction (IHCI), Kharagpur, India, 27–29 December 2012; pp. 1–9.

- Minwoo, K.; Jaechan, C.; Seongjoo, L.; Yunho, J. IMU Sensor-Based Hand Gesture Recognition for Human-Machine Interfaces. Sensors 2019, 19, 3827–3839.

- Cheng, H.; Yang, L.; Liu, Z.C. Survey on 3D hand gesture recognition. IEEE Trans. Circuits Syst. Video Technol. 2015, 26, 1659–1673.

- Cheng, H.; Dai, Z.J.; Liu, Z.C. Image-to-class dynamic time warping for 3D hand gesture recognition. In Proceedings of the IEEE International Conference on Multimedia and Expo (ICME), San Jose, CA, USA, 15–19 July 2013; pp. 1–6.