1. Introduction

In recent years, the Building Information Modeling (BIM) methodology has been transferred from the realm of new construction to that of built heritage. Since the early studies by Murphy and Dore [1,2], the scientific literature on Heritage or Historic-BIM (H-BIM) has expanded [3,4,5,6,7,8], aiming to illustrate how geometrical data can be linked to: architectural grammar and styles [9,10,11], material characterization [12], degradation patterns [13], façade interventions and historical layers [14,15], structural damage and FEM analysis [16,17,18], data collection and simulation of environmental parameters [19], archival photographs [20] and text documents [21,22].

Hichri et al. [8] and Macher et al. [23] emphasized that H-BIM techniques require a transition from the existing condition of the object to the modeling environment. The shift from the as-built condition (the record of a building after construction) to the as-is representation (the record of its current condition) relies on surveying data, such as point clouds acquired via laser scanning or photogrammetry [24], and on reverse engineering techniques. On the one hand, the processing of 3D survey data to construct BIM models, known as Scan-to-BIM [21], is regarded as a manual, time-consuming and subjective process [3,5]; on the other hand, the emergence of Artificial Intelligence (AI) techniques in the architectural heritage domain [25,26,27] is reshaping how heritage experts interpret, recognize and classify building components in raw surveying data.

2. Historic-Building Information Modeling and Artificial Intelligence

2.1. State-of-the-Art Scan-to-BIM Reconstruction Processes

Scan-to-BIM processes [

7,

21,

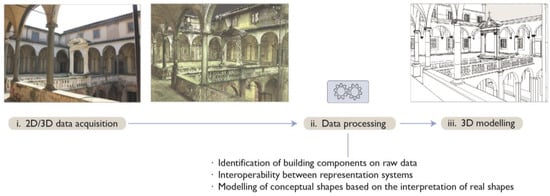

28] focus on translating existing survey data, as point clouds, into BIM. They involve three main steps: (i) data acquisition by laser scanning or photogrammetry; (ii) processing of survey data; and (iii) 3D modelling (

Figure 1). In the processing phase (ii), it is essential to semantically describe the objects that make up a building over unstructured point clouds [

28]. This interpretative issue is a major bottleneck in current research, as the main limits of the Scan-to-BIM processing workflow are identified as:

Figure 1. Steps of the Scan-to-BIM workflow using example from Grand-Ducal cloister, Pisa Charterhouse.

- Difficulties in modeling complex or irregular elements and representing architectural details of existing buildings [1,29,30], and the need to introduce classification, hierarchical organization and simplification assumptions [14,23];
- Measurement uncertainties [23], as surveying data may contain occlusions [31];
- The absence, compared to BIM for new constructions, of pre-defined, extensive libraries of parametric objects [3] and the lack of existing standards for H-BIM artefacts [1,28,30];
- High conversion effort [1], since most BIM software for new buildings offers tools for constructing regular and standardized objects, while the available free-form modeling functions are limited [15,29,32,33].

Because of these limits, Scan-to-BIM techniques never yield a unique, unambiguous result. They can, however, be distinguished by the degree of human involvement in the data processing stage and classified as manual or semi-automated.

2.1.1. Manual Scan-to-BIM Methods

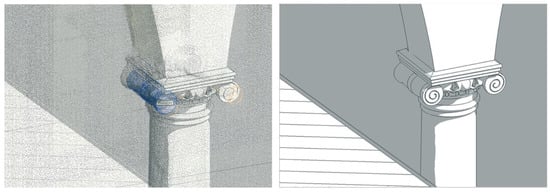

The most common Scan-to-BIM approaches are manual, as they require visual recognition and subsequent manual tracing of building components starting from a point cloud (Figure 2). Extensive literature reviews by Logothetis et al. [6], Volk et al. [7], Tang et al. [34] and, more recently, López et al. [3] and Pocobelli et al. [4] show that manual methods [1,35], although widely consolidated, are time-consuming and laborious. Operators must manually identify, isolate and reconstruct each class of building elements [7,23], which requires considerable time and resources and carries the risk of overly subjective choices [36].

Figure 2. Instantiation of a capital by direct reconstruction over the raw point cloud using example from Grand-Ducal cloister, Pisa Charterhouse.

2.1.2. Semi-Automated Scan-to-BIM Methods

Fundamental issues in the definition of semi-automated methods are the recognition and labelling of data points on raw point clouds with a named object or object class (e.g., windows, columns, walls, roofs) [34,35,36,37,38]. Existing methods can be distinguished according to the solution adopted over time for this issue:

Primitive fitting methods. They fit simple geometries, such as planes, cylinders and spheres [39], to sets of points in the scene via robust estimation of the primitive parameters. Random Sample Consensus (RANSAC) [40] and the Hough Transform [41] are common algorithms of this type, used in commercial solutions for the semi-automatic recognition of walls, slabs and pipes proposed by software houses [3,42,43,44], including: EdgeWise Building by ClearEdge3D (clearedge3d.com), a complement to Autodesk Revit; the Scan-to-BIM Revit plug-in by IMAGINiT Technologies (imaginit.com); and Buildings Pointfuse by Arithmetica (pointfuse.com). Primitive fitting methods mostly apply to indoor environments [31,37,38,42] for the detection of planar elements, such as floors and walls [23,37,45]. Shape extraction and BIM conversion are limited to simple geometries with standardized dimensions; application to complex existing architectural structures, which vary in form and type, is hardly possible unless the model is oversimplified [23,42].
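As an illustration of primitive fitting, the sketch below fits a single plane to a point cloud with RANSAC: it repeatedly samples three points, counts the points lying within a distance threshold of the resulting plane, keeps the candidate with the largest consensus and refines it by least squares on its inliers. It is a minimal NumPy sketch, not the algorithm implemented by any of the commercial tools cited above; the function name `fit_plane_ransac`, the distance threshold and the iteration count are assumptions. The Hough Transform, which votes in a discretized parameter space instead of sampling, is not shown.

```python
import numpy as np


def fit_plane_ransac(points, n_iters=500, threshold=0.01, seed=0):
    """Fit one plane n . x + d = 0 to an (N, 3) point array with RANSAC.

    Returns (normal, d, inlier_mask). `threshold` is the maximum
    point-to-plane distance, in the units of the cloud, for a point to
    count as an inlier (assumed value).
    """
    points = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    best_mask = np.zeros(len(points), dtype=bool)
    for _ in range(n_iters):
        # Minimal sample: three random points define a candidate plane.
        p0, p1, p2 = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(p1 - p0, p2 - p0)
        norm = np.linalg.norm(normal)
        if norm < 1e-12:  # collinear sample, no unique plane
            continue
        normal = normal / norm
        d = -normal @ p0
        # Consensus: points whose distance to the candidate is below threshold.
        mask = np.abs(points @ normal + d) < threshold
        if mask.sum() > best_mask.sum():
            best_mask = mask
    if best_mask.sum() < 3:
        raise ValueError("no plane with enough inliers was found")
    # Least-squares refinement: the normal is the direction of least
    # variance of the centred inliers (last right-singular vector).
    inliers = points[best_mask]
    centroid = inliers.mean(axis=0)
    normal = np.linalg.svd(inliers - centroid, full_matrices=False)[2][-1]
    d = -normal @ centroid
    return normal, d, np.abs(points @ normal + d) < threshold


# Usage: a noisy synthetic wall at x = 2 mixed with random clutter.
rng = np.random.default_rng(1)
n_wall = 1000
wall = np.column_stack([2.0 + rng.normal(0.0, 0.002, n_wall),
                        rng.uniform(0.0, 5.0, n_wall),
                        rng.uniform(0.0, 3.0, n_wall)])
clutter = rng.uniform(0.0, 5.0, (200, 3))
normal, d, inliers = fit_plane_ransac(np.vstack([wall, clutter]))
```

The recovered normal lies close to the x axis. A wall or slab detector would typically run such a fit repeatedly, removing each plane's inliers before searching for the next one, which also shows why the approach struggles with elements that are not well described by simple primitives.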

Mesh-reconstruction methods. For each architectural component or group of components, a mesh is reconstructed via triangulation techniques, starting from the distribution of points in the original point cloud. References [15,17,29,31,47,48,49] converted 3D textured meshes derived from surveying into BIM objects; however, mesh manipulation and geometric modification are limited, as mesh models cannot be edited and controlled by parametric BIM modeling [29].

Reconstruction by shape grammar and object libraries. Such approaches rely on the construction of suitable 3D libraries of architectural elements (families) to handle the complexity of materials and components that characterizes historic architecture [10,50,51,52]. In detail, De Luca et al. [53] studied the formalization of architectural knowledge based on the analysis of architectural treatises to generate template shape libraries of classical architecture. Murphy et al. [54] modelled interactive parametric objects based on manuscripts ranging from Vitruvius to Palladio to the architectural pattern books of the 18th century. Since they rely on the formalization of architectural languages derived from treatises of historical architecture, such methods are valid regardless of the modeling type or representation chosen [53].
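The idea of a template library can be sketched as a parametric family whose dimensions all derive from one module, so that a single measurement taken on the point cloud instantiates the whole element. The class `ColumnTemplate`, the library key `order_a` and every ratio below are hypothetical placeholders chosen for illustration, not proportions taken from Vitruvius, Palladio or any other treatise.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ColumnTemplate:
    """Hypothetical parametric column family driven by a single module.

    Every dimension is a multiple of the module (here, the lower shaft
    diameter), in the spirit of treatise-based proportion rules. The
    ratios are illustrative placeholders, not values from any treatise.
    """
    name: str
    height_in_modules: float     # total height / lower diameter
    base_in_modules: float       # base height / lower diameter
    capital_in_modules: float    # capital height / lower diameter
    upper_diameter_ratio: float  # upper / lower shaft diameter (taper)

    def instantiate(self, surveyed_height):
        """Derive all dimensions from one value measured on the point cloud."""
        module = surveyed_height / self.height_in_modules
        base = self.base_in_modules * module
        capital = self.capital_in_modules * module
        return {
            "lower_diameter": module,
            "upper_diameter": self.upper_diameter_ratio * module,
            "base_height": base,
            "capital_height": capital,
            "shaft_height": surveyed_height - base - capital,
        }


# A one-entry template library; the key and the ratios are assumptions.
LIBRARY = {"order_a": ColumnTemplate("order_a", 7.0, 0.5, 0.5, 0.75)}

column = LIBRARY["order_a"].instantiate(3.5)
```

With these assumed ratios, a surveyed height of 3.5 yields a lower diameter of 0.5, base and capital heights of 0.25 and a shaft height of 3.0. The design choice is that the grammar (the ratios) lives in the library, while the survey supplies only the few measurements needed to scale it.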

Reconstruction by generative modelling. In this case, the reconstruction is again guided by the formalization of architectural knowledge, and visual programming languages (VPLs) are used to manipulate each geometry by interactively programming, via a graphical coding language made up of nodes and wires, the set of modeling procedures, primitive adjustments and duplication operations performed in 3D space [33,53,55]. Grasshopper, a visual programming interface for Rhino3D, and Dynamo, a plug-in for Autodesk Revit, are commonly used for these tasks in new construction. By contrast, VPLs are rarely exploited for existing monuments and sites, where the 3D content can be created from surveying data [17,48,55,56,57] through a series of graphic generation instructions, repeated rules and algorithms [58]. The release of Rhino.Inside.Revit (rhino3d.com/it/features/rhino-inside-revit, accessed on 18 December 2022), which allows Grasshopper to run inside BIM software such as Autodesk Revit, points towards novel VPL-to-BIM connection tools.
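A VPL definition is essentially a data-flow graph: each node is a function and each wire passes one node's output to the next. The sketch below mimics that structure in plain Python for a hypothetical arcade, with a "divide" node, a "primitive" node and a "copy" node; the node names, the 20 m axis, the five bays and the column dimensions are assumptions standing in for values that would be read from the survey. It is not Grasshopper or Dynamo code.

```python
import numpy as np


def divide_segment(start, end, count):
    """'Divide' node: count + 1 equally spaced points from start to end."""
    t = np.linspace(0.0, 1.0, count + 1)[:, None]
    return (1.0 - t) * np.asarray(start, float) + t * np.asarray(end, float)


def column_template(radius, height, sides=12):
    """'Primitive' node: vertices of a prism approximating a column shaft."""
    ang = np.linspace(0.0, 2.0 * np.pi, sides, endpoint=False)
    ring = np.column_stack([radius * np.cos(ang), radius * np.sin(ang),
                            np.zeros(sides)])
    return np.vstack([ring, ring + [0.0, 0.0, height]])


def place_instances(points, template):
    """'Copy' node: duplicate one template geometry at every point."""
    return [template + p for p in points]


# Wiring the nodes; the parameters are assumed survey-derived values.
bay_axis = ((0.0, 0.0, 0.0), (20.0, 0.0, 0.0))
columns = place_instances(divide_segment(*bay_axis, count=5),
                          column_template(radius=0.25, height=3.5))
```

Changing a single upstream parameter, such as `count` or `radius`, regenerates every downstream instance, which is the property that makes generative definitions attractive for repetitive elements such as cloister colonnades.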

2.2. State-of-the-Art AI-Based Semantic Segmentation

In the digital heritage field, Machine Learning (ML) and Deep Learning (DL) techniques have emerged to support digital data interpretation and the semantic structuring and enrichment of a studied object [25], e.g., to assist the identification of architectural components [59], the re-assembly of dismantled parts [60], the recognition of hidden or damaged wall regions [61], and the mapping of spatial and temporal distributions of historical phenomena [62].

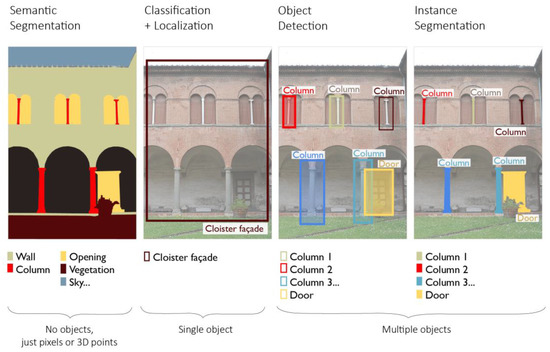

In the architectural heritage domain, AI techniques have proven crucial in streamlining the so-called semantic segmentation process, understood as the reasoned subdivision of a building into its architectural components (e.g., roof, wall, window, molding), starting from surveying data. Compared with other common computer vision tasks exploiting AI, such as object recognition, instance localization and segmentation, semantic segmentation assigns each pixel or point to a class label, and does so for all the objects in the 2D image or in the unstructured 3D scene (Figure 3). The term semantic, indeed, underlines that the breakdown refers to prior knowledge of the studied 2D/3D architectural scenes.

Figure 3. Semantic segmentation compared to other computer vision tasks using example from Cloister of the National Museum of San Matteo, Pisa.
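To make the distinction concrete, the sketch below produces the output format of semantic segmentation: one class label per point. The "classifier" is a deliberately naive height rule with a hypothetical label set and assumed thresholds, standing in for a trained ML/DL model.

```python
import numpy as np

CLASSES = ("floor", "column", "vault")  # hypothetical label set


def segment_by_height(points, floor_max=0.05, springing=3.0):
    """Assign one semantic label index to every point of an (N, 3) cloud.

    A naive rule-based stand-in for a trained classifier: points up to
    `floor_max` are 'floor', points above the assumed springing height
    of the vaults are 'vault', and the remaining points are 'column'.
    """
    z = np.asarray(points, dtype=float)[:, 2]
    labels = np.full(len(z), CLASSES.index("column"))
    labels[z <= floor_max] = CLASSES.index("floor")
    labels[z > springing] = CLASSES.index("vault")
    return labels


# Usage: points on two different columns receive the same label.
pts = np.array([[0.0, 0.0, 0.0],   # floor
                [1.0, 0.0, 1.5],   # first column
                [4.0, 0.0, 1.5],   # second column
                [2.0, 0.0, 3.8]])  # vault
names = [CLASSES[i] for i in segment_by_height(pts)]
```

Both column points receive the label "column": semantic segmentation does not tell one column from another, which is the task of instance-level segmentation.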

Though earlier experiments in digital heritage classification were geared towards the semantic segmentation of images [61,63,64], research is now moving towards segmenting textured polygonal meshes [27] and/or 3D point clouds [65]. In the architectural domain, classification via ML algorithms trained on a suitable amount of data focuses either on automatically recognizing the presence of alterations on historical buildings [66] or mapping materials (texture-based approaches) [27,67,68], or on distinguishing architectural components based on prior historical knowledge (geometry-based approaches) [26,69,70,71].

Depending on the approach chosen, the classification can act on two kinds of properties of the raw data: (a) geometric features, such as height, planarity, linearity and sphericity [72], which are better suited to recognizing architectural components from the shapes of the elements; or (b) colorimetric attributes, expressed in color spaces such as RGB, HSL or HSV [66], which are widely used to identify materials or decay patterns (e.g., biological patina or colonization, chromatic alterations, spots).
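Both kinds of properties can be computed per point. The sketch below derives eigenvalue-based (covariance) features from the covariance matrix of each point's neighbours within a radius, using the common definitions linearity = (l1 - l2)/l1, planarity = (l2 - l3)/l1 and sphericity = l3/l1 with eigenvalues l1 >= l2 >= l3, and converts 8-bit RGB colours to HSV with Python's standard `colorsys` module. The neighbourhood radius, the function names and the feature selection are assumptions; practical pipelines typically compute such features at several radii.

```python
import colorsys

import numpy as np
from scipy.spatial import cKDTree


def covariance_features(points, radius):
    """Per-point covariance features in a spherical neighbourhood.

    Returns an (N, 4) array with columns
    [height, linearity, planarity, sphericity]; points with fewer than
    three neighbours keep zero shape features.
    """
    points = np.asarray(points, dtype=float)
    tree = cKDTree(points)
    feats = np.zeros((len(points), 4))
    feats[:, 0] = points[:, 2]  # height (z coordinate)
    for i, nbrs in enumerate(tree.query_ball_point(points, r=radius)):
        if len(nbrs) < 3:  # too few neighbours for a meaningful covariance
            continue
        cov = np.cov(points[nbrs].T)
        # eigvalsh returns eigenvalues in ascending order: l3 <= l2 <= l1.
        l3, l2, l1 = np.clip(np.linalg.eigvalsh(cov), 0.0, None)
        if l1 <= 0.0:
            continue
        feats[i, 1:] = [(l1 - l2) / l1, (l2 - l3) / l1, l3 / l1]
    return feats


def hsv_features(rgb):
    """Convert 8-bit RGB colours (N, 3) to HSV values in [0, 1]."""
    return np.array([colorsys.rgb_to_hsv(*(c / 255.0)) for c in rgb])


# Usage: a straight edge (linear), a flat patch (planar) and a red colour.
line = np.column_stack([np.linspace(0.0, 1.0, 101), np.zeros(101), np.zeros(101)])
gx, gy = np.meshgrid(np.linspace(0.0, 1.0, 21), np.linspace(0.0, 1.0, 21))
plane = np.column_stack([gx.ravel(), gy.ravel(), np.zeros(gx.size)])
line_feats = covariance_features(line, radius=0.05)
plane_feats = covariance_features(plane, radius=0.12)
hsv = hsv_features(np.array([[255, 0, 0]]))
```

Points on the edge score high linearity, points inside the flat patch score high planarity, and the red colour maps to hue 0 with full saturation and value; such per-point vectors are what a geometry-based or texture-based classifier consumes.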

Geometry-based classification techniques, originally exploited for classifying urban scenes [17,72,73], are now applied at the scale of the individual building for the segmentation of walls, moldings, vaults, columns, roofs, etc. [70]. Grilli et al. [70] investigated the effectiveness of covariance features [72] in training a Random Forest (RF) classifier [74] for architectural heritage, also demonstrating a correlation between such features and the main dimensions of architectural elements.
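A minimal sketch of training an RF classifier on such per-point features, using scikit-learn's `RandomForestClassifier`. To keep it self-contained, the feature vectors are synthetic: the three class names and their assumed feature distributions are hypothetical and do not reproduce the data, features or settings of Grilli et al. [70].

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

FEATURES = ["height", "linearity", "planarity", "sphericity"]
rng = np.random.default_rng(0)


def synthetic_class(n, height, lin, pla, sph):
    """Draw n hypothetical feature vectors around assumed class means."""
    means = np.array([height, lin, pla, sph])
    spread = np.array([0.5, 0.05, 0.05, 0.02])
    return means + rng.normal(0.0, 1.0, (n, 4)) * spread


# Hypothetical classes: 0 = "wall", 1 = "molding", 2 = "vault".
X = np.vstack([synthetic_class(300, 1.5, 0.05, 0.90, 0.02),
               synthetic_class(300, 4.0, 0.70, 0.20, 0.05),
               synthetic_class(300, 6.0, 0.10, 0.50, 0.30)])
y = np.repeat([0, 1, 2], 300)

X_tr, X_te, y_tr, y_te = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y)
clf = RandomForestClassifier(n_estimators=100, random_state=0).fit(X_tr, y_tr)
accuracy = clf.score(X_te, y_te)
importances = dict(zip(FEATURES, clf.feature_importances_))
```

With well-separated synthetic classes the test accuracy is close to 1; on real annotated point clouds, results depend heavily on the training labels, the neighbourhood radii used for the features and the class balance. The `feature_importances_` attribute gives a rough indication of which features drive the classification.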

This entry is adapted from the peer-reviewed paper 10.3390/s23052497