Your browser does not fully support modern features. Please upgrade for a smoother experience.

Please note this is an old version of this entry, which may differ significantly from the current revision.

In the field of UAV-based object tracking, the use of infrared mode can improve the robustness of the tracker in the scene with severe illumination changes, occlusion and expand the applicable scenarios of UAV-based object tracking tasks. Inspired by the great achievements of Transformer architecture in the field of RGB object tracking, a dual-mode object tracking network based on Transformer can be designed.

- RGBT tracking

- Drone based object tracking

- transformer

- feature aggregation

1. 引言

Object tracking is one of the fundamental tasks in computer vision and has been widely used in robot vision, video analysis, autonomous driving and other fields [1]. Among them, the drone scene is an important application scenario for object tracking which assist drones in playing a crucial role in urban governance, forest fire protection, traffic management, and other fields. Given the initial position of a target, object tracking is to capture the target in subsequent video frames. Thanks to the availability of large datasets of visible images [2], visible-based object tracking algorithms have made significant progress and achieved state-of-the-art results in recent years. Currently, due to the diversification of drone missions, visible object tracking is unable to meet the diverse needs of drones in various application scenarios [3]. Due to the limitations of visible imaging mechanisms, object tracking heavily relies on optimal optical conditions. However, in realistic drone scenarios, UAVs are required to perform object tracking tasks in dark and foggy environments. In such situations, visible imaging conditions are inadequate, resulting in significantly noisy images. Consequently, object tracking algorithms based on visible imaging fail to function properly.

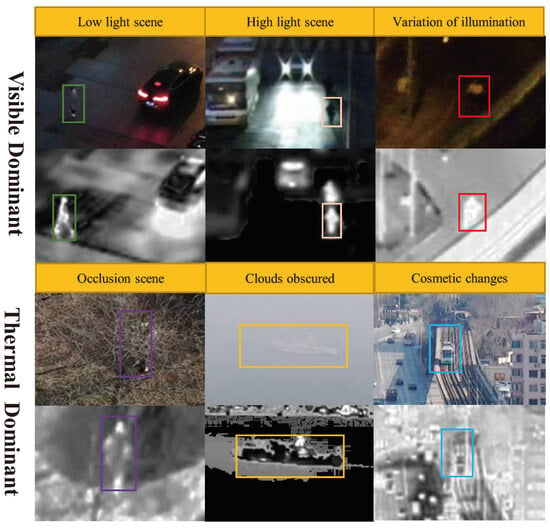

Infrared images are produced by measuring the heat emitted by objects. Compared with visible images, infrared images have relatively poor visual effects and complementary target location information [4,5]. In addition, infrared images are not sensitive to changes in scene brightness, and thus maintain good imaging results even in poor lightning environments. However, the imaging quality of infrared images is poor and the spatial resolution and grayscale dynamic range are limited, resulting in a lack of details and texture information in the images. In contrast, visible images are very rich in details and texture features. In summary, visible and infrared object tracking has received increasing attention as it can meet the mission requirements of drones in various scenarios, due to the complementary advantages of infrared and visible images (Figure 1).

Figure 1. These are some visible-infrared image pairs captured by drones. In some scenarios, visible images may be difficult to distinguish different objects, while infrared images can continue to work in these scenarios. Therefore, information from visible and infrared modalities can complement each other in these scenarios. Introducing information from the infrared modality is very beneficial for achieving comprehensive object tracking in drone missions.

Currently, two main kinds of methods in visual object tracking are deep learning (DL)-based methods and correlation filter (CF)-based approaches [1]. The methods based on correlation filtering utilize Fast Fourier Transform (FFT) to perform correlation operation in the frequency domain, which have a very fast processing speed and run in real-time. However, their accuracy and robustness are poor. The methods based on neural network mainly utilize the powerful feature extraction ability of neural network. Their accuracy is better than that of correlation filtering based methods while their speed is slower. With the proposal of Siamese networks [6,7], the speed of neural network-based tracking methods has been greatly improved. In recent years, the neural network-based algorithm has become the mainstream method for object tracking.

2. Related Works

2.1 RGBT Tracking Algorithms

Many RGBT trackers have been proposed so far [12–15]. Due to the rapid development of RGB trackers, current RGBT trackers mainly consider the problem of dual-modal information fusion within mature trackers finetuned on the RGBT tracking task, where the key is to fuse visible and infrared image information. Several fusion methods are proposed, which are categorized as image fusion, feature fusion and decision fusion. For image fusion, the mainstream approach is to fusion image pixels based on weights [16,17], but the main information extracted from image fusion is the homogeneous information of the image pairs, and the ability to extract heterogeneous information from infrared-visible image pairs is not strong. At the same time, image fusion has certain requirements for registration between image pairs, which can lead to cumulative errors and affect tracking performance. Most trackers aggregate the representation by fusing features [18,19]. Feature fusion is a

more advanced semantic fusion compared with image fusion. There are many ways to fuse features, but the most common way is to aggregate features using weighting. Feature fusion has the potential of high flexibility and can be trained with massive unpaired data, which is well-designed to achieve significant promotion. Decision fusion models each modality independently and the scores are fused to obtain the final candidate. Compared with image fusion and feature fusion, decision fusion is the fusion method on a higher level, which uses all the information from visible and infrared images. However, it is difficult to determine the decision criteria. Luo et al. [12] utilize independent frameworks to track in RGB-T data and then the results are combined by adaptive weighting. Decision fusion avoids the heterogeneity of different modalities and is not sensitive to modality registration. Finally, these fusion methods can also be used complementarily. For example, Zhang [11] used image fusion, feature fusion and decision fusion simultaneously for information fusion and achieved good results in multiple tests.

more advanced semantic fusion compared with image fusion. There are many ways to fuse features, but the most common way is to aggregate features using weighting. Feature fusion has the potential of high flexibility and can be trained with massive unpaired data, which is well-designed to achieve significant promotion. Decision fusion models each modality independently and the scores are fused to obtain the final candidate. Compared with image fusion and feature fusion, decision fusion is the fusion method on a higher level, which uses all the information from visible and infrared images. However, it is difficult to determine the decision criteria. Luo et al. [12] utilize independent frameworks to track in RGB-T data and then the results are combined by adaptive weighting. Decision fusion avoids the heterogeneity of different modalities and is not sensitive to modality registration. Finally, these fusion methods can also be used complementarily. For example, Zhang [11] used image fusion, feature fusion and decision fusion simultaneously for information fusion and achieved good results in multiple tests.

2.2Transformer

Transformer originates from natural language processing (NLP) for machine translation and has been introduced to vision recently with great potential [8]. Inspired by

the success in other fields, researchers have leveraged Transformer for tracking. Briefly, Transformer is an architecture for transforming one sequence into another one with the help of attention-based encoders and decoders. The attention mechanism can determine which parts of the sequence are important, breaking through the receptive field limitation of traditional CNN networks and capturing global information from the input sequence.

However, the attention mechanism requires more training data to establish global relationships. Therefore, Transformer will have a lower effect than traditional CNN networks in some tasks with smaller sample size and more emphasis on regional relationships [20]. Additionally, the attention mechanism is able to replace correlation filtering operations in the Siamese network by finding the most relevant region to the template in the search area in a global scope. The method of [9] applies Transformer to enhance and fuse features in the Siamese tracking for performance improvement.

the success in other fields, researchers have leveraged Transformer for tracking. Briefly, Transformer is an architecture for transforming one sequence into another one with the help of attention-based encoders and decoders. The attention mechanism can determine which parts of the sequence are important, breaking through the receptive field limitation of traditional CNN networks and capturing global information from the input sequence.

However, the attention mechanism requires more training data to establish global relationships. Therefore, Transformer will have a lower effect than traditional CNN networks in some tasks with smaller sample size and more emphasis on regional relationships [20]. Additionally, the attention mechanism is able to replace correlation filtering operations in the Siamese network by finding the most relevant region to the template in the search area in a global scope. The method of [9] applies Transformer to enhance and fuse features in the Siamese tracking for performance improvement.

2.3. UAV RGB-Infrared Tracking

Currently, there are few visible-light-infrared object tracking algorithms available for drones, mainly due to two reasons. Firstly, there is a lack of training data for visible -infrared images of drones. Previously, models were trained using infrared images generated from visible images due to the difficulty in obtaining infrared images. With the emergence of datasets such as LasHeR [21], it is now possible to directly use visible and infrared images for training. In addition, there are also datasets such as GTOT [22], RGBT210 [23], RGBT234 [24], etc. available for evaluating RGBT tracking algorithm performance. However, in the field of RGBT object tracking for drones, only the VTUAV [11] dataset is available. Due to the different imaging perspectives of images captured by drones compared to normal images, training algorithms with other datasets does not yield good results. Secondly, existing algorithms have slow running speeds, making them difficult to use directly. Existing mainstream RGBT object tracking algorithms are based on deep learning, which have to deal with both visible and infrared images at the same time, with a large amount of data, a complex algorithmic structure and a low processing speed, such as JMMAC (4fps) [25], FANet (2fps) [18], MANnet (2fps) [26]. In drone scenarios, there is a high demand for speed in RGBT object tracking algorithms for drones. It is necessary to simplify the algorithm structure and improve its speed.

This entry is adapted from the peer-reviewed paper 10.3390/drones7090585

This entry is offline, you can click here to edit this entry!