
Please note this is a comparison between Version 1 by Md Tanzil Shahria and Version 2 by Sirius Huang.

Robotic manipulation is an emerging technology that has advanced considerably through developments ranging from sensors to artificial intelligence. Over the decades, it has gained versatility and flexibility, notably on mobile robot platforms, so robots are now capable of interacting with the world around them. To interact with the real world, robots require various sensory inputs from their surroundings, and the use of vision is increasing rapidly because vision is a rich source of information for a robotic system.

- computer vision

- robot manipulation

- sensors

- vision-based control

1. Introduction

Robotic manipulation refers to the manner in which robots directly and indirectly interact with surrounding objects. Such interaction includes picking and grasping objects [1,2,3], moving objects from place to place [4,5], folding laundry [6], packing boxes [7], and operating according to user requirements. Object manipulation is considered a pivotal part of robotics. Over time, robot manipulation has undergone considerable changes that have driven technological development in both industry and academia.

Manual robot manipulation was one of the initial steps of automation [8,9]. A manual robot refers to a manipulation system that requires continuous human involvement to operate [10]. In the beginning, researchers explored spatial algebra [11], forward kinematics [12,13,14], differential kinematics [15,16,17], inverse kinematics [18,19,20,21,22], etc. for pick-and-place tasks, which are not the only application of robotic manipulation systems but a stepping-stone to a wide range of possibilities [23]. The capability of gripping, holding, and manipulating objects requires dexterity, perception of touch, and a coordinated response from eyes and muscles; mimicking all these attributes is a complex and tedious task [24]. Thus, researchers have explored a wide range of algorithms to adopt and design more efficient and appropriate models for this task. Over time, manual manipulators advanced and acquired individual control systems according to their specification and application [25,26].
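As a concrete illustration of the forward and inverse kinematics mentioned above, the following is a minimal sketch for a planar two-link arm. The arm geometry, the link lengths `l1` and `l2`, and the function names are assumptions chosen for illustration; they are not taken from any of the cited works, and real manipulators have more joints and require more general methods.

```python
import math


def forward_kinematics(theta1, theta2, l1=1.0, l2=1.0):
    """Return the (x, y) end-effector position of a planar two-link arm.

    theta1 is the shoulder angle and theta2 the elbow angle (radians);
    l1 and l2 are hypothetical link lengths.
    """
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def inverse_kinematics(x, y, l1=1.0, l2=1.0):
    """Return one (theta1, theta2) pair that places the end-effector at (x, y).

    Only the solution with a non-negative elbow angle is returned.
    Raises ValueError when the target lies outside the reachable workspace.
    """
    cos_t2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_t2) > 1.0 + 1e-12:
        raise ValueError("target is out of reach")
    cos_t2 = max(-1.0, min(1.0, cos_t2))  # guard against rounding error
    theta2 = math.acos(cos_t2)
    theta1 = math.atan2(y, x) - math.atan2(
        l2 * math.sin(theta2), l1 + l2 * math.cos(theta2)
    )
    return theta1, theta2


if __name__ == "__main__":
    # Pick-and-place style usage: solve for joint angles, then verify them.
    angles = inverse_kinematics(1.2, 0.5)
    print("joint angles:", angles)
    print("reached position:", forward_kinematics(*angles))
```

Feeding the angles returned by `inverse_kinematics` back into `forward_kinematics` should reproduce the requested position, which is a quick way to check a kinematic model before using it in a pick-and-place routine.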

Beyond individual use, robotic manipulation systems now have a wide range of industrial applications, as they can be applied to complex and diverse tasks [27]. Hence, typical manipulative devices have become less suitable [28]. New technologies, such as wireless communication and augmented reality [29], are being adopted and applied in manipulation systems to find the most suitable and friendly human–robot collaboration model for specific tasks [30]. To make the process more efficient and productive and to achieve successful execution, researchers have introduced automation in this field [31].

To move toward automated systems, researchers first introduced automation in motion planning [3,32], which eventually contributed to automated robotic manipulation systems. Automated and semi-automated manipulation systems not only boost the performance of industrial robots but also contribute to other fields of robotics such as mobile robots [33], assistive robots [34], and swarm robots [35]. In the design of automated systems, the use of vision is increasing rapidly, as vision is undoubtedly a rich source of information [36,37,38]. By properly utilizing vision-based data, a robot can identify, map, localize, and calculate various measurements of any object and respond accordingly to complete its tasks [39,40,41,42]. Various studies confirm that vision-based approaches are well suited to different fields of robotics such as swarm robotics [35], fruit-picking robots [1], robotic grasping [43], mobile robots [33,44,45], aerial robotics [46], and surgical robots [47]. Researchers are introducing different approaches to process vision-based data. Learning-based approaches are at the center of such autonomous approaches because the real world presents too many variations to anticipate, and learning algorithms help the robot gain knowledge from its experience with the environment [48,49,50]. Among learning methods, neural network-based models [51,52,53,54], deep learning-based models [49,50,54,55,56], and transfer learning models [57,58,59,60] are the most widely used by experts in manipulation systems, while filter-based approaches are also popular among researchers [61,62,63].
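One simple way a robot can localize an object and estimate its size from vision data is back-projection through a pinhole camera model. The sketch below assumes a calibrated camera that also provides a depth value for each pixel; the function names and the intrinsic parameters (`fx`, `fy`, `cx`, `cy`) are illustrative assumptions, not values from any cited system.

```python
def pixel_to_camera_point(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) with known depth into camera coordinates.

    fx, fy are focal lengths in pixels and (cx, cy) is the principal point;
    all four come from camera calibration (assumed to be available).
    Returns (x, y, z) in the same unit as `depth`.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def pixel_length_to_metres(pixel_length, depth, focal_length):
    """Approximate the metric size of an image-plane length at a given depth.

    Valid only when the measured edge is roughly parallel to the image plane.
    """
    return pixel_length * depth / focal_length


if __name__ == "__main__":
    # Hypothetical intrinsics for a 640x480 camera.
    fx = fy = 500.0
    cx, cy = 320.0, 240.0

    # An object detected at pixel (420, 240), 2 m in front of the camera.
    point = pixel_to_camera_point(420, 240, 2.0, fx, fy, cx, cy)
    print("object position in camera frame:", point)

    # A bounding box 50 px wide at the same depth.
    print("approximate width (m):", pixel_length_to_metres(50, 2.0, fx))
```

In practice the resulting camera-frame point would still have to be transformed into the robot base frame through a hand–eye calibration, which is outside the scope of this sketch.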

2. Current State

A common structural assumption for robotic manipulation tasks is that the robot is trying to manipulate an object or set of objects in the environment. Because of this, generalization via objects, both across different objects and between similar (or identical) objects in different task instances, is an important aspect of learning to manipulate. Commonly used object-centric manipulation skills and task model representations are often sufficient to generalize across tasks and objects, but adapting to differences in shape, properties, and appearance is still required. A wide range of robotic manipulation problems can be solved using a vision-based approach, as vision serves as a rich sensory source for the system. Because of that and the availability of fast processing power, vision-based approaches have become very popular among researchers working on robotic manipulation problems. A chronological overview of the researchers' contributions, organized by the problems addressed and their outcomes, is compiled in Table 1.

Table 1. Chronological progression of the vision-based approach.

| Year | Addressed Problems | Contributions | Outcomes |

|---|---|---|---|

| 2016 [64,65,66] | Manipulation [64] and grasping control strategies [66] using eye-tracking and sensory-motor fusion [65]. | Object detection, path planning, and navigation [64]; Control of an endoscopic manipulator [65]; Sensory-motor fusion-based manipulation and grasping control strategy for a robotic hand–eye system [66]. | The proposed approach has improved performance in calibration, task completion, and navigation [64]; Shows better performance than endoscope manipulation by an assistant [65]; Demonstrates responsiveness and flexibility [66]. |

| 2017 [67,68,69,70,71,72,73] | Following human user with robotic blimp [70]; Deformable object manipulation [67]; Tracking and navigation for aerial vehicles [68,73]; Object detection without GPU support [69]; Automated object recognition for assistive robots [71]; Path-finding for humanoid robot [72]. | Robotic rope manipulation using vision-based learning model [67]; Robust vision-based tracking system for a UAV [68]; Real-time robotic object detection and recognition model [69]; Behavioral stability in humanoid robots and path-finding algorithms [72]; Robust real-time navigation [70] and long-range object tracking system [70,73]. | Robot successfully manipulates a rope [67]; System achieves robust tracking in real-time [68] and proved to be efficient in object detection [69]; Robotic blimp can follow humans [70]; System was able to detect and recognize objects [71]; Algorithm successfully finds a path to guide the robot [72]; System arrived at an operational stage for lighting and weather conditions [73]. |

| 2018 [74,75,76,77,78,79,80,81] | Real-time mobile robot controller [74]; Target detection for safe UAV landing [75]; Vision-based grasping [76], object sorting [79], and dynamic manipulation [77]; Multi-task learning [78]; Learn complex skills from raw sensory inputs [80]; Autonomous landing of a quadrotor on moving targets [81]. | Sensor-independent controller for real-time mobile robots [74]; Detection and landing system for drones [75]; GDRL-based grasping benchmark [76]; Effective robotic framework for extensible RL [77]; Complete controller for generating robot arm trajectories [78]; Successfully inaugurate a camera-robot system [79]; Successful framework to learn a deep dynamics model on images [80]; Autonomous NN-based landing controller of UAVs on moving targets in search and secure applications [81]. | The mobile robot reaches its goal [74]; The system finds targets and lands safely [75]; System grasps better than other algorithms [76]; Real-world reinforcement learning can handle large datasets and models [77]; Method is a versatile manipulator that can accurately correct errors [78]; Placement of objects by the robot gripper [79]; Generalization to a wide range of tasks [80]; Successful autonomous quadrotor landing on fixed and moving platforms [81]. |

| 2019 [82,83,84,85,86,87,88,89] | Nonlinear approximation for mobile robots [82]; Control of cable-driven robots [83]; Leader–follower formation control [84]; Motion control for a free-floating robot [85]; Control of soft robots [86]; Approach an object when obstacles are present [87]; Needle-based percutaneous procedures using robotic technologies [88]; Natural interaction control of surgical robots [89]. | Effective recurrent neural network-based controller for robots [82]; Robust method for analyzing the stability of the cable-driven robots [83]; Effective formation control for a multi-agent system [84]; Efficient vision-based system for a free-floating robot [85]; Stable framework for soft robots [86]; Useful system to increase the autonomy of people with upper-body disabilities [87]; Accurate system to identify the needle position and orientation [88]; Smooth model to use eye movements to control a robot [89]. | System outperforms existing ones [82]; Vision-based control is a good alternative to model-based control [83]; Control protocol completes formation tasks with visibility constraints [84]; Method eliminates false targets and improves positioning precision [85]; System maintained an acceptable accuracy and stability [86]; A person successfully controlled the robotic arm using the system [87]; Framework shows the proposed robotic hardware’s efficiency [88]; Movement was feasible and convenient [89]. |

| 2020 [90,91,92,93,94,95] | Grasping under occlusion [90]; Recognition and manipulation of objects [91]; Controllers for decentralized robot swarms [92]; Robot manipulation via human demonstration [93]; Robot manipulator using iris tracking [94]; Object tracking of visual servoing [95]. | Robust grasping method for a robotic system [90]; Effective stereo algorithm for manipulation of objects [91]; Successful framework to control decentralized robot swarms [92]; Generalized framework for activity recognition from human demonstrations [93]; Real-time iris tracking method for the ophthalmic robotic system [94]; Successful method for conventional template matching [95]. | Method’s effectiveness validated through experiments [90]; R-CNN method is very stable [91]; Architecture shows promising performance for large-sized swarms [92]; Proposed approach achieves good generalized performance [93]; Tracker is suitable for the ophthalmic robotic system [94]; Control system demonstrates significant improvement to feature tracking and robot motion [95]. |

| 2021 [96,97,98,99,100,101,102] | Human–robot handover applications [96]; Imitation learning for robotic manipulation [97]; Reaching and grasping objects using a robotic arm [98]; Integration of libraries for real-time computer vision [99]; Mobility and key challenges for various construction applications [100]; Obtaining the spatial information of operated target [101]; Training actor–critic methods in RL [102]. | Efficient human–robot hand-over control strategy [96]; Intelligent vision-guided imitation learning framework for robotic exactitude manipulation [97]; Robotic hand–eye coordination system to achieve robust reaching ability [98]; Upgraded vision of a real-time computer vision system [99]; Mobile robotic system for object manipulation using autonomous navigation and object grasping [100]; Calibration-free monocular vision-based robot manipulation [101]; Attention-driven robot manipulation for discretization of the translation space [102]. | Control shows promising and effective results [96]; Object can reach the goal positions smoothly and intelligently using the framework [97]; Dual neural-network-based controller leads to higher success rate and better control performance [98]; Successfully implemented and tested on the latest technologies [99]; UGV autonomously navigates toward a selected location [100]; Performance of the method has been successfully evaluated [101]; Algorithm achieves state-of-the-art performance on several difficult robotics tasks [102]. |

| 2022 [103,104,105,106,107,108,109] | Micro-manipulation on cells [103]; Collision-free navigation [104]; Highly nonlinear continuum manipulation [105]; Complexity of RL in a broad range of robotic manipulation tasks [106]; Uncertainty in DNN-based prediction for robotic grasping [107]; Path planning for a robotic arm in a 3D workspace [108]; Object tracking and control of a robotic arm in real-time [109]. | Path planning for magnetic micro-robots [103]; Neural radiance fields (NeRFs) for navigation in 3D environment [104]; Aerial continuum manipulation systems (ACMSs) [105]; Attention-driven robotic manipulation [106]; Robotic grabbing in distorted RGB-D data [107]; Real-time path generation with lower computational cost [108]; Real-time object tracking with reduced stress load and a high rate of success [109]. | Magnetic micro-robots performed accurately in complex environment [103]; NeRFs outperform the dynamically informed INeRF baseline [104]; Simulation demonstrates good results [105]; ARM was successful on a range of RLBench tasks [106]; System performs better than end-to-end networks in difficult conditions [107]; System significantly eased the limitations of prior research [108]; System effectively locates the robotic arm in the desired location with very high accuracy [109]. |

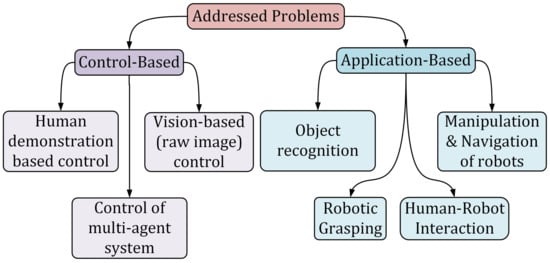

Figure 12.

Categorization of problems addressed by the researchers.

3. Applications

Vision-based autonomous robot manipulation for various applications has received a lot of attention in the recent decade. Vision-based manipulation occurs when a robot manipulates an item using computer vision, with feedback from the data of one or more camera sensors. Advances in computer vision and artificial intelligence have increased the complexity of the jobs that fully autonomous robots can perform. Research in the computer vision field is very active, and it may be able to provide more natural, non-contact solutions in the future. Human intelligence is also required for robot decision-making and control in situations in which the environment is mainly unstructured, the objects are unfamiliar, and the motions are unknown. A human–robot interface is fundamental to teleoperation solutions because it serves as a link between the human intellect and the actual motions of the remote robot. The current approach to robot manipulator teleoperation, which makes use of vision-based tracking, allows tasks to be communicated to the robot manipulator in a natural way, often using the same hand gestures that would ordinarily be used for the task. Direct position control of the robot end-effector in vision-based robot manipulation allows for greater precision. Well-known applications of vision-based robot manipulation include manipulation of deformable objects, autonomous vision-based tracking systems, tracking of moving objects of interest, vision-based real-time robot control, vision-based target detection and object recognition, leader–follower formation control of multi-agent systems using a vision-based tracking scheme, and vision-based grasping of target objects for manipulation.
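The idea of manipulation driven by camera feedback with direct position control of the end-effector can be sketched as a simple closed loop: observe the target, compute the position error, and move a fraction of the way toward it. This is a minimal, simulated sketch and not the method of any cited study; `observe_target` is a hypothetical stand-in for a camera-based detector, and the proportional `gain`, tolerance, and step limit are arbitrary assumptions.

```python
import math


def servo_step(effector, target, gain=0.5):
    """Move the end-effector a fraction `gain` of the way toward the target."""
    return tuple(e + gain * (t - e) for e, t in zip(effector, target))


def servo_to_target(effector, observe_target, gain=0.5, tol=1e-3, max_steps=100):
    """Run a proportional position-control loop driven by visual observations.

    observe_target is a callable returning the current target position;
    here it stands in for a vision pipeline (an assumption of this sketch).
    Returns the final end-effector position and the number of motion steps.
    """
    for step in range(max_steps):
        target = observe_target()  # fresh measurement on every iteration
        error = math.dist(effector, target)
        if error < tol:
            return effector, step
        effector = servo_step(effector, target, gain)
    return effector, max_steps


if __name__ == "__main__":
    # Simulated detector: the object is static at a fixed camera-frame position.
    def fake_detector():
        return (0.4, 0.0, 2.0)

    final_position, steps = servo_to_target((0.0, 0.0, 0.0), fake_detector)
    print("final position:", final_position, "after", steps, "steps")
```

Because the target is re-observed on every iteration, the same loop would also follow a moving object, provided the object moves slowly relative to the control rate.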
Researchers have classified the applications of vision-based works into six categories: manipulation of the object, vision-based tracking, object detection, pathfinding/navigation, real-time remote control, and robotic arm/grasping. A summary of recent vision-based applications is given in Table 2.

Table 2. Application of vision-based works.

| Study | Manipulation of Object | Vision-Based Tracking | Object Detection | Path Finding/ Navigation | Real-Time Remote Control | Robotic Arm/ Grasping |

|---|---|---|---|---|---|---|

| [67] | ✓ | |||||

| [68] | ✓ | |||||

| [69] | ✓ | |||||

| [70] | ✓ | ✓ | ||||

| [71] | ✓ | |||||

| [72] | ✓ | ✓ | ||||

| [73] | ✓ | ✓ | ||||

| [74] | ✓ | ✓ | ||||

| [75] | ✓ | ✓ | ||||

| [76] | ✓ | |||||

| [77] | ✓ | ✓ | ✓ | |||

| [78] | ✓ | ✓ | ✓ | ✓ | ||

| [79] | ✓ | ✓ | ✓ | ✓ | ||

| [80] | ✓ | ✓ | ||||

| [81] | ✓ | ✓ | ||||

| [82] | ✓ | ✓ | ||||

| [83] | ✓ | ✓ | ||||

| [84] | ✓ | ✓ | ||||

| [85] | ✓ | ✓ | ||||

| [86] | ✓ | ✓ | ✓ | |||

| [90] | ✓ | ✓ | ||||

| [91] | ✓ | ✓ | ||||

| [92] | ✓ | ✓ | ✓ | |||

| [93] | ✓ | ✓ | ||||

| [96] | ✓ | ✓ | ||||

| [97] | ✓ | ✓ | ||||

| [64] | ✓ | ✓ | ||||

| [94] | ✓ | ✓ | ||||

| [65] | ✓ | ✓ | ||||

| [87] | ✓ | ✓ | ✓ | ✓ | ||

| [95] | ✓ | ✓ | ||||

| [88] | ✓ | ✓ | ||||

| [89] | ✓ | ✓ | ✓ | ✓ | ||

| [66] | ✓ | ✓ | ||||

| [98] | ✓ | ✓ | ✓ | |||

| [99] | ✓ | |||||

| [100] | ✓ | ✓ | ||||

| [101] | ✓ | ✓ | ||||

| [102] | ✓ | |||||

| [103] | ✓ | ✓ | ||||

| [104] | ✓ | |||||

| [105] | ✓ | |||||

| [106] | ✓ | |||||

| [107] | ✓ | |||||

| [108] | ✓ | |||||

| [109] | ✓ | ✓ |