
Please note this is a comparison between Version 2 by Conner Chen and Version 4 by Conner Chen.

Holographic-type communication (HTC) permits new levels of engagement between remote users. It is expected to provide a highly immersive experience while enhancing the sense of spatial co-presence. Alongside these advantages, however, HTC imposes stringent system requirements, such as multi-sensory and multi-dimensional data capture and reproduction, ultra-lightweight processing, ultra-low-latency transmission, realistic avatar embodiment conveying gestures and facial expressions, and support for an arbitrary number of participants.

- HTC

- HTC implementation challenges

- HTC system

1. Overview of a Basic HTC System

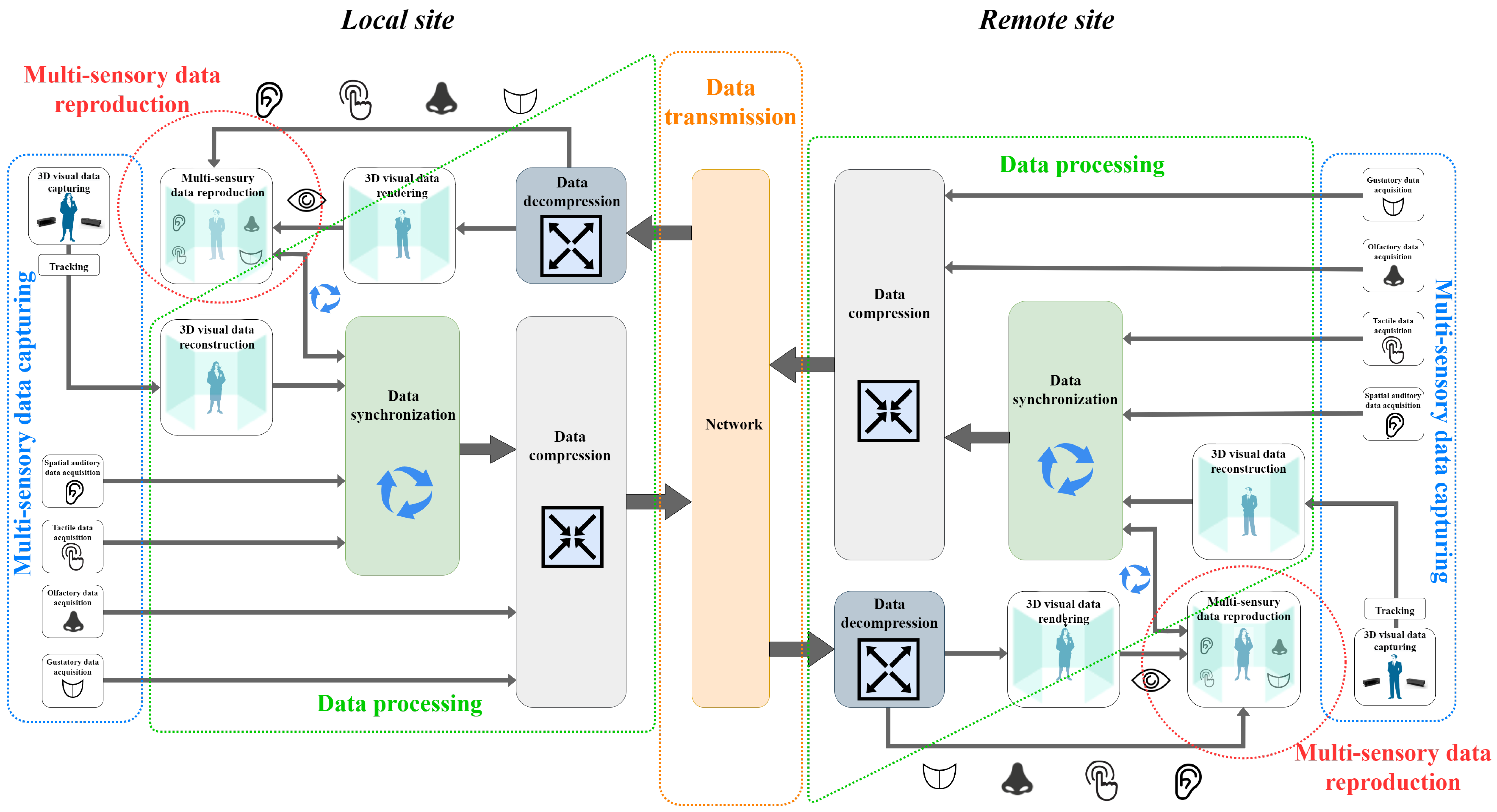

In general, implementing a low-latency holographic-type communication (HTC) system requires a few main operations to be performed at both the local and the remote user sites: multi-sensory data capture and reproduction, data processing, and data transmission. A block diagram of a basic HTC system is shown in Figure 1. When a user acts as a transmitter in the forward direction of the holographic communication pipeline, he/she (and possibly his/her local space) must be faithfully captured, processed, and transmitted to the remote interlocutor. The capturing step involves spatially aware data acquisition for all five human senses, i.e., visual, auditory, tactile, olfactory, and gustatory, performed by the three-dimensional (3D) data capturing, spatial auditory data acquisition, tactile data acquisition, olfactory data acquisition, and gustatory data acquisition blocks, respectively. If multiple visual data sources are used, they must be synchronized beforehand. The tracking block also takes part in the capturing step, dynamically tracking the user’s gestures and movements. The acquired data then undergo various processing operations, such as 3D visual data reconstruction, multi-source stream synchronization, and data compression. The 3D data reconstruction block performs multi-view data alignment, noise filtering, hole filling, mesh fitting, object detection and extraction, etc. The data synchronization block handles both the synchronization between the streams coming from the local site and the synchronization between the local and remote signals. The data compression block performs the data compression. Finally, the heterogeneous data are transmitted through a network channel, represented by the network block.
When the same user acts as a receiver in the backward direction of the holographic communication pipeline, he/she needs to receive, process, and reproduce the data obtained from the remote interlocutor. First, the received data are decompressed by the data decompression block. Then, their visual part is spatially rendered by the 3D data rendering block and, together with the remaining multi-sensory information, synchronously reproduced for the local user via the multi-sensory data reproduction block. Note that the forward and backward directions operate simultaneously.

Figure 1. HTC system overview.
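
The block diagram in Figure 1 can be read as two chains of stages, one per direction. The Python sketch below models each block as a function applied in order to a frame record; the stage names mirror the blocks described above, while the `Frame` type, `make_stage`, and `run` are hypothetical helpers whose placeholder stages only record that they ran and perform no real processing.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Frame:
    """Hypothetical container for one time slice of multi-sensory data."""
    timestamp_ms: int
    applied_stages: List[str] = field(default_factory=list)


Stage = Callable[[Frame], Frame]


def make_stage(name: str) -> Stage:
    """Return a placeholder stage that only records that it was applied."""
    def stage(frame: Frame) -> Frame:
        frame.applied_stages.append(name)
        return frame
    return stage


# Forward direction (transmitter side), following the blocks of Figure 1.
FORWARD: List[Stage] = [make_stage(name) for name in (
    "capture_and_tracking", "3d_reconstruction", "synchronization",
    "compression", "network_transmission")]

# Backward direction (receiver side).
BACKWARD: List[Stage] = [make_stage(name) for name in (
    "decompression", "3d_rendering", "multi_sensory_reproduction")]


def run(pipeline: List[Stage], frame: Frame) -> Frame:
    """Apply each stage of a pipeline to the frame in order."""
    for stage in pipeline:
        frame = stage(frame)
    return frame


if __name__ == "__main__":
    outgoing = run(FORWARD, Frame(timestamp_ms=0))
    incoming = run(BACKWARD, Frame(timestamp_ms=0))
    print(outgoing.applied_stages)
    print(incoming.applied_stages)
```

Because the two directions operate simultaneously, a real system would run them concurrently (for example, in separate threads or processes) rather than one after the other as in this toy driver.
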

One typical application of such an HTC system is teleconsultation, where a local expert is assisted by a remote specialist during the completion of a specific task. Teleconsultation can be useful in various fields, such as medicine, architecture, education, and design. For this purpose, the remote specialist has to be immersed in the local expert’s environment in real time. Therefore, the local expert and his/her surroundings must be captured, reconstructed, and transmitted with low latency to the remote specialist, who can then faithfully perceive the captured space and immediately give instructions for completing the task. At the same time, the specialist can also be captured, reconstructed, and transmitted to the local expert, making both feel as if they were present in the same space. Spatially aware communication between the participants and the possibility of conveying non-verbal cues such as gaze, gestures, and facial expressions permit new levels of remote user engagement. The interaction becomes much more realistic, comparable to a real face-to-face scenario, and enables faster and more intuitive collaboration.

2. Main Technological Challenges in Implementing HTC

2.1. I/O Technologies

Visual perception is usually considered the most important of the human senses; therefore, input/output (I/O) technology efforts are mainly concentrated on 3D visual content capture and visualization. However, even though volumetric data are much more informative than two-dimensional (2D) content, they are also more challenging to obtain, especially in the context of HTC, for several reasons. First, data capture must be performed in real time. This excludes the use of highly accurate laser scanners or photogrammetric 3D reconstruction from multiple 2D images. Consequently, the second main challenge is the accuracy of the acquired data. The real-time requirement forces developers to rely on less accurate but real-time-capable devices, such as structured light-based sensors (PrimeSense cameras, Kinect for Xbox 360 sensor), Time-of-Flight (ToF) cameras (Microsoft Kinect v2 and Kinect Azure [1][2][3]), or stereo cameras (Intel RealSense D series cameras [4]). A comparison of the three methods for 3D data acquisition is given in [5]. Currently, because of their disadvantages, structured light sensors are rarely used compared with the other two types of technologies. However, ToF and stereo cameras also suffer from low capture precision, low resolution, distance range limitations (at both long and short range), and, most importantly, noise. Another key limitation is the narrow field of view (FOV). As an example, the Kinect Azure reaches 120° × 120°, which is still much less than what human eyes can perceive (200–220° × 130–135°). The narrow FOV, as well as the fact that an object cannot be captured entirely from a single viewpoint, necessitates a multi-camera setup. This requires the deployment of multiple camera sensors that must be precisely calibrated.
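
Calibration yields, for every sensor, an extrinsic rotation R and translation t that map its local coordinates into a shared world frame; fusing the views then amounts to applying p_world = R p + t to every point and concatenating the results. The pure-Python sketch below shows only this fusion step, assuming the extrinsics are already known; the helper names and the example extrinsics are hypothetical, and a real pipeline would additionally refine the alignment (e.g., with iterative closest point, ICP) and filter noise.

```python
import math
from typing import Iterable, List, Sequence, Tuple

Point = Tuple[float, float, float]
Matrix3 = Sequence[Sequence[float]]


def transform(points: Iterable[Point], rotation: Matrix3, translation: Point) -> List[Point]:
    """Map sensor-local points into the shared world frame: p_world = R p + t."""
    world = []
    for p in points:
        world.append(tuple(
            sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
            for i in range(3)))
    return world


def rotation_about_y(angle_deg: float) -> List[List[float]]:
    """Rotation matrix about the vertical (y) axis, e.g., for sensors placed around a subject."""
    a = math.radians(angle_deg)
    return [[math.cos(a), 0.0, math.sin(a)],
            [0.0, 1.0, 0.0],
            [-math.sin(a), 0.0, math.cos(a)]]


def fuse(views: Iterable[Tuple[List[Point], Matrix3, Point]]) -> List[Point]:
    """Concatenate several (points, R, t) views into a single world-frame point cloud."""
    fused: List[Point] = []
    for points, rotation, translation in views:
        fused.extend(transform(points, rotation, translation))
    return fused


if __name__ == "__main__":
    # Hypothetical extrinsics: sensor A defines the world frame; sensor B is rotated
    # by 90 degrees about y and shifted 2 m along x.
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    view_a = ([(0.0, 1.0, 1.5)], identity, (0.0, 0.0, 0.0))
    view_b = ([(0.0, 1.0, 1.0)], rotation_about_y(90.0), (2.0, 0.0, 0.0))
    print(fuse([view_a, view_b]))
```

Any error in R or t shifts a whole view relative to the others, which is why imperfect calibration shows up as visible misalignment in the fused cloud.
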
However, although camera calibration is a well-studied topic, it typically requires calibration markers, can introduce alignment errors, and lowers reconstruction precision. Installing multiple cameras in a customer scenario is also a hurdle because of the significant increase in cost. Using 360° cameras (e.g., Ricoh Theta, Samsung 360 Round, Insta360 Pro) is another popular solution for capturing the environment extensively. They provide a high level of immersion in terms of visualization, but the user’s viewpoint is restricted to the camera position, which corresponds to only three degrees of freedom [6]. Finally, capturing dynamics requires high frame rates to convey the kinematics of movements faithfully and without lag. Naturally, this increases the computational and transmission load. Along with 3D visual content capture, 3D visual content visualization is an indispensable part of any HTC system. An ideal 3D display technology must provide users with highly immersive experiences supported by very high-resolution imaging within a large FOV and the possibility of real-time interaction [7]. These factors must be maintained simultaneously while providing the user with the following physical (depth) cues: accommodation, convergence, stereo disparity, and motion parallax. Currently, based on the reviewed papers on telepresence systems for remote communication and collaboration, virtual reality (VR)/augmented reality (AR) head-mounted displays (HMDs) are the most commonly used visualization devices. A comparison of different AR and VR headsets available on the market can be found in [8]. However, even though they are considered the technology that can provide the most immersive experience, HMDs are still far from reaching their full potential of mimicking the human visual system.
The FOV and resolution provided by the headsets are lower than what human eyes experience in real conditions, which reduces the perceived Quality of Experience (QoE). Another challenge in designing 3D displays is ensuring user comfort [7]. In [9], some disadvantages of current HMDs are indicated, such as limited battery life, use by only a single user at a time, weight and inconvenience, obtrusiveness, low availability, high setup cost, and high computational hardware requirements. However, the greatest obstacle to comfort is the so-called convergence–accommodation conflict, which arises from the mismatch between the accommodation (lens focus) and convergence (eye rotation) depth cues. The image projected on the HMD is displayed at a fixed distance from the eye, which produces constant accommodation, while the convergence angle varies with the depth of the scene content [10]. As a result, users experience discomfort such as nausea, dizziness, oculomotor strain, and disorientation. Other factors, such as visualization quality, visual simulation, type of content, type of user locomotion, and duration of HMD exposure, may also provoke some form of sickness. The authors of [11] extensively surveyed the impact of these factors on the occurrence of nausea, oculomotor symptoms, and disorientation among VR users. Along with the technical and comfort problems, the challenges of using HMDs in shared and social spaces also need attention [12]. These include the social acceptability of HMDs, tracking, isolation and exclusion, shared experiences in shared spaces, and the ethical implications of public mixed reality (MR). To overcome the limitations of HMDs, super-multi-view or light field displays (LFDs) [9][10][13] and volumetric displays [10] are starting to appear.
They are considered non-obtrusive systems that can be observed by an arbitrary number of users (with some caveats), which is not possible with HMDs. LFDs mitigate the convergence–accommodation conflict by reproducing some level of accommodation. This is achieved by moving the image plane in and out of the display plane, redirecting the light rays to different voxel regions of the display panel [10]. However, the achievable depth cannot be increased indefinitely because of diffraction among the voxels [10]. Research efforts are directed toward multi-plane LFDs, but occlusions between the different planes cause new difficulties [10]. Significant work on LFD technology has been presented by Holografika [14]. In [10], volumetric displays are considered able to overcome some of the multi-plane LFD limitations, but, again, an image cannot be projected outside the panel volume. The authors of [10] outline three main challenges impeding holography: computing realistic holographic patterns from 3D information in a reasonable amount of time, transmitting the data to the visualization device, and developing a suitable 3D display that can reproduce large holograms with high resolution at high refresh rates. Apart from visual perception, a real face-to-face communication scenario involves all the other human senses: auditory, tactile, olfactory, and gustatory. However, most studies concentrate their efforts on visual perception alone, aiming to provide realistic spatial information about users and their environments. Meanwhile, they fail to address the need for spatial consistency between video and audio, which is a mandatory condition for realistic audio–visual perception. Only a few of the examined studies implement spatial audio [15] or state the need for it [16][17][18].
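
The convergence–accommodation conflict described above for HMDs can be quantified by comparing the distance at which the eyes converge (set by the rendered depth of an object) with the fixed focal distance of the headset optics, with the difference expressed in diopters (1/m). The sketch below assumes an interpupillary distance of 63 mm and a focal plane at 2 m; both are illustrative values, not parameters of any particular HMD, and the function names are hypothetical.

```python
import math


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two lines of sight when fixating a point at distance_m.

    ipd_m is the interpupillary distance; 63 mm is an assumed typical value."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


def mismatch_diopters(virtual_distance_m: float, focal_distance_m: float = 2.0) -> float:
    """Convergence-accommodation mismatch in diopters (1/m).

    Convergence follows the rendered depth of the object, while accommodation stays
    at the fixed focal distance of the HMD optics (assumed here to be 2 m)."""
    return abs(1.0 / virtual_distance_m - 1.0 / focal_distance_m)


if __name__ == "__main__":
    for d in (0.3, 0.5, 2.0, 10.0):
        print(f"object at {d:4.1f} m: vergence {vergence_angle_deg(d):5.2f} deg, "
              f"mismatch {mismatch_diopters(d):.2f} D")
```

Under these assumptions, an object rendered at 0.3 m yields a mismatch of about 2.8 D, whereas content rendered at the 2 m focal plane yields none; this is the quantity that displays reproducing some level of accommodation, such as LFDs, aim to reduce.
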
Similar to auditory sensation, tactility is also a prerequisite for immersive interaction, but it is usually neglected because it is challenging to integrate into communication systems [19]. Currently, the most common tactile appliances are so-called smart gloves and e-skin-based interfaces, which are often uncomfortable to use and thus negatively impact users’ QoE. Another open issue, still in its infancy as a research topic, is how to ensure bidirectional interaction by simultaneously providing tactile sensation and localized haptic feedback. Nevertheless, many studies state the need for haptic feedback [20][21] and multi-sensory interaction for all participants [16][18][22][23][24]. Stimulating the last two human senses, namely smell and taste, is even more limited and much more challenging to incorporate into future HTC systems. Currently, SENSIKS [25] offers an example of so-called sensory reality pods: closed, controllable cabinets equipped with different programmable actuators and designed to provide multi-sensory experiences. Although this is a promising approach for providing immersive interaction involving all five human senses, it is not viable for commercial deployment in future HTC systems due to its high cost, especially in multi-user scenarios.

2.2. Data Processing

The main challenge for data processing in HTC systems is ensuring ultra-lightweight, low-latency computation on large amounts of data. The processing may include various operations depending on the use case; here, only the compulsory ones are discussed: 3D data reconstruction and rendering, compression and decompression, and stream synchronization. The 3D reconstruction of visual data captured from multiple perspectives requires precise camera calibration. However, even with perfect calibration, the alignment often results in visual artifacts, so further processing such as noise filtering and hole filling is required, adding processing time. The greatest obstacle, however, is not the number of tasks to be performed but the large amount of data to be processed. With dense point-cloud data, billions of 3D points must be processed. Such volumes of data are also challenging to transmit over the channel. Therefore, to reduce the transmission load and the resulting bandwidth requirements, optimized compression and decompression techniques are mandatory. Recently, the Moving Picture Experts Group (MPEG) developed two point-cloud compression (PCC) techniques, called geometry-based PCC and video-based PCC [26]. Video-based PCC projects the 3D data onto 2D images and then reuses existing image and video compression techniques, whereas geometry-based PCC works directly on the point-cloud data. Each method has its own advantages and appropriate field of application [26][27][28][29]. However, determining the spatial–temporal correlation of a dynamic point cloud is still very difficult, especially when near-real-time transmission is required. Currently, deep learning methods are attracting great attention in the context of dense point-cloud compression [27][30][31][32][33].
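
Geometry-based coding operates directly on 3D coordinates, and a common first step in point-cloud coders is quantizing positions onto a voxel grid so that points falling into the same voxel collapse into one. The sketch below illustrates only this quantization idea, with an assumed 1 cm voxel size and a hypothetical `voxelize` helper; it is a simplified illustration, not the MPEG G-PCC codec, which additionally builds an octree and entropy-codes occupancy and attributes.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
Color = Tuple[int, int, int]
Voxel = Tuple[int, int, int]


def voxelize(points: List[Point], colors: List[Color], voxel_size: float) -> Dict[Voxel, Color]:
    """Quantize positions onto a voxel grid and merge points sharing a voxel.

    Returns a mapping from integer voxel indices to the (integer) mean RGB color
    of the points that fell into that voxel."""
    sums: Dict[Voxel, List[int]] = {}
    for (x, y, z), (r, g, b) in zip(points, colors):
        key = (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))
        acc = sums.setdefault(key, [0, 0, 0, 0])  # running r, g, b sums and point count
        acc[0] += r
        acc[1] += g
        acc[2] += b
        acc[3] += 1
    return {key: (acc[0] // acc[3], acc[1] // acc[3], acc[2] // acc[3])
            for key, acc in sums.items()}


if __name__ == "__main__":
    points = [(0.001, 0.002, 0.003), (0.004, 0.001, 0.002), (0.505, 0.505, 0.505)]
    colors = [(255, 0, 0), (155, 0, 0), (0, 0, 255)]
    voxels = voxelize(points, colors, voxel_size=0.01)  # assumed 1 cm voxels
    print(f"{len(points)} points -> {len(voxels)} occupied voxels")
```

A larger voxel size merges more points, trading geometric detail for fewer points to encode, which mirrors the data-reduction versus quality trade-off faced by real codecs.
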
However, encoding and decoding times still exceed the run-time limits imposed by low-latency communication, and further improvements are needed in this direction. To decrease the compression time, either the amount of data should be reduced or the computational power should be increased [34]. It is therefore essential to find a trade-off: more efficient compression increases the computational latency while decreasing the required network bandwidth and transmission latency, and vice versa [35]. In terms of 3D data rendering (of the data obtained from the remote user), the quality of the rendered 3D objects depends on the wireless channel quality: with conventional digital transmission, quality collapses abruptly when the channel quality falls below a threshold and does not improve when it rises above it, which is known as the cliff and leveling effect. The authors of [30][36] propose improved point-cloud delivery schemes based on graph neural networks and the Graph Fourier Transform, in which, however, adapting the rendering quality to the wireless channel state requires additional communication overhead. Stream synchronization, both between the signals originating from different sensors at the local site and between the streams originating from different sites, is one of the stringent HTC requirements. Multi-sensor stream synchronization means that all types of signals coming from the local site, e.g., video, audio, and tactile, must be accurately synchronized [35] to guarantee the faithfulness of users’ perception. Moreover, if the visual information is obtained using several capturing devices in a dynamic scenario, all the devices must be synchronized to ensure complete and consistent imaging and movement of the reconstructed objects. In a multi-user holographic communication setup, the synchronization of the streams originating from different participants’ sites is mandatory.
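
One simple way to synchronize streams from several sensors or sites is to buffer incoming frames per source and release a frame set only when every source has delivered a frame whose capture timestamp lies within a tolerance of the others. The sketch below illustrates this idea under the assumption that all sources are stamped by a common, synchronized clock; the `FrameSynchronizer` class and the 10 ms tolerance are hypothetical, and this is a generic illustration rather than the mechanism of any cited work.

```python
from collections import deque
from typing import Deque, Dict, List, Optional, Tuple

Frame = Tuple[int, object]  # (capture timestamp in ms, payload)


class FrameSynchronizer:
    """Hypothetical timestamp-based synchronizer for multi-source streams.

    Frames are pushed per source in capture order. Assumes every source is stamped
    by a common clock (e.g., one disciplined by NTP or PTP)."""

    def __init__(self, sources: List[str], tolerance_ms: int) -> None:
        self.tolerance_ms = tolerance_ms
        self.buffers: Dict[str, Deque[Frame]] = {s: deque() for s in sources}

    def push(self, source: str, timestamp_ms: int, payload: object) -> None:
        self.buffers[source].append((timestamp_ms, payload))

    def pop_synchronized(self) -> Optional[Dict[str, Frame]]:
        """Return one aligned frame per source, or None if no aligned set is ready yet."""
        while all(self.buffers.values()):  # every source has at least one frame
            heads = {s: buf[0] for s, buf in self.buffers.items()}
            newest = max(ts for ts, _ in heads.values())
            # A head older than newest - tolerance can never be matched, because the
            # other sources only deliver newer frames from now on: drop it.
            stale = [s for s, (ts, _) in heads.items() if newest - ts > self.tolerance_ms]
            if not stale:
                return {s: buf.popleft() for s, buf in self.buffers.items()}
            for s in stale:
                self.buffers[s].popleft()
        return None


if __name__ == "__main__":
    sync = FrameSynchronizer(["cam1", "cam2", "audio"], tolerance_ms=10)
    sync.push("cam1", 0, "c1-0")
    sync.push("cam2", 3, "c2-3")
    sync.push("audio", 40, "a-40")       # late-starting source: video heads get dropped
    sync.push("cam1", 33, "c1-33")
    sync.push("cam2", 36, "c2-36")
    print(sync.pop_synchronized())
```

Dropping stale frames keeps latency bounded at the cost of occasional frame loss; an adaptive variant would tune the tolerance and buffer depth to the observed network jitter.
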
Several factors may increase synchronization errors, including the network distance between participant sites (which determines the experienced latency), varying network path conditions, and source frame production conditions [37]. Evaluating network latency is necessary but not sufficient for choosing a synchronization approach. Changing network conditions cause the latency to vary over time, a phenomenon known as jitter, which may severely corrupt the synchronization of the arriving streams and thus decrease QoE. In [38], a novel cloud-based HTC teleportation platform is proposed that supports adaptive frame buffering and end-to-end signaling techniques to counter network uncertainties. In [37], the performance of an edge-computing-based mechanism for stream synchronization is evaluated under different network conditions. However, developing and evaluating synchronization mechanisms for systems with many more users performing faster movements remains a challenge as research on HTC progresses. Considering all the operations above and additional processing such as user/object detection, extraction, tracking, positioning, and mesh reconstruction, ultra-lightweight processing is clearly a major challenge. Powerful graphics processing units are very helpful but not always available at the user site. Therefore, exploiting network edge computing would be necessary to support end users [34][38][39]. Data processing challenges are given in Table 3.

2.3. Data Transmission of Holographic Data

In this subsection, three main challenges related to holographic data transmission are considered: ultra-high bandwidth, ultra-low latency, and network optimization [35]. As an example, consider three Kinect v2 sensors capturing a dynamic scene at 30 fps. Each sensor provides point-cloud data with 217,088 points per frame, which gives a total of 651,264 points per frame for the three sensors. For each point, the geometry is represented by 32-bit x, y, and z values, and the color attributes by 8-bit r, g, and b values, i.e., 120 bits per point. The total amount of data at 30 fps is 651,264 × (3 × 32 + 3 × 8) × 30 = 2,344,550,400 bps, i.e., approximately 2.34 Gbps (about 2.2 Gibit/s when binary prefixes are used). Moreover, light field visualization, which demands super-multi-view capturing, would result in a much greater amount of information, reaching the order of Tbps. As a result, significant bandwidth resources will be required: HTC clearly imposes huge network throughput demands. Gigabit- and terabit-per-second rates require ultra-high transmission bandwidths and the use of higher frequency bands, in addition to efficient modulation techniques. Although fifth-generation (5G) mobile networks promise to support such demands [40], HTC is still in a development stage and far from true commercialization. One effective technique for reducing the bandwidth requirement is adaptive streaming [41], which benefits from knowledge of the users’ location and focus. This includes transmitting only the parts of the scene observable by the user [42], as well as transmitting point-cloud objects that are closer to the user with higher density [43]. Thus, bandwidth consumption can be significantly reduced while preserving the perceived QoE. This solution, however, requires semantic knowledge of the scene [34] and accurate user motion and gaze tracking.
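
The raw-bitrate arithmetic for the three-sensor example is easy to reproduce and to re-run for other capture setups. The sketch below uses the same assumptions as the example (uncompressed points, 32-bit coordinates, 8-bit color channels, no protocol overhead); the function name `raw_point_cloud_bitrate` is illustrative.

```python
def raw_point_cloud_bitrate(num_sensors: int, points_per_frame: int, fps: int,
                            coord_bits: int = 32, color_bits: int = 8) -> int:
    """Uncompressed point-cloud bitrate in bits per second.

    Each point carries three coordinates (x, y, z) and three color channels (r, g, b)."""
    bits_per_point = 3 * coord_bits + 3 * color_bits
    return num_sensors * points_per_frame * bits_per_point * fps


if __name__ == "__main__":
    # Kinect v2 depth frames are 512 x 424 = 217,088 points per sensor.
    rate = raw_point_cloud_bitrate(num_sensors=3, points_per_frame=512 * 424, fps=30)
    print(rate)                            # bits per second
    print(f"{rate / 1e9:.2f} Gbps")        # decimal (SI) gigabits
    print(f"{rate / 2**30:.2f} Gibit/s")   # binary gibibits
```

With these inputs the function returns 2,344,550,400 bit/s, i.e., about 2.34 Gbps in decimal units or about 2.18 Gibit/s in binary units. Because the rate is linear in the number of transmitted points, sending only the observable part of the scene reduces it proportionally.
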
User viewpoint prediction can also make volumetric streaming more efficient [34]. The second challenge is achieving end-to-end low-latency transmission, from capture at the local site to rendering at the remote site. Each step, including data acquisition and reconstruction, application-specific processing, compression, transmission, decompression, rendering, and visualization, adds delay. All the reviewed studies that evaluate latency report values of a few hundred milliseconds [6][15][22][23][44][45][46][47][48][49], which is too much for HTC (a motion-to-photon latency below 15 ms is acceptable according to [50][51], and an end-to-end latency below 50–100 ms according to [51]). A dedicated transmission scheme for ultra-low-latency HTC is therefore strongly needed. Based on the literature review, the networking technologies increasingly exploited are the Real-Time Streaming Protocol (RTSP) [22][45][47] and Dynamic Adaptive Streaming over HTTP (DASH) [41][46][52]. Although not originally designed for HTC purposes, the Web Real-Time Communication (WebRTC) platform [15][44][53][54][55] and the Photon Unity Networking (PUN) module [17][53][56][57][58][59][60] are commonly used by HTC system developers. However, no protocol specially designed for HTC exists. Conventional transport-layer protocols favor either low latency (those based on the User Datagram Protocol, UDP) or reliability (those based on the Transmission Control Protocol, TCP), or use mechanisms that try to combine both (e.g., QUIC, originally Quick UDP Internet Connections). Nonetheless, none of them is fully suitable for holographic data transmission, so an optimized solution is still in demand [34]. The third challenge is network structure optimization, which should help meet the increasing HTC requirements, particularly ultra-lightweight processing, ultra-low latency, and ultra-high bandwidth.
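
Because every stage adds delay, a per-stage latency budget is a useful design tool. The sketch below sums stage delays, models transmission as the serialization time of one compressed frame (compressed size divided by link throughput), and compares the total with the 100 ms upper end of the end-to-end range cited above. All stage timings, compression ratios, and the 100 Mbps link rate are invented placeholders, chosen only to illustrate the compression-versus-transmission trade-off discussed in Section 2.2; propagation and queuing delays are ignored.

```python
from typing import Dict

END_TO_END_LIMIT_MS = 100.0  # upper end of the 50-100 ms end-to-end range cited from [51]


def end_to_end_latency_ms(stage_ms: Dict[str, float], raw_frame_bits: float,
                          compression_ratio: float, link_bps: float) -> float:
    """Sum of per-stage delays plus the serialization time of one compressed frame."""
    transmission_ms = (raw_frame_bits / compression_ratio) / link_bps * 1000.0
    return sum(stage_ms.values()) + transmission_ms


if __name__ == "__main__":
    raw_frame_bits = 651_264 * 120  # one frame of the three-sensor example
    link_bps = 100e6                # hypothetical 100 Mbps link
    # Hypothetical trade-off: stronger compression costs more encode/decode time.
    settings = {
        "light (ratio 10)": (10.0, {"capture": 5, "reconstruction": 10,
                                    "encode": 5, "decode": 3, "render": 8}),
        "strong (ratio 100)": (100.0, {"capture": 5, "reconstruction": 10,
                                       "encode": 40, "decode": 20, "render": 8}),
    }
    for name, (ratio, stages) in settings.items():
        total = end_to_end_latency_ms(stages, raw_frame_bits, ratio, link_bps)
        verdict = "within" if total <= END_TO_END_LIMIT_MS else "exceeds"
        print(f"{name}: {total:.1f} ms ({verdict} the {END_TO_END_LIMIT_MS:.0f} ms budget)")
```

With these made-up numbers, the lightly compressed setting spends about 78 ms on serialization alone and totals about 109 ms, exceeding the budget, while the strongly compressed setting stays within it (about 91 ms) despite slower coding; on a faster link the conclusion can flip.
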
The authors of [61] state the need for an intelligent approach to network organization, in which artificial intelligence (AI) helps the network constantly adapt to its resource availability and to the HTC users’ behavior. The authors also state that a significant part of the computation must be migrated from the user site to the network, so that the FOV and resolution can be increased and interactions using natural gestures can be easily enabled. Migrating computation to the network can significantly reduce the energy consumption of extended reality (XR) devices, thus increasing their battery life. Therefore, more functionality can be added while reducing the devices’ size and weight and enhancing user comfort. However, such computing and network upgrades must be achieved without trade-offs in terms of cost and quality [61]. According to the International Telecommunication Union (ITU) technical report [62], AI plays a main role in optimizing the performance of future networks (Intelligent Operation Networks (IONs), as the authors call them). IONs must support computing-aware network capabilities and intelligent load balancing among multiple users simultaneously and in a coordinated way. Therefore, they must be adjustable according to the services demanded and the network resources available. The requirements of IONs are computing awareness (since network and computing converge), joint network and computing resource scheduling, network protocol programmability (flexible configuration of future networks’ resources), flexible addressing, distributed and intelligent network management, multiple access capability, and fast routing and re-routing. In [63], AI is again identified as a key technology, here for supporting AI-based service awareness capabilities.
According to the authors, networks have to provide individual users with efficient coding and decoding, optimized transmission, QoE assurance, and coordinated scheduling capabilities for full-sensing holographic communication services. The authors of [35] propose a cross-layer optimization approach. It includes end-user optimizations (viewpoint prediction, sensor synchronization, Quality of Service (QoS)/QoE/cybersickness assessment, and 3D tiling and multiple-representation encoding), transport layer optimizations (low-latency optimization, intelligent buffering, caching, and smarter retransmission), and novel network architectures. Regarding novel network architectures, different software-defined networking (SDN) architectures must be examined so that better network performance can be achieved for HTC applications. The authors indicate that a fully distributed SDN architecture achieves the lowest latency. Additionally, network slicing and service function chaining (SFC) are considered to further optimize the use of network resources. In conclusion, applying strategies for intelligent network deployment is very important for enabling future holographic services. First, it can play a significant role in optimizing holographic data transport through the intelligent use of network resources. Second, such a deployment can be very beneficial for end users who are not able to perform the heavy computation demanded by HTC.

2.4. System Scalability

For a system to be considered scalable, it must be able to accommodate and serve an arbitrary number of users. In current 2D video conferencing systems, this is not an issue, because they already support communication between multiple users. However, scaling an HTC system raises many issues. The bandwidth requirements increase, low latency must be ensured across a greater number of users, and the synchronization and fusion (including tracking and positioning) of user avatars, movements, environments, etc., become even more complex. Scalability across different devices, different numbers of collaborators, and different degrees of virtuality should also be considered [64]. Solutions with centralized control, such as the one described in [43], will be needed. Some of the reviewed papers describe their systems as scalable; however, they support only a few users and do not achieve fully immersive low-latency HTC [21][23][45][46][55][56][57][58][60][65][66][67][68][69].

References

- Microsoft. Azure Kinect and Kinect Windows v2 Comparison. Available online: https://learn.microsoft.com/en-us/azure/kinect-dk/windows-comparison (accessed on 27 September 2022).

- Tölgyessy, M.; Dekan, M.; Chovanec, L.; Hubinský, P. Evaluation of the Azure Kinect and its comparison to Kinect V1 and Kinect V2. Sensors 2021, 21, 413.

- He, Y.; Chen, S. Recent advances in 3D data acquisition and processing by time-of-flight camera. IEEE Access 2019, 7, 12495–12510.

- Intel RealSense. Compare Cameras. Available online: https://www.intelrealsense.com/compare-depth-cameras/ (accessed on 27 September 2022).

- Hackernoon. 3 Common Types of 3D Sensors: Stereo, Structured Light, and ToF. Available online: https://hackernoon.com/3-common-types-of-3d-sensors-stereo-structured-light-and-tof-194033f0 (accessed on 27 September 2022).

- Rhee, T.; Thompson, S.; Medeiros, D.; Dos Anjos, R.; Chalmers, A. Augmented virtual teleportation for high-fidelity telecollaboration. IEEE Trans. Vis. Comput. Graph. 2020, 26, 1923–1933.

- Chang, C.; Bang, K.; Wetzstein, G.; Lee, B.; Gao, L. Toward the next-generation VR/AR optics: A review of holographic near-eye displays from a human-centric perspective. Optica 2020, 7, 1563–1578.

- VRcompare. VRcompare—The Internet’s Largest VR & AR Headset Database. Available online: https://vr-compare.com/ (accessed on 7 October 2022).

- Pan, X.; Xu, X.; Dev, S.; Campbell, A.G. 3D Displays: Their Evolution, Inherent Challenges and Future Perspectives. In Proceedings of the Future Technologies Conference, Jeju, Korea, 22–24 April 2021; pp. 397–415.

- Blanche, P.A. Holography, and the future of 3D display. Light Adv. Manuf. 2021, 2, 446–459.

- Saredakis, D.; Szpak, A.; Birckhead, B.; Keage, H.A.; Rizzo, A.; Loetscher, T. Factors associated with virtual reality sickness in head-mounted displays: A systematic review and meta-analysis. Front. Hum. Neurosci. 2020, 14, 96.

- Gugenheimer, J.; Mai, C.; McGill, M.; Williamson, J.; Steinicke, F.; Perlin, K. Challenges Using Head-Mounted Displays in Shared and Social Spaces. In Proceedings of the Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; pp. 1–8.

- Kara, P.A.; Tamboli, R.R.; Doronin, O.; Cserkaszky, A.; Barsi, A.; Nagy, Z.; Martini, M.G.; Simon, A. The key performance indicators of projection-based light field visualization. J. Inf. Disp. 2019, 20, 81–93.

- Holografika. Pioneering 3D Light Field Displays. Available online: https://holografika.com/ (accessed on 18 October 2022).

- Lawrence, J.; Goldman, D.B.; Achar, S.; Blascovich, G.M.; Desloge, J.G.; Fortes, T.; Gomez, E.M.; Häberling, S.; Hoppe, H.; Huibers, A.; et al. Project Starline: A high-fidelity telepresence system. ACM Trans. Graph. 2021, 40, 242.

- Orlosky, J.; Sra, M.; Bektaş, K.; Peng, H.; Kim, J.; Kos’myna, N.; Hollerer, T.; Steed, A.; Kiyokawa, K.; Akşit, K. Telelife: The future of remote living. arXiv 2021, arXiv:2107.02965.

- Yoon, L.; Yang, D.; Chung, C.; Lee, S.H. A Full Body Avatar-Based Telepresence System for Dissimilar Spaces. arXiv 2021, arXiv:2103.04380.

- Montagud, M.; Li, J.; Cernigliaro, G.; El Ali, A.; Fernández, S.; Cesar, P. Towards socialVR: Evaluating a novel technology for watching videos together. Virtual Real. 2022, 26, 1–21.

- Ozioko, O.; Dahiya, R. Smart tactile gloves for haptic interaction, communication, and rehabilitation. Adv. Intell. Syst. 2022, 4, 2100091.

- Wang, Y.; Wang, P.; Luo, Z.; Yan, Y. A novel AR remote collaborative platform for sharing 2.5D gestures and gaze. Int. J. Adv. Manuf. Technol. 2022, 119, 6413–6421.

- Wang, J.; Qi, Y. A Multi-User Collaborative AR System for Industrial Applications. Sensors 2022, 22, 1319.

- Yu, K.; Gorbachev, G.; Eck, U.; Pankratz, F.; Navab, N.; Roth, D. Avatars for teleconsultation: Effects of avatar embodiment techniques on user perception in 3D asymmetric telepresence. IEEE Trans. Vis. Comput. Graph. 2021, 27, 4129–4139.

- Regenbrecht, H.; Park, N.; Duncan, S.; Mills, S.; Lutz, R.; Lloyd-Jones, L.; Ott, C.; Thompson, B.; Whaanga, D.; Lindeman, R.W.; et al. Ātea Presence—Enabling Virtual Storytelling, Presence, and Tele-Co-Presence in an Indigenous Setting. IEEE Technol. Soc. Mag. 2022, 41, 32–42.

- Kim, D.; Jo, D. Effects on Co-Presence of a Virtual Human: A Comparison of Display and Interaction Types. Electronics 2022, 11, 367.

- Sensiks. Sensory Reality Pods & Platform. Available online: https://www.sensiks.com/ (accessed on 19 October 2022).

- Schwarz, S.; Preda, M.; Baroncini, V.; Budagavi, M.; Cesar, P.; Chou, P.A.; Cohen, R.A.; Krivokuća, M.; Lasserre, S.; Li, Z.; et al. Emerging MPEG standards for point cloud compression. IEEE J. Emerg. Sel. Top. Circuits Syst. 2018, 9, 133–148.

- Cao, C.; Preda, M.; Zaharia, T. 3D Point Cloud Compression: A Survey. In Proceedings of the 24th International Conference on 3D Web Technology, Los Angeles, CA, USA, 26–28 July 2019; pp. 1–9.

- Liu, H.; Yuan, H.; Liu, Q.; Hou, J.; Liu, J. A comprehensive study and comparison of core technologies for MPEG 3-D point cloud compression. IEEE Trans. Broadcast. 2019, 66, 701–717.

- Graziosi, D.; Nakagami, O.; Kuma, S.; Zaghetto, A.; Suzuki, T.; Tabatabai, A. An overview of ongoing point cloud compression standardization activities: Video-based (V-PCC) and geometry-based (G-PCC). APSIPA Trans. Signal Inf. Process. 2020, 9, e13.

- Fujihashi, T.; Koike-Akino, T.; Chen, S.; Watanabe, T. Wireless 3D Point Cloud Delivery Using Deep Graph Neural Networks. In Proceedings of the ICC 2021-IEEE International Conference on Communications, Montreal, QC, Canada, 14–23 June 2021; pp. 1–6.

- Wang, J.; Ding, D.; Li, Z.; Ma, Z. Multiscale Point Cloud Geometry Compression. In Proceedings of the 2021 Data Compression Conference (DCC), Snowbird, UT, USA, 23–26 March 2021; pp. 73–82.

- Wang, J.; Zhu, H.; Liu, H.; Ma, Z. Lossy point cloud geometry compression via end-to-end learning. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 4909–4923.

- Yu, S.; Sun, S.; Yan, W.; Liu, G.; Li, X. A Method Based on Curvature and Hierarchical Strategy for Dynamic Point Cloud Compression in Augmented and Virtual Reality System. Sensors 2022, 22, 1262.

- van der Hooft, J.; Vega, M.T.; Wauters, T.; Timmerer, C.; Begen, A.C.; De Turck, F.; Schatz, R. From capturing to rendering: Volumetric media delivery with six degrees of freedom. IEEE Commun. Mag. 2020, 58, 49–55.

- Clemm, A.; Vega, M.T.; Ravuri, H.K.; Wauters, T.; De Turck, F. Toward truly immersive holographic-type communication: Challenges and solutions. IEEE Commun. Mag. 2020, 58, 93–99.

- Fujihashi, T.; Koike-Akino, T.; Watanabe, T.; Orlik, P.V. HoloCast+: Hybrid digital-analog transmission for graceful point cloud delivery with graph Fourier transform. IEEE Trans. Multimed. 2021, 24, 2179–2191.

- Anmulwar, S.; Wang, N.; Pack, A.; Huynh, V.S.H.; Yang, J.; Tafazolli, R. Frame Synchronisation for Multi-Source Holograhphic Teleportation Applications-An Edge Computing Based Approach. In Proceedings of the 2021 IEEE 32nd Annual International Symposium on Personal, Indoor and Mobile Radio Communications (PIMRC), Helsinki, Finland, 13–16 September 2021; pp. 1–6.

- Selinis, I.; Wang, N.; Da, B.; Yu, D.; Tafazolli, R. On the Internet-Scale Streaming of Holographic-Type Content with Assured User Quality of Experiences. In Proceedings of the 2020 IFIP Networking Conference (Networking), Paris, France, 22–25 June 2020; pp. 136–144.

- Qian, P.; Huynh, V.S.H.; Wang, N.; Anmulwar, S.; Mi, D.; Tafazolli, R.R. Remote Production for Live Holographic Teleportation Applications in 5G Networks. IEEE Trans. Broadcast. 2022, 68, 451–463.

- Agiwal, M.; Roy, A.; Saxena, N. Next generation 5G wireless networks: A comprehensive survey. IEEE Commun. Surv. Tutor. 2016, 18, 1617–1655.

- van der Hooft, J.; Wauters, T.; De Turck, F.; Timmerer, C.; Hellwagner, H. Towards 6dof http Adaptive Streaming through Point Cloud Compression. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 2405–2413.

- Zhu, W.; Ma, Z.; Xu, Y.; Li, L.; Li, Z. View-dependent dynamic point cloud compression. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 765–781.

- Cernigliaro, G.; Martos, M.; Montagud, M.; Ansari, A.; Fernandez, S. PC-MCU: Point Cloud Multipoint Control Unit for Multi-User Holoconferencing Systems. In Proceedings of the 30th ACM Workshop on Network and Operating Systems Support for Digital Audio and Video, Istanbul, Turkey, 10–11 June 2020; pp. 47–53.

- Blackwell, C.J.; Khan, J.; Chen, X. 54-6: Holographic 3D Telepresence System with Light Field 3D Displays and Depth Cameras over a LAN. In Proceedings of the SID Symposium Digest of Technical Papers; Wiley Online Library: Hoboken, NJ, USA, 2021; Volume 52, pp. 761–763.

- Roth, D.; Yu, K.; Pankratz, F.; Gorbachev, G.; Keller, A.; Lazarovici, M.; Wilhelm, D.; Weidert, S.; Navab, N.; Eck, U. Real-Time Mixed Reality Teleconsultation for Intensive Care Units in Pandemic Situations. In Proceedings of the 2021 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Lisbon, Portugal, 27 March–1 April 2021; pp. 693–694.

- Langa, S.F.; Montagud, M.; Cernigliaro, G.; Rivera, D.R. Multiparty Holomeetings: Toward a New Era of Low-Cost Volumetric Holographic Meetings in Virtual Reality. IEEE Access 2022, 10, 81856–81876.

- Kachach, R.; Perez, P.; Villegas, A.; Gonzalez-Sosa, E. Virtual Tour: An Immersive Low Cost Telepresence System. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22–26 March 2020; pp. 504–506.

- Fadzli, F.E.; Ismail, A.W. A Robust Real-Time 3D Reconstruction Method for Mixed Reality Telepresence. Int. J. Innov. Comput. 2020, 10.

- Vellingiri, S.; White-Swift, J.; Vania, G.; Dourty, B.; Okamoto, S.; Yamanaka, N.; Prabhakaran, B. Experience with a Trans-Pacific Collaborative Mixed Reality Plant Walk. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22–26 March 2020; pp. 238–245.

- Li, R. Towards a New Internet for the Year 2030 and Beyond. In Proceedings of the Third annual ITU IMT-2020/5G Workshop and Demo Day, Switzerland, Geneva, 18 July 2018; pp. 1–21.

- Qualcomm, Ltd. VR And AR Pushing Connectivity Limits; Qualcomm, Ltd.: San Diego, CA, USA, 2018.

- Jansen, J.; Subramanyam, S.; Bouqueau, R.; Cernigliaro, G.; Cabré, M.M.; Pérez, F.; Cesar, P. A Pipeline for Multiparty Volumetric Video Conferencing: Transmission of Point Clouds over Low Latency DASH. In Proceedings of the 11th ACM Multimedia Systems Conference, Istanbul, Turkey, 8–11 June 2020; pp. 341–344.

- Gasques, D.; Johnson, J.G.; Sharkey, T.; Feng, Y.; Wang, R.; Xu, Z.R.; Zavala, E.; Zhang, Y.; Xie, W.; Zhang, X.; et al. ARTEMIS: A Collaborative Mixed-Reality System for Immersive Surgical Telementoring. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–14.

- Quin, T.; Limbu, B.; Beerens, M.; Specht, M. HoloLearn: Using Holograms to Support Naturalistic Interaction in Virtual Classrooms. In Proceedings of the 1st International Workshop on Multimodal Immersive Learning Systems, MILeS 2021, Virtual, 20–24 September 2021.

- Gunkel, S.N.; Hindriks, R.; Assal, K.M.E.; Stokking, H.M.; Dijkstra-Soudarissanane, S.; Haar, F.T.; Niamut, O. VRComm: An End-to-End Web System for Real-Time Photorealistic Social VR Communication. In Proceedings of the 12th ACM Multimedia Systems Conference, Istanbul, Turkey, 28 September–1 October 2021; pp. 65–79.

- Pakanen, M.; Alavesa, P.; van Berkel, N.; Koskela, T.; Ojala, T. “Nice to see you virtually”: Thoughtful design and evaluation of virtual avatar of the other user in AR and VR based telexistence systems. Entertain. Comput. 2022, 40, 100457.

- Gamelin, G.; Chellali, A.; Cheikh, S.; Ricca, A.; Dumas, C.; Otmane, S. Point-cloud avatars to improve spatial communication in immersive collaborative virtual environments. Pers. Ubiquitous Comput. 2021, 25, 467–484.

- Kim, H.i.; Kim, T.; Song, E.; Oh, S.Y.; Kim, D.; Woo, W. Multi-Scale Mixed Reality Collaboration for Digital Twin. In Proceedings of the 2021 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Bari, Italy, 4–8 October 2021; pp. 435–436.

- Fadzli, F.; Kamson, M.; Ismail, A.; Aladin, M. 3D Telepresence for Remote Collaboration in Extended Reality (xR) Application. In Proceedings of the IOP Conference Series: Materials Science and Engineering, Chennai, India, 16–17 September 2020; Volume 979, p. 012005.

- Olin, P.A.; Issa, A.M.; Feuchtner, T.; Grønbæk, K. Designing for Heterogeneous Cross-Device Collaboration and Social Interaction in Virtual Reality. In Proceedings of the 32nd Australian Conference on Human-Computer Interaction, Sydney, NSW, Australia, 2–4 December 2020; pp. 112–127.

- Ericsson. The Spectacular Rise of Holographic Communication; Ericsson: Stockholm, Sweden, 2022.

- ITU. Representative Use Cases and Key Network Requirements for Network 2030; ITU: Geneva, Switzerland, 2020.

- Huawei. Communications Network 2030; Huawei: Shenzhen, China, 2021.

- Memmesheimer, V.M.; Ebert, A. Scalable extended reality: A future research agenda. Big Data Cogn. Comput. 2022, 6, 12.

- Lee, Y.; Yoo, B. XR collaboration beyond virtual reality: Work in the real world. J. Comput. Des. Eng. 2021, 8, 756–772.

- Kim, H.; Young, J.; Medeiros, D.; Thompson, S.; Rhee, T. TeleGate: Immersive Multi-User Collaboration for Mixed Reality 360 Video. In Proceedings of the 2021 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Lisbon, Portugal, 27 March–1 April 2021; pp. 532–533.

- Jasche, F.; Kirchhübel, J.; Ludwig, T.; Tolmie, P. BeamLite: Diminishing Ecological Fractures of Remote Collaboration through Mixed Reality Environments. In Proceedings of the C&T’21: Proceedings of the 10th International Conference on Communities & Technologies-Wicked Problems in the Age of Tech, Seattle, WA, USA, 20–25 June 2021; pp. 200–211.

- Weinmann, M.; Stotko, P.; Krumpen, S.; Klein, R. Immersive VR-Based Live Telepresence for Remote Collaboration and Teleoperation. Available online: https://www.dgpf.de/src/tagung/jt2020/proceedings/proceedings/papers/50_DGPF2020_Weinmann_et_al.pdf (accessed on 18 October 2022).

- He, Z.; Du, R.; Perlin, K. Collabovr: A Reconfigurable Framework for Creative Collaboration in Virtual Reality. In Proceedings of the 2020 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Porto de Galinhas, Brazil, 9–13 November 2020; pp. 542–554.
