
Please note this is a comparison between Version 2 by Vivi Li and Version 1 by Natasha Padfield.

Electroencephalogram (EEG)-based brain–computer interfaces (BCIs) provide a novel approach for controlling external devices. BCI technologies can be important enabling technologies for people with severe mobility impairment. Endogenous paradigms, which depend on user-generated commands and do not need external stimuli, can provide intuitive control of external devices.

- brain–computer interface (BCI)

- brain–machine interface (BMI)

- electroencephalogram (EEG)

- endogenous

- control

- motor imagery (MI)

1. Introduction

This entry presents a systematic review of the literature related to online endogenous electroencephalogram (EEG) brain–computer interfaces (BCIs) for external device control. Controlling dynamic devices poses unique challenges, since they must be able to reliably perform actions such as navigating a cluttered space. Endogenous EEG control involves commands that are generated by actions executed by the users themselves and, unlike exogenous EEG control, do not require external stimuli. An example of external stimuli is flickering lights, which are required for steady-state visually evoked potential (SSVEP) BCIs.

2. BCIs in the Physical World: Applications and Paradigms

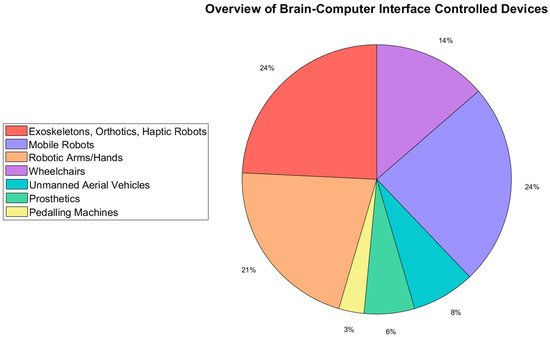

Figure 1 shows a breakdown of the different BCI-controlled dynamic devices in the reviewed papers. The most popular devices were mobile robots and assistive limb-movement devices (exoskeletons, orthotics, and haptic robots), with each having a share of 24%. The share for mobile robots went up to 38% if powered wheelchairs were included, as these are a form of specialized mobile robots. The share for assistive limb devices increased to 51% if prosthetics, robotic arms, and hands were included, representing articulated devices. The least common devices were unmanned aerial vehicles (UAVs), prosthetics, and pedaling machines. Most of the UAVs were quadcopters [26,27,28,29][1][2][3][4], with only one study presenting another type of UAV [30][5]. UAVs present numerous challenges in terms of their many degrees of freedom and difficulty in mastering control, even when using a manual remote controller. Pedaling machines are typically used in stroke rehabilitation studies [31][6], which constitute a smaller niche of research. Finally, prosthetics are aimed at subjects who have amputations but generally retain reasonable muscle signals in the residual (stump) limb, which has led to the development of numerous reliable EMG devices [32][7]. These constitute a viable alternative to BCI-controlled prosthetics. Conversely, BCI-controlled exoskeletons, orthotics, mobile robots, wheelchairs, and robotic arms can all be used by subjects who have spinal or neurological injuries resulting in reduced or negligible residual muscle signals in limbs, creating research openings for BCI control of such devices.

Figure 1.

A pie chart showing a breakdown of all the different BCI-controlled devices in the literature reviewed.

2.1. Motor Imagery Paradigms

Considering Table 1, traditional motor imagery, which consists of MI related to distinct limbs, such as movement in the hands, feet (or legs), and tongue, was the most popular paradigm. This paradigm presented a particularly intuitive method of control for exoskeletons [34,35][12][13], in which the user can imagine walking or sitting [35][13]; rehabilitative pedaling machines, in which the user can imagine the pedaling motion [36][14]; and robotic hands, in which the hand can mimic imagined flexion or extension [37,38][15][16]. Many systems depended wholly or in part on classifying left- and right-hand MI, possibly because these produced distinct patterns in the right and left hemispheres, respectively, that can be accurately classified [15][17]. Furthermore, these MI actions can be intuitively related to left and right steering commands for mobile devices such as robots. Nevertheless, systems based on traditional MI tended to use four or fewer commands, possibly because it was challenging to accurately decode multiclass MI [28,29][3][4]. Furthermore, MI-based paradigms required significant user training for reliable use, and could suffer from a relatively high BCI illiteracy rate [4][18]. Enhanced intuition and control of MI-driven prosthetics and orthotics could be achieved by adding functional electrical stimulation, which activated the subjects’ own muscles [39,40][19][20].

In 2018, Yu et al. [1][8] presented a sequential motor imagery and finite-state-machine approach for controlling a motorized wheelchair. The sequential motor imagery approach involved the subject issuing commands by completing two mental activities sequentially (for example: left-hand and then right-hand MI), as opposed to just one mental command (for example: left-hand MI), as in the traditional MI paradigm. The finite-state machine was used to enable the same sequential command to be used for different actions, depending on the current state of the system.
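The combination of sequential commands and a finite-state machine can be illustrated with a minimal sketch. The pairing of tasks, the two states (`stopped` and `moving`), and the transition table below are hypothetical choices made for illustration; the source only states that two MI tasks in sequence form one command and that the same command can trigger different actions depending on the current state.

```python
from typing import Dict, Iterable, List, Tuple

Pair = Tuple[str, str]

# Hypothetical transition table: (state, sequential command) -> (next state, action).
# The same pair of MI tasks maps to different actions in different states.
TRANSITIONS: Dict[Tuple[str, Pair], Tuple[str, str]] = {
    ("stopped", ("left", "right")): ("moving", "start"),
    ("stopped", ("left", "left")): ("stopped", "turn left"),
    ("stopped", ("right", "right")): ("stopped", "turn right"),
    ("moving", ("right", "left")): ("stopped", "stop"),
    ("moving", ("left", "left")): ("moving", "accelerate"),
    ("moving", ("right", "right")): ("moving", "decelerate"),
}


def pair_tasks(tasks: Iterable[str]) -> List[Pair]:
    """Group completed MI tasks into consecutive pairs, skipping idle periods."""
    active = [t for t in tasks if t != "idle"]
    return [(active[i], active[i + 1]) for i in range(0, len(active) - 1, 2)]


def run_sequence(tasks: Iterable[str], state: str = "stopped") -> List[str]:
    """Feed sequential commands through the state machine and list the actions."""
    actions = []
    for pair in pair_tasks(tasks):
        key = (state, pair)
        if key in TRANSITIONS:
            state, action = TRANSITIONS[key]
        else:
            action = "none"  # command has no meaning in the current state
        actions.append(action)
    return actions


if __name__ == "__main__":
    decoded = ["left", "right", "idle", "left", "left",
               "right", "right", "right", "left", "left", "left"]
    print(run_sequence(decoded))
```

In this sketch, four sequential commands yield six functions because the left-left and right-right commands are interpreted as turning while stopped and as speed changes while moving.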
These approaches were used to increase the degrees of freedom of the BCI, and are discussed in more depth in Section 9.

Jeong et al. [41][21] presented a BCI for controlling a robotic arm based on single-limb MI. Decoding single-limb movements was more challenging than decoding the movements of different limbs, as in traditional MI [41][21]. However, it presented an opportunity for more intuitive control paradigms, with the user being able to control the movements of a robotic arm in 3D space by imagining similar movements in their own limb.

In order to master suitable control of MI-based BCIs, subjects require extensive training [4][18]. Traditional MI EEG subject training schemes used the discrete trial (DT) approach, in which subjects were directed by cues to execute an MI task for a certain amount of time and were given on-screen feedback; for example, through a progress bar or a moving object [4,42][18][22]. Edelman et al. [42][22] presented a novel training method called continuous pursuit (CP), in which subjects were trained through a gamelike interface that required them to control a cursor in following a randomly moving icon on-screen. They noted that subjects were more engaged, elicited stronger brain signal patterns, and could potentially be trained in less time when using CP as opposed to DT. In test trials involving a robotic arm, the level of performance obtained during CP training for cursor control was maintained, indicating that the CP approach was effective for training subjects to control a dynamic device.

Table 1.

A summary of EEG paradigms, devices, and control functions in the literature for single-paradigm systems.

| Paper | Paradigm | Device | No. of Classes | Classes and Control Function | Accuracy |
|---|---|---|---|---|---|
| Choi, 2020 [35][13] | Traditional MI | Lower limb exoskeleton | 3 | Gait MI—walking; sitting MI—sitting down; idle state—no action | 86% |
| Gordleeva, 2020 [43][23] | Traditional MI | Lower limb exoskeleton | 2 | MI of dominant foot—walking; idle—standing still | 78% |
| Wang, 2018 [44][24] | Traditional MI | Lower limb exoskeleton | 3 | Left-hand MI—sitting; right-hand MI—standing up; feet MI—walking | >70% |
| Liu, 2017 [45][25] | Traditional MI | Lower limb exoskeleton | 2 | Left-hand MI—moving left leg; right-hand MI—moving right leg | >70% |
| Ang, 2017 [46][26] | Traditional MI | Haptic robot | 2 | MI in the stroke-affected hand; idle state | ~74% |
| Cantillo-Negrete, 2018 [47][27] | Traditional MI | Orthotic hand | 2 | MI in dominant hand (healthy subjects) or stroke-affected hand (patients)—moving; idle state—do nothing | >60% |
| Xu, 2020 [48][28] | Traditional MI | Robotic arm | 4 | Left-hand MI—turn left; right-hand MI—turn right; both hands—move up; relaxed hands—move down | 78% (for left vs. right and up vs. down experiments); 66% (for left, right, up, and down experiments) |
| Zhang, 2019 [49][29] | Traditional MI | Robotic arm | 3 | Left-hand MI—turn left; right-hand MI—turn right; tongue MI—move forward | 73% |
| Xu, 2019 [50][30] | Traditional MI | Robotic arm | 2 | Left-hand MI—left planar movements; right-hand MI—right planar movements | >70% |
| Edelman, 2019 [42][22] | Traditional MI | Robotic hand | 4 | Left-hand MI—left planar movements; right-hand MI—right planar movements; both-hands MI—upward planar movements; rest—downward planar movements | N/A |
| Spychala, 2020 [37][15] | Traditional MI | Robotic hand | 3 | MI hand flexion or extension for similar behavior in robotic hand; idle state—maintain hand posture | ~60% |
| Moldoveanu, 2019 [51][31] | Traditional MI | Robotic glove | 2 | Left-hand MI and right-hand MI—controlled movement of robotic glove | N/A |
| Zhuang, 2021 [52][32] | Traditional MI | Mobile robot | 4 | Left MI—turn left; right MI—turn right; push MI—accelerate; pull MI—decelerate | N/A (>80% for offline) |
| Batres-Mendoza, 2021 [12][9] | Traditional MI | Mobile robot | 3 | Left-hand MI—turn left; right-hand MI—turn right; idle state—maintain behavior | 98% |
| Tonin, 2019 [13][10] | Traditional MI | Mobile robot | 2 | Left-hand MI—turn left; right-hand MI—turn right. Idle rest state inferred from probability output of classifier. | ~80% |
| Hasbulah, 2019 [53][33] | Traditional MI | Mobile robot | 4 | Left-hand MI—turn left; right-hand MI—turn right; left-foot movement—move forward; right-foot movement—move backward | 64% |
| Ai, 2019 [54][34] | Traditional MI | Mobile robot | 4 | Left-hand MI—turn left; right-hand MI—turn right; both-feet MI—move forward; tongue MI—move backward | 80% |
| Jafarifarmand, 2019 [55][35] | Traditional MI | Mobile robot | 2 | Left-hand MI—turn left; right-hand MI—turn right | N/A |
| Andreu-Perez, 2018 [14][36] | Traditional MI | Mobile robot | 2 | Left-hand MI—turn right; right-hand MI—turn left. If the probability output of the classifier was less than 80%, maintain current state. | 86% |
| Cardoso, 2021 [31][6] | Traditional MI | Pedaling machine | 2 | Pedaling MI—cycle; idle state—remain stationary | N/A |
| Romero-Laiseca, 2020 [56][37] | Traditional MI | Pedaling machine | 2 | Pedaling MI—cycle; idle state—remain stationary | ~100% (healthy subjects); ~41.2–91.67% (stroke patients) |
| Gao, 2021 [40][20] | Traditional MI | Prosthetic leg | 3 | Left-hand MI—walking on terrain; right-hand MI—ascending stairs; foot MI—descend stairs | N/A |
| Yu, 2018 [1][8] | Sequential MI | Wheelchair | 6 | Left hand, right hand, and idle state identified by classifier. Four commands obtained by sequential paradigm, used to execute six functions through a finite-state machine: start, stop, accelerate, decelerate, turn left, turn right. | 94% |
| Jeong, 2020 [41][21] | Single-limb MI | Robotic arm | 6 | MI of same arm moving up, down, left, right, backward and forward, which were imitated by the robotic arm. | 66% (for a reach-and-grab task); 47% (for a beverage-drinking task) |
| Junwei, 2018 [25][38] | Spelling | Wheelchair | 4 | Spell the desired commands: FORWARD, BACKWARD, LEFT, RIGHT | 93% |
| Kobayashi, 2018 [57][39] | Self-induced emotive state | Wheelchair | 4 | Delight—move forward; anger—turn left; sorrow—turn right; pleasure—move backward. | N/A |
| Ji, 2021 [58][40] | Facial movement | Robotic arm | 3 | Detect double blink, long blink, and normal blink (idle state) to navigate VR menus and interfaces to control a robotic arm | N/A |
| Li, 2018 [59][41] | Facial movement | Prosthetic hand | 3 | Raised brow—hand opened; furrowed brow—hand closed; right smirk—rightward wrist rotation; left smirk—leftward wrist rotation | 81% |
| Banach, 2021 [24][42] | Sequential facial movement | Wheelchair | 7 | Eyes-open and eyes-closed states identified by the classifier. Seven commands generated using three-component encodings of the states. Commands: turn left, turn right, turn left 45°, accelerate, decelerate, forward, backward. | N/A |
| Alhakeem, 2020 [60][43] | Sequential facial movement | Wheelchair | 6 | Eye blinks and jaw clench were used to create six commands using three-component encodings: forward, backward, stop, left, right, keep moving. | 70% |

2.2. Spelling and Induced Emotions

Other endogenous paradigms included spelling [25][38] and self-induced emotion paradigms [57][39]. Spelling [25][38] is a control paradigm that could present an intuitive control approach. However, only one system in the literature used this method, applying it to wheelchair control [25][38]. Furthermore, although the system obtained a success rate in trials of over 90%, it was a synchronous system. The success rate denoted the rate of correct completion of a navigation task on the wheelchair. Moreover, the idle state was not included as a class; thus, to keep moving forward, the user had to continuously spell the word “FORWARD”, which was mentally taxing and impractical. Self-induced emotions [57][39], which can be generated by recalling memories or emotive concepts, were found to be effective for asynchronous control of a wheelchair, with a success rate between 73% and 85% in executing four commands. However, induced emotions are a highly counterintuitive approach because emotions cannot be logically related to navigational commands; for example, induced anger and sorrow were used to issue commands to turn left or right [57][39]. This was in contrast to more intuitive systems based on spelled commands such as “LEFT” and “RIGHT” [25][38] or imagined left-hand and right-hand movements [55][35] that could be easily associated with the commands to turn left and right. Furthermore, users had to make an effort to self-induce emotions despite their current mood, which may be challenging in everyday life. Self-inducing emotion may also be more mentally demanding than other paradigms, such as facial movement, which use relatively straightforward actions such as blinks [58,59][40][41].

2.3. Facial-Movement Paradigms

Facial-movement paradigms have been touted as a viable alternative to other endogenous paradigms such as MI because they have much lower BCI illiteracy rates [59][41]. This is because facial movements such as blinks and eyebrow or mouth movements produce distinct signal characteristics in EEG data that can be detected with a relatively high accuracy [58,59][40][41]. Sequential facial-movement paradigms have also been used to augment the number of commands executed using a small number of facial actions. These operate in a similar way to sequential MI commands, requiring the user to carry out sequences of facial actions, typically three [24][42], to generate commands. As with sequential MI commands, this requires the user to remember sets of arbitrary sequences of commands, which may require training for successful adaptation. Facial-movement paradigms can lead to counterintuitive or impractical commands such as a furrowed brow for closing a prosthetic hand [59][41] or closing one’s eyes for prolonged periods of time while navigating a wheelchair [24][42].

2.4. Multiparadigm Systems

Table 2 shows the details of mixed-paradigm systems. Three of these systems merged traditional MI with other paradigms to attempt to overcome the relatively poor classification accuracy of MI systems when compared to exogenous systems such as SSVEP [9][44]. Ortiz et al. [61][45] proposed a mixed paradigm based on MI classification and attention detection for controlling an exoskeleton. Attention detection is used to identify when the subject has a high attention level, which can occur when concentrating on generating MI. They applied this system to a two-class problem: MI for walking, which made the exoskeleton walk; and the idle state, which led to stopping. In this approach, the EEG data was passed through two independent decoders, one to identify the MI and one to identify the attention level. They then merged the decisions of the two decoders in an ensemble based on the certainties of each decoder output. Using MI and attention together resulted in an accuracy of 67%, compared to 56% for MI and 58% for attention alone. In the online testing phase, as given in Table 2, the traditional MI paradigm outperformed the combined MI and attention paradigm, and the authors hypothesized that this was due to lags in the online system. In all the experiments presented, the accuracies were relatively low, considering it is widely accepted that having an accuracy of over 70% is required for a reliable online system [4][18].

Table 2.

A summary of EEG paradigms, devices, and control functions in the literature for multiparadigm systems.

| Paper | Paradigm | Device | No. of Classes | Classes and Control Function | Accuracy |
|---|---|---|---|---|---|
| Ortiz, 2020 [61][45] | Traditional MI + attention | Lower-limb exoskeleton | 2 | Walk MI—walking; idle—just stand | Traditional MI: 63%; MI + attention: 45% |
| Tang, 2020 [2][46] | Traditional MI + facial movement | Wheelchair | 4 | Left-hand MI—turn left; right-hand MI—turn right; eye blink—go straight | 84% |
| Kucukyildiz, 2017 [33][11] | Mental arithmetic + reading | Wheelchair | 3 | Idle—turn left; mental arithmetic—turn right | N/A |

3. Shared Control

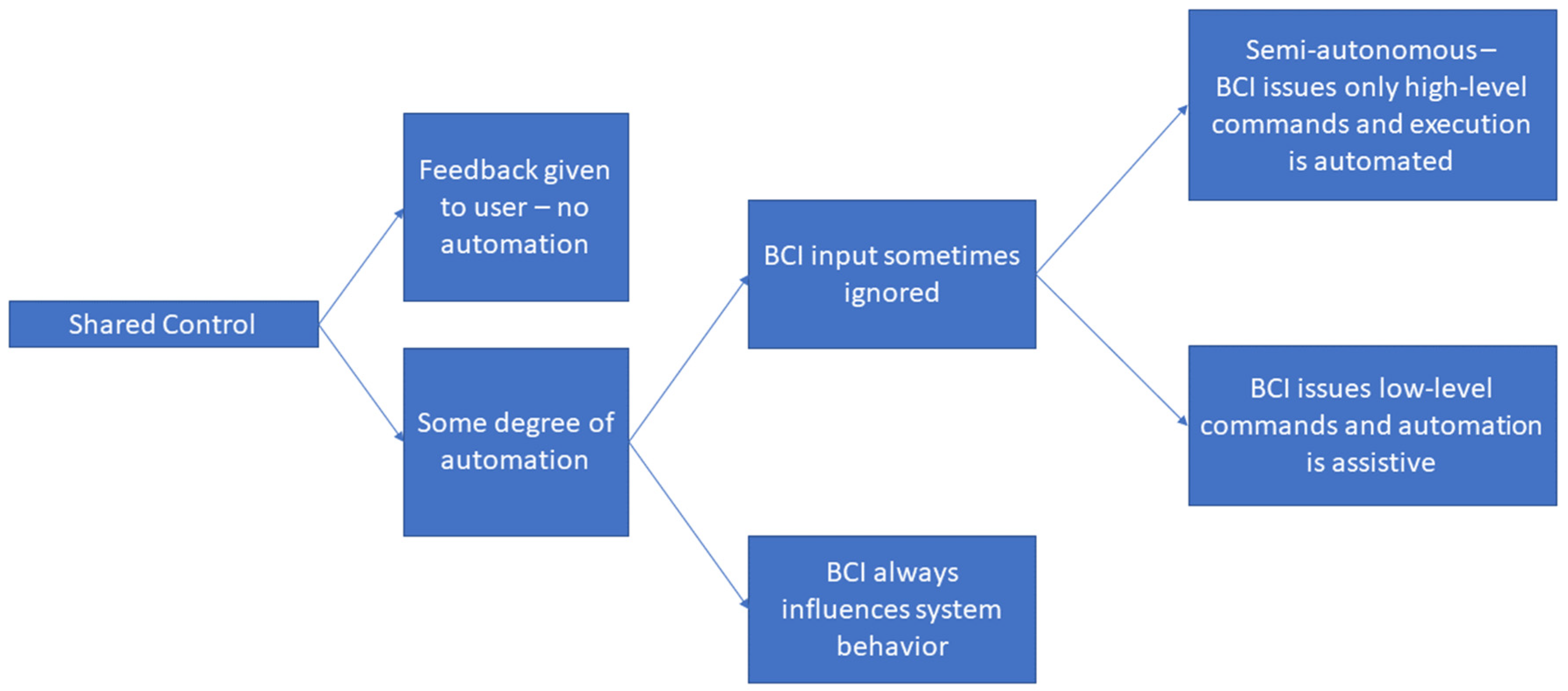

Shared control involves merging the BCI decoder output with information from environmental sensors to control the dynamic device. Shared control has been mainly used to implement emergency stopping, obstacle avoidance, and semiautonomous navigation in dynamic devices. A total of 27% of the papers reviewed (18 papers) explicitly discussed shared control as a part of the proposed systems. However, this did not mean that the other systems did not use information from sensors on the dynamic devices to regulate the behavior of the system. In fact, it was common for the BCI to be used to issue high-level commands to devices such as exoskeletons, orthotics, or robotic arms with grasping capabilities, then these devices executed those commands using internal sensors for guidance [40,43,44,62,63,64,65][20][23][24][47][48][49][50]. This section, however, reviews papers that explicitly discussed shared-control strategies as part of the presented work. Shared control presents a trade-off between the autonomy of the user in controlling the system through the BCI decoder and the control actions taken based on feedback from sensors. This leads to different levels of shared control, with higher levels of shared control leading to a greater impact of the sensor information on device performance. This section discusses these levels of shared control, which ranged from passive communication of sensor information to the user at the lowest level to semiautonomous systems that could navigate to a target selected by the user at the highest level. The lowest level of shared control in the reviewed literature facilitated passive communication from the dynamic device to the user [66][51]. The earliest examples of this were in the BCI-controlled quadcopter studies by LaFleur et al. [27][2] (2013) and Kim et al. [26][1] (2014), in which a video stream recorded from the hull of a quadcopter was fed back to the subject. Later, in the study by Li et al. 
[66][51], an RGB sensor was attached to a mobile robot, and incoming images were processed using a deep learning simultaneous localization and mapping (SLAM) algorithm to identify objects. The maps were then displayed on a graphical user interface (GUI) with possible obstacles highlighted. The user was responsible for carrying out obstacle avoidance based on this feedback. This type of shared control would be useful for applications involving remote control, such as operating robots that explore debris after an earthquake or flying a drone from another location. This is the lowest level of shared control, since it merely feeds back sensor information to the user, leaving them to carry out obstacle avoidance and navigation.

Gandhi et al. [67][52] presented a slightly higher level of shared control in which the user could control the movements of a mobile robot by selecting directional commands such as left, right, forward, backward, and halt from an intelligent adaptive user interface. The intelligent adaptive user interface ordered the commands according to sensor feedback so that the commands facilitating obstacle avoidance were at the top of the menu, whereas more risky commands were toward the bottom of the menu. This made it easier for the subject to select the recommended commands that would support obstacle avoidance while still giving them full autonomy to select any navigation command in the system. Menu navigation was carried out through MI commands. A similar approach was presented by Shi et al. [30][5] for a UAV. A laser range finder and video camera were used to identify obstacles in the vehicle’s path. When an obstacle was detected, the UAV hovered and the user was presented with a menu of options for commands that would facilitate obstacle avoidance (the options could consist of turn left, turn right, or continue ahead, depending on the situation).
The user could, however, choose to not take the suggested actions, override the menu, and then control the UAV fully through the BCI.

Some studies used shared control for emergency stopping. Emergency-stopping systems offer a higher level of shared control, since the feedback from the sensors can automatically stop movement of the device, thus superseding the BCI decoder output during emergency situations. Infrared [24][42], ultrasound [12][9], and Kinect sensors [33][11] have all been used to detect obstacles and stop the movement of a mobile device if an obstacle was nearby. Once the device was stopped, the user was required to use BCI commands to navigate around the object. Navigating around obstacles manually through a BCI, however, can be tedious [5][53]. Furthermore, some devices, such as robotic manipulators, require fine movement control that may not be possible with current EEG-based BCI decoders [68][54]. These issues can be addressed through higher-level shared control systems that implement automatic obstacle avoidance or the execution of fine motor control.

Obstacle-avoidance algorithms use incoming sensor data to guide the movement of a device around objects. The earliest example of obstacle avoidance being used with a BCI for dynamic device control was in the 2003 work of Millán et al. [16][55]. In this BCI, the subject controlled the direction of travel (left, right, or forward) of a mobile robot through a maze, and the mobile robot could perform obstacle avoidance or emergency stops automatically if there was a risk of a collision. When the robot encountered a wall, it followed the wall until the subject issued a command that moved the robot away from the wall. Details of the control algorithms were not included in the paper. A later study [52][32] implemented obstacle avoidance such that whenever an object was identified, the mobile robot maximized the distance between the robot and the obstacle.
During obstacle avoidance, the BCI-issued user commands were ignored. Although this approach prevented collisions, maximum margin obstacle avoidance may be unsuitable for applications in which subjects need to approach objects at close range; for example, to inspect them or pick them up.

In 2015, Leeb et al. [5][53] presented a telepresence robot that used the artificial potential fields method to enable the robot to approach objects at close range. Artificial potential fields are a standard path-planning approach [5,69][53][56] and involve modeling objects in the environment as either attractive forces, which pull the planned path toward them, or repulsive forces, which push the path away. Infrared sensors on the robot were used to identify nearby objects [5][53], and the objects were modeled by attractive forces if the BCI decoder indicated that the direction of travel was toward that object. Otherwise, the object was modeled by a repulsive force. Using this approach, the telepresence robot could navigate a cluttered living environment. The shared-control approach was effective: compared with trials in which the robot was controlled with just the BCI decoder, trials with shared control were on average 33% faster and required 15 fewer commands.

Another study used artificial potential fields to guide the movement of a robotic arm toward targets based on the BCI decoder output and targets identified through a Kinect sensor [69][56]. In this work, the objects were detected using the Kinect sensor, and the BMI decoder’s output indicated the target toward which the subject would like to move the robotic arm. This target was then assigned an attractive force, and the arm moved toward the target. A blending parameter was used to balance the influence of the BCI decoder and the sensor on the movement of the arm to ensure that the user could exert influence over the system.
This blending parameter was used to fuse the robotic arm velocity obtained from the BCI decoder with the ideal velocity vector, which would transport the arm directly toward the target that the system predicted the subject wanted to reach. This blending parameter ensured that if the system had incorrectly interpreted the direction of movement desired by the subject, the arm did not immediately move to the incorrect target, while at the same time it enabled the sensor data to aid the movement of the arm toward the desired object, possibly reducing mental fatigue and frustration in the user [69][56]. Shared control has also been used to facilitate finer reach and grasp motions in a robotic arm, which can be challenging to execute when using just the BCI decoder output [49,68][29][54]. For example, Li et al. [68][54] facilitated control of a robotic manipulator in three dimensions through a shared-control strategy. They divided the workspace of the manipulator into two areas: an inner area where the targets were located, and an outer area. The manipulator was governed by different control laws depending on the area. When in the outer area, the user commands issued by the BCI for moving left, right, up, down, forward, and backward were closely followed; however, when the manipulator joint was pushed outside of the bounded outer area, the joint was reset, pulling the manipulator toward the inner area again. The inner workspace was divided into a fine grid of possible locations, and the navigation of the manipulator was guided toward one of these definite locations. At each update, the manipulator was moved to one of the six grid-points neighboring its current position, and the location selected depended on the current location of the manipulator and the output command from the BCI. This enabled finer control of the movement of the manipulator within the inner area. 
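The velocity blending described above for the Kinect-guided robotic arm [69][56] can be sketched as follows. This is a minimal illustration under stated assumptions: the linear blend with a parameter `alpha`, the rule for predicting the intended target (the target best aligned with the decoded velocity), and all function names are hypothetical, since the source does not give the exact formulation.

```python
import math
from typing import Sequence, Tuple

Vec = Tuple[float, float, float]


def _norm(v: Vec) -> float:
    return math.sqrt(sum(c * c for c in v))


def _unit(v: Vec) -> Vec:
    n = _norm(v)
    return tuple(c / n for c in v) if n > 0 else (0.0, 0.0, 0.0)


def predict_target(arm_pos: Vec, v_bci: Vec, targets: Sequence[Vec]) -> Vec:
    """Assumed rule: pick the target whose direction best matches the BCI velocity."""
    u = _unit(v_bci)

    def alignment(target: Vec) -> float:
        d = _unit(tuple(t - p for t, p in zip(target, arm_pos)))
        return sum(a * b for a, b in zip(u, d))  # cosine similarity

    return max(targets, key=alignment)


def blended_velocity(arm_pos: Vec, v_bci: Vec, targets: Sequence[Vec],
                     alpha: float = 0.5) -> Vec:
    """Fuse the decoded velocity with an ideal velocity aimed at the predicted target.

    alpha = 0 gives the user full control; alpha = 1 follows the sensor-based guess.
    """
    target = predict_target(arm_pos, v_bci, targets)
    direction = _unit(tuple(t - p for t, p in zip(target, arm_pos)))
    speed = _norm(v_bci)  # keep the user's commanded speed
    v_ideal = tuple(speed * d for d in direction)
    return tuple((1 - alpha) * b + alpha * i for b, i in zip(v_bci, v_ideal))


if __name__ == "__main__":
    arm = (0.0, 0.0, 0.0)
    detected = [(1.0, 1.0, 0.0), (-1.0, 0.0, 0.0)]
    print(blended_velocity(arm, (1.0, 0.0, 0.0), detected, alpha=0.5))
```

With an intermediate `alpha`, a wrongly predicted target only bends the motion rather than capturing it, which mirrors the stated purpose of the blending parameter.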
Shared control has also been used to implement clean-cut control modes between the BCI and the underlying controller [50][30]. In the approach by Xu et al. [50][30], the user, through the BCI decoder, was generally in full control of the movements of a robotic arm. However, the arm was equipped with a depth camera that could sense targets, and once the arm was within a certain distance from a detected target, an autonomous controller took over the grasping motion to seize the target object. This removed the need for a user to issue a grasping command, and saved the user from the possibly frustrating process of eliciting fine motor control through the BCI alone. However, this approach may be inappropriate for grasping objects in a crowded environment, since the object on which the subject is focused may not be clear. Semiautonomous systems provided the highest level of shared control in the reviewed literature. The gaze-and-BCI upper limb exoskeleton presented by Frisoli et al. [70][57] enabled a subject to select a target object through eye-gaze tracking and activate the upper limb exoskeleton through MI, and then the exoskeleton moved autonomously to grasp the target. A Kinect camera was used to identify the objects in the subjects’ view range, and then the eye-gaze tracking data was transposed onto the segmented Kinect image in order to identify the object at which the subject was looking. Another semiautonomous system was the BCI-controlled wheelchair by Zhang et al. [71][58], in which the subject selected a destination from a menu, and then the wheelchair performed path planning, path following, and obstacle avoidance to guide the subject to the desired destination. Menu navigation was carried out using an MI paradigm, while path planning was carried out using the popular A* algorithm, which finds the shortest path between two locations, and proportional–integral control was used for path following [71][58]. 
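As an illustration of the path-planning step, the following is a minimal sketch of A* on a small occupancy grid. The grid representation, 4-connected moves with unit cost, and the Manhattan-distance heuristic are assumptions made for this example; the map representation used in the wheelchair study is not described here.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on a grid where 0 is free and 1 is an obstacle."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell: Cell) -> int:
        # Manhattan distance never overestimates the cost of unit-step moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # (estimated total, cost so far, cell)
    came_from: Dict[Cell, Cell] = {}
    best_cost: Dict[Cell, int] = {start: 0}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None  # goal unreachable


if __name__ == "__main__":
    room = [
        [0, 0, 0, 0],
        [1, 1, 0, 1],
        [0, 0, 0, 0],
    ]
    print(astar(room, (0, 0), (2, 0)))
```

In a semiautonomous system of this kind, the returned list of cells would serve as the reference path handed to the path-following controller.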
Figure 2 shows a taxonomy for the shared control approaches discussed throughout this section. Based on the reviewed literature, shared control could be split into two major branches: those that just passed sensor feedback to the BCI user [66,67][51][52], and those that had some element of automation involving a controller that executed actions based on sensor data. Shared-control paradigms with an element of automation could be further divided into those in which the BCI always had influence over the behavior of the system, even when obstacle avoidance was being carried out [5,69][53][56], and those in which the input from the BCI was sometimes ignored, with the sensor-based controller completely taking over operation at certain times. The latter type of shared control could be clearly grouped into two branches: the first consisted of BCIs that enabled users to issue low-level commands and an automatic controller with an assistive nature that was designed to take over operation infrequently during specific events such as emergency stops [12,24,33,50,68][9][11][30][42][54]; the second consisted of semiautonomous systems in which the BCI was used to issue high-level commands, such as selecting the destination for a BCI-controlled wheelchair, and then the sensor-based controller executed those commands [70,71][57][58].

Figure 2.

A taxonomy of the shared-control approaches proposed in the reviewed literature.

4. Obtaining Stable Control from BCI Decoders

The majority of the papers reviewed obtained the control signal directly from the output of the BCI classifier [2,28,31,33,37,43,46,48,50,56,59,60,61,63,66,72][3][6][11][15][23][26][28][30][37][41][43][45][46][48][51][59]. For asynchronous systems, this generally involved windowing the EEG data and obtaining a classification output at a regular rate [2,28,33,37,43,46,48,50,56,59,60,61,63,66,71,72][3][11][15][23][26][28][30][37][41][43][45][46][48][51][58][59]. This classification output was used to drive the external device. However, BCI decoders are known to have an unstable output that is prone to spurious misclassifications [13,54][10][34], and some of the reviewed papers attempted to mitigate this to provide a more stable control signal [13,35,54][10][13][34]. The two main approaches in the literature for obtaining stable control signals were false-alarm approaches and smoothing approaches.

4.1. False-Alarm Approaches

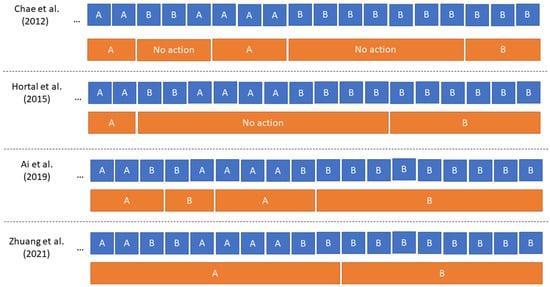

The false-alarm approach was the most straightforward one used in the literature to obtain a smoother control signal [32][34]. This approach is based on the assumption that a subject cannot change their mental state instantaneously. Thus, when the system identifies a change in mental state at the classifier output, either dynamic operation is paused until the output stabilizes, or the current state is maintained until a certain number of consecutive classifier outputs are of the same state.

In 2012, Chae et al. [60] presented a comprehensive fading feedback rule that was based on the false-alarm approach. To execute a command, a “buffer” of four consecutive identical classification outputs for that command had to be filled. Once the buffer was full, the system continued to execute the command as long as the BCI output remained consistent. If a classification output did not match the current control state, command execution paused and one level was removed from the buffer. If the subject wished to continue in the current command state, they had to produce a matching classifier output to top up the buffer before execution of the command could recommence. Otherwise, to change state, the subject had to produce three more BCI decoder outputs that were not the current state to reduce the buffer to zero, and then four consecutive classifications of the new desired state to refill the buffer and begin executing the new command. This meant that the fastest way to change state required the BCI classifier to output the same label for eight consecutive samples (one to pause execution, three to empty the buffer, and four to refill it). Chae et al. [60] verified that this approach improved the classification accuracy, but resulted in longer decision times for the BCI system.

In 2015, Hortal et al. [61] presented a slightly different approach for controlling a robotic arm: the five most recent classification labels from the BCI decoder were considered, and if all five were the same, the movement command was executed; otherwise, no action was taken and the arm remained at rest. More recent works, such as those of Ai et al. [34] (2019) and Zhuang et al. [32] (2021), used simpler false-alarm schemes that only required two or three consecutive samples, respectively, to be the same. This introduced lower latency than the eight-step process for changing the buffer state in the work of Chae et al. [60]. Although Ai et al. [34] commented that the false-alarm system could reduce false positives, this benefit was not verified experimentally in [34] or by Zhuang et al. [32], and neither cited literature to support this design choice. Verification of the effectiveness of the false-alarm approach is desirable.

Figure 3 compares the operation of the four false-alarm approaches discussed in this section. This illustrative example is for a two-class problem, in which classes A and B are related to different mental states that cause different types of movement in the dynamic device. The blue bars show the output of the BCI classifier at the previous time step, and the orange bars show the action taken in the dynamic device at the current time step. A clear shift in design philosophy is evident: the earlier approaches of Chae et al. [60] and Hortal et al. [61] halted the operation of the device when the BCI classifier outputted a different label and a false alarm was triggered, while the more recent approaches by Ai et al. [34] and Zhuang et al. [32] maintained the current device state through the false-alarm condition. By halting operation, the earlier approaches could reduce the time spent executing an unwanted command, but this resulted in episodic movements of the device, which could affect the smoothness of operation that subjects might expect in an asynchronous BCI. Later works that maintained the current state gave the user a better impression of continuous device control when spurious misclassifications occurred. However, they could remain in a control state for longer than the subject intended if the classifier output was highly unstable.

Figure 3. Comparing different false-alarm approaches. The blue bars show the BCI classifier output at the previous time step, and the orange bars show the decision made at the current time step. The example is for a two-class problem in which A denotes the classifier label for one mental state and B is the label for the other state. Each mental state was related to a different movement in the dynamic device. During “no action” phases, movement of the device was paused. In this example, it was assumed that at the start, the BCI classifier was outputting in class A for a long period (more than eight consecutive samples). Four different approaches are presented, namely those by Chae et al. [60], Hortal et al. [61], Ai et al. [34] and Zhuang et al. [32].
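
The filtering rules compared in Figure 3 can be illustrated with a minimal Python sketch. It is one reading of the prose descriptions in this subsection, not the implementation used in any of the cited papers: the function names, the `None` value standing for a paused device, and the way the buffer restarts after emptying are all assumptions made for illustration.

```python
from collections import deque


def buffer_rule(labels, depth=4):
    """Buffer-based rule in the spirit of Chae et al.: pause on any mismatch."""
    command, level, actions = None, 0, []
    for label in labels:
        if level == 0:            # empty buffer: start filling for this label
            command, level = label, 1
        elif label == command:    # a matching output tops the buffer up
            level = min(level + 1, depth)
        else:                     # a mismatch removes one level
            level -= 1
        # The command runs only while the buffer is full; None means "paused".
        actions.append(command if level == depth else None)
    return actions


def all_equal_window(labels, n=5):
    """Hortal-style rule: act only if the last n labels are all identical."""
    window, actions = deque(maxlen=n), []
    for label in labels:
        window.append(label)
        agreed = len(window) == n and len(set(window)) == 1
        actions.append(label if agreed else None)
    return actions


def hold_until_confirmed(labels, n=2, initial=None):
    """Ai/Zhuang-style rule: keep the current state until n equal new labels."""
    state, candidate, run, actions = initial, None, 0, []
    for label in labels:
        if label == state:
            candidate, run = None, 0          # any pending switch is cancelled
        else:
            run = run + 1 if label == candidate else 1
            candidate = label
            if run >= n:                      # switch confirmed
                state, candidate, run = label, None, 0
        actions.append(state)
    return actions


# Long run of class A followed by a sustained switch to class B.
stream = ["A"] * 4 + ["B"] * 8
print(buffer_rule(stream))
print(all_equal_window(stream))
print(hold_until_confirmed(stream, n=2))
```

With this example stream, the buffer rule pauses on the first B and only starts executing B on the eighth consecutive B, matching the eight-sample switching cost noted above, whereas the hold rule with `n=2` keeps executing A through the first B and switches on the second.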

4.2. Smoothing Approaches
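
As a concrete reference for the two simplest schemes reviewed in this subsection, the sketch below smooths a stream of class probabilities with (i) a moving average over the last `k` time steps and (ii) a weighted average of the current decoder output and the previous smoothed value, and then assigns the class with the highest smoothed probability. The exact update form, the parameter names `k` and `alpha`, and the example probabilities are illustrative assumptions, not values taken from the cited studies.

```python
from collections import deque


def argmax(values):
    """Index of the largest entry (first one wins on ties)."""
    return max(range(len(values)), key=values.__getitem__)


def moving_average_labels(probs, k=3):
    """Average class probabilities over the last k steps, then pick a class."""
    window, labels = deque(maxlen=k), []
    for p in probs:
        window.append(p)
        mean = [sum(w[c] for w in window) / len(window) for c in range(len(p))]
        labels.append(argmax(mean))
    return labels


def weighted_average_labels(probs, alpha=0.8):
    """Blend the previous smoothed value (weight alpha) with the new output."""
    smoothed, labels = None, []
    for p in probs:
        if smoothed is None:
            smoothed = list(p)  # the first sample initializes the filter
        else:
            smoothed = [alpha * s + (1 - alpha) * x for s, x in zip(smoothed, p)]
        labels.append(argmax(smoothed))
    return labels


# Two-class toy stream with one spurious flip at the third step.
probs = [[0.9, 0.1], [0.8, 0.2], [0.3, 0.7], [0.9, 0.1]]
print([argmax(p) for p in probs])      # raw decisions
print(moving_average_labels(probs))
print(weighted_average_labels(probs))
```

In this toy stream the raw decision flips to class 1 at the third step, while both smoothed outputs stay on class 0. A larger `k` or `alpha` suppresses more spurious flips at the cost of a slower response to genuine changes of intent.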

Some works in the literature smoothed the control signal [10][21][25][53]. The most straightforward approach involved averaging the label probabilities outputted from the classifier over a number of consecutive time steps, with studies using two [21], three [25], four [62], or eight steps [55]. Leeb et al. [53] used a weighted average of the present BCI decoder output and the previous smoothed output sample. The weighting parameter could be tuned for each individual subject. The class associated with the highest smoothed probability was the class assigned in the control signal.

In 2019, Tonin et al. [10] presented a dynamical smoothing method that drove a control signal to one of three stable points, corresponding to left- and right-turn actions for mobile robot control and the idle state, which corresponded to no robot-turning action while the robot continually moved forward. The approach involved calculating two mathematical forces that were then used to determine the change in the control signal from one time step to the next. The first force was influenced by the value of the control signal at the previous time step, while the second was influenced by the BCI decoder output at the current time step. The proposed approach was compared to the weighted average smoothing approach of Leeb et al. [53] for navigating a mobile robot toward one of six targets within a fixed space. The approach by Tonin et al. resulted in a shorter trajectory to reach a predefined target and a higher accuracy in terms of the number of times the targets were successfully reached.

5. Overcoming the Limited Degrees of Freedom in Endogenous BCIs

One of the core issues in endogenous BCIs is that even with state-of-the-art signal-processing and classification techniques, the number of classes that can be accurately classified is limited [3][8]. This is evident in Table 1, which shows that endogenous systems based on mental commands were often limited to four commands or fewer. Since dynamic devices typically have a high number of degrees of freedom, innovative solutions have been found to facilitate their control through endogenous BCIs. The main solutions in the reviewed literature were sequential control paradigms, hybridization of the BCI, and menus that could be navigated through limited commands.

5.1. Sequential Command Paradigms

Sequential command paradigms require the subject to issue commands by executing two endogenous mental activities sequentially, as briefly introduced in Section 6. A sequential paradigm involves training a classifier on a number of single endogenous activities, such as left-hand MI. During online use of the BCI, however, subjects issue commands by carrying out mental activities in sequence; for example, left-hand MI followed by right-hand MI. Postprocessing of the classifier output is used to identify the sequential activities and thus the command to be issued.

The earliest report of a sequential command paradigm was in the 2014 synchronous BCI by Li et al. [54], which was designed to control a robotic manipulator. In that paper, a classifier was trained on five classes; namely, the rest state and four MI classes, which were left-hand, right-hand, foot, and tongue MI. From these five classes, they obtained six commands through the following pairings: left-hand MI followed by idle to move left, right-hand MI followed by idle to move right, tongue MI followed by idle to move up, foot MI followed by idle to move down, left-hand MI followed by foot MI to move forward, and right-hand MI followed by foot MI to move backward.

In their 2018 paper, Yu et al. [8] presented a similar sequential MI-based paradigm, but applied it to asynchronous control of a wheelchair. The system was based on a linear discriminant analysis (LDA) classifier that could classify left-hand MI, right-hand MI, and idle-state data. The user could generate four commands using these three classes by carrying out the following sequences: left-hand MI then idle, right-hand MI then idle, left-hand MI then right-hand MI, and right-hand MI then left-hand MI. The sequentially executed commands were determined by performing template-matching postprocessing on the output of the classifier.
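
The postprocessing step for the three-class scheme of Yu et al. can be pictured with the following sketch. It replaces the template matching mentioned above with a simpler stand-in (collapsing the label stream into stable runs and looking up consecutive pairs), so it should be read as an illustration of the idea rather than the published method. The label codes `L`, `R`, and `I`, the command names, and the `min_run` threshold are hypothetical.

```python
from itertools import groupby

# Pairs of consecutive mental activities mapped to command names.
# L = left-hand MI, R = right-hand MI, I = idle (codes are placeholders).
SEQUENCES = {
    ("L", "I"): "left-idle",
    ("R", "I"): "right-idle",
    ("L", "R"): "left-right",
    ("R", "L"): "right-left",
}


def stable_runs(labels, min_run=3):
    """Collapse the label stream into runs, dropping runs shorter than min_run."""
    runs = []
    for label, group in groupby(labels):
        long_enough = len(list(group)) >= min_run
        # Merge with the previous run if a short spurious run split it in two.
        if long_enough and (not runs or runs[-1] != label):
            runs.append(label)
    return runs


def decode_commands(labels, min_run=3):
    """Scan consecutive runs and emit a command for every known pair."""
    runs, commands, i = stable_runs(labels, min_run), [], 0
    while i + 1 < len(runs):
        pair = (runs[i], runs[i + 1])
        if pair in SEQUENCES:
            commands.append(SEQUENCES[pair])
            i += 2          # both activities are consumed by the command
        else:
            i += 1
    return commands


stream = list("IIIIILLLLIIIIRRRLLLIII")
print(decode_commands(stream))
```

For the example stream, the runs are idle, left, idle, right, left, idle, which decode to a `left-idle` command followed by a `right-left` command. Swapping in a different `SEQUENCES` table would give the six pairings described for Li et al.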
Sequential-command paradigms have also been used in conjunction with facial-movement commands. In their 2021 paper, Banach et al. [42] obtained seven different commands to control wheelchair movement from endogenous brain signals obtained from two activities; namely, eyes open and eyes closed. Unlike the works of Yu et al. [8] and Li et al. [54], which required the user to issue two-step sequential commands, Banach et al.’s study used a three-step sequential command setup in a synchronous manner. Since each step required three seconds, each command took up to nine seconds to perform, introducing an impractical latency into the system.

Although sequential paradigms are able to increase the number of commands and have been shown to be effective for both synchronous and asynchronous BCIs, there is no rigorous comparison between a sequential command paradigm and a standard BCI paradigm. For example, Yu et al. [8] obtained four commands from three mental activities (left-hand MI, right-hand MI, and idle) using the sequential MI approach; however, this was not compared to using a standard four-class MI paradigm consisting of, for example, left-hand, right-hand, legs, and tongue MI. Several open questions therefore remain. Does the sequential nature of a two-step paradigm with three mental activities reduce its accuracy relative to a one-step paradigm with the same three activities, and if so, is it still more accurate than a four-class MI paradigm, which is expected to have lower accuracy? If the sequential paradigm results in better accuracy, is this worth the trade-off of higher latency? Since multistep paradigms require subjects to remember compound commands that may not be intuitive, does the cognitive load of such paradigms make them less practical and attractive to users? The true value of a sequential paradigm as opposed to a single-step paradigm is thus still unresolved.

5.2. Finite-State Machines
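
Yu et al. [8], covered in this subsection, made the action triggered by a sequential command depend on the wheelchair's current state. A minimal sketch of such a state-dependent mapping is given here as a small transition table. Only the two `left-idle` entries follow the example reported for that work (start from rest, accelerate when already moving); the state names and every other entry are hypothetical placeholders, since the full mapping is not given in this entry.

```python
# (current state, sequential command) -> (action, next state)
TRANSITIONS = {
    ("rest", "left-idle"): ("start", "low"),         # example from the text
    ("low", "left-idle"): ("accelerate", "high"),    # example from the text
    ("low", "right-idle"): ("stop", "rest"),         # hypothetical
    ("high", "right-idle"): ("decelerate", "low"),   # hypothetical
    ("low", "left-right"): ("turn_left", "low"),     # hypothetical
    ("high", "left-right"): ("turn_left", "high"),   # hypothetical
    ("low", "right-left"): ("turn_right", "low"),    # hypothetical
    ("high", "right-left"): ("turn_right", "high"),  # hypothetical
}


def run_fsm(commands, state="rest"):
    """Map each sequential command to an action given the current state."""
    actions = []
    for command in commands:
        # Unknown (state, command) pairs are ignored and leave the state as is.
        action, state = TRANSITIONS.get((state, command), (None, state))
        actions.append(action)
    return actions, state


actions, final_state = run_fsm(
    ["left-idle", "left-idle", "left-right", "right-idle", "right-idle"]
)
print(actions, final_state)
```

In this sketch four sequential commands yield six distinct actions because the lookup is keyed on the pair of state and command, which is also why the user has to keep track of the current state to know what a command will do.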

In their 2018 paper, Yu et al. [8] used a finite-state machine to increase the number of commands that could be issued by the subject to control a wheelchair. The previous subsection discussed how a sequential command paradigm was used to increase the number of commands from three to four. The finite-state machine was then used to increase the number of commands that the subject could issue to six, thus enabling them to trigger commands to start, stop, turn left, turn right, accelerate, and decelerate. This was possible because the same sequential command was linked to different actions in the wheelchair depending on the current state of the system. For example, if the wheelchair was at rest, the left-idle sequential command could be used to start the wheelchair moving at a low speed, while issuing the same command while the wheelchair was already moving would result in an acceleration. A success rate of 94% was obtained for navigation tasks, which involved traversing a set route that included passing by specific waypoints and evading an obstacle. The “success rate” referred to the percentage of tasks successfully completed, with success being defined as reaching all the waypoints and not colliding with the obstacle. Together, the finite-state machine and sequential paradigm were effective in circumventing the issue of poor classification of multiclass MI data by restricting the classifier to three classes. However, the finite-state machine required the user to remember multiple state-dependent meanings for some commands, which may have further increased the mental load on the user.

5.3. Hybrid BCIs: Increasing the Degrees of Freedom through Additional Biosignals

In this entry, the term hybrid BCI (hBCI) refers to systems that use other biosignals in addition to EEG for driving an external device. Hybrid BCIs have primarily been used to increase the number of commands that can be issued through an EEG-based BCI by enabling the subject to issue commands through another biosignal paradigm [4][12][63]. In 2016, Soekadar et al. [12] presented an hBCI for controlling a motorized hand exoskeleton that combined EEG and electrooculogram (EOG) signals. EEG signals related to grasping intention were used to activate the exoskeleton to grasp. EOG signals related to horizontal eye movements were used to open the exoskeleton. This device was successfully used by subjects with quadriplegia and could be used to aid daily living activities based on grasping motions, such as holding a mug or book.

Hybrid BCIs have been widely applied to BCI-controlled quadcopters [1][3][4][5], possibly because these devices present a large number of degrees of freedom and can be challenging to control even with standard manual controllers. Additional biosignals have been used primarily to increase the number of control commands, but they have also been used to increase system robustness [1][3][4][5]. In 2014, Kim et al. [1] presented a BCI-controlled quadcopter that used EEG and eye-gaze tracking. A camera on the hull of the quadcopter fed back a real-time image based on the quadcopter’s location, and the subject controlled the movement of the quadcopter using eye movements. There were two main control modes: in mode A, the subject could control the forward, backward, left translational, and right translational motion of the device by looking up, down, left, or right, respectively; using similar eye movements in mode B, the subject could control upward motion, downward motion, left rotation, and right rotation. Dual-modality commands were used to control take-off/landing (simultaneous concentration and eyes closed) and to change between control modes A and B (concentration and looking at the center of the screen).

Chen et al. [4] presented a BCI-controlled quadcopter that used left-hand and right-hand MI to control landing and take-off, and EOG signals to control navigation during flight. To control the direction of quadcopter movement (left, right, forward, or backward), the subject looked at a four-icon menu in which each icon was linked to a particular function. The hBCI was found to be effective, exhibiting online command decoding accuracies between 95% and 96% depending on the subject. However, restricting the user to focusing on a menu during flight limited this system’s practicality: when flying a quadcopter, the subject will usually be looking at the device, or at least observing a livestream of its movements on a screen.

Khan et al. [3] presented a more practical hBCI for controlling a quadcopter. The system could accept up to eight commands, four of which were supplied through EEG and four of which were supplied through functional near-infrared spectroscopy (fNIRS). The EEG-based commands, which used a facial-movement paradigm, were horizontal eye movements for landing, double blink to decrease altitude, eye movements in the vertical plane for anticlockwise rotation, and triple blink to move forward. The fNIRS commands consisted of mental activities, including imagined object rotation for take-off, mental arithmetic to increase altitude, word generation for clockwise rotation, and mental counting for backward movements. As a safety feature, the authors ensured complementary commands were assigned to different recording modalities. For example, the take-off command was issued using fNIRS, whereas the landing command was issued using an EEG-derived command. This ensured that if an erroneous command was interpreted using one modality (possibly due to a malfunction or poor signals being received at that time), a corrective command could be issued by the subject using the other modality. In the offline analysis, the fNIRS classifier obtained an accuracy of 77% and the EEG classifier obtained an accuracy of 86%. The paper did not provide a performance measure for the online flying task. Unlike the approach proposed by Chen et al. [4], the subject could continuously observe the motion of the quadcopter during use. However, the commands were not intuitive, and forward movement required continuous triple blinking, which could induce fatigue. Furthermore, both works were tested in simple flying conditions that did not require any obstacle avoidance.

5.4. Menu Navigation with Limited Commands

Some systems allowed subjects to choose the behavior of the dynamic device through a GUI-based menu that could be navigated using a limited number of commands. The menu used in the work of Gandhi et al. [52] sequentially presented subjects with pairs of options. The subject could pick one command from the five available in the menu to control the movement of a robot; namely, forward, backward, turn left, turn right, and halt. The menu also had a final option called “main” to return to the top of the menu. The options in the menu were organized in pairs, and the subject selected the option on the left or right using left-hand or right-hand MI, respectively. If the subject did not want to select either of the options, they could remain in the relaxed (idle) state, and the system would then show the next pair of options. This enabled the subjects to select one of five commands using just two different MI activities and the rest state.

Zhang et al. [58] presented a menu that could be used by the subject to select one of 25 different target points within a room to which they wanted the wheelchair to move. The menu was presented to the subject as a horizontal list of the numbers 1–25. The list was divided in two, and the user could select either the left-hand side of the list by issuing a left-hand MI command or the right-hand side by issuing a right-hand MI command. The selected half of the list was then displayed to the user with the halfway point indicated, and the user again selected the half of the list in which the desired location could be found. Eventually, the user was able to select the desired location from the final two destinations on the list. In all, the selection process had to be carried out five times in order to obtain the final destination, which resulted in a significant latency when using the system.
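
The halving menu attributed to Zhang et al. behaves like a binary search over the list of destinations. The sketch below is a minimal illustration under the assumption that the left half receives the extra item when the list length is odd; the function names and the `L`/`R` choice codes are hypothetical.

```python
import math


def choices_for(n_items, target_index):
    """Return the L/R choices that isolate target_index among n_items."""
    lo, hi, choices = 0, n_items, []      # half-open interval [lo, hi)
    while hi - lo > 1:
        mid = lo + (hi - lo + 1) // 2     # left half gets the extra item
        if target_index < mid:
            choices.append("L")           # left-hand MI keeps the left half
            hi = mid
        else:
            choices.append("R")           # right-hand MI keeps the right half
            lo = mid
    return choices


def select(items, choices):
    """Replay a sequence of L/R choices and return the selected item."""
    lo, hi = 0, len(items)
    for choice in choices:
        mid = lo + (hi - lo + 1) // 2
        if choice == "L":
            hi = mid
        else:
            lo = mid
    return items[lo]


destinations = list(range(1, 26))
worst = max(len(choices_for(25, i)) for i in range(25))
print(worst, math.ceil(math.log2(25)))
```

With 25 destinations, no target needs more than ceil(log2 25) = 5 binary choices, which is consistent with the five selections reported above; under this particular split rule some targets are reached in four. Each choice costs one full MI trial, so the selection latency grows with the logarithm of the menu size.
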
The menu-based approaches discussed in this subsection provided different levels of control to the user. Zhang et al. [58] proposed a semiautonomous system in which the subject could only select the desired destination, and then the wheelchair navigated autonomously to it. The implementation by Gandhi et al. [52] offered the subject lower-level control of the system by enabling them to select a dynamic action such as turning left. The approaches discussed in this section made use of the relatively high classification accuracy that can be obtained when just two MI classes are used. Nevertheless, the sequential selection process involved in these menus can be long and tedious, introducing latencies that may make them impractical for a real-life BCI.

References

- Kim, B.H.; Kim, M.; Jo, S. Quadcopter Flight Control Using a Low-Cost Hybrid Interface with EEG-Based Classification and Eye Tracking. Comput. Biol. Med. 2014, 51, 82–92.

- LaFleur, K.; Cassady, K.; Doud, A.; Shades, K.; Rogin, E.; He, B. Quadcopter Control in Three-Dimensional Space Using a Noninvasive Motor Imagery-Based Brain-Computer Interface. J. Neural Eng. 2013, 10, 046003.

- Khan, M.J.; Hong, K.S. Hybrid EEG-FNIRS-Based Eight-Command Decoding for BCI: Application to Quadcopter Control. Front. Neurorobotics 2017, 11, 6.

- Chen, C.; Zhou, P.; Belkacem, A.N.; Lu, L.; Xu, R.; Wang, X.; Tan, W.; Qiao, Z.; Li, P.; Gao, Q.; et al. Quadcopter Robot Control Based on Hybrid Brain-Computer Interface System. Sens. Mater. 2020, 32, 991–1004.

- Shi, T.; Wang, H.; Zhang, C. Brain Computer Interface System Based on Indoor Semi-Autonomous Navigation and Motor Imagery for Unmanned Aerial Vehicle Control. Expert Syst. Appl. 2015, 42, 4196–4206.

- Cardoso, V.F.; Delisle-Rodriguez, D.; Romero-Laiseca, M.A.; Loterio, F.A.; Gurve, D.; Floriano, A.; Valadão, C.; Silva, L.; Krishnan, S.; Frizera-Neto, A.; et al. Effect of a Brain–Computer Interface Based on Pedaling Motor Imagery on Cortical Excitability and Connectivity. Sensors 2021, 21, 2020.

- Zecca, M.; Micera, S.; Carrozza, M.C.; Dario, P. Control of Multifunctional Prosthetic Hands by Processing the Electromyographic Signal. Crit. Rev. Biomed. Eng. 2002, 30, 459–485.

- Yu, Y.; Liu, Y.; Jiang, J.; Yin, E.; Zhou, Z.; Hu, D. An Asynchronous Control Paradigm Based on Sequential Motor Imagery and Its Application in Wheelchair Navigation. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 2367–2375.

- Batres-Mendoza, P.; Guerra-Hernandez, E.I.; Espinal, A.; Perez-Careta, E.; Rostro-Gonzalez, H. Biologically-Inspired Legged Robot Locomotion Controlled with a BCI by Means of Cognitive Monitoring. IEEE Access 2021, 9, 35766–35777.

- Tonin, L.; Bauer, F.C.; Millán, J.d.R. The Role of the Control Framework for Continuous Teleoperation of a Brain–Machine Interface-Driven Mobile Robot. IEEE Trans. Robot. 2019, 36, 78–91.

- Kucukyildiz, G.; Ocak, H.; Karakaya, S.; Sayli, O. Design and Implementation of a Multi Sensor Based Brain Computer Interface for a Robotic Wheelchair. J. Intell. Robot. Syst. Theory Appl. 2017, 87, 247–263.

- Soekadar, S.R.; Witkowski, M.; Gómez, C.; Opisso, E.; Medina, J.; Cortese, M.; Cempini, M.; Carrozza, M.C.; Cohen, L.G.; Birbaumer, N.; et al. Hybrid EEG/EOG-Based Brain/Neural Hand Exoskeleton Restores Fully Independent Daily Living Activities after Quadriplegia. Sci. Robot. 2016, 1, eaag3296.

- Choi, J.; Kim, K.T.; Jeong, J.H.; Kim, L.; Lee, S.J.; Kim, H. Developing a Motor Imagery-Based Real-Time Asynchronous Hybrid BCI Controller for a Lower-Limb Exoskeleton. Sensors 2020, 20, 7309.

- Cardoso, V.F.; Delisle-Rodriguez, D.; Romero-Laiseca, M.A.; Loterio, F.A.; Gurve, D.; Floriano, A.; Krishnan, S.; Frizera-Neto, A.; Filho, T.F.B. BCI Based on Pedal End-Effector Triggered through Pedaling Imagery to Promote Excitability over the Feet Motor Area. Res. Biomed. Eng. 2022, 38, 439–449.

- Spychala, N.; Debener, S.; Bongartz, E.; Müller, H.H.O.; Thorne, J.D.; Philipsen, A.; Braun, N. Exploring Self-Paced Embodiable Neurofeedback for Post-Stroke Motor Rehabilitation. Front. Hum. Neurosci. 2020, 13, 461.

- Meng, J.; Zhang, S.; Bekyo, A.; Olsoe, J.; Baxter, B.; He, B. Noninvasive Electroencephalogram Based Control of a Robotic Arm for Reach and Grasp Tasks. Sci. Rep. 2016, 6, 38565.

- Pfurtscheller, G.; Neuper, C. Motor Imagery and Direct Brain-Computer Communication. Proc. IEEE 2001, 89, 1123–1134.

- Perdikis, S.; Millan, J.d.R. Brain-Machine Interfaces: A Tale of Two Learners. IEEE Syst. Man Cybern. Mag. 2020, 6, 12–19.

- Müller-Putz, G.; Rupp, R. The EEG-Controlled MoreGrasp Grasp Neuroprosthesis for Individuals with High Spinal Cord Injury—Multipad Electrodes for Screening and Closed-Loop Grasp Pattern Control. In Proceedings of the International Functional Electrical Stimulation Society 21st Annual Conference, London, UK, 17–20 July 2017.

- Gao, H.; Luo, L.; Pi, M.; Li, Z.; Li, Q.; Zhao, K.; Huang, J. EEG-Based Volitional Control of Prosthetic Legs for Walking in Different Terrains. IEEE Trans. Autom. Sci. Eng. 2021, 18, 530–540.

- Jeong, J.H.; Shim, K.H.; Kim, D.J.; Lee, S.W. Brain-Controlled Robotic Arm System Based on Multi-Directional CNN-BiLSTM Network Using EEG Signals. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 1226–1238.

- Edelman, B.J.; Meng, J.; Suma, D.; Zurn, C.; Nagarajan, E.; Baxter, B.S.; Cline, C.C. Noninvasive Neuroimaging Enhances Continuous Neural Tracking for Robotic Device Control. Sci. Robot. 2019, 4, eaaw6844.

- Gordleeva, S.Y.; Lobov, S.A.; Grigorev, N.A.; Savosenkov, A.O.; Shamshin, M.O.; Lukoyanov, M.V.; Khoruzhko, M.A.; Kazantsev, V.B. Real-Time EEG-EMG Human-Machine Interface-Based Control System for a Lower-Limb Exoskeleton. IEEE Access 2020, 8, 84070–84081.

- Wang, C.; Wu, X.; Wang, Z.; Ma, Y. Implementation of a Brain-Computer Interface on a Lower-Limb Exoskeleton. IEEE Access 2018, 6, 38524–38534.

- Liu, D.; Chen, W.; Pei, Z.; Wang, J. A Brain-Controlled Lower-Limb Exoskeleton for Human Gait Training. Rev. Sci. Instrum. 2017, 88, 104302.

- Ang, K.K.; Guan, C. EEG-Based Strategies to Detect Motor Imagery for Control and Rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 392–401.

- Cantillo-Negrete, J.; Carino-Escobar, R.I.; Carrillo-Mora, P.; Elias-Vinas, D.; Gutierrez-Martinez, J. Motor Imagery-Based Brain-Computer Interface Coupled to a Robotic Hand Orthosis Aimed for Neurorehabilitation of Stroke Patients. J. Healthc. Eng. 2018, 2018, 1624637.

- Xu, B.; Li, W.; He, X.; Wei, Z.; Zhang, D.; Wu, C.; Song, A. Motor Imagery Based Continuous Teleoperation Robot Control with Tactile Feedback. Electronics 2020, 9, 174.

- Zhang, W.; Sun, F.; Wu, H.; Tan, C.; Ma, Y. Asynchronous Brain-Computer Interface Shared Control of Robotic Grasping. Tsinghua Sci. Technol. 2019, 24, 360–370.

- Xu, Y.; Ding, C.; Shu, X.; Gui, K.; Bezsudnova, Y.; Sheng, X.; Zhang, D. Shared Control of a Robotic Arm Using Non-Invasive Brain–Computer Interface and Computer Vision Guidance. Robot. Auton. Syst. 2019, 115, 121–129.

- Moldoveanu, A.; Ferche, O.M.; Moldoveanu, F.; Lupu, R.G.; Cinteza, D.; Constantin Irimia, D.; Toader, C. The TRAVEE System for a Multimodal Neuromotor Rehabilitation. IEEE Access 2019, 7, 8151–8171.

- Zhuang, J.; Geng, K.; Yin, G. Ensemble Learning Based Brain-Computer Interface System for Ground Vehicle Control. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 5392–5404.

- Haziq Hasbulah, M.; Azni Jafar, F.; Hisham Nordin, M.; Yokota, K.; Tuah Jaya, H.; Tunggal, D. Brain-Controlled for Changing Modular Robot Configuration by Employing Neurosky’s Headset. (IJACSA) Int. J. Adv. Comput. Sci. Appl. 2019, 10, 114–120.

- Ai, Q.; Chen, A.; Chen, K.; Liu, Q.; Zhou, T.; Xin, S.; Ji, Z. Feature Extraction of Four-Class Motor Imagery EEG Signals Based on Functional Brain Network. J. Neural Eng. 2019, 16, 026032.

- Jafarifarmand, A.; Badamchizadeh, M.A. EEG Artifacts Handling in a Real Practical Brain-Computer Interface Controlled Vehicle. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1200–1208.

- Andreu-Perez, J.; Cao, F.; Hagras, H.; Yang, G.Z. A Self-Adaptive Online Brain-Machine Interface of a Humanoid Robot Through a General Type-2 Fuzzy Inference System. IEEE Trans. Fuzzy Syst. 2018, 26, 101–116.

- Romero-Laiseca, M.A.; Delisle-Rodriguez, D.; Cardoso, V.; Gurve, D.; Loterio, F.; Posses Nascimento, J.H.; Krishnan, S.; Frizera-Neto, A.; Bastos-Filho, T. A Low-Cost Lower-Limb Brain-Machine Interface Triggered by Pedaling Motor Imagery for Post-Stroke Patients Rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 988–996.

- Junwei, L.; Ramkumar, S.; Emayavaramban, G.; Vinod, D.F.; Thilagaraj, M.; Muneeswaran, V.; Pallikonda Rajasekaran, M.; Venkataraman, V.; Hussein, A.F. Brain Computer Interface for Neurodegenerative Person Using Electroencephalogram. IEEE Access 2019, 7, 2439–2452.

- Kobayashi, N.; Nakagawa, M. BCI-Based Control of Electric Wheelchair Using Fractal Characteristics of EEG. IEEJ Trans. Electr. Electron. Eng. 2018, 13, 1795–1803.

- Ji, Z.; Liu, Q.; Xu, W.; Yao, B.; Liu, J.; Zhou, Z. A Closed-Loop Brain-Computer Interface with Augmented Reality Feedback for Industrial Human-Robot Collaboration. Int. J. Adv. Manuf. Technol. 2021, 116, 1–16.

- Li, R.; Zhang, X.; Lu, Z.; Liu, C.; Li, H.; Sheng, W.; Odekhe, R. An Approach for Brain-Controlled Prostheses Based on a Facial Expression Paradigm. Front. Neurosci. 2018, 12, 1–15.

- Banach, K.; Małecki, M.; Rosół, M.; Broniec, A. Brain-Computer Interface for Electric Wheelchair Based on Alpha Waves of EEG Signal. Bio-Algorithms Med-Syst. 2021, 17, 165–172.

- Alhakeem, Z.M.; Ali, R.S.; Abd-Alhameed, R.A. Wheelchair Free Hands Navigation Using Robust DWT-AR Features Extraction Method with Muscle Brain Signals. IEEE Access 2020, 8, 64266–64277.

- Wankhade, M.M.; Chorage, S.S. An Empirical Survey of Electroencephalography-Based Brain-Computer Interfaces. Bio-Algorithms Med-Syst. 2020, 16, 20190053.

- Ortiz, M.; Ferrero, L.; Iáñez, E.; Azorín, J.M.; Contreras-Vidal, J.L. Sensory Integration in Human Movement: A New Brain-Machine Interface Based on Gamma Band and Attention Level for Controlling a Lower-Limb Exoskeleton. Front. Bioeng. Biotechnol. 2020, 8, 735.

- Tang, X.; Li, W.; Li, X.; Ma, W.; Dang, X. Motor Imagery EEG Recognition Based on Conditional Optimization Empirical Mode Decomposition and Multi-Scale Convolutional Neural Network. Expert Syst. Appl. 2020, 149, 113285.

- Wang, Z.; Zhou, Y.; Chen, L.; Gu, B.; Liu, S.; Xu, M.; Qi, H.; He, F.; Ming, D. A BCI Based Visual-Haptic Neurofeedback Training Improves Cortical Activations and Classification Performance during Motor Imagery. J. Neural Eng. 2019, 16, 066012.

- Liu, Y.; Su, W.; Li, Z.; Shi, G.; Chu, X.; Kang, Y.; Shang, W. Motor-Imagery-Based Teleoperation of a Dual-Arm Robot Performing Manipulation Tasks. IEEE Trans. Cogn. Dev. Syst. 2019, 11, 414–424.

- Li, Z.; Yuan, Y.; Luo, L.; Su, W.; Zhao, K.; Xu, C.; Huang, J.; Pi, M. Hybrid Brain/Muscle Signals Powered Wearable Walking Exoskeleton Enhancing Motor Ability in Climbing Stairs Activity. IEEE Trans. Med. Robot. Bionics 2019, 1, 218–227.

- Menon, V.G.; Jacob, S.; Joseph, S.; Almagrabi, A.O. SDN-Powered Humanoid with Edge Computing for Assisting Paralyzed Patients. IEEE Internet Things J. 2020, 7, 5874–5881.

- Li, J.; Li, Z.; Feng, Y.; Liu, Y.; Shi, G. Development of a Human-Robot Hybrid Intelligent System Based on Brain Teleoperation and Deep Learning SLAM. IEEE Trans. Autom. Sci. Eng. 2019, 16, 1664–1674.

- Gandhi, V.; Prasad, G.; Coyle, D.; Behera, L.; McGinnity, T.M. EEG-Based Mobile Robot Control through an Adaptive Brain-Robot Interface. IEEE Trans. Syst. Man Cybern. Syst. 2014, 44, 1278–1285.

- Leeb, R.; Tonin, L.; Rohm, M.; Desideri, L.; Carlson, T.; Millán, J.D.R. Towards Independence: A BCI Telepresence Robot for People with Severe Motor Disabilities. Proc. IEEE 2015, 103, 969–982.

- Li, T.; Hong, J.; Zhang, J.; Guo, F. Brain-Machine Interface Control of a Manipulator Using Small-World Neural Network and Shared Control Strategy. J. Neurosci. Methods 2014, 224, 26–38.

- Millán, J.d.R.; Mouriño, J. Asynchronous BCI and Local Neural Classifiers: An Overview of the Adaptive Brain Interface Project. IEEE Trans. Neural Syst. Rehabil. Eng. 2003, 11, 159–161.

- Kim, Y.J.; Nam, H.S.; Lee, W.H.; Seo, H.G.; Leigh, J.H.; Oh, B.M.; Bang, M.S.; Kim, S. Vision-Aided Brain-Machine Interface Training System for Robotic Arm Control and Clinical Application on Two Patients with Cervical Spinal Cord Injury. BioMedical Eng. Online 2019, 18, 14.

- Frisoli, A.; Loconsole, C.; Leonardis, D.; Bannò, F.; Barsotti, M.; Chisari, C.; Bergamasco, M. A New Gaze-BCI-Driven Control of an Upper Limb Exoskeleton for Rehabilitation in Real-World Tasks. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2012, 42, 1169–1179.

- Zhang, R.; Li, Y.; Yan, Y.; Zhang, H.; Wu, S.; Yu, T.; Gu, Z. Control of a Wheelchair in an Indoor Environment Based on a Brain-Computer Interface and Automated Navigation. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 24, 128–139.

- Bhattacharyya, S.; Konar, A.; Tibarewala, D.N. Motor Imagery and Error Related Potential Induced Position Control of a Robotic Arm. IEEE/CAA J. Autom. Sin. 2017, 4, 639–650.

- Chae, Y.; Jeong, J.; Jo, S. Toward Brain-Actuated Humanoid Robots: Asynchronous Direct Control Using an EEG-Based BCI. IEEE Trans. Robot. 2012, 28, 1131–1144.

- Hortal, E.; Planelles, D.; Costa, A.; Iáñez, E.; Úbeda, A.; Azorín, J.M.; Fernández, E. SVM-Based Brain-Machine Interface for Controlling a Robot Arm through Four Mental Tasks. Neurocomputing 2015, 151, 116–121.

- Do, A.H.; Wang, P.T.; King, C.E.; Chun, S.N.; Nenadic, Z. Brain-Computer Interface Controlled Robotic Gait Orthosis. J. Neuroeng. Rehabil. 2013, 10, 111.

- Hong, K.S.; Khan, M.J.; Hong, M.J. Feature Extraction and Classification Methods for Hybrid FNIRS-EEG Brain-Computer Interfaces. Front. Hum. Neurosci. 2018, 12, 246.
