
Please note this is a comparison between Version 1 by Shiwei Lin and Version 2 by Vivi Li.

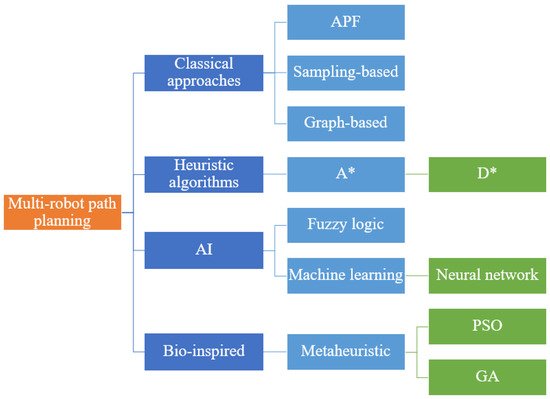

Numerous path-planning studies have been conducted in past decades due to the challenges of obtaining optimal solutions. The multi-robot path-planning approaches have been classified as classical approaches, heuristic algorithms, bio-inspired techniques, and artificial intelligence approaches. Bio-inspired techniques are the most employed approaches, and artificial intelligence approaches have gained more attention recently.

- multi-robot path planning

- bio-inspired algorithms

- robots

1. Introduction

Robot applications have been widely implemented in various areas, including industry [1], agriculture [2], surveillance [3], search and rescue [4], environmental monitoring [5], and traffic control [6]. A robot is referred to as an artificial intelligence system that integrates microelectronics, communication, computer science, and optics [7]. Due to the development of robotics technology, mobile robots have been utilized in different environments, such as Unmanned Aerial Vehicles (UAVs) for aerospace, Automated Guided Vehicles (AGVs) for production, Unmanned Surface Vessels (USVs) for water space, and Autonomous Underwater Vehicles (AUVs) for underwater.

Employing a set of vehicles cooperatively and simultaneously to perform tasks has gained interest due to increased demand. Multiple robots can execute tasks in parallel and cover larger areas. The system continues working even with the failure of one robot [8], and it has the advantages of robustness, flexibility, scalability, and spatial distribution [9]. In a multi-robot system, each robot has its own coordinates and independent behavior, and the system can model the cooperative behavior of real-life situations [10]. For reliable operation of the robot, the robotics system must address the path/motion planning problem. Path planning aims to find a collision-free path from the source to the target destination.

Path planning is an NP-hard optimization problem with multiple objectives, and its solutions are described as polynomially verifiable [11]. The robots are expected to accomplish their tasks in the post-design stage with higher reliability, higher speed, and lower energy consumption [12]. Task allocation, obstacle avoidance, collision-free execution, and time windows are considered [13]. Multi-robot path planning has high computational complexity, which results in a lack of complete algorithms that offer both solution optimality and computational efficiency [14].

Substantial optimality criteria have been considered in path planning, such as the rendezvous and operation time, path length, velocity smoothness, safety margin, and heading profiles for generating optimal paths [15]. During missions, the robot systems have limitations, such as limited communication with the center or other robots, stringent nonholonomic mission constraints, and limited mission length because of weight, size, and fuel constraints [16]. The planned path must be a smooth curve due to nonholonomic motion constraints and support kinematic constraints with geometric continuity. Furthermore, the path’s continuity is significant for collaborative transport [17].

Path-planning approaches can be divided into offline and real-time implementations. Offline generation of a multi-robot path cannot exploit cooperative abilities, as there is little or no interaction between robots, so the multi-robot system cannot ensure that the robots move along a predefined path or formation [18]. Real-time systems have been proposed to overcome the problems created by offline path generation, and these can maximize the efficiency of algorithms. The chart of offline/real-time implementation included in the literature review is exhibited in the discussion section.

Decision-making strategies can be classified as centralized and decentralized approaches. The centralized system has a central decision-maker, and thus its degree of cooperation is higher than in the decentralized approach. All robots are treated as one entity in the high-dimensional configuration space [19]. A central planner assigns tasks and plans schedules for each robot, and the robots start operation after the planning is complete [20]. The centralized structure places no restriction on the algorithms used because the centralized system has better global support for robots.

However, the decentralized approach is more widely employed in real-time implementation. Decentralized methods are typical for vehicle autonomy and distributed computation [21]. In these methods, the robots communicate and interact with each other and have higher degrees of flexibility, robustness, and scalability, thereby supporting dynamic changes. The robots execute the computations themselves and produce suboptimal solutions [20]. The decentralized approach includes task planning and motion planning, and it reduces computational complexity by limiting the shared information [22].

Many surveys have been conducted on mobile robot path planning strategies [23][24][25]; however, these papers only focus on single robot navigation without cooperative planning. This entry's motivation is to introduce the state-of-the-art path-planning algorithms of multi-robot systems and provide an analysis of multi-robot decision-making strategies, considering real-time performance. This entry investigates not only 2D or ground path planning but also the 3D environment.

2. Multi-Robot Path Planning Approaches

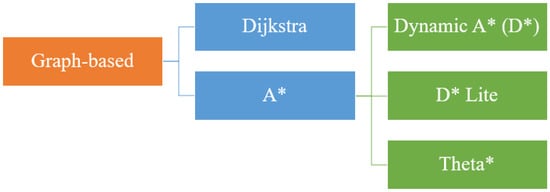

Figure 1 presents the classification of multi-robot path-planning algorithms, which is divided into three categories: classical approaches, heuristic algorithms, and artificial intelligence (AI)-based approaches. The subcategories are linked to the primary categories, and only the significant subcategories are displayed. The classical approaches include the artificial potential field, sampling-based, and graph-based approaches. The heuristic algorithms mainly consist of the A* and D* search algorithms. The AI-based approaches are the most common algorithms for multi-robot systems, and the bio-inspired approaches receive most of the attention. Metaheuristics have been applied in most of the research, and the best-known algorithms are PSO and GA. According to [26], GA and PSO are the most commonly used approaches.

Figure 1.

Classification of multi-robot path planning approaches.

2.1. Classical Approaches

2.1.1. Artificial Potential Field (APF)

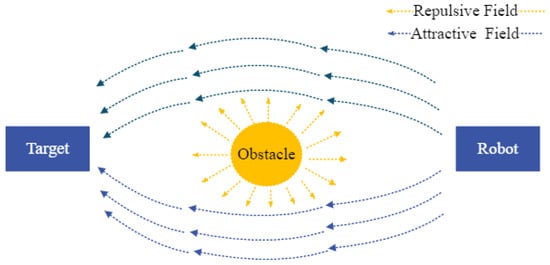

The APF plans a path using a control force, which is the sum of the attractive and the repulsive potential fields. The illustration of APF is shown in Figure 2; the blue force indicates the attractive field, and the yellow force represents the repulsive field. The APF establishes path-planning optimization and dynamic particle models, and an additional control force updates the APF for multi-robot formation in a realistic and known environment [27]. Another APF-based approach is presented for a multi-robot system in a warehouse.

Figure 2.

Illustration of the APF algorithm.
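The force summation described above can be sketched in a few lines of Python. This is a minimal illustration for a single point robot in 2D, not the method of any cited work; the function names `apf_force` and `apf_path`, the gains `k_att` and `k_rep`, and the influence radius `d0` are assumptions chosen for the example.

```python
import math

def apf_force(pos, goal, obstacles, k_att=1.0, k_rep=100.0, d0=2.0):
    """Return the control force at pos: attractive force plus repulsive forces.

    obstacles is a list of (x, y) points; k_att, k_rep, d0 are assumed gains.
    """
    # Attractive force: pulls the robot linearly towards the goal.
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        if 0.0 < d < d0:
            # Repulsive force: acts only inside radius d0 and grows near the obstacle.
            mag = k_rep * (1.0 / d - 1.0 / d0) / d ** 2
            fx += mag * dx / d
            fy += mag * dy / d
    return fx, fy

def apf_path(start, goal, obstacles, step=0.05, tol=0.1, max_iter=2000):
    """Follow the force direction in fixed-size steps until close to the goal."""
    pos = start
    path = [pos]
    for _ in range(max_iter):
        if math.dist(pos, goal) < tol:
            break
        fx, fy = apf_force(pos, goal, obstacles)
        norm = math.hypot(fx, fy)
        if norm == 0.0:
            break  # zero net force: the robot is stuck in a local minimum
        pos = (pos[0] + step * fx / norm, pos[1] + step * fy / norm)
        path.append(pos)
    return path
```

The early exit on zero net force reflects a well-known limitation of potential fields: the attractive and repulsive terms can cancel away from the goal, leaving the robot in a local minimum.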

2.1.2. Sampling-Based

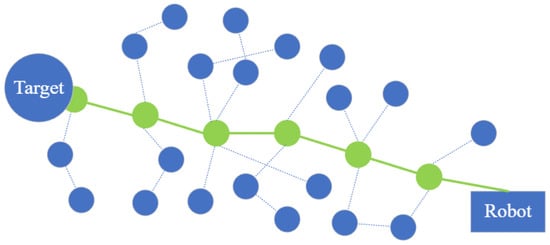

The rapidly exploring random tree (RRT) searches high-dimensional and nonconvex spaces by randomly growing a space-filling tree, and the tree is built incrementally from samples so that it grows towards unreached areas. The sampling-based approach’s outline is demonstrated in Figure 3, and the generated path is highlighted in green. For a multi-robot centralized approach, multi-robot path-planning RRT performs better in optimizing the solution and exploring the search space in an urban environment than push and rotate, push and swap, and the Bibox algorithm [35]. The discrete-RRT extends the celebrated RRT algorithm to discrete graphs with a speedy exploration of the high-dimensional space of implicit roadmaps [36].

Figure 3.

Demonstration of the RRT algorithm.
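The incremental tree growth can be illustrated with a basic single-robot RRT in 2D. The sketch below is a simplified assumption-laden example, not the multi-robot or discrete variant from [35] or [36]: obstacles are circles given as `(x, y, radius)`, only new nodes (not whole edges) are checked for collision, and the 10% goal bias, `step`, and `goal_tol` values are arbitrary choices.

```python
import math
import random

def rrt(start, goal, obstacles, bounds=(0.0, 10.0), step=0.5,
        goal_tol=0.5, max_iter=5000, seed=0):
    """Grow a tree from start; return a list of points to the goal region, or None."""
    rng = random.Random(seed)
    nodes = [start]
    parent = {0: None}  # index of each node's parent in the tree

    def is_free(p):
        # A point is free if it lies outside every circular obstacle.
        return all(math.dist(p, (ox, oy)) > r for ox, oy, r in obstacles)

    for _ in range(max_iter):
        # Sample a random point, occasionally sampling the goal itself.
        if rng.random() < 0.1:
            sample = goal
        else:
            sample = (rng.uniform(*bounds), rng.uniform(*bounds))
        # Find the tree node nearest to the sample.
        i = min(range(len(nodes)), key=lambda k: math.dist(nodes[k], sample))
        near = nodes[i]
        d = math.dist(near, sample)
        if d == 0.0:
            continue
        # Extend from the nearest node towards the sample by at most one step.
        t = min(step, d) / d
        new = (near[0] + t * (sample[0] - near[0]),
               near[1] + t * (sample[1] - near[1]))
        if not is_free(new):
            continue
        nodes.append(new)
        parent[len(nodes) - 1] = i
        if math.dist(new, goal) <= goal_tol:
            # Walk back through the parents to recover the path.
            path, k = [], len(nodes) - 1
            while k is not None:
                path.append(nodes[k])
                k = parent[k]
            return path[::-1]
    return None
```

The returned path is feasible but generally not the shortest one; optimizing variants refine the tree after the first solution is found.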

2.2. Heuristic Algorithms

2.2.1. A* Search

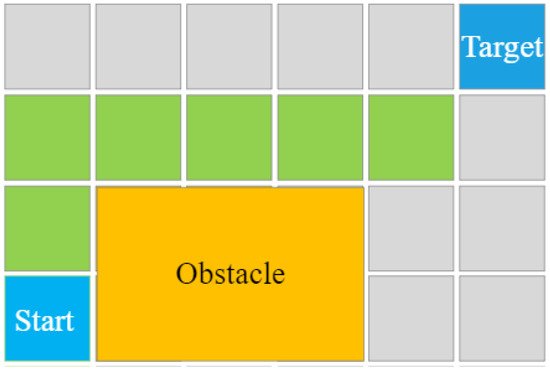

The A* search algorithm is one of the most common heuristic algorithms in path planning. Figure 4 shows a simple example of the grid-based A* algorithm, and the path is highlighted in green. It uses the heuristic cost to determine the optimal path on the map. The relaxed-A* is used to provide an optimal initial path and fast computation, and Bezier-splines are used for continuous path planning to optimize and control the curvature of the path and restrict the acceleration and velocity [17].

Figure 4.

Simple example of the A* algorithm.
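A grid-based A* search of the kind shown in the figure can be sketched as follows. This is a minimal single-robot example under stated assumptions: the grid is a list of rows where `0` is free and `1` is blocked, moves are 4-connected with unit cost, and the Manhattan distance is used as the heuristic. The function name `astar` is chosen for illustration only.

```python
import heapq

def astar(grid, start, goal):
    """Return the shortest 4-connected path from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: never overestimates on a unit-cost 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    g = {start: 0}
    parent = {start: None}
    while open_heap:
        _, cost, cur = heapq.heappop(open_heap)
        if cur == goal:
            path = []
            while cur is not None:
                path.append(cur)
                cur = parent[cur]
            return path[::-1]
        if cost > g[cur]:
            continue  # stale heap entry; a cheaper route to cur was already found
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (cur[0] + dr, cur[1] + dc)
            if (0 <= nxt[0] < rows and 0 <= nxt[1] < cols
                    and grid[nxt[0]][nxt[1]] == 0):
                new_cost = cost + 1
                if new_cost < g.get(nxt, float("inf")):
                    g[nxt] = new_cost
                    parent[nxt] = cur
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None
```

Because the heuristic is admissible, the first time the goal is popped from the heap its path is optimal for this cost model.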

2.2.2. Other Heuristic Algorithms

A constructive heuristic approach is presented to perceive multiple regions of interest. It aims to find the robot’s path with minimal cost and cover target regions with heterogeneous multi-robot settings [6]. Conflict-Based Search is proposed for multi-agent path planning in the train routing problem, scheduling multiple vehicles and setting paths [44][53]. For multi-robot transportation, a primal-dual-based heuristic is designed to solve the path planning problem as the multiple heterogeneous asymmetric Hamiltonian path problem in a short time [45][54]. A linear temporal logic formula is applied to solve multi-robot path planning by satisfying a high-level mission specification with Dijkstra’s algorithm in [46][55]. A modified Dijkstra’s algorithm is introduced for robot global path planning without intersections, using a quasi-Newton interior point solver to smooth local paths in tight spaces [47][56]. Moreover, cognitive adaptive optimization is developed with transformed optimization criteria for adaptively offering an accurate approximation of paths in the proposed real-time reactive system; it takes into account the unknown operation area and the nonlinear characteristics of sensors [18]. The Grid Blocking Degree (GBD) is integrated with priority rules for multi-AGV path planning; it generates a conflict-free path for each AGV to handle tasks and updates the path based on real-time traffic congestion, addressing the problem that most multi-AGV path planning relies on offline scheduling [48][57]. Heuristic algorithms, minimization techniques, and linear sum assignment are used in [49][58] for multi-UAV coverage path and task planning with RGB and thermal cameras. Ref. [50][59] designed the extended Angular-Rate-Constrained-Theta* for a multi-agent path-planning approach that maintains a leader–follower formation. Figure 5 displays the overview of the mentioned heuristic algorithms.

The heuristic algorithms are widely used in path planning, and heuristic cost functions are developed to evaluate the paths. The algorithms can provide the complete path in a grid-like map. However, to meet the requirements of flexibility and robustness, bio-inspired algorithms are proposed.

Figure 5.

Search algorithms.

2.3. Bio-Inspired Techniques

2.3.1. Particle Swarm Optimization (PSO)

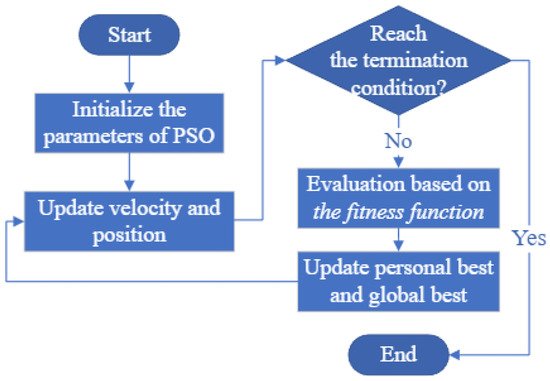

PSO is one of the most common metaheuristic algorithms for multi-robot path planning and formation problems. The flowchart of PSO is shown in Figure 6. It is a stochastic optimization algorithm based on the social behavior of animals, and it obtains global and local search abilities by maintaining a balance between exploitation and exploration [51][60]. Ref. [52][61] presents an interval multi-objective PSO using an ingenious interval update law for updating the global best position and the crowding distance of the risk degree interval for the particle’s local best position. PSO is employed for multiple vehicle path planning to minimize the mission time, and the path planning problem is formulated as a multi-constrained optimization problem [53][62], although the approach has low scalability and execution ability.

Figure 6.

Flowchart of the PSO algorithm.
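The loop in the flowchart can be sketched as a standard global-best PSO. The example below is a generic minimizer, not the interval multi-objective or multi-constrained formulations cited above; the inertia weight `w`, the acceleration coefficients `c1` and `c2`, and the function name `pso` are assumed values and names chosen for illustration.

```python
import random

def pso(cost, dim, bounds, n_particles=30, iters=200,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize cost over a box; return (best_position, best_cost)."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]            # each particle's best position so far
    pbest_cost = [cost(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_cost[i])
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]  # swarm's best so far

    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + pull towards personal best + pull towards global best.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Move and clamp the particle to the search box.
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            c = cost(pos[i])
            if c < pbest_cost[i]:
                pbest[i], pbest_cost[i] = pos[i][:], c
                if c < gbest_cost:
                    gbest, gbest_cost = pos[i][:], c
    return gbest, gbest_cost
```

For path planning, a particle would typically encode the coordinates of intermediate waypoints, and `cost` would combine path length with penalties for collisions or constraint violations; that encoding is left to the caller here.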

2.3.2. Genetic Algorithm (GA)

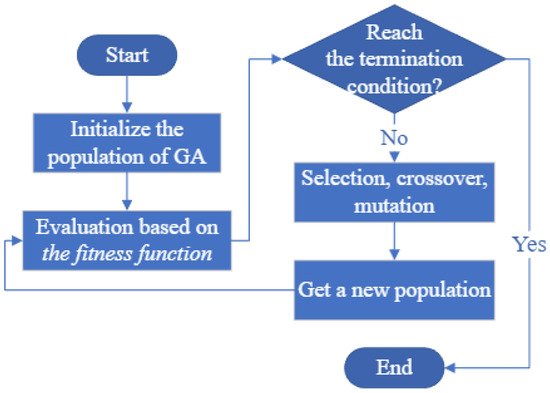

GA is widely utilized for solving optimization problems as an adaptive search technique, and it is based on a genetic reproduction mechanism and natural selection [63][72]. The flowchart of GA is indicated in Figure 7. Ref. [64][73] uses GA and reinforcement learning techniques for multi-UAV path planning, considering the number of vehicles and the response time, together with a heuristic allocation algorithm for ground vehicles. GA solves the Multiple Traveling Salesperson problem with a stop criterion and a cost function of Euclidean distance, and Dubins curves achieve geometric continuity, although the proposed algorithm cannot avoid inter-robot collisions or support online implementation [16].

Figure 7.

Flowchart of the GA algorithm.
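The selection, crossover, and mutation cycle in the flowchart can be sketched for a single-robot visiting-order problem with Euclidean cost. This is a simplified illustration, not the algorithm of [16] or [64][73]: it handles one closed tour rather than multiple salespersons, and the truncation selection, order crossover, swap mutation, and all parameter values are assumptions.

```python
import math
import random

def tour_length(order, pts):
    """Euclidean length of the closed tour visiting pts in the given order."""
    n = len(order)
    return sum(math.dist(pts[order[i]], pts[order[(i + 1) % n]]) for i in range(n))

def order_crossover(a, b, rng):
    """Copy a slice from parent a, fill the rest in the order found in parent b."""
    i, j = sorted(rng.sample(range(len(a)), 2))
    middle = a[i:j + 1]
    rest = [c for c in b if c not in middle]
    return rest[:i] + middle + rest[i:]

def ga_tour(pts, pop_size=60, gens=200, mut_rate=0.2, seed=0):
    """Return (best_order, best_length) found by a simple elitist GA."""
    rng = random.Random(seed)
    n = len(pts)
    pop = [rng.sample(range(n), n) for _ in range(pop_size)]
    for _ in range(gens):
        # Selection: keep the best fifth of the population as parents (elitism).
        pop.sort(key=lambda o: tour_length(o, pts))
        elite = pop[:pop_size // 5]
        children = elite[:]
        while len(children) < pop_size:
            p1, p2 = rng.sample(elite, 2)
            child = order_crossover(p1, p2, rng)
            if rng.random() < mut_rate:
                # Mutation: swap two positions in the visiting order.
                i, j = rng.sample(range(n), 2)
                child[i], child[j] = child[j], child[i]
            children.append(child)
        pop = children
    best = min(pop, key=lambda o: tour_length(o, pts))
    return best, tour_length(best, pts)
```

Because the elite individuals are carried over unchanged, the best tour length never gets worse from one generation to the next.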

2.3.3. Ant Colony Optimization (ACO)

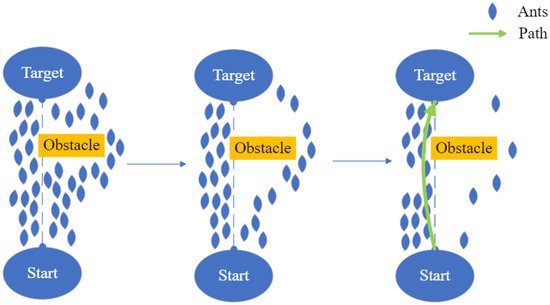

Ants move along paths and avoid obstacles, marking available paths with pheromone, and ACO treats the path with the most pheromone as the optimal path. The principle of ACO is demonstrated in Figure 8, where the path with the most pheromone is defined as the optimal path and marked in green. For collision-free routing and job-shop scheduling problems, an improved ant colony algorithm is enhanced by multi-objective programming for a multi-AGV system [75][84].

Figure 8.

Changes of the ACO algorithm with different timeslots.
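The pheromone mechanism can be sketched on a small weighted graph. The example below is a basic ant-system variant for a single source and target, not the improved multi-AGV algorithm of [75][84]; the graph format (a dict of dicts of edge lengths), the function name, and the parameters `alpha`, `beta`, `rho`, and `q` are assumptions for illustration.

```python
import random

def aco_shortest_path(graph, source, target, n_ants=10, iters=50,
                      alpha=1.0, beta=2.0, rho=0.5, q=1.0, seed=0):
    """Return (best_path, best_length) found by the ants, or (None, inf)."""
    rng = random.Random(seed)
    # Start with the same pheromone level on every directed edge.
    tau = {(u, v): 1.0 for u in graph for v in graph[u]}
    best_path, best_len = None, float("inf")
    for _ in range(iters):
        tours = []
        for _ in range(n_ants):
            path, length, node = [source], 0.0, source
            while node != target:
                options = [v for v in graph[node] if v not in path]
                if not options:
                    break  # dead end: this ant gives up
                # Prefer edges with more pheromone and shorter length.
                weights = [tau[(node, v)] ** alpha * (1.0 / graph[node][v]) ** beta
                           for v in options]
                nxt = rng.choices(options, weights=weights)[0]
                length += graph[node][nxt]
                path.append(nxt)
                node = nxt
            if node == target:
                tours.append((path, length))
                if length < best_len:
                    best_path, best_len = path, length
        # Evaporation: all trails fade by a factor (1 - rho).
        for edge in tau:
            tau[edge] *= 1.0 - rho
        # Deposit: shorter tours leave more pheromone on their edges.
        for path, length in tours:
            for u, v in zip(path, path[1:]):
                tau[(u, v)] += q / length
    return best_path, best_len
```

Over the iterations, evaporation weakens rarely used edges while deposits reinforce short routes, so later ants concentrate on the better path.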

2.3.4. Pigeon-Inspired Optimization (PIO)

PIO is inspired by the navigation tools of pigeons, and it uses two operators for evaluating the solutions. Social-class PIO, inspired by the inherent social-class character of pigeons, is proposed to improve the performance and convergence capabilities of standard PIO [79][88], and it is combined with time stamp segmentation for multi-UAV path planning. Ref. [80][89] analyzes and compares the changing trends of the fitness values of the local and global optimum positions to improve the PIO algorithm into a Cauchy mutant PIO method; the plateau topography, the wind field, and the control constraints of UAVs are modeled for a cooperative strategy and better robustness.

2.3.5. Grey Wolf Optimizer (GWO)

GWO is inspired by the hunting behavior and leadership of grey wolves, and it obtains solutions by searching for, encircling, and attacking prey. An improved grey wolf optimizer is employed for the multi-constraint objective optimization model for multi-UAV collaboration under a confrontation environment. It considers fuel consumption, space, and time [81][90]. The improvements of the grey wolf optimizer are individual position updating, population initialization, and decay factor updating. An improved hybrid grey wolf optimizer is proposed with a whale optimizer algorithm in a leader–follower formation, and it fuses a dynamic window approach to avoid dynamic obstacles [82][91]. The leader–follower formation controls the followers to track their virtual robots based on the leader’s position and considers the maximum angular and linear speed of the robots. Ref. [83][92] proposed a hybrid discrete GWO to overcome the weakness of traditional GWO; it updates the grey wolf position vector to gain solution diversity with faster convergence in discrete domains for multi-UAV path planning, using greedy algorithms and integer coding to convert between the discrete problem space and the grey wolf space.

2.4. Artificial Intelligence

2.4.1. Fuzzy Logic

Fuzzy logic uses the principle of “degree of truth” for computing the solutions. It can be applied to control the robot without a mathematical model, but it cannot predict stochastic uncertainty in advance. As a result, a probabilistic neuro-fuzzy model is proposed with two fuzzy level controllers and an adaptive neuro-fuzzy inference system for multi-robot path planning and for eliminating the stochastic uncertainties with leader–follower coordination [84][104]. In [5], the fuzzy C-means or K-means methods filter and sort the camera location points, and A* is then used as a path optimization process for the multi-UAV traveling salesman problem. For collision avoidance and autonomous mobile robot navigation in an unknown environment, fuzzy-wind-driven optimization and a singleton type-1 fuzzy logic system controller are hybridized in [85][105]. The wind-driven optimization algorithm optimizes the function parameters for the fuzzy controller, and the controller controls the motion velocity of the robot by interpreting sensory data. Ref. [86][106] proposed a reverse auction-based method and a fuzzy-based optimum path planning for multi-robot task allocation with the lowest path cost.

2.4.2. Machine Learning

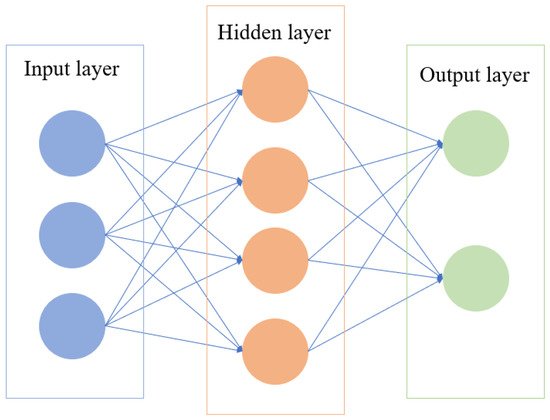

Machine learning simulates learning behavior to obtain solutions. It is used for path planning, embracing mobile computing, hyperspectral sensing, and rapid telecommunication for a rapid agent-based robust system [87][107]. Kernel smoothing techniques, reinforcement learning, and a neural network are integrated to select greedy actions for multi-agent path planning in an unknown environment [10], overcoming the shortcomings of traditional reinforcement learning, such as high time consumption, slow learning speed, and the inability to learn in an unknown environment. The self-organizing neural network has self-learning abilities and competitive characteristics for the multi-robot system’s path planning and task assignment. Ref. [88][108] combined it with the Glasius bio-inspired neural network for obstacle avoidance and speed jump, although environment changes have not been considered in this approach. The biologically inspired self-organizing map is combined with a velocity synthesis algorithm for multi-robot path planning and task assignment. The self-organizing neural network supports a set of robots in reaching multiple target locations and avoiding obstacles autonomously, updating the weights of the winner by the neurodynamic model [89][109]. Convolutional neural networks analyze image information to find the exact situation in the environment, and deep Q-learning achieves robot navigation in a novel multi-robot path-planning algorithm [90][110]. This algorithm learns the mutual influence of robots to compensate for the drawback of conventional path-planning algorithms. In an unknown environment, a bio-inspired neural network is developed with the negotiation method, and each neuron has a one-to-one correspondence with a position on the grid map [91][111].

A biologically inspired neural network map is presented for task assignment and path planning; it is used to calculate the activity values of robots in the maps of each target and select the winner with the highest activity value, and path planning is then performed [92][112]. A simple neural network diagram is exhibited in Figure 9.

Figure 9.

Diagram of a three-layer neural network.
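The three-layer structure in the diagram (input, hidden, and output layers) can be illustrated with a plain forward pass. This is a generic sketch and does not reproduce any of the cited networks; the sigmoid activation, the function names, and the weight layout (one row of weights per neuron) are assumptions.

```python
import math

def sigmoid(x):
    """Logistic activation, mapping any real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs, weights, biases):
    """One fully connected layer: weighted sum plus bias, then sigmoid, per neuron."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Propagate the input through the hidden layer and then the output layer."""
    hidden = layer(x, w_hidden, b_hidden)
    return layer(hidden, w_out, b_out)
```

In a path-planning setting, the inputs might be sensor readings or grid features and the outputs action scores; training the weights is outside the scope of this sketch.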