
Please note this is a comparison between Version 2 by Rita Xu and Version 1 by Kaile Du.

Given a language query, a Detection-based Vision-Language Understanding (DVLU) system needs to respond based on the detected regions (i.e., bounding boxes). With significant advances in object detection, DVLU tasks such as Visual Question Answering (VQA) and Visual Grounding (VG) have improved greatly in recent years.

- detection-based vision-language understanding

- region collaborative network

- non-local network

1. Introduction

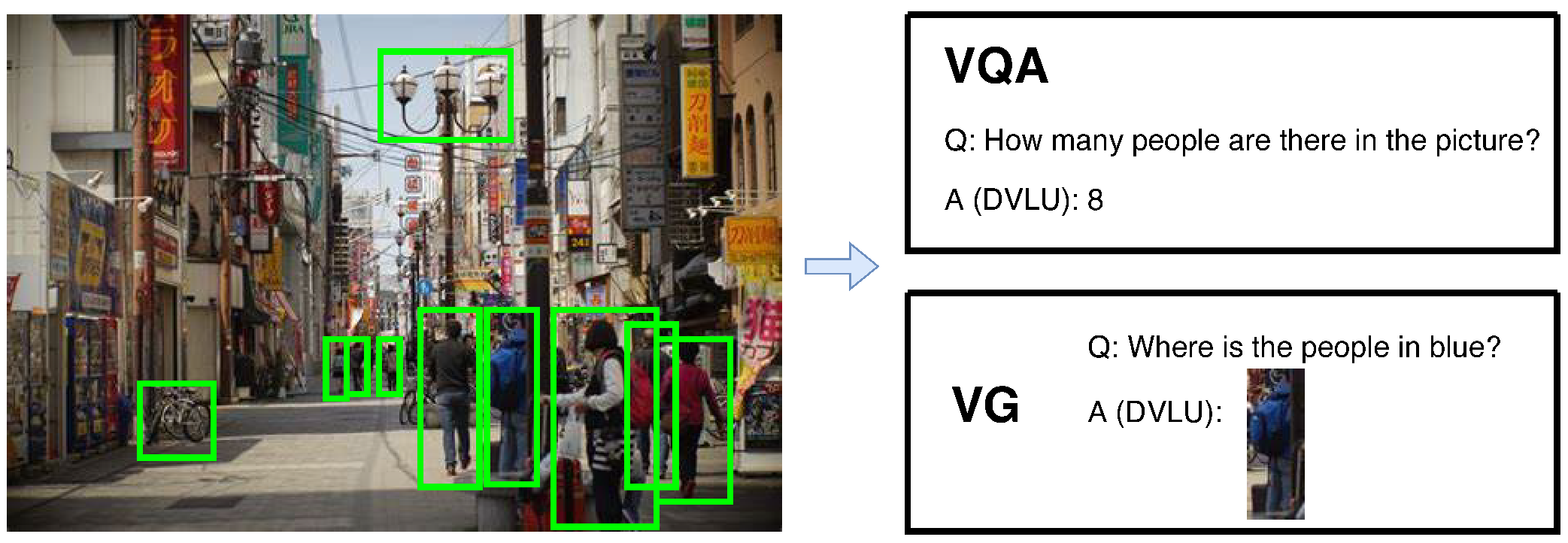

Visual object detection has been studied for years and has been applied in many areas, such as smart cities, robot vision, consumer electronics, security, autonomous driving, human–computer interaction, content-based image retrieval, intelligent video surveillance, and augmented reality [1,2,3]. Recently, many researchers have found that vision-language understanding tasks built on detected regions, referred to as Detection-based Vision-Language Understanding (DVLU), can outperform many traditional vision-language understanding methods that directly use pixel-level image information [4,5,6,7]. For instance, the use of detected regions is key to the success of the winning entries in the Visual Question Answering (VQA) challenge [8,9,10,11,12]. Moreover, the success of visual object detection has given rise to many new DVLU tasks, such as Visual Grounding [13,14,15,16,17], Visual Relationship Detection [18,19], and Scene Graph Generation [20,21,22,23,24]. With significant advancements in object detection, DVLU and its related tasks have attracted much attention in recent years. As shown in Figure 1, in a smart city, a surveillance camera may capture a picture of a crowded street, and a DVLU system can answer a human's question about it. For example, given the query “How many people are in the picture?”, a DVLU system can provide the correct answer from the detected regions.

Figure 1. A DVLU system in a smart city. VQA answers in natural language, whereas VG answers with a grounded region.

A DVLU task is defined as follows: given several detected regions (hereinafter, "regions" refers to detected regions or bounding boxes for simplicity) from a natural image, a model automatically recognizes the semantic patterns or concepts in the image. Specifically, a DVLU model first receives region inputs from an off-the-shelf object detection model (detector). With a well-designed model (e.g., a deep neural network or DNN), these detected regions can be treated as finer-grained features or bottom-up attention [6] over the original image. Each region is then fed to the DVLU model as a piece of meaningful information that helps understand the image at a high level. Unlike conventional image understanding, DVLU is based on multiple detected or labeled regions, so it receives more fine-grained information than non-region-based image understanding.
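To make this data flow concrete, the following Python sketch shows one way detector output could be turned into DVLU inputs. The `Region` container, the `regions_to_inputs` helper, the 0.2 score threshold, the cap of 36 regions, and the 2048-dimensional features are all illustrative assumptions, not details taken from this entry.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Region:
    """One detected region (hypothetical container, not from the source)."""
    box: tuple            # (x1, y1, x2, y2) in pixels
    label: str            # detector class name
    score: float          # detector confidence in [0, 1]
    feature: np.ndarray   # pooled appearance feature, shape (D,)


def regions_to_inputs(regions, score_thresh=0.2, max_regions=36):
    """Keep confident detections and stack them into DVLU model inputs.

    Returns (features, boxes) with shapes (N, D) and (N, 4), sorted by score.
    score_thresh and max_regions are assumed, illustrative defaults.
    """
    kept = sorted(
        (r for r in regions if r.score >= score_thresh),
        key=lambda r: r.score,
        reverse=True,
    )[:max_regions]
    if not kept:
        raise ValueError("no region passed the score threshold")
    features = np.stack([r.feature for r in kept])
    boxes = np.array([r.box for r in kept], dtype=np.float32)
    return features, boxes


# Usage: three fake detections with random 2048-d features.
rng = np.random.default_rng(0)
detections = [
    Region((10, 20, 50, 80), "person", 0.9, rng.standard_normal(2048)),
    Region((0, 0, 5, 5), "sign", 0.1, rng.standard_normal(2048)),
    Region((60, 20, 90, 85), "person", 0.7, rng.standard_normal(2048)),
]
features, boxes = regions_to_inputs(detections)
print(features.shape, boxes.shape)  # (2, 2048) (2, 4)
```

Each row of `features` is one region. If a downstream layer then transforms each row separately, no region ever sees any other, which is the per-region processing criticized in the next paragraph.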

Nevertheless, when facing numerous independent detected regions in an image, existing DVLU methods generally ignore the distribution of and relationships among these regions, even though the regions jointly make up a real-world scene. Furthermore, it is insufficient to re-process each region separately for features after detection, ignoring that the regions form an integral whole and discarding their original cooperation. This motivates a new design that processes all regions collaboratively for DVLU, helping a DVLU task make full use of the regions so that they collaborate with and enhance each other.

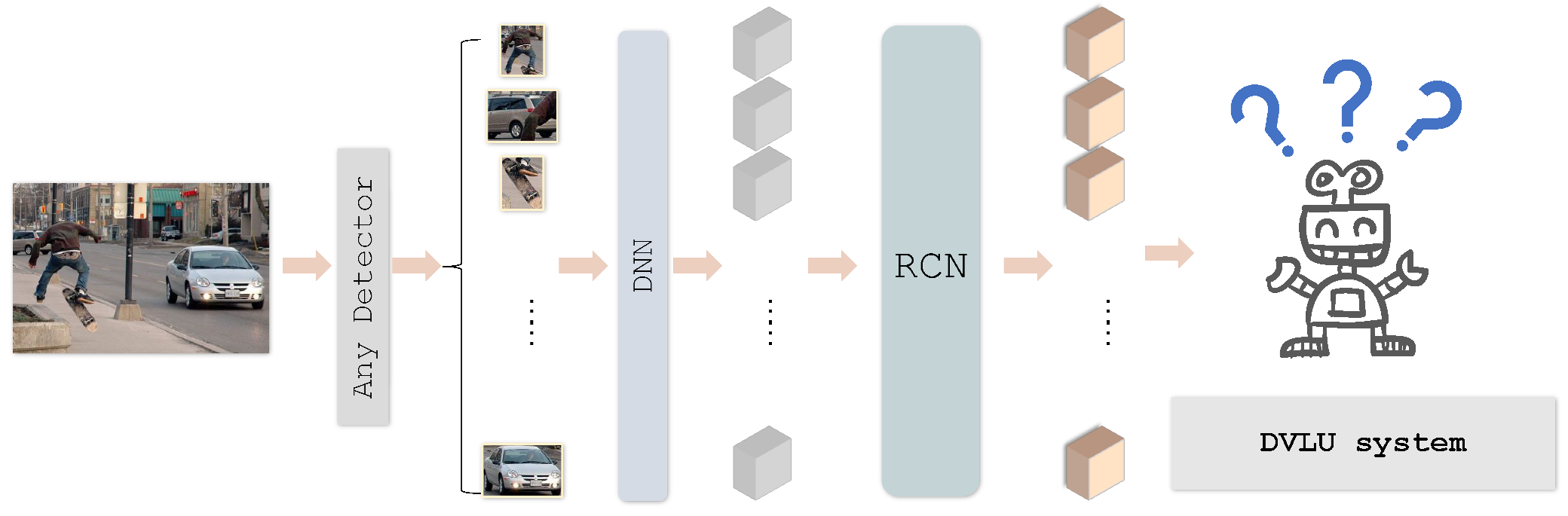

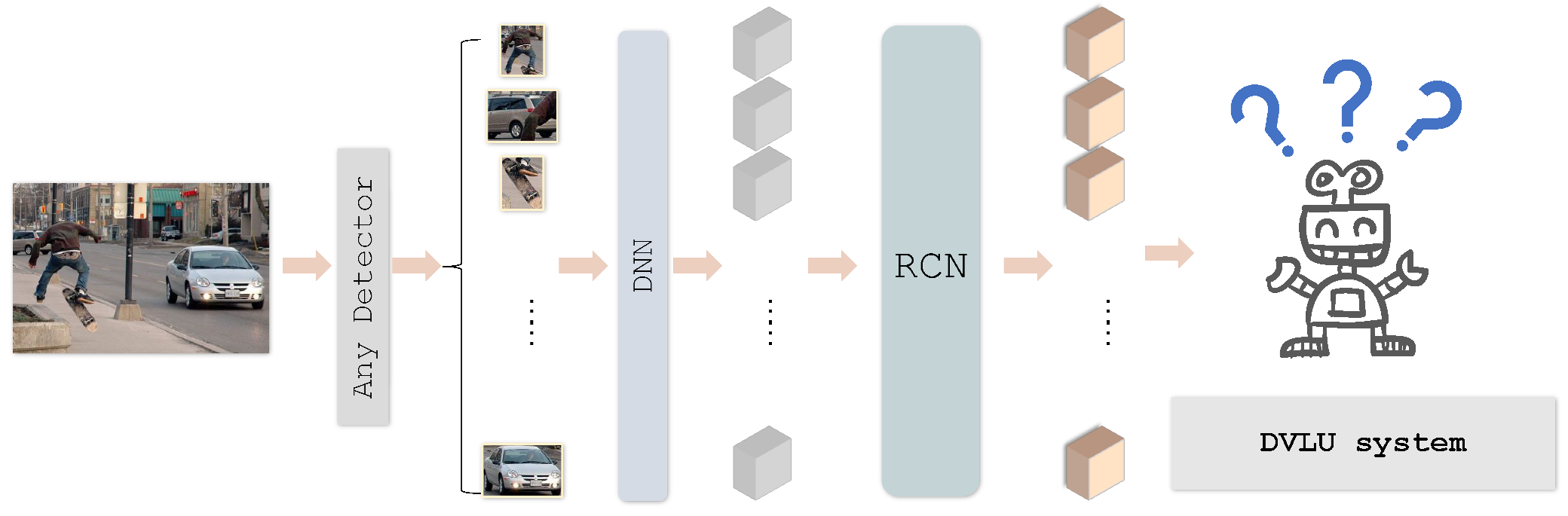

With the proposed Region Collaborative Network (RCN), the feature of each region is enhanced by all other regions in the image. As shown in Figure 2, the RCN block can be easily inserted into a DVLU pipeline because it keeps the feature dimensions consistent. First, the RCN block receives the region features as input. For each region feature, researchers compute the Intra-Region Relation (IntraRR) across positions and channels to achieve self-enhancement. Then, the Inter-Region Relation (InterRR) is applied to all regions across positions and channels. To reduce redundant connections, researchers apply a pooling and sharing strategy relative to IntraRR. Finally, the refined region features are obtained by a linear embedding. In general, an RCN block can easily be added to any DVLU model to process all the regions collaboratively. In the experiments, the proposed RCN is applied to two DVLU tasks, Visual Question Answering and Visual Grounding, and the results show that the RCN block significantly improves performance over the baseline models.

Figure 2. Region Collaborative Network (RCN) can be inserted into a Detection-based Vision-Language Understanding (DVLU) pipeline. Any object detector can be used to generate regions.
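The following NumPy sketch illustrates the kind of computation the RCN description implies: relations inside each region (across positions and across channels), relations between regions on position-pooled descriptors, and a final linear embedding that preserves the input shape. It is a hypothetical, non-local-style reading, not the authors' implementation. The embedded-Gaussian position affinities, the Gram-matrix channel affinities, mean pooling, the reading of "sharing" as reusing the same projection weights in both steps, and the zero-initialized output embedding are all assumptions.

```python
import numpy as np


def softmax(a, axis=-1):
    """Numerically stable softmax along one axis."""
    a = a - a.max(axis=axis, keepdims=True)
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)


class RegionCollabSketch:
    """Hypothetical non-local-style region block; NOT the published RCN.

    Works on region features of shape (N, P, C):
    N regions, P spatial positions per region, C channels.
    """

    def __init__(self, channels, seed=0):
        rng = np.random.default_rng(seed)
        self.scale = 1.0 / np.sqrt(channels)
        # theta / phi / g projections, reused by both relation steps
        # (an assumed reading of the "sharing" strategy).
        self.w_theta = rng.standard_normal((channels, channels)) * self.scale
        self.w_phi = rng.standard_normal((channels, channels)) * self.scale
        self.w_g = rng.standard_normal((channels, channels)) * self.scale
        # Output linear embedding, zero-initialized so the block starts as identity.
        self.w_out = np.zeros((channels, channels))

    def intra_region(self, x):
        """IntraRR-like step: relations inside each region."""
        theta, phi, g = x @ self.w_theta, x @ self.w_phi, x @ self.w_g  # (N, P, C)
        # Position relation: (N, P, P) affinities between positions of one region.
        pos_attn = softmax(theta @ phi.transpose(0, 2, 1) * self.scale)
        pos_out = pos_attn @ g  # (N, P, C)
        # Channel relation: (N, C, C) affinities between channels of one region.
        ch_attn = softmax(x.transpose(0, 2, 1) @ x / x.shape[1])
        ch_out = x @ ch_attn.transpose(0, 2, 1)  # (N, P, C)
        return x + pos_out + ch_out

    def inter_region(self, x):
        """InterRR-like step: relations across regions on pooled descriptors."""
        # Pool positions so the affinity is N x N instead of (N*P) x (N*P).
        pooled = x.mean(axis=1)  # (N, C)
        theta, phi, g = pooled @ self.w_theta, pooled @ self.w_phi, pooled @ self.w_g
        region_attn = softmax(theta @ phi.T * self.scale)  # (N, N)
        context = region_attn @ g  # (N, C)
        return x + context[:, None, :]  # broadcast region context to every position

    def __call__(self, x):
        y = self.inter_region(self.intra_region(x))
        return x + y @ self.w_out  # linear embedding + residual keeps (N, P, C)


# Usage: 3 regions, 2x2 = 4 positions each, 8 channels.
block = RegionCollabSketch(channels=8)
x = np.random.default_rng(1).standard_normal((3, 4, 8))
out = block(x)
print(out.shape)  # (3, 4, 8)
```

Zero-initializing `w_out` makes the untrained block an identity mapping, one simple way to honor the "insert without modifying the original model" property. Once `w_out` is non-zero, perturbing one region changes the output of every other region through the inter-region step.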

2. Detection-Based Vision-Language Understanding

Detection-based Vision-Language Understanding (DVLU) has attracted much attention in recent years owing to significant technological advances in visual object detection [1,25,26,27,28]. With semantically labeled regions, DVLU methods can mine deeper-level information more effectively than methods based only on pixel-level images. DVLU methods have been applied to many high-level vision-language tasks, such as VQA [6,7,13,29,30] and VG [13,31,32]. In VQA, Anderson et al. [6] build an attention model on detected bounding boxes for image captioning and visual question answering. Hinami et al. [33] incorporate the semantic specification of objects and the intuitive specification of spatial relationships for detection-based image retrieval. The up–down model [8] is based on a joint embedding of the input question and image, followed by a multi-label classifier over a set of candidate answers. Pythia [9] demonstrates that subtle but important changes to the model architecture and the learning rate schedule, fine-tuning of image features, and data augmentation can significantly improve the performance of the up–down model. In VG, Deng et al. [13] propose to build relations among regions, language, and pixels using an accumulated attention mechanism. Lyu et al. [32] propose constructing graph relationships among all regions and using a graph neural network to enhance the region features. The model in [34] exploits the reciprocal relation between the referent and its context: each influences the estimation of the posterior distribution of the other, so the search space of the context can be significantly reduced. However, most of these methods do not fully exploit the relationships among regions, and some consider only the relative spatial relations of regions. In general, these methods ignore cross-region interactions, which may isolate each region from the whole scene and hurt performance.
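As a rough illustration of the joint-embedding-plus-multi-label-classifier design attributed to the up–down model [8], the sketch below scores candidate answers from a question vector and region features. The plain tanh projections, element-wise fusion, single-layer attention, random weights, and all dimensions are simplifying assumptions; the published model differs in detail.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def updown_style_scores(q, v, params):
    """Score candidate answers with a simplified joint-embedding VQA head.

    q: (Dq,) question embedding, assumed precomputed (e.g. by a GRU).
    v: (N, Dv) detected-region features.
    params: dict of hypothetical weight matrices (see usage below).
    Returns (A,) independent scores in (0, 1), one per candidate answer.
    """
    # Question-guided (top-down) attention over the bottom-up regions.
    qv = np.concatenate([v, np.tile(q, (v.shape[0], 1))], axis=1)  # (N, Dv + Dq)
    att_logits = np.tanh(qv @ params["W_att"]) @ params["w_att"]   # (N,)
    alpha = np.exp(att_logits - att_logits.max())
    alpha /= alpha.sum()                                           # softmax over regions
    v_hat = alpha @ v                                              # (Dv,) attended feature
    # Joint embedding: project both modalities, then fuse element-wise.
    h = np.tanh(q @ params["W_q"]) * np.tanh(v_hat @ params["W_v"])  # (H,)
    # Multi-label classifier: one sigmoid per candidate answer.
    return sigmoid(h @ params["W_cls"])                            # (A,)


# Usage with random weights: 5 regions, 10 candidate answers.
rng = np.random.default_rng(0)
Dq, Dv, H, A, N = 16, 32, 24, 10, 5
params = {
    "W_att": rng.standard_normal((Dv + Dq, H)) * 0.1,
    "w_att": rng.standard_normal(H) * 0.1,
    "W_q": rng.standard_normal((Dq, H)) * 0.1,
    "W_v": rng.standard_normal((Dv, H)) * 0.1,
    "W_cls": rng.standard_normal((H, A)) * 0.1,
}
scores = updown_style_scores(rng.standard_normal(Dq), rng.standard_normal((N, Dv)), params)
best_answer = int(scores.argmax())
```

Using an independent sigmoid per answer, instead of one softmax, lets several candidate answers be scored as correct at once, which is what "multi-label" refers to here. Note that the attention step weights each region independently against the question; nothing in this head lets regions exchange information with each other.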

3. Region Relation Network

Relationships among objects or regions have been studied for years, in work based on hyperspectral imaging [35,36,37,38] as well as on deep learning [39,40,41,42,43]. One line of work studies explicit relationships between objects. It usually starts by detecting every object pair that has a relationship in a given image [18,19]. Then, by combining detected relationship triplets (⟨subject, relationship, object⟩), an image can be transformed into a scene graph, in which each node represents a region and each directed edge denotes the relationship between two regions [4,20,21,22]. Unfortunately, although this approach relies on a large number of labeled relationships, it remains unclear how to use this structural topology effectively in many end-user tasks. Another line of work focuses on building latent relationships between objects. Zagoruyko and Komodakis [44] explore several neural network architectures that compare image patches. Tseng et al. [45], inspired by the non-local network [46], propose non-local RoI for cross-object perception. This research differs from [45] in that the proposed RCN (1) can be inserted into any DVLU model while keeping dimensions consistent, without modifying the original model; (2) computes Intra-Region and Inter-Region Relations in terms of both spatial positions and channels; and (3) applies to fully connected region features.
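For reference, the generic non-local operation of Wang et al. [46], which inspired [45], can be written as follows for an input $\mathbf{x}$, where $i$ indexes an output position and $j$ enumerates all positions. Reading "positions" as detected regions is an interpretation offered here for illustration, not a formula given in this entry.

```latex
\begin{aligned}
\mathbf{y}_i &= \frac{1}{\mathcal{C}(\mathbf{x})} \sum_{\forall j} f(\mathbf{x}_i, \mathbf{x}_j)\, g(\mathbf{x}_j),
  & \mathcal{C}(\mathbf{x}) &= \sum_{\forall j} f(\mathbf{x}_i, \mathbf{x}_j), \\
f(\mathbf{x}_i, \mathbf{x}_j) &= e^{\theta(\mathbf{x}_i)^{\top} \phi(\mathbf{x}_j)},
  & \theta(\mathbf{x}_i) &= W_\theta \mathbf{x}_i,\quad \phi(\mathbf{x}_j) = W_\phi \mathbf{x}_j,\quad g(\mathbf{x}_j) = W_g \mathbf{x}_j, \\
\mathbf{z}_i &= W_z \mathbf{y}_i + \mathbf{x}_i .
\end{aligned}
```

With this embedded-Gaussian choice of $f$, the normalization makes $f(\mathbf{x}_i, \mathbf{x}_j) / \mathcal{C}(\mathbf{x})$ a softmax over $j$, and the residual term $+\mathbf{x}_i$ lets the block be inserted without changing the feature dimension.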

References

- Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.; Chen, J.; Liu, X.; Pietikäinen, M. Deep Learning for Generic Object Detection: A Survey. arXiv 2018, arXiv:1809.02165.

- Peng, C.; Weiwei, Z.; Ziyao, X.; Yongxiang, T. Traffic Accident Detection Based on Deformable Frustum Proposal and Adaptive Space Segmentation. Comput. Model. Eng. Sci. 2022, 130, 97–109.

- Yunbo, R.; Hongyu, M.; Zeyu, Y.; Weibin, Z.; Faxin, W.; Jiansu, P.; Shaoning, Z. B-PesNet: Smoothly Propagating Semantics for Robust and Reliable Multi-Scale Object Detection for Secure Systems. Comput. Model. Eng. Sci. 2022, 132, 1039–1054.

- Johnson, J.; Krishna, R.; Stark, M.; Li, L.J.; Shamma, D.; Bernstein, M.; Fei-Fei, L. Image Retrieval using Scene Graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3668–3678.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Anderson, P.; He, X.; Buehler, C.; Teney, D.; Johnson, M.; Gould, S.; Zhang, L. Bottom-Up and Top-Down Attention for Image Captioning and Visual Question Answering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6077–6086.

- Fu, K.; Jin, J.; Cui, R.; Sha, F.; Zhang, C. Aligning where to see and what to tell: Image captioning with region-based attention and scene-specific contexts. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 2321–2334.

- Teney, D.; Anderson, P.; He, X.; van den Hengel, A. Tips and Tricks for Visual Question Answering: Learnings from the 2017 Challenge. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4223–4232.

- Jiang, Y.; Natarajan, V.; Chen, X.; Rohrbach, M.; Batra, D.; Parikh, D. Pythia v0.1: The Winning Entry to the VQA Challenge 2018. arXiv 2018, arXiv:1807.09956.

- Biten, A.F.; Litman, R.; Xie, Y.; Appalaraju, S.; Manmatha, R. LaTr: Layout-Aware Transformer for Scene-Text VQA. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 16548–16558.

- Cascante-Bonilla, P.; Wu, H.; Wang, L.; Feris, R.S.; Ordonez, V. SimVQA: Exploring Simulated Environments for Visual Question Answering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 5056–5066.

- Gupta, V.; Li, Z.; Kortylewski, A.; Zhang, C.; Li, Y.; Yuille, A. SwapMix: Diagnosing and Regularizing the Over-Reliance on Visual Context in Visual Question Answering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 5078–5088.

- Deng, C.; Wu, Q.; Wu, Q.; Hu, F.; Lyu, F.; Tan, M. Visual Grounding via Accumulated Attention. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7746–7755.

- Yu, L.; Lin, Z.; Shen, X.; Yang, J.; Lu, X.; Bansal, M.; Berg, T.L. MAttNet: Modular Attention Network for Referring Expression Comprehension. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1307–1315.

- Hu, R.; Rohrbach, M.; Andreas, J.; Darrell, T.; Saenko, K. Modeling Relationships in Referential Expressions with Compositional Modular Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1115–1124.

- Huang, S.; Chen, Y.; Jia, J.; Wang, L. Multi-View Transformer for 3D Visual Grounding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 15524–15533.

- Yang, L.; Xu, Y.; Yuan, C.; Liu, W.; Li, B.; Hu, W. Improving Visual Grounding with Visual-Linguistic Verification and Iterative Reasoning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 9499–9508.

- Dai, B.; Zhang, Y.; Lin, D. Detecting Visual Relationships with Deep Relational Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3076–3086.

- Lu, C.; Krishna, R.; Bernstein, M.S.; Li, F. Visual Relationship Detection with Language Priors. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 852–869.

- Xu, D.; Zhu, Y.; Choy, C.B.; Fei-Fei, L. Scene Graph Generation by Iterative Message Passing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5410–5419.

- Li, Y.; Ouyang, W.; Zhou, B.; Wang, K.; Wang, X. Scene Graph Generation from Objects, Phrases and Region Captions. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1261–1270.

- Zellers, R.; Yatskar, M.; Thomson, S.; Choi, Y. Neural Motifs: Scene Graph Parsing with Global Context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5831–5840.

- Teng, Y.; Wang, L. Structured Sparse R-CNN for Direct Scene Graph Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 19437–19446.

- Gao, K.; Chen, L.; Niu, Y.; Shao, J.; Xiao, J. Classification-Then-Grounding: Reformulating Video Scene Graphs as Temporal Bipartite Graphs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 19497–19506.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; Volume 28.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Region-based Convolutional Networks for Accurate Object Detection and Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 142–158.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Chen, S.; Zhao, Q. REX: Reasoning-aware and Grounded Explanation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 15586–15595.

- Li, G.; Wei, Y.; Tian, Y.; Xu, C.; Wen, J.R.; Hu, D. Learning to Answer Questions in Dynamic Audio-Visual Scenarios. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 21–24 June 2022; pp. 19108–19118.

- Cirik, V.; Berg-Kirkpatrick, T.; Morency, L.P. Using Syntax to Ground Referring Expressions in Natural Images. Proc. AAAI Conf. Artif. Intell. 2018, 32, 6756–6764.

- Lyu, F.; Feng, W.; Wang, S. vtGraphNet: Learning weakly-supervised scene graph for complex visual grounding. Neurocomputing 2020, 413, 51–60.

- Hinami, R.; Matsui, Y.; Satoh, S. Region-based Image Retrieval Revisited. In Proceedings of the ACM International Conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 528–536.

- Zhang, H.; Niu, Y.; Chang, S.F. Grounding Referring Expressions in Images by Variational Context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4158–4166.

- Yan, Y.; Ren, J.; Tschannerl, J.; Zhao, H.; Harrison, B.; Jack, F. Nondestructive phenolic compounds measurement and origin discrimination of peated barley malt using near-infrared hyperspectral imagery and machine learning. IEEE Trans. Instrum. Meas. 2021, 70, 5010715.

- Sun, H.; Ren, J.; Zhao, H.; Yuen, P.; Tschannerl, J. Novel gumbel-softmax trick enabled concrete autoencoder with entropy constraints for unsupervised hyperspectral band selection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5506413.

- Sun, G.; Zhang, X.; Jia, X.; Ren, J.; Zhang, A.; Yao, Y.; Zhao, H. Deep fusion of localized spectral features and multi-scale spatial features for effective classification of hyperspectral images. Int. J. Appl. Earth Obs. Geoinf. 2020, 91, 102157.

- Qiao, T.; Ren, J.; Wang, Z.; Zabalza, J.; Sun, M.; Zhao, H.; Li, S.; Benediktsson, J.A.; Dai, Q.; Marshall, S. Effective denoising and classification of hyperspectral images using curvelet transform and singular spectrum analysis. IEEE Trans. Geosci. Remote Sens. 2016, 55, 119–133.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; Volume 25.

- Lyu, F.; Wu, Q.; Hu, F.; Wu, Q.; Tan, M. Attend and Imagine: Multi-label Image Classification with Visual Attention and Recurrent Neural Networks. IEEE Trans. Multimed. 2019, 21, 1971–1981.

- Du, K.; Lyu, F.; Hu, F.; Li, L.; Feng, W.; Xu, F.; Fu, Q. AGCN: Augmented Graph Convolutional Network for Lifelong Multi-label Image Recognition. arXiv 2022, arXiv:2203.05534.

- Mukhiddinov, M.; Cho, J. Smart glass system using deep learning for the blind and visually impaired. Electronics 2021, 10, 2756.

- Zagoruyko, S.; Komodakis, N. Learning to Compare Image Patches via Convolutional Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4353–4361.

- Tseng, S.Y.R.; Chen, H.T.; Tai, S.H.; Liu, T.L. Non-local RoI for Cross-Object Perception. arXiv 2018, arXiv:1811.10002.

- Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-Local Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7794–7803.
