Your browser does not fully support modern features. Please upgrade for a smoother experience.

Please note this is a comparison between Version 1 by Dimitris Mourtzis and Version 2 by Camila Xu.

A dashboard application is proposed and developed to act as a Digital Twin that would indicate the Measured Value to be held accountable for any future failures. The dashboard is implemented in the Java programming language, while information is stored into a Database that is aggregated by an Online Analytical Processing (OLAP) server. This achieves easy Key Performance Indicators (KPIs) visualization through the dashboard.

- digital twin

- decision support system

- factor analysis

- KPI

1. Introduction

Todays’ manufacturing era focuses on monitoring the process on shop-floor by utilizing various sensorial systems that are based on data collection [1][2][3][1,2,3]. The automated systems directly collect an enormous amount of performance data from the shop-floor (Figure 1), and are stored into a repository, in a raw or accumulated form [4][5][4,5]. However, automated decision making in the era of Industry 4.0 is yet to be discovered.

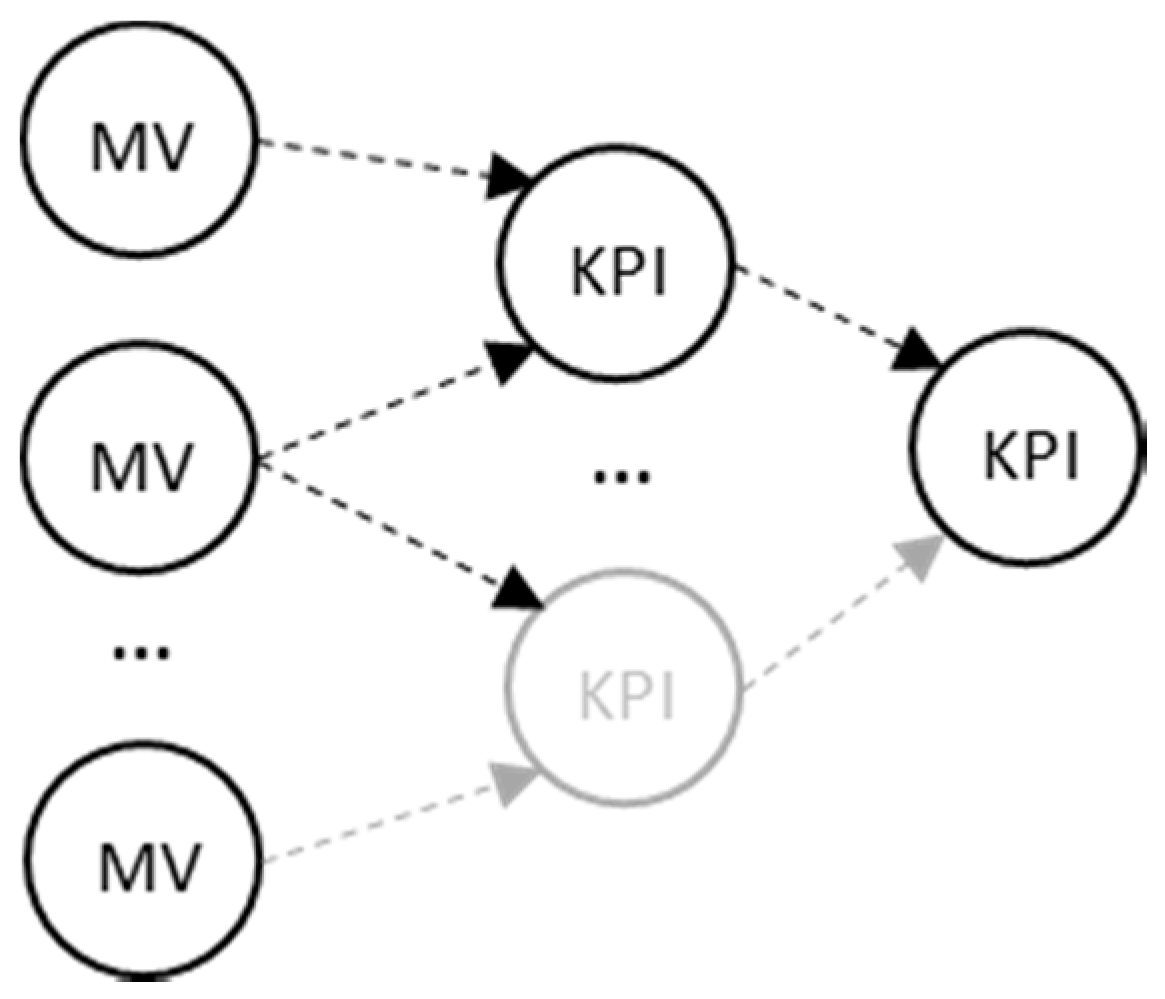

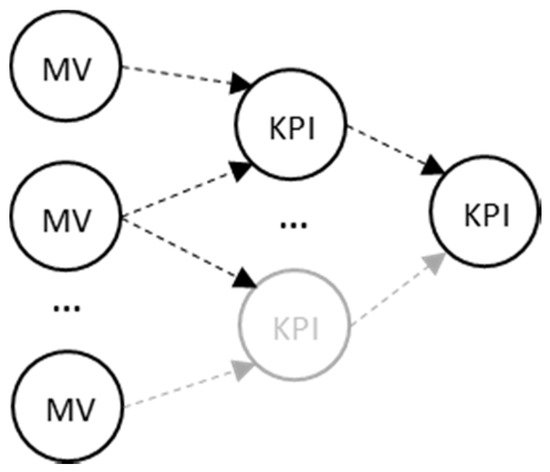

Figure 1. Key Performance Indications (KPIs) aggregation from Measured Values (MV).

2. KPIs and Digital Twin Platforms in Manufacturing

A lot of KPIs have been defined in literature (Manufacturing, Environmental, Design, and Customer) [6][7], and, despite the fact that methods have been developed for the precise acquisition of measured values [7][8], the interconnection of the values (Figure 1) can lead to mistaken decision making when one searches for the root cause of an alarm occurring in a manufacturing environment.

In addition, regarding manufacturing-oriented dashboard systems, they aid in the visualization of complex accumulations, trends, and directions of Key Performance Indications (KPIs) [8][9]. However, despite the situation awareness [9][10] that they offer, they are not able to support the right decision made at management level by elaborating automatically the performance metrics and achieving profitable production [10][11]. In the meantime, multiple criteria methods are widely used for decision support or the optimization of production, plant, or machine levels [1][11][1,12], based (mainly) on the four basic manufacturing attributes i.e.,: Cost, Time, Quality, and Flexibility levels [1]. This set of course can be extended. The “performance” that is collected from the shop-floor has been considered as measured values (MV) and their aggregation or accumulation into higher level Key Performance Indicators, as initially introduced by [12][13]. The goal of all these is the achievement of digital manufacturing [13][14].

On the other hand, the term digital twin can be considered as an “umbrella”, and it can be implemented with various technologies beneath, such as physics [14][15], machine learning [15][16], and data/control models [16][17]. A digital twin deals with giving some sort of feedback back to the system and it varies from process level [14][15] to system level [17][18], and it can even handle design aspects [18][19].

Regarding commercial dashboard solutions, they enable either manual or automated input of measured values for the production of KPIs [19][20][21][20,21,22]. However, their functionality is limited to the reporting features of the current KPI values, typically visualized with a Graphical User Interface (GUI), usually cluttered, with gauges, charts, or tabular percentages. Additionally, there is need to incorporate various techniques from machine learning or Artificial Intelligence in general [22][23] and signal processing techniques [23][24]. The typical functionality that is found in dashboards serves as a visual display of the most important information for one or more objectives, consolidated and arranged on a single screen, so as for the information to be monitored at a glance [8][9]. This is extended in order to analyze the aggregated KPIs and explore potential failures in the future by utilizing monitored production performances. Finding, however, the root cause of a problem occurring, utilizing the real time data in an efficient and fast way, is still being pursued.

3. Similar Methods and Constrains on Applicability

In general, categories in root-cause finding are hard to be defined, but if one borrows the terminology from Intrusion Detection Alarms [24][25], they can claim that there are three major categories: anomaly detection, correlation, and clustering. Specific examples of all three in decision making are given below, in the next paragraph. The alternative classifications of the Decision Support Systems that are given below permit this categorization; the first classification [25][26] regards: (i) Industry specific packages, (ii) Statistical or numerical algorithms, (iii) Workflow Applications, (iv) Enterprise systems, (v) Intelligence, (vi) Design, (vii) Choice, and (viii) Review. Using another criterion, the second classification [26][27] concerns: (a) communications driven, (b) data driven, (c) document driven, (d) knowledge driven, and (e) model driven.

There are several general purpose methods that are relevant and are mentioned here to achieve root-cause finding. Root Cause Analysis is a quite good set of techniques, however, it remains on the descriptive empirical strategy level [27][28][28,29]. Moreover, scorecards are quite descriptive and empirical, while the Analytical Hierarchical Process (AHP) requires criteria definition [29][30]. Finally, Factor Analysis, requires a specific kind of manipulation/modelling due to its stochastic character [30][31].

Regarding specific applications in manufacturing-related decision making, usually finding that the root-cause has to be addressed through identifying a defect in the production. Defects can refer to either product unwanted characteristics, or resources’ unwanted behaviour. Methods that have been previously used—regardless of the application—are Case Based Reasoning [31][32], pattern recognition [32][33][33,34], Analysis of Variance (ANOVA) [34][35], neural networks [35][36], Hypothesis testing [36][37], Time Series [37][38], and many others. However, none of these methods is quick enough to give the results from a deterministic point of view and without using previous measurements for training. Additionally, they are quite focused in application terms, which means that they cannot be used without re-calibration to a different set of KPIs. On the other hand, traditional Statistical Process Control (SPC) does not offer solutions without context, meaning the combination of the application and method [30][31].