Alzheimer’s disease (AD), the most common type of dementia, is a serious concern in modern healthcare. Around 5.5 million people aged 65 and above have AD, and it is the sixth leading cause of mortality in the US. AD is an irreversible, degenerative brain disorder characterized by a loss of cognitive function, and it has no proven cure. Deep learning techniques have gained popularity in recent years, particularly in natural language processing and computer vision. Since 2014, these techniques have received substantial attention in AD diagnosis research, and the number of papers published in this area is rising sharply. Deep learning techniques have been reported to be more accurate for AD diagnosis than conventional machine learning models.

- Alzheimer’s disease

- deep learning

- biomarkers

- positron emission tomography

- Magnetic Resonance Imaging

- mild cognitive impairment

1. Introduction

2. DL for AD Diagnosis

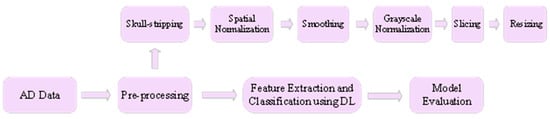

Figure 1 presents a framework for the classification of AD using DL. The AD dataset is first pre-processed using techniques such as skull stripping, spatial normalization, smoothing, grayscale normalization, slicing and resizing. Skull stripping separates brain tissue from non-brain tissue. Spatial normalization maps images from different subjects to a common template. Smoothing improves image quality by removing noise. Grayscale normalization maps the pixel intensity values to a new and more suitable range. Slicing divides the image into multiple logical images. Finally, resizing produces the desired image size. The pre-processed data are then fed as input to the DL model, which performs feature extraction and classification. Finally, the model is evaluated using performance metrics such as accuracy, F1 score, area under the curve (AUC), and mean squared error (MSE). The following subsections present a thorough literature review of DL techniques for AD diagnosis. Table 1 presents a summary of these research works.
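
Three of the pre-processing steps described above (grayscale normalization, slicing and resizing) can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the pipeline of any surveyed study: the function names, the nearest-neighbour resizing, the [0, 1] target range and the random stand-in volume are all hypothetical choices, and skull stripping, spatial normalization and smoothing are omitted because they normally rely on dedicated neuroimaging tools.

```python
import numpy as np


def normalize_intensity(volume, new_min=0.0, new_max=1.0):
    """Grayscale normalization: linearly map intensities to [new_min, new_max]."""
    volume = volume.astype(np.float64)
    lo, hi = volume.min(), volume.max()
    if hi == lo:  # constant image: avoid division by zero
        return np.full_like(volume, new_min)
    return (volume - lo) / (hi - lo) * (new_max - new_min) + new_min


def slice_volume(volume, axis=0):
    """Slicing: split a 3D volume into a list of 2D images along one axis."""
    return [np.take(volume, i, axis=axis) for i in range(volume.shape[axis])]


def resize_nearest(image, out_shape):
    """Resizing: nearest-neighbour resampling of a 2D image to out_shape."""
    rows = np.arange(out_shape[0]) * image.shape[0] // out_shape[0]
    cols = np.arange(out_shape[1]) * image.shape[1] // out_shape[1]
    return image[np.ix_(rows, cols)]


def preprocess(volume, out_shape=(64, 64)):
    """Normalize, slice and resize a volume into a stack of 2D inputs."""
    normalized = normalize_intensity(volume)
    slices = slice_volume(normalized, axis=0)
    return np.stack([resize_nearest(s, out_shape) for s in slices])


# Hypothetical stand-in for an already skull-stripped MRI volume.
rng = np.random.default_rng(0)
volume = rng.integers(0, 4096, size=(4, 32, 48))
stack = preprocess(volume)
print(stack.shape)
```

The resulting stack has one resized 2D image per slice, which is the form typically fed to a 2D model; a 3D model would instead consume the normalized volume directly.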

2.1. Feed-Forward DNN for AD Diagnosis

Feed-forward DNN has been utilized by multiple studies for AD diagnosis. Amoroso et al. [6][31] proposed a method based on Random Forest (RF) and DNN for revealing the onset of Alzheimer’s in subjects with MCI. RF was used for feature selection, and DNN performed the classification of input. The RF consisted of 500 trees and performed 100 rounds, and in each round, 20 crucial features were chosen. DNN consisted of 11 layers with 2056 input units and four output units. ReLU and tanh were used as the activation functions, and categorical cross-entropy was used as the loss function. Adam was used as the optimizer in the DNN. The authors compared the proposed approach with SVM and RF, and it was shown that the proposed method outperforms these techniques. Kim and Kim [7][32] proposed a DNN-based model for the diagnosis of Alzheimer’s in its early stage. The model takes the EEG of the subjects as input and classifies it into two groups, MCI and HC (healthy controls). The authors compared the proposed approach with a shallow neural network, and it was demonstrated that the proposed model outperforms a shallow neural network. Rouzannezhad et al. [8][33] formulated a technique based on DNN for binary (MCI, CN) and multiclass (EMCI, LMCI, AD, CN) classification of subjects in order to detect AD in the premature stage. The authors fed multimodal data (MRI, PET and typical neurophysiological parameters) as input to the DNN. The DNN consisted of three hidden layers, and Adam was used as the optimizer. Moreover, dropout was used to avoid the over-fitting problem. Experiments carried out in the research work demonstrated that the proposed technique performs better than the single modal scenarios in which only MRI or PET was fed as input to the DNN model. Moreover, the fusion of typical neurophysiological data with MRI and PET further enhanced the efficiency of the approach. Fruehwirt et al. 
[9][34] formulated a model based on Bayesian DNN that predicts the severity of AD disease using EEG data. The proposed model consisted of two layers with 100 units each. The authors demonstrated that the proposed model is a good fit for predicting disease severity in clinical neuroscience. Orimaye et al. [10][35] proposed a hybrid model consisting of DNN and deep language models (D2NNLM) to predict AD. Experiments conducted in the study demonstrated that the proposed model predicts the conversion of MCI to AD with high accuracy. Ning et al. [11][36] formulated a neural network-based model for the classification of subjects into AD and CN categories. Moreover, the model predicts the conversion of MCI subjects to AD. MRI and genetic data were fed as input to the model. The authors compared the proposed model with logistic regression (LR), and it was demonstrated that the proposed model outperforms the LR model. Park et al. [12][37] proposed a model based on DNN that takes as input the integrated gene expressions and DNA methylation data and predicts the progression of AD. The authors demonstrated that the integrated data results in better model accuracy as compared to single-modal data. Moreover, the proposed model outperformed existing machine learning models. The authors used the Bayesian method to choose optimal parameters for the model. It was shown that a DNN with eight hidden layers, 306 nodes in each layer, the learning rate of 0.02, and a dropout rate of 0.85 attains the best performance. Benyoussef et al. [13][38] proposed a hybrid model consisting of KNN (K-Nearest Neighbor) and DNN for the classification of subjects into No-Dementia (ND), MCI and AD based on MRI data. In the proposed model, KNN assisted DNN in discriminating subjects that are easily diagnosable from hard to diagnose subjects. The DNN consisted of two hidden layers with 100 nodes each. Experimental results demonstrated that the proposed model successfully classified the different AD stages. 
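
To make the architecture descriptions above more concrete, the following is a minimal NumPy sketch of the forward pass of a feed-forward network with two hidden layers of 100 units each and a three-class softmax output (for example ND, MCI and AD). It is an illustration only: the 20 input features, the ReLU activations, the He-style initialization and the random untrained weights are assumptions, not details reported by the cited studies.

```python
import numpy as np


def init_layer(rng, n_in, n_out):
    # He-style initialization, a common choice for ReLU layers (assumption).
    weights = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out))
    return weights, np.zeros(n_out)


def relu(x):
    return np.maximum(x, 0.0)


def softmax(logits):
    # Subtract the row-wise maximum for numerical stability.
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def forward(params, x):
    """Apply the hidden layers with ReLU, then a softmax output layer."""
    *hidden, (w_out, b_out) = params
    for w, b in hidden:
        x = relu(x @ w + b)
    return softmax(x @ w_out + b_out)


rng = np.random.default_rng(0)
n_features, n_hidden, n_classes = 20, 100, 3  # hypothetical sizes
params = [
    init_layer(rng, n_features, n_hidden),
    init_layer(rng, n_hidden, n_hidden),
    init_layer(rng, n_hidden, n_classes),
]

batch = rng.normal(size=(5, n_features))  # five synthetic subjects
probs = forward(params, batch)
print(probs.shape)
```

Each row of `probs` is a probability distribution over the three classes; in practice the weights would be trained, for instance with Adam and a categorical cross-entropy loss as several of the studies report.
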
Manzak et al. [14][39] formulated a model based on DNN for the detection of AD in the early stage. RF was used for feature extraction in the proposed model. Albright [15][40] used a DNN to predict the progression of AD in two cases: subjects who were initially CN and later developed AD, and subjects who had MCI and converted to AD. Suresha and Parthasarathy [16][41] proposed a model based on DNN with the rectified Adam optimizer for the detection of AD. The authors utilized the Histogram of Oriented Gradients (HOG) to extract crucial features from the MRI scans. Experiments showed that the proposed model outperformed the existing strategies by a good margin. Wang et al. [17][42] utilized gene expression data to study the molecular changes caused by AD. The study used a DNN model to identify the crucial molecular networks that are responsible for AD detection.

2.2. CNN for AD Diagnosis

The following studies utilized CNN for AD diagnosis. Suk and Shen [18][43] proposed a hybrid model based on Sparse Regression Networks and CNN for AD diagnosis. The model employed multiple Sparse Regression Networks for generating multiple target-level representations. These target-level representations were then integrated by CNN that optimally identified the output label. Billones et al. [19][44] altered the 16-layered VGGNet for classifying the subjects into three categories, AD, MCI and HC, based on structural MRI scans. Experiments conducted in the study demonstrated that the authors successfully performed classifications with good accuracy. The authors claimed that this was achieved without performing segmentation of the MR images. Sarraf and Tofighi [20][45] utilized LeNet architecture for the classification of the AD subjects from healthy ones based on functional MRI. The authors concluded that due to the shift-invariant and scale-invariant properties, CNN has got a massive scope in medical imaging. In another study, Sarraf and Tofighi [21][46] utilized LeNet architecture for classification of AD subjects from healthy ones based on structural MR images. The study attained an accuracy of 98.84%. In one more study, Sarraf and Tofighi [22][47] utilized LeNet and GoogleNet architectures for AD diagnosis based on Functional as well as structural MR images. Experiments conducted in the study demonstrated that these architectures performed better than state-of-the-art AD diagnosis techniques. Gunawardena et al. [23][48] formulated a method based on CNN for the diagnosis of AD in its early stage using structural MRI. The study compared the performance of the proposed method with SVM, and it was shown that the CNN model outperformed the SVM. The authors intend to incorporate two more MRI views (axial view and sagittal view) in addition to the coronal view used in this study in future. Basaia et al. 
[24][49] developed a model based on CNN for the diagnosis of AD using structural MR images. The study implemented data augmentation and transfer learning techniques for avoiding the over-fitting problem and improving the computational efficiency of the model. The authors claimed that the study overcomes limitations of the existing studies that usually focused on single-center datasets, which limits their usage. Wang et al. [25][50] designed an eight-layered CNN model for AD diagnosis. The authors compared three different activation functions, namely rectified linear unit (ReLU), sigmoid, and leaky ReLU and three different pooling functions, namely stochastic pooling, max pooling, and average pooling, for finding out the best model configuration. It was shown that the CNN model with leaky ReLU activation function and max pooling function gave the best results. Karasawa et al. [26][51] proposed a 3D-CNN based model for AD diagnosis using MR images. The architecture of proposed 3D-CNN is based on ResNet. It has 36 convolutional layers, a dropout layer, a pooling layer and a fully connected layer. Experiments conducted in the study demonstrated that the model outperformed several existing benchmarks. Tang et al. [27][52] proposed an AD diagnosis model based on 3D Fine-tuning Convolutional Neural Network (3D-FCNN) using MR images. The authors demonstrated that the proposed model outperformed several existing benchmarks in terms of accuracy and robustness. Moreover, the authors compared the 3D-FCNN model with 2D-CNN and it was shown that the proposed model performed better than 2D-CNN in binary as well as multi-class classification. Spasov et al. [28][53] proposed a multi-modal framework based on CNN for AD diagnosis using structural MRI, genetic measures and clinical assessment. The devised framework had much fewer parameters as compared to the other CNN models such as VGGNet, AlexNet, etc. 
This made the framework faster and less susceptible to problems such as over-fitting in case of scarce-data scenarios. Wang et al. [29][54] proposed a CNN based model for AD diagnosis using two crucial MRI modalities, namely fMRI and Diffusion Tensor Imaging (DTI). The model classified the subjects into three categories: AD, amnestic MCI and normal controls (NC). The authors proved that the proposed model performed better on multi-modal MRI than individual fMRI and DTI. Islam and Zhang [30][55] proposed a CNN-based model for AD diagnosis in the early stage using MR images. The authors trained the model using OASIS dataset, which is an imbalanced dataset. They used data augmentation to handle the imbalanced nature of the OASIS dataset. Experimental results demonstrated that the proposed model performed better than several state-of-the-art models. The authors plan to apply the proposed model to other AD datasets as well in future. Yue et al. [31][56] proposed a CNN-based model for AD diagnosis using structural MR images. The model classified the subjects into four categories: AD, EMCI, LMCI and NC. Experiments carried out in the research work demonstrated that the proposed model outperformed several benchmarks. Jian et al. [32][57] proposed a transfer learning-based approach for AD diagnosis using structural MRI. VGGNet16 trained on the ImageNet dataset was used as a feature extractor for AD classification. The proposed approach successfully classified the input into three different categories: AD, MCI and CN. Huang et al. [33][58] designed a multi-modal model based on 3D-VGG16 for the diagnosis of AD using MRI and FDG-PET modalities. The study demonstrated that the model does not require segmentation of the input. Moreover, the authors showed that the hippocampus of the brain is a crucial Region of Interest (ROI) for AD diagnosis. The authors intend to include other modalities as well in future. 
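
Several of the CNN studies above compare activation and pooling functions (ReLU versus leaky ReLU, max versus average pooling). The sketch below shows what these operations compute on a single 2D feature map. It is a minimal NumPy illustration, not code from any cited work: the negative slope of 0.01, the 2×2 non-overlapping windows and the example values are assumptions, and stochastic pooling is omitted.

```python
import numpy as np


def leaky_relu(x, negative_slope=0.01):
    """Leaky ReLU: pass positives through, scale negatives by a small slope."""
    return np.where(x > 0, x, negative_slope * x)


def pool2d(feature_map, size=2, mode="max"):
    """Non-overlapping 2D pooling over size x size windows."""
    h, w = feature_map.shape
    h, w = h - h % size, w - w % size  # drop rows/cols that do not fill a window
    windows = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    if mode == "max":
        return windows.max(axis=(1, 3))
    if mode == "average":
        return windows.mean(axis=(1, 3))
    raise ValueError(f"unknown pooling mode: {mode}")


# Hypothetical 4x4 feature map produced by a convolutional layer.
feature_map = np.array(
    [
        [1.0, -2.0, 3.0, 0.0],
        [-4.0, 5.0, -6.0, 7.0],
        [8.0, -9.0, 10.0, -11.0],
        [12.0, 13.0, -14.0, 15.0],
    ]
)

activated = leaky_relu(feature_map)
max_pooled = pool2d(activated, size=2, mode="max")
avg_pooled = pool2d(activated, size=2, mode="average")
print(max_pooled)
print(avg_pooled)
```

Max pooling keeps only the strongest response in each window, while average pooling also reflects the small negative values that leaky ReLU lets through, which is one reason the two can behave differently in such comparisons.
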
Goceri [34][59] proposed an approach based on 3D-CNN for AD diagnosis using MR Images. The proposed approach used Sobolev gradient as the optimizer, leaky ReLU as the activation function, and Max Pooling as the pooling function. The research work demonstrated that the combination of optimizer, activation function and pooling function implemented outperformed all the other combinations. Zhang et al. [35][60] utilized two independent CNNs for analyzing MR images and PET images separately. Then, correlation analysis of the outputs of the CNNs was performed to obtain the auxiliary diagnosis of AD. Finally, the auxiliary diagnosis result was combined with the clinical psychological diagnosis to obtain a comprehensive diagnostic output. The authors demonstrated that the proposed architecture is easy to implement and generates results closer to the clinical diagnosis. Basheera and Ram [36][61] proposed a model based on CNN for AD diagnosis using MR images. The MR images were divided into voxels first. Gaussian filter was used to enhance the quality of voxels and a skull stripping algorithm was used to filter out irrelevant portions from the voxels. Independent component analysis was applied to segment the brain into different regions. Finally, segmented gray matter was fed as input to the proposed model. Experimental results demonstrated that the proposed model outperformed several state-of-the-art models. Spasov et al. [37][62] proposed a parameter-efficient CNN model for predicting the MCI to AD conversion using structural MRI, demographic data, neuropsychological data, and APOe4 genetic data. Experiments carried out in the research work demonstrated that the proposed model performed better than several existing benchmarks. Ahmad and Pothuganti [38][63] performed a comparative analysis of SVM, Regional CNN (RCNN) and Fast Regional CNN for AD diagnosis. The study demonstrated that the Fast RCNN outperformed the other techniques. Lopez-Martin et al. 
[39][64] proposed a randomized 2D-CNN model for AD diagnosis in the early stage using MEG data. The research work demonstrated that the proposed model outperformed the classic machine learning techniques in AD diagnosis. Jiang et al. [40][65] proposed an eight-layered CNN model for AD diagnosis. The proposed model implemented batch normalization, data augmentation and drop-out regularization to achieve high accuracy. The authors compared the proposed model with several existing techniques, and it was demonstrated that the proposed model outperformed them. Nawaz et al. [41][66] proposed a 2D-CNN based model for AD diagnosis using MRI data. The proposed model classified the input images into three groups: AD, MCI and NC. The authors compared the proposed model with AlexNet and VGGNet architectures, and it was demonstrated that the proposed model outperformed these architectures. Bae et al. [42][67] modified the Inception-v4 model pre-trained on the ImageNet dataset for AD classification using MRI data. The study used datasets from subjects with two different ethnicities. The study demonstrated that the model has the potential to be used as a fast and accurate AD diagnostic tool. Jo et al. [43][68] proposed a model based on CNN for finding the correlation between tau deposition in the brain and the probability of having AD. The study also identified the regions in the brain that are crucial for AD classification. According to the study, these regions include hippocampus, para-hippocampus, thalamus and fusiform.

2.3. AE for AD Diagnosis

The following studies utilized AE for AD diagnosis. Lu et al. [44][82] proposed an SAE-based model for predicting the progression of AD. The proposed model was named Multi-scale and Multi-modal Deep Neural Network (MMDNN) as it integrated information from multiple areas of the brain scanned using MRI and FDG-PET. Experiments carried out in the research work demonstrated that analyzing both MRI and FDG-PET gives better results than the single-modal settings. Liu et al. [45][83] designed an SAE-based model for the diagnosis of AD in its early stage. The authors demonstrated that the designed model performed well even in the case of limited training data. Moreover, the authors analyzed the performance of the model against Single-Kernel SVM and Multi-Kernel SVM, and it was revealed that the proposed model outperformed these models. Lu et al. [46][84] proposed a DL model based on SAE for discriminating pre-symptomatic AD and non-progressive AD in subjects with MCI using metabolic features captured with FDG-PET. The parameters in the model were initialized using greedy layer-wise pre-training. A softmax layer was added to perform the classification. The proposed model was compared with the existing benchmark techniques that utilized FDG-PET for capturing the metabolic features, and it was shown that it performed better than those techniques.

2.4. RNN for AD Diagnosis

Lee et al. [47][85] proposed an RNN-based model that extracted temporal features from multi-modal data for forecasting the conversion of MCI subjects to AD patients. The data were fused across different modalities, including demographic information, MRI, CSF biomarkers and cognitive performance. The authors showed that the model outperformed the existing benchmarks. Furthermore, it was shown that the multi-modal model outperformed the individual single-modal models.

2.5. DBN for AD Diagnosis

Ortiz et al. [48][86] proposed two methods based on DBN for the early diagnosis of AD. These methods worked on fused functional and structural MRI scans. The first one, named DBN-voter, consisted of an ensemble of DBN classifiers and a voter. Four different voting schemes were analyzed in the study, namely majority voting, weighted voting, classifiers fusion using SVM, and classifiers fusion using DBN. The second method, FEDBN-SVM, used DBNs as feature extractors and carried out classification using SVM. It was demonstrated that FEDBN-SVM outperformed DBN-voter in addition to the existing benchmarks, and in the case of DBN-voter, DBNs with classifiers fusion using SVM performed better.

2.6. GAN for AD Diagnosis

Ma et al. [49][87] proposed a GAN-based model for the differential diagnosis of frontotemporal dementia and AD pathology. The model extracted multiple features from MR images for classification. Moreover, data augmentation was performed in order to avoid over-fitting caused by limited data. Experimental analysis carried out in the research work revealed that the model showed promising results in the differential diagnosis of frontotemporal dementia and AD pathology. The authors claimed that the proposed model could be used for the differential diagnosis of other neurodegenerative diseases as well.

2.7. Hybrid DL Models for AD Diagnosis

The following studies utilized hybrid DL models for AD diagnosis. Zhang et al. [50][88] proposed 3D Explainable Residual Self-Attention Convolutional Neural Network (3D ResAttNet) for diagnosis of AD using structural MR images. The proposed model is a CNN with a self-attention residual mechanism, and explainable gradient-based localization class activation mapping was employed that provided visual analysis of AD predictions. The self-attention mechanism modeled the long-term dependencies in the input and the residual mechanism dealt with the vanishing gradient problem. The authors compared the proposed model with 3D-VGGNet and 3D-ResNet, and it was shown that the proposed model performed better than these models. Payan and Montana [51][89] formulated a model based on 3D-CNN for the prediction of AD using MR images. The study employs Sparse AE for pre-training the convolutional filters. Experiments conducted in the research work revealed that the model outperformed the existing benchmarks. Hosseini et al. [52][90] proposed a hybrid model consisting of AE and 3D-CNN for early stage diagnosis of AD. The variations in anatomical shapes of brain images were captured by AE, and classification was carried out using 3D-CNN. The authors compared the proposed model with the existing benchmarks, and it was established that the proposed model outperformed those techniques. Moreover, the authors plan to apply the proposed model for the diagnosis of other conditions such as autism, heart failure and lung cancer. Vu et al. [53][91] proposed an AD detection system based on High-Level Layer Concatenation Auto-Encoder (HiLCAE) and 3D-VGG16. HiLCAE was used as a pre-trained network for initializing the weights of 3D-VGG16. The proposed system worked on the fused MR and PET images. Experiments carried out in the research work demonstrated that the proposed system detected AD with good accuracy. 
The authors intend to develop deeper networks for both HiLCAE and VGG16 in future so as to improve the accuracy further. Warnita et al. [54][92] proposed a gated CNN-based approach for AD diagnosis using speech transcripts. The proposed approach captured temporal features from speech data and performed classification based on the extracted features. The authors plan to apply the proposed approach to different languages in the future. Feng et al. [55][93] proposed a hybrid model consisting of Stacked Bidirectional RNN (SBi-RNN) and two 3D-CNNs for diagnosis of AD in its early stage. CNNs extracted preliminary features from MRI and PET images, while SBi-RNN abstracted discriminative features from the cascaded output of CNNs. The output from SBi RNN was fed to a softmax classifier that generated the model output. Experiments conducted in the study demonstrated that the proposed model outperformed state-of-the-art models. Li and Liu [56][94] proposed a framework consisting of Bidirectional Gated Recurrent Unit (BGRU) and DenseNets for hippocampus analysis-based AD diagnosis. The DenseNets were trained to capture the shape and intensity of MR images and BGRU abstracted high-level features between the right and left hippocampus. Finally, a fully connected layer performed classification based on the extracted features. Experiments conducted in the study revealed that the proposed framework generated promising results. Oh et al. [57][95] proposed a model based on end-to-end learning using CNN for carrying out the following classifications: AD versus NC, pMCI (probable MCI) versus NC, sMCI (stable MCI) versus NC, and pMCI versus sMCI. The authors utilized Convolutional Auto-Encoder for performing AD versus NC classification, and transfer learning was implemented to perform pMCI versus sMCI classification. Experiments carried out in the study showed that the proposed model worked better than several existing benchmarks. Chien et al. 
[58][96] developed a system for assessing the risk of AD based on speech transcripts. The system consisted of three components: a data collection component that fetched data from the subject, a feature sequence generator that converted the speech transcripts into the features, and an AD assessment engine that determined whether the person had AD or not. The feature sequence generator was built using a deep convolutional RNN, and the AD assessment engine was realized using a bidirectional RNN with the gated recurrent unit. Experimental analysis carried out in the research work revealed that the system gives promising results. Kruthika et al. [59][97] proposed a hybrid model consisting of 3D Sparse AE, CNN and capsule network for detection of AD in its early stage. The authors revealed that the hybrid model worked better than the 3D-CNN. Basher et al. [60][98] proposed an amalgam of Hough CNN, Discrete Volume Estimation-CNN (DVE-CNN) and DNN for AD diagnosis using structural MR images. Hough CNN has been used to localize right and left hippocampi. DVE-CNN was utilized to mine volumetric features from the pre-processed 2D patches. Finally, DNN classified the input based on the features extracted using DVE-CNN. The study demonstrated that the proposed approach outperformed the existing benchmarks by a good margin. Roshanzamir et al. [61][99] utilized a bidirectional encoder with logistic regression for early prediction of AD using speech transcripts. The authors implemented the concept of data augmentation for dealing with the limited dataset problem. Experiments conducted in the study demonstrated that the bidirectional encoder with logistic regression outperformed the existing benchmarks. Zhang et al. [62][100] proposed a densely connected CNN with attention mechanism for AD diagnosis using structural MR images. 
The densely connected CNN extracted multiple features from the input data, and the attention mechanism fused the features from different layers to transform them into complex features based on which final classification was performed. It was established that the model outperformed several existing benchmark models.

| Work | Year | Biomarker | DL Method | Dataset | Performance |

|---|---|---|---|---|---|

| [45][83] | 2014 | MRI and PET | SAE | ADNI-311 subjects (AD-65, cMCI-67, ncMCI-102, NC-77) | Accuracy (NC/AD): 87.76% Accuracy (NC/MCI): 76.92% |

| [50][88] | 2015 | MRI | Residual Self Attention 3D Convolutional Neural Network | ADNI-835 subjects (AD-200, MCI-404, NC-231) | Accuracy (NC/AD): 91.3% ± 0.012 Accuracy (sMCI/pMCI): 82.1% ± 0.092 |

| [51][89] | 2015 | MRI | CNN + Sparse AE | ADNI-2265 subjects (AD-755, MCI-755, HC-755) | Accuracy (HC/MCI/AD): 89.47% Accuracy (HC/AD): 95.39% Accuracy (AD/MCI): 86.84% Accuracy (HC/MCI): 92.11% |

| [18][43] | 2016 | MRI | CNN | ADNI-805 subjects (AD-186, MCI-393, NC-226) | Accuracy (NC/AD): 91.02% ± 4.29 Accuracy (NC/MCI): 73.02% ± 6.44 Accuracy (sMCI/pMCI): 74.82% ± 6.80 |

| [19][44] | 2016 | MRI | CNN | ADNI-900 subjects (AD-300, MCI-300, HC-300) | Accuracy (HC/MCI/AD): 91.85% |

| [20][45] | 2016 | fMRI | CNN | ADNI-43 subjects (AD-28, NC-15) | Accuracy (NC/AD): 96.85% |

| [48][86] | 2016 | MRI and fMRI | DBN | ADNI-275 subjects (AD-70, MCI-111, LMCI-26, NC-68) | Accuracy (NC/AD): 90% Accuracy (MCI/AD): 84% Accuracy (NC/MCI): 83% |

| [52][90] | 2016 | MRI | CNN + AE | ADNI-210 subjects (AD-70, MCI-70, NC-70) | Accuracy (NC/MCI/AD): 89.1% |

| [21][46] | 2016 | MRI | CNN | ADNI-302 subjects (AD-211, HC-91) | Accuracy (HC/AD): 98.84% |

| [22][47] | 2016 | MRI and fMRI | CNN | ADNI (fMRI-144 subjects: AD-52, CN-92), ADNI (MRI-302 subjects: AD-211, CN-91) | Accuracy (fMRI (CN/AD)): 99.9% Accuracy (MRI (CN/AD)): 98.84% |

| [6][31] | 2017 | MRI | DNN | ADNI-240 subjects (AD-60, cMCI-60, MCI-60,HC-60) | Accuracy (HC/MCI/cMCI/AD): 53.7 ± 1.9% |

| [23][48] | 2017 | MRI | CNN | ADNI-504 subjects (AD-101, MCI-234, CN-169) | Accuracy (CN/MCI/AD): 96% |

| [46][84] | 2018 | MRI and FDG-PET | SAE | ADNI-1051 subjects (NC-304, sMCI-409, pMCI-112, AD-226) | Accuracy (NC/AD): 93.58%, Accuracy (sMCI/pMCI): 81.55% |

| [7][32] | 2018 | EEG | DNN | Data collected from Chosun University Hospital and Gwangju Optimal Dementia Center located in South Korea-20 subjects (MCI-10, HC-10) | Accuracy (NC/MCI): 59.3% |

| [44][82] | 2018 | MRI and FDG-PET images | SAE | ADNI-1242 subjects (sNC-360, sMCI-409, pNC-18, pMCI-217, sAD-238) | Accuracy (sMCI/pMCI): 82.93% |

| [24][49] | 2018 | MRI | CNN | ADNI-1409 subjects (AD-294, MCI-763, HC-352), Milan dataset-229 subjects (AD-124, MCI-50, HC-55) | Accuracy (HC/AD): 98.2% Accuracy (HC/cMCI): 87.7% Accuracy (HC/sMCI): 76.4% Accuracy (cMCI/AD): 75.8% Accuracy (sMCI/AD): 86.3% Accuracy (cMCI/sMCI): 74.9% |

| [8][33] | 2018 | MRI and AV-45 PET data | DNN | ADNI-896 subjects (CN-248, AD-149, EMCI-296, LMCI-193) | Accuracy (CN/EMCI): 84% Accuracy (CN/LMCI): 84.1% Accuracy (CN/AD): 96.8% Accuracy (EMCI/LMCI): 69.5% Accuracy (EMCI/AD): 90.3% Accuracy (LMCI/AD): 80.2% |

| [9][34] | 2018 | EEG | DNN | Data collected from Medical Universities of Graz, Innsbruck and Vienna, as well as Linz General Hospital—188 subjects (Probable AD-133, Possible AD-55) | Mean Squared Error (Probable AD/Possible AD): 12.17 |

| [53][91] | 2018 | MRI and FDG-PET | AE + CNN | ADNI-615 subjects (AD-193, MCI-215, NC-207) | Accuracy (MCI/AD): 93% Accuracy (NC/MCI): 95% Accuracy (NC/AD): 98.8% Accuracy (NC/MCI/AD): 91.13% |

| [25][50] | 2018 | MRI | CNN | OASIS dataset-126 subjects (AD-28, HC-98) and data from local hospitals-70 subjects (AD-70) | Accuracy (HC/AD): 97.65% |

| [26][51] | 2018 | MRI | CNN | ADNI-1728 subjects (AD-346, MCI-450, LMCI-358, NC-574) | Accuracy (NC/AD): 94% Accuracy (NC/MCI): 90% Accuracy (NC/MCI/AD): 87% |

| [27][52] | 2018 | MRI | CNN | ADNI-391 subjects (AD-150, MCI-129, NC-112) | Accuracy (NC/AD): 96.81% Accuracy (MCI/AD): 88.43% Accuracy (NC/MCI): 92.62% Accuracy (NC/MCI/AD): 91.32% |

| [10][35] | 2018 | Speech transcripts | DNN | DementiaBank dataset | AUC (MCI/AD): 0.815 |

| [28][53] | 2018 | MRI, clinical assessment and genetic (APOe4) measures | CNN | ADNI-800 subjects (AD-200, MCI-400, NC-200) | Accuracy (NC/MCI/AD): 99% |

| [29][54] | 2018 | fMRI and Diffusion Tensor Imaging (DTI) | CNN | ADNI-105 subjects (AD-35, aMCI-30, NC-40) | Accuracy (NC/aMCI/AD): 92.06% |

| [54][92] | 2018 | Speech transcripts | Gated CNN | DementiaBank dataset-267 subjects (AD-169, HC-98) | Accuracy (HC/AD): 73.6% |

| [11][36] | 2018 | MRI and single nucleotide polymorphism (SNP) data | DNN | ADNI-721 subjects (AD-138, MCI-358, CN-225) | AUC (CN/MCI/AD): 0.992 |

| [30][55] | 2018 | MRI | CNN | OASIS dataset-416 subjects | Accuracy (Non Demented/very Mild/Mild/Moderate): 93% |

| [55][93] | 2018 | MRI and PET | CNN + RNN | ADNI-397 subjects (AD-93, pMCI-76, sMCI-128, CN-100) | Accuracy (NC/AD): 94.29% Accuracy (NC/pMCI): 84.66% Accuracy (NC/sMCI): 64.47% |

| [31][56] | 2018 | MRI | CNN | ADNI-1663 subjects (AD-336, MCI-542, CN-785) | Accuracy (NC/LMCI): 94.5% Accuracy (NC/AD): 96.9% Accuracy (LMCI/AD): 97.2% Accuracy (EMCI/AD): 97.81% Accuracy (EMCI/LMCI): 94.8% |

| [12][37] | 2019 | gene expression and DNA methylation profiles | DNN | GSE33000 and GSE44770 (gene expression), prefrontal cortex GSE80970 (DNA methylation) | Accuracy (NC/AD): 82.3% |

| [13][38] | 2019 | MRI | DNN | OASIS-416 subjects | Accuracy (NC/AD): 86.66% |

| [32][57] | 2019 | MRI | CNN | ADNI-150 subjects (AD-50, CN-50, MCI-50) | Accuracy (CN/AD): 99.14% Accuracy (AD/MCI): 99.3% Accuracy (CN/MCI): 99.2% |

| [14][39] | 2019 | MRI | DNN | ADNI-291 subjects (AD-97, CN-194) | Accuracy (CN/AD): 67% |

| [56][94] | 2019 | MRI | CNN + RNN | ADNI-807 subjects (AD-194, MCI-397, NC-216) | Accuracy (NC/AD): 91.0% Accuracy (NC/MCI): 75.8% Accuracy (sMCI/pMCI): 74.6% |

| [57][95] | 2019 | MRI | AE + CNN | ADNI-694 subjects (AD-198, NC-230, sMCI-101, pMCI-166) | Accuracy (AD/NC): 86.60% ± 3.66% Accuracy (pMCI/NC): 77.37% ± 3.55% Accuracy (sMCI/NC): 63.04% ± 4.16% Accuracy (pMCI/AD): 60.97% ± 5.33% Accuracy (sMCI/AD): 75.06% ± 3.86% |

| [33][58] | 2019 | MRI and FDG-PET | CNN | ADNI-2145 subjects (AD-647, sMCI-441, pMCI-326, HC-731) | Accuracy (NC/AD): 90.10% Accuracy (NC/pMCI): 87.46% Accuracy (sMCI/pMCI): 76.90% |

| [34][59] | 2019 | MRI | CNN | ADNI-315 subjects (AD-185, HC-130) | Accuracy (HC/AD): 98.06% |

| [47][85] | 2019 | Demographic information, neuro-imaging phenotypes measured by MRI, cognitive performance, and CSF measurements | RNN | ADNI-1618 subjects (AD-338, MCI-865, CN-415) | Accuracy (CN/MCI/AD): 81% |

| [58][96] | 2019 | Speech transcripts | CNN + RNN | DementiaBank dataset | AUC (NC/AD): 0.838 |

| [59][97] | 2019 | MRI | CNN + AE | ADNI-1941 subjects (AD-345, MCI-991, NC-605) | Accuracy (MCI/AD): 94.6% Accuracy (NC/AD): 92.98% Accuracy (NC/MCI): 94.04% |

| [35][60] | 2019 | MRI and PET | CNN | ADNI-392 subjects (AD-91, MCI-200, CN-101) | Accuracy (NC/AD): 98.47% Accuracy (NC/MCI): 85.74% Accuracy (AD/MCI): 88.20% |

| [36][61] | 2019 | MRI | CNN | ADNI-1820 images (AD-635, MCI-548, CN-637) | Accuracy (CN/MCI/AD): 86.9% Accuracy (CN/AD): 100% Accuracy (MCI/AD): 96.2% Accuracy (CN/MCI): 98% |

| [15][40] | 2019 | MRI | DNN | ADNI-1737 subjects | AUC (NC/MCI/AD): 0.866 |

| [37][62] | 2019 | MRI and clinical features | CNN | ADNI-785 subjects (AD-192, MCI-409, HC-184) | Accuracy (MCI/AD): 86% |

| [49][87] | 2020 | MRI | GAN | ADNI-1114 subjects and Frontotemporal Lobar Degeneration Neuroimaging Initiative (NIFD)-840 subjects | Accuracy (NC/AD): 88.28% |

| [38][63] | 2020 | MRI | CNN | ADNI | Test time (NC/AD): 0.2 s |

| [39][64] | 2020 | MEG | CNN | Data collected from Centre for Biomedical Technology, Spain-132 subjects (MCI-78, HC-54) | F1 score (HC/MCI): 0.92 |

| [40][65] | 2020 | MRI | CNN | OASIS dataset-126 subjects (AD-28, HC-98) and data from local hospitals-70 subjects (AD-70) | Accuracy (HC/AD): 97.76% ± 0.41 |

| [41][66] | 2020 | MRI | CNN | ADNI-159 subjects (AD-45, MCI-62, NC-52) | Accuracy (NC/MCI/AD): 99.89% |

| [42][67] | 2020 | MRI | CNN | ADNI-390 subjects (AD-195, CN-195), SNUBH-390 subjects (AD-195, CN-195) | Accuracy (ADNI (CN/AD)): 89% Accuracy (SNUBH (CN/AD)): 88% |

| [63][69] | 2020 | fMRI and PET | CNN | fMRI ADNI dataset-54 subjects (AD-27, HC-27); PET ADNI dataset-2675 images (AD-900, HC-1775) | Accuracy (fMRI dataset (HC/AD)): 99.95% Accuracy (PET ADNI (HC/AD)): 73.46% |

| [64][70] | 2020 | MRI | CNN | Kaggle’s MRI dataset | Accuracy (MCI/AD): 96% |

| [16][41] | 2020 | MRI | DNN | ADNI-819 subjects (AD-192, MCI-398, CN-229) and NIMHANS-99 (AD-39, CN-60) | Accuracy (ADNI (CN/MCI/AD)): 99.50% Accuracy (NIMHANS (CN/AD)): 98.40% |

| [65][71] | 2020 | MRI | CNN | OASIS-382 images (No Dementia-167, Very Mild Dementia-87, Mild Dementia-105, Moderate AD-23) | Accuracy (No Dementia/Very Mild Dementia/Mild Dementia/Moderate AD): 99.05% |

| [66][72] | 2020 | MRI | CNN | ADNI-465 subjects (AD-132, MCI-181, CN-152) | Accuracy (CN/MCI/AD): 97.77% |

| [67][73] | 2020 | MRI | CNN | ADNI-132 subjects (AD-25, MCI-61, CN-46) | Accuracy (CN/MCI/AD): 84% |

| [68][74] | 2020 | MRI | CNN | ADNI-GO/2-663 subjects; ADNI-3-575 subjects; AIBL-606 subjects; DELCODE-474 subjects | Accuracy (ADNI-GO/2): 86.25% Accuracy (ADNI-3): 74.375% Accuracy (AIBL): 79.225% Accuracy (DELCODE): 78% |

| [69][75] | 2020 | MRI | CNN | ADNI-469 subjects (AD-153, MCI-157, CN-159) | Accuracy (NC/MCI/AD): 92.11% ± 2.31 Accuracy (NC/AD): 99.10% ± 1.13 Accuracy (NC/MCI): 98.90% ± 2.78 Accuracy (MCI/AD): 89.40% ± 6.90 |

| [43][68] | 2020 | Tau-PET | CNN | ADNI-300 subjects (AD-66, EMCI-97, LMCI-71, CN-66) | Accuracy (CN/AD): 90.8% |

| [60][98] | 2021 | MRI | CNN + DNN | Gwangju Alzheimer’s and Related Dementia (GARD) | Accuracy (NC/AD): 94.02% |

| [61][99] | 2021 | Speech transcripts | Bidirectional encoder with logistic regression | DementiaBank dataset-269 subjects (AD-170, HC-99) | Accuracy (HC/AD): 88.08% |

| [62][100] | 2021 | MRI | CNN with attention mechanism | ADNI-968 subjects (AD-280, cMCI-162, ncMCI-251, NC-275) | Accuracy (NC/AD): 97.35% Accuracy (NC/MCI): 87.82% Accuracy (MCI/AD): 78.79% |

| [70][76] | 2021 | MRI and PET | CNN | ADNI-5556 images (AD-718, EMCI-1222, MCI-1274, LMCI-636, SMC-186, CN-1520) | Accuracy (CN/EMCI/MCI/LMCI/AD): 86% |

| [71][77] | 2021 | fMRI | CNN | ADNI-675 subjects | Accuracy (Low AD): 98.1% Accuracy (Mild AD): 95.2% Accuracy (Moderate AD): 89% Accuracy (Severe AD): 87.5% |

| [72][78] | 2021 | MRI | CNN | ADNI-450 subjects (AD-150, MCI-150, NC-150) | Accuracy (NC/AD): 90.15% ± 1.1 Accuracy (MCI/AD): 87.30% ± 1.4 Accuracy (NC/MCI): 83.90% ± 2.5 |

| [17][42] | 2021 | Genetic Measures | DNN | MCSA-266 subjects | p-value < 1 × 10⁻³ |

| [73][79] | 2021 | FDG-PET | CNN | MCSA | Mean Absolute Error: 2.8942 |

| [74][80] | 2021 | MRI | CNN | HABS | Error rate < 1% |

| [75][81] | 2021 | Tau-PET and MRI | CNN | Tau-PET and MRI images from two human brains | AUC: 0.88 |