Please note this is a comparison between Version 1 by Ahmed Bassam Ayoub and Version 2 by Conner Chen.

Optical Diffraction Tomography (ODT) is an emerging tool for label-free imaging of semi-transparent samples in three-dimensional space. Being semi-transparent, such objects do not strongly alter the amplitude of the illuminating field.

- optical diffraction tomography

- ODT

1. Introduction

Optical Diffraction Tomography (ODT) is an emerging tool for label-free imaging of semi-transparent samples in three-dimensional space [1][2][3][4][5][6][7][8][9][10]. Being semi-transparent, such objects do not strongly alter the amplitude of the illuminating field. However, the total phase delay at a particular wavelength depends on both the refractive index and the thickness of the sample. Because of this ambiguity, the two parameters cannot be separated from 2D projections alone. Hence, to reconstruct the 3D refractive index (RI) map of a semi-transparent sample, holographic detection is needed to extract the phase of the field after it passes through the sample. Then, by acquiring holograms at different illumination angles, the 3D RI map can be reconstructed using inverse scattering models [10].
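As a rough illustration of this ambiguity, consider a homogeneous sample of thickness $d$ and refractive index $n_s$ immersed in a medium of index $n_0$. Under the projection (thin-sample) approximation, which is an assumption made here for illustration and not a model taken from the text, the phase delay at wavelength $\lambda$ is

$$\Delta\varphi=\frac{2\pi}{\lambda}\,(n_s-n_0)\,d$$

With the illustrative values $\lambda = 0.5$ μm, a sample with $n_s-n_0=0.02$ and $d=10$ μm and a sample with $n_s-n_0=0.04$ and $d=5$ μm both give $\Delta\varphi=0.8\pi\approx 2.51$ rad, so a single 2D phase image cannot tell them apart.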

Holographic detection was introduced by Gabor, who used “in-line” holography. He showed that the intensity image retrieved from in-line holography is composed of an “in-focus” image in addition to an “out-of-focus” image (i.e., the “Twin” image) [11]. Because of this “Twin” image problem, in-line holography usually has difficulty retrieving the phase of the object. Upatnieks and Leith proposed “off-axis” holography [12]. In this configuration, a small tilt is introduced between the reference arm and the sample arm, which shifts the “out-of-focus” image relative to the “in-focus” image in the Fourier domain. Since then, “off-axis” interferometry has been widely used in ODT, with the phase extracted first and the inverse models applied afterwards [13][14].
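The separation produced by the tilt can be seen from the standard expansion of a hologram. The notation here is assumed for illustration: $O$ is the object (sample) field and $R=e^{j\vec{k}_r\cdot\vec{r}}$ is a tilted plane-wave reference.

$$I=|R+O|^2=|R|^2+|O|^2+R^{*}O+RO^{*}$$

Since $R^{*}O=O\,e^{-j\vec{k}_r\cdot\vec{r}}$ and $RO^{*}=O^{*}e^{+j\vec{k}_r\cdot\vec{r}}$, the two cross terms are displaced to $-\vec{k}_r$ and $+\vec{k}_r$ in the Fourier domain, while $|R|^2+|O|^2$ stays at the origin. For $\vec{k}_r=0$ (in-line holography) all terms overlap, which is the “Twin” image problem described above.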

2. Theory

The intensity pattern captured by the detector of the ODT system is denoted by $I_t(x,y)$, with $x$ and $y$ being the horizontal and vertical coordinates of the 2D intensity pattern. The detected intensity is given by:

Figure 1

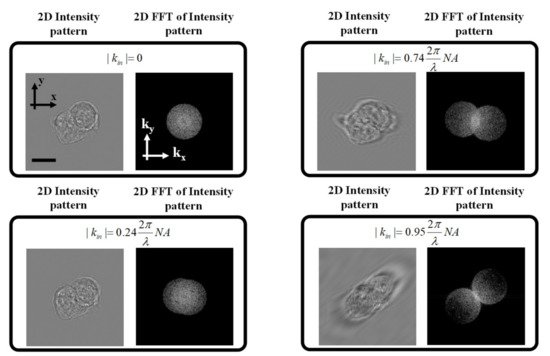

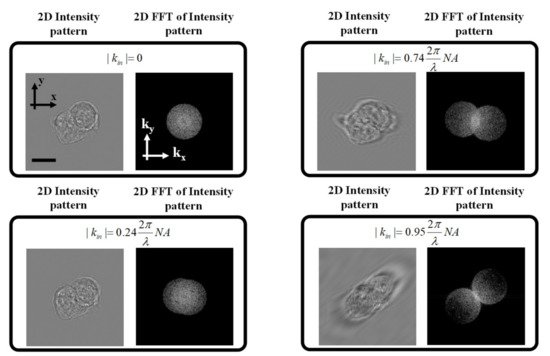

shows the effect of changing the illumination angle on the 2D intensity image of a simulated digital phantom where different illumination angles were assumed. Note the 2D Fourier transform of the corresponding intensity images includes two circles in the Fourier domain. Each circle is the result of the spectral filtering applied by the limited numerical aperture of the objective lens. From Equation (3), we see that we have three terms: a Zero-order term (the first term on the right-hand side) and the two cross terms. The shift of the two circles from the Zero-order term depends on the illumination angle of the incident plane wave.
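The dependence of the cross-term shift on the illumination angle can be checked numerically. The following is a minimal sketch, not the simulation used for Figure 1: the function name `cross_term_peak`, the grid parameters, the wavelength, and the Gaussian stand-in for the scattered field are all hypothetical choices.

```python
import numpy as np

def cross_term_peak(theta_deg, n=256, dx=0.1, wavelength=0.5, n0=1.0):
    """Locate the cross-term peak in the 2D spectrum of a simulated intensity.

    Returns (|k_x| of the strongest non-DC peak, k_x of the incident wave),
    both in rad/um. All parameter values are illustrative assumptions.
    """
    x = (np.arange(n) - n // 2) * dx
    X, Y = np.meshgrid(x, x)

    # Tilted incident plane wave with unit amplitude.
    k = 2 * np.pi * n0 / wavelength
    kx_in = k * np.sin(np.deg2rad(theta_deg))
    u_i = np.exp(1j * kx_in * X)

    # Weak, smooth stand-in for the scattered field (hypothetical).
    u_s = 0.1 * np.exp(-(X**2 + Y**2) / (2 * 2.0**2))

    # Detected intensity: zero-order term plus two cross terms.
    intensity = np.abs(u_i + u_s) ** 2

    # Remove the mean so the DC bin does not dominate the peak search.
    spectrum = np.fft.fftshift(np.fft.fft2(intensity - intensity.mean()))
    kx_axis = 2 * np.pi * np.fft.fftshift(np.fft.fftfreq(n, d=dx))

    _, ix = np.unravel_index(np.argmax(np.abs(spectrum)), spectrum.shape)
    return abs(kx_axis[ix]), kx_in
```

Calling `cross_term_peak(10.0)` and `cross_term_peak(30.0)` should return a peak position that tracks the transverse wavevector of the incident wave to within one frequency bin, so a larger illumination angle pushes the cross terms further from the zero-order term.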

Figure 1. Intensity images and their 2D Fourier transforms for on-axis and off-axis configurations with different illumination angles. As the incident illumination vector $\vec{k}_{in}$ approaches the edge of the numerical aperture of the objective lens, the two cross terms become decoupled and the principal term can be retrieved. Scale bar = 8 μm.

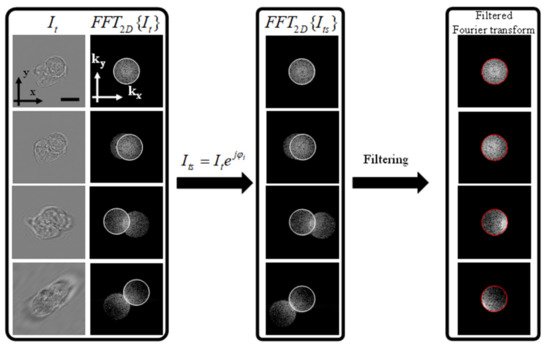

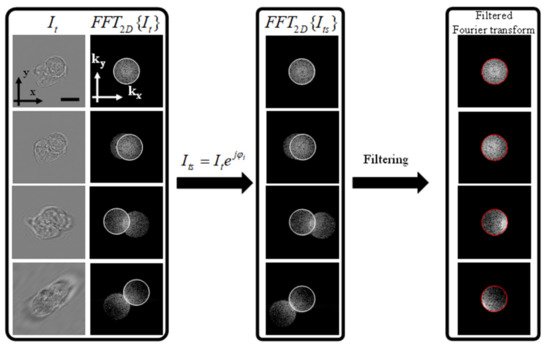

Figure 1 shows that for an accurate extraction of the scattered field, the sample should be illuminated at the maximum angle permitted by the NA of the objective lens [16]. The scattered field can be extracted by multiplying the intensity measurement with the incident plane wave, which shifts the spectrum in the Fourier domain. This is followed by spatial filtering of the “Principal” image with a circular filter whose size is determined by the NA of the objective lens, as shown in Figure 2.

Figure 2. Processing of the 2D intensity images before mapping into the 3D Fourier space. The left-most panel shows the intensity measurements and the corresponding Fourier transform. The middle panel shows the effect of multiplying by the incident plane wave, which centers the scattered field highlighted by the white circles. The final step is the filtering of the scattered field with a circular filter whose size matches the numerical aperture in Fourier space, indicated by the red circle. Scale bar = 8 μm.

From Figure 2, it can be seen that the complex scattered field can be extracted from the intensity image only when the illumination angle is at the edge of the imaging NA. This condition can be met experimentally by illuminating along a circular cone whose center is perfectly aligned with the imaging objective lens, as in the experimental setup described in the following section.

By multiplying the intensity image with the incident plane-wave to shift the spectrum in the Fourier domain, the scattered field spectrum becomes centered around the origin. To filter out the complex scattered field in the Fourier domain, we apply a low pass filter given by the following equation:

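$$\tilde{U}_s(k_x,k_y)=\mathrm{LPF}\left\{\mathrm{FFT}_{2D}\left\{I_t\,e^{j\varphi_i}\right\}\right\}$$

$$\tilde{F}(\vec{\kappa})=\frac{k_z}{2\pi j}\,\tilde{U}_s(k_x,k_y)$$

$$\vec{\kappa}=\vec{k}-\vec{k}_i=\begin{bmatrix}k_x-k_x^{in}\\ k_y-k_y^{in}\\ \sqrt{(k_0 n_0)^2-k_x^2-k_y^2}-k_z^{in}\end{bmatrix}$$

$$n(r)=\sqrt{\frac{4\pi}{k_0^2}\,F(r)+n_0^2}$$

$$I_n=\frac{I_t-I_{Bkg}}{I_{Bkg}}$$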
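The low-pass-filter relation for the scattered field, the conversion from scattering potential to refractive index, and the background normalization can be sketched in NumPy as follows. This is an illustrative sketch rather than the authors' implementation: the function names, the square pixel size `dx`, the hard circular mask of radius `k_cutoff`, and the choice to return the field in the spatial domain are assumptions.

```python
import numpy as np

def normalize_intensity(i_t, i_bkg):
    """Background normalization: I_n = (I_t - I_Bkg) / I_Bkg."""
    return (i_t - i_bkg) / i_bkg

def extract_scattered_field(intensity, kx_in, ky_in, dx, k_cutoff):
    """Sketch of U_s = LPF{FFT2D{I * exp(j*phi_i)}}.

    kx_in, ky_in: transverse wavevector of the incident plane wave (rad/unit).
    dx: pixel size (assumed square pixels); k_cutoff: filter radius, e.g. k0*NA.
    Returns (filtered field in space, filtered spectrum).
    """
    n_y, n_x = intensity.shape
    x = (np.arange(n_x) - n_x // 2) * dx
    y = (np.arange(n_y) - n_y // 2) * dx
    X, Y = np.meshgrid(x, y)

    # Multiply by the incident plane wave to re-center the scattered-field spectrum.
    phi_i = kx_in * X + ky_in * Y
    spectrum = np.fft.fft2(intensity * np.exp(1j * phi_i))

    # Circular low-pass filter centered on the origin of the Fourier plane.
    kx = 2 * np.pi * np.fft.fftfreq(n_x, d=dx)
    ky = 2 * np.pi * np.fft.fftfreq(n_y, d=dx)
    KX, KY = np.meshgrid(kx, ky)
    mask = KX**2 + KY**2 <= k_cutoff**2

    filtered = spectrum * mask
    return np.fft.ifft2(filtered), filtered

def potential_to_ri(f, k0, n0):
    """n(r) = sqrt(4*pi / k0^2 * F(r) + n0^2)."""
    return np.sqrt(4.0 * np.pi / k0**2 * f + n0**2)
```

In this sketch the filter is applied to the background-normalized intensity rather than to the raw intensity, which is an assumption made here so that the zero-order term does not leak through the mask. With the illumination at the edge of the filter radius (`k_cutoff` equal to the magnitude of the incident transverse wavevector), the twin term falls outside the mask and a weak scattered field is recovered up to a small second-order residual.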