
Please note this is a comparison between Version 1 by Mikhail A. Makarkin and Version 2 by Jason Zhu.

In modern digital microscopy, deconvolution methods are widely used to eliminate a number of image defects and increase resolution.

- Deconvolution

- Deep Learning

- Machine learning

1. Deconvolution Types

The works mentioned above rely on a known point spread function (PSF) of the optical system. The PSF describes the imaging system’s response to an infinitely small (point-like) object. It can be measured using small calibration objects of known shape, or calculated from first principles if researchers know the parameters of the imaging system. Methods that take the PSF as given are therefore classified as non-blind deconvolution methods. More often than not, however, the PSF cannot be accurately calculated, for several reasons. First, it is impossible to account for all the noise and distortions that arise during image acquisition. Second, the PSF can be very complex in shape. Finally, the PSF can change during the experiment [1][2][16,17]. For this reason, blind deconvolution methods have been developed that estimate the PSF directly from the resulting images. These methods can be either iterative (the PSF estimate is refined at each pass of the algorithm from parameters of the successively obtained images) or non-iterative (the PSF is calculated in a single step from parameters and metrics of one image).
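To make the non-blind case concrete, the following is a minimal sketch, assuming a Gaussian stand-in for the PSF, circular (FFT-based) convolution, and a Wiener-style inverse filter. The function names and the `balance` parameter are illustrative choices and are not taken from the cited works.

```python
import numpy as np

def gaussian_psf(size, sigma):
    """Normalized 2D Gaussian kernel, used here as a stand-in PSF."""
    ax = np.arange(size) - size // 2
    xx, yy = np.meshgrid(ax, ax)
    psf = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return psf / psf.sum()

def pad_psf(psf, shape):
    """Zero-pad the PSF to the image shape and move its center to index (0, 0)."""
    padded = np.zeros(shape)
    padded[:psf.shape[0], :psf.shape[1]] = psf
    shift = (-(psf.shape[0] // 2), -(psf.shape[1] // 2))
    return np.roll(padded, shift, axis=(0, 1))

def blur(image, psf):
    """Circular convolution of the image with the PSF via the FFT."""
    H = np.fft.fft2(pad_psf(psf, image.shape))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * H))

def wiener_deconvolve(blurred, psf, balance=1e-3):
    """Non-blind deconvolution with a known PSF and a constant regularizer."""
    H = np.fft.fft2(pad_psf(psf, blurred.shape))
    G = np.conj(H) / (np.abs(H) ** 2 + balance)  # regularized inverse filter
    return np.real(np.fft.ifft2(np.fft.fft2(blurred) * G))

# Synthetic test object: two rectangles on an empty background (an assumption).
rng = np.random.default_rng(0)
sharp = np.zeros((64, 64))
sharp[20:28, 20:28] = 1.0
sharp[40:44, 30:50] = 0.5

psf = gaussian_psf(9, 1.5)
blurred = blur(sharp, psf) + 1e-4 * rng.standard_normal(sharp.shape)
restored = wiener_deconvolve(blurred, psf, balance=1e-3)

err_blurred = np.mean((blurred - sharp) ** 2)
err_restored = np.mean((restored - sharp) ** 2)
print(f"MSE blurred: {err_blurred:.2e}, MSE restored: {err_restored:.2e}")
```

In this setting the PSF is supplied by the caller; a blind method would have to estimate `psf` from `blurred` alone, which is where the difficulties discussed in this section begin.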

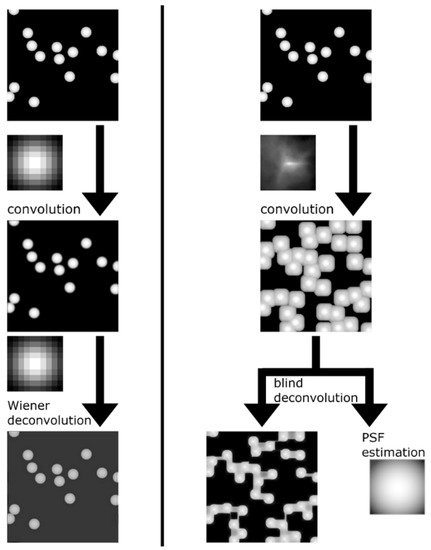

The blind deconvolution problem is severely ill-posed and can have a large (or infinite) number of solutions [3][18]. It is therefore still necessary to impose certain restrictions on the solution, that is, to introduce regularization, for example, in the form of a so-called penalty block, such as a kernel intensity penalizer [4][19] or the structured total least norm (STLN) [5][20], or in other ways. A typical problem in this case is the appearance of image artifacts [6][7][21,22], which arise from an insufficiently accurate PSF estimate or from the nature of the noise. This problem is especially acute for iterative deconvolution methods, since the PSF and noise estimated for different images may not coincide with the real ones, so the error accumulates at each iteration (Figure 1). In this regard, machine learning algorithms are of particular interest because they are specifically geared towards extracting information from data and processing it iteratively.
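The ill-posedness can be written down explicitly. As a sketch in generic notation (the symbols below are assumptions and are not taken from the cited works), let $y$ be the observed image, $x$ the unknown clean image, $k$ the unknown PSF, $n$ the noise, and $\ast$ convolution:

$$
y = k \ast x + n
$$

A regularized blind estimate then solves

$$
(\hat{x}, \hat{k}) = \arg\min_{x,\,k} \; \lVert k \ast x - y \rVert_2^2 + \lambda\, R_x(x) + \mu\, R_k(k),
$$

where $R_x$ and $R_k$ stand for penalty terms on the image and on the kernel, and $\lambda, \mu \ge 0$ are their weights. Without the penalties, the data term alone admits the trivial solution $k = \delta$, $x = y$ (no deblurring at all), as well as every rescaled pair $(\alpha x, k/\alpha)$ with $\alpha \neq 0$, which is one way to see why additional constraints are needed.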

Figure 1. Difference between blind and non-blind deconvolution. On the left is an example of convolution and non-blind deconvolution with the same kernel and some regularization. On the right is an example of poor estimation of PSF with blind deconvolution. A generated image of the same-shaped objects similar to an atomic force microscopy (AFM) image is used as the source. A real AFM tip shape is used as a convolution kernel in this case. Blind deconvolution based on the Villarrubia algorithm [8][23] confuses object shape and tip shape, resulting in poor image restoration.

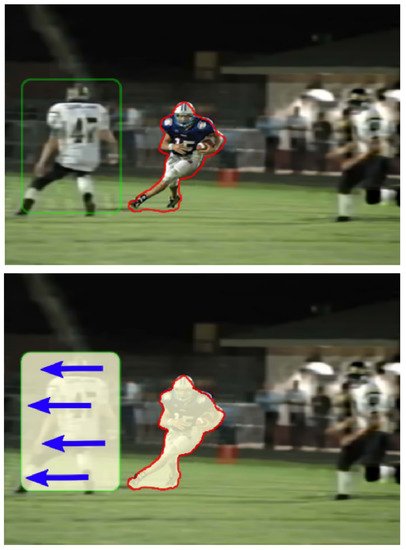

An important issue when solving the deconvolution problem is the nature of the distortion. It can be uniform (the same distortion kernel and/or noise is applied to all parts of the image) or non-uniform (different blur kernels are applied to different parts of the image and/or the noise on them also differs). Non-uniform distortion further complicates the task. In these cases, it is no longer possible to proceed with a general estimate that researchers derive from large-scale dependencies between pixels. Instead, researchers have to consider local dependencies in small areas of the image, which turns the global dependencies into more complex structures in a mathematical sense and makes them much more expensive to compute. Figure 2 shows an example of a non-uniform blur. Accordingly, different deconvolution types must be applied to different types of distortion, either uniform or non-uniform. In what follows, the terms homogeneous and uniform are used as synonyms, as are heterogeneous and non-uniform.
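The distinction between uniform and non-uniform blur can be illustrated with a small sketch. It assumes a piecewise-constant map of Gaussian blur widths (`sigma_map`, a hypothetical name) and composes the result from uniformly blurred copies; real non-uniform blur usually varies smoothly and is not limited to Gaussian kernels.

```python
import numpy as np

def gaussian_blur(image, sigma):
    """Uniform blur: one Gaussian transfer function for the whole image (circular FFT)."""
    if sigma == 0:
        return image.copy()
    fy = np.fft.fftfreq(image.shape[0])[:, None]
    fx = np.fft.fftfreq(image.shape[1])[None, :]
    H = np.exp(-2.0 * np.pi**2 * sigma**2 * (fx**2 + fy**2))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * H))

def nonuniform_blur(image, sigma_map):
    """Non-uniform blur: each pixel is taken from the copy blurred with its own sigma."""
    out = np.zeros(image.shape)
    for sigma in np.unique(sigma_map):
        region = sigma_map == sigma
        out[region] = gaussian_blur(image, sigma)[region]
    return out

rng = np.random.default_rng(1)
image = rng.random((32, 32))

sigma_map = np.zeros((32, 32))
sigma_map[:, 16:] = 2.0  # right half is blurred, left half stays sharp

result = nonuniform_blur(image, sigma_map)
```

A single global kernel cannot describe `result`, so a deconvolution method has to estimate the blur locally, which is the extra cost the paragraph above refers to.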

Figure 2. An example of non-uniform distortion. Image is taken from [9][24]. The operator tracks and focuses on the player with the ball, so there is no blur for his image and the small area around him (red outline). The area indicated by the green outline will show slight defocusing distortion and motion blur (direction of movement is shown by arrows). To adequately restore such an image, it will be necessary to establish the relationships between such areas and consider the transitions between them.

In real-world problems, non-uniform (inhomogeneous) distortions are what one encounters most often.

2. Application of Deep Learning in a Deconvolution Problem

Machine learning is divided into classical machine learning (ML) and deep learning (DL). Classical algorithms are based on manual feature selection or construction. Deep learning transfers the task of feature construction entirely to the neural network. This approach allows the process to be fully automated and performs blind deconvolution in the complete sense, i.e., restoring images using only information from the initial dataset. Solving the deconvolution problem with DL is therefore a promising direction at the moment. Automated feature extraction allows these algorithms to adapt to the variety of resulting images, which is crucial because it is almost impossible to obtain an accurate PSF estimate, and to reconstruct an image with it, in the presence of random noise and/or several types of parameterized noise. A more reasonable solution is to build an iterative approximation of the PSF that adjusts when the input data changes, as DL does. Today, two main neural network types are used for deconvolution.

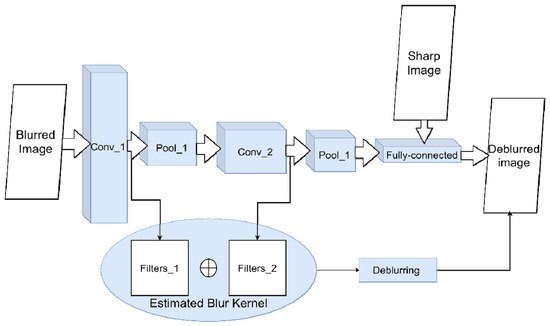

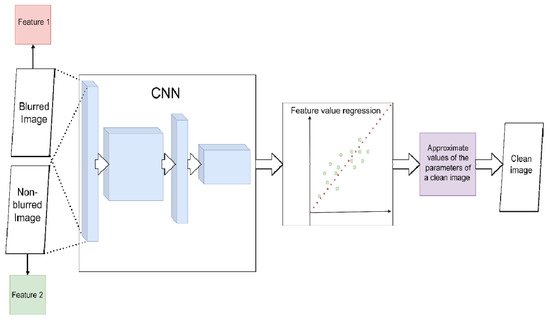

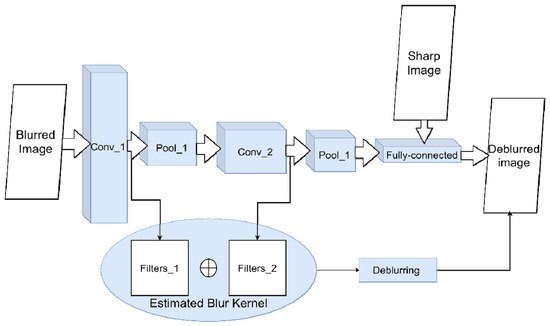

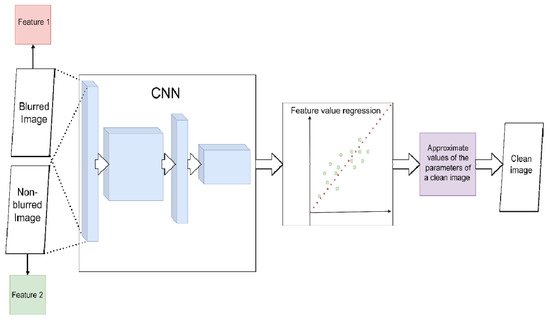

The first is convolutional neural networks (CNN). In CNN, alternating convolutional and downsampling layers extract from the image a set of spatially invariant hierarchical features, a set of low-level geometric shapes, and transformations of pixels that line up into specific high-level features [10][56]. In theory, in the presence of “blurred/non-blurred image” pairs, CNN can learn a specific set of transformations for image pixels that lead to blur (i.e., evaluate the PSF) (Figure 3 and Figure 4). For example, in [11][57], the authors show that, on the one hand, such considerations are relevant; on the other hand, they do not work well for standard CNN architectures and do not always produce a sharp image. The reason is that small kernels are used for convolutions. Because of this, the network is unable to find correlations between far-apart pixels.

Figure 3. Scheme for image deconvolution with kernel blur using CNN. To solve the classification problem of a blurred/non-blurred image, the neural network adjusts its weights and filters in convolutional layers during training. The sequence of applied filters will be approximately equivalent to the blur kernel.

Figure 4. Scheme for image deconvolution with image-to-image regression using CNN (without PSF estimation). CNN directly solves the regression problem with the spatial parameters of the images. The parameters of the blurred image (red) are approximated to the parameters of the non-blurred (green). The resulting estimate is used to restore a clean image.

Nevertheless, using CNNs for image restoration problems leads to the appearance of artifacts. The simple replacement of small kernels with large kernels in convolutions does not generally allow the network to be trained due to the explosion of gradients. Therefore, the authors replaced the standard convolutions with the pseudoinverse kernel of the discrete Fourier transform function. This kernel is chosen so that it can be decomposed into a small number of one-dimensional filters. Standard Wiener deconvolution is used for initial activation, which improves the restored image’s sharpness.
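The idea of decomposing a large kernel into a few one-dimensional filters can be sketched with a singular value decomposition. This is one common way to obtain such a decomposition and is shown here as an assumption; the kernel below is a hypothetical non-separable example, not the pseudoinverse kernel from [11][57].

```python
import numpy as np

def separable_terms(kernel, rank):
    """Approximate a 2D kernel by `rank` outer products of 1D filters (via SVD)."""
    u, s, vt = np.linalg.svd(kernel)
    cols = [u[:, i] * np.sqrt(s[i]) for i in range(rank)]   # vertical 1D filters
    rows = [vt[i, :] * np.sqrt(s[i]) for i in range(rank)]  # horizontal 1D filters
    return cols, rows

def reconstruct(cols, rows):
    """Sum of outer products; equals the kernel when all terms are kept."""
    return sum(np.outer(c, r) for c, r in zip(cols, rows))

def relative_error(kernel, rank):
    """Relative Frobenius error of the rank-limited separable approximation."""
    cols, rows = separable_terms(kernel, rank)
    return np.linalg.norm(kernel - reconstruct(cols, rows)) / np.linalg.norm(kernel)

# Hypothetical 15 x 15 kernel: an isotropic Gaussian minus a diagonal ridge.
ax = np.arange(-7, 8)
xx, yy = np.meshgrid(ax, ax)
kernel = (np.exp(-(xx**2 + yy**2) / 8.0)
          - 0.5 * np.exp(-((xx - yy) ** 2) / 2.0 - ((xx + yy) ** 2) / 50.0))

for rank in (1, 3, 15):
    print(rank, relative_error(kernel, rank))
```

Each retained term costs two 1D convolutions instead of one full 2D convolution, which is why a kernel that is well approximated by a few terms is attractive when large receptive fields are needed.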

However, the convolution problem is not the only one. When using the classic CNN architectures (AlexNet [12][58], VGG [13][59]), researchers have found that they perform poorly at reconstructing images with non-uniform backgrounds, often leaving certain areas of the image blurry. One can often find such a phenomenon as the fluctuation of blur during training—under the same conditions and on the same data, with frozen weights, the network after training still gives both sharp and blurry images. What seems paradoxical is that an increase in the training sample number and an increase in the depth of the model led to the fact that the network began to recover images with blur more often. This is due to some properties of the CNN architectures, primarily the use of standard loss functions. As shown in [14][15][60,61], blur primarily suppresses high-frequency areas in the image (which means that the L-norms of the images decrease). This means that with the standard maximum a posteriori approach (MAP) with an error function that minimizes the L1 or L2 norm, the optimum of these functions will correspond to a blurry image, not a sharp one. As a result, the network learns to produce blurry images. Some modification of the regularization can partially suppress this effect, but this is not a reliable solution. In addition, the estimation for minimizing the distance between the true and blurred image is inconvenient because, if there are strongly and weakly blurred images in the sample, neural networks are trained to display intermediate values of blur parameters. Thus, they either underestimate or overestimate blur [16][62]. Therefore, using CNN for the deconvolution task requires specific additional steps.
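The claim that blur lowers norm-based measures of an image can be checked numerically. The sketch below assumes a synthetic random texture as the sharp image and a 3 x 3 box blur, and compares the L1 and L2 norms of the finite-difference gradients. It illustrates the tendency only: for an isolated ideal step edge, the L1 gradient norm can stay unchanged under blur.

```python
import numpy as np

def gradient_norms(image):
    """Return the L1 and L2 norms of the finite-difference image gradients."""
    gy = np.diff(image, axis=0).ravel()
    gx = np.diff(image, axis=1).ravel()
    g = np.concatenate([gx, gy])
    return np.abs(g).sum(), np.sqrt((g ** 2).sum())

def box_blur(image, radius):
    """Box blur with edge padding: average over a (2r+1) x (2r+1) window."""
    k = 2 * radius + 1
    padded = np.pad(image, radius, mode="edge")
    h, w = image.shape
    out = np.zeros((h, w))
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

rng = np.random.default_rng(42)
sharp = rng.random((64, 64))        # synthetic textured sharp image (assumption)
blurry = box_blur(sharp, radius=1)

l1_sharp, l2_sharp = gradient_norms(sharp)
l1_blurry, l2_blurry = gradient_norms(blurry)
print(f"L1 gradient norm: sharp={l1_sharp:.1f}, blurry={l1_blurry:.1f}")
print(f"L2 gradient norm: sharp={l2_sharp:.1f}, blurry={l2_blurry:.1f}")
```

A loss or prior that rewards small norms of this kind therefore scores the blurry image better than the sharp one, which is consistent with the tendency of such networks to settle on blurry outputs.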

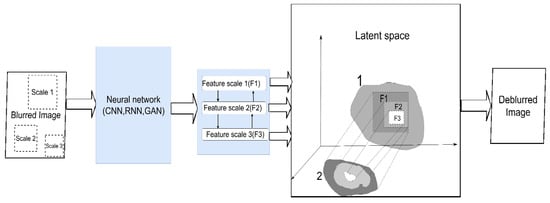

The use of multiscale learning looks promising in this regard (Figure 5). To obtain a clean image after deconvolution, researchers need to solve two problems. First, find local patterns in small patches so that small details can be restored. Second, consider the interaction between far-apart pixels to capture the distortion pattern typical of the image. This requires the network to extract spatial features from multiple image scales. It also helps the network learn how these features change as the resolution changes. In ref. [17][63], the authors propose a neural network architecture called CRCNN (concatenated residual convolutional neural network). In this approach, residual blocks implicitly extract spatial features, which are then fed into an iterative deconvolution (IRD) algorithm. They are then concatenated at the output to obtain multiscale deconvolution. In addition, the approach described in [18][64] integrates the encoder-decoder architecture (see, for example, [19][65]) and recurrent blocks. A distorted image at different scales is fed into the input of the network. When training the network, the weights from the branches for smaller scales are reused, through residual connections, when training the branches for larger scales. This reduces the number of parameters and makes learning easier. Another important advantage of multiscale learning is the ability to abandon kernel estimation completely and to model a clear image end to end. The general idea [20][66] is that co-training the network at different scales and connecting the scales through modified residual blocks allows a fully-fledged regression to be carried out. Researchers are then not looking for the blur kernel but approximating a clear image in spatial terms (for example, the intensity of the pixels at a specific location in an image).
At the moment, multiscale training looks promising, and other notable results have already been obtained in [21][22][23][67,68,69]. Researchers can separately note an attempt to use the attention mechanism to study the relationship between spatial objects and the channels of an image [24][70].
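The coarse-to-fine data flow behind multiscale learning can be sketched without any network at all. In the sketch below, `restore_step` is a hypothetical placeholder for a trained network branch, and 2 x 2 averaging with nearest-neighbor upsampling is an assumed choice of resampling; none of this reproduces the architectures cited above.

```python
import numpy as np

def downsample2(image):
    """Halve the resolution by averaging non-overlapping 2x2 blocks."""
    h, w = image.shape
    trimmed = image[:h - h % 2, :w - w % 2]
    return trimmed.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def upsample2(image):
    """Double the resolution by nearest-neighbor repetition."""
    return image.repeat(2, axis=0).repeat(2, axis=1)

def build_pyramid(image, levels):
    """Return [full, half, quarter, ...] resolution copies of the image."""
    pyramid = [image]
    for _ in range(levels - 1):
        pyramid.append(downsample2(pyramid[-1]))
    return pyramid

def coarse_to_fine(blurred, levels, restore_step):
    """Run one restoration step per scale, passing the coarse estimate upward."""
    pyramid = build_pyramid(blurred, levels)
    estimate = pyramid[-1]                    # start at the coarsest scale
    for level in reversed(range(levels)):
        if level < levels - 1:
            estimate = upsample2(estimate)    # coarse result guides the finer scale
        estimate = restore_step(pyramid[level], estimate)
    return estimate

def restore_step(observed, prior):
    """Placeholder for a network branch: here just a blend of the two inputs."""
    return 0.5 * (observed + prior)

rng = np.random.default_rng(0)
blurred = rng.random((64, 64))
pyramid = build_pyramid(blurred, levels=3)
output = coarse_to_fine(blurred, levels=3, restore_step=restore_step)
```

Because the same `restore_step` is called at every level, the sketch also mirrors the weight reuse across scales described for [18][64], although a real model would learn that step rather than hard-code it.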

Figure 5. Principle of multiscale learning. A neural network of any suitable architecture extracts spatial features at different scales (F1, F2, F3) of the image. In various ways, smaller-scale features are used in conjunction with larger-scale features. This makes it possible to build an approximate explicit, spatially consistent clean image (2) from the latent representation of a clean picture (1).

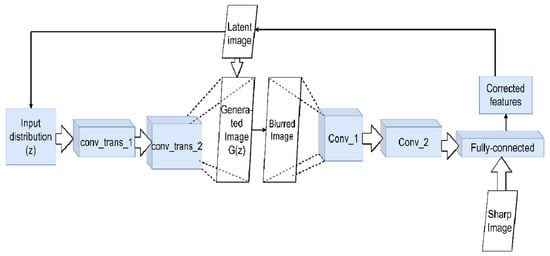

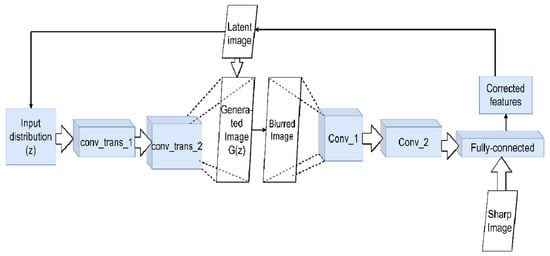

The second type of architecture is generative models, which primarily include various modifications of generative adversarial networks (GANs) [25][71]. Generative models try to express the clean latent image explicitly; information about it is contained implicitly in the function space (Figure 6). GAN training is closely related to the issue discussed above: prioritization and the related training adjustments. The work [26][72] used two pre-trained generative models to create non-blurred images and synthetic blur kernels. Next, these networks were used to approximate the real blur kernel using a third, untrained generator. In ref. [27][73], a special class of network, the spatially constrained generative adversarial network (SCGAN) [28][74], was used, which can isolate spatial features in the latent space and manipulate them directly. This feature made it possible to modify it for training on sets of images projected along three axes, implementing their joint deconvolution, and obtaining a sharp three-dimensional image. When using GANs, the appearance of image artifacts is almost always a particular concern. At the beginning of the training cycle, the network has to make strong assumptions about the nature of the noise (for instance, Gaussian or Poisson) and its uniform distribution across all of the images. The article [29][75] proposes eliminating artifacts without resorting to additional a priori constraints or additional processing. The authors set up a convolutional network as the generator and trained it to produce sharp images with its own error function. At the same time, a common error function remained for the entire GAN, which affected the minimax optimization. A simplified VGG was taken as the discriminator, which determined whether the input image was real or not. As a result, the CNN and the GAN worked together. The generator updated its internal variables directly to reduce the error between x and G(z).
The GAN then updated the internal variables to produce a more realistic output.
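For reference, the minimax optimization mentioned above is usually written in the standard GAN form, with generator $G$, discriminator $D$, real images $x$, and latent input $z$:

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

A generator that also carries its own error function can be sketched, purely as an assumption about the general shape of such a loss and not as the formulation used in [29][75], as a content term plus a weighted adversarial term:

$$
\mathcal{L}_G = \lVert x - G(z) \rVert_2^2 + \lambda \, \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

Here $\lambda \ge 0$ is a hypothetical weight: the first term pulls $G(z)$ toward the sharp target $x$, and the second rewards outputs that the discriminator accepts as real.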

Figure 6. Using GAN for deconvolution. The generator network creates a fake image G(z) from the initial distribution z (at first, it may be just noise). It is fed to the input of the discriminator network, which should distinguish it from a real image. The discriminator network is trained to distinguish between blurred and non-blurred images, adjusting the feature values accordingly. These values form the control signal for the main generator. This signal changes G(z) step by step so that it iteratively approaches the latent clean image.

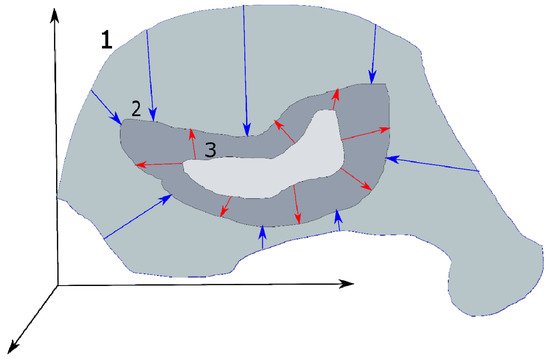

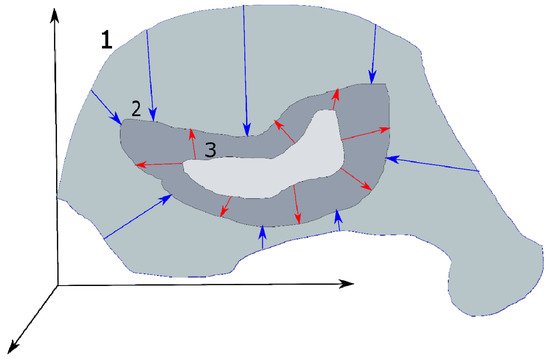

The general problems affecting blind deconvolution are still present while using a DL approach, albeit with their own specificities. Blind deconvolution is an inverse problem that still requires sufficiently strong prior constraints, explicit or implicit, to work (Figure 7). As an example of explicit constraints, one can cite the above assumptions about the homogeneity of noise in images, the assumption that optical aberrations are described by Zernike polynomials [30][76], or directly through special regularizing terms [31][77]. A good example of implicit constraints is the pretraining generator networks in a GAN or the training discriminator networks that use certain blurry/sharp images sets. These actions automatically determine the specific distribution in the response space corresponding to the training data. According to this distribution, control signals are generated and supplied to the generator. It will adjust to this distribution and produce an appropriate set of synthetic PSFs or parameters for a clean image. This approach allows extracting prior constraints directly from the data. This property is typical for generative models in general; for example, the combination of an asymmetric autoencoder with end-to-end connections and a fully connected neural network (FCN) is used in [32][78]. The autoencoder creates a latent representation of the clean image, and the FCN learns to extract the blur kernels from the noise and serves as an additional regularizer for the autoencoder. The coordinated interaction of these networks makes it possible to reduce the deconvolution problem, contributing to a MAP optimization of the network parameters.

Figure 7. The principle of using the prior constraints. It narrows the infinite set of all possible values of the parameters of image 1 to the final one. Stronger prior constraints allow the narrowing of this set more precisely to the one corresponding to the true pure image 2 (shown by blue arrows). In turn, a more accurate and flexible model will iteratively refine its estimate of the clean image 3 (depicted by red arrows).

The clear advantages of the neural network approach include the already mentioned full automation, the ability to use the end-to-end pipeline (which significantly simplifies the operation and debugging of the method, or its modification if necessary), and its high accuracy. The disadvantages include problems common to DL—the need for sufficiently large and diverse datasets for training and computational complexity (especially for modern CNN and GAN architectures). However, it is worth highlighting the weak interpretability of the results—even with the deconvolution problem, it is often necessary to restore the image, estimate the PSF, and understand how it was obtained.

In addition to GAN and CNN, other architectures are used for the deconvolution problem. An autoencoder consists of two connected neural networks—an encoder and a decoder. The encoder takes input data and transforms it, making the representation more compact and concise. In the generating subtype, the variational autoencoder, the encoder part produces not one vector of hidden states but two vectors—mean values and standard deviations—according to which the data will be restored from random values. In addition to [32][78], the article [33][79] can be noted. It uses the output of a denoising autoencoder, which is typically the local average of the true density of the natural image, while the error of the autoencoder is the vector of the mean shift. With a known degradation, it is possible to iteratively decrease the value of the average shift and bring the solution closer to the average value, which is supposed to be cleaned of distortions. In the article [34][80], an autoencoder was used to find invariants on a clean/blurry image, based on which the GAN was trained to restore clean images. Autoencoders are commonly used to remove noise by transforming and compressing data [35][36][37][81,82,83].

For video (a sequential set of slightly differing images), some type of recurrent neural network (RNN) is often used, most often in combination with a CNN. In work [38][84], individual CNNs first obtained the pixel weights of the incoming images from the dynamic scene and extracted its features. Four RNNs then processed each feature map (one for each direction of traversal), and the result was combined by the final convolutional network. This helped increase the receptive field and ensured that spatial non-uniformity of the blur was taken into account. In work [39][85], a pyramidal model for interpolating blurred images was built from convLSTM blocks; it provided continuous interpolation of the intermediate frames, built an averaged sharp frame, and distributed information about it to all modules of the pyramid. This created an iterative deblurring process. An interesting approach is proposed in [40][86], which offers an alternative to multiscale learning called multi-temporal learning. It does not restore a clean image at small scales and then move to the original resolution, but rather works at a temporal resolution. A strong blur is treated as a set of weak blurs that are successive over time. With the help of the RNN, the correction of weak initial blurring is iterated over the entire time scale.

Interest in the use of attention mechanisms in the task of deconvolution is beginning to grow. Attention in neural networks allows one to concentrate the processing of incoming information on its most important parts and establish a certain hierarchy of relations between objects to each other (initially, attention mechanisms were developed in the field of natural language processing and helped to determine the context of the words used). Attention mechanisms can be implemented as separate blocks in classical architectures and used, for example, to store and further summarize global information from different channels [41][87] or to combine hierarchical functions from different points in time in a video in a similar way [42][88]. Attempts are being made to use an architecture entirely based on attention—the so-called transformers [43][89]. An illustrative example of their use is shown in [44][90]. The authors take advantage of one of the main advantages of the transformer—the ability to handle global dependencies. Using residual links and building the architecture in a similar Y-net style allows the user to obtain local dependencies and link them to larger ones. As mentioned above regarding multiscale learning, this is one of the main problems in image deconvolution and, perhaps, the attention mechanism will allow one to solve it more efficiently—in some tasks (for example, removing rain and moiré patterns), the increase in PSNR and SSIM is very noticeable.
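The core operation behind attention blocks and transformers is scaled dot-product attention, which can be sketched as follows. The token count, feature size, and random projection matrices (`wq`, `wk`, `wv`) are placeholders chosen for illustration; in a trained model the projections are learned, and the cited architectures add further components around this operation.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(q, k, v):
    """Return the attention output and weights for queries q, keys k, values v."""
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d)        # similarity of every query to every key
    weights = softmax(scores, axis=-1)   # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
tokens = rng.standard_normal((16, 8))    # e.g. 16 image patches, 8 features each
wq, wk, wv = (rng.standard_normal((8, 8)) for _ in range(3))

out, weights = scaled_dot_product_attention(tokens @ wq, tokens @ wk, tokens @ wv)
```

Every row of `weights` mixes information from all 16 patches at once, which is the sense in which attention handles global dependencies that small convolution kernels cannot reach in a single layer.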

Deep learning is also beginning to be widely used in microscopy and related techniques. Yang et al. showed an example of non-blind deconvolution using neural networks for 3D microscopy with different viewing angles for samples [45][91]. CNNs usually have some difficulty recognizing rotations, so the authors used GANs with a self-supervised approach to learning. This allowed them to surpass the standard algorithms for three-dimensional microscopy (CBIF [46][92], EBMD [47][13]) in terms of quantitative and qualitative characteristics: PSNR (peak signal-to-noise ratio), SSIM (structural similarity index), and CC (correlation coefficient). The flexibility of deep learning can also be seen in [48][93], which shows how it can be combined with a classical method. In this work, adaptive filters were used with the Wiener-Kolmogorov algorithm. The neural network predicted the optimal values of the regularizer and adjusted the filter kernel accordingly, which improved the quality of the resulting image. In this case, the computational time was less than with direct image restoration by a neural network. This is an important point: in microscopy, especially in imaging cytometry, a transition to real-time image processing is needed. There are also examples where super-resolution is achieved using GANs [49][50][94,95], encoder-decoder networks [51][96], and U-Net-based architectures [52][97]. The use of deep learning in these works made it possible to significantly improve the quality of the reconstructed image and remove the dependence on the optical properties of the instruments, reducing the problem to a purely computational one. Despite the computational demands of deep learning algorithms, they can show excellent performance compared with classical methods (especially nonlinear ones) [53][98], precisely because of their ability to build hierarchical features. Their other characteristic is the need for large datasets for training.
On the one hand, this need is met by the appearance of large public databases of cellular images; on the other hand, it is still a problem. However, in imaging flow cytometry, a large dataset is relatively easy to collect. It is therefore convenient to use neural networks in imaging flow cytometry; for example, a residual dense network can be used to remove blur [54][99].
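Two of the quality metrics named above, PSNR and the correlation coefficient, are simple enough to sketch directly. The version below assumes images scaled to a known `data_range` and uses the Pearson coefficient for CC; SSIM is omitted because it involves local windowed statistics.

```python
import numpy as np

def psnr(reference, estimate, data_range=1.0):
    """Peak signal-to-noise ratio in dB; higher means closer to the reference."""
    diff = np.asarray(reference, float) - np.asarray(estimate, float)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(data_range ** 2 / mse)

def correlation_coefficient(reference, estimate):
    """Pearson correlation coefficient between two images."""
    return float(np.corrcoef(np.ravel(reference), np.ravel(estimate))[0, 1])

reference = np.linspace(0.0, 1.0, 16).reshape(4, 4)
estimate = reference + 0.1   # a constant error of 0.1 everywhere, so MSE = 0.01

print(psnr(reference, estimate))                     # approximately 20 dB
print(correlation_coefficient(reference, estimate))  # approximately 1
```

The example also shows why the metrics are reported together: a constant offset lowers PSNR noticeably while leaving the correlation coefficient essentially unchanged.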

The use of deep learning made it possible to cope with non-uniform distortion, which classical methods could hardly handle, and therefore relaxed the quality requirements for the images to be restored. In addition to improving the numerical characteristics, deep learning allows the image recovery process to be fully automated and, therefore, extends its use to non-specialist users.

Furthermore, deep learning is used in medical imaging, by which researchers here mean all non-microscopic instruments (e.g., CT, MRI, and ultrasound). In ref. [55][100], a standard approach was used: a convolutional network extracts the main features of images at a low resolution and restores a clean image at a higher resolution. A similar but slightly more complicated method is shown in [56][101], where a so-called upsampling layer was additionally used in the network architecture (the terminology requires caution: the layer was previously called the deconvolution layer, but it does not perform the deconvolution operation, and this caused confusion). The methods were tested on MRI and retinal images. Chen et al. proposed adding residual bandwidth to the U-net encoder/decoder network to improve the quality of low-dose CT scans [57][102]. The encoder branch reduces noise and artifacts, and the decoder recovers structural information in the CT image. In addition, a residual gap-filling mechanism complements the details lost when going through multiple layers of convolution and deconvolution. Finally, in [58][103], the convolutional network was trained to recognize the difference between low- and high-resolution MRI heart slices and then taught to use this information as a recovery operator.

Deep learning is being used to improve image quality in photography and video. In addition to the usual adaptation of algorithms to, for example, mobile devices (see the review [59][104]), it can also be found being applied to the restoration of old photographs [60][105] or shooting fast-moving objects [61][106].