Continuous industrial innovation led the European Commission to officially mark 2021 as the beginning of the era of “Industry 5.0”. In this 5th industrial revolution, remote sensing (RS) has the potential to be one of the most important technologies for today’s agriculture. RS originated in the 19th century (specifically in 1858) with the use of air balloons for aerial observations. At present, it occupies a central position in precision agriculture (PA) and soil studies. Several terms are commonly used interchangeably in this context, including “precision farming”, “precision approach”, “remote sensing”, “digital farming”, “information intensive agriculture”, “smart agriculture”, “variable rate technology (VRT)”, “global navigation satellite system (GNSS) agriculture”, “farming by inch”, “site specific crop management”, “digital agriculture”, and “agriculture 5.0”. RS is a broad term that covers various technological systems, such as satellites, RPAs, GNSS, geographic information systems (GIS), big data analysis, the Internet of Things (IoT), the Internet of Everything (IoE), cloud computing, wireless sensor technologies (WST), decision support systems (DSS), and autonomous robots.

- agriculture 5.0

- drones

- remotely piloted aircrafts (RPAs)

- precision agriculture

- remote sensing

- Internet of Things (IoT)

1. Agricultural Remote Sensing

Agricultural remote sensing allows crops to be observed on a large scale in a synoptic, remote, and non-destructive manner. Usually, it involves a sensor mounted on a platform, which could be a satellite, an RPA, an unmanned ground vehicle (UGV), or a field robot. The sensor collects the electromagnetic radiation reflected or emitted by plants, which is then processed to produce useful information and products. This information consists of traits of the agricultural system and their variations in space and time. Functional traits have been defined as the biochemical, morphological, phenological, physiological, and structural characteristics that regulate organism (plant) performance or fitness [1]. These traits vary from one plant to another and from one location to another, and can be categorized as typological, biological, physical, structural, geometrical, or chemical according to their nature. RS relates the radiance of plants to the respective traits in order to extract useful information, e.g., leaf area index (LAI), chlorophyll content, and soil moisture content. Nevertheless, a number of factors, such as the crop phenological stage, crop type, soil type, location, wind speed, precipitation, humidity, solar radiation, and nutrient supply, need to be known to generate accurate information from RS products. Plant density, organ counting, LAI, green cover fraction, leaf biochemical content, leaf orientation, height, soil and vegetation temperature, and soil moisture are among the prominent informational products that RS delivers. This information is further processed and used to interpret crop health, disease infection, irrigation period, nutrient deficiency, and yield estimations.

Weiss et al. [1] grouped the approaches for retrieving agricultural data from RS into the following three principal categories:

- Purely empirical methods: establishing a direct relationship between a measured RS signal and biophysical variables (linear and nonlinear regressions, including machine learning).

- Mechanistic methods: model inversion based on Maxwell’s equations (for radar interferometry and polarimetry), optical and projective geometry (for LiDAR and photogrammetry), and radiative transfer theory (for solar and microwave domains).

- Contextual methods: processing the spatial and temporal characteristics of captured images using segmentation techniques.
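As a minimal sketch of the purely empirical category, the snippet below fits a straight line between an RS signal (here NDVI) and a field-measured biophysical variable (here LAI), then applies it to new pixels. The sample values, the function names `fit_linear_model` and `predict`, and the choice of a linear model are assumptions made for illustration; they are not taken from Weiss et al. [1].

```python
import numpy as np

def fit_linear_model(signal, variable):
    """Least-squares fit of: variable ≈ slope * signal + intercept."""
    slope, intercept = np.polyfit(signal, variable, deg=1)
    return float(slope), float(intercept)

def predict(signal, slope, intercept):
    """Apply the fitted line to new RS signal values."""
    return slope * np.asarray(signal, dtype=float) + intercept

# Hypothetical calibration plots: NDVI from imagery, LAI measured in the field.
ndvi_samples = np.array([0.20, 0.35, 0.50, 0.65, 0.80])
lai_samples = np.array([0.5, 1.2, 2.0, 2.7, 3.5])

slope, intercept = fit_linear_model(ndvi_samples, lai_samples)
lai_estimates = predict([0.30, 0.70], slope, intercept)
```

A relationship of this kind is only valid for the crop, growth stage, and sensor it was calibrated on, which is why the text stresses that ancillary factors must be known.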

Another way of treating RS information is the calculation of vegetation indices (VIs). The VIs most commonly calculated using RS include, among others: normalized difference vegetation index (NDVI) for crop monitoring and empirical studies; soil-adjusted vegetation index (SAVI) for improving the sensitivity of NDVI to soil backgrounds; green normalized difference vegetation index (gNDVI) for estimating photosynthetic activity; wide dynamic range vegetation index (WDRVI) for enhancing the dynamic range of NDVI; chlorophyll index–green (CI–G) for determining the leaf chlorophyll content; modified soil-adjusted vegetation index (MSAVI) for reducing the influence of bare soil on SAVI; optimized soil-adjusted vegetation index (OSAVI) for calculating aboveground biomass, leaf nitrogen content, and chlorophyll content; chlorophyll vegetation index (CVI) for representing the relative abundance of vegetation and soil; triangular vegetation index (TVI) for predicting leaf nitrogen status; normalized green red difference index (NGRDI) for estimating nutrient status; visible atmospherically resistant index (VARI) for mitigating illumination differences and atmospheric effects in the visible spectrum; crop water stress index (CWSI) for measuring canopy temperature changes and dynamics; and photochemical reflectance index (PRI) for detecting disease symptoms [2].
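To make a few of these indices concrete, the sketch below computes NDVI, gNDVI, SAVI, and OSAVI from band reflectances using their commonly published definitions. The function names are illustrative, the soil factor `L = 0.5` for SAVI and the constant `0.16` for OSAVI are the usual default values rather than values stated in this text, and inputs are assumed to be reflectances between 0 and 1.

```python
import numpy as np

def _safe_ratio(num, den):
    """Element-wise num / den, returning NaN where the denominator is zero."""
    num, den = np.broadcast_arrays(np.asarray(num, dtype=float),
                                   np.asarray(den, dtype=float))
    out = np.full(num.shape, np.nan)
    np.divide(num, den, out=out, where=den != 0)
    return out

def ndvi(nir, red):
    nir, red = np.asarray(nir, dtype=float), np.asarray(red, dtype=float)
    return _safe_ratio(nir - red, nir + red)

def gndvi(nir, green):
    nir, green = np.asarray(nir, dtype=float), np.asarray(green, dtype=float)
    return _safe_ratio(nir - green, nir + green)

def savi(nir, red, L=0.5):
    # L is the soil brightness correction factor (0.5 is the usual default).
    nir, red = np.asarray(nir, dtype=float), np.asarray(red, dtype=float)
    return (1.0 + L) * _safe_ratio(nir - red, nir + red + L)

def osavi(nir, red):
    # OSAVI fixes the soil adjustment term at 0.16.
    nir, red = np.asarray(nir, dtype=float), np.asarray(red, dtype=float)
    return _safe_ratio(nir - red, nir + red + 0.16)

# Hypothetical reflectances for three pixels: dense canopy, sparse canopy, bare soil.
nir = np.array([0.50, 0.35, 0.25])
red = np.array([0.05, 0.15, 0.20])
green = np.array([0.08, 0.12, 0.18])
indices = {"NDVI": ndvi(nir, red), "gNDVI": gndvi(nir, green),
           "SAVI": savi(nir, red), "OSAVI": osavi(nir, red)}
```

All four indices share the same normalized-difference structure, so they rank these three pixels in the same order; they differ mainly in how strongly the soil background is damped.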

Sensors used in RS for crop monitoring normally detect the following electromagnetic wave bands, depending on the specific objectives [2]:

- Thermal infrared band;

- Red, green, and blue (RGB) bands;

- Near infrared (NIR) band;

- Red edge band (RE).

The breadth of information retrieved from RS is considerable and can support sustainable agriculture capable of feeding a rapidly growing world population. Prominent applications of RS include the identification of phenotypically better varieties, optimization of crop management, evapotranspiration estimation, agricultural phenology, crop production forecasting, provision of ecosystem services (related to soil or water resources), plant and animal biodiversity screening, crop and land monitoring, and precision farming [3][1][4][5][6][7].

2. Sensors

The backbone of RS is its sensors, which provide all the basic information, not only on crops but also on the environment. The quality and yield of plants are highly dependent on factors such as temperature, humidity, light, and the level of carbon dioxide (CO2) [8]. These factors, along with a variety of other parameters, can easily be measured using sensors [9]. Sensors usually serve for narrow-band hyperspectral or broad-band multispectral data acquisition, and can be space-borne, air-borne, or ground-based according to their use in satellites, RPAs, and fields or laboratories, respectively [5]. Currently, the most commonly used sensors in agricultural RS include synthetic aperture radar (SAR), near-infrared (NIR), light detection and ranging (LiDAR), fluorescence spectroscopy and imaging, multispectral, and visible RGB (VIS) sensors, which together cover a variety of parameters according to needs. Table 1 lists the RS sensors, grouped under main categories, with their respective applications in agriculture. For a detailed account of the use of different sensors in agriculture, the study by Yang et al. [10] is recommended.

Table 1. Principal categories of remote sensing (RS) sensors with their respective functions in agricultural studies.

| Sensor | Purpose | Ref. |
|---|---|---|
| Synthetic aperture radar (SAR) | Crop classification, crop growth monitoring, and soil moisture monitoring. | [11] |
| Visible RGB (VIS) | Vegetation classification and estimation of geometric attributes. | [7] |
| Multispectral and hyperspectral | Physiological and biochemical attributes (leaf area index, crop water content, leaf/canopy chlorophyll content, and nitrogen content). | [12] |
| Fluorescence spectroscopy and imaging sensors | Chlorophyll and nitrogen content, nitrogen-to-carbon ratio, and leaf area index. | [13] |
| Laser/light detection and ranging (LiDAR) | Horizontal and vertical structural characteristics of plants. | [14] |
| Near-infrared (NIR) | Crop health, water management, soil moisture analysis, plant counting, and erosion analysis. | [2] |

Ground-based sensors have long been in use, i.e., since the third industrial revolution, and those of the current era are wireless sensor technologies (WSTs). WSTs use radio frequency identification (RFID) and wireless sensor networks (WSN) [15]. The 5th industrial revolution has also resulted in rapid research and development in terms of smaller sensing devices, digital circuits, and radio frequency technology. The principal difference between RFID and WSN is that WSN permits multihop communication and network topologies, whereas RFID devices offer no cooperative capabilities. WSNs are now the base not only of precision agriculture, but also of precision livestock farming and precision poultry farming. RFID, which was originally developed for identification purposes, is currently being used for developing new wireless sensor devices. These systems comprise a number of tiny sensor nodes (each consisting of three basic components: sensing, processing, and communication) and a few sinks. Each wireless sensor node can be deployed in the desired crop field, and the nodes are linked through a gateway unit that communicates with other computer systems via wireless local area networks (WLAN), local area networks (LAN), the Internet of Things and Internet of Everything [16], wireless wide area networks (WWAN), or controller area networks (CAN), making use of standard protocols (e.g., general packet radio service (GPRS) or global system for mobile communication (GSM)) [17]. Given their maturity and potential, these technologies seem promising for agricultural remote sensing. Another reason for implementing WSNs in agriculture is that a DSS requires processed information rather than raw sensor data. Therefore, WSNs use a meshed network of wireless sensors to collect, process, and communicate the data for DSS, thus ensuring a controlled system [18].
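The multihop property that distinguishes a WSN from RFID can be illustrated with a small sketch: given the radio links between nodes, a breadth-first search from the sink yields the number of hops each node needs to deliver its data. The node names, the link layout, and the function `hops_to_sink` are hypothetical, and real WSN routing protocols are considerably more involved than this.

```python
from collections import deque

def hops_to_sink(links, sink):
    """Breadth-first search from the sink; returns {node: hop count to sink}."""
    hops = {sink: 0}
    queue = deque([sink])
    while queue:
        node = queue.popleft()
        for neighbour in links.get(node, ()):
            if neighbour not in hops:
                hops[neighbour] = hops[node] + 1
                queue.append(neighbour)
    return hops

# Hypothetical field layout: each entry lists the nodes within radio range.
links = {
    "sink": ["n1", "n2"],
    "n1": ["sink", "n3"],
    "n2": ["sink"],
    "n3": ["n1", "n4"],
    "n4": ["n3"],
}
hop_counts = hops_to_sink(links, "sink")
```

Here node `n4` is out of direct range of the sink and reaches it through `n3` and `n1`, which is exactly the cooperative relaying that RFID tags do not provide.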

WST applications in the agricultural sector have been researched extensively and are now very common in greenhouse and livestock monitoring. It is also important to consider the challenges faced when implementing radio frequencies in crop fields, e.g., weather conditions, reliable link quality above crop canopies, and coverage. Remote sensing also requires a mechanism for detecting erroneous measurements so that wrongly collected data can be rectified. Actual examples of WST applications, although few, include creating a mobile WSN that connects tractors or combine harvesters with other vehicles, enabling them to exchange data [17]. Recently, a customized WSN was used to detect fungal disease in strawberry production using a distributed mesh network of wireless mini weather stations equipped with relative humidity, temperature, and leaf wetness sensors. Similarly, other studies implemented WSNs in vineyards for precision viticulture and addressed heat summation and potential frost damage [17]. Various other studies on their application in irrigation, greenhouse, and horticulture domains have also been reported [18]. However, there is an immense need for a standards body to regulate the development of agricultural sensor devices as well as their subsequent implementation in modeling and DSS.
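One simple way to approach the detection of erroneous measurements is a robust outlier test on the readings of a single sensor. The sketch below uses the modified z-score based on the median absolute deviation (MAD); this particular technique, the threshold of 3.5, and the sample readings are assumptions chosen for illustration, not a method described in the cited studies.

```python
import statistics

def flag_outliers(readings, threshold=3.5):
    """Return a list of booleans marking readings with a large modified z-score."""
    median = statistics.median(readings)
    deviations = [abs(r - median) for r in readings]
    mad = statistics.median(deviations)
    if mad == 0:
        # More than half of the readings are identical; no robust scale is available.
        return [False] * len(readings)
    return [abs(0.6745 * (r - median) / mad) > threshold for r in readings]

# Hypothetical air temperature readings (degrees Celsius) with one faulty value.
temperatures = [21.0, 21.4, 20.9, 21.2, 55.0, 21.1]
flags = flag_outliers(temperatures)
clean = [t for t, bad in zip(temperatures, flags) if not bad]
```

Because the median and MAD are barely affected by a single extreme value, the faulty reading is flagged without distorting the scale used to judge the others.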

These technologies have been in constant transition over the past few years, e.g., through updates to various ISO (International Organization for Standardization) standards for RFID (ISO/IEC 18000, ISO 11784, etc.), and to Bluetooth, Wibree, WiFi, and ZigBee for WSNs [15][19]. Various studies have reported on the use of these technologies, such as the use of ZigBee for precision agriculture (elaborated by Sahitya et al. [20]). A substantial improvement in WSNs for subsequent incorporation into satellites and RPAs will certainly augment the efficiency of these platforms. For a summary of some of the sensors commonly employed in agriculture to provide data about plant, soil, and climate conditions, the study by Abbasi et al. [19] is recommended.

Many renowned companies and institutes, including NASA, are involved in the production of novel and efficient sensors. For example, the Microsoft Kinect sensor developed in the last decade shows remarkable potential for rapid characterization of vegetation structure, as reported previously [21]. For a comprehensive account of the latest advances in sensor technology applied to the agricultural sector, the study by Kayad et al. is recommended [22].

Joint ventures by governments and academic institutes, apart from those of the private sector, in the implementation of sensor technology can bear rapid and efficient results. One example is the Center of Satellite Communication and Remote Sensing at Istanbul Technical University (ITU) and the Turkish Statistical Institute joining hands to realize the sensor-driven agricultural monitoring and information system (TARBIL) project [23]. Various sensors based on wireless technology are currently being invented and developed, through private firms and joint ventures, for applications in viticulture, irrigation, greenhouse, horticulture, pest control, fertilization, etc. [19], which also highlights the need for regulating and standards bodies.

Similarly, John Deere, a leading farming equipment company, is currently undertaking various IoT projects, including sensor-fitted autonomous tractors capable of yield estimation, among other features [24]. Apart from the industrial perspective, research in the academic domain is also on the rise in this sphere. These technologies are promising for efficient and sustainable farming, which is what is expected of the 5th industrial revolution.

Satellites and RPAs are two prominent platforms of RS technology, and it is therefore important to consider their use in the 5th industrial revolution, focusing mainly on the agricultural sector. Various studies have highlighted the limitations of one or the other, but both are beneficial in general. In this regard, their current and future roles as carriers of on-board sensors and cameras are reported here.

2.1. Satellite

One of the oldest and main platforms of RS technology is the satellite. In 1957, the first-ever satellite, Sputnik 1, weighing 183 pounds and about the size of a basketball, was launched into space by the Soviet Union [25]. Satellite systems have been operational for over 40 years, but a milestone in RS was achieved with the creation of normalized difference vegetation index (NDVI) maps, which provide information about land cover, phenology, and vegetation activity, using the advanced very high resolution radiometer (AVHRR) [26]. With improved geometric, spectral, and radiometric properties, the moderate resolution imaging spectroradiometer (MODIS) significantly advanced remote sensing. MODIS instruments laid the foundation of a new era in remote sensing after their launch in 1999, providing gross primary production (GPP) products at 1-km spatial resolution [26].

Numerous satellites have been launched in the past two decades with various spatial and temporal resolutions [27], which proved to be the basis of the 5th technological revolution. Today, various privately held and government-managed satellites carrying versatile sensors provide an efficient foundation for RS, delivering huge sets of interpretable data and information. For example, Spain started its “National Remote Sensing Plan (PNT)” in 2004, aimed at data provisioning for various Spanish public administrations [28]. This domain received a boost in 2008, when Landsat (US-operated satellites) images were made freely accessible (under open license), and the launch of the Sentinel-1A and Sentinel-2A satellites, in 2014 and 2015, respectively, under the Copernicus Program, further increased the momentum of RS. The Sentinel constellation is the part of the Copernicus program that was launched by the European Commission and the European Space Agency (ESA). As more than 30 satellites provide data for this program, the freely available data are of great use to scientists analyzing agriculture. In addition, a separate database of the Copernicus program, titled “Copernicus Academic”, facilitates training and better utilization of satellite data [29]. Similarly, the Landsat program, launched by the United States as a joint venture between the National Aeronautics and Space Administration (NASA) and the United States Geological Survey (USGS), also offers free access to high-resolution images. The present need is to wisely utilize these freely accessible data to achieve ambitious agricultural goals. Furthermore, space-borne hyperspectral missions that launched recently, such as ZY1-AHSI, PRISMA, DESIS, and GF5-AHSI, or that are planned for launch in upcoming years, such as SBG, CHIME, and EnMAP, are expected to augment agricultural monitoring in terms of vegetation functions and traits. It is imperative to address recurring limitations, such as soil background and canopy structure interference, signal variations in optical vegetation properties, and poor discrimination of functional traits due to the small number of narrow spectral regions [30].

Recently, a cloud-free crop map service utilizing radar and optical data from Sentinel-1 and Sentinel-2, respectively, was announced under a joint venture by two private companies, VanderSat and BASF Digital Farming GmbH, providing daily maps of field-scale crop biomass [31]. This is a remarkable breakthrough in satellite RS, as cloud cover has been a great challenge since the beginning of this technology. Recent advances are also making satellite RS more refined and efficient through the use of machine learning. A study conducted by Mazzia et al. [32] reported a novel strategy for refining freely available satellite images to minimize the errors often caused by their low or moderate resolution. This is an excellent example of blended technology: the authors trained a convolutional neural network using high-resolution images from an RPA and then derived the NDVI to interpret the low- or moderate-resolution satellite images for vineyard vigor maps. Another use of this RS platform is the construction of historical crop maps, which play a significant role in crop prediction and related simulations. Recently, a study established the relationship between MODIS-derived NDVI and the grain yields of wheat, barley, and all cereals for 20 European countries (including Austria, Belgium, Denmark, France, Germany, Ireland, Sweden, and the United Kingdom). After analyzing the data from 2010 to 2018, a higher percentage of cereals was reported (35% of arable land). Yield prediction from NDVI for cereals was reported as trustworthy over a period of 4 months [33]. More studies of this nature on a global scale are needed to strengthen the agriculture of today.

Implementation of existing technological tools is also very important. For instance, Google Earth Engine (GEE) offers diverse services in this domain for the agricultural industry. GEE facilitates the download of imagery products from various satellites (Landsat, Sentinel, MODIS, etc.) along with cloud-based management, granting access to its servers through either an academic email or a standard Gmail account. Processing very large geospatial datasets is often slow and difficult. In this regard, GEE, as a cloud-based platform, provides access to high-performance computing resources at no cost. Once an algorithm is developed, systematic data products and interactive applications can be built on GEE, thereby reducing the complexities of programming and application development [18]. A stepwise illustration of product downloads from GEE is presented in Figure 1, although the final exported product can differ from this illustration, depending on the needs and preferred format.

Figure 1. A stepwise illustration (1 to 7) of processing and downloading satellite imagery products from Google Earth Engine (GEE).

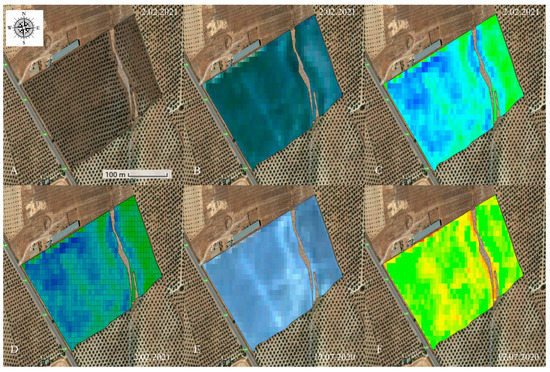

A practical example of benefiting from the free services of GEE is the construction of vegetation index (VI) maps for olive groves located at Guadahortuna (Granada, Spain) (Figure 2). Sentinel-2 images acquired between July 2020 and February 2021 were accessed through GEE at a resolution of 10 m × 10 m and processed to generate NDVI vector tiles (using the formula given below), which were then exported in GeoJSON format. The GeoJSON format is user friendly and yields the data in the form of a table in which each pixel is a record with its NDVI value, which is quite handy to work with.

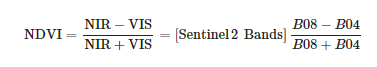

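Likewise, another practical example of this nature is the data acquisition, via GEE, for a vineyard parcel situated at Reggio Emilia (Emilia-Romagna, Italy) (Figure 3). In both of these examples, NDVI was calculated using the following formula:

$$\mathrm{NDVI} = \frac{\mathrm{NIR} - \mathrm{Red}}{\mathrm{NIR} + \mathrm{Red}}$$

where NIR and Red are the reflectances in the near-infrared and red bands (bands 8 and 4 of Sentinel-2, respectively).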

Figure 3. Sentinel-2 images accessed through Google Earth Engine (GEE). (A) Area of interest, (B) RGB field composition, (C) NDVI raster tile, (D) RGB field composition, (E) NDVI raster tile, (F) NDVI vector tile (Images facilitated by Graniot).
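The per-pixel NDVI table described for the GeoJSON export can be sketched as follows. This is only an illustration under stated assumptions: the band arrays are tiny made-up reflectance grids rather than GEE output, the function name `ndvi_to_geojson` and the `ndvi` property name are hypothetical, and coordinates are kept in the grid's own units (strict GeoJSON expects longitude and latitude in WGS 84).

```python
import json
import numpy as np

def ndvi_to_geojson(nir, red, origin_x, origin_y, pixel_size):
    """Build a GeoJSON FeatureCollection with one square polygon per pixel."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    ndvi = (nir - red) / (nir + red)  # assumes no pixel has nir + red == 0
    features = []
    for row in range(ndvi.shape[0]):
        for col in range(ndvi.shape[1]):
            x0 = origin_x + col * pixel_size
            y1 = origin_y - row * pixel_size  # top edge; rows run north to south
            x1, y0 = x0 + pixel_size, y1 - pixel_size
            # Closed exterior ring, listed counterclockwise.
            ring = [[x0, y0], [x1, y0], [x1, y1], [x0, y1], [x0, y0]]
            features.append({
                "type": "Feature",
                "geometry": {"type": "Polygon", "coordinates": [ring]},
                "properties": {"ndvi": round(float(ndvi[row, col]), 4)},
            })
    return {"type": "FeatureCollection", "features": features}

# Hypothetical 1 x 2 pixel grid with 10-unit pixels and its top-left corner at (0, 10).
collection = ndvi_to_geojson([[0.5, 0.4]], [[0.1, 0.2]],
                             origin_x=0, origin_y=10, pixel_size=10)
geojson_text = json.dumps(collection)
```

Each feature's `properties` entry plays the role of one table row, so the export can be loaded directly into tools that read attribute tables.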

Another important aspect of using satellite technology is that digital boundaries of crop fields are a prerequisite. Areas with intensive agriculture have solved this problem, but it persists for small crop areas (<1 ha). The 5th revolution is playing its part here. Recently, a novel method of crop field delineation, “DESTIN”, was reported with an accuracy of 95–99%, fusing temporal and spatial information acquired from WorldView and Planet satellite imagery [34]. Studies of this type are opening the way for digitalizing agriculture and making it sustainable. This new era is not just about processing acquired data; it is also about optimizing the data for improved-quality products. For example, a recent study on formulating optimal segmentation parameters to obtain precise spatial information on agricultural parcels, which normally suffer from over- or under-segmentation, clearly demonstrates this idea [35]. This is not limited to the optimization of satellite imagery; it is a way to achieve automation and implementation of these technologies on all scales.

2.2. Remotely Piloted Aircrafts (RPAs)

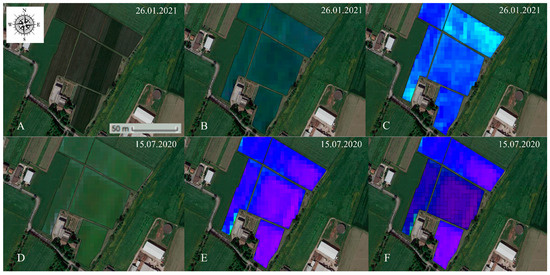

The use of this technology has risen over the past decade, given its worth as an integral part of RS technology. Intensive research has been conducted on RPA applications in crop monitoring, disease surveillance, soil analysis, irrigation, fertilization, mechanical pollination, weed management, crop harvest, crop insurance, tree plantation, etc. [2] (Figure 4), which points to their remarkable potential in the agricultural sector.

Figure 4. A schematic diagram of RPA application in agriculture.

The first use of RPAs in agriculture was reported as far back as 1986, for monitoring Montana’s forest fires [2]. The 5th revolution is anticipated to bring more frequent use of RPAs in farming. According to one estimate, the RPA and agricultural robotics industry could be worth as much as 28 billion dollars by 2028 and up to 35 billion dollars by 2038 [36].

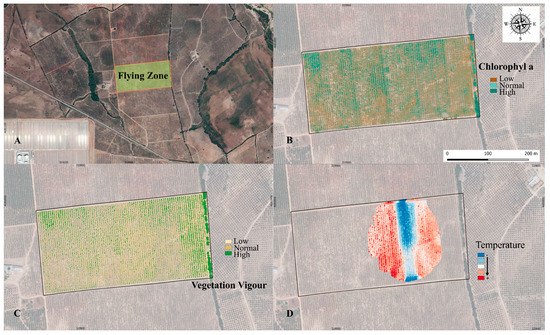

The use of RPA imagery consists of mission or flight planning, data collection, data processing, and information report generation. Several free resources are available for each of these purposes. For example, QGroundControl is open-source software that can act as a ground control station (GCS) for RPAs. Similarly, several user-friendly open-source software packages are available for image processing, orthomosaic assembly, and the construction of digital surface models (DSM) and digital elevation models (DEM). Open Drone Map (ODM) is a prominent example, and offers the creation and visualization of orthomosaics, point clouds, 3D models, and other products [2]. RPAs offer better resolution and greater flexibility of use than satellite imagery. These features help to monitor the most important crop parameters: nitrogen (N) and chlorophyll contents [37]. For example, Figure 5 shows an overview of the practical application of RPAs to acquire VIs, such as NDVI and normalized difference red edge (NDRE), and thermal maps. A Yuneec Typhoon H hexacopter RPA, flown at a height of 100 m and equipped with a multispectral camera (Parrot Sequoia) and a thermal sensor (Yuneec CGOET), was used to monitor olive crops in Cordoba, Spain. After processing the images, treatment zones were indicated depending upon the vegetation vigour, stress, and N content (Figure 6).

Figure 5. Vegetation index (VI) maps generated through a Parrot Sequoia (a multispectral camera) and a Yuneec CGOET (a thermal sensor) mounted on a Yuneec Typhoon H hexacopter RPA. (A) Crop area studied. (B) Map of “chlorophyll a” content based on NDRE. (C) Map of vegetation status based on NDVI. (D) Thermal map established using the Yuneec CGOET. (Images facilitated by MCBiodrone).

Figure 6. A set of field zones recommended for treatment based on the vegetation index (VI) maps, generated using a Parrot Sequoia and a Yuneec CGOET mounted on a Yuneec Typhoon H hexacopter RPA for olive crops (image facilitated by MCBiodrone).
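The step from a VI map to treatment zones can be sketched as a simple thresholding of the NDVI raster. The thresholds (0.3 and 0.6), the three-class scheme, and the function names below are assumptions for illustration only; the zoning actually used for Figure 6 also considered stress and N content and is not specified in the text.

```python
import numpy as np

def classify_zones(ndvi, low=0.3, high=0.6):
    """Label each pixel: 0 = low vigour, 1 = moderate vigour, 2 = high vigour."""
    ndvi = np.asarray(ndvi, dtype=float)
    # np.digitize returns 0 below `low`, 1 in [low, high), and 2 at or above `high`.
    return np.digitize(ndvi, bins=[low, high])

def zone_shares(zones):
    """Fraction of pixels falling in each of the three zones."""
    counts = np.bincount(np.asarray(zones).ravel(), minlength=3)
    return counts / counts.sum()

# Hypothetical 2 x 3 NDVI raster of an orchard block.
ndvi_map = np.array([[0.15, 0.42, 0.71],
                     [0.28, 0.55, 0.66]])
zones = classify_zones(ndvi_map)
shares = zone_shares(zones)
```

In practice the thresholds would be calibrated per crop and season, and contiguous pixels of the same class would be merged into management zones before any treatment is prescribed.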

Quite a number of studies have reported on various aspects or uses of RPAs, but it is interesting to learn what this new era means for this RS technology. New research increasingly relies on artificial intelligence to derive more detailed information about growth, yield, disease, and automation. For example, the use of wireless sensor technology and control automation is on the rise in agriculture [37][38][39][40]. Some representative studies on the principal applications of RPAs in agriculture are summarized in Table 2.

Table 2. A summary of a few representative studies for principal agricultural applications of RPAs. (The purpose of citing these references is impartial and is not confined to these studies only).

| RPA Application Area | Reference |
|---|---|
| Crop growth and monitoring | [2][41][42][43][44] |
| Crop scouting | [2][45] |
| Disease detection | [46][47][48][49] |
| Bird pest surveillance | [50][51] |
| Irrigation and fertilization | [44][45][48][49][52][53][54][55][56][57] |
| Soil and field analysis | [2][41][45][58] |
| Weeds management | [45][49][53][59] |
| Crop harvest | [45][60][61] |
| Crop insurance | [45][47][49][70] |
| Mechanical pollination | [2][49][62][63] |
| Forestry | [64][65][66][67][68] |
| Livestock | [45][47][49][53][63][69] |

One of the prominent features of this technology is its higher resolution compared with satellite imagery: it offers up to 0.2 m of spatial resolution, which is approximately 40,000 times better, meaning that more and higher-quality information can be extracted from these images. Furthermore, laser imaging detection and ranging (LiDAR) systems are being used with this technology to pave the way towards building 3D maps of the plant canopy and towards soil and field analysis, which are crucial for yield estimation, irrigation, and the estimation of nutrients such as N [2]. Nevertheless, adverse meteorological conditions, local and national regulations, limited spatial coverage due to battery or payload limits, and a lack of standard procedures for in-flight calibration of RPA sensors [1][71] are a few of the constraints of this platform.

Likewise, it is also important to consider regional and national regulations for operating RPAs, although agricultural applications of RPAs do not pose substantial risks to people. Government bodies are trying to cope with such regulations, but there is plenty of room for growth. In Europe, the European Union Aviation Safety Agency (EASA) presented a legislative framework for RPA operations in 2019, “Easy Access Rules for Unmanned Aircraft Systems” (Regulations (EU) 2019/947 and (EU) 2019/945), which took effect on 31 December 2020 [72]. The three principal categories established under this legislation are presented in Table 3. Safety of people, security, privacy, and data protection were the principal parameters considered in establishing these regulations. Nevertheless, most countries are struggling to establish standard regulations, and this requires urgent attention. A general list of RPA laws and regulations can be consulted in [73].

Table 3. Three categories established by European Union Aviation Safety Agency (EASA) for operating RPAs.

| Sr. | Category | Description |
|---|---|---|
| 1 | Open | Not requiring any prior operational declaration or authorization. |
| 2 | Specific | Requiring an authorization by the competent authority except for standard scenarios. |
| 3 | Certified | Requiring certification by the competent authority. |

This new era is that of the Internet of Drones (IoD), where a fleet of drones is deployed and controlled through a ground station server (GSS) via a wireless channel to harvest the desired data. Currently, research is being undertaken to explore these possibilities for remote areas where there is no Internet connection. For example, a recent study reported on the possibility of using a cellular network for this purpose [74]. The study by Prasanna and Jebapriya [75] on the use of RPAs in IoT-based smart agriculture is highly recommended; it discusses the possibilities of this novel technology with practical examples. Similarly, novel applications of RPAs can also enrich RS technologies. For example, an RPA was very recently used as a mobile gateway for a wireless sensor network (WSN), and it was established that a 24-m flying height and a 25-m antenna coverage provided the maximum node density [76]. In the same way, the benefits of centimeter-scale multispectral drone imagery were investigated in sugar beet crops to improve the assessment of foliar and canopy parameters; five important structural and biochemical plant traits were reported, highlighting the importance of drone imagery for centimeter-scale characterization [77]. RPAs are already used for capturing videos and photos under specific flight plans; as such, setting up an RPA as a node of a WSN is an efficient way to fully benefit from this RS tool.

Despite the appeal of IoD, security may be a recurring issue in upcoming years; large companies are already confronting it. For this reason, thorough research in this domain is recommended to ensure a reliable application of RS. A recent study on this aspect proposes the implementation of blockchain and smart contracts to safeguard data that are archived through IoT-enabled RPAs and sensors, together with the respective deployment considerations [78]. Alternative and economical solutions are urgently required to address these issues.

2.3. Satellite and RPAs: A Complementing System

The use of RPAs versus satellites is often debated in the context of their agricultural applications. The choice between these RS platforms depends heavily on the needs of the farmer and the crop to be studied. A brief comparison of the two is presented here, considering their usefulness in RS.

Limited battery life and flight time hinder extensive spatial coverage using RPAs, although they are regarded as optimal for providing robust, reliable, and efficient crop phenotyping [79]. RPAs offer lower operational costs; however, for large amounts of data (to cover larger areas), data processing costs increase exponentially. Flexibility in flying times and the high resolution of RPA imagery are desirable advantages over satellite imagery [2].

On the other hand, freely accessible satellite data are gaining popularity. Even so, these freely available data come with coarser spatial and temporal resolutions (a few commercial satellites being the exception) and are being used to generate VIs. In this regard, AI offers leverage to fine-tune coarser satellite data based on high-resolution drone data [80]. Moreover, a large dataset for training AI models, even when of poor quality or noisy, can generate better results than smaller datasets, indicating the importance of the huge datasets obtained through satellite imagery [80]. This is why big data holds a remarkable position in this regard. For example, a previously reported study demonstrated that the effectiveness of deep learning is associated with the availability of large and high-quality training samples [81]. Furthermore, crop simulation models can be generated beforehand from these data for the respective agronomic measures and crop yield estimations. Therefore, a complementary system based on these two RS platforms can improve the efficiency of RS products.
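
The idea of refining a coarse satellite VI with drone data can be sketched in a few lines. The example below computes the widely used normalized difference vegetation index, NDVI = (NIR - Red) / (NIR + Red), averages fine drone pixels up to the satellite grid, and fits a straight line between the two. A simple least-squares line is used here as a stand-in for the AI-based refinement discussed above, which is considerably more elaborate; all function names and reflectance values are hypothetical.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index for one pixel."""
    total = nir + red
    return (nir - red) / total if total else 0.0


def block_mean(grid, factor: int):
    """Average factor x factor blocks of a fine grid down to a coarse grid.

    Assumes the grid dimensions are exact multiples of `factor`.
    """
    coarse = []
    for r in range(0, len(grid), factor):
        row = []
        for c in range(0, len(grid[0]), factor):
            cells = [grid[i][j]
                     for i in range(r, r + factor)
                     for j in range(c, c + factor)]
            row.append(sum(cells) / len(cells))
        coarse.append(row)
    return coarse


def fit_line(x, y):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


if __name__ == "__main__":
    # Hypothetical 2 x 4 drone NDVI grid that covers two satellite pixels.
    drone_ndvi = [[0.60, 0.64, 0.30, 0.34],
                  [0.62, 0.66, 0.32, 0.36]]
    drone_on_sat_grid = block_mean(drone_ndvi, 2)[0]
    satellite_ndvi = [0.55, 0.35]  # hypothetical coarse-resolution values
    slope, intercept = fit_line(satellite_ndvi, drone_on_sat_grid)
    print(drone_on_sat_grid, slope, intercept)
```

The fitted line could then be applied to satellite pixels that were never flown by the RPA, which is the sense in which the two platforms complement each other.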

References

- Weiss, M.; Jacob, F.; Duveiller, G. Remote sensing for agricultural applications: A meta-review. Remote Sens. Environ. 2020, 236, 111402.

- Ahmad, A.; Ordoñez, J.; Cartujo, P.; Martos, V. Remotely Piloted Aircraft (RPA) in Agriculture: A Pursuit of Sustainability. Agronomy 2021, 11, 7.

- Wulder, M.A.; Loveland, T.R.; Roy, D.P.; Crawford, C.J.; Masek, J.G.; Woodcock, C.E.; Allen, R.G.; Anderson, M.C.; Belward, A.S.; Cohen, W.B. Current status of Landsat program, science, and applications. Remote Sens. Environ. 2019, 225, 127–147.

- Sishodia, R.P.; Ray, R.L.; Singh, S.K. Applications of remote sensing in precision agriculture: A review. Remote Sens. 2020, 12, 3136.

- Huang, Y.; Chen, Z.-x.; Tao, Y.; Huang, X.-z.; Gu, X.-f. Agricultural remote sensing big data: Management and applications. J. Integr. Agric. 2018, 17, 1915–1931.

- Deery, D.; Jimenez-Berni, J.; Jones, H.; Sirault, X.; Furbank, R. Proximal remote sensing buggies and potential applications for field-based phenotyping. Agronomy 2014, 4, 349–379.

- Zheng, C.; Abd-Elrahman, A.; Whitaker, V. Remote Sensing and Machine Learning in Crop Phenotyping and Management, with an Emphasis on Applications in Strawberry Farming. Remote Sens. 2021, 13, 531.

- Ahonen, T.; Virrankoski, R.; Elmusrati, M. Greenhouse monitoring with wireless sensor network. In Proceedings of the 2008 IEEE/ASME International Conference on Mechtronic and Embedded Systems and Applications, Beijing, China, 12–15 October 2008; pp. 403–408.

- Astill, J.; Dara, R.A.; Fraser, E.D.; Roberts, B.; Sharif, S. Smart poultry management: Smart sensors, big data, and the internet of things. Comput. Electron. Agric. 2020, 170, 105291.

- Yang, G.; Liu, J.; Zhao, C.; Li, Z.; Huang, Y.; Yu, H.; Xu, B.; Yang, X.; Zhu, D.; Zhang, X. Unmanned aerial vehicle remote sensing for field-based crop phenotyping: Current status and perspectives. Front. Plant Sci. 2017, 8, 1111.

- Steele-Dunne, S.C.; McNairn, H.; Monsivais-Huertero, A.; Judge, J.; Liu, P.-W.; Papathanassiou, K. Radar remote sensing of agricultural canopies: A review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 2249–2273.

- Mishra, P.; Asaari, M.S.M.; Herrero-Langreo, A.; Lohumi, S.; Diezma, B.; Scheunders, P. Close range hyperspectral imaging of plants: A review. Biosyst. Eng. 2017, 164, 49–67.

- Corp, L.A.; McMurtrey, J.E.; Middleton, E.M.; Mulchi, C.L.; Chappelle, E.W.; Daughtry, C.S. Fluorescence sensing systems: In vivo detection of biophysical variations in field corn due to nitrogen supply. Remote Sens. Environ. 2003, 86, 470–479.

- Wallace, L.; Lucieer, A.; Watson, C.; Turner, D. Development of a UAV-LiDAR system with application to forest inventory. Remote Sens. 2012, 4, 1519–1543.

- Ruiz-Garcia, L.; Lunadei, L.; Barreiro, P.; Robla, I. A review of wireless sensor technologies and applications in agriculture and food industry: State of the art and current trends. Sensors 2009, 9, 4728–4750.

- Adenugba, F.; Misra, S.; Maskeliūnas, R.; Damaševičius, R.; Kazanavičius, E. Smart irrigation system for environmental sustainability in Africa: An Internet of Everything (IoE) approach. Math. Biosci. Eng. 2019, 16, 5490–5503, doi:10.3934/mbe.2019273.

- Lary, D.J.; Alavi, A.H.; Gandomi, A.H.; Walker, A.L. Machine learning in geosciences and remote sensing. Geosci. Front. 2016, 7, 3–10.

- Heung, B.; Ho, H.C.; Zhang, J.; Knudby, A.; Bulmer, C.E.; Schmidt, M.G. An overview and comparison of machine-learning techniques for classification purposes in digital soil mapping. Geoderma 2016, 265, 62–77.

- Abbasi, A.Z.; Islam, N.; Shaikh, Z.A. A review of wireless sensors and networks’ applications in agriculture. Comput. Stand. Interfaces 2014, 36, 263–270.

- Sahitya, G.; Balaji, N.; Naidu, C.D.; Abinaya, S. Designing a wireless sensor network for precision agriculture using zigbee. In Proceedings of the 2017 IEEE 7th International Advance Computing Conference (IACC), Hyderabad, India, 5–7 January 2017; pp. 287–291.

- Azzari, G.; Goulden, M.L.; Rusu, R.B. Rapid characterization of vegetation structure with a Microsoft Kinect sensor. Sensors 2013, 13, 2384–2398.

- Kayad, A.; Paraforos, D.S.; Marinello, F.; Fountas, S. Latest Advances in Sensor Applications in Agriculture. Agriculture 2020, 10, 362.

- Iban, M.C.; Aksu, O. A model for big spatial rural data infrastructure in Turkey: Sensor-driven and integrative approach. Land Use Policy 2020, 91, 104376.

- INSIDER. Available online: (accessed on 7 February 2021).

- NASA. NASA Knows. Available online: (accessed on 17 January 2021).

- Ryu, Y.; Berry, J.A.; Baldocchi, D.D. What is global photosynthesis? History, uncertainties and opportunities. Remote Sens. Environ. 2019, 223, 95–114.

- Al-Yaari, A.; Wigneron, J.-P.; Dorigo, W.; Colliander, A.; Pellarin, T.; Hahn, S.; Mialon, A.; Richaume, P.; Fernandez-Moran, R.; Fan, L. Assessment and inter-comparison of recently developed/reprocessed microwave satellite soil moisture products using ISMN ground-based measurements. Remote Sens. Environ. 2019, 224, 289–303.

- Institute, N.G. National Remote Sensing Plan. Available online: (accessed on 21 January 2021).

- Copernicus. Available online: (accessed on 21 January 2021).

- FAO. Available online: (accessed on 9 April 2021).

- ESA. Available online: (accessed on 21 January 2021).

- Mazzia, V.; Comba, L.; Khaliq, A.; Chiaberge, M.; Gay, P. UAV and Machine Learning Based Refinement of a Satellite-Driven Vegetation Index for Precision Agriculture. Sensors 2020, 20, 2530.

- Panek, E.; Gozdowski, D. Relationship between MODIS Derived NDVI and Yield of Cereals for Selected European Countries. Agronomy 2021, 11, 340.

- Cheng, T.; Ji, X.; Yang, G.; Zheng, H.; Ma, J.; Yao, X.; Zhu, Y.; Cao, W. DESTIN: A new method for delineating the boundaries of crop fields by fusing spatial and temporal information from WorldView and Planet satellite imagery. Comput. Electron. Agric. 2020, 178, 105787.

- Tetteh, G.O.; Gocht, A.; Conrad, C. Optimal parameters for delineating agricultural parcels from satellite images based on supervised Bayesian optimization. Comput. Electron. Agric. 2020, 178, 105696.

- Research, E.V. Available online: (accessed on 25 February 2021).

- Maes, W.H.; Steppe, K. Perspectives for remote sensing with unmanned aerial vehicles in precision agriculture. Trends Plant Sci. 2019, 24, 152–164.

- Tzounis, A.; Bartzanas, T.; Kittas, C.; Katsoulas, N.; Ferentinos, K. Spatially distributed greenhouse climate control based on wireless sensor network measurements. In Proceedings of the V International Symposium on Applications of Modelling as an Innovative Technology in the Horticultural Supply Chain-Model-IT 1154; ISHS Acta Horticulturae: Wageningen, The Netherlands, 2017; pp. 111–120.

- Keerthi, V.; Kodandaramaiah, G. Cloud IoT based greenhouse monitoring system. Int. J. Eng. Res. Appl. 2015, 5, 35–41.

- Tzounis, A.; Katsoulas, N.; Bartzanas, T.; Kittas, C. Internet of Things in agriculture, recent advances and future challenges. Biosyst. Eng. 2017, 164, 31–48.

- Pino, E. Los drones una herramienta para una agricultura eficiente: Un futuro de alta tecnología. Idesia (Arica) 2019, 37, 75–84.

- Oré, G.; Alcântara, M.S.; Góes, J.A.; Oliveira, L.P.; Yepes, J.; Teruel, B.; Castro, V.; Bins, L.S.; Castro, F.; Luebeck, D. Crop growth monitoring with drone-borne DInSAR. Remote Sens. 2020, 12, 615.

- Panday, U.S.; Shrestha, N.; Maharjan, S.; Pratihast, A.K.; Shrestha, K.L.; Aryal, J. Correlating the plant height of wheat with above-ground biomass and crop yield using drone imagery and crop surface model, a case study from Nepal. Drones 2020, 4, 28.

- Devi, G.; Sowmiya, N.; Yasoda, K.; Muthulakshmi, K.; Balasubramanian, K. Review on Application of Drones for Crop Health Monitoring and Spraying Pesticides and Fertilizer. J. Crit. Rev. 2020, 7, 667–672.

- Rani, A.; Chaudhary, A.; Sinha, N.; Mohanty, M.; Chaudhary, R. Drone: The green technology for future agriculture. Har. Dhara 2019, 2, 3–6.

- Santos, L.M.d.; Barbosa, B.D.S.; Andrade, A.D. Use of remotely piloted aircraft in precision agriculture: A review. Dyna 2019, 86, 284–291.

- Stehr, N.J. Drones: The newest technology for precision agriculture. Nat. Sci. Educ. 2015, 44, 89–91.

- Psirofonia, P.; Samaritakis, V.; Eliopoulos, P.; Potamitis, I. Use of unmanned aerial vehicles for agricultural applications with emphasis on crop protection: Three novel case-studies. Int. J. Agric. Sci. Technol. 2017, 5, 30–39.

- Ren, Q.; Zhang, R.; Cai, W.; Sun, X.; Cao, L. Application and Development of New Drones in Agriculture. In Proceedings of IOP Conference Series: Earth and Environmental Science; IOP Publishing: Bristol, UK, 2020; p. 052041.

- Wang, W.; Paschalidis, K.; Feng, J.-C.; Song, J.; Liu, J.-H. Polyamine catabolism in plants: A universal process with diverse functions. Front. Plant Sci. 2019, 10, 561.

- Iost Filho, F.H.; Heldens, W.B.; Kong, Z.; de Lange, E.S. Drones: Innovative technology for use in precision pest management. J. Econ. Entomol. 2020, 113, 1–25.

- Daponte, P.; De Vito, L.; Glielmo, L.; Iannelli, L.; Liuzza, D.; Picariello, F.; Silano, G. A review on the use of drones for precision agriculture. In Proceedings of IOP Conference Series: Earth and Environmental Science; IOP Publishing: Bristol, UK, 2020; p. 012022.

- Natu, A.S.; Kulkarni, S. Adoption and utilization of drones for advanced precision farming: A review. Int. J. Recent Innov. Trends Comput. Commun. 2016, 4, 563–565.

- Gómez-Candón, D.; Virlet, N.; Labbé, S.; Jolivot, A.; Regnard, J.-L. Field phenotyping of water stress at tree scale by UAV-sensed imagery: New insights for thermal acquisition and calibration. Precis. Agric. 2016, 17, 786–800.

- Knipper, K.R.; Kustas, W.P.; Anderson, M.C.; Alfieri, J.G.; Prueger, J.H.; Hain, C.R.; Gao, F.; Yang, Y.; McKee, L.G.; Nieto, H. Evapotranspiration estimates derived using thermal-based satellite remote sensing and data fusion for irrigation management in California vineyards. Irrig. Sci. 2019, 37, 431–449.

- Song, X.-P.; Liang, Y.-J.; Zhang, X.-Q.; Qin, Z.-Q.; Wei, J.-J.; Li, Y.-R.; Wu, J.-M. Intrusion of fall armyworm (Spodoptera frugiperda) in sugarcane and its control by drone in China. Sugar Tech 2020, 22, 734–737.

- Shaw, K.K.; Vimalkumar, R. Design and development of a drone for spraying pesticides, fertilizers and disinfectants. Eng. Res. Technol. (IJERT) 2020, 9, 1181–1185.

- Tripicchio, P.; Satler, M.; Dabisias, G.; Ruffaldi, E.; Avizzano, C.A. Towards smart farming and sustainable agriculture with drones. In Proceedings of the 2015 International Conference on Intelligent Environments, Prague, Czech Republic, 15–17 July 2015; pp. 140–143.

- Negash, L.; Kim, H.-Y.; Choi, H.-L. Emerging UAV applications in agriculture. In Proceedings of the 2019 7th International Conference on Robot Intelligence Technology and Applications (RiTA), Daejeon, Korea, 1–3 November 2019; pp. 254–257.

- Herrmann, I.; Bdolach, E.; Montekyo, Y.; Rachmilevitch, S.; Townsend, P.A.; Karnieli, A. Assessment of maize yield and phenology by drone-mounted superspectral camera. Precis. Agric. 2020, 21, 51–76.

- Vroegindeweij, B.A.; van Wijk, S.W.; van Henten, E. Autonomous unmanned aerial vehicles for agricultural applications. In Proceedings of the AgEng 2014, Lausanne, Switzerland, 6–10 July 2014.

- Gauvreau, P.R., Jr. Unmanned Aerial Vehicle for Augmenting Plant Pollination. U.S. Patent Application No 16/495,818, 23 January 2020.

- Sun, Y.; Yi, S.; Hou, F.; Luo, D.; Hu, J.; Zhou, Z. Quantifying the dynamics of livestock distribution by unmanned aerial vehicles (UAVs): A case study of yak grazing at the household scale. Rangel. Ecol. Manag. 2020, 73, 642–648.

- Banu, T.P.; Borlea, G.F.; Banu, C. The use of drones in forestry. J. Environ. Sci. Eng. B 2016, 5, 557–562.

- Torresan, C.; Berton, A.; Carotenuto, F.; Di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry applications of UAVs in Europe: A review. Int. J. Remote Sens. 2017, 38, 2427–2447.

- D’Odorico, P.; Besik, A.; Wong, C.Y.; Isabel, N.; Ensminger, I. High-throughput drone-based remote sensing reliably tracks phenology in thousands of conifer seedlings. New Phytol. 2020, 226, 1667–1681.

- Tu, Y.-H.; Phinn, S.; Johansen, K.; Robson, A.; Wu, D. Optimising drone flight planning for measuring horticultural tree crop structure. New Phytol. 2020, 160, 83–96.

- Sudhakar, S.; Vijayakumar, V.; Kumar, C.S.; Priya, V.; Ravi, L.; Subramaniyaswamy, V. Unmanned Aerial Vehicle (UAV) based Forest Fire Detection and monitoring for reducing false alarms in forest-fires. Comput. Commun. 2020, 149, 1–16.

- Vayssade, J.-A.; Arquet, R.; Bonneau, M. Automatic activity tracking of goats using drone camera. Comput. Electron. Agric. 2019, 162, 767–772.

- Wang, D.; Song, Q.; Liao, X.; Ye, H.; Shao, Q.; Fan, J.; Cong, N.; Xin, X.; Yue, H.; Zhang, H. Integrating satellite and unmanned aircraft system (UAS) imagery to model livestock population dynamics in the Longbao Wetland National Nature Reserve, China. Sci. Total Environ. 2020, 746, 140327.

- Barsi, J.A.; Schott, J.R.; Hook, S.J.; Raqueno, N.G.; Markham, B.L.; Radocinski, R.G. Landsat-8 thermal infrared sensor (TIRS) vicarious radiometric calibration. Remote Sens. 2014, 6, 11607–11626.

- Cerro, J.d.; Cruz Ulloa, C.; Barrientos, A.; de León Rivas, J. Unmanned Aerial Vehicles in Agriculture: A Survey. Agronomy 2021, 11, 203.

- A Global Directory of Drone Laws and Regulations. Available online: (accessed on 14 February 2021).

- Singh, B.; Singh, N.; Kaushish, A.; Gupta, N. Optimizing IOT Drones using Cellular Networks. In Proceedings of the 2020 12th International Conference on Computational Intelligence and Communication Networks (CICN), Bhimtal, India, 25–26 September 2020; pp. 192–197.

- Prasanna, M.S.; Jebapriya, M.J. IoT based agriculture monitoring and smart farming using drones. Mukt Shabd J. 2020, IX, 525–534.

- García, L.; Parra, L.; Jimenez, J.M.; Lloret, J.; Mauri, P.V.; Lorenz, P. DronAway: A Proposal on the Use of Remote Sensing Drones as Mobile Gateway for WSN in Precision Agriculture. Appl. Sci. 2020, 10, 6668.

- FAO Sdgs. Available online: (accessed on 8 April 2021).

- Chinnaiyan, R.; Balachandar, S. Reliable administration framework of drones and IoT sensors in agriculture farmstead using blockchain and smart contracts. In Proceedings of the 2020 2nd International Conference on Big Data Engineering and Technology, Singapore, 3–5 January 2020; pp. 106–111.

- Singh, K.K.; Frazier, A.E. A meta-analysis and review of unmanned aircraft system (UAS) imagery for terrestrial applications. Int. J. Remote Sens. 2018, 39, 5078–5098.

- Jung, J.; Maeda, M.; Chang, A.; Bhandari, M.; Ashapure, A.; Landivar-Bowles, J. The potential of remote sensing and artificial intelligence as tools to improve the resilience of agriculture production systems. Curr. Opin. Biotechnol. 2021, 70, 15–22.

- Halevy, A.; Norvig, P.; Pereira, F. The unreasonable effectiveness of data. IEEE Intell. Syst. 2009, 24, 8–12.