Significant strides have been made in the vision community to integrate attention mechanisms into CNN-inspired architectures. Recent research has shown that transformer modules can substitute for standard convolutions in deep neural networks by operating on a sequence of image patches, an approach that culminated in the creation of ViTs.

In recent years, the integration of vision transformers into medical US analysis has encompassed a diverse range of methodological tasks. These tasks include traditional diagnostic functions like segmentation, classification, biometric measurements, detection, quality assessment, and registration, as well as innovative applications like image-guided interventions and therapy.

2. Fundamentals of Transformers

Transformers have advanced the field of Natural Language Processing (NLP) by providing a fundamental framework for processing and understanding language. Originally introduced by Vaswani et al. in 2017 [24][25], transformers have since become a cornerstone of NLP, particularly with the development of models such as BERT, GPT-3, and T5.

Fundamentally, the transformer is a neural network architecture that relies on neither convolutions nor recurrence. It excels at identifying long-range dependencies and relationships in sequential data, which makes it especially effective for language tasks. The groundbreaking aspect of transformers is their attention mechanism, which lets the model weigh how relevant each word in a sentence is to every other word. This capability allows transformers to capture context more effectively than earlier NLP models.

In addition to their significant impact on NLP, transformers have also shown promise in the field of computer vision. Vision transformers (ViTs) have emerged as a novel approach to image recognition tasks, challenging the traditional CNN architectures.

By applying the self-attention mechanism to image patches, vision transformers can effectively capture global dependencies in images, enabling them to understand the context and relationships between different parts of an image. This has led to impressive results in tasks such as image classification, object detection, and image segmentation.

The introduction of vision transformers has also opened up opportunities for cross-modal learning, where transformers can be applied to tasks that involve both text and images, such as image captioning and visual question answering. This demonstrates the versatility of transformers in handling multimodal data and their potential to drive innovation at the intersection of NLP and computer vision.

Overall, the application of transformers in computer vision showcases their adaptability and potential to revolutionize not only NLP, but also other domains of artificial intelligence, paving the way for new advancements in multimodal learning and our understanding of complex data.

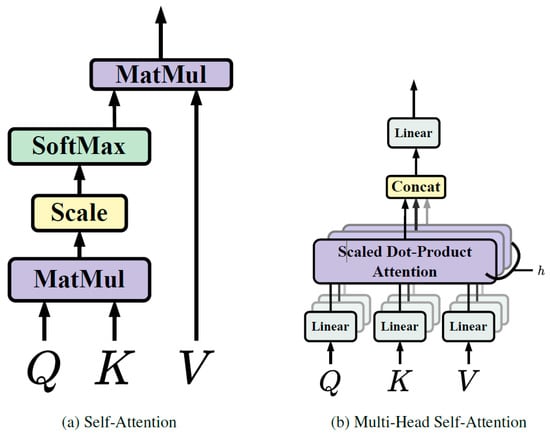

2.1. Self-Attention

In transformers, self-attention is a form of attention in which a sequence attends to itself, allowing the model to focus on different segments of the input sequence and identify dependencies among them [24][25]. Each element of the input sequence is linearly projected into three vectors: a query, a key, and a value. The attention weights are determined by the similarity between each query and all of the keys, and the output for each element is the sum of the value vectors weighted by these attention weights.

This mechanism is especially potent in detecting long-term dependencies within the input sequence, thereby making it a valuable instrument for natural language processing and similar sequence-based tasks.

To elaborate, before the input sentence is fed into the self-attention block, each word (token) is first converted into an embedding vector. This step is known as “word embedding”, and it forms the basis of many NLP tasks. Positional information is then added to each embedding, because a word’s position can alter the meaning of the word or the sentence; this allows the model to keep track of where each vector sits in the sequence. Once the vectors are prepared, the next step is to compute the similarity between every pair of vectors. The dot product is commonly used for this purpose because it is cheap to compute and yields a single scalar score for each pair. The scores are then scaled by the square root of the key dimension and passed through the softmax function to obtain the attention weights, which are non-negative and sum to one for each token. Finally, these weights are used to form a weighted sum of the value vectors, so that each output vector mixes the values according to their relevance.
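The steps described above can be sketched in Python with NumPy as a single-head, scaled dot-product self-attention. This is a minimal illustration rather than any particular library’s implementation: random matrices stand in for the learned projection weights (the names `W_q`, `W_k`, `W_v`, and `self_attention` are hypothetical), and the input `X` is assumed to already contain the positional information.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention.

    X: (n, d_model) token embeddings with positional information already added.
    W_q, W_k, W_v: (d_model, d_k) projection matrices (random stand-ins here).
    Returns the (n, d_k) output and the (n, n) attention weights.
    """
    Q = X @ W_q  # queries
    K = X @ W_k  # keys
    V = X @ W_v  # values
    d_k = Q.shape[-1]
    # Pairwise dot-product similarity, scaled by sqrt(d_k).
    scores = Q @ K.T / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)  # each row sums to 1
    # Each output row is a weighted sum of the value vectors.
    return weights @ V, weights

rng = np.random.default_rng(0)
n, d_model, d_k = 4, 8, 8
X = rng.normal(size=(n, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))
out, attn = self_attention(X, W_q, W_k, W_v)
print(out.shape, attn.shape)  # (4, 8) (4, 4)
```

Row `i` of `attn` shows how strongly token `i` attends to every token in the sequence, including itself.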

2.2. Multi-Head Self-Attention

Multi-head self-attention is a strategy used in transformers to boost the model’s capacity to capture a variety of relationships and dependencies within the input sequence [24][25]. This approach runs self-attention several times in parallel, each time with a different set of learned query, key, and value projections. Each set is a separate linear projection of the input, offering a distinct view of the input sequence (Figure 13).

Figure 13. Self-attention and multi-head self-attention [24][25].

Utilizing multiple attention heads allows the model to attend to different portions of the input sequence and collect various types of information simultaneously. Once self-attention has been carried out independently for each head, the head outputs are concatenated and passed through a linear transformation to yield the final output. This enables the model to identify intricate patterns and relationships within the input data, enhancing its overall representational capability.
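A minimal NumPy sketch of multi-head self-attention follows, under the common assumption (used in the original Transformer) that the model dimension is split evenly across heads, which is equivalent to giving each head its own smaller projection matrices. The function name `multi_head_self_attention` and the random weights are illustrative assumptions, not a specific library’s API.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(X, W_q, W_k, W_v, W_o, num_heads):
    """X: (n, d_model); W_q, W_k, W_v, W_o: (d_model, d_model)."""
    n, d_model = X.shape
    d_head = d_model // num_heads

    def split(M):
        # (n, d_model) -> (heads, n, d_head): each head gets its own slice of the projection.
        return M.reshape(n, num_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split(X @ W_q), split(X @ W_k), split(X @ W_v)
    # Every head runs scaled dot-product attention independently.
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)  # (heads, n, n)
    heads = softmax(scores) @ V                           # (heads, n, d_head)
    # Concatenate the heads back to (n, d_model), then apply the output projection.
    concat = heads.transpose(1, 0, 2).reshape(n, d_model)
    return concat @ W_o

rng = np.random.default_rng(0)
n, d_model, h = 5, 16, 4
X = rng.normal(size=(n, d_model))
W_q, W_k, W_v, W_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))
Y = multi_head_self_attention(X, W_q, W_k, W_v, W_o, num_heads=h)
print(Y.shape)  # (5, 16)
```

Splitting one large projection into slices keeps the total computation close to that of a single full-width head while still giving each head its own attention pattern.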

Multi-head self-attention is a key innovation in transformers, contributing to their effectiveness in handling diverse and intricate sequences of data, such as those encountered in natural language processing and other sequence-based tasks.

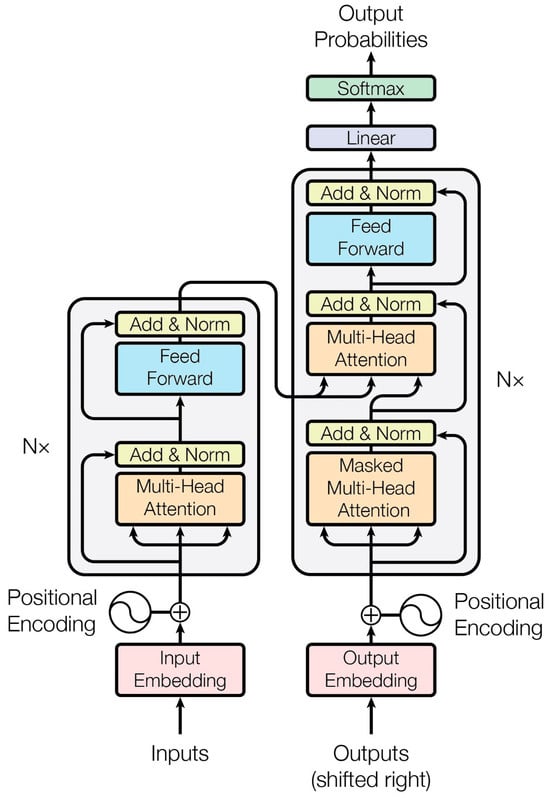

3. Transformer Architecture

The architecture of transformers consists of encoder and decoder blocks (Figure 24), which are the fundamental components of sequence-to-sequence models, particularly in tasks such as machine translation [24][25].

Figure 24. Transformer architecture [24][25].

Encoder: The encoder is responsible for processing the input sequence. It typically comprises multiple layers, each containing self-attention mechanisms and feedforward neural networks. In each layer, the input sequence is transformed through self-attention, allowing the model to capture dependencies and relationships within the sequence. The outputs from the self-attention are then passed through position-wise feedforward networks to further process the information. The encoder’s role is to create a rich representation of the input sequence, capturing its semantic and contextual information effectively.
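One encoder layer can be sketched as below, assuming the post-norm arrangement of the original Transformer (residual connection followed by layer normalization after each sub-layer); many later models place the normalization before each sub-layer instead. Attention is single-head and the learned scale/shift of layer normalization is omitted to keep the example short; all names and weights are hypothetical.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def layer_norm(x, eps=1e-5):
    # Normalize each token vector to zero mean, unit variance (no learned scale/shift here).
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def attention(Q, K, V):
    return softmax(Q @ K.T / np.sqrt(Q.shape[-1])) @ V

def encoder_layer(x, p):
    # Sub-layer 1: self-attention (single head for brevity), residual connection, LayerNorm.
    a = attention(x @ p["Wq"], x @ p["Wk"], x @ p["Wv"]) @ p["Wo"]
    x = layer_norm(x + a)
    # Sub-layer 2: position-wise feedforward network, applied identically to every token.
    f = np.maximum(0, x @ p["W1"] + p["b1"]) @ p["W2"] + p["b2"]
    return layer_norm(x + f)

rng = np.random.default_rng(0)
n, d, d_ff = 6, 8, 32
p = {k: rng.normal(scale=0.1, size=(d, d)) for k in ("Wq", "Wk", "Wv", "Wo")}
p.update(W1=rng.normal(scale=0.1, size=(d, d_ff)), b1=np.zeros(d_ff),
         W2=rng.normal(scale=0.1, size=(d_ff, d)), b2=np.zeros(d))
x = rng.normal(size=(n, d))
for _ in range(2):  # stack two layers; weights are shared here only for brevity
    x = encoder_layer(x, p)
print(x.shape)  # (6, 8)
```

The output keeps one vector per input token, which is the contextual representation the decoder later consumes.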

Decoder: The decoder, on the other hand, generates the output sequence based on the processed input. Like the encoder, it consists of multiple layers, each containing a self-attention mechanism and a feedforward neural network; the decoder’s self-attention is masked so that each position can attend only to earlier positions of the output generated so far. In addition, the decoder includes a cross-attention mechanism in which the queries come from the decoder and the keys and values come from the encoder’s output, allowing it to focus on the relevant parts of the encoded input while generating each output token. This enables the decoder to leverage the information from the input sequence to produce a meaningful output sequence.
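The two attention steps of a decoder layer can be sketched in NumPy as follows: a causal (lower-triangular) mask for self-attention over the tokens generated so far, and cross-attention whose keys and values are the encoder output. The learned projections, feedforward network, and normalization are omitted as a simplifying assumption, and `memory` is a hypothetical random stand-in for a real encoder output.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def attention(Q, K, V, mask=None):
    scores = Q @ K.T / np.sqrt(Q.shape[-1])
    if mask is not None:
        # Disallowed positions get -inf, so softmax gives them zero weight.
        scores = np.where(mask, scores, -np.inf)
    w = softmax(scores)
    return w @ V, w

rng = np.random.default_rng(0)
d = 8
memory = rng.normal(size=(5, d))  # encoder output for a 5-token source sequence
y = rng.normal(size=(3, d))       # embeddings of the 3 target tokens generated so far

# 1) Masked (causal) self-attention: target token i may attend only to tokens 0..i.
causal = np.tril(np.ones((3, 3), dtype=bool))
h, w_self = attention(y, y, y, mask=causal)

# 2) Cross-attention: queries from the decoder, keys and values from the encoder output.
out, w_cross = attention(h, memory, memory)
print(w_cross.shape)  # (3, 5)
```

The mask is what allows training on whole target sentences at once without letting a position see the words it is supposed to predict.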

The encoder–decoder architecture enables transformers to handle sequence-to-sequence tasks, such as machine translation and text summarization, effectively: the encoder captures complex dependencies within the input sequence, and the decoder leverages that information to generate accurate and coherent output sequences.

4. Vision Transformers

The achievements of transformers in natural language processing have influenced the computer vision research community, leading to numerous endeavors to adapt transformers for vision-related tasks. Transformer-based models specifically designed for vision applications have been rapidly developed, with notable examples including the detection transformer (DETR) [25][26], vision transformer (ViT), data-efficient image transformer (DeiT) [26][27], and Swin transformer [27][28]. These models represent significant advancements in leveraging transformers for computer vision and have gained recognition for their contributions to tasks such as object detection, image classification, and efficient image comprehension.

DETR: DETR, short for DEtection TRansformer, brought a major breakthrough in computer vision, particularly in object detection. Created by Carion et al. [25][26], DETR departs from conventional detectors that depend heavily on hand-designed components, such as anchor generation and non-maximum suppression, and replaces this complex, hand-crafted object detection pipeline with a simpler one based on transformers. It demonstrates the potential of transformers to revolutionize object detection within the field of computer vision.

DETR uses a transformer encoder to model the relationships among image features extracted by a CNN backbone. The transformer decoder takes a fixed, small set of learned object queries as input and, attending to the encoded image features, turns each query into an output embedding; a feedforward network then predicts a class label (or “no object”) and a bounding box from each embedding. Training relies on a set-based global loss that enforces unique predictions through bipartite matching between predictions and ground-truth objects. Because the decoder reasons jointly about the relationships between objects and the global image context, DETR directly produces the final set of predictions in parallel.
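The bipartite matching step can be illustrated with a toy example in plain Python. DETR solves this assignment with the Hungarian algorithm and uses a matching cost that also includes a generalized-IoU box term; here, as a simplifying assumption, the cost is only the negative probability of the correct class plus an L1 box distance, and the optimal assignment is found by brute force over permutations, which is feasible only for a handful of objects. The function name `match` and all numbers are hypothetical.

```python
from itertools import permutations

def match(pred_probs, pred_boxes, gt_labels, gt_boxes, box_weight=1.0):
    """Return {gt_index: pred_index} with the minimum total matching cost.

    pred_probs: per-prediction lists of class probabilities.
    pred_boxes, gt_boxes: (cx, cy, w, h) tuples normalized to [0, 1].
    Simplified cost: -p(correct class) + box_weight * L1 box distance.
    """
    def cost(i, j):
        l1 = sum(abs(a - b) for a, b in zip(pred_boxes[i], gt_boxes[j]))
        return -pred_probs[i][gt_labels[j]] + box_weight * l1

    n_pred, n_gt = len(pred_boxes), len(gt_boxes)
    best, best_cost = None, float("inf")
    # Try every way of giving each ground-truth object its own distinct prediction.
    for perm in permutations(range(n_pred), n_gt):
        c = sum(cost(i, j) for j, i in enumerate(perm))
        if c < best_cost:
            best, best_cost = perm, c
    return {j: i for j, i in enumerate(best)}

# Three predictions (classes: 0 = "no object", 1 = cat, 2 = dog) and two ground-truth objects.
pred_probs = [[0.1, 0.8, 0.1], [0.7, 0.2, 0.1], [0.1, 0.1, 0.8]]
pred_boxes = [(0.3, 0.3, 0.2, 0.2), (0.5, 0.5, 0.1, 0.1), (0.7, 0.6, 0.3, 0.2)]
gt_labels = [2, 1]
gt_boxes = [(0.7, 0.6, 0.3, 0.2), (0.3, 0.3, 0.2, 0.2)]
print(match(pred_probs, pred_boxes, gt_labels, gt_boxes))  # {0: 2, 1: 0}
```

Prediction 1 is left unmatched, so during training it would be supervised toward the “no object” class; this one-to-one assignment is what removes the need for non-maximum suppression.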

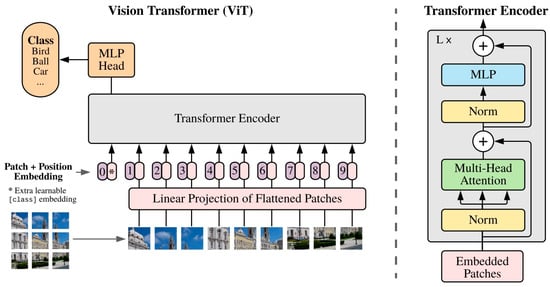

ViT: Following the introduction of DETR, Dosovitskiy et al. [10] introduced the Vision Transformer (ViT), a model that employs the fundamental architecture of the traditional transformer for image classification tasks. As depicted in Figure 35, the ViT operates similarly to a BERT-like encoder-only transformer, utilizing a sequence of vector representations to classify images.

Figure 35. Vision transformer overview [10].

The process begins with the input image being split into a sequence of fixed-size patches. Each patch is flattened and linearly projected to an embedding, and a positional embedding is added to encode the patch’s spatial position. These patch embeddings, together with a learnable class token, are then fed into the transformer encoder, which applies Multi-Head Self-Attention (MHSA) to produce learned embeddings of the patches. The class token’s state at the encoder output serves as the image’s representation. Lastly, a multi-layer perceptron (MLP) head classifies this learned representation.
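The input pipeline described above (patching, linear projection, class token, positional embedding) can be sketched in NumPy. Learned parameters are replaced by random arrays, and the sizes are deliberately small and hypothetical; for reference, the ViT-B/16 configuration uses 224 × 224 images with 16 × 16 patches, giving 196 patch tokens plus one class token. The resulting sequence would then pass through the transformer encoder, with the output at index 0 feeding the MLP head.

```python
import numpy as np

def patchify(img, P):
    """Split an (H, W, C) image into non-overlapping P x P patches, flattened to (N, P*P*C)."""
    H, W, C = img.shape
    assert H % P == 0 and W % P == 0, "image size must be divisible by the patch size"
    x = img.reshape(H // P, P, W // P, P, C).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, P * P * C)

def vit_embed(img, P, W_proj, cls_token, pos_embed):
    patches = patchify(img, P)               # (N, P*P*C)
    tokens = patches @ W_proj                # linear patch embedding -> (N, D)
    tokens = np.vstack([cls_token, tokens])  # prepend the class token -> (N + 1, D)
    return tokens + pos_embed                # add positional embeddings

rng = np.random.default_rng(0)
H = W = 32
C, P, D = 3, 8, 64
N = (H // P) * (W // P)                      # 16 patches
img = rng.random((H, W, C))
W_proj = rng.normal(scale=0.02, size=(P * P * C, D))
cls_token = np.zeros((1, D))
pos_embed = rng.normal(scale=0.02, size=(N + 1, D))
z0 = vit_embed(img, P, W_proj, cls_token, pos_embed)
print(z0.shape)  # (17, 64)
```

Patches are ordered row by row, so the positional embedding at index `k + 1` always belongs to the same image region, which is how the model recovers spatial layout.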

Moreover, in addition to raw images, the ViT can accept feature maps from CNNs as input, in which case self-attention models the relationships among the CNN features. This flexibility allows for more nuanced and complex image analyses.

DeiT: To address the ViT’s need for vast amounts of training data, Touvron et al. [26][27] introduced the Data-efficient Image Transformer (DeiT) to achieve high performance with smaller-scale data.

For knowledge distillation, DeiT uses a teacher–student framework built around a distillation token: an additional learnable token appended to the input sequence alongside the class token. The distillation token interacts with the patch tokens through self-attention, and its output is trained to reproduce the teacher model’s predictions, so that the student model learns from the teacher’s output. The authors hypothesized that using a CNN as the teacher model could help train the transformer student network, allowing the student to inherit the CNN’s inductive bias.
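The training objective can be sketched for the hard-label variant of distillation described in the DeiT paper, in which the class-token head is trained on the true labels and the distillation-token head is trained on the teacher’s argmax predictions, with equal weights. This NumPy sketch assumes the logits are already available; the function names and random inputs are hypothetical, and the teacher logits merely stand in for a CNN’s outputs.

```python
import numpy as np

def cross_entropy(logits, labels):
    # Mean negative log-likelihood of integer labels under softmax(logits).
    z = logits - logits.max(axis=1, keepdims=True)
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(labels)), labels].mean()

def deit_hard_distillation_loss(cls_logits, dist_logits, teacher_logits, labels):
    """Class-token head learns from the true labels; the distillation-token head
    learns from the teacher's hard decisions (argmax); both terms weighted 0.5."""
    teacher_labels = teacher_logits.argmax(axis=1)
    return (0.5 * cross_entropy(cls_logits, labels)
            + 0.5 * cross_entropy(dist_logits, teacher_labels))

rng = np.random.default_rng(0)
batch, classes = 4, 10
labels = rng.integers(0, classes, size=batch)
cls_logits = rng.normal(size=(batch, classes))      # student output at the class token
dist_logits = rng.normal(size=(batch, classes))     # student output at the distillation token
teacher_logits = rng.normal(size=(batch, classes))  # stand-in for a CNN teacher's outputs
print(deit_hard_distillation_loss(cls_logits, dist_logits, teacher_logits, labels))
```

Because the teacher only supplies labels, it can be any pretrained classifier; this is what makes it easy to use a CNN as the teacher.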

Swin Transformer: Introduced by Ze Liu et al. in 2021 [27][28], the Swin transformer is a transformer architecture known for its ability to generate a hierarchical feature representation. This architecture exhibits linear computational complexity relative to the size of the input image. It is particularly useful in various computer vision tasks because it can serve as a versatile backbone. These tasks include instance segmentation, semantic segmentation, image classification, and object detection.

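The Swin transformer is based on the standard transformer architecture, but it computes self-attention within non-overlapping local windows and shifts the window partition between consecutive layers so that information can flow across window boundaries; patch-merging layers progressively reduce the resolution to build representations at different scales. Restricting attention to local windows makes the Swin transformer more computationally efficient than transformer architectures with global self-attention, such as the ViT.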
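The regular and shifted window partitions can be sketched in NumPy on a toy feature map. The shifted partition is emulated with a cyclic shift (`np.roll`) by half a window; the attention mask that Swin applies to keep wrapped-around regions from attending to each other is omitted here as a simplification. The sizes and function names are hypothetical.

```python
import numpy as np

def window_partition(x, M):
    """Split an (H, W, C) feature map into non-overlapping M x M windows -> (num_windows, M*M, C)."""
    H, W, C = x.shape
    x = x.reshape(H // M, M, W // M, M, C).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, M * M, C)

def shifted_windows(x, M):
    # Cyclically shift the map by M // 2 before partitioning, so the new windows
    # straddle the boundaries of the previous layer's windows.
    s = M // 2
    return window_partition(np.roll(x, shift=(-s, -s), axis=(0, 1)), M)

H = W = 8
C, M = 1, 4
x = np.arange(H * W, dtype=float).reshape(H, W, C)
regular = window_partition(x, M)  # layer l: 4 windows of 16 tokens each
shifted = shifted_windows(x, M)   # layer l + 1: windows offset by 2 pixels
print(regular.shape, shifted.shape)  # (4, 16, 1) (4, 16, 1)

# Attention runs inside each window, so the number of query-key pairs is
# (number of windows) * (M * M) ** 2, which grows linearly with H * W,
# instead of (H * W) ** 2 for global attention over all tokens.
print("global pairs:", (H * W) ** 2, "windowed pairs:", regular.shape[0] * (M * M) ** 2)
```

With a fixed window size, doubling the image area doubles the windowed cost but quadruples the global cost, which is the source of the linear complexity mentioned above.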

PVT: The Pyramid Vision Transformer (PVT) [28][29] is a transformer variant designed for dense prediction tasks. It employs a pyramid structure that accepts fine-grained inputs (4 × 4 pixels per patch) and shrinks the sequence length of the transformer as the network deepens; in addition, its spatial-reduction attention reduces the spatial size of the keys and values, further lowering the computational cost. The PVT comprises several key components: dense connections for learning complex patterns, feedforward networks for data processing, layer normalization for stabilizing learning, residual (skip) connections for mitigating the vanishing-gradient problem, and scaled dot-product attention for computing the relevance of input elements.
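A short calculation shows how the pyramid keeps attention affordable despite the fine 4 × 4 patches. This sketch assumes a 224 × 224 input, stage strides of 4, 8, 16, and 32, and spatial-reduction ratios of 8, 4, 2, and 1 for the keys and values; these values are our assumption of a typical PVT configuration rather than figures taken from this article.

```python
def pvt_stage_costs(img_size=224, strides=(4, 8, 16, 32), sr_ratios=(8, 4, 2, 1)):
    """Token count and query-key pairs per stage, assuming spatial-reduction attention
    shrinks the keys/values by sr_ratio along each spatial axis."""
    rows = []
    for stride, r in zip(strides, sr_ratios):
        side = img_size // stride
        n_tokens = side * side   # sequence length (queries) at this stage
        n_kv = (side // r) ** 2  # keys/values after spatial reduction
        rows.append((stride, n_tokens, n_kv, n_tokens * n_kv))
    return rows

for stride, n, n_kv, pairs in pvt_stage_costs():
    print(f"stride {stride:2d}: {n:5d} tokens, {n_kv:3d} keys/values, {pairs:8d} attention pairs")

# For comparison: plain global attention over all 4 x 4 patches of a 224 x 224 image.
print("global attention at stride 4:", (224 // 4) ** 4, "pairs")
```

Under these assumptions, the first stage needs 3136 × 49 query-key pairs instead of 3136 × 3136, roughly a 64-fold reduction, while still producing a high-resolution feature map for dense prediction.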

CvT: The Convolutional Vision Transformer (CvT) [29][30] is an innovative architecture that enhances the vision transformer (ViT) by integrating convolutions. This enhancement is realized through two primary alterations: a hierarchical structure of transformers with a new convolutional token embedding, and a convolutional transformer block that employs a convolutional projection. The convolutional token embedding layer makes it possible to modify the token feature dimension and the number of tokens at each stage, allowing the tokens to represent progressively more intricate visual patterns over wider spatial areas, similar to feature layers in CNNs. The convolutional transformer block replaces the linear projection in the transformer module with a convolutional projection, capturing the local spatial context and reducing semantic ambiguity in the attention mechanism.
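The convolutional token embedding can be sketched as a strided 2-D convolution whose output grid is flattened into a token sequence. This is a loop-based NumPy illustration for clarity, not an efficient or official implementation; the kernel size 7, stride 4, and padding 2 are assumed hyperparameters for a first stage (on a 224 × 224 input they would yield a 56 × 56 token grid), and the random weights are hypothetical. The convolutional projection for queries, keys, and values is not shown.

```python
import numpy as np

def conv_token_embedding(x, weight, stride, padding):
    """Strided 2-D convolution over an (H, W, C_in) map, flattened into tokens.

    weight: (k, k, C_in, C_out). Returns (tokens, (H_out, W_out)), where tokens has
    shape (H_out * W_out, C_out) and can be reshaped back to 2-D for the next stage.
    """
    k, _, c_in, c_out = weight.shape
    x = np.pad(x, ((padding, padding), (padding, padding), (0, 0)))  # zero padding
    H_out = (x.shape[0] - k) // stride + 1
    W_out = (x.shape[1] - k) // stride + 1
    out = np.empty((H_out, W_out, c_out))
    for i in range(H_out):
        for j in range(W_out):
            # Overlapping receptive field (stride < kernel size), unlike ViT's disjoint patches.
            patch = x[i * stride:i * stride + k, j * stride:j * stride + k, :]
            out[i, j] = np.tensordot(patch, weight, axes=([0, 1, 2], [0, 1, 2]))
    return out.reshape(-1, c_out), (H_out, W_out)

rng = np.random.default_rng(0)
x = rng.random((16, 16, 3))
w = rng.normal(size=(7, 7, 3, 32))
tokens, grid = conv_token_embedding(x, w, stride=4, padding=2)
print(tokens.shape, grid)  # (16, 32) (4, 4)
```

Choosing the stride controls how many tokens the next stage receives, and choosing `C_out` sets their feature dimension, which is the flexibility the paragraph above attributes to the convolutional token embedding.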

The CvT architecture has been found to exhibit superior performance compared to other vision transformers and ResNets on ImageNet-1k. Interestingly, the CvT model demonstrates that positional encoding, a crucial element in existing vision transformers, can be safely discarded. This simplification allows the model to handle higher-resolution vision tasks more effectively.

HVT: The Hybrid Vision Transformer (HVT) [30][31] refers to architectures that merge the advantages of CNNs and transformers for image processing. They capitalize on transformers’ ability to capture global relationships in images and CNNs’ capacity to model local correlations, resulting in superior performance across various computer vision tasks. HVTs typically combine the convolution operation with the self-attention mechanism, enabling the exploitation of both local and global image representations. They have demonstrated impressive results in vision applications, providing a viable alternative to traditional CNNs, and have been successfully deployed in tasks such as image segmentation, object detection, and surveillance anomaly detection. However, the specific implementation of an HVT can vary significantly depending on the task and requirements, with some HVTs incorporating additional components or modifications to further boost performance. In essence, hybrid vision transformers are potent tools for image processing that combine the strengths of CNNs and transformers to achieve high performance.