Your browser does not fully support modern features. Please upgrade for a smoother experience.

Please note this is a comparison between Version 3 by Catherine Yang and Version 2 by Weixin Zeng.

Inductive relationship prediction for knowledge graphs, as an important research topic, aims to predict missing relationships between unknown entities and many practical applications. Most of the existing approaches to this problem use closed subgraphs to extract features of target nodes for prediction; however, there is a tendency to ignore neighboring relationships outside the closed subgraphs, which leads to inaccurate predictions. In addition, they ignore the rich commonsense information that can help filter out less compelling results.

- inductive relation prediction

- commonsense

- dual attention

- contrastive learning

1. Introduction

Knowledge graphs (KGs) are composed of organized knowledge in the form of factual triples (entity, relation, entity), and they form a collection of interrelated knowledge, thereby facilitating downstream tasks such as question answering [1], relation extraction [2], and recommendation systems [3]. However, even state-of-the-art KGs suffer from an issue of incompleteness [4[4][5],5], such as FreeBase [6] and WikiData [7]. To solve this issue, many studies have been proposed mining missing triples in KGs, in which the embedding-based methods become a dominant paradigm, such as TransE [8], ComplEx [9], RGCN [10], and CompGCN [11]. In particular, certain scholars have explored knowledge graph completion under low-data regime conditions [12]. In actuality, the aforementioned methods are often only suitable for transductive scenarios, which assumes that the set of entities in KGs is fixed.

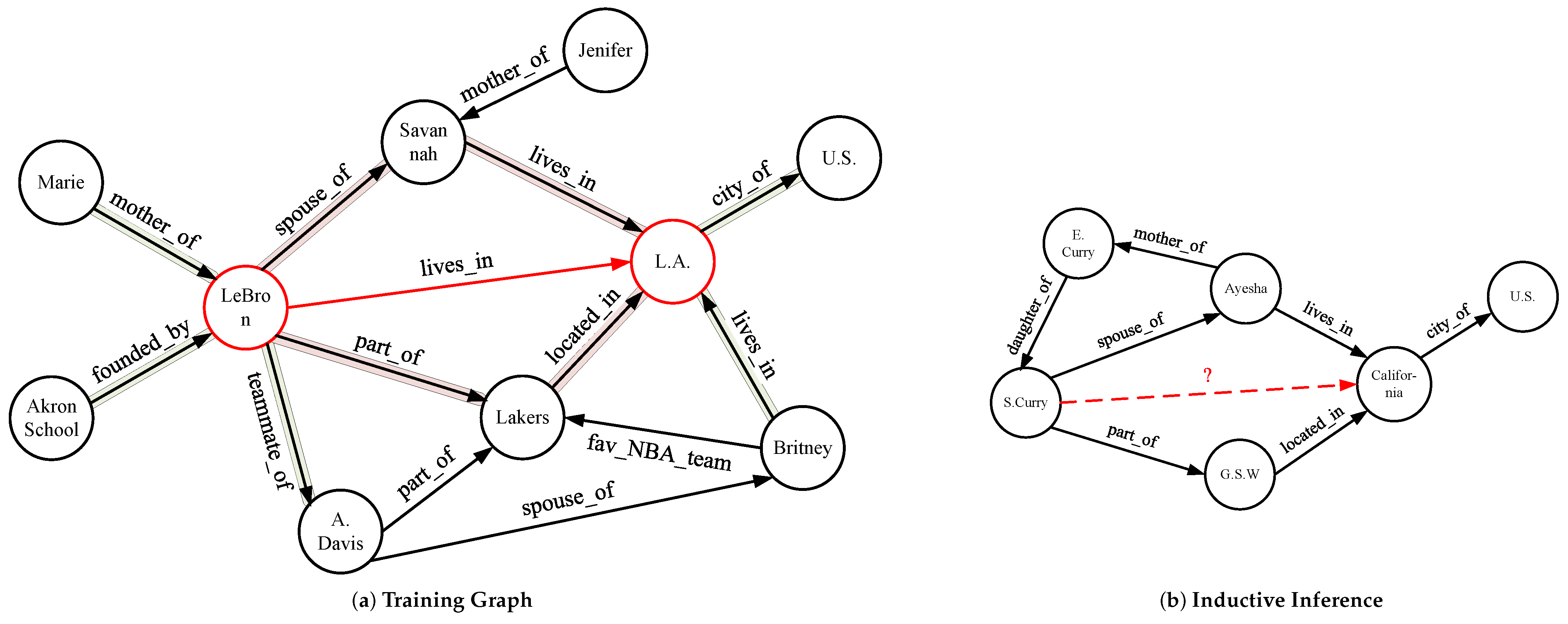

However, KGs undergo continuous updates, whereby new entities and triples are incorporated to store additional factual knowledge, such as new users and products on e-commerce platforms. Predicting the relation links between new entities requires inductive reasoning capabilities, which implies that generality should be derived from existing datasets and extended to a broader spectrum of fields, as shown in Figure 1. The crux of the inductive relation prediction [13] resides in utilizing information that is not specifically tied to a particular entity. A representative strategy in inductive relation prediction techniques is rule mining [14], which extracts first-order logic rules from a given KG and employs weighted combinations of these rules for inference. Each rule can be regarded as a relational path, comprising a set of relations from the head entity to the tail entity, which signifies the presence of a target relationship between two entities. For example, consider the straightforward rule (X, part_of, Y) ∧ (Y, located_in, Z) → (X, lives_in, Z), which was derived from the KG depicted in Figure 1a. These relational paths exist in symbolic forms and are independent of particular entities, thus rendering them inductive and highly interpretable.

Figure 1. An explanatory case in inductive relation prediction, which learned from a (a) training graph, and generalizes to be (b) without any shared entities for inference. A red dashed line denotes the relation to be predicted.

Motivated by graph neural networks (GNNs) that have the ability of aggregating local information, researchers have recently proposed GNN-based inductive models. GraIL [15] models the subgraphs of target triples to capture topologies. Based on GraIL, some works [16,17,18][16][17][18] have further utilized enclosing subgraphs for inductive prediction. Recent research has also considered few-shot settings for handling unseen entities [19,20][19][20]. SNRI [21] extracts the neighbor features of target node and path features, solves the problem of sparse subgraphs, and introduces mutual information (MI) maximization to model from a global perspective, which improves the prediction effect of inductive relationships.

2. Relation Prediction Methods

To improve the integrity of KGs, state-of-the-art approaches use either internal KG structures [8,15][8][15] or external KG information [22,23][22][23]. Transduction methods. Transduction methods are used to learn entity-specific embeddings for each node, and they have one thing in common: reasoning about the original KG. However, it is difficult to predict missing links between unseen nodes. For example, TransE [8] is based on translation, while RGCN [10] and CompGCN [11] are based on GNN. the main difference between them is the scoring function and whether or not the structural information in the KG is utilized. wang et al. proposed to obtain global structural information about an entity by using a global neighborhood aggregator to solve the problem of sparse local structural information under certain snapshots [24]. Meng et al. proposed a multi-hop path inference model based on sparse temporal knowledge graph [25]. Recently, Wang et al. proposed knowledge graph complementation with multi-level interactions, in which entities and relations interact at both fine- and coarse-grained levels [26]. Induction methods. Inductive methods can be used to learn how to reason over unseen nodes. The methods fall into two main categories: rule-based methods and graph-based methods. Rule-based methods aim to learn logical reasoning rules that are entity-independent. For example, NeuralLP [14] and DRUM [27] integrate neural networks with symbolic rules to learn logical rules and rule confidence in an end-to-end microscopic manner. In terms of graph-based approaches, in recent years, researchers have drawn inspiration from the local information aggregation capability of graph neural networks and incorporated graph neural networks (GNNs) into their models. GraIL [15] demonstrated inductive prediction by extracting closed subgraphs of the target triad to capture the topology of the target nodes. TACT [16] built on this model by introducing subgraphs in the correlation of relations and constructed a relational correlation network (RCN) to enhance the coding of subgraphs. CoMPLIE [17] proposed a node-edge communication message propagation network to enhance the interaction between nodes and edges and naturally handle asymmetric or antisymmetric relations to enhance the adequate flow of relational information. ConGLR [13] formulated a contextual graph to represent subgraphs of relational paths, where two GCNs are applied to handle closed subgraphs and contexts, where different layers utilize the corresponding outputs in an interactive manner to better represent features. RE-PORT [28] aggregates relational paths and contexts to capture the linkages and intrinsic properties of entities through a unified layered transformation framework. However, the RE-PORT model is not selected as a comparison model in the experimental part of this preseaper rch since its experimental metrics are different from other state-of-the-art models. RMPI [29] uses a novel relational message-passing network for complete inductive knowledge graph completion. SNRI [21] extracts nodes' neighbor-relationship features and path embeddings to fully utilize an entity's complete neighborhood relationship information for better generalization. However, all these approaches just add extra simple processing load and do not fully utilize the overall structural features of KGs. Unlike SNRI, CNIA preserves the integrated neighbor relations, utilizes the dual attention mechanism to process the structural features of the subgraphs and introduces common-sense reordering.3. Commonsense Knowledge

Commonsense knowledge is a key component in solving bottlenecks in artificial intelligence and knowledge engineering technologies. And the acquisition of commonsense knowledge is a fundamental problem in the field. The earliest construction methods involved experts manually defining the architecture and types of relationships of a knowledge base. Lenat [30] [30] constructed one of the oldest knowledge bases, CYC, in the 1980s.However, expert construction methods require a lot of human and material resources. Therefore, researchers started to develop semi-structured and unstructured text extraction methods. YAGO [31] constructed a common-sense knowledge base containing more than 1 million entities and 5 million facts derived from semi-structured Wikipedia data and harmonized with WordNet through a well-designed combination of rule-based and heuristic methods.The above approach prioritizes encyclopedic knowledge and structure storage by creating well-defined entity spaces and corresponding relational systems. However, actual general knowledge structures are more loosely organized and are difficult to apply to models of two entities with known relationships. Therefore, existing solutions model entity parts as natural language phrases and relationships as any concepts that can connect entities. For example, the OpenIE approach reveals properties of open text entities and relationships. However, the method is extractive and it is difficult to obtain semantic information about the text.

References

- Zhang, Y.; Dai, H.; Kozareva, Z.; Smola, A.J.; Song, L. Variational Reasoning for Question Answering With Knowledge Graph. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence (AAAI 2018), New Orleans, LA, USA, 2–7 February 2018; pp. 6069–6076.

- Verlinden, S.; Zaporojets, K.; Deleu, J.; Demeester, T.; Develder, C. Injecting Knowledge Base Information into End-to-End Joint Entity and Relation Extraction and Coreference Resolution. In Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021; Association for Computational Linguistics: Kerrville, TX, USA, 2021; pp. 1952–1957.

- Wang, H.; Zhao, M.; Xie, X.; Li, W.; Guo, M. Knowledge Graph Convolutional Networks for Recommender Systems. In Proceedings of the WWW 2019, San Francisco, CA, USA, 13–17 May 2019; pp. 3307–3313.

- Zhao, X.; Zeng, W.; Tang, J. Entity Alignment—Concepts, Recent Advances and Novel Approaches; Springer: Singapore, 2023.

- Zeng, W.; Zhao, X.; Li, X.; Tang, J.; Wang, W. On entity alignment at scale. VLDB J. 2022, 31, 1009–1033.

- Bollacker, K.D.; Evans, C.; Paritosh, P.K.; Sturge, T.; Taylor, J. Freebase: A collaboratively created graph database for structuring human knowledge. In Proceedings of the SIGMOD Conference 2008, Vancouver, BC, Canada, 10–12 June 2008; pp. 1247–1250.

- Vrandecic, D. Wikidata: A new platform for collaborative data collection. In Proceedings of the WWW 2012, Lyon, France, 16–20 April 2012; pp. 1063–1064.

- Bordes, A.; Usunier, N.; García-Durán, A.; Weston, J.; Yakhnenko, O. Translating Embeddings for Modeling Multi-relational Data. In Proceedings of the NIPS 2013, Lake Tahoe, NV, USA, 5–10 December 2013; pp. 2787–2795.

- Trouillon, T.; Welbl, J.; Riedel, S.; Gaussier, É.; Bouchard, G. Complex Embeddings for Simple Link Prediction. In Proceedings of the 33rd International Conference on International Conference on Machine Learning (ICML 2016), New York, NY, USA, 19–24 June 2016; Volume 48, pp. 2071–2080.

- Schlichtkrull, M.S.; Kipf, T.N.; Bloem, P.; van den Berg, R.; Titov, I.; Welling, M. Modeling Relational Data with Graph Convolutional Networks. In The Semantic Web, Proceedings of the 15th International Conference, ESWC 2018, Heraklion, Crete, Greece, 3–7 June 2018; Proceedings 15; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2018; Volume 10843, pp. 593–607.

- Vashishth, S.; Sanyal, S.; Nitin, V.; Talukdar, P.P. Composition-based Multi-Relational Graph Convolutional Networks. In Proceedings of the ICLR 2020, Addis Ababa, Ethiopia, 26–30 April 2020.

- Liu, J.; Fan, C.; Zhou, F.; Xu, H. Complete feature learning and consistent relation modeling for few-shot knowledge graph completion. Expert Syst. Appl. 2024, 238, 121725.

- Lin, Q.; Liu, J.; Xu, F.; Pan, Y.; Zhu, Y.; Zhang, L.; Zhao, T. Incorporating Context Graph with Logical Reasoning for Inductive Relation Prediction. In Proceedings of the SIGIR 2022, Madrid, Spain, 11–15 July 2022; pp. 893–903.

- Yang, F.; Yang, Z.; Cohen, W.W. Differentiable Learning of Logical Rules for Knowledge Base Reasoning. In Proceedings of the NIPS 2017, Long Beach, CA, USA, 4–9 December 2017; pp. 2319–2328.

- Teru, K.K.; Denis, E.G.; Hamilton, W.L. Inductive Relation Prediction by Subgraph Reasoning. In Proceedings of the ICML 2020, Virtual, 13–18 July 2020; Volume 119, pp. 9448–9457.

- Chen, J.; He, H.; Wu, F.; Wang, J. Topology-Aware Correlations Between Relations for Inductive Link Prediction in Knowledge Graphs. In Proceedings of the AAAI 2021, Virtual, 2–9 February 2021; pp. 6271–6278.

- Mai, S.; Zheng, S.; Yang, Y.; Hu, H. Communicative Message Passing for Inductive Relation Reasoning. In Proceedings of the AAAI 2021, Virtual, 2–9 February 2021; pp. 4294–4302.

- Galkin, M.; Denis, E.G.; Wu, J.; Hamilton, W.L. NodePiece: Compositional and Parameter-Efficient Representations of Large Knowledge Graphs. In Proceedings of the ICLR 2022, Virtual, 25–29 April 2022.

- Baek, J.; Lee, D.B.; Hwang, S.J. Learning to Extrapolate Knowledge: Transductive Few-shot Out-of-Graph Link Prediction. In Proceedings of the NeurIPS 2020, Virtual, 6–12 December 2020.

- Zhang, Y.; Wang, W.; Chen, W.; Xu, J.; Liu, A.; Zhao, L. Meta-Learning Based Hyper-Relation Feature Modeling for Out-of-Knowledge-Base Embedding. In Proceedings of the CIKM 2021, Gold Coast, QLD, Australia, 1–5 November 2021; pp. 2637–2646.

- Xu, X.; Zhang, P.; He, Y.; Chao, C.; Yan, C. Subgraph Neighboring Relations Infomax for Inductive Link Prediction on Knowledge Graphs. In Proceedings of the IJCAI 2022, Vienna, Austria, 23–29 July 2022; pp. 2341–2347.

- Zeng, W.; Zhao, X.; Tang, J.; Lin, X. Collective Entity Alignment via Adaptive Features. In Proceedings of the 36th IEEE International Conference on Data Engineering, ICDE 2020, Dallas, TX, USA, 20–24 April 2020; pp. 1870–1873.

- Zeng, W.; Zhao, X.; Tang, J.; Lin, X.; Groth, P. Reinforcement Learning-based Collective Entity Alignment with Adaptive Features. ACM Trans. Inf. Syst. 2021, 39, 26:1–26:31.

- Wang, J.; Lin, X.; Huang, H.; Ke, X.; Wu, R.; You, C.; Guo, K. GLANet: Temporal knowledge graph completion based on global and local information-aware network. Appl. Intell. 2023, 53, 19285–19301.

- Meng, X.; Bai, L.; Hu, J.; Zhu, L. Multi-hop path reasoning over sparse temporal knowledge graphs based on path completion and reward shaping. Inf. Process. Manag. 2024, 61, 103605.

- Wang, J.; Wang, B.; Gao, J.; Hu, S.; Hu, Y.; Yin, B. Multi-Level Interaction Based Knowledge Graph Completion. IEEE ACM Trans. Audio Speech Lang. Process. 2024, 32, 386–396.

- Sadeghian, A.; Armandpour, M.; Ding, P.; Wang, D.Z. DRUM: End-To-End Differentiable Rule Mining on Knowledge Graphs. In Proceedings of the NeurIPS 2019, Vancouver, BC, Canada, 8–14 December 2019; pp. 15321–15331.

- Li, J.; Wang, Q.; Mao, Z. Inductive Relation Prediction from Relational Paths and Context with Hierarchical Transformers. In Proceedings of the ICASSP 2023, Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Geng, Y.; Chen, J.; Pan, J.Z.; Chen, M.; Jiang, S.; Zhang, W.; Chen, H. Relational Message Passing for Fully Inductive Knowledge Graph Completion. In Proceedings of the ICDE 2023, Anaheim, CA, USA, 3–7 April 2023; pp. 1221–1233.

- Lenat, D.B. CYC: A Large-Scale Investment in Knowledge Infrastructure. Commun. ACM 1995, 38, 32–38.

- Suchanek, F.M.; Kasneci, G.; Weikum, G. Yago: A core of semantic knowledge. In Proceedings of the WWW 2007, Banff, AB, Canada, 8–12 May 2007; pp. 697–706.

- Wang, J.; Lin, X.; Huang, H.; Ke, X.; Wu, R.; You, C.; Guo, K. GLANet: Temporal knowledge graph completion based on global and local information-aware network. Appl. Intell. 2023, 53, 19285–19301.

- Meng, X.; Bai, L.; Hu, J.; Zhu, L. Multi-hop path reasoning over sparse temporal knowledge graphs based on path completion and reward shaping. Inf. Process. Manag. 2024, 61, 103605.

- Wang, J.; Wang, B.; Gao, J.; Hu, S.; Hu, Y.; Yin, B. Multi-Level Interaction Based Knowledge Graph Completion. IEEE ACM Trans. Audio Speech Lang. Process. 2024, 32, 386–396.

- Sadeghian, A.; Armandpour, M.; Ding, P.; Wang, D.Z. DRUM: End-To-End Differentiable Rule Mining on Knowledge Graphs. In Proceedings of the NeurIPS 2019, Vancouver, BC, Canada, 8–14 December 2019; pp. 15321–15331.

- Li, J.; Wang, Q.; Mao, Z. Inductive Relation Prediction from Relational Paths and Context with Hierarchical Transformers. In Proceedings of the ICASSP 2023, Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Geng, Y.; Chen, J.; Pan, J.Z.; Chen, M.; Jiang, S.; Zhang, W.; Chen, H. Relational Message Passing for Fully Inductive Knowledge Graph Completion. In Proceedings of the ICDE 2023, Anaheim, CA, USA, 3–7 April 2023; pp. 1221–1233.

- Lenat, D.B. CYC: A Large-Scale Investment in Knowledge Infrastructure. Commun. ACM 1995, 38, 32–38.

- Suchanek, F.M.; Kasneci, G.; Weikum, G. Yago: A core of semantic knowledge. In Proceedings of the WWW 2007, Banff, AB, Canada, 8–12 May 2007; pp. 697–706.

More