1. Introduction

With the advancement of high-resolution sensors in remote sensing [1][2], the horizontal pixel resolution (the horizontal distance represented by a single pixel) of visible-band ground observation from satellite and aerial platforms has reached the decimeter or even centimeter level. This has made downstream applications such as fine-grained urban 3D reconstruction [3], high-precision mapping [4], and MR scene interaction [5] increasingly achievable. Among these applications, the ground surface height (Digital Surface Model, DSM) serves as primary data support, and its acquisition methods have been a focal point of research [6].

Numerous studies on height estimation have been published, among which LiDAR-based methods demonstrate the highest measurement accuracy [7]. These techniques measure the time difference between wave emission and reception to determine the distance to each point, then apply further corrections to generate height values. However, the high power consumption and costly equipment required for LiDAR significantly constrain its application in satellite/UAV scenarios. Another prevalent method is stereophotogrammetry, as developed by researchers such as Nemmaou et al. [8], which uses the perspective differences between multiple images or viewing angles as prior knowledge to fit height information. Furthermore, Hoja et al. and Xiaotian et al. [9][10] have employed multisensor fusion methods that combine stereoscopic views with SAR interferometry, further enhancing the quality of the estimates. While stereophotogrammetry significantly reduces both power consumption and equipment costs compared to LiDAR, its high computational demands pose challenges for sensor-side deployment and real-time computation.
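The time-of-flight principle described above for LiDAR can be illustrated with a minimal sketch. The function names are hypothetical, and the height step assumes a nadir-pointing sensor at a known altitude, which is a simplification of the "further corrections" mentioned in the text rather than a description of any particular instrument.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact by SI definition


def tof_range_m(round_trip_time_s: float) -> float:
    """One-way distance for a pulse that travels to the target and back."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse covers the sensor-to-target distance twice, hence the division by 2.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def surface_height_m(sensor_altitude_m: float, round_trip_time_s: float) -> float:
    """Surface height under the ASSUMPTION of a nadir-pointing sensor."""
    return sensor_altitude_m - tof_range_m(round_trip_time_s)
```

For example, a round trip of 2 microseconds corresponds to a range of roughly 300 m, so a sensor flying at 500 m would place the reflecting surface at roughly 200 m under these assumptions.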

Recently, numerous monocular deep learning algorithms have been proposed that, when combined with AI-capable chips, can achieve a balance between computational power and cost (latency <100 ms, chip cost < USD 50, chip power consumption <3 W). Among these, convolutional neural network (CNNs) models, widely applied in the field of computer vision, have begun to be utilized in monocular height estimation

[11]. CNNs excel in reconstructing details like edges and offer controllable computational complexity. However, as monocular height estimation is inherently an ill-posed problem

[12], it demands more advanced information extraction. CNNs use a fixed receptive field for information extraction, making it challenging to interact with information at the level of the entire image. This limitation often leads to common issues such as instance-level height deviations (overall deviation in height prediction for individual, homogeneous land parcels) like

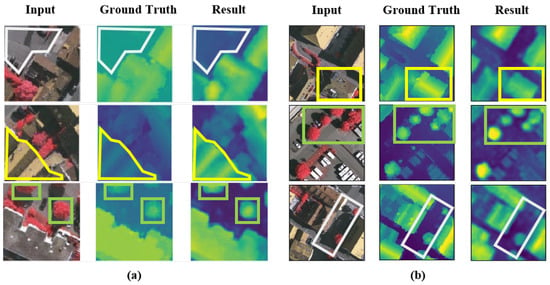

Figure 1a, which restrict the large-scale application of monocular height estimation.

Figure 1. Typical problems of existing height estimation methods. (a): Instance-level height deviation caused by fixed receptive field. (b): Edge ambiguity (gray box: road; yellow box: building; green box: tree).
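To make the fixed receptive field concrete, the following sketch computes how many input pixels a single output unit can see after a stack of convolution layers. It uses the standard recurrence for plain (non-dilated) convolutions; the function name and the example layer stacks are illustrative assumptions, not taken from any model discussed here.

```python
def receptive_field(layers):
    """Side length (in input pixels) seen by one output unit.

    `layers` is a list of (kernel_size, stride) pairs, applied in order.
    Dilation and padding effects are ignored in this sketch.
    """
    field = 1  # a single input pixel before any layer
    jump = 1   # distance in input pixels between adjacent units of the current layer
    for kernel, stride in layers:
        field += (kernel - 1) * jump
        jump *= stride
    return field
```

Under these assumptions, three 3x3 stride-1 layers see only a 7x7 window, and even five 3x3 stride-2 layers see 63x63 pixels, which can be smaller than a single large building or land parcel in a high-resolution tile.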

The advent of transformers and their attention mechanisms [13], which capture long-distance feature dependencies, has significantly improved on the inadequate whole-image information interaction common to CNN models. Transformers have been progressively applied in remote sensing tasks such as object detection [14] and semantic segmentation [15]. Among these developments, the Vision Transformer (ViT) [16] was an early application of the transformer approach in the visual domain, yet it encounters two primary issues. First, high computational complexity and difficult model convergence: ViT, by emulating the transformer approach used in natural language processing, constructs attention among all tokens (small segments of an image). Given that height prediction is a dense generation task that requires a height for every pixel, this leads to excessively high overall computational complexity and makes the model hard to converge. Second, the original ViT’s edge reconstruction quality is mediocre. Because the transformer’s attention mechanism lacks the inductive bias inherent in CNNs, ViT achieves better average error metrics (REL) by reducing overall instance errors but underperforms CNN models in edge reconstruction quality between instances, resulting in edge blurring like that in Figure 1b.
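The complexity issue can be sketched numerically: when attention is built among all tokens, the number of token pairs grows with the square of the token count. The helper names and the image and patch sizes below are illustrative assumptions, not values from the cited work.

```python
def num_tokens(height: int, width: int, patch: int) -> int:
    """Number of non-overlapping patch tokens for an image of the given size."""
    if height % patch or width % patch:
        raise ValueError("image size must be divisible by the patch size")
    return (height // patch) * (width // patch)


def global_attention_pairs(height: int, width: int, patch: int) -> int:
    """Token pairs scored by one global self-attention layer (quadratic in tokens)."""
    n = num_tokens(height, width, patch)
    return n * n
```

For a 512x512 image, 16x16 patches give 1024 tokens and about one million pairs per layer; shrinking the patch to 4x4 to get closer to per-pixel prediction gives 16,384 tokens and about 268 million pairs, a 256-fold increase.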

2. Overview of Height Estimation

Height estimation is a key component of 3D scene understanding [17] and has long held a significant position in remote sensing and computer vision. Initial research predominantly focused on stereo or multi-view image matching. These methodologies [18] typically relied on geometric relationships for keypoint matching between two or more images, followed by triangulation with camera pose data to compute depth information. Recently, with the advent of large-scale depth datasets [19], the research focus has shifted to estimating distance information from monocular 2D images using supervised learning. Present monocular height estimation approaches can generally be categorized into three types [20][21][22][23]: methodologies based on handcrafted features, methodologies utilizing convolutional neural networks (CNNs), and methodologies based on attention mechanisms. In general, because datasets contain many types of ground features, handcrafted features alone struggle to fit the distributions of different datasets, and their effectiveness is generally moderate.
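The triangulation step used by the stereo methods above reduces, in the simplest case, to a one-line relation. The sketch below assumes a rectified image pair with parallel optical axes, where depth equals focal length times baseline divided by disparity; the function and parameter names are hypothetical, and real multi-view pipelines use full camera poses instead of this simplified geometry.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a matched keypoint for a rectified stereo pair (simplifying assumption).

    focal_px     : focal length expressed in pixels
    baseline_m   : distance between the two camera centres in metres
    disparity_px : horizontal shift of the keypoint between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px
```

With a 1000-pixel focal length and a 0.5 m baseline, a 10-pixel disparity corresponds to a depth of 50 m; halving the disparity doubles the depth, which is why distant surfaces are harder to resolve.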

3. Height Estimation Based on Manual Features

Conditional random fields (CRF) and Markov random fields (MRF) have been the primary tools for modeling the local and global structures of images. Recognizing that local features alone are insufficient for predicting depth values, Batra et al. [24] simulated the relationships between adjacent regions and used CRF and MRF to model these structures. To capture global features beyond local ones, Saxena et al. [25] computed features of neighbouring blocks and applied MRF and Laplacian models for area depth estimation. In another study, Saxena et al. [26] introduced superpixels as replacements for pixels during training, enhancing the depth estimation approach. Liu et al. [27] formulated depth estimation as a discrete–continuous optimization problem, with the discrete component encoding relationships between adjacent pixels and the continuous part representing the depth of superpixels. These variables were interconnected in a CRF for predicting depth values. Lastly, Zhuo et al. [28] introduced a hierarchical approach that combines local depth, intermediate structures, and global structures for depth estimation.

4. Height Estimation Based on CNN

Convolutional neural networks (CNNs) have been extensively used in recent years across various fields of computer vision, including scene classification, semantic segmentation, and object detection [29][30]. Among these applications, ResNet [31] is often employed as the model backbone. In a notable study, IMG2DSM [32], an adversarial loss function was introduced early to enhance the synthesis of Digital Surface Models (DSM), using conditional generative adversarial networks to transform images into DSM elevations. Zhang et al. [33] improved object feature abstraction at various scales through multipath fusion networks for multiscale feature extraction. Li et al. [34] segmented height values into intervals with incrementally increasing spacing and reframed the regression problem as an ordinal regression problem, using ordinal loss for network training. They also developed a postprocessing technique to convert the predicted height maps of individual blocks into seamless height maps. Carvalho et al. [35] conducted in-depth research on various loss functions for depth regression. They combined an encoder–decoder architecture with adversarial loss and proposed D3Net. Zhu et al. [36] focused on reducing processing time by eliminating the fully connected layers before the upsampling process in the Visual Geometry Group (VGG) network. Kuznietsov et al. [12] enhanced network performance by using stereo images with sparse ground truth depths. Their loss function harnessed the predicted depth, the reference depth, and the differences between the image and the generated distorted image. Overall, convolution-based height estimation methods achieve basic dataset fitting, but they still exhibit significant instance-level height prediction deviations due to the limitation of a fixed receptive field. Xiong et al. [37] and Tao et al. [38] attempted to improve existing deformable convolutions by introducing "scaling" mechanisms and authentic deformation mechanisms into the convolutions, respectively, with the aim of letting convolutions adaptively adjust for the extraction of multiscale information.
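The idea of splitting heights into intervals with incrementally increasing spacing, attributed to Li et al. above, can be sketched as follows. This version places interval edges uniformly in log space, which is one common way to obtain growing intervals; whether it matches the exact scheme of the cited work is an assumption, and the function names and height range are hypothetical.

```python
import math


def increasing_interval_edges(h_min: float, h_max: float, num_bins: int):
    """Interval edges that are uniform in log space, so widths grow with height.

    ASSUMPTION: h_min > 0. Heights starting at 0 would need a shift first.
    """
    if not (0 < h_min < h_max) or num_bins < 1:
        raise ValueError("require 0 < h_min < h_max and num_bins >= 1")
    log_ratio = math.log(h_max / h_min)
    return [h_min * math.exp(log_ratio * i / num_bins) for i in range(num_bins + 1)]


def ordinal_label(height: float, edges) -> int:
    """Index of the interval containing `height` (count of interior edges passed)."""
    return sum(1 for edge in edges[1:-1] if height >= edge)
```

With a range of 1 m to 16 m and four bins, the edges fall at approximately 1, 2, 4, 8, and 16 m, so low structures get fine intervals and tall ones coarse intervals. An ordinal loss then treats the label as a sequence of "is the height above edge k?" decisions instead of an unordered class.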

5. Attention and Transformer in Remote Sensing

5.1. Attention Mechanism and Transformer

The attention mechanism [13], an information processing method that simulates the human visual system, allows different weights to be assigned to elements of an input sequence. By learning to assign higher weights to more essential elements, the attention mechanism enables the model to focus on critical information, thus improving its performance in processing sequential information. In computer vision, this mechanism directs the model’s focus toward key areas of an image. It can be seen as a simulation of the human process of image perception, which involves understanding the entire image by focusing on important parts.

Recently, attention mechanisms have been incorporated into computer vision, inspired by the exceptional performance of Transformer models in natural language processing (NLP) [13]. These models, based on self-attention mechanisms [39], establish global dependencies in the input sequence, enabling better handling of sequential information. The format of input sequences in computer vision, however, differs from that in NLP and includes vectors, single-channel feature maps, multichannel feature maps, and feature maps from different sources. Consequently, various forms of attention, such as spatial attention [40], local attention [41], cross-channel attention [42], and cross-modal attention [13][43], have been adapted for visual sequence modeling.
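A minimal sketch of scaled dot-product self-attention shows how the weights described above are produced and how each output mixes information from the whole sequence. To stay short, it uses the input vectors directly as queries, keys, and values; real transformers apply learned projections first, so this identity projection is a simplifying assumption, and the function names are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity Q/K/V projections (assumption).

    `tokens` is a list of equal-length float vectors.
    Returns (outputs, weights); weights[i][j] is how much token i attends to token j.
    """
    dim = len(tokens[0])
    scale = 1.0 / math.sqrt(dim)
    outputs, weights = [], []
    for query in tokens:
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in tokens]
        row = softmax(scores)
        weights.append(row)
        # Each output is a weighted average of ALL tokens: the global dependency.
        outputs.append([sum(w * value[c] for w, value in zip(row, tokens))
                        for c in range(dim)])
    return outputs, weights
```

Each row of `weights` sums to 1, and tokens that are more similar to the query receive the larger weights, which is the "higher weights to more essential elements" behaviour in its simplest form.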

Concurrently, various visual transformer methods have been proposed. The Vision Transformer (ViT) [16] partitions an image into blocks and computes attention between these block vectors. The Swin Transformer [44] significantly reduces computational burden by establishing three different attention computation scales and optimizes local modeling across various visual tasks.
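The block partitioning step can be sketched on a single-channel image stored as nested lists. This is an illustration under simple assumptions (non-overlapping square blocks, image size divisible by the block size, no learned embedding); the function name is hypothetical and it is not the reference ViT implementation.

```python
def split_into_patches(image, patch):
    """Split an H x W image (list of rows) into flattened, non-overlapping patches.

    Patches are returned in row-major order; each patch is a flat list of
    patch * patch values, ready to be treated as one token vector.
    """
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image size must be divisible by the patch size")
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            patches.append([image[r][c]
                            for r in range(top, top + patch)
                            for c in range(left, left + patch)])
    return patches
```

A 4x4 image with 2x2 blocks yields four tokens of four values each. In ViT, attention is then computed between the (embedded) block vectors.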

5.2. Transformers Applied in Remote Sensing

Recently, numerous remote sensing tasks have begun incorporating or optimizing transformer networks. Yang et al. [45] used an optimized Vision Transformer (ViT) network for hyperspectral image classification, adjusting the sampling method of ViT to improve local modeling. In object detection, Zhao et al. [14] integrated additional classification tokens for synthetic aperture radar (SAR) images to enhance ViT-based detection accuracy. Chen et al. [46] introduced the MPViT network, combining scene classification, super-resolution, and instance segmentation to significantly improve building extraction. In the realm of self-supervised learning, He et al. [47] were among the first to integrate a pyramid structure into ViT, broadening self-supervised learning applications in optical remote sensing image interpretation.

In the context of monocular height estimation, Sun et al. [48] built upon AdaBins by dividing the decoder into two branches, one generating classified height values and the other regressing a probability map, which reduces the complexity of handling both local semantic reconstruction and semantic modeling in a single branch. The SFFDE model incorporated an Elevation Semantic Globalization (ESG) module, using self-attention between the encoder and decoder to extract global semantics and reduce edge blurring. However, because local modeling (CNN, encoding), global modeling (attention, ESG), and local modeling (CNN, decoding) are applied sequentially, the model lacks a dedicated fusion module to address the coupling of features with different granularities.
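The way classified height values and a probability map can be combined into a single prediction is sketched below for one pixel. It follows the AdaBins-style idea of taking a probability-weighted sum of bin centres; whether the two-branch model of Sun et al. combines its outputs in exactly this way is an assumption, and the function name and numbers are illustrative.

```python
def expected_height(bin_centers, probabilities):
    """Per-pixel height as the probability-weighted mean of the bin centres.

    bin_centers   : representative height of each class (e.g. in metres)
    probabilities : non-negative scores for the same classes at one pixel;
                    they are renormalised here in case they do not sum to 1
    """
    if len(bin_centers) != len(probabilities):
        raise ValueError("need one probability per bin centre")
    total = sum(probabilities)
    if total <= 0:
        raise ValueError("probabilities must have a positive sum")
    return sum(c * p for c, p in zip(bin_centers, probabilities)) / total
```

For bin centres of 0, 10, and 20 m with probabilities 0.2, 0.5, and 0.3, the predicted height is 11 m. The output stays continuous even though the bins are discrete, which is the motivation for pairing classification with a probability map.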

Overall, in the field of remote sensing, existing methods primarily expand the ViT structure by adding local modeling modules or extending supervision types to adapt to the multiscale nature of remote sensing images. This potentially complicates the modeling in monocular height estimation tasks.