In the field of UAV-based object tracking, the use of infrared mode can improve the robustness of the tracker in the scene with severe illumination changes, occlusion and expand the applicable scenarios of UAV-based object tracking tasks. Inspired by the great achievements of 在基于无人机的目标跟踪领域,利用红外模态可以提高跟踪器在光照变化和遮挡严重的场景中的鲁棒性,扩展无人机目标跟踪任务的适用场景。受Transformer architecture in the field of RGB object tracking, a dual-mode object tracking network based on 结构在RGB目标跟踪领域巨大成就的启发,可以设计一种基于Transformer can be designed.的双模态目标跟踪网络。

1. Introduction引言

Object tracking is one of the fundamental tasks in computer vision and has been widely used in robot vision, video analysis, autonomous driving and other fields [1]. Among them, the drone scene is an important application scenario for object tracking which assist drones in playing a crucial role in urban governance, forest fire protection, traffic management, and other fields. Given the initial position of a target, object tracking is to capture the target in subsequent video frames. Thanks to the availability of large datasets of visible images [2], visible-based object tracking algorithms have made significant progress and achieved state-of-the-art results in recent years. Currently, due to the diversification of drone missions, visible object tracking is unable to meet the diverse needs of drones in various application scenarios [3]. Due to the limitations of visible imaging mechanisms, object tracking heavily relies on optimal optical conditions. However, in realistic drone scenarios, UAVs are required to perform object tracking tasks in dark and foggy environments. In such situations, visible imaging conditions are inadequate, resulting in significantly noisy images. Consequently, object tracking algorithms based on visible imaging fail to function properly.目标跟踪是计算机视觉的基础任务之一,已广泛应用于机器人视觉、视频分析、自动驾驶等领域[1]。其中,无人机场景是目标跟踪的重要应用场景,助力无人机在城市治理、森林消防、交通管理等领域发挥关键作用。给定目标的初始位置,对象跟踪是在后续视频帧中捕获目标。由于大型可见光图像数据集的可用性[2],基于可见光的目标跟踪算法近年来取得了重大进展并取得了最先进的成果。目前,由于无人机任务的多样化,可见物体跟踪已无法满足无人机在各种应用场景中的多样化需求[3]。由于可见光成像机制的局限性,物体跟踪在很大程度上依赖于最佳光学条件。然而,在现实的无人机场景中,无人机需要在黑暗和雾气的环境中执行物体跟踪任务。在这种情况下,可见光成像条件不足,导致图像明显噪点。因此,基于可见光成像的目标跟踪算法无法正常工作。

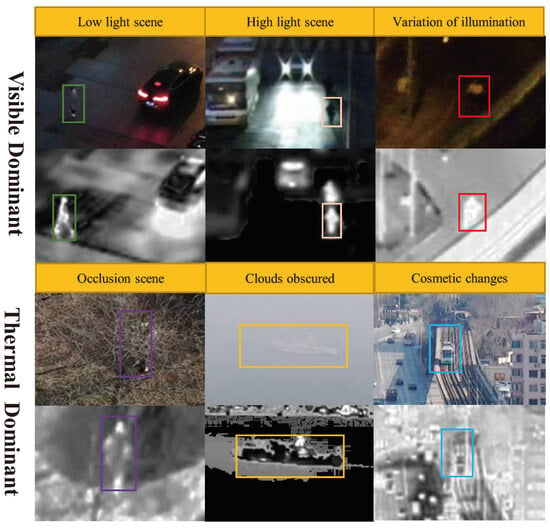

Infrared images are produced by measuring the heat emitted by objects. Compared with visible images, infrared images have relatively poor visual effects and complementary target location information [4][5]. In addition, infrared images are not sensitive to changes in scene brightness, and thus maintain good imaging results even in poor lightning environments. However, the imaging quality of infrared images is poor and the spatial resolution and grayscale dynamic range are limited, resulting in a lack of details and texture information in the images. In contrast, visible images are very rich in details and texture features. In summary, visible and infrared object tracking has received increasing attention as it can meet the mission requirements of drones in various scenarios, due to the complementary advantages of infrared and visible images (Figure 红外图像是通过测量物体发出的热量来产生的。与可见光图像相比,红外图像具有相对较差的视觉效果和互补的目标位置信息[4,5]。此外,红外图像对场景亮度的变化不敏感,因此即使在较差的闪电环境中也能保持良好的成像效果。然而,红外图像的成像质量较差,空间分辨率和灰度动态范围有限,导致图像中缺乏细节和纹理信息。相比之下,可见光图像的细节和纹理特征非常丰富。综上所述,可见光和红外目标跟踪由于红外和可见光图像的优势互补,可以满足无人机在各种场景下的任务需求,因此越来越受到关注(图1).)。

Figure图 1. These are some visible-infrared image pairs captured by drones. In some scenarios, visible images may be difficult to distinguish different objects, while infrared images can continue to work in these scenarios. Therefore, information from visible and infrared modalities can complement each other in these scenarios. Introducing information from the infrared modality is very beneficial for achieving comprehensive object tracking in drone missions.这些是无人机捕获的一些可见红外图像对。在某些情况下,可见光图像可能难以区分不同的物体,而红外图像可以在这些场景中继续工作。因此,在这些情况下,来自可见光和红外模态的信息可以相互补充。引入红外模态信息对于在无人机任务中实现全面的目标跟踪非常有益。

Currently, two main kinds of methods in visual object tracking are deep learning (目前,视觉对象跟踪的主要方法有两种是基于深度学习(DL

)-based methods and correlation filter (CF)-based approaches [1]. The methods based on correlation filtering utilize )的方法和基于相关过滤器(CF)的方法[1]。基于相关滤波的方法利用快速傅里叶变换(F

ast F

ourier Transform (FFT) to perform correlation operation in the frequency domain, which have a very fast processing speed and run in real-time. However, their accuracy and robustness are poor. The methods based on neural network mainly utilize the powerful feature extraction ability of neural network. Their accuracy is better than that of correlation filtering based methods while their speed is slower. With the proposal of Siamese networks [6][7], the speed of neural network-based tracking methods has been greatly improved. In recent years, the neural network-based algorithm has become the mainstream method for object tracking. T)在频域中进行相关运算,具有非常快的处理速度和实时性。然而,它们的准确性和鲁棒性很差。基于神经网络的方法主要利用神经网络强大的特征提取能力。它们的准确性优于基于相关滤波的方法,但速度较慢。随着连体网络[6,7]的提出,基于神经网络的跟踪方法的速度得到了极大的提高。近年来,基于神经网络的算法已成为目标跟踪的主流方法。

2. Drone Based RGBT Tracking with Dual-Feature Aggregation Network

2.1 RGBT Tracking Algorithms

2. RGBT跟踪算法

Many 到目前为止,已经提出了许多RGBT

trackers have been proposed so far [8][9][10][11]. Due to the rapid development of 跟踪器[12,13,14,15]。由于RGB

trackers, current 跟踪器发展迅速,目前的RGBT

trackers mainly consider the problem of dual-modal information fusion within mature trackers finetuned on the RGBT tracking task, where the key is to f跟踪器主要考虑在RGBT跟踪任务上微调的成熟跟踪器内部的双模态信息融合问题,其中关键是融合可见光和红外图像信息。提出了几种融合方法,分为图像融合、特征融合和决策融合。对于图像融合,主流的方法是基于权重对图像像素进行融合[16\u

se visible and infrared image information. Several fusion methods are proposed, which are categorized as image fusion, feature fusion and decision fusion. For image fusion, the mainstream approach is to fusion image pixels based on weights [12][13], but the main information extracted from image fusion is the homogeneous information of the image pairs, and the ability to extract heterogeneous information from infrared201217],但图像融合提取的主要信息是图像对的同质信息,从红外-

visible image pairs is not strong. At the same time, image fusion has certain requirements for registration between image pairs, which can lead to cumulative errors and affect tracking performance. Most trackers aggregate the representation by fusing features [14][15]. Feature fusion is a more advanced semantic fusion compared with image fusion. There are many ways to fuse features, but the most common way is to aggregate features using weighting. Feature fusion has the potential of high flexibility and can be trained with massive unpaired data, which is well-designed to achieve significant promotion. Decision fusion models each modality independently and the scores are fused to obtain the final candidate. Compared with image fusion and feature fusion, decision fusion is the fusion method on a higher level, which uses all the information from visible and infrared images. However, it is difficult to determine the decision criteria. 可见光图像对中提取异质信息的能力不强。同时,图像融合对图像对之间的配准有一定的要求,这会导致累积误差并影响跟踪性能。大多数跟踪器通过融合特征来聚合表示[18,19]。特征融合是与图像融合相比更高级的语义融合。融合要素的方法有很多种,但最常见的方法是使用权重聚合要素。特征融合具有高度的灵活性,可以用海量未配对数据进行训练,设计精良,实现显著提升。决策融合对每种模态进行独立建模,并将分数融合以获得最终的候选结果。与图像融合和特征融合相比,决策融合是更高层次的融合方法,它利用了来自可见光和红外图像的所有信息。然而,很难确定决策标准。Luo

et al. [8] utilize independent frameworks to track in 等[12]利用独立框架跟踪RGB-T

data and then the results are combined by adaptive weighting. Decision fusion avoids the heterogeneity of different modalities and is not sensitive to modality registration. Finally, these fusion methods can also be used complementarily. For example, 数据,然后通过自适应加权将结果组合在一起。决策融合避免了不同模态的异质性,对模态注册不敏感。最后,这些融合方法也可以互补使用。例如,Zhang

[16] used image fusion, feature fusion and decision fusion simultaneously for information fusion and achieved good results in multiple tests. [11]同时使用图像融合、特征融合和决策融合进行信息融合,并在多次测试中取得了良好的结果。

2.2Transformer

3. 变压器

Transformer

originates起源于用于机器翻译的自然语言处理 from natural language processing (NLP) for machine translation and has(NLP),最近被引入视觉领域,具有巨大的潜力 [8]。受到其他领域成功的启发,研究人员利用 been introduced to vision recently with great potential [17]. Inspired by the success in other fields, researchers have leveraged Transformer

for tracking. Briefly, 进行跟踪。简而言之,Transformer

is an architecture for transforming one sequence into another one with the help of attention-based encoders and decoders. The attention mechanism can determine which parts of the sequence are important, breaking through the receptive field limitation of traditional CNN networks and capturing global information from the input sequence.

However, the attention mechanism requires more training data to establish global relationships. 是一种在基于注意力的编码器和解码器的帮助下将一个序列转换为另一个序列的架构。注意力机制可以判断序列的哪些部分是重要的,突破了传统CNN网络的感受野限制,从输入序列中捕获全局信息。然而,注意力机制需要更多的训练数据来建立全局关系。因此,在一些样本量较小且更强调区域关系的任务中,T

herefore, Transformer

will have a lower effect than traditional CNN networks in的影响将低于传统的 CNN 网络 [20]。此外,注意力机制能够通过在全局范围内的搜索区域中找到与模板最相关的区域来取代连体网络中的相关过滤操作。[9] some的方法应用 tasks with smaller sample size and more emphasis on regional relationships [18]. Additionally, the attention mechanism is able to replace correlation filtering operations in the Siamese network by finding the most relevant region to the template in the search area in a global scope. T

he method of [19] applies Transformer

to enhance and fuse features来增强和融合 in the Siamese

tracking for performance improvement.跟踪中的特征,以提高性能。

2.3. UAV RGB-Infrared Tracking

4. 无人机RGB-红外跟踪

Currently, there are few visible-light-infrared object tracking algorithms available for drones, mainly due to two reasons. Firstly, there is a lack of training data for visible -infrared images of drones. Previously, models were trained using infrared images generated from visible images due to the difficulty in obtaining infrared images. With the emergence of datasets such as 目前,可用于无人机的可见光红外物体跟踪算法很少,主要有两个原因。首先,缺乏无人机可见光红外图像的训练数据。以前,由于难以获得红外图像,使用从可见光图像生成的红外图像来训练模型。随着LasHeR

[20], it is now possible to directly use visible and infrared images for training. In addition, there are also datasets such as [21]等数据集的出现,现在可以直接使用可见光和红外图像进行训练。此外,还有GTOT

[21], [22]、RGBT210

[22], [23]、RGBT234

[23], etc. available for evaluating [24]等数据集可用于评估RGBT

tracking algorithm performance. However, in the field of 跟踪算法的性能。然而,在无人机的RGBT

object tracking for drones, only the 目标跟踪领域,只有VTUAV

[16] dataset is available. Due to the different imaging perspectives of images captured by drones compared to normal images, training algorithms with other datasets does not yield good results. Secondly, existing algorithms have slow running speeds, making them difficult to use directly. Existing mainstream [11]数据集可用。由于无人机拍摄的图像与普通图像的成像视角不同,使用其他数据集训练算法不会产生良好的结果。其次,现有算法运行速度慢,难以直接使用。现有主流的RGBT

object tracking algorithms are based on deep learning, which have to deal with both visible and infrared images at the same time, with a large amount of data, a complex algorithmic structure and a low processing speed, such as 目标跟踪算法都是基于深度学习的,需要同时处理可见光和红外图像,数据量大,算法结构复杂,处理速度低,如JMMAC

(4fps) [24], (4fps)[25]、FANet

((2fps

) [14], )[18]、MANnet

((2fps

) [25]. In drone scenarios, there is a high demand for speed in )[26]。在无人机场景中,无人机的RGBT

object tracking algorithms for drones. It is necessary to simplify the algorithm structure and improve its speed.目标跟踪算法对速度有很高的要求。需要简化算法结构,提高算法速度。