Please note this is a comparison between Version 1 by Tariq Alwada'n and Version 2 by Rita Xu.

Sensors in Internet of Things (IoT) services and applications generate large amounts of data. Sending all of these data to the cloud is challenging because it demands very high network bandwidth. Fog computing has emerged as a solution to this challenge: it extends the cloud computing model to the edge of the network, enabling a new class of services and applications that respond faster than the cloud can.

- cloud computing

- fog computing

- Internet of Things

- big data

1. Introduction

The term IoT refers to the goal of enabling many of the entities around us to interconnect with each other through the Internet without human involvement [1]. A cloud computing model enables on-demand network access to shared, configurable computing resources. Instead of sending all IoT data to the cloud, the fog offers secure storage and management near the IoT devices.

Fog computing and cloud computing both provide a variety of resources and services on demand. The service providers in both models can operate numerous physical machines and virtual machines (VMs). A growing number of cloud service providers are expanding their data centers worldwide to meet the rapidly growing demand for data management and storage among clients and entities. Cloud data centers typically contain a large number of interconnected resources, including physical and virtual machines, that consume large amounts of electricity [2,3].

The construction and management of data centers must be cost-effective for cloud providers. As cloud computing scales up, power consumption increases, resulting in higher operational costs. Physical resources (such as CPU, memory, and storage) account for 45% of the total cost of a data center [4], while energy makes up 15% of operating costs, according to [5]. Data centers have doubled their energy consumption in the past five years; infrastructure and energy costs will account for 75% of the overall operating cost [6]. It is therefore important for cloud data centers to reduce their energy consumption.

Virtualization technology is now used by most of the physical servers in cloud data centers. VMs are placed on different hosts and communicate with each other based on the service-level agreement (SLA) with cloud providers. It is important to maintain application performance isolation and security for each VM by providing it with enough resources, including CPU, memory, storage, and bandwidth. Virtualization technology allows for the running of multiple virtual servers on a single physical machine (PM), which helps with resource utilization and energy efficiency. Likewise, virtualization provides an efficient way to manage resources and reduce energy consumption, enabling cloud managers to deploy resources on-demand and in an orderly manner [7].

IaaS (infrastructure as a service) is one of the major services of public clouds with virtualization. Cloud providers optimize resource allocation by deploying virtual machines (VMs) on physical machines (PMs) based on tenants’ SLA requirements. As different mappings between VMs and PMs lead to different resource utilizations, cloud providers face the challenge of placing multiple VMs required by tenants efficiently on physical servers to minimize the number of active physical resources and energy consumption, resulting in reduced operation and management costs. VM placement has become a hot topic in recent years.
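The placement problem described above resembles bin packing: each PM is a bin with limited capacity, and the goal is to use as few bins as possible. The sketch below uses the textbook first-fit decreasing heuristic, which is an assumption chosen for illustration and not a method taken from this entry. The names `PM`, `first_fit_decreasing`, `pm_cpu`, and `pm_mem` are hypothetical, only CPU and memory are modeled, and all PMs are assumed to be identical.

```python
from dataclasses import dataclass, field


@dataclass
class PM:
    """A physical machine, tracked by its remaining free capacity (hypothetical model)."""
    free_cpu: int
    free_mem: int
    vms: list = field(default_factory=list)

    def fits(self, cpu, mem):
        return cpu <= self.free_cpu and mem <= self.free_mem

    def place(self, name, cpu, mem):
        self.free_cpu -= cpu
        self.free_mem -= mem
        self.vms.append(name)


def first_fit_decreasing(vms, pm_cpu, pm_mem):
    """Place VMs, given as (name, cpu, mem) tuples, on identical PMs.

    VMs are sorted from largest to smallest demand, and each one goes on the
    first active PM with enough free capacity. A new PM is switched on only
    when no active PM can host the VM. Returns the list of active PMs.
    """
    active = []
    for name, cpu, mem in sorted(vms, key=lambda v: (v[1], v[2]), reverse=True):
        if cpu > pm_cpu or mem > pm_mem:
            raise ValueError(f"VM {name!r} is larger than an empty PM")
        for pm in active:
            if pm.fits(cpu, mem):
                pm.place(name, cpu, mem)
                break
        else:
            # No active PM had room, so power on another one.
            pm = PM(pm_cpu, pm_mem)
            pm.place(name, cpu, mem)
            active.append(pm)
    return active


if __name__ == "__main__":
    demo = [("a", 4, 8), ("b", 2, 4), ("c", 2, 4), ("d", 6, 12), ("e", 1, 2)]
    for i, pm in enumerate(first_fit_decreasing(demo, pm_cpu=8, pm_mem=16), 1):
        print(f"PM {i}: {pm.vms}")
```

A heuristic like this does not guarantee the minimum number of active PMs, and a real placement policy would also have to respect SLA constraints, bandwidth, and storage, which this sketch ignores.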

1.1. Internet of Things (IoT)

Initially, the IoT was intended to reduce human interaction and effort by employing several types of sensors that could gather data from the surrounding environment, organize it, and allow storage and management of that information [8,9]. The IoT is a modern innovation of the Internet. It allows entities (things) to gain access to information aggregated by other entities, or to take part in complex services [10,11]. The IoT is designed to enable entities to be connected anytime, anywhere, and with anything, using any network or service. A general overview of the IoT is shown in Figure 1 [12].

Figure 1. IoT general concept.

IoT architecture comprises multiple layers of technologies that support IoT operations. The first layer consists of smart entities equipped with sensors. Sensors link physical and digital objects, allowing real-time data to be collected and managed. Sensors come in different types for a variety of uses: in addition to measuring air quality and temperature, they can record pressure, humidity, flow, electricity, and movement. Sensors also contain memory for storing a certain amount of data. The sensors embedded in IoT systems usually produce a huge volume of data. Most sensors need connectivity to a gateway in order to transfer the data collected from the surrounding environment. Gateways connect over networks ranging from Personal Area Networks and Local Area Networks to Wide Area Networks. In the current network environment, networks, applications, and services between machines are supported by a variety of protocols. As the demand for services and applications continues to grow, it is becoming increasingly necessary to integrate multiple networks with different protocols and technologies to provide a wide range of IoT applications and services in a heterogeneous environment [12].

Considering the limitations of the IoT in terms of processing power and storage, it is necessary to combine the IoT with cloud computing. This collaboration, referred to as the Cloud of Things (CoT), is one of the most effective solutions available for many of the challenges associated with the IoT [13].

1.2. Cloud Computing

Cloud computing gives individuals and businesses on-demand access, via the Internet, to dynamic and distributed computing resources such as storage, processing power, and services. Third parties control and administer these resources remotely. Webmail, social networking sites, and online storage services are all examples of cloud services [14].

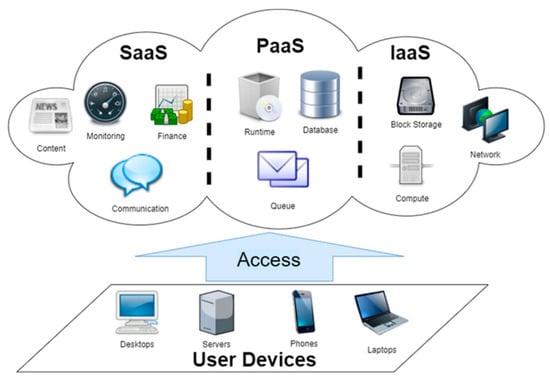

In most cases, a cloud provider is responsible for operating and administering a large data center, which is used to house and host cloud resources, as well as providing computing resources to cloud clients. These resources can be reached from anywhere by anyone via the Internet on demand [15]. The cloud computing infrastructure is illustrated in Figure 2.

Figure 2. Cloud computing infrastructure.

IaaS, SaaS, and PaaS are the cloud service models offered by cloud providers. Infrastructure as a Service (IaaS) is a service and business model that provides virtualized resources on demand [16][17]. In this paradigm, the provider owns the machines and the applications, and cloud clients use them virtually rather than owning them. The client pays for or rents the services on a pay-per-use basis [17][18]. Cloud users can access a cloud resource through a web-based interface.

The most popular cloud service model is Software as a Service (SaaS), in which cloud users access the services and applications of the cloud without needing to purchase or download them. It also serves as a storage service model, in which cloud users can store their data on a rental basis [18][19]. As in IaaS, cloud clients access and use SaaS applications and services through web-based interfaces.

A third cloud service model is Platform as a Service (PaaS), in which clients rent infrastructure, software, and hardware from the cloud provider in order to run their applications on the Internet [19][20]. Applications can be developed and tested in this environment, which is particularly useful for application developers.

Cloud computing is currently growing rapidly across different services and applications. It has become one of the most significant technologies for computing infrastructure, applications, and services [20][21].

1.3. Fog Computing

The Cloud of Things (CoT) is a combination of the Internet of Things and cloud computing. The reason behind this collaboration is that the IoT is characterized by its limitations in terms of privacy, performance, reliability, security, processing power, and storage. The combination is one of the useful options for solving most IoT challenges [21][22]. In addition to simplifying IoT data transfer, the CoT facilitates the installation and integration of complicated data between entities [22][23][23,24]. It is, however, challenging to build new IoT services and applications because of the large number of IoT devices and equipment with different paradigms. The large volumes of data generated by IoT devices and sensors must be analyzed and processed in order to determine the correct action to take. Transferring all of these data into a cloud environment therefore requires a high-bandwidth network. Fog computing can be used to solve this problem [23][24][24,25].

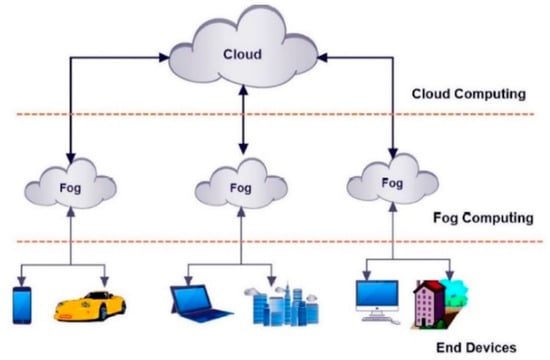

The IoT is a particular application of fog computing, which was developed by Cisco [25][26]. Fog computing offers a number of benefits to a wide range of fields, particularly the Internet of Things. IoT devices can use fog computing in much the same way as cloud computing, except that data are stored and processed locally rather than transferred to a cloud server. In general, fog computing is an extension of cloud computing that is located closer to end users (endpoints) [21][22], as shown in Figure 3.

Figure 3. Fog computing is an expansion of the cloud but closer to end devices.

Using fog computing in IoT environments improves performance and decreases the amount of data transferred to cloud computing environments for storage and processing. Instead of sending sensor data to the cloud, devices at the network edge process, analyze, and store the data locally. As a result, network traffic and latency are reduced [26][27].
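One way to picture the reduction in transferred data is a fog node that summarizes raw sensor readings locally and forwards only the summaries. The sketch below is a minimal illustration under stated assumptions: the function names `summarize_window` and `fog_forward`, the fixed window size, and the choice of summary statistics are all hypothetical and are not taken from this entry.

```python
from statistics import mean


def summarize_window(readings):
    """Collapse one window of raw readings into a single summary record."""
    if not readings:
        raise ValueError("cannot summarize an empty window")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


def fog_forward(readings, window_size):
    """Split readings into fixed-size windows and return one summary per window.

    Only the returned summaries would be sent upstream; the raw readings
    stay on the fog node (hypothetical policy for illustration).
    """
    if window_size <= 0:
        raise ValueError("window_size must be positive")
    return [
        summarize_window(readings[i:i + window_size])
        for i in range(0, len(readings), window_size)
    ]


if __name__ == "__main__":
    raw = [20, 22, 21, 23, 30, 31]
    upstream = fog_forward(raw, window_size=3)
    print(f"{len(raw)} raw readings reduced to {len(upstream)} upstream records")
```

Whether summaries are acceptable depends on the application; some services need every raw reading, in which case local processing mainly reduces latency instead of traffic.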

Fog computing works as follows. The fog devices closest to the network edge collect data from IoT devices. The fog IoT application then selects the best location for analyzing the data. Broadly, there are three kinds of data [21][27][22,28]:

- The most time-sensitive data: these data are processed on the fog node closest to the entity that generates them.

- Data that can wait a few seconds or minutes for an action or response: a fog node processes, assesses, and responds to these data.

- Less time-sensitive data that can tolerate delay: these data are sent to the cloud, where they are archived, analyzed, and permanently stored.
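The three kinds of data above can be read as a routing decision based on how long the data can wait. The sketch below is a minimal illustration, not an actual fog platform API: the `Tier` enum, the `choose_tier` function, and both cutoff values are hypothetical, since the text only distinguishes immediate data, data that can wait seconds or minutes, and data that is less time-sensitive.

```python
from enum import Enum


class Tier(Enum):
    NEAREST_FOG_NODE = "nearest fog node"
    FOG_NODE = "fog node"
    CLOUD = "cloud"


# Assumed cutoffs, chosen only for illustration.
IMMEDIATE_CUTOFF_S = 1.0    # below this, data counts as most time-sensitive
MINUTES_CUTOFF_S = 600.0    # up to this, data can wait "seconds or minutes"


def choose_tier(max_delay_s):
    """Pick a processing location from the delay (in seconds) the data can tolerate."""
    if max_delay_s < 0:
        raise ValueError("max_delay_s must be non-negative")
    if max_delay_s < IMMEDIATE_CUTOFF_S:
        return Tier.NEAREST_FOG_NODE
    if max_delay_s <= MINUTES_CUTOFF_S:
        return Tier.FOG_NODE
    return Tier.CLOUD


if __name__ == "__main__":
    for delay in (0.05, 30, 86400):
        print(f"tolerates {delay} s -> {choose_tier(delay).value}")
```

In practice the cutoffs would be set per application, and the fog IoT application could also weigh node load and link quality when selecting a location.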