
Please note this is a comparison between Version 2 by Catherine Yang and Version 1 by Monica Micucci.

Multimodal biometric systems are used in a wide variety of applications where high security is required. Compared to unimodal systems, they offer advantages in universality and recognition rate. Among the available acquisition technologies, ultrasound has great potential in high-security access applications because it allows the acquisition of 3D information about the human body and can verify the liveness of the sample.

- multimodal systems

- palmprint

- hand-geometry

- 3D ultrasound

- fusion

1. Introduction

In recent years, biometric recognition has gained increasing popularity in various fields where personal security is required, replacing classical authentication methods based on PINs and passwords. Biometric characteristics are mainly employed in commercial applications such as smartphones and access control, as well as in government and forensics.

Biometric systems based on the combination of two or more characteristics, referred to as multimodal systems, have several advantages over their unimodal counterparts: they improve recognition rate and universality, and they can authenticate users for whom one of the individual biometric characteristics cannot be detected [1][2][3]. In particular, multimodal systems based on a single sensor are attracting interest because they are cost-effective and more acceptable to users [4].

Multimodal systems are often employed for human hand characteristics including hand geometry and palmprint because both are universal, invariant, acceptable and collectable [5][6].

Over the years, several technologies have been tested for the acquisition of the two human hand modalities. The most commonly employed are optical and infrared [7]. The former is mainly based on CCD cameras and contactless techniques [8][9][10]: CCD cameras collect high-quality images but are limited by the bulkiness of the device, while contactless acquisition improves user acceptability and personal hygiene but is not very reliable with low-quality images. Regarding the latter, both Near-Infrared (NIR) and Far-Infrared (FIR) radiation are used [11][12]. The principal limitation of these technologies is that they provide only information present on the external skin surface.

Ultrasound is a technology employed in several fields including sonar [13], motors and actuators [14], Non-Destructive Evaluations (NDE) [15], Indoor Positioning Systems (IPS) [16], medical imaging [17] and therapy [18], and biometric systems [19]. The capability of ultrasound to penetrate the human body is very useful in biometrics because it provides 3D information on the features, leading to a more accurate description of the biometric characteristic and hence improved recognition accuracy [10]. Moreover, ultrasound can effectively detect liveness during the acquisition phase by simply checking vein pulsing, which makes the system very difficult to counterfeit. It is also not influenced by oil and ink stains on the skin or by environmental changes in light or temperature. Ultrasound technology has been widely investigated in the biometric field, particularly for the extraction of fingerprint features [20][21], and the sensor has recently been integrated into smartphone devices [22]. Other characteristics, including hand geometry [23][24], palmprint [25][26][27], and hand veins [28][29][30], have also been investigated.

2. Image Acquisition and Feature Extraction

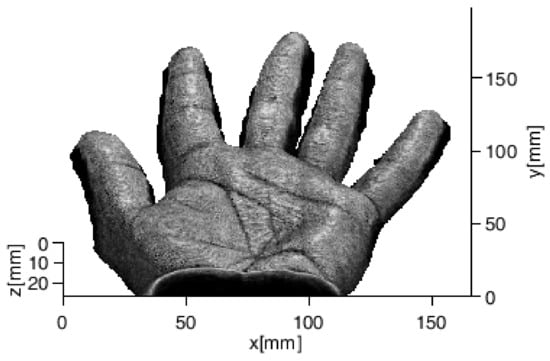

Ultrasound image acquisition of the human hand [24] is performed with a system composed of an ultrasound scanner [31], a linear array of 192 elements and a numerical pantograph, which controls the movement of the probe on the region of interest (ROI). The acoustic coupling between the human body and the probe is created by submerging both in a tank of water. A three-dimensional image is acquired by moving the probe along the elevation direction; during the motion, several B-mode images are collected and regrouped in order to obtain a volume defined by an 8-bit grayscale 3D matrix (416 × 500 × 68 voxels). Figure 1 shows an example of a 3D render of the whole human hand. The resolution of the image is about 400 μm.

Figure 1. Example of 3D rendered human hand.
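The way a set of B-mode frames becomes a single volume can be illustrated with a minimal NumPy sketch. It uses the volume size reported above (416 × 500 × 68 voxels, 8-bit grayscale), but the choice of the last axis as the elevation (scan) direction is an assumption, the function name `stack_bmode_frames` is hypothetical, and random frames stand in for real scanner output.

```python
import numpy as np

# Volume size reported in the text: 416 x 500 x 68 voxels, 8-bit grayscale.
# ASSUMPTION: the last axis (68) is the elevation direction along which
# the probe moves; the text does not state the axis order.
ROWS, COLS, N_FRAMES = 416, 500, 68


def stack_bmode_frames(frames):
    """Stack equally sized 2D B-mode frames along a new last axis."""
    volume = np.stack(frames, axis=-1)
    if volume.dtype != np.uint8:
        raise ValueError("frames are expected to be 8-bit grayscale")
    return volume


# Synthetic frames stand in for the images delivered by the scanner.
rng = np.random.default_rng(0)
frames = [
    rng.integers(0, 256, size=(ROWS, COLS), dtype=np.uint8)
    for _ in range(N_FRAMES)
]
volume = stack_bmode_frames(frames)
print(volume.shape, volume.dtype, volume.nbytes)
```

At one byte per voxel, a volume of this size occupies 416 × 500 × 68 = 14,144,000 bytes, roughly 14 MB.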

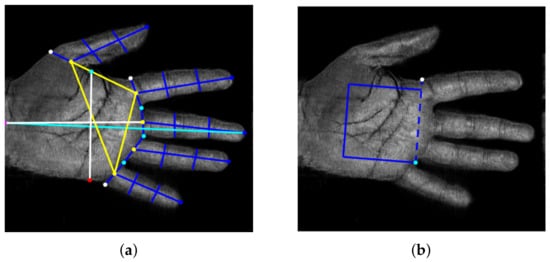

Figure 2. (a) Feature points extracted from the hand shape and the 26 distances defining the 2D template; (b) ROI extraction for palmprint from two feature points of (a).

- Mean features (MF): each length computed as the mean value of the lengths obtained at each depth;

- Weighted Mean features (WMF): each length represented by a weighted mean of the lengths obtained at various depths;

- Global features (GF): all lengths computed at every depth.
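The three templates can be sketched in a few lines of NumPy, assuming the lengths measured at each depth are arranged in a matrix with one row per depth and one column per length (26 columns, following the 26 distances of Figure 2). The function names, the number of depths, and the weights are hypothetical; the text does not specify how the weights are chosen.

```python
import numpy as np


def mean_features(lengths):
    """MF: mean of each length over all depths -> shape (n_lengths,)."""
    return lengths.mean(axis=0)


def weighted_mean_features(lengths, weights):
    """WMF: weighted mean of each length over depths -> shape (n_lengths,)."""
    weights = np.asarray(weights, dtype=float)
    return np.average(lengths, axis=0, weights=weights)


def global_features(lengths):
    """GF: every length at every depth -> shape (n_depths * n_lengths,)."""
    return lengths.reshape(-1)


# Synthetic example: 4 depths (assumed) x 26 lengths.
rng = np.random.default_rng(1)
lengths = rng.uniform(10.0, 100.0, size=(4, 26))

mf = mean_features(lengths)
# ASSUMPTION: illustrative weights only; the actual weighting is not given.
wmf = weighted_mean_features(lengths, weights=[0.4, 0.3, 0.2, 0.1])
gf = global_features(lengths)
```

MF and WMF keep the template as compact as a single-depth 2D template, while GF grows linearly with the number of depths considered.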

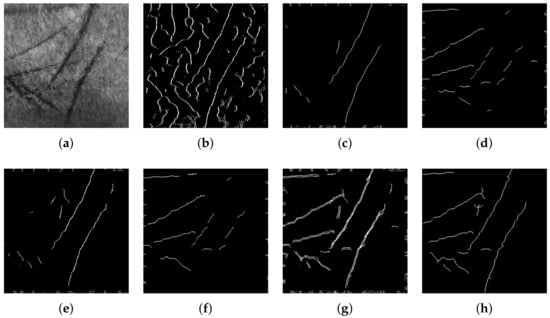

Figure 3. Palmprint feature extraction procedure step by step: (a) 2D grayscale image at 350 μm; (b) image after detection of edges; (c) feature extraction along direction 0°; (d) 90°; (e) 180°; (f) 270°; (g) logical OR of images after feature extraction along four directions; (h) final 2D template.
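The combination step in panels (c) to (g) can be sketched as follows. The directional extraction used by the authors is not described in this text, so a simple neighbor-difference threshold is swapped in as a stand-in; the function names, the threshold, and the angle convention (0° right, 90° up, 180° left, 270° down) are assumptions. Only the final logical OR mirrors the step named in the caption.

```python
import numpy as np

# Shift applied with np.roll so that `neighbor` holds, at each pixel, the
# value of the adjacent pixel in the given direction (image coordinates).
_SHIFTS = {0: (0, -1), 90: (1, 0), 180: (0, 1), 270: (-1, 0)}


def directional_mask(image, angle_deg, threshold):
    """Stand-in extractor: True where the neighbor in the given direction
    is brighter than the pixel by more than `threshold`.
    Note: np.roll wraps around at the image borders."""
    img = image.astype(np.int16)
    neighbor = np.roll(img, shift=_SHIFTS[angle_deg], axis=(0, 1))
    return (neighbor - img) > threshold


def combine_directions(image, threshold=50):
    """Logical OR of the masks extracted along the four directions."""
    masks = [directional_mask(image, a, threshold) for a in (0, 90, 180, 270)]
    return np.logical_or.reduce(masks)


# Tiny synthetic image: one bright pixel in the centre of a dark patch.
image = np.zeros((5, 5), dtype=np.uint8)
image[2, 2] = 255
combined = combine_directions(image)
```

In this toy case each direction flags exactly one of the four pixels adjacent to the bright one, and the OR merges them into a single binary map.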

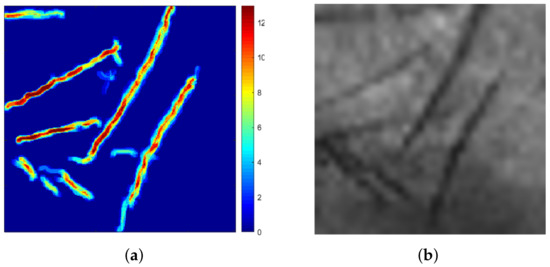

Figure 4. (a) Three-dimensional template represented as a colour scale matrix where the trait’s depth varies from 0 to 13; (b) 2D greyscale render of the same sample.
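One plausible way to obtain a matrix like the one in Figure 4a is to stack the binary 2D templates extracted at successive depths and count, for each pixel, the number of layers in which a trait is present. This encoding is an assumption made for illustration and is not confirmed by the text; the function name `depth_template` and the use of 13 layers (chosen so that values range from 0 to 13) are likewise hypothetical.

```python
import numpy as np


def depth_template(binary_stack):
    """Collapse a (n_depths, H, W) stack of binary 2D templates into a
    single matrix whose values count the layers containing a trait.
    ASSUMPTION: this counting rule is illustrative, not the source's."""
    return binary_stack.astype(bool).sum(axis=0).astype(np.uint8)


# Synthetic stack: 13 depth layers of a 4 x 4 template.
stack = np.zeros((13, 4, 4), dtype=bool)
stack[:, 1, 1] = True   # trait visible at every depth
stack[:5, 2, 2] = True  # trait visible only in the first 5 layers

template_3d = depth_template(stack)
```

With this rule, a value of 0 marks background pixels and larger values mark traits that persist deeper under the skin.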

References

- Wang, Y.; Shi, D.; Zhou, W. Convolutional Neural Network Approach Based on Multimodal Biometric System with Fusion of Face and Finger Vein Features. Sensors 2022, 22, 6039.

- Ryu, R.; Yeom, S.; Kim, S.H.; Herbert, D. Continuous Multimodal Biometric Authentication Schemes: A Systematic Review. IEEE Access 2021, 9, 34541–34557.

- Haider, S.; Rehman, Y.; Usman Ali, S. Enhanced multimodal biometric recognition based upon intrinsic hand biometrics. Electronics 2020, 9, 1916.

- Bhilare, S.; Jaswal, G.; Kanhangad, V.; Nigam, A. Single-sensor hand-vein multimodal biometric recognition using multiscale deep pyramidal approach. Mach. Vis. Appl. 2018, 29, 1269–1286.

- Kumar, A.; Zhang, D. Personal recognition using hand shape and texture. IEEE Trans. Image Process. 2006, 15, 2454–2461.

- Charfi, N.; Trichili, H.; Alimi, A.; Solaiman, B. Bimodal biometric system for hand shape and palmprint recognition based on SIFT sparse representation. Multimed. Tools Appl. 2017, 76, 20457–20482.

- Gupta, P.; Srivastava, S.; Gupta, P. An accurate infrared hand geometry and vein pattern based authentication system. Knowl. Based Syst. 2016, 103, 143–155.

- Kanhangad, V.; Kumar, A.; Zhang, D. Contactless and pose invariant biometric identification using hand surface. IEEE Trans. Image Process. 2011, 20, 1415–1424.

- Kumar, A. Toward More Accurate Matching of Contactless Palmprint Images under Less Constrained Environments. IEEE Trans. Inf. Forensics Secur. 2019, 14, 34–47.

- Liang, X.; Li, Z.; Fan, D.; Zhang, B.; Lu, G.; Zhang, D. Innovative Contactless Palmprint Recognition System Based on Dual-Camera Alignment. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 6464–6476.

- Wu, W.; Elliott, S.; Lin, S.; Sun, S.; Tang, Y. Review of palm vein recognition. IET Biom. 2020, 9, 1–10.

- Palma, D.; Blanchini, F.; Giordano, G.; Montessoro, P.L. A Dynamic Biometric Authentication Algorithm for Near-Infrared Palm Vascular Patterns. IEEE Access 2020, 8, 118978–118988.

- Wang, R.; Müller, R. Bioinspired solution to finding passageways in foliage with sonar. Bioinspir. Biomim. 2021, 16, 066022.

- Iula, A.; Bollino, G. A travelling wave rotary motor driven by three pairs of langevin transducers. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2012, 59, 121–127.

- Pyle, R.; Bevan, R.; Hughes, R.; Rachev, R.; Ali, A.; Wilcox, P. Deep Learning for Ultrasonic Crack Characterization in NDE. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2021, 68, 1854–1865.

- Carotenuto, R.; Merenda, M.; Iero, D.; Della Corte, F. An Indoor Ultrasonic System for Autonomous 3-D Positioning. IEEE Trans. Instrum. Meas. 2019, 68, 2507–2518.

- Avola, D.; Cinque, L.; Fagioli, A.; Foresti, G.; Mecca, A. Ultrasound Medical Imaging Techniques. ACM Comput. Surv. 2021, 54.

- Trimboli, P.; Bini, F.; Marinozzi, F.; Baek, J.H.; Giovanella, L. High-intensity focused ultrasound (HIFU) therapy for benign thyroid nodules without anesthesia or sedation. Endocrine 2018, 61, 210–215.

- Iula, A. Ultrasound systems for biometric recognition. Sensors 2019, 19, 2317.

- Schmitt, R.; Zeichman, J.; Casanova, A.; Delong, D. Model based development of a commercial, acoustic fingerprint sensor. In Proceedings of the IEEE International Ultrasonics Symposium, IUS, Dresden, Germany, 7–10 October 2012; pp. 1075–1085.

- Lamberti, N.; Caliano, G.; Iula, A.; Savoia, A. A high frequency cMUT probe for ultrasound imaging of fingerprints. Sens. Actuator A Phys. 2011, 172, 561–569.

- Jiang, X.; Tang, H.Y.; Lu, Y.; Ng, E.J.; Tsai, J.M.; Boser, B.E.; Horsley, D.A. Ultrasonic fingerprint sensor with transmit beamforming based on a PMUT array bonded to CMOS circuitry. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2017, 64, 1401–1408.

- Iula, A.; Hine, G.; Ramalli, A.; Guidi, F. An Improved Ultrasound System for Biometric Recognition Based on Hand Geometry and Palmprint. Procedia Eng. 2014, 87, 1338–1341.

- Iula, A. Biometric recognition through 3D ultrasound hand geometry. Ultrasonics 2021, 111, 106326.

- Iula, A.; Nardiello, D. Three-dimensional ultrasound palmprint recognition using curvature methods. J. Electron. Imaging 2016, 25, 033009.

- Nardiello, D.; Iula, A. A new recognition procedure for palmprint features extraction from ultrasound images. Lect. Notes Electr. Eng. 2019, 512, 113–118.

- Iula, A.; Nardiello, D. 3-D Ultrasound Palmprint Recognition System Based on Principal Lines Extracted at Several under Skin Depths. IEEE Trans. Instrum. Meas. 2019, 68, 4653–4662.

- De Santis, M.; Agnelli, S.; Nardiello, D.; Iula, A. 3D Ultrasound Palm Vein recognition through the centroid method for biometric purposes. In Proceedings of the 2017 IEEE International Ultrasonics Symposium (IUS), Washington, DC, USA, 6–9 September 2017.

- Iula, A.; Vizzuso, A. 3D Vascular Pattern Extraction from Grayscale Volumetric Ultrasound Images for Biometric Recognition Purposes. Appl. Sci. 2022, 12, 8285.

- Micucci, M.; Iula, A. Ultrasound wrist vein pattern for biometric recognition. In Proceedings of the 2022 IEEE International Ultrasonics Symposium, IUS, Venice, Italy, 10–13 October 2022; Volume 2022.

- Tortoli, P.; Bassi, L.; Boni, E.; Dallai, A.; Guidi, F.; Ricci, S. ULA-OP: An advanced open platform for ultrasound research. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 2009, 56, 2207–2216.

- Sharma, S.; Dubey, S.; Singh, S.; Saxena, R.; Singh, R. Identity verification using shape and geometry of human hands. Expert Syst. Appl. 2015, 42, 821–832.

- Iula, A.; Micucci, M. Multimodal Biometric Recognition Based on 3D Ultrasound Palmprint-Hand Geometry Fusion. IEEE Access 2022, 10, 7914–7925.

- Iula, A.; Micucci, M. A Feasible 3D Ultrasound Palmprint Recognition System for Secure Access Control Applications. IEEE Access 2021, 9, 39746–39756.
