Please note this is a comparison between Version 2 by AMAL NAITALI and Version 3 by Lindsay Dong.

Deepfake technology is a fast-developing field at the nexus of artificial intelligence and multimedia. These artificial media creations, made possible by deep learning algorithms, allow for the manipulation and creation of digital content that is extremely realistic and difficult to distinguish from authentic content. Deepfakes can be used for entertainment, education, and research; however, they pose a range of significant problems across various domains, such as misinformation, political manipulation, propaganda, reputational damage, and fraud.

- deepfake detection

- face forgery

- deep learning

- generative artificial intelligence

1. Introduction

Deepfakes are produced by manipulating existing videos and images to produce realistic-looking but wholly fake content. The rise of advanced artificial intelligence-based tools and software that require no technical expertise has made deepfake creation easier. Given the unprecedented pace of advancement the world is currently witnessing in generative artificial intelligence, the research community needs to stay informed on the most recent developments in deepfake generation and detection technologies so as not to fall behind in this critical arms race.

Deepfakes present a number of serious issues across a variety of fields. These issues could significantly impact people, society [1], and the reliability of digital media [2]. Some significant issues include fake news, which can lead to the propagation of deceptive information, manipulation of public opinion, and erosion of trust in media sources. Deepfakes can also be employed as tools for political manipulation, to influence elections and destabilize public trust in political institutions [3][4]. In addition, this technology enables malicious actors to create and distribute non-consensual explicit content to harass and cause reputational damage, or to create convincing impersonations of individuals, deceiving others for financial or personal gain [5]. Furthermore, the rise of deepfakes poses a serious issue in the domain of digital forensics, as it contributes to a general crisis of trust and authenticity in digital evidence used in litigation and criminal justice proceedings. All of these impacts show that deepfakes present a serious threat, especially given the current sensitive state of the international political climate. With conflicts on the global scene raising the stakes, deepfakes and fake news can be weaponized in the ongoing media war, with potentially catastrophic consequences.

The most common manipulation types are identity swap or face swapping, face reenactment, and lip-syncing. Face swapping [8][9] is a form of manipulation that has primarily become prevalent in videos even though it can occur at the image level. It entails the substitution of one individual’s face in a video, known as the source, with the face of another person, referred to as the target. In this process, the original facial features and expressions of the target subject are mapped onto the associated areas of the source subject’s face, creating a seamless integration of the target’s appearance into the source video. The origins of research on the subject of identity swap can be traced to the morphing method introduced in [10].

2. Deepfake Generation

2.1. Deepfake Manipulation Types

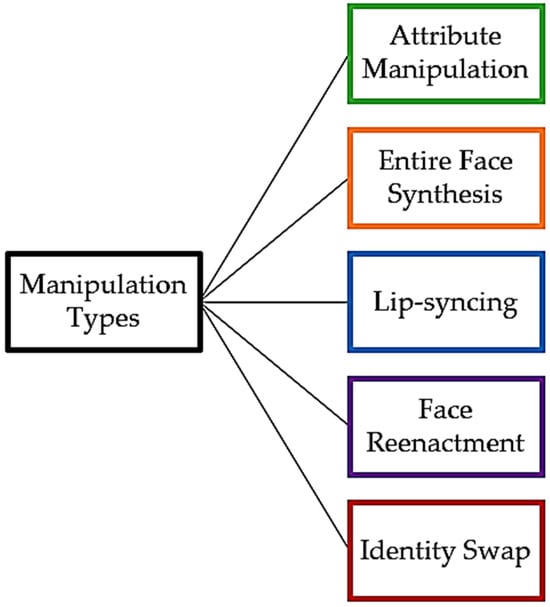

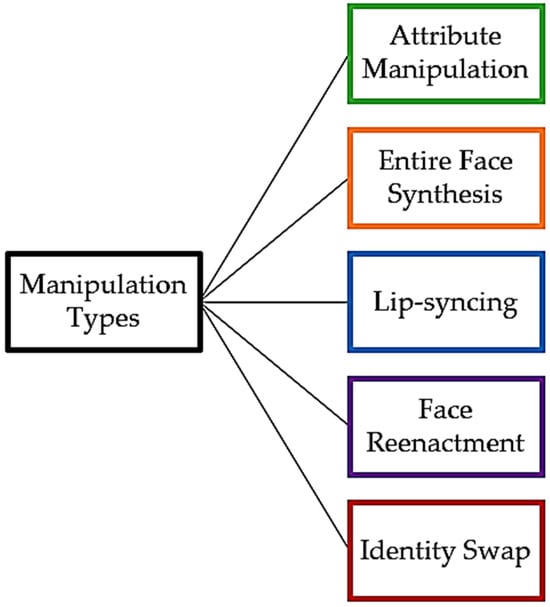

There exist five primary types of deepfake manipulation, as shown in Figure 1. Face synthesis [6] is a manipulation type that entails creating images of a human face that does not exist in real life. In attribute manipulation [7], only the region relevant to the attribute is altered, changing the facial appearance by removing or adding eyeglasses, retouching the skin, or even making more significant changes such as altering age and gender.

Figure 1. The five principal categories of deepfake manipulation.

2.2. Deepfake Generation Techniques

Multiple techniques exist for generating deepfakes. Generative Adversarial Networks (GANs) [11] and autoencoders are the most prevalent. A GAN consists of a pair of neural networks, a generator and a discriminator, which engage in a competitive process. The generator produces synthetic images, which are presented alongside real images to the discriminator. The generator learns to produce images that deceive the discriminator, while the discriminator is trained to differentiate between real and synthetic images. Through iterative training, GANs become proficient at producing increasingly realistic deepfakes.

Autoencoders, on the other hand, can be used as feature extractors to encode and decode facial features. During training, the autoencoder learns to compress an input facial image into a lower-dimensional representation that retains essential facial features. This latent space representation can then be used to reconstruct the original image. For deepfake generation, however, two autoencoders are leveraged, one trained on the face of the source and another trained on the face of the target.

Numerous sophisticated GAN-based techniques have emerged in the literature, contributing to the advancement and complexity of deepfakes. AttGAN [12] is a technique for facial attribute manipulation; its attribute awareness enables precise and high-quality attribute changes, making it valuable for applications like face-swapping and age progression or regression. Likewise, StyleGAN [13] is a GAN architecture that excels in generating highly realistic and detailed images. It allows for the manipulation of various facial features, making it a valuable tool for generating high-quality deepfakes. Similarly, STGAN [7] modifies specific facial attributes in images while preserving the person’s identity. The model can work with labeled and unlabeled data and has shown promising results in accurately controlling attribute changes.
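The competition between generator and discriminator described above can be made concrete with the binary cross-entropy losses commonly used to train GANs. The following is a minimal sketch in plain Python, not taken from any of the cited works; the function names and the toy discriminator scores are assumptions chosen for illustration.

```python
import math

def bce(prob, label):
    """Binary cross-entropy for a single predicted probability."""
    eps = 1e-12  # keep the probability away from 0 and 1 to avoid log(0)
    prob = min(max(prob, eps), 1.0 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))

def discriminator_loss(real_scores, fake_scores):
    """The discriminator wants real images scored as 1 and synthetic ones as 0."""
    losses = [bce(p, 1) for p in real_scores] + [bce(p, 0) for p in fake_scores]
    return sum(losses) / len(losses)

def generator_loss(fake_scores):
    """The generator wants its synthetic images to be scored as real (1)."""
    return sum(bce(p, 1) for p in fake_scores) / len(fake_scores)

# Hypothetical discriminator outputs: probability that an image is real.
real = [0.90, 0.95]
early_fakes = [0.05, 0.10]  # early in training, fakes are easy to spot
later_fakes = [0.45, 0.55]  # later, fakes are harder to tell apart

# As the fakes improve, the generator's loss falls and the discriminator's rises.
print(generator_loss(early_fakes) > generator_loss(later_fakes))
print(discriminator_loss(real, early_fakes) < discriminator_loss(real, later_fakes))
```

In an actual GAN both losses would be minimized in alternation by gradient descent over the parameters of the two networks; the sketch only shows how the two objectives pull in opposite directions.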
Another technique is StarGANv2 [14], which performs multi-domain image-to-image translation, generating images across multiple domains with a single unified model. Unlike the original StarGAN [15], which learns a deterministic mapping for each domain, StarGANv2 [14] can also generate diverse images within each target domain. An additional GAN variant is CycleGAN [16], which specializes in style transfer between two domains. It can be applied to transfer facial features from one individual to another, making it useful for face-swapping applications.

In addition to the previously mentioned methods, a range of tools and applications, some of them open source, is readily available and enables users to create deepfakes with relative ease, such as FaceApp [17], Reface [18], DeepBrain [19], DeepFaceLab [20], and Deepfakes Web [21]. These tools have captured the public’s attention due to their accessibility and ability to produce convincing deepfakes. It is essential for users to utilize these tools responsibly and ethically to avoid spreading misinformation or engaging in harmful activities. As artificial intelligence is developing fast, deepfake generation algorithms are simultaneously becoming more sophisticated, convincing, and hard to detect.

3. Deepfake Detection

3.1. Deepfake Detection Clues

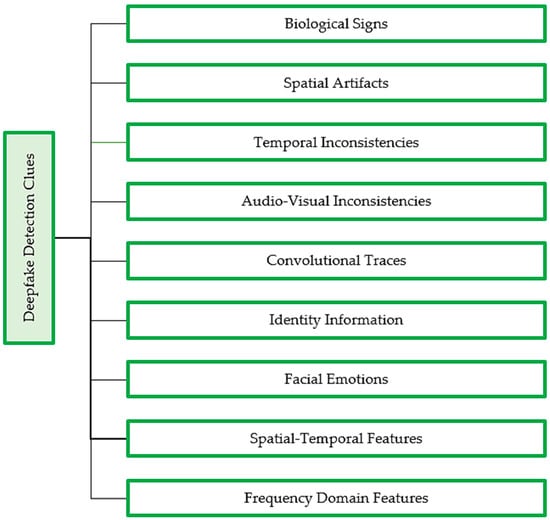

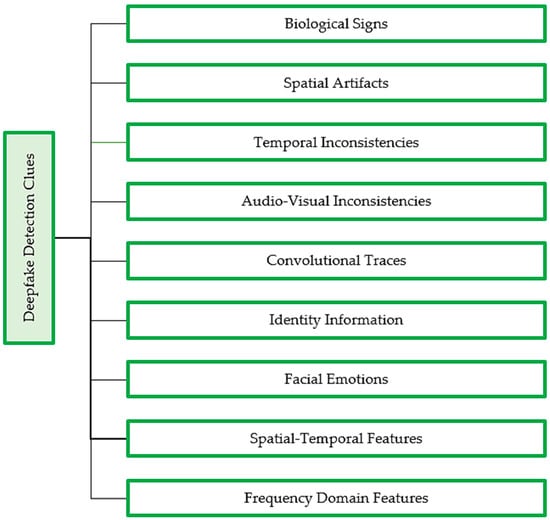

Deepfakes can be detected by exploiting various clues, as summarized in Figure 2. One approach is to analyze spatial inconsistencies by closely examining deepfakes for visual artifacts, facial landmarks, or intra-frame inconsistencies. Another method involves detecting convolutional traces that are often present in deepfakes as a result of the generation process, for instance, bi-granularity artifacts and GAN fingerprints. Additionally, biological signals such as abnormal eye blinking frequency, eye color, and heartbeat can also indicate the presence of a deepfake, as can temporal inconsistencies or the discontinuity between adjacent video frames, which may result in flickering, jittering, and changes in facial position. Poor alignment of facial emotions on swapped faces in deepfakes is a high-level semantic feature used in detection techniques. Detecting audio-visual inconsistencies is a multimodal approach that can be used for deepfakes that involve swapping both faces and audio.

Figure 2. Clues and features employed by deepfake detection models in the identification of deepfake content.
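As an illustration of the temporal clue mentioned above, the discontinuity between adjacent frames can be approximated by the mean absolute pixel difference. This is a minimal sketch under stated assumptions: the frames are tiny grayscale face crops given as nested lists, and the threshold is a hypothetical value picked by hand, whereas the detection models surveyed here learn such cues from data.

```python
def frame_difference(frame_a, frame_b):
    """Mean absolute pixel difference between two equally sized grayscale frames."""
    total = 0
    count = 0
    for row_a, row_b in zip(frame_a, frame_b):
        for a, b in zip(row_a, row_b):
            total += abs(a - b)
            count += 1
    return total / count

def flag_discontinuities(frames, threshold):
    """Return every index i where the jump from frame i-1 to frame i exceeds threshold."""
    return [i for i in range(1, len(frames))
            if frame_difference(frames[i - 1], frames[i]) > threshold]

# Toy 2x2 face crops with pixel values in 0..255; frame 2 flickers.
frames = [
    [[100, 100], [100, 100]],
    [[102, 101], [100, 99]],
    [[160, 150], [155, 165]],
    [[103, 100], [101, 100]],
]

# Both the jump into the flickering frame and the jump out of it are flagged.
print(flag_discontinuities(frames, threshold=20))  # [2, 3]
```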

3.1.1. Detection Based on Spatial Artifacts

To make effective use of facial landmark information, Liang et al. [22] described a facial geometry prior module. The model harnesses facial maps and correlation within the frequency domain to study the distinguishing traits of altered and unmanipulated regions by employing a CNN-LSTM network. To predict manipulation localization, a decoder learns the mapping from low-resolution feature maps to pixel-level details, and a softmax function is used for the classification task. A different approach, dubbed forensic symmetry, by Li, G. et al. [23], assesses whether the natural features of a pair of mirrored facial regions are identical or dissimilar. The symmetry feature extracted from frontal face images and the similarity feature extracted from profile face images are obtained by a multi-stream learning structure that uses DRN as its backbone network. The difference between the two symmetrical face patches is then quantified by mapping them into an angular hyperspace. A heuristic prediction technique is used to apply the model at the video level, and a multi-margin angular loss function was developed for classification.
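To give a rough sense of what comparing two mirrored face patches in an angular space involves, the sketch below computes the angle between two feature vectors. It is only an illustration under stated assumptions: the three-dimensional embeddings are made up, the vectors are assumed to be non-zero, and the code does not reproduce the multi-stream network or the multi-margin angular loss of the cited work.

```python
import math

def angular_distance(u, v):
    """Angle in radians between two non-zero feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    cosine = max(-1.0, min(1.0, dot / (norm_u * norm_v)))  # guard against rounding
    return math.acos(cosine)

# Hypothetical embeddings of the left and right halves of a face.
left_patch = [0.8, 0.1, 0.6]
right_patch_consistent = [0.7, 0.2, 0.6]     # similar features: small angle
right_patch_inconsistent = [-0.2, 0.9, 0.1]  # dissimilar features: large angle

print(angular_distance(left_patch, right_patch_consistent) <
      angular_distance(left_patch, right_patch_inconsistent))
```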

Furthermore, data augmentation plays a crucial role in training deep learning-based detection models. This technique involves augmenting the training dataset with synthetic or modified samples, which enhances the model’s capacity to generalize and recognize diverse variations of deepfake media. Data augmentation enables the model to learn from a wider range of examples and improves its robustness against different types of manipulations. Attention mechanisms have also proven to be valuable additions to deep learning-based detection models. By directing the model’s focus toward relevant features and regions of the input data, attention mechanisms enhance the model’s discriminative power and improve its overall accuracy. These mechanisms help the model select critical details [43], making it more effective in distinguishing between real and fake media. Collectively, the combination of deep learning-based architectures, meta-learning, data augmentation, and attention mechanisms has significantly advanced the field of deepfake detection. These technologies work in harmony to equip models with the ability to identify and flag manipulated media with unprecedented accuracy.

The Convolutional Neural Network (CNN) is a powerful deep learning architecture designed for image recognition and processing tasks. It consists of several types of layers, including convolutional layers, pooling layers, and fully connected layers. Different types of CNN models are used in deepfake detection, such as ResNet [44], short for Residual Network, an architecture that introduces skip connections to mitigate the vanishing gradient problem, which occurs when the gradient diminishes significantly during backpropagation. These connections add identity mappings that bypass one or more layers, reusing the activations of earlier layers. This speeds up the initial stages of training by effectively reducing the number of layers the signal passes through. The underlying concept is that, rather than having the layers learn the desired mapping directly, the network lets them fit a residual mapping that is added to the input.
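The skip connection can be illustrated with a small residual block that computes relu(F(x) + x). This is a simplified sketch, assuming dense layers in place of the convolutions used in ResNet; the helper names and the toy weights are hypothetical.

```python
def relu(values):
    return [max(0.0, v) for v in values]

def linear(values, weights, bias):
    """Dense layer: weights holds one row of coefficients per output unit."""
    return [sum(w * v for w, v in zip(row, values)) + b
            for row, b in zip(weights, bias)]

def residual_block(x, weights1, bias1, weights2, bias2):
    """Compute relu(F(x) + x), where F consists of two dense layers."""
    hidden = relu(linear(x, weights1, bias1))
    residual = linear(hidden, weights2, bias2)
    return relu([r + xi for r, xi in zip(residual, x)])  # skip connection

x = [1.0, 2.0]
zero_w = [[0.0, 0.0], [0.0, 0.0]]
zero_b = [0.0, 0.0]

# With all-zero weights F(x) is zero, so the block passes its input through.
print(residual_block(x, zero_w, zero_b, zero_w, zero_b))  # [1.0, 2.0]
```

Because the identity path is always present, a block whose layers have learned nothing still forwards its input, which is one way to see why very deep stacks of such blocks remain trainable.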

3.1.2. Detection Based on Biological/Physiological Signs

Li, Y. et al. [24] adopted an approach based on identifying eye blinking, a biological signal that is not easily reproduced in deepfake videos. A deepfake video can therefore be identified by the absence of eye blinking. To distinguish open and closed eye states, a deep neural network model that combines a CNN with a recurrent neural network is used, taking into account previous temporal knowledge.

3.1.3. Detection Based on Audio-Visual Inconsistencies

Boundary Aware Temporal Forgery Detection is a multimodal technique introduced by Cai et al. [25] for accurately predicting the boundaries of fake segments based on visual and auditory input. An audio encoder using a 2D CNN learns features extracted from the audio, while a video encoder leveraging a 3D CNN learns frame-level spatial-temporal information.

3.1.4. Detection Based on Convolutional Traces

To detect deepfakes, Huang et al. [26] harnessed the imperfection of the up-sampling process in GAN-generated deepfakes by employing a gray-scale fakeness map. Furthermore, an attention mechanism, partial data augmentation, and single-sample clustering are employed to improve the model’s robustness. Chen et al. [27] exploited a different trace, namely bi-granularity artifacts: intrinsic-granularity artifacts, which are caused by up-convolution or up-sampling operations, and extrinsic-granularity artifacts, which result from the post-processing step that blends the synthesized face into the original video. Deepfake detection is tackled as a multi-task learning problem in which ResNet-18 is used as the backbone feature extractor.

3.1.5. Detection Based on Identity Information

Based on the intuition that every person exhibits distinct patterns in the co-occurrence of their speech, facial expressions, and gestures, Agarwal et al. [28] introduced a multimodal detection method with a semantic focus that incorporates speech transcripts into person-specific gesture analysis, using interpretable action units to model an individual’s facial and head motion. Meanwhile, Dong et al. [29] proposed an Identity Consistency Transformer that simultaneously learns one identity vector for the inner face and another for the outer face; moreover, the model uses a novel consistency loss to drive both identities apart when their labels are different and to bring them closer when their labels are the same.

3.1.6. Detection Based on Facial Emotions

Although deepfakes can produce convincing audio and video, it can be difficult to produce material that remains coherent with respect to high-level semantics, including emotions. Emotion can be characterized by valence and arousal, where arousal indicates heightened excitement or tranquility and valence represents the positivity or negativity of the emotional state. Unnatural displays of emotion, as determined by these characteristics, can offer compelling evidence that a video has been artificially created. Using the emotion inferred from the face and voice of the speaker, Hosler et al. [30] introduced an approach for identifying deepfakes. The suggested method makes use of long short-term memory (LSTM) networks to infer emotion from visual descriptors and low-level audio features; a supervised classifier then categorizes videos as real or fake using the predicted emotion.

3.1.7. Detection Based on Temporal Inconsistencies

To leverage temporal coherence for deepfake detection, Zheng et al. [31] proposed reducing the spatial convolution kernel size to 1 while keeping the temporal convolution kernel size unchanged, using a fully temporal convolution network together with a Transformer network that explores long-term temporal coherence. Pei et al. [32] exploited the temporal information in videos by incorporating a bidirectional LSTM model.

3.1.8. Detection Based on Spatial-Temporal Features

The forced blending of the manipulated face during deepfake generation causes spatial distortions and temporal inconsistencies in crucial facial regions, which Sun et al. [33] proposed to reveal by extracting the displacement trajectory of the facial region. To detect fake trajectories, a fake trajectory detection network is built on a gated recurrent unit backbone combined with a dual-stream spatial-temporal graph attention mechanism. To detect the spatial-temporal abnormalities in the trajectory of the altered video, the network uses the extracted trajectory and explicitly integrates the important information from the input sequences.

3.2. Deep Learning Models for Deepfake Detection

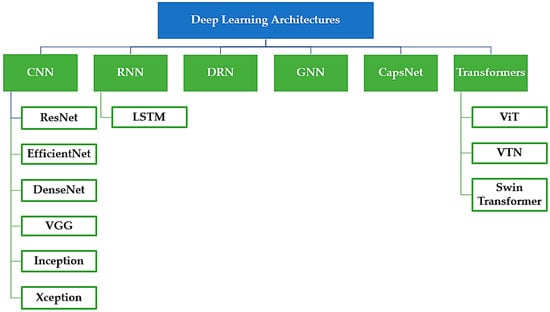

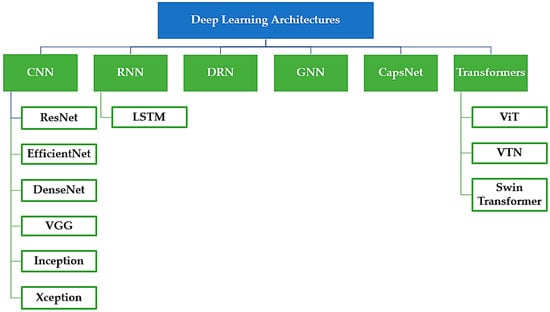

Several advanced technologies have been employed in the domain of deepfake detection, such as machine learning [34][35][36] and media forensics-based approaches [37]. However, it is widely acknowledged that deep learning-based models currently exhibit the strongest performance in discerning between fabricated and authentic digital media. These models leverage sophisticated neural network architectures known as backbone networks, displayed in Figure 3, which have demonstrated exceptional efficacy in computer vision tasks. Prominent examples of such architectures include VGG [38], EfficientNet [39], Inception [40], CapsNet [41], and ViT [42], which are particularly renowned for their strength in the feature extraction phase. Deep learning-based detection models go beyond conventional methods by incorporating additional techniques to further enhance their performance. One such approach is meta-learning, which enables the model to learn from previous experiences and adapt its detection capabilities accordingly. By leveraging meta-learning, these models become more proficient at recognizing patterns and distinguishing between genuine and manipulated content.

Figure 3. Overview of predominant deep learning architectures, networks, and frameworks employed in the development of deepfake detection models.

4. Datasets

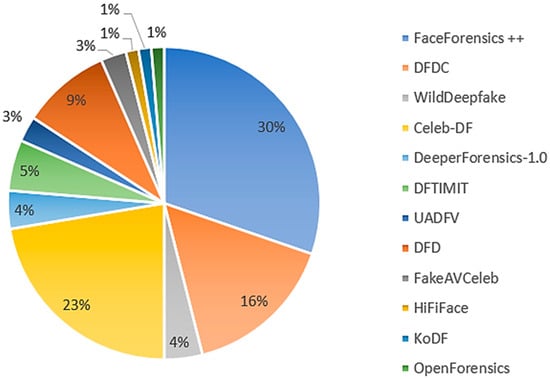

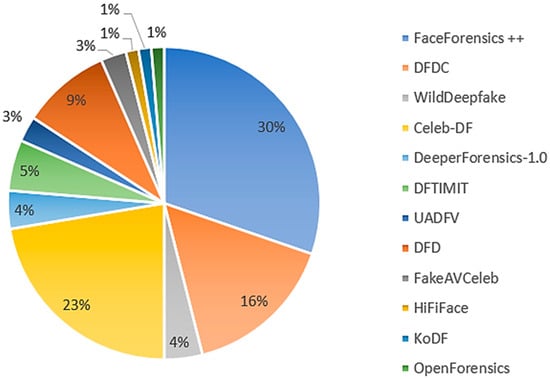

In the context of deepfakes, datasets serve as the foundation for training, testing, and benchmarking deep learning models. The accessibility of reliable and diverse datasets plays a crucial role in the development and evaluation of deepfake techniques. A variety of important datasets, summarized in Table 1, have been curated specifically for deepfake research, each addressing different aspects of the problem and contributing to the advancement of the field. Figure 4 shows some of the datasets most widely used in improving deepfake detection models.

Figure 4. Frequency of usage of different deepfake datasets in the discussed detection models within this survey.

Table 1. Key characteristics of the most prominent and publicly available deepfake datasets.

| Dataset | Year Released | Real Content | Fake Content | Generation Method | Modality |
|---|---|---|---|---|---|
| FaceForensics++ [45] | 2019 | 1000 | 4000 | DeepFakes [46], Face2Face [47], FaceSwap [48], NeuralTextures [49], FaceShifter [8] | Visual |
| Celeb-DF (v2) [50] | 2020 | 590 | 5639 | DeepFake [50] | Visual |
| DFDC [51] | 2020 | 23,654 | 104,500 | DFAE, MM/NN, FaceSwap [48], NTH [52], FSGAN [53] | Audio/Visual |
| DeeperForensics-1.0 [54] | 2020 | 48,475 | 11,000 | DF-VAE [54] | Visual |
| WildDeepfake [55] | 2020 | 3805 | 3509 | Curated online | Visual |
| OpenForensics [56] | 2021 | 45,473 | 70,325 | GAN based | Visual |
| KoDF [57] | 2021 | 62,166 | 175,776 | FaceSwap [48], DeepFaceLab [20], FSGAN [53], FOMM [58], ATFHP [59], Wav2Lip [60] | Visual |
| FakeAVCeleb [61] | 2021 | 500 | 19,500 | FaceSwap [48], FSGAN [53], SV2TTS [62], Wav2Lip [60] | Audio/Visual |
| DeepfakeTIMIT [63] | 2018 | 640 | 320 | GAN based | Audio/Visual |
| UADFV [64] | 2018 | 49 | 49 | DeepFakes [46] | Visual |
| DFD [65] | 2019 | 360 | 3000 | DeepFakes [46] | Visual |
| HiFiFace [66] | 2021 | - | 1000 | HifiFace [66] | Visual |

5. Challenges

Although deepfake detection has improved significantly, there are still a number of problems with the current detection algorithms that need to be resolved. The most significant challenge is the real-time detection of deepfakes and the deployment of detection models in diverse sectors and across multiple platforms. This challenge is difficult to surmount because of several complexities, such as the computational power needed to detect deepfakes in real time, given the massive amount of data shared every second on the internet, and the requirement that detection models be effective and produce almost no false positives. To attain this objective, one can leverage advanced learning techniques, such as meta-learning and metric learning, employ efficient architectures like transformers, apply compression techniques such as quantization, and make strategic investments in robust software and hardware infrastructure.
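To illustrate the kind of compression quantization provides, the sketch below maps floating-point weights to 8-bit integers with a single scale factor. It is a minimal example of symmetric linear quantization under stated assumptions: the weight values are made up and the function names are hypothetical, and deep learning frameworks offer more elaborate schemes.

```python
def quantize(weights, num_bits=8):
    """Symmetric linear quantization of a list of floats to signed integers."""
    max_int = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    largest = max(abs(w) for w in weights)
    scale = largest / max_int if largest > 0 else 1.0
    return [round(w / scale) for w in weights], scale

def dequantize(q_weights, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in q_weights]

weights = [0.50, -1.27, 0.03, 1.00]  # toy model weights
q_weights, scale = quantize(weights)
restored = dequantize(q_weights, scale)

print(q_weights)  # [50, -127, 3, 100]
# The rounding error per weight is at most half a quantization step.
print(max(abs(w - r) for w, r in zip(weights, restored)) <= scale / 2 + 1e-12)
```

Each weight is stored in one byte instead of four or eight, at the cost of a bounded rounding error.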

In addition, detection methods encounter challenges intrinsic to deep learning, including concerns about generalization and robustness. Deepfake content frequently circulates across social media platforms after undergoing significant alterations like compression and the addition of noise. Consequently, employing detection models in real-world scenarios might yield limited effectiveness. To address this problem, several approaches have been explored to strengthen the generalization and robustness of detection models, such as feature restoration, attention guided modules, adversarial learning and data augmentation. Additionally, when it comes to deepfakes, the lack of interpretability of deep learning models becomes particularly problematic, making it challenging to directly grasp how they arrive at their decisions. This lack of transparency can be concerning, especially in critical applications, such as forensics, where understanding the reasoning behind a model’s output is important for accountability, trust, and safety. Furthermore, since private data access may be necessary, detection methods raise privacy issues.
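The degradations mentioned above can be imitated during training through data augmentation. The sketch below is a rough illustration under stated assumptions: images are nested lists of grayscale values, Gaussian noise stands in for channel noise, and snapping pixels to a coarse grid is only a crude stand-in for lossy compression, not an actual codec. The parameter values are hypothetical.

```python
import random

def add_noise(image, sigma, rng):
    """Add Gaussian noise to each pixel and clip the result to 0..255."""
    return [[min(255, max(0, round(p + rng.gauss(0, sigma)))) for p in row]
            for row in image]

def coarse_quantize(image, step):
    """Crude stand-in for lossy compression: snap pixels down to multiples of step."""
    return [[(p // step) * step for p in row] for row in image]

def augment(image, rng, sigma=5.0, step=16):
    """Produce a degraded copy of the image for use as an extra training sample."""
    return coarse_quantize(add_noise(image, sigma, rng), step)

rng = random.Random(0)  # fixed seed so the example is repeatable
image = [[10, 200], [128, 255]]
augmented = augment(image, rng)

# The degraded copy keeps the original shape and stays within the valid range.
print(len(augmented) == len(image))
print(all(0 <= p <= 255 for row in augmented for p in row))
```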

The quality of the deepfake datasets is yet another prominent challenge in deepfake detection. The development of deepfake detection techniques is made possible by the availability of large-scale datasets of deepfakes. The content in the available datasets, however, has some noticeable visual differences from the deepfakes that are actually being shared online. Researchers and technology companies such as Google and Facebook constantly put forth datasets and benchmarks to improve the field of deepfake detection. A further threat faced by detection models is adversarial perturbations that can successfully deceive deepfake detectors. These perturbations are strategically designed to exploit vulnerabilities or weaknesses in the underlying algorithms used by deepfake detectors. By introducing subtle modifications to the visual or audio components of a deepfake, adversarial perturbations can effectively trick the detectors into misclassifying the manipulated media as real.
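How a small, targeted change can flip a detector’s decision is easiest to see on a toy model. The sketch below applies a perturbation in the style of the fast gradient sign method to a logistic-regression detector; the text above does not name a specific attack, so this choice, the weights, the sample, and the assumption that the attacker knows the model’s weights are all illustrative.

```python
import math

def detector_score(x, weights, bias):
    """Probability that input x is fake, from a toy logistic-regression detector."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def evade(x, weights, epsilon):
    """Shift each feature by at most epsilon in the direction that lowers the score."""
    # For a logistic model, the gradient of the score with respect to each
    # feature has the same sign as the corresponding weight.
    return [xi - epsilon * sign(w) for xi, w in zip(x, weights)]

weights, bias = [2.0, -1.0, 0.5], -0.8  # hypothetical detector parameters
fake_sample = [0.6, 0.2, 0.4]           # hypothetical features of a deepfake
adversarial = evade(fake_sample, weights, epsilon=0.2)

print(detector_score(fake_sample, weights, bias) > 0.5)  # True: flagged as fake
print(detector_score(adversarial, weights, bias) < 0.5)  # True: now passes as real
```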

Deepfake detection algorithms, although crucial, cannot be considered the be-all end-all solution in the ongoing battle against the threat they pose. Recognizing this, numerous approaches have emerged within the field of deepfakes that aim to not only identify these manipulated media but also provide effective means to mitigate and defend against them. These multifaceted approaches serve the purpose of not only detecting deepfakes but also hindering their creation and curbing their rapid dissemination across various platforms. One prominent avenue of exploration in combating deepfakes involves the incorporation of adversarial perturbations to obstruct the creation of deepfakes. An alternative method involves employing digital watermarking, which discreetly embeds data or signatures within digital content to safeguard its integrity and authenticity. Additionally, blockchain technology offers a similar solution by generating a digital signature for the content and storing it on the blockchain, enabling the verification of any alterations or manipulations to the content.
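The verification idea behind the blockchain-based approach can be sketched with a content fingerprint stored in an append-only record. The example below is a simplification under stated assumptions: a plain SHA-256 digest stands in for a real digital signature, an in-memory list stands in for an actual blockchain, and the class and identifier names are hypothetical.

```python
import hashlib

def content_digest(data: bytes) -> str:
    """Fingerprint of the content; any change to the bytes changes the digest."""
    return hashlib.sha256(data).hexdigest()

class Ledger:
    """Toy append-only ledger; each entry is chained to the previous one by hash."""

    def __init__(self):
        self.entries = []

    def register(self, content_id, data):
        previous = self.entries[-1]["entry_hash"] if self.entries else "0" * 64
        digest = content_digest(data)
        entry_hash = hashlib.sha256(
            (previous + content_id + digest).encode()).hexdigest()
        self.entries.append({"content_id": content_id, "digest": digest,
                             "previous": previous, "entry_hash": entry_hash})

    def verify(self, content_id, data):
        """True if this exact content was registered under content_id."""
        digest = content_digest(data)
        return any(e["content_id"] == content_id and e["digest"] == digest
                   for e in self.entries)

ledger = Ledger()
original = b"original video bytes"
ledger.register("clip-001", original)

print(ledger.verify("clip-001", original))                 # True
print(ledger.verify("clip-001", b"tampered video bytes"))  # False
```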

References

- Hancock, J.T.; Bailenson, J.N. The Social Impact of Deepfakes. Cyberpsychol. Behav. Soc. Netw. 2021, 24, 149–152.

- Giansiracusa, N. How Algorithms Create and Prevent Fake News: Exploring the Impacts of Social Media, Deepfakes, GPT-3, and More; Apress: Berkeley, CA, USA, 2021; ISBN 978-1-4842-7154-4.

- Fallis, D. The Epistemic Threat of Deepfakes. Philos. Technol. 2021, 34, 623–643.

- Karnouskos, S. Artificial Intelligence in Digital Media: The Era of Deepfakes. IEEE Trans. Technol. Soc. 2020, 1, 138–147.

- Ridouani, M.; Benazzouza, S.; Salahdine, F.; Hayar, A. A Novel Secure Cooperative Cognitive Radio Network Based on Chebyshev Map. Digit. Signal Process. 2022, 126, 103482.

- Shi, Y.; Liu, X.; Wei, Y.; Wu, Z.; Zuo, W. Retrieval-Based Spatially Adaptive Normalization for Semantic Image Synthesis. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 11214–11223.

- Liu, M.; Ding, Y.; Xia, M.; Liu, X.; Ding, E.; Zuo, W.; Wen, S. STGAN: A Unified Selective Transfer Network for Arbitrary Image Attribute Editing. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 3668–3677.

- Li, L.; Bao, J.; Yang, H.; Chen, D.; Wen, F. FaceShifter: Towards High Fidelity And Occlusion Aware Face Swapping. arXiv 2020, arXiv:1912.13457.

- Robust and Real-Time Face Swapping Based on Face Segmentation and CANDIDE-3. Available online: https://www.springerprofessional.de/robust-and-real-time-face-swapping-based-on-face-segmentation-an/15986368 (accessed on 18 July 2023).

- Ferrara, M.; Franco, A.; Maltoni, D. The Magic Passport. In Proceedings of the IEEE International Joint Conference on Biometrics, Clearwater, FL, USA, 29 September–2 October 2014; pp. 1–7.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 105–114.

- He, Z.; Zuo, W.; Kan, M.; Shan, S.; Chen, X. AttGAN: Facial Attribute Editing by Only Changing What You Want. IEEE Trans. Image Process. 2019, 28, 5464–5478.

- Karras, T.; Laine, S.; Aila, T. A Style-Based Generator Architecture for Generative Adversarial Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–19 June 2019.

- Choi, Y.; Uh, Y.; Yoo, J.; Ha, J.-W. StarGAN v2: Diverse Image Synthesis for Multiple Domains. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; IEEE: Seattle, WA, USA, 2020; pp. 8185–8194.

- Choi, Y.; Uh, Y.; Yoo, J.; Ha, J.-W. StarGAN v2: Diverse Image Synthesis for Multiple Domains. Available online: https://arxiv.org/abs/1912.01865v2 (accessed on 8 October 2023).

- Zhu, J.-Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the IEEE International Conference on Computer Vision, Seattle, WA, USA, 13–19 June 2020.

- FaceApp: Face Editor. Available online: https://www.faceapp.com/ (accessed on 5 October 2023).

- Reface—AI Face Swap App & Video Face Swaps. Available online: https://reface.ai/ (accessed on 5 October 2023).

- DeepBrain AI—Best AI Video Generator. Available online: https://www.deepbrain.io/ (accessed on 5 October 2023).

- Perov, I.; Gao, D.; Chervoniy, N.; Liu, K.; Marangonda, S.; Umé, C.; Dpfks, M.; Facenheim, C.S.; RP, L.; Jiang, J.; et al. DeepFaceLab: Integrated, Flexible and Extensible Face-Swapping Framework. arXiv 2021, arXiv:2005.05535.

- Make Your Own Deepfakes. Available online: https://deepfakesweb.com/ (accessed on 5 October 2023).

- Liang, P.; Liu, G.; Xiong, Z.; Fan, H.; Zhu, H.; Zhang, X. A Facial Geometry Based Detection Model for Face Manipulation Using CNN-LSTM Architecture. Inf. Sci. 2023, 633, 370–383.

- Li, G.; Zhao, X.; Cao, Y. Forensic Symmetry for DeepFakes. IEEE Trans. Inf. Forensics Secur. 2023, 18, 1095–1110.

- Li, Y.; Chang, M.-C.; Lyu, S. In Ictu Oculi: Exposing AI Created Fake Videos by Detecting Eye Blinking. In Proceedings of the International Workshop on Information Forensics and Security, WIFS, Hong Kong, China, 11–13 December 2018; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2019.

- Cai, Z.; Stefanov, K.; Dhall, A.; Hayat, M. Do You Really Mean That? Content Driven Audio-Visual Deepfake Dataset and Multimodal Method for Temporal Forgery Localization. In Proceedings of the International Conference on Digital Image Computing: Techniques and Applications, DICTA, Sydney, Australia, 30 November–2 December 2022; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2022.

- Huang, Y.; Juefei-Xu, F.; Guo, Q.; Liu, Y.; Pu, G. FakeLocator: Robust Localization of GAN-Based Face Manipulations. IEEE Trans. Inf. Forensics Secur. 2022, 17, 2657–2672.

- Chen, H.; Li, Y.; Lin, D.; Li, B.; Wu, J. Watching the BiG Artifacts: Exposing DeepFake Videos via Bi-Granularity Artifacts. Pattern Recognit. 2023, 135, 109179.

- Agarwal, S.; Hu, L.; Ng, E.; Darrell, T.; Li, H.; Rohrbach, A. Watch Those Words: Video Falsification Detection Using Word-Conditioned Facial Motion. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, WACV, Waikoloa, HI, USA, 2–7 January 2023; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2023; pp. 4699–4708.

- Dong, X.; Bao, J.; Chen, D.; Zhang, T.; Zhang, W.; Yu, N.; Chen, D.; Wen, F.; Guo, B. Protecting Celebrities from DeepFake with Identity Consistency Transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; IEEE Computer Society: Washington, DC, USA, 2022; Volume 2022, pp. 9458–9468.

- Hosler, B.; Salvi, D.; Murray, A.; Antonacci, F.; Bestagini, P.; Tubaro, S.; Stamm, M.C. Do Deepfakes Feel Emotions? A Semantic Approach to Detecting Deepfakes via Emotional Inconsistencies. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; IEEE Computer Society: Washington, DC, USA, 2021; pp. 1013–1022.

- Zheng, Y.; Bao, J.; Chen, D.; Zeng, M.; Wen, F. Exploring Temporal Coherence for More General Video Face Forgery Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2021; pp. 15024–15034.

- Pei, S.; Wang, Y.; Xiao, B.; Pei, S.; Xu, Y.; Gao, Y.; Zheng, J. A Bidirectional-LSTM Method Based on Temporal Features for Deep Fake Face Detection in Videos. In Proceedings of the 2nd International Conference on Information Technology and Intelligent Control (CITIC 2022), Kunming, China, 15–17 July 2022; Nikhath, K., Ed.; SPIE: Washington, DC, USA, 2022; Volume 12346.

- Sun, Y.; Zhang, Z.; Echizen, I.; Nguyen, H.H.; Qiu, C.; Sun, L. Face Forgery Detection Based on Facial Region Displacement Trajectory Series. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision Workshops, WACV, Waikoloa, HI, USA, 3–7 January 2023; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2023; pp. 633–642.

- Matern, F.; Riess, C.; Stamminger, M. Exploiting Visual Artifacts to Expose Deepfakes and Face Manipulations. In Proceedings of the 2019 IEEE Winter Applications of Computer Vision Workshops (WACVW), Waikoloa Village, HI, USA, 7–11 January 2019; pp. 83–92.

- Ciftci, U.A.; Demir, I.; Yin, L. FakeCatcher: Detection of Synthetic Portrait Videos Using Biological Signals. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 9, 1.

- Benazzouza, S.; Ridouani, M.; Salahdine, F.; Hayar, A. A Novel Prediction Model for Malicious Users Detection and Spectrum Sensing Based on Stacking and Deep Learning. Sensors 2022, 22, 6477.

- Verdoliva, L. Media Forensics and DeepFakes: An Overview. IEEE J. Sel. Top. Signal Process. 2020, 14, 910–932.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015, arXiv:1409.1556.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks; PMLR: Westminster, UK, 2020.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

- Sabour, S.; Frosst, N.; Hinton, G.E. Dynamic Routing between Capsules. arXiv 2017, arXiv:1710.09829.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929.

- Benazzouza, S.; Ridouani, M.; Salahdine, F.; Hayar, A. Chaotic Compressive Spectrum Sensing Based on Chebyshev Map for Cognitive Radio Networks. Symmetry 2021, 13, 429.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Rössler, A.; Cozzolino, D.; Verdoliva, L.; Riess, C.; Thies, J.; Niessner, M. FaceForensics++: Learning to Detect Manipulated Facial Images. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1–11.

- GitHub—Deepfakes/Faceswap: Deepfakes Software for All. Available online: https://github.com/deepfakes/faceswap (accessed on 10 October 2023).

- Thies, J.; Zollhöfer, M.; Stamminger, M.; Theobalt, C.; Nießner, M. Face2Face: Real-Time Face Capture and Reenactment of RGB Videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- GitHub—MarekKowalski/FaceSwap: 3D Face Swapping Implemented in Python. Available online: https://github.com/MarekKowalski/FaceSwap/ (accessed on 10 October 2023).

- Thies, J.; Zollhöfer, M.; Nießner, M. Deferred Neural Rendering: Image Synthesis Using Neural Textures. Available online: https://arxiv.org/abs/1904.12356v1 (accessed on 10 October 2023).

- Li, Y.; Yang, X.; Sun, P.; Qi, H.; Lyu, S. Celeb-DF: A Large-Scale Challenging Dataset for DeepFake Forensics. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 3204–3213.

- Dolhansky, B.; Bitton, J.; Pflaum, B.; Lu, J.; Howes, R.; Wang, M.; Ferrer, C.C. The DeepFake Detection Challenge (DFDC) Dataset. arXiv 2020, arXiv:2006.07397.

- GitHub—Cuihaoleo/Kaggle-Dfdc: 2nd Place Solution for Kaggle Deepfake Detection Challenge. Available online: https://github.com/cuihaoleo/kaggle-dfdc (accessed on 10 October 2023).

- Nirkin, Y.; Keller, Y.; Hassner, T. FSGAN: Subject Agnostic Face Swapping and Reenactment. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

- Jiang, L.; Li, R.; Wu, W.; Qian, C.; Loy, C.C. DeeperForensics-1.0: A Large-Scale Dataset for Real-World Face Forgery Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Zi, B.; Chang, M.; Chen, J.; Ma, X.; Jiang, Y.-G. WildDeepfake: A Challenging Real-World Dataset for Deepfake Detection. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 2382–2390.

- Le, T.-N.; Nguyen, H.H.; Yamagishi, J.; Echizen, I. OpenForensics: Large-Scale Challenging Dataset for Multi-Face Forgery Detection and Segmentation In-the-Wild. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 10097–10107.

- Kwon, P.; You, J.; Nam, G.; Park, S.; Chae, G. KoDF: A Large-Scale Korean DeepFake Detection Dataset. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 10724–10733.

- Siarohin, A.; Lathuilière, S.; Tulyakov, S.; Ricci, E.; Sebe, N. First Order Motion Model for Image Animation. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019.

- Yi, R.; Ye, Z.; Zhang, J.; Bao, H.; Liu, Y.-J. Audio-Driven Talking Face Video Generation with Learning-Based Personalized Head Pose. arXiv 2020, arXiv:2002.10137.

- Prajwal, K.R.; Mukhopadhyay, R.; Namboodiri, V.; Jawahar, C.V. A Lip Sync Expert Is All You Need for Speech to Lip Generation in the Wild. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 484–492.

- Khalid, H.; Tariq, S.; Woo, S.S. FakeAVCeleb: A Novel Audio-Video Multimodal Deepfake Dataset. arXiv 2021, arXiv:2108.05080.

- Jia, Y.; Zhang, Y.; Weiss, R.J.; Wang, Q.; Shen, J.; Ren, F.; Chen, Z.; Nguyen, P.; Pang, R.; Moreno, I.L.; et al. Transfer Learning from Speaker Verification to Multispeaker Text-to-Speech Synthesis. Available online: https://arxiv.org/abs/1806.04558v4 (accessed on 10 October 2023).

- Korshunov, P.; Marcel, S. DeepFakes: A New Threat to Face Recognition? Assessment and Detection. arXiv 2018, arXiv:1812.08685.

- Yang, X.; Li, Y.; Lyu, S. Exposing Deep Fakes Using Inconsistent Head Poses. In Proceedings of the ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 8261–8265.

- Contributing Data to Deepfake Detection Research—Google Research Blog. Available online: https://blog.research.google/2019/09/contributing-data-to-deepfake-detection.html (accessed on 5 October 2023).

- Wang, Y.; Chen, X.; Zhu, J.; Chu, W.; Tai, Y.; Wang, C.; Li, J.; Wu, Y.; Huang, F.; Ji, R. HifiFace: 3D Shape and Semantic Prior Guided High Fidelity Face Swapping. arXiv 2021, arXiv:2106.09965.
