Please note this is a comparison between Version 1 by Razvan Adrian Covache-Busuioc and Version 2 by Jessie Wu.

The genesis of Anterior Skull Base (ASB) surgery as a distinct field is anchored in the innovations of the 1940s. Dandy’s instrumental contributions are emblematic of this era, particularly his surgical strategy via the anterior cranial fossa for the excision of orbital tumors and his subsequent expansion of the resection to incorporate the ethmoidal regions.

- cranial base surgery

- minimally invasive techniques

- intraoperative neuromonitoring

- advanced imaging

- robotics in neurosurgery

- radiosurgery

- gamma knife

- cyberknife

1. Endoscopy in the New Era: Advanced Imaging, Robotic Assistance, and Augmented Reality Overlays

Advancements in skull base surgery are increasingly leveraging the capabilities of virtual reality (VR) and augmented reality (AR). For instance, color-coded stereotactic VR models can be custom-tailored for individual surgical cases, providing a simulated operating field for surgeons and trainees [1][56]. These models offer invaluable opportunities for surgical education and preoperative simulations. Furthermore, VR technology can be integrated into real-time operative settings by overlaying 3D images onto microscopic or endoscopic views, thus enhancing spatial navigation capabilities for the surgeon [2][57].

AR technology appears to offer particular benefits to less experienced clinicians. These systems serve not only as educational tools but also as potential substitutes for existing neuronavigation technology. AR can provide both contextual information about underlying structures and a direct view of the patient, potentially transforming conventional neuronavigation systems [3][58].

Beyond surgery, AR also has applications in non-surgical and clinical management at the skull base. For example, it is used for ablating damaged nasal tissue and offers guidance on basic surgical plans and navigational protocols [4][59]. In cranio-maxillofacial procedures, AR plays a significant role in reconstructing cheekbones and offering data on the underlying structure, albeit without the capability for real-time modifications [5][60]. Many AR applications superimpose precollected, immersive data onto real endoscopic camera images. However, fields that lie outside the endoscopic view remain hidden to the medical team, necessitating further adaptations to fully realize the technology’s potential.

Moreover, the application of AR in clinical settings, particularly in the management of skull base pathologies, has been gaining attention in the medical community, as evidenced by multiple academic conferences exploring its potential [4][5][59,60]. A specific clinical model has been proposed that offers an extended view of the area under examination [6][61]. In this model, endoscopic images are displayed centrally, while the region outside the endoscopic field of view is rendered virtually from pre-existing computed tomography data. Such an integrated AR framework suggests that, following technological advancements and methodological refinements, AR applications may become increasingly prevalent across a broader spectrum of clinical scenarios that demand heightened alertness and precision [7][62].

When it comes to the design of an ideal AR device for clinical applications, certain rigorous criteria must be met to ensure its functional efficacy and safety. The system should feature a focus marker and device alignment capabilities that are intuitive and minimally intrusive, particularly for the medical professional using it. Calibration adjustments should be undertaken before the initiation of the clinical procedure to minimize undue burden or cognitive load on the healthcare provider [8][63].

Furthermore, conventional imaging techniques that present only two-dimensional visual data offer limited depth perception, which can compromise the practitioner’s situational awareness and decision-making accuracy. To mitigate this limitation, it is advisable to incorporate depth cues that make the rendered images perceptually more faithful [9][64]. Additionally, when virtual 3D objects are superimposed onto endoscopic images, parallax must be maintained as the viewing position changes in order to preserve spatial relationships and depth perception.
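The parallax requirement can be made concrete with a simple pinhole camera model. The sketch below is only an illustration under stated assumptions: the function names, the focal length in pixels, and the point coordinates are hypothetical and do not come from any particular AR system. It shows that, for the same lateral camera movement, a near structure shifts further on screen than a far one, so an overlay has to be re-projected per point rather than translated as a flat image.

```python
def project_x(point, camera_x, focal_px):
    """Horizontal screen coordinate (pixels) of a 3D point under a pinhole model.

    point: (x, y, z) in millimetres, z is depth in front of the camera.
    camera_x: lateral camera position in millimetres (hypothetical setup).
    focal_px: focal length expressed in pixels (hypothetical value).
    """
    x, _, z = point
    return focal_px * (x - camera_x) / z


def parallax_shift(point, camera_shift, focal_px):
    """Screen displacement of a point when the camera moves sideways."""
    before = project_x(point, 0.0, focal_px)
    after = project_x(point, camera_shift, focal_px)
    return after - before


# Two virtual structures on the optical axis at different depths.
near_point = (0.0, 0.0, 20.0)
far_point = (0.0, 0.0, 80.0)

near_shift = parallax_shift(near_point, camera_shift=2.0, focal_px=500.0)
far_shift = parallax_shift(far_point, camera_shift=2.0, focal_px=500.0)
```

With these illustrative numbers, the near point moves four times as far on screen as the far point, which is the depth cue a correctly rendered overlay needs to reproduce.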

In terms of data presentation, meticulous attention must be devoted to the structural layout of the AR interface. Inadequate design considerations can obscure critical information or induce visual discomfort, thereby diminishing the user experience and potentially compromising clinical outcomes. Therefore, it is essential to engage in an iterative design process, incorporating user feedback and empirical data, to optimize the AR interface and data presentation for the specialized needs of clinical practice.

2. Data-Driven Neurosurgery: Machine Learning, AI-Assisted Diagnosis, and Surgical Planning

The application of Radiomics in oncological diagnostics has emerged as a transformative approach in recent years, particularly in the preoperative assessment of various neoplastic conditions including prostate cancer, lung cancer, and an array of brain tumors such as gliomas, meningiomas, and brain metastases [8][9][10][63,64,65]. Unlike traditional diagnostic methodologies, which rely predominantly on qualitative assessments made by radiologists based on “visible” features, Radiomics facilitates the quantitative extraction of high-dimensional features as parametric data from radiographic images [11][12][66,67].
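To illustrate what "quantitative extraction" means in practice, the following minimal sketch computes three first-order features (mean, variance, and histogram entropy) from the voxel intensities of a region of interest. It is an assumption-laden toy: the function name, the bin count, and the intensity values are hypothetical, and real Radiomics pipelines extract many more feature families (shape, texture, wavelet) with standardized preprocessing.

```python
import math
from collections import Counter


def first_order_features(intensities, n_bins=8):
    """Compute a few first-order features from ROI voxel intensities."""
    n = len(intensities)
    mean = sum(intensities) / n
    variance = sum((v - mean) ** 2 for v in intensities) / n

    lo, hi = min(intensities), max(intensities)
    width = (hi - lo) / n_bins or 1.0  # fall back to 1.0 for a flat ROI

    # Discretize intensities into a fixed number of bins, then compute
    # the Shannon entropy of the resulting histogram (in bits).
    bins = Counter(min(int((v - lo) / width), n_bins - 1) for v in intensities)
    entropy = -sum((c / n) * math.log2(c / n) for c in bins.values())

    return {"mean": mean, "variance": variance, "entropy": entropy}


# Hypothetical ROIs: one homogeneous, one split between two intensities.
homogeneous = first_order_features([100] * 16)
heterogeneous = first_order_features([0] * 4 + [255] * 4)
```

The homogeneous region yields zero variance and zero entropy, while the two-level region yields an entropy of one bit, showing how such numbers capture heterogeneity that may be hard to judge by eye.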

The incorporation of machine learning algorithms further enhances the analytical capabilities of Radiomics, offering unprecedented insights into the pathophysiological characteristics of lesions that are otherwise challenging to discern through conventional visual inspection [13][14][68,69]. Several studies have demonstrated the utility of Radiomics-based machine learning in the differential diagnosis of various brain tumors, thus indicating its prospective application in clinical decision making [15][70].

In the feature selection domain, the Least Absolute Shrinkage and Selection Operator (LASSO) has been noted for its effectiveness in handling high-dimensional Radiomics data, particularly when sample sizes are relatively limited [16][17][71,72]. Its L1 penalty shrinks the coefficients of uninformative features to exactly zero, which helps limit overfitting and makes LASSO a well-suited choice for robust feature selection in Radiomics analyses.
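The sparsity that makes LASSO useful for feature selection can be shown with a small coordinate-descent solver. This is a sketch, not the procedure used in the cited studies: it assumes centered features and no intercept, minimizes (1/2n)·‖y − Xw‖² + α‖w‖₁, and runs on hypothetical toy data in which only the first feature carries signal.

```python
def soft_threshold(rho, alpha):
    """Shrink rho toward zero by alpha; values within [-alpha, alpha] become 0."""
    if rho > alpha:
        return rho - alpha
    if rho < -alpha:
        return rho + alpha
    return 0.0


def lasso_coordinate_descent(X, y, alpha, n_iter=200):
    """Minimize (1/2n)*||y - Xw||^2 + alpha*||w||_1 by cyclic coordinate descent."""
    n, p = len(X), len(X[0])
    w = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            rho = 0.0  # correlation of feature j with the partial residual
            z = 0.0    # squared norm of feature j
            for i in range(n):
                pred_without_j = sum(X[i][k] * w[k] for k in range(p) if k != j)
                rho += X[i][j] * (y[i] - pred_without_j)
                z += X[i][j] ** 2
            w[j] = soft_threshold(rho / n, alpha) / (z / n) if z > 0 else 0.0
    return w


# Hypothetical toy data: the target depends only on the first feature.
X = [
    [1.0, 0.5, -0.2],
    [-1.0, 0.3, 0.4],
    [2.0, -0.4, 0.1],
    [-2.0, -0.1, -0.3],
    [0.5, 0.2, 0.2],
    [-0.5, -0.5, -0.2],
]
y = [3.0 * row[0] for row in X]

weights = lasso_coordinate_descent(X, y, alpha=0.1)
```

On this toy problem the weights of the two uninformative features are driven to exactly zero, while the informative weight is slightly shrunk below its true value of 3; selecting the features with non-zero weights is the feature selection step.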

Additionally, Linear Discriminant Analysis (LDA) serves as another valuable machine learning classification algorithm for Radiomics applications. LDA projects the data into a lower-dimensional space chosen to maximize the separation between classes relative to the spread within each class. Notably, it has been reported to retain substantial class discrimination information while reducing dimensionality [18][19][20][73,74,75].
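As a concrete illustration of that projection, the sketch below implements two-class Fisher LDA for two features, where the discriminant direction is the inverse within-class scatter matrix applied to the difference of class means. The feature values and helper names are hypothetical, and the midpoint threshold assumes equal class priors.

```python
def mean_vec(rows):
    """Per-feature mean of a list of 2D samples."""
    n = len(rows)
    return [sum(r[d] for r in rows) / n for d in range(2)]


def scatter(rows, mu):
    """2x2 scatter matrix of samples around their class mean."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for r in rows:
        d = [r[0] - mu[0], r[1] - mu[1]]
        for a in range(2):
            for b in range(2):
                s[a][b] += d[a] * d[b]
    return s


def project(x, w):
    """Project a 2D sample onto the discriminant direction."""
    return x[0] * w[0] + x[1] * w[1]


def fisher_lda(class0, class1):
    """Return the Fisher direction w = Sw^-1 (mu1 - mu0) and a midpoint threshold."""
    mu0, mu1 = mean_vec(class0), mean_vec(class1)
    s0, s1 = scatter(class0, mu0), scatter(class1, mu1)
    sw = [[s0[a][b] + s1[a][b] for b in range(2)] for a in range(2)]
    det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]
    inv = [[sw[1][1] / det, -sw[0][1] / det],
           [-sw[1][0] / det, sw[0][0] / det]]
    diff = [mu1[0] - mu0[0], mu1[1] - mu0[1]]
    w = [inv[0][0] * diff[0] + inv[0][1] * diff[1],
         inv[1][0] * diff[0] + inv[1][1] * diff[1]]
    threshold = 0.5 * (project(mu0, w) + project(mu1, w))
    return w, threshold


# Hypothetical two-feature samples for two tumor classes.
class0 = [[1.0, 2.0], [2.0, 3.0], [3.0, 3.0], [2.0, 1.0]]
class1 = [[6.0, 5.0], [7.0, 8.0], [8.0, 7.0], [7.0, 6.0]]

w, threshold = fisher_lda(class0, class1)
```

Each two-dimensional sample is reduced to a single projected value, and on this toy data every sample of the first class falls below the threshold while every sample of the second class falls above it.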

Radiomics has extended its utility beyond diagnostic applications to prognostic evaluations, as exemplified in its role in both the diagnosis and treatment control rate prediction for chordoma [21][76]. Chordoma, a disease notorious for its refractory nature necessitating multiple surgical interventions and radiotherapeutic treatments, poses unique challenges for sustained disease control. In this context, Radiomic models built on features describing both the morphological shape and the genomic heterogeneity of the tumor have demonstrated superior performance in predicting the effectiveness of radiotherapy for tumor control. Such predictive capabilities underscore the potential benefits of Radiomics in enabling more targeted, efficient treatment regimens for diseases such as chordoma, thereby potentially reducing the need for repetitive, invasive procedures.

In another application, Radiomics-based machine learning algorithms have been shown to assist significantly in the preoperative differential diagnosis between germinoma and choroid plexus papilloma [22][77]. These two types of primary intracranial tumors often present with overlapping clinical manifestations and radiological features, yet they require markedly different treatment modalities. In addressing this diagnostic conundrum, high-performance prediction models have been developed using sophisticated feature selection methodologies and classifiers. These models suggest that Radiomics can offer a non-invasive diagnostic strategy with substantial reliability.

Notably, the application of Radiomics and machine learning in these scenarios holds the promise of revolutionizing the approach to image-based diagnosis and personalized clinical decision making. By leveraging advanced computational techniques to analyze complex, high-dimensional radiographic data, Radiomics provides a more nuanced understanding of tumor characteristics and treatment responses. This computational approach thereby opens avenues for more accurate, timely, and individualized therapeutic strategies, significantly enhancing the quality of patient care in oncological settings.

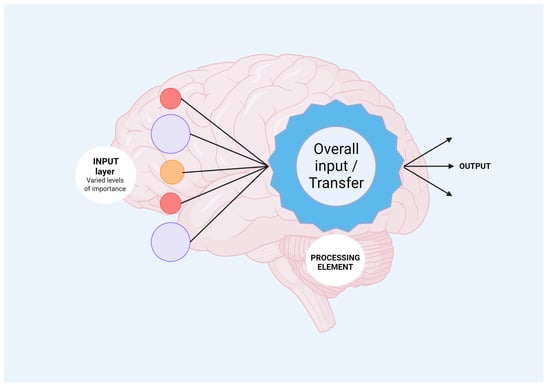

In the realm of skull base neurosurgery, machine learning (ML) methods, including neural network models (NNs) (Figure 1), have been applied to a comprehensive, multi-center, prospective database to predict the occurrence of Cerebrospinal Fluid Rhinorrhoea (CSFR) following endonasal surgical procedures [23][78]. In that work, NNs outperformed traditional statistical models and other ML techniques in forecasting CSFR events. Notably, NNs also revealed intricate relationships between specific risk factors and surgical repair techniques that influence CSFR, relationships that remained elusive when examined through conventional statistical approaches. As these predictive models continue to evolve through the integration of more extensive and granular datasets, refined NN architectures, and external validation processes, they hold the promise of significantly impacting future surgical decision making. Such next-generation models may provide invaluable support for more personalized patient counseling and tailored treatment plans.

Figure 1. Schematic of neural network processing. The input layer receives heterogeneous data, which the network's algorithms analyze. The resulting output information offers new avenues for biomedical fields.
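The layered processing depicted in Figure 1 can be sketched as a forward pass through a tiny feedforward network: input features pass through a hidden layer and are turned into a probability at the output. Everything here is hypothetical, including the three input features, the layer sizes, and the weights, which are arbitrary numbers rather than trained values from any CSFR model.

```python
import math


def relu(values):
    """Rectified linear activation applied element-wise."""
    return [max(0.0, v) for v in values]


def sigmoid(x):
    """Map a real-valued logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum plus bias per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def predict_probability(features, params):
    """Input layer -> hidden layer (ReLU) -> single sigmoid output."""
    hidden = relu(dense(features, params["w_hidden"], params["b_hidden"]))
    logit = dense(hidden, params["w_out"], params["b_out"])[0]
    return sigmoid(logit)


# Arbitrary, untrained parameters for a 3-input, 2-hidden-unit network.
params = {
    "w_hidden": [[0.5, -0.25, 1.0], [-1.0, 0.75, 0.5]],
    "b_hidden": [0.0, 0.1],
    "w_out": [[1.0, -2.0]],
    "b_out": [-0.5],
}

# Hypothetical encoded patient features (for example, three risk factors).
probability = predict_probability([1.0, 2.0, 0.0], params)
```

In a real model the parameters would be learned from labeled cases, and the non-linear hidden layer is what lets the network represent interactions between inputs that a purely additive statistical model would miss.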

Regarding automated image segmentation in surgical navigation applications, although the automated segmentation correlated highly with the anatomical landmarks in question, the Dice Coefficient (DC), a measure commonly used to assess segmentation performance, was not particularly high [24][79]. Several factors contribute to this finding, including the complexity of anatomical pathways, the absence of clearly delineated contours in certain regions, and inherent variation in the manual segmentations used as reference. These limitations cast doubt on the utility of the DC as a standalone metric for objectively evaluating performance on this specific task. However, the low average Hausdorff Distance (HD) on the testing dataset better reflects the high accuracy of the automated segmentation, bolstering its credibility for applications such as surgical navigation.
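Why the two metrics can disagree is easiest to see on a thin structure. The sketch below computes the Dice coefficient, the classic Hausdorff distance, and one common definition of the average Hausdorff distance (the mean of the two directed mean distances; other definitions exist) on hypothetical voxel sets. The coordinates are invented for illustration and are not taken from the cited study.

```python
import math


def dice_coefficient(a, b):
    """Overlap measure 2|A ∩ B| / (|A| + |B|) on sets of voxel coordinates."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0
    return 2 * len(a & b) / (len(a) + len(b))


def directed_distances(a, b):
    """For each point in a, the distance to its nearest point in b."""
    return [min(math.dist(p, q) for q in b) for p in a]


def hausdorff_distance(a, b):
    """Largest nearest-neighbour distance in either direction."""
    return max(max(directed_distances(a, b)), max(directed_distances(b, a)))


def average_hausdorff_distance(a, b):
    """Mean of the two directed mean nearest-neighbour distances."""
    d_ab = directed_distances(a, b)
    d_ba = directed_distances(b, a)
    return 0.5 * (sum(d_ab) / len(d_ab) + sum(d_ba) / len(d_ba))


# A one-voxel-wide structure; the automated result is off by a single
# voxel along half of its length.
manual = [(x, 0) for x in range(10)]
automated = [(x, 0) for x in range(5)] + [(x, 1) for x in range(5, 10)]

dice = dice_coefficient(manual, automated)
hd = hausdorff_distance(manual, automated)
avg_hd = average_hausdorff_distance(manual, automated)
```

Here the Dice coefficient is only 0.5 even though no automated voxel lies more than one voxel from the manual outline (Hausdorff distance 1.0, average 0.5), which mirrors the situation described above for narrow anatomical pathways.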

In summary, machine learning, and neural networks in particular, shows considerable promise for predicting complex clinical outcomes such as CSFR following skull base neurosurgery. Meanwhile, automated image segmentation remains a challenging task that warrants a more nuanced approach to performance assessment than reliance on a single statistical measure such as the Dice Coefficient. These advancements signify not only the growing impact of computational methods in medicine but also the necessity for ongoing refinement and validation to ensure that these techniques meet the highest standards of clinical efficacy and safety.