1. Introduction

Deep neural networks are computational systems composed of neuron-like units linked by synapse-like connections. Each unit transmits a scalar value, akin to a spike rate, that is determined by the sum of its inputs, that is, the activity of each preceding unit multiplied by the strength of the transmitting synapse, as explained by Goodfellow et al. [1][13]. The activity of each unit is a non-linear function of its inputs. This non-linearity is what makes it worthwhile to place multiple layers of units between the “input” and “output” sides, giving rise to what we call “deep” neural networks. These deep networks have the capacity, in principle, to approximate any function that maps activation inputs to activation outputs [2][33]. Additionally, “recurrent” neural networks (RNNs) can compute functions of input sequences, because loops in the connection topology allow network activations to retain information from previous events [3][34].
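As a minimal sketch of the computation described above, the following Python snippet evaluates one unit as a weighted sum of its inputs passed through a non-linearity and then stacks two layers. The choice of `tanh`, the function names, and the hand-picked weights are illustrative assumptions and are not taken from the cited works.

```python
import math

def unit_activation(inputs, weights, bias=0.0):
    """Weighted sum of the inputs passed through a tanh non-linearity."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return math.tanh(total)

def layer_forward(inputs, weight_rows, biases):
    """Apply one unit per row of weights to the same input vector."""
    return [unit_activation(inputs, row, b) for row, b in zip(weight_rows, biases)]

# Two stacked layers with hypothetical, hand-picked weights.
x = [0.5, -1.0]
hidden = layer_forward(x, [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0, 0.0, 0.0])
output = layer_forward(hidden, [[0.5, -0.5, 1.0]], [0.0])
print(hidden, output)
```

Without the non-linearity, the two layers would collapse into a single linear map, which is why depth only adds expressive power when the units are non-linear.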

The term “deep learning” refers to the problem of adjusting the connection weights within a deep neural network to achieve a desired input–output mapping [4][35]. Although backpropagation, the standard algorithm for this adjustment, has been in use for over three decades, it was applied primarily to supervised and unsupervised learning. In supervised learning, the objective is to learn from labeled data, whereas in unsupervised learning, the focus is on creating meaningful representations of input data. These paradigms are quite distinct from reinforcement learning (RL), where the learner must determine actions that maximize rewards. RL also introduces the concept of exploration, where the learner must balance the search for new actions against the exploitation of previously acquired knowledge. Unlike supervised and unsupervised learning, RL assumes that the actions taken by the learning system influence its future inputs, creating a sensory–motor feedback loop. This makes the training data non-stationary, and the desired outcomes in RL often involve multiple decision-making steps rather than simple input–output mappings [5][36].
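The exploration–exploitation balance mentioned above can be illustrated with an epsilon-greedy learner on a multi-armed bandit. This is only a sketch of one common strategy, not the method of the cited works; the reward probabilities, the value of `epsilon`, and the function name are hypothetical.

```python
import random

def epsilon_greedy_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Run an epsilon-greedy learner on a Bernoulli multi-armed bandit.

    reward_probs are hypothetical per-action reward probabilities; the
    learner never sees them and only observes sampled rewards.
    """
    rng = random.Random(seed)
    n_actions = len(reward_probs)
    counts = [0] * n_actions
    estimates = [0.0] * n_actions
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random action
        else:
            # exploit: pick the action with the highest estimated reward
            action = max(range(n_actions), key=lambda a: estimates[a])
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        # Incremental update of the running mean reward for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return counts, estimates

counts, estimates = epsilon_greedy_bandit([0.2, 0.5, 0.8])
print(counts, estimates)
```

A larger `epsilon` gathers more information about rarely tried actions at the cost of choosing the currently best-looking action less often.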

One of the formidable challenges facing scholars and professionals is making decisions based on massive data while considering multiple criteria. Researchers are actively exploring approaches to building decision support systems that integrate AI and machine learning to address the diverse challenges across various big data application domains. These systems facilitate decision making by taking into account a range of factors such as the number of options, their effectiveness, and their potential outcomes. Several decision support systems have been designed to assist in this complex decision-making process [6][7][37,38]. Effective decision making is paramount for the success of businesses and organizations, given the multitude of criteria that can influence whether a particular course of action should be pursued [8][39].

The integration of deep learning algorithms into current approaches for handling large datasets has enabled the development of more intelligent decision support systems [9][10][40,41]. These systems find applications in various industries, including agriculture, the energy sector, and business [11][12][13][42,43,44]. Multiple disciplines offer a range of theories and techniques, from fundamental to advanced and intelligent models, to aid in the decision-making process [14][15][45,46].

2. Preprocessing Stages and Caveats in AI Decision Making

Data preprocessing is a pivotal step in the decision-making process for AI models. Raw data must undergo cleansing, transformation, and feature engineering to make it suitable for analysis and model training. Data cleansing involves handling issues like missing values and outliers to prevent skewed results. However, mismanagement at this stage can introduce errors and alter the behavior of the model [16][47]. Integrating data from various sources presents challenges due to diverse formats, dimensions, and units. Care must be taken to avoid conflicts and errors in this process [17][48]. Additionally, the selection of relevant features and the creation of new ones based on domain expertise can enhance model performance. Nevertheless, incorrect decisions may lead to overfitting or the loss of critical information [18][49].
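To make the cleansing step concrete, the sketch below fills missing values with the median and clips outliers using the interquartile range. Both rules, the multiplier `k=1.5`, and the sample values are assumptions chosen for illustration; the source does not prescribe a particular method.

```python
import statistics

def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]

def clip_outliers(values, k=1.5):
    """Clip values that fall outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]

# Hypothetical sensor readings with one missing value and one outlier.
raw = [10.0, 12.0, None, 11.0, 13.0, 250.0, 12.0]
cleaned = clip_outliers(impute_median(raw))
print(cleaned)
```

Clipping keeps the sample but limits its influence; whether to clip, drop, or keep an outlier is a domain decision, which is exactly where mismanagement can change model behavior.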

Different data types, such as sequential or text data, come with specific constraints. When dealing with sequential data, maintaining the order of samples is crucial to avoid inaccurate predictions or the loss of temporal trends [17][48]. Text data, in turn, requires tokenization, stemming, and stop-word removal; however, improper control of these processes can result in the loss of crucial context or meaning [16][47]. In classification tasks, class imbalance must also be handled carefully to avoid introducing majority-class bias [18][49]. To protect user privacy, personal information in the dataset needs to be anonymized or encrypted; privacy and security considerations deserve equal weight [17][48].
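Two of the caveats above lend themselves to a short sketch: splitting sequential data without shuffling, and weighting classes inversely to their frequency to counter imbalance. The helper names, the 80/20 split, and the toy labels are hypothetical; the weighting formula is the common "balanced" heuristic, used here as an assumption.

```python
from collections import Counter

def chronological_split(samples, train_fraction=0.8):
    """Split without shuffling so the test set lies strictly after the training set."""
    cut = int(len(samples) * train_fraction)
    return samples[:cut], samples[cut:]

def balanced_class_weights(labels):
    """Weight each class as n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * c) for cls, c in counts.items()}

# Ten time-ordered samples and an imbalanced label set (8 vs. 2).
series = list(range(10))
train, test = chronological_split(series)
weights = balanced_class_weights([0, 0, 0, 0, 0, 0, 0, 0, 1, 1])
print(train, test, weights)
```

Shuffling before the split would let the model see samples from the future during training, which tends to inflate validation scores on time-dependent data.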

Data scientists need to strike a balance between model complexity and interpretability to develop a robust and trustworthy model. Some preprocessing techniques may increase precision but also reduce interpretability, which can be problematic when making crucial decisions [18][49]. Additionally, preprocessing should not cause the model to overfit the data, so that the model’s generalizability is maintained [16][47]. To evaluate the effects of each preprocessing stage and determine the most appropriate techniques for the task, a comprehensive understanding of the data, domain, and issue at hand is required [17][48]. Regular validation and testing of the model using diverse preprocessing techniques facilitate selecting the most effective AI-driven decision-making procedures [16][18][47,49].

3. Types of Neural Networks Used in Decision Making

Neural networks represent a powerful class of machine learning algorithms that have found widespread application in decision-making tasks [19][50]. They are designed to learn and simulate complex interactions between inputs and outputs, drawing inspiration from the functioning of the human brain. Neural networks typically consist of interconnected neurons organized into layers, with the three primary layer types being the input, hidden, and output layers [20][51]. Each neuron receives input, performs mathematical processing, and produces an output, which is then transmitted to neurons in subsequent layers.

The primary strength of neural networks lies in their ability to learn from and generalize across extensive datasets. During the training phase, the network adjusts its internal parameters, known as weights, based on input–output examples. The objective is to minimize the disparity between the actual outputs in the training data and the network’s predictions. Once trained, neural networks can be deployed for decision-making tasks by providing new data as the input and obtaining predictions as the output. Through training, neural networks acquire the capability to identify patterns and make inferences, making them suitable for applications such as classification, regression, and pattern recognition.
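The training procedure described above can be sketched with the simplest possible case: a single linear unit whose weight and bias are adjusted by gradient descent to minimize the mean squared error between predictions and targets. The learning rate, epoch count, and toy data (generated from y = 2x + 1) are assumptions for illustration only.

```python
def train_linear_unit(xs, ys, lr=0.05, epochs=2000):
    """Fit y ~ w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Input-output examples from the hypothetical mapping y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = train_linear_unit(xs, ys)
prediction = w * 5.0 + b  # inference on a new input after training
print(w, b, prediction)
```

Deep networks follow the same loop, with backpropagation supplying the gradients for every layer's weights.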

Different neural network architectures are employed in decision-making scenarios [21][22][23][24][52,53,54,55]. The feedforward neural network (FNN) represents one of the most fundamental types, where data flow from the input layer to the output layer in a unidirectional manner [25][56]. Convolutional neural networks (CNNs) are commonly used for image and video analysis, as they can capture spatial correlations through convolutional layers [26][27][57,58]. RNNs are well-suited for tasks involving sequential data due to their cyclic connections, allowing them to model temporal dependencies [3][34].

Neural networks have found successful applications in various decision-making domains, including natural language processing (NLP), computer vision, speech recognition, recommendation systems, and autonomous vehicles. Their ability to recognize intricate patterns and make precise predictions has made them invaluable tools across numerous industries. However, neural networks also face challenges, such as computational complexity, extensive training time, and the risk of overfitting, where the network memorizes training data rather than generalizing effectively to new data. To address these issues, regularization techniques and appropriate data preprocessing are commonly employed.

Neural networks are a potent class of machine learning algorithms applied to decision-making problems. They learn from data to produce predictions or conclusions based on intricate patterns and relationships. They have become a key tool in AI due to their adaptability and capacity for handling complex problems. Neural networks come in a variety of forms and are used in numerous industries. The most fundamental type, the FNN or multilayer perceptron, is used in classification, regression, and pattern recognition applications [28][59]. CNNs are a common choice for image-related tasks such as classification, object detection, and segmentation because they are adept at interpreting visual input [29][60]. Recurrent neural networks (RNNs) are well-suited for sequential data, such as time series or text in NLP, due to their cyclic connections, which permit the modeling of temporal dependencies [30][61]. Variants such as Long Short-Term Memory (LSTM) [31][62] and Gated Recurrent Unit (GRU) [32][63] enable RNNs to better manage long-term dependencies, thereby mitigating the problem of vanishing gradients [33][64].
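The role of the cyclic connection can be seen in a minimal recurrent cell with a single scalar hidden state. This is a sketch of a plain (Elman-style) recurrence, not of an LSTM or GRU; the weights and inputs are hypothetical.

```python
import math

def rnn_forward(inputs, w_in, w_rec, bias=0.0):
    """Run a one-unit recurrent cell over a sequence and return every hidden state."""
    h = 0.0
    states = []
    for x in inputs:
        # The previous state h feeds back into the unit through w_rec.
        h = math.tanh(w_in * x + w_rec * h + bias)
        states.append(h)
    return states

# Same later inputs, different first input: the state still remembers the difference.
with_pulse = rnn_forward([1.0, 0.0, 0.0], w_in=1.0, w_rec=0.5)
without_pulse = rnn_forward([0.0, 0.0, 0.0], w_in=1.0, w_rec=0.5)
print(with_pulse, without_pulse)
```

The trace of the first input shrinks at every step in this plain cell, which gives an intuition for why long-term dependencies are hard to capture and why gated variants were introduced.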

Furthermore, Generative Adversarial Networks (GANs) introduce a unique approach in which a generator network and a discriminator network compete, pushing the generator to create increasingly realistic synthetic data. GANs have demonstrated success in tasks such as unsupervised learning, data synthesis, and image generation [34][65]. Additionally, reinforcement learning (RL) networks combine neural networks with RL algorithms to facilitate learning through interaction with the environment, making them suitable for decision-making tasks with delayed rewards, such as gaming, robotics control, and autonomous systems [35][66].
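Learning from delayed rewards can be sketched with tabular Q-learning, the table-based precursor of deep RL methods in which a neural network replaces the table. The chain environment, the random behaviour policy, and all hyperparameters below are hypothetical choices made for illustration.

```python
import random

def q_learning_chain(n_states=4, episodes=500, alpha=0.5, gamma=0.9, seed=0):
    """Tabular Q-learning on a chain of states 0..n_states-1.

    Action 0 moves left, action 1 moves right; only reaching the last
    state pays a reward of 1, so the reward is delayed.
    """
    rng = random.Random(seed)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap on episode length
            action = rng.randrange(2)  # purely exploratory behaviour policy
            next_state = max(state - 1, 0) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # The goal is terminal, so nothing is bootstrapped from it.
            if next_state == goal:
                target = reward
            else:
                target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if state == goal:
                break
    return q

q = q_learning_chain()
greedy_policy = [max((0, 1), key=lambda a: q[s][a]) for s in range(3)]
print(q, greedy_policy)
```

Even though only the final transition is rewarded, the discounted value propagates backwards through the table, so the greedy policy learns to move right from every state.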

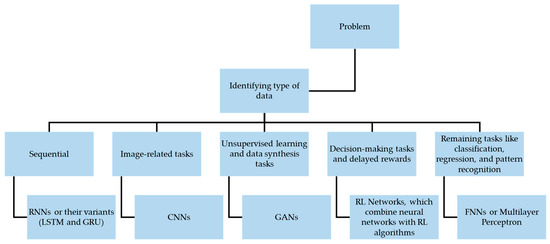

The selection of the most appropriate deep learning technique depends on factors like the type of data, task requirements, and the need to identify specific dependencies or patterns (Figure 1). Researchers and practitioners continually experiment with various neural network topologies to push the boundaries of AI and decision making, seeking optimal solutions for their unique use cases.

Figure 1. Selecting the right neural network for the problem domain.

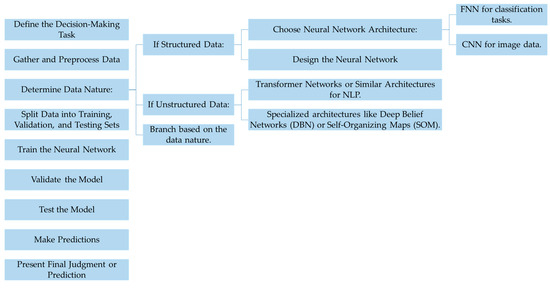

A few types of neural networks applied to decision-making problems are listed in Figure 1. In addition to the previously mentioned neural network architectures, several other specialized models are applied to decision-making problems, including transformer networks, deep belief networks, and self-organizing maps. These architectures each have their own unique strengths and are suitable for specific types of data and tasks. The choice of the most appropriate neural network architecture depends on the nature of the data being processed and the problem at hand. Figure 2 provides a high-level overview of the neural network decision-making process, from the initial choice of network type to the generation and presentation of the final decision or prediction. The provided flowchart can be adjusted and customized to suit the specific decision-making task at hand. Due to their adaptable nature, neural network configurations can effectively serve a wide range of decision-making scenarios.

Figure 2. Neural networks in decision-making process flowchart.

4. Deep Learning Algorithms and Architectures

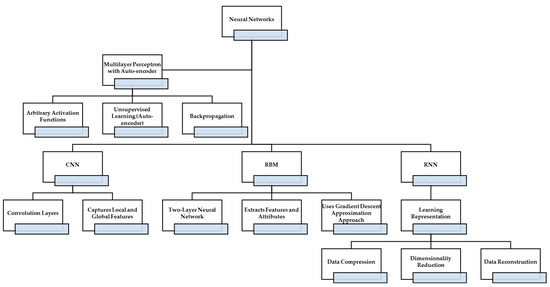

Deep learning is a subset of machine learning techniques based on learning data representations. Its fundamental component, the artificial neural network, is modeled on the distributed information-processing and communication nodes seen in biological systems. Convolutional neural networks, multilayer perceptrons, restricted Boltzmann machines (RBMs), auto-encoders, RNNs, and others are some of the different components of deep learning [36][37][38][67,68,69]. A feed-forward neural network with multiple hidden layers is called a multilayer perceptron, and it can be combined with an auto-encoder. Each layer contains perceptrons that may use arbitrary activation functions. An auto-encoder is an unsupervised learning model that aims to reconstruct its input data at the output. It is trained with backpropagation, which computes the gradient of the error function with respect to the network’s weights. Its encoder, the primary component of representation learning, compresses large input vectors into small vectors. These small vectors capture the most important characteristics of the input, which aids data compression, dimensionality reduction, and data reconstruction. The CNN is a feed-forward neural network built from convolution layers. These convolution layers capture the local and global features that contribute to improved accuracy. The RBM is a two-layer neural network made up of a visible layer and a hidden layer. Connections run only between the two layers; there is no communication among units within the same layer. The RBM extracts features and attributes using an approximate gradient descent approach.
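How a convolution layer captures local features can be sketched with a plain sliding-window operation (cross-correlation, as most deep learning libraries implement it). The tiny image and the difference kernel are hypothetical; in a real CNN the kernel values are learned rather than hand-set.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding) and sum the elementwise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A dark-to-bright vertical edge and a horizontal-difference kernel.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
kernel = [[-1, 1]]
feature_map = conv2d_valid(image, kernel)
print(feature_map)
```

The feature map is non-zero only where the window straddles the edge, which shows how a small shared kernel responds to a local pattern wherever it appears in the image.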

Figure 3 depicts deep learning’s components (neural networks: multilayer perceptron with auto-encoder, CNN, and RBM), and learning representation.

Figure 3. Deep learning methods and their practical applications.

Over the past decade, managers have rapidly adopted deep learning to enhance decision making across various levels and processes within organizations [39][70]. Within companies, deep learning has been applied extensively to the ever-expanding realm of social media activity, which generates vast amounts of user-generated content [40][71]. Much of this content is in unstructured formats, including text, photos, and videos. Deep learning algorithms have been instrumental in navigating and extracting insights from this complex digital landscape, often referred to as the “echoverse”. This echoverse encompasses not only user-generated content but also content generated by organizations themselves, traditional news media, and press releases [41][72]. Deep learning technologies have proven invaluable in processing and making sense of the rich and diverse data within this echoverse, thereby enhancing decision-making capabilities for businesses.

5. Applications of Deep Learning and Neural Networks in Decision Making

Deep learning and neural networks have revolutionized various fields by harnessing their capability to process vast datasets and discern meaningful patterns [42][73]. In computer vision, they have ushered in a transformative era, reshaping tasks such as object detection, facial recognition, and image categorization [43][74]. These neural networks are now being trained on extensive datasets to make precise decisions based on visual information, enabling innovations like autonomous vehicles, surveillance systems, and medical image analysis [44][30]. Their adeptness at identifying and classifying objects in images has led to advancements in domains as diverse as agriculture and healthcare [45][75].

In the realm of natural language processing (NLP), deep learning has achieved remarkable progress, with neural networks excelling in various applications, including language translation, sentiment analysis, and question-answering systems [46][47][48][76,77,78]. Because they can evaluate and interpret textual content, they make it possible for chatbots, virtual assistants, and search engines to comprehend language [49][79]. These innovations have fundamentally improved human–computer interfaces and transformed how humans interact with technology [45][46][75,76].

Recommender systems have also benefited significantly from deep learning techniques, as neural networks can deliver personalized recommendations in e-commerce platforms, streaming services, and social media by analyzing user preferences and historical data [50][80]. Consequently, user experiences have been enhanced, engagement has soared, and content delivery has become more efficient [51][81].

Furthermore, the influence of deep learning extends into the realm of financial decision making, where neural networks have made a substantial impact by evaluating intricate financial data, predicting stock market trends, detecting fraud, and supporting algorithmic trading and credit scoring [52][82]. These models empower real-time decision making by scrutinizing vast datasets and uncovering trends, thereby mitigating risk and enhancing financial performance [53][83]. In summary, the versatile applications of deep learning and neural networks span multiple domains, including computer vision, NLP, recommender systems, and financial decision making, as highlighted in Table 1. These technologies have ushered in transformative changes by harnessing their data-processing prowess to extract valuable insights and drive innovation.

Table 1. Overview of the various applications of deep learning and neural networks in decision making across different fields.

| Application | Description | Technical Aspect |
| --- | --- | --- |
| Image and Object Recognition | Deep learning models for image classification, object detection, and facial recognition. | CNNs and Transfer Learning |
| NLP | Neural networks for language translation, sentiment analysis, and question-answering systems. | RNNs, Transformers, and Word Embeddings |
| Recommender Systems | Personalized recommendations in e-commerce, streaming services, and social media platforms. | Collaborative Filtering and Matrix Factorization |
| Financial Decision Making | Stock market prediction, fraud detection, credit scoring, and algorithmic trading. | Time Series Analysis and Reinforcement Learning |
| Healthcare and Medicine | Medical diagnosis, disease prediction, and treatment planning using medical data and images. | Medical Imaging Analysis and Clinical Data Integration |
| Autonomous Systems | Decision making in self-driving cars, drones, and robots for navigation and task execution. | Sensor Fusion and Path Planning |
| Anomaly Detection | Identifying anomalies or outliers in network security, fraud detection, and predictive maintenance. | Autoencoders and Isolation Forests |
| Gaming and Strategy | Deep learning models trained through RL for game playing and strategy. | RL and Deep Q-Networks (DQN) |