
Please note this is a comparison between Version 1 by Arika Ligmann-Zielinska and Version 2 by Dean Liu.

Agent-based model (ABM) development needs information on system components and interactions. Qualitative narratives contain contextually rich system information beneficial for ABM conceptualization. Traditional qualitative data extraction is manual, complex, and time- and resource-consuming. Moreover, manual data extraction is often biased and may produce questionable and unreliable models. A possible alternative is to employ automated approaches borrowed from Artificial Intelligence.

- agent-based modeling

- natural language processing

- unsupervised data extraction

1. Introduction

Qualitative data provide thick contextual information [1,2,3,4] that can support reliable complex system model development. Qualitative data analysis explores system components, their complex relationships, and behavior [3,4,5] and provides a structured framework that can guide the formulation of quantitative models [6,7,8,9,10]. However, qualitative research is complex, and time- and resource-consuming [1,4]. Data analysis usually involves keyword-based data extraction and evaluation that requires multiple coders to reduce biases. Moreover, model development using qualitative data requires multiple, lengthy, and expensive stakeholder interactions [11,12], which adds to its inconvenience. Consequently, quantitative modelers often avoid using qualitative data for their model development. Modelers often skip qualitative data analysis or use unorthodox approaches for framework development, which may fail to capture the target systems’ complex dynamics and produce inaccurate and unreliable outputs [13].

Developments in the information technology sector have substantially increased access to qualitative data over the past few decades. Harvesting extensive credible data is crucial for reliable model development. Increased access to voluminous data presents both a challenge and an opportunity for model developers [14]. However, qualitative data analysis has always been a hard nut to crack for complex systems modelers. Most existing qualitative data analyses are highly supervised (i.e., performed mainly by humans) and hence bias-prone and inefficient for large datasets.

This study proposes a methodology that uses an efficient, largely unsupervised qualitative data extraction for credible Agent-Based Model (ABM) development using Natural Language Processing (NLP) toolkits. ABM requires information on agents (emulating the target system’s decision makers), their attributes, actions, and interactions for its development. The development of a model greatly depends on its intended purpose. Abstract theoretical models concentrate on establishing new relationships and theories with less emphasis on data requirements and structure. In contrast, application-driven models aim to explain specific target systems and tend to be data-intensive. They require a higher degree of adherence to data requirements, validity, feasibility, and transferability [15,16,17]. Our methodology is particularly applicable to application-driven models rich in empirical data.

ABMs help understand phenomena that emerge from nonlinear interactions of autonomous and heterogeneous constituents of complex systems [18,19,20]. ABM is a bottom-up approach; interactions at the micro-level produce complex and emergent phenomena at a macro (higher) level. As micro-scale data become more accessible to the research community, modelers increasingly use empirical data for more realistic system representation and simulation [11,21,22,23,24].

Quantitative data are primarily useful as inputs for parameterizing and running simulations. Additionally, quantitative model outputs are also used for model verification and validation. Qualitative data, on the other hand, find uses at various stages of the model cycle [25]. Apart from the routine tasks of identifying systems constituents and behaviors for model development, qualitative data support the model structure and output representations [26,27]. Qualitative model representations facilitate communication for learning, model evaluation, and replication.

Various approaches have been proposed to conceptualize computational models. First of all, selected quantitative models have predefined structures for model representation. System dynamics, for instance, uses Causal Loop Diagrams as qualitative tools [28]. Causal Loop Diagrams elucidate systems components, their interrelationships, and feedback that can be used for learning and developing quantitative system dynamics models. ABM, however, does not have a predefined structure for model representation; models are primarily based on either highly theoretical or best-guess ad-hoc structures, which are problematic for model structural validation [16,29].

As a consequence, social and cognitive theories [30,31,32,33,34] often form the basis for translating qualitative data to empirical ABM [35]. Since social behavior is complex and challenging to comprehend, using social and cognitive theories helps determine the system’s expected behavior. Moreover, using theories streamlines data management and analysis for model development.

Another school of thought bases model development on stakeholder cognition. Rather than relying mainly on social theories, this approach focuses on extracting empirical information about system components and behaviors. Participatory or companion modeling [36], as well as role-playing games [11], are some of the conventional approaches to eliciting stakeholder knowledge for model development [23,24]. Stakeholders usually develop model structures in real time, while some modelers prefer to process stakeholders’ information after the discussions. For instance, [37] employs computer technologies to post-process stakeholder responses to develop a rule-induction algorithm for her ABM.

Stakeholders are assumed to be the experts of their systems, and using their knowledge in model building makes the model valid and reliable. However, stakeholder involvement is not always feasible; for instance, when modeling remote places or historical events. In such cases, modelers resort to information elicitation tools for information extraction. In the context of ABM, translating empirical textual data into agent architecture is complex and requires concrete algorithms and structures [25,38]. Therefore, modelers first explore the context of the narratives and then identify potential context-specific scopes. Determining narrative elements becomes straightforward once context and scopes are identified [38].

Many ABM modelers have formulated structures for organizing qualitative data for model development. For instance, [39] used the Institutional Analysis and Development framework for managing qualitative data in their Modeling Agent system based on Institutions Analysis (MAIA). MAIA comprises five structures: collective, constitutional, physical, operational, and evaluative. Information on agents is populated in the collective structure, while behavior rules and environment go in the constitutional and physical structures. Similar frameworks were introduced by [40,41], to name but a few. However, all these structures use manual, slow, and bias-prone data processing and extraction. A potential solution presented in this paper is to employ AI tools, such as NLP, for unsupervised information extraction for model development [30].

2. The Proposed Framework

In response to these limitations, our study proposes and tests a largely unsupervised domain-independent approach for developing ABM structures from informal semi-structured interviews using Python-based semantic and syntactic NLP tools (Figure 1). The method primarily uses syntactic NLP approaches for information extraction directly to the object-oriented programming (OOP) framework (i.e., agents, attributes, and actions/interactions) using widely accepted approaches in database design and OOP [42][61]. Database designers and OOP programmers generally exploit the syntactic structure of sentences for information extraction. Syntactic analysis usually treats the subject of a sentence as a class (an entity for a database) and the main verb as a method (a relationship for a database). Since the approach is not based on machine learning, it does not require large training data. The semantic analysis is limited to external static datasets such as WordNet (https://wordnet.princeton.edu/) (accessed on 8 July 2020) and VerbNet (https://verbs.colorado.edu/verbnet/) (accessed on 21 July 2020).

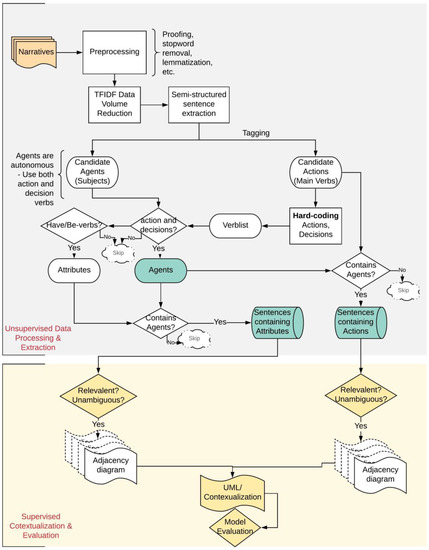

Figure 1. Largely unsupervised information extraction for ABM development. TFIDF: term frequency inverse document frequency.

In the proposed approach, information extraction covers the system's agents, their actions, and interactions, which are drawn from qualitative data for model development using syntactic and semantic NLP tools. As our information extraction approach is primarily unsupervised and requires little manual intervention, we argue that, in addition to being efficient, it reduces the potential for subjectivity and biases arising from modelers’ preconceptions about target systems.

The extracted information is then represented using Unified Modeling Language (UML) for an object-oriented model development platform. UML is a standardized graphical representation of software development [43][62]. It has a set of well-defined class and activity diagrams that effectively represent the inner workings of ABMs [44][63]. UML diagrams represent systems classes, their attributes, and actions. Identified candidate agents, attributes, and actions were manually arranged in the UML structure for supporting model software development. Although there are other forms of graphical ABM representations such as Petri Nets [45][64], Conceptual Model for Simulation [29], and sequence and activity diagrams [46][65], UML is natural in representing ABM, named by [47][66] the default lingua franca of ABM.

In our approach, model development is mainly unsupervised and involves the following steps (Figure 1):

- Unsupervised data processing and extraction;

- Data preprocessing (cleaning and normalization);

- Data volume reduction;

- Tagging and information extraction;

- Supervised contextualization and evaluation;

- UML/Model conceptualization;

- Model evaluation.

Steps one and two are required since semi-structured interviews often contain redundant or inflected texts that can bog down NLP analysis. Hence, removing non-informative contents from large textual data is highly recommended at the start of the analysis. NLP is well-equipped with stop words removal tools that can effectively remove redundant texts. Similarly, tools such as stemming and lemmatizing help normalize texts to their base forms [48][67].
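The cleaning and normalization steps can be illustrated with a minimal, standard-library-only sketch. It is not the authors' pipeline: the stop-word set and the `LEMMAS` lookup table below are tiny hypothetical stand-ins for the stop-word lists and lemmatizers that an NLP toolkit would supply, and `preprocess` is an illustrative name.

```python
import re

# Hypothetical, deliberately tiny stop-word list (an assumption for this
# sketch); a real analysis would use a full list from an NLP toolkit.
STOP_WORDS = {"the", "a", "an", "of", "and", "to", "is", "are", "in",
              "um", "you", "know", "well", "so"}

# Hypothetical lookup table standing in for a lemmatizer.
LEMMAS = {"farmers": "farmer", "grew": "grow", "grows": "grow", "crops": "crop"}


def preprocess(sentence):
    """Lowercase, tokenize, drop stop words, and map tokens to base forms."""
    tokens = re.findall(r"[a-z]+", sentence.lower())      # cleaning
    kept = [t for t in tokens if t not in STOP_WORDS]      # stop-word removal
    return [LEMMAS.get(t, t) for t in kept]                # normalization


print(preprocess("Um, you know, the farmers grew cotton and crops."))
```

In this toy example, the interview filler ("um", "you know") and function words are dropped, and inflected forms are reduced to their base forms before any further analysis.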

Step three is data volume reduction, which can tremendously speed up NLP analyses. Traditional volume reduction approaches usually rely on highly supervised keyword-based methods. Data analysts use predefined keywords to select and extract sentences perceived to be relevant [49][68]. Keyword identification generally requires a priori knowledge of the system and is often bias-prone. Consequently, we recommend a domain-independent unsupervised Term Frequency Inverse Document Frequency (TFIDF) approach [50][69] that eliminates manual keyword identification requirements. The approach assigns weights to individual words based on their uniqueness and machine-perceived importance. The TFIDF differentiates between important and common words by comparing their frequency in individual documents and across entire texts. Sentences that have high cumulative TFIDF scores are perceived to have higher importance. Given a document collection D, a word w, and an individual document d ∈ D, TFIDF can be defined as follows:

$$\mathrm{TFIDF}(w, d, D) = f_{w,d} \times \log\left(\frac{|D|}{f_{w,D}}\right)$$

where $f_{w,d}$ equals the number of times w appears in d, |D| is the size of the corpus, and $f_{w,D}$ equals the number of documents in which w appears in D [50][69].
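As a rough illustration of TFIDF-based sentence scoring, the sketch below multiplies the in-document count of a word by the logarithm of the corpus size divided by the number of documents containing the word, using the quantities defined above. Treating each tokenized sentence as its own document and summing over the distinct words of a sentence are assumptions made for this example, as are the function names `tfidf` and `sentence_score`.

```python
import math


def tfidf(word, doc, corpus):
    """Count of `word` in `doc` times log(|D| / number of docs containing it)."""
    f_wd = doc.count(word)                           # occurrences of w in d
    f_wD = sum(1 for d in corpus if word in d)       # documents containing w
    if f_wd == 0 or f_wD == 0:
        return 0.0
    return f_wd * math.log(len(corpus) / f_wD)


def sentence_score(doc, corpus):
    """Cumulative TFIDF over the distinct words of one tokenized sentence."""
    return sum(tfidf(w, doc, corpus) for w in set(doc))


# Toy corpus (assumption): each preprocessed sentence is one document.
corpus = [
    ["farmer", "grow", "cotton"],
    ["farmer", "sell", "cotton"],
    ["government", "train", "farmer"],
]

# Rank sentences from most to least informative.
for doc in sorted(corpus, key=lambda d: sentence_score(d, corpus), reverse=True):
    print(round(sentence_score(doc, corpus), 3), doc)
```

A word that occurs in every document (here, "farmer") receives a score of zero, so sentences made up of rarer words rank higher. Volume reduction would then keep only the top-ranked sentences, with the cutoff left to the analyst.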

Step four involves tagging and information extraction. Once the preprocessed data are reduced, we move to tagging agents, attributes, and the actions/interactions that can occur. We propose the following approaches for tagging agent architecture:

Candidate agents: Following the conventional approaches in database design and OOP [42][61], we propose identifying the subjects of sentences as candidate agents. For instance, the farmer in ‘the farmer grows cotton’ can be a candidate agent. NLP has well-developed tools such as part-of-speech taggers and named-entity taggers that can be used to detect subjects of sentences.

Candidate actions: The main verbs of sentences can become candidate actions. The main verbs need candidate agents as the subject of the sentences. For example, in the sentence ‘the farmer grows cotton,’ the farmer is a candidate agent, and the subject of the sentence; grows is the main verb and, hence, a candidate action.

Candidate attributes: Attributes are properties inherent to the agents. Sentences containing candidate agents as subjects and be or have as their primary (non-auxiliary) verbs provide attribute information, e.g., ‘the farmer is a member of a cooperative,’ and ‘the farmer has 10 ha of land.’ Additionally, the use of possessive words also indicates attributes, e.g., the cow in the sentence ‘my cow is very small’ is an attribute.

Candidate interactions: Main verbs indicating relationships between two candidate agents are identified as interactions. Hence the sentences containing two or more candidate agents provide information on interactions, e.g., ‘The government trains the farmers.’
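The four tagging rules above can be sketched on sentences that have already been parsed. The example assumes that an upstream syntactic parser has reduced each sentence to a hypothetical (subject, lemmatized main verb, object) triple; the parsing itself, as well as the possessive-word rule for attributes, is left out, and the function name `tag` is illustrative.

```python
from collections import defaultdict

# Primary verbs that signal attribute information rather than actions.
ATTRIBUTE_VERBS = {"be", "have"}


def tag(triples):
    """Tag (subject, verb, object) triples as agents, actions, attributes, interactions."""
    agents = {subj for subj, _, _ in triples}    # subjects become candidate agents
    actions = defaultdict(set)
    attributes = defaultdict(set)
    interactions = set()
    for subj, verb, obj in triples:
        if verb in ATTRIBUTE_VERBS:
            attributes[subj].add(obj)            # 'be'/'have' give attributes
            continue
        actions[subj].add(verb)                  # other main verbs are actions
        if obj in agents:
            interactions.add((subj, verb, obj))  # verb links two candidate agents
    return agents, dict(actions), dict(attributes), interactions


# Hypothetical parser output for the example sentences in the text.
triples = [
    ("farmer", "grow", "cotton"),
    ("farmer", "be", "member of a cooperative"),
    ("farmer", "have", "10 ha of land"),
    ("government", "train", "farmer"),
]

agents, actions, attributes, interactions = tag(triples)
print(sorted(agents))
print(actions)
print(attributes)
print(interactions)
```

Because the farmer appears as a subject elsewhere in the input, ‘the government trains the farmers’ is recorded both as an action of the government and as an interaction between two candidate agents.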

Since the data tagging is strictly unsupervised, false positives are likely to occur. The algorithm can over-predict agents, as the subjects of all the sentences are treated as candidate agents. In ABM, however, agents are defined as autonomous actors that act and make decisions. Hence, we propose to use a hard-coded list of action verbs (e.g., eat, grow, and walk) and decision verbs (e.g., choose, decide, and think) to filter agents from the list of candidate agents. Only the candidate agents that use both types of verbs qualify as agents. Candidate agents not using both types of verbs are categorized as entities that may be subjected to manual evaluation. Similarly, people use different terminologies that are semantically similar. We recommend using external databases such as WordNet to group semantically similar terminologies.
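The verb-based filter can be sketched as follows. The two verb sets contain only the examples named in the text, so they are placeholders for a fuller hard-coded list, and the function name `split_agents_entities` and the input mapping are assumptions made for illustration.

```python
# Example verbs taken from the text; a working list would be much longer.
ACTION_VERBS = {"eat", "grow", "walk"}
DECISION_VERBS = {"choose", "decide", "think"}


def split_agents_entities(verbs_by_candidate):
    """Keep as agents only the candidates that use both action and decision verbs."""
    agents, entities = [], []
    for candidate, verbs in verbs_by_candidate.items():
        acts = bool(verbs & ACTION_VERBS)
        decides = bool(verbs & DECISION_VERBS)
        (agents if acts and decides else entities).append(candidate)
    return sorted(agents), sorted(entities)


# Hypothetical tagging output: lemmatized main verbs observed per candidate agent.
candidates = {
    "farmer": {"grow", "decide"},
    "cow": {"eat"},
    "rain": {"fall"},
}

agents, entities = split_agents_entities(candidates)
print("agents:", agents)
print("entities:", entities)
```

Here only the farmer uses both an action verb and a decision verb, so the cow and the rain are set aside as entities for possible manual evaluation.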

Step five involves supervised contextualization and evaluation. While the unsupervised analysis reduces data volume and translates semi-structured interviews to the agent–action–attribute structure, noise can percolate to the outputs since the process is unsupervised. Additionally, the outputs need to be contextualized. Consequently, we suggest performing a series of supervised output filtration steps followed by manual contextualization and validation. The domain-independent unsupervised analysis extracts individual sentences that can sometimes be ambiguous or domain-irrelevant. Hence, the output should be filtered based on ambiguity and domain relevancy. Once output filtration is performed, contextual structures can be developed and validated with domain experts and stakeholders.