Your browser does not fully support modern features. Please upgrade for a smoother experience.

Please note this is a comparison between Version 2 by Sirius Huang and Version 1 by Xijian Fan.

Forest cover mapping is of paramount importance for environmental monitoring, biodiversity assessment, and forest resource management. In the realm of forest cover mapping, significant advancements have been made by leveraging fully supervised semantic segmentation models. However, the process of acquiring a substantial quantity of pixel-level labelled data is prone to time-consuming and labour-intensive procedures.

- semi-supervision

- forest cover mapping

- semi-supervised semantic segmentation

- self-training

1. Introduction

Forests play a vital role in the land ecosystem of the Earth. They are indispensable for conserving biodiversity, protecting watersheds, capturing carbon, mitigating climate change effects [1[1][2],2], maintaining ecological balance, regulating rainfall patterns, and ensuring the stability of large-scale climate systems [3,4][3][4]. As a result, the timely and precise monitoring and mapping of forest cover has emerged as a vital aspect of sustainable forest management and the monitoring of ecosystem transformations [5].

Traditionally, the monitoring and mapping of forest cover has primarily relied on field research and photo-interpretation techniques. However, these methods are limited by the extensive manpower required. With the advancements in remote sensing (RS) technology, the acquisition of large-scale, high-resolution forest imagery data has become possible without the need for physical contact and without causing harm to the forest environment. Taking advantage of RS imagery, numerous studies have proposed various methods for forest cover mapping, including decision trees [6], regression trees [7], maximum likelihood classifiers [8], random forest classification algorithms [9[9][10],10], support vector machines, spatio-temporal Markov random-field super-resolution mapping [11], and multi-scale spectral–spatial–temporal super-resolution mapping [12].

Recently, due to the growing prevalence of deep Convolutional Neural Networks (CNNs) [13] and semantic segmentation [14[14][15][16],15,16], there has been a notable shift in the research community towards utilising these techniques for forest cover mapping with RS imagery. CNNs have emerged as powerful tools for analysing two-dimensional images, employing their multi-layered convolution operations to effectively capture low-level spatial patterns (such as edges, textures, and shapes) and extract high-level semantic information. Meanwhile, semantic segmentation techniques enable the precise identification and extraction of different objects/regions in an image by classifying each image pixel into a specific semantic category, achieving pixel-level image segmentation. In the context of forest cover mapping, several existing methods have demonstrated the effectiveness of using semantic segmentation techniques. Bragagnolo et al. [17] proposed to integrate an attention block into the basic UNet network to segment the forest area using satellite imagery from South America. Flood et al. [18] also proposed a UNet-based network and achieved promising results in mapping the presence or absence of trees and shrubs in Queensland, Australia. Isaienkov et al. [19] directly employed the baseline U-Net model combined with Sentinel-2 satellite data to detect changes in Ukrainian forests. However, all these methods rely on fully supervised learning for semantic segmentation, which necessitates a substantial amount of labelled pixel data, resulting in a significant labelling expense. Semi-supervised learning [20,21,22][20][21][22] has emerged as a promising approach to address the aforementioned challenges. It involves training models using a combination of limited labelled data and a substantial amount of unlabelled data, which reduces the need for manual annotations while still improving the performance of the model. Several research studies [23,24][23][24] have explored the application of semi-supervised learning in semantic segmentation for land-cover-mapping tasks in RS. While these studies have assessed the segmentation of forests to some extent, their focus has predominantly been on forests situated in urban or semi-natural areas, limiting their performance in densely forested natural areas. Moreover, there are unique challenges associated with utilising satellite RS imagery specifically for forest cover mapping:

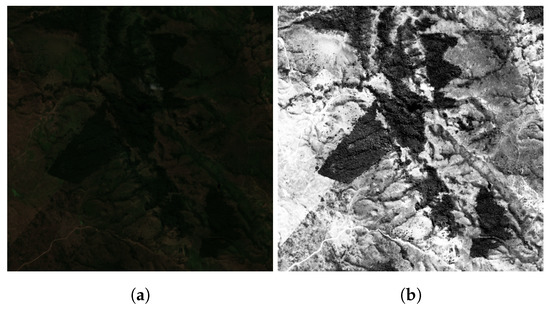

Challenge 1: As illustrated in Figure 1a, satellite remote sensing (RS) forest images often face problems such as variations in scene illumination and atmospheric interference during the image acquisition process. These factors can lead to colour deviations and distortions, resulting in poor colour fidelity and low contrast. Therefore, it becomes essential to employ image enhancement techniques to improve visualisation and reveal more details. This enhancement facilitates the ability of CNNs to effectively capture spatial patterns.

Figure 1. Visualisation of images after application of different processing methods: (a) original image; and (b) image enhanced using contrast enhancement.

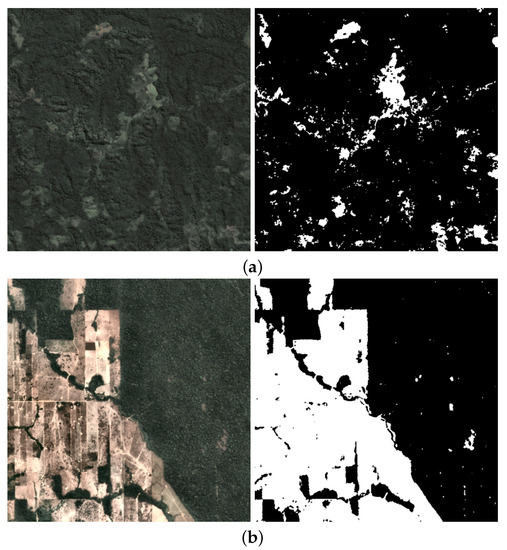

Challenge 2: Due to the high density of natural forest cover and the similar reflectance characteristics between forest targets and other non-forest targets, e.g., grass and the shadow of vegetation, the boundaries between forest and non-forest areas often become unclear. As a result, it becomes challenging to accurately distinguish and delineate the details and edges of the regions of interest, as depicted in Figure 2a.

Figure 2. Visualisation of balanced and imbalanced samples (where black represents non-forest, and white represents forest): (a) balanced sample and (b) imbalanced sample.

Challenge 3: For unknown forest distributions, there are two scenarios: imbalanced (as illustrated in Figure 2) or balanced datasets. Current methods face challenges in effectively handling datasets with different distributions, resulting in poor model generalisation.

2. Semi-Supervised Semantic Segmentation

Consistency regularisation and pseudo-labelling are two main categories of methods in the field of semi-supervised semantic segmentation [21,22][21][22]. Consistency regularisation methods aim to improve model performance by promoting consistency among diverse predictions for the same image. This is achieved by introducing perturbations to either the input images or the models themselves. For instance, the CCT approach [25] utilises an auxiliary decoder structure that incorporates multiple robust perturbations at both the feature level and decoder output stage. These perturbations are strategically employed to enforce consistency in the model predictions, ensuring that the predictions remain consistent even in the presence of perturbations. Furthermore, Liu et al. proposed PS-MT [26], which introduces innovative extensions to improve upon the mean-teacher (MT) model. These extensions include the introduction of an auxiliary teacher and the replacement of the MT’s mean square error (MSE) loss with a more stringent confidence-weighted cross-entropy (Conf-CE) loss. These enhancements greatly enhance the accuracy and consistency of the predictions in the MT model. Building upon these advancements, Abulikemu Abuduweili et al. [27] proposed a novel method that leverages adaptive consistency regularisation to effectively combine pre-trained models and unlabelled data, improving model performance. However, consistency regularisation typically relies on perturbation techniques that impose certain requirements on dataset quality and distribution, as well as numerous challenging hyperparameters to fine-tune. Contrary to the consistency regularisation methods, pseudo-labelling techniques, exemplified by self-training [28], leverage predictions from unlabelled data to generate pseudo-labels, which are then incorporated into the training process, effectively expanding the training set and enriching the model with more information. In this context, Yi Zhu et al. [29] proposed a self-training framework for semantic segmentation that utilises pseudo-labels from unlabelled data, addressing data imbalance through centroid sampling and focusing on optimising computational efficiency. However, pseudo-labelling methods offer more stable training but have limitations in achieving substantial improvements in model performance, leading to under-utilisation of the potential of unlabelled data.3. Semi-Supervised Semantic Segmentation in RS

Several studies have investigated the application of semi-supervised learning in RS. Lucas et al. [23] proposed Sourcerer, a deep-learning-based technique for semi-supervised domain adaptation in land cover mapping from satellite image time-series data. Sourcerer surpasses existing methods by effectively leveraging labelled data from a source domain and adapting the model to the target domain using a novel regulariser, even with limited labelled target data. Chen et al. [30] introduced SemiRoadExNet, a novel semi-supervised road extraction network based on a Generative Adversarial Network (GAN). The network efficiently utilises both labelled and unlabelled data by generating road segmentation results and entropy maps. Zou et al. [31] introduced a novel pseudo-labelling approach for semantic segmentation, improving the training process using unlabelled or weakly labelled data. Through the intelligent combination of diverse sources and robust data augmentation, the proposed strategy demonstrates effective consistency training, showing its effectiveness for data of low or high density. On the other hand, Zhang et al. [32] proposed a semi-supervised deep learning framework for the semantic segmentation of high-resolution RS images. The framework utilises transformation consistency regularisation to make the most of limited labelled samples and abundant unlabelled data. However, the application of semi-supervised semantic segmentation methods in forest cover mapping has not been explored.References

- Van Mantgem, P.J.; Stephenson, N.L.; Byrne, J.C.; Daniels, L.D.; Franklin, J.F.; Fulé, P.Z.; Harmon, M.E.; Larson, A.J.; Smith, J.M.; Taylor, A.H.; et al. Widespread increase of tree mortality rates in the western United States. Science 2009, 323, 521–524.

- Zhu, Y.; Li, D.; Fan, J.; Zhang, H.; Eichhorn, M.P.; Wang, X.; Yun, T. A reinterpretation of the gap fraction of tree crowns from the perspectives of computer graphics and porous media theory. Front. Plant Sci. 2023, 14, 1109443.

- Boers, N.; Marwan, N.; Barbosa, H.M.; Kurths, J. A deforestation-induced tipping point for the South American monsoon system. Sci. Rep. 2017, 7, 41489.

- Li, X.; Wang, X.; Gao, Y.; Wu, J.; Cheng, R.; Ren, D.; Bao, Q.; Yun, T.; Wu, Z.; Xie, G.; et al. Comparison of Different Important Predictors and Models for Estimating Large-Scale Biomass of Rubber Plantations in Hainan Island, China. Remote Sens. 2023, 15, 3447.

- Lewis, S.L.; Lopez-Gonzalez, G.; Sonké, B.; Affum-Baffoe, K.; Baker, T.R.; Ojo, L.O.; Phillips, O.L.; Reitsma, J.M.; White, L.; Comiskey, J.A.; et al. Increasing carbon storage in intact African tropical forests. Nature 2009, 457, 1003–1006.

- Hansen, M.C.; Stehman, S.V.; Potapov, P.V.; Loveland, T.R.; Townshend, J.R.; DeFries, R.S.; Pittman, K.W.; Arunarwati, B.; Stolle, F.; Steininger, M.K.; et al. Humid tropical forest clearing from 2000 to 2005 quantified by using multitemporal and multiresolution remotely sensed data. Proc. Natl. Acad. Sci. USA 2008, 105, 9439–9444.

- Sexton, J.O.; Song, X.P.; Feng, M.; Noojipady, P.; Anand, A.; Huang, C.; Kim, D.H.; Collins, K.M.; Channan, S.; DiMiceli, C.; et al. Global, 30-m resolution continuous fields of tree cover: Landsat-based rescaling of MODIS vegetation continuous fields with lidar-based estimates of error. Int. J. Digit. Earth 2013, 6, 427–448.

- Hamunyela, E.; Reiche, J.; Verbesselt, J.; Herold, M. Using space-time features to improve detection of forest disturbances from Landsat time series. Remote Sens. 2017, 9, 515.

- Yin, H.; Khamzina, A.; Pflugmacher, D.; Martius, C. Forest cover mapping in post-Soviet Central Asia using multi-resolution remote sensing imagery. Sci. Rep. 2017, 7, 1375.

- Zhang, P.; Ke, Y.; Zhang, Z.; Wang, M.; Li, P.; Zhang, S. Urban land use and land cover classification using novel deep learning models based on high spatial resolution satellite imagery. Sensors 2018, 18, 3717.

- Zhang, Y.; Li, X.; Ling, F.; Atkinson, P.M.; Ge, Y.; Shi, L.; Du, Y. Updating Landsat-based forest cover maps with MODIS images using multiscale spectral-spatial-temporal superresolution mapping. Int. J. Appl. Earth Obs. Geoinf. 2017, 63, 129–142.

- Flores, E.; Zortea, M.; Scharcanski, J. Dictionaries of deep features for land-use scene classification of very high spatial resolution images. Pattern Recognit. 2019, 89, 32–44.

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 53.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Computer Vision—ECCV 2018; Lecture Notes in Computer Science; Springer International Publishing: Cham, Germany, 2018; pp. 833–851.

- Bragagnolo, L.; da Silva, R.; Grzybowski, J. Amazon forest cover change mapping based on semantic segmentation by U-Nets. Ecol. Inform. 2021, 62, 101279.

- Flood, N.; Watson, F.; Collett, L. Using a U-net convolutional neural network to map woody vegetation extent from high resolution satellite imagery across Queensland, Australia. Int. J. Appl. Earth Obs. Geoinf. 2019, 82, 101897.

- Isaienkov, K.; Yushchuk, M.; Khramtsov, V.; Seliverstov, O. Deep Learning for Regular Change Detection in Ukrainian Forest Ecosystem with Sentinel-2. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 364–376.

- Papandreou, G.; Chen, L.C.; Murphy, K.P.; Yuille, A.L. Weakly-and semi-supervised learning of a deep convolutional network for semantic image segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1742–1750.

- Peláez-Vegas, A.; Mesejo, P.; Luengo, J. A Survey on Semi-Supervised Semantic Segmentation. arXiv 2023, arXiv:cs.CV/2302.09899.

- Van Engelen, J.E.; Hoos, H.H. A survey on semi-supervised learning. Mach. Learn. 2020, 109, 373–440.

- Lucas, B.; Pelletier, C.; Schmidt, D.; Webb, G.I.; Petitjean, F. A bayesian-inspired, deep learning-based, semi-supervised domain adaptation technique for land cover mapping. Mach. Learn. 2021, 112, 1941–1973.

- Hong, D.; Yokoya, N.; Ge, N.; Chanussot, J.; Zhu, X.X. Learnable manifold alignment (LeMA): A semi-supervised cross-modality learning framework for land cover and land use classification. ISPRS J. Photogramm. Remote Sens. 2019, 147, 193–205.

- Ouali, Y.; Hudelot, C.; Tami, M. Semi-supervised semantic segmentation with cross-consistency training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12674–12684.

- Liu, Y.; Tian, Y.; Chen, Y.; Liu, F.; Belagiannis, V.; Carneiro, G. Perturbed and strict mean teachers for semi-supervised semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4258–4267.

- Abuduweili, A.; Li, X.; Shi, H.; Xu, C.Z.; Dou, D. Adaptive Consistency Regularization for Semi-Supervised Transfer Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 6923–6932.

- Zoph, B.; Ghiasi, G.; Lin, T.Y.; Cui, Y.; Liu, H.; Cubuk, E.D.; Le, Q. Rethinking Pre-training and Self-training. In Proceedings of the Advances in Neural Information Processing Systems, Online, 6–12 December 2020; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: San Francisco, CA, USA, 2020; Volume 33, pp. 3833–3845.

- Zhu, Y.; Zhang, Z.; Wu, C.; Zhang, Z.; He, T.; Zhang, H.; Manmatha, R.; Li, M.; Smola, A.J. Improving Semantic Segmentation via Efficient Self-Training. IEEE Trans. Pattern Anal. Mach. Intell. 2021, early access. 1.

- SemiRoadExNet: A semi-supervised network for road extraction from remote sensing imagery via adversarial learning. ISPRS J. Photogramm. Remote Sens. 2023, 198, 169–183.

- Zou, Y.; Zhang, Z.; Zhang, H.; Li, C.L.; Bian, X.; Huang, J.B.; Pfister, T. Pseudoseg: Designing pseudo labels for semantic segmentation. arXiv 2020, arXiv:2010.09713.

- Zhang, B.; Zhang, Y.; Li, Y.; Wan, Y.; Guo, H.; Zheng, Z.; Yang, K. Semi-supervised Deep Learning via Transformation Consistency Regularization for Remote Sensing Image Semantic Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 5782–5796.

More