Please note this is a comparison between Version 1 by Kinga Lis and Version 2 by Rita Xu.

The home pregnancy test is the most frequently performed laboratory test for self-diagnosis (home diagnostic test). It is also the first laboratory test that has been adapted for self-use at home.

- antibodies

- pregnancy test

- Aschheim–Zondek

1. Introduction

The discovery of antibodies played a significant role in the development of in vitro diagnostic methods. Knowledge of their properties and their skillful use became the basis for modern immunochemical techniques. These techniques, in turn, have revolutionized laboratory diagnostics and found wide clinical application [1]. An in vitro pregnancy test is defined as a procedure for determining the presence or absence of pregnancy based on in vitro diagnostic techniques [2]. The history of the pregnancy test is an example of the search for effective ways to diagnose various physiological and pathological conditions using non-invasive diagnostic techniques. It leads from tests derived from careful observation of the surrounding world, through simple diagnostic techniques based on organoleptic assessment and biological tests on animals, to techniques based on immunological reactions.

2. History of Pregnancy Test

For centuries, women have tried to recognize pregnancy reliably and as early as possible, although it was not always as easy as it is today. Women have always preferred to check for pregnancy privately, in the simplest and most reliable way possible. Although there were no scientific methods for detecting early pregnancy until the 1920s, and the first home pregnancy tests did not appear in the United States until 1976, the history of “laboratory” pregnancy tests began in ancient Egypt [3][4].

2.1. Ancient Egypt

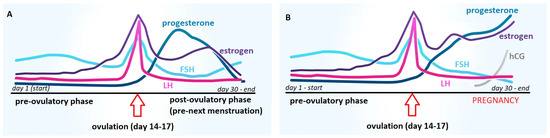

The history of the pregnancy test dates back to ancient Egypt. Ancient Egyptian physicians rightly noticed that the best material for laboratory tests to detect pregnancy is urine [4]. Four thousand years ago, the Egyptians developed the first in vitro diagnostic test to detect a unique substance present, according to their observations, only in the urine of pregnant women. It is the most famous and advanced of the ancient pregnancy tests. Egyptian papyri dating from 1500–1300 B.C.E. describe the test. It consisted of watering cereal seeds (wheat and barley) with the urine of the examined woman every day for about 10 days. Sprouting of the grains meant that the woman was pregnant; no germination was a negative result (no pregnancy). According to the available descriptions, the test was used not only to detect pregnancy but also to determine the sex of the developing child. According to the ancient Egyptians, if the wheat germinated before the barley, it indicated a girl, and if the barley germinated first, a boy was to be born [4][5][6][7][8]. It is worth noting, however, that translations of this papyrus disagree both on the cereal species used in the test and on how their germination was interpreted in relation to the sex of the child. Ghalioungui et al. [7], analyzing translations of the text made by various Egyptologists, note that three different grains are named (wheat, barley, and buckwheat). Also, the germination of wheat is not always considered an indicator of the female sex of the fetus, since buckwheat is also mentioned as a cereal that indicates a female fetus. However, it can generally be assumed that the test itself, according to the available data, was performed as described above and that the sprouting of the cereals meant that the urine was from a pregnant woman.
The ancient Egyptian test of sprouting grains has been verified several times. In 1933, Manger [9] conducted an experiment on a sample of 100 pregnant women whose urine he used to water grains of wheat and barley. On the basis of the results, he concluded that the urine of pregnant women does accelerate the germination of cereals and that faster growth of barley than wheat means a girl, while neither accelerated nor delayed growth of barley means a boy. He estimated the effectiveness of the test in recognizing the sex of the child at 80%, although his conclusion was not consistent with the observations of the ancient Egyptians as to the relationship between the type of germinating grain and the sex of the fetus. In 1963, Ghalioungui et al. [7] performed an analogous experiment with 40 urine samples from pregnant women, using two types of control: urine samples from non-pregnant women and men, and distilled water. They found that 70% of the urine samples from pregnant women stimulated the germination of cereal grains. None of the urine samples from non-pregnant women or men showed such activity. Cereal germination was unrelated to fetal sex [7]. The ancient Egyptians thus established empirically that the urine of pregnant women could stimulate seed germination. This is probably due to the increased concentration of estrogen in the urine of women in the early stages of pregnancy (Figure 1). Human estrogens, like phytoestrogens, can affect the initiation of germination and stimulate plant development [10].

Figure 1. Comparison of the dynamics of hormonal changes during the menstrual cycle in women: (A) without conception and (B) with conception; FSH: follicle-stimulating hormone, LH: luteinizing hormone, hCG: human chorionic gonadotropin.

2.2. From Hippocrates to Galen

In ancient Greece (ca. 400 B.C.), the methods of detecting pregnancy used by both the Hippocratic and Hellenic schools were very similar to those used by the Egyptians. It was still believed that the urine of pregnant women contained life-giving components that stimulate seed germination [11][12]. However, methods that directly interfered with the woman’s body were also readily used. One such method was the onion test. In this test, an onion was inserted into the woman’s vagina and left there overnight. If, in the morning, the woman’s breath smelled of onions, it meant that she was not pregnant. It was believed that if the woman was pregnant, the smell of onion from the vagina could not reach her mouth. Pregnancy was also diagnosed when a woman’s stomach became distended and painful after she consumed honey dissolved in water. The Greeks, like the ancient Egyptians, also believed that if a woman felt nauseous after drinking milk or from the smell of beer, she was pregnant [6][12]. These theories spread to all European countries along with the development of trade, and the tests were widely used until the Middle Ages.

2.3. From the Middle Ages through the Seventeenth Century

Perhaps slightly more empirical techniques were used in the Middle Ages. Visual assessment of the physical characteristics of urine (e.g., color, clarity) became a popular method of detecting pregnancy at the time. Doctors known as “Piss Prophets” appeared in Europe; they specialized in uroscopy, the diagnosis of many diseases based on the visual assessment of a urine sample. Medieval uroscopy was a medical practice that involved the visual examination of urine for pus, blood, changes in color or translucence, or other abnormalities. The roots of uroscopy go back to ancient Egypt, Babylon, and India, and it was especially important in Byzantine medicine. These techniques were commonly used by Avicenna [12]. According to the guidelines of medieval uroscopy, the urine of a pregnant woman was clear and light lemon in color, turning whitish, with a foamy surface [6][12][13][14]. Other urine tests were also used in the Middle Ages. For example, it was believed that milk floated on the surface of a pregnant woman’s urine. At the time, some physicians believed that if a needle placed in a vial of urine turned rust-red or black, the woman was probably pregnant [6][12][13][14]. Another popular test involved mixing wine with urine and observing the changes [14][15]. Today, we know that many of these tests relied on the presence of protein in the urine and on changes in the urine pH of pregnant women caused by the hormonal changes associated with pregnancy. Indeed, protein-containing urine can be cloudy and frothy. Alcohol, on the other hand, reacts with some proteins in the urine, precipitating them. A more alkaline urine pH can darken some metals or remove rust. Pregnant women have higher levels of protein in their urine than non-pregnant women, and their urine pH is more alkaline, so these tests may have been quite effective for the time. Various provocative pregnancy tests were also used.
Some doctors advised a woman suspected of being pregnant to drink a sweet drink before going to bed. If she complained of pain in the navel in the morning, the pregnancy was confirmed. In the 17th century, some doctors gave a woman a ribbon dipped in her urine to sniff. If the smell of this ribbon made the woman feel sick or vomit, it meant that she was probably pregnant [5][15]. In another test from the 17th century, a ribbon dipped in a woman’s urine was burned in a candle flame. If the smell of the smoke made the woman feel sick, it meant that she was probably pregnant [16]. It is difficult to find any logical explanation for these tests other than the natural tendency of pregnant women to feel nauseous, which is due to hormonal changes caused by pregnancy.

2.4. Nineteenth Century

The nineteenth century did not bring anything new in this area; the main material for study was still urine. Nevertheless, researchers tried to approach the problem in a more rational way. Attempts were made to link findings from the microscopic examination of urine (bacteria or crystals) with pregnancy. In the 19th century, French doctors used a urine test called the “Kyesteine pellicle” as a method of pregnancy detection. A sticky film was observed to form on the surface of the urine of pregnant women after it had stood in a vessel for several days; it was called the early pregnancy membrane [17]. The diagnosis of pregnancy, however, was based mainly on the observation of physical changes in the woman’s body and the presence of characteristic symptoms of this condition, such as morning sickness [15].

2.5. From the 1920s to the 1960s

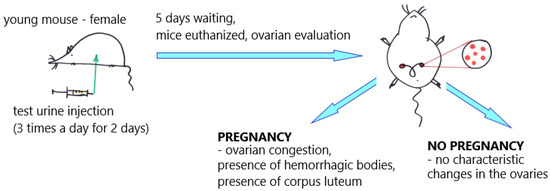

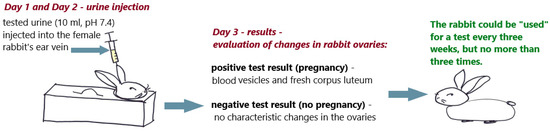

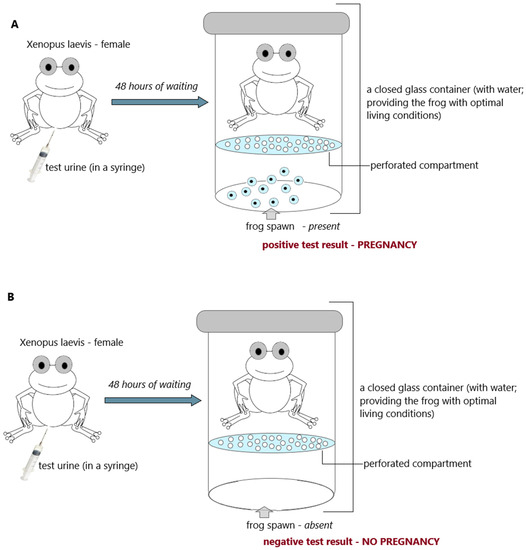

The first major steps towards constructing a reliable pregnancy test became possible in the 1920s after the discovery of a hormone present only in the urine of pregnant women. It was called human chorionic gonadotropin (hCG). This discovery finally provided a reliable, empirical marker that could be used for testing purposes. Since then, all pregnancy tests in use have been based on detecting the presence or absence of hCG in the urine [18][19]. Until the 1960s, pregnancy tests were mainly biological methods involving laboratory animals, chiefly mice, rabbits, and a specific species of toads [17][18][19][20][21][22][23][24][25][26][27][28][29][30][31][32][33][34][35][36][37][38][39][40][41][42]. In 1927/1928, German scientists Selmar Aschheim and Bernhard Zondek developed the first biological pregnancy test, known as the Aschheim–Zondek test (A–Z test), which detected the presence of hCG in the urine (Figure 2).

Figure 2. Aschheim–Zondek test (mouse); an illustrative scheme. The "red dots" illustrate changes in the ovaries of mice.

Figure 3. Friedman test (rabbit); an illustrative scheme.

Figure 4. Hogben test (frog; Xenopus laevis); (A) positive result (pregnancy) and (B) negative result (no pregnancy); an illustrative scheme.

2.6. 1960s—Agglutination Tests

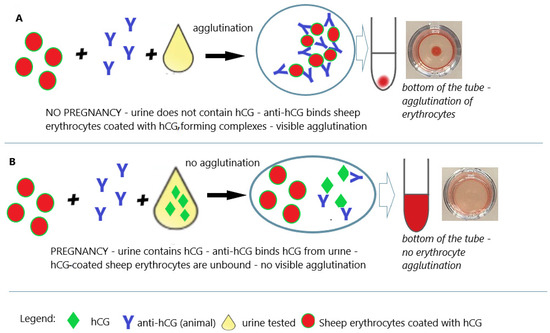

Animal bioassays (mice, rats, rabbits, and frogs) were the only practical way to detect pregnancy for four decades. The year 1960 marked the beginning of the era of immunological tests, which also found application in the detection of early pregnancy, allowing animal testing to be abandoned and enabling the development of rapid, sensitive, and specific home tests [20]. The ability to produce polyclonal antibodies (by immunization of animals) and use them as diagnostic reagents revolutionized laboratory diagnostics in general and opened up a wide range of possibilities [45]. In the early 1960s, Wide and Gemzell announced the Wide–Gemzell test, an immunological hemagglutination inhibition method for diagnosing pregnancy [46]. This agglutination inhibition test was the first pregnancy test to open the way for tests for home use [46]. These tests were based on agglutination inhibition reactions of chorionic gonadotropin-coated sheep blood cells (Figure 5). Because the procedure used cells rather than live animals, it was an immunoassay rather than a bioassay.

Figure 5. Pregnancy test based on the hemagglutination inhibition test. (A) Negative result (no pregnancy); (B) positive result (pregnancy); an illustrative scheme.
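The read-out logic of an agglutination inhibition test can be counterintuitive, because a positive result is the absence of clumping. The following is a minimal toy sketch of that logic, not a description of the Wide–Gemzell protocol itself. It assumes a simplified two-step procedure: urine is first mixed with anti-hCG antiserum, then hCG-coated cells are added. The function name, the arbitrary units, and the `antibody_capacity` parameter are hypothetical and chosen only for illustration.

```python
def hemagglutination_inhibition(urine_hcg, antibody_capacity=1.0):
    """Toy model of an agglutination inhibition pregnancy test.

    urine_hcg: amount of hCG in the urine sample (arbitrary, hypothetical units).
    antibody_capacity: amount of hCG the anti-hCG antiserum can bind (same units).
    """
    # Step 1 (assumed): urine is mixed with anti-hCG antiserum.
    # Any hCG in the urine occupies antibody binding sites.
    free_antibody = max(0.0, antibody_capacity - urine_hcg)

    # Step 2 (assumed): hCG-coated sheep red cells are added.
    # Antibody left unoccupied cross-links the coated cells (agglutination).
    agglutination = free_antibody > 0

    # Inhibited agglutination means hCG was present, i.e. a positive result.
    return {"agglutination": agglutination, "pregnant": not agglutination}


if __name__ == "__main__":
    print(hemagglutination_inhibition(0.0))  # no hCG in the sample
    print(hemagglutination_inhibition(2.0))  # hCG exceeds antibody capacity
```

In this simplified model, a sample without hCG leaves the antibody free to clump the coated cells (negative result), while a sample with enough hCG neutralizes the antibody and no clumping occurs (positive result). Real assays depend on reagent titration and incubation conditions that are not modeled here.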

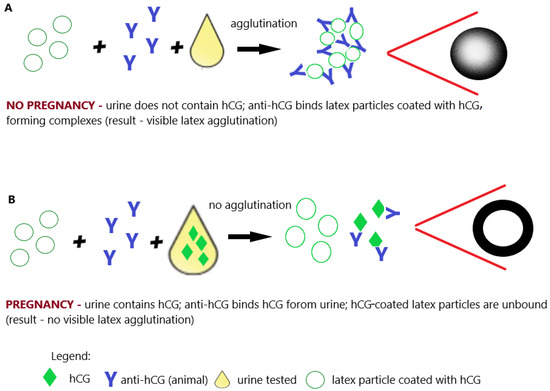

Figure 6. Pregnancy test based on the latex agglutination inhibition (slide) test. (A) Negative result (no pregnancy); (B) positive result (pregnancy); an illustrative scheme.

2.7. 1970s—Time of the Radioimmunoassay

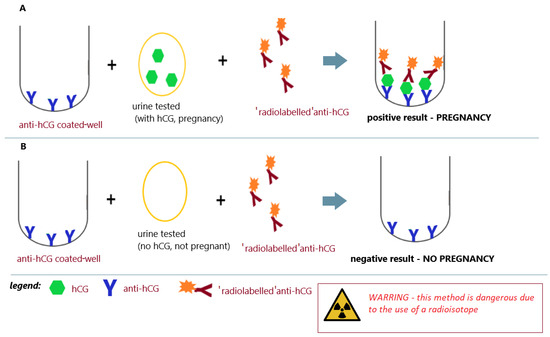

In the 1970s, the structure of the hCG molecule was precisely determined. This allowed the construction of immunological tests that unequivocally distinguish hCG from luteinizing hormone (LH) [53][54][55][56]. Further research (1980s/1990s) into the effects of hormones on reproduction led to the development of a way to identify and measure hCG [57][58]. This knowledge, along with the development of immunology and immunochemistry, became the basis for the construction of specific pregnancy tests that can clearly detect pregnancy in its early stages. In 1966, Midgley first measured hCG and luteinizing hormone using a radioimmunoassay with a measuring range of 25 IU/L to 5000 IU/L [59]. Early RIA techniques were unable to distinguish between LH and hCG because the antibodies used cross-reacted with both hormones. To overcome this cross-reaction, the assay was performed at a concentration at which LH did not interfere, but the sensitivity for hCG deteriorated as a result. In 1972, Vaitukaitis et al. [60] published their paper describing the hCG beta-subunit radioimmunoassay that could finally distinguish between hCG and LH, making it potentially useful as an early test for pregnancy. Antiserum directed against the beta-subunit of human chorionic gonadotropin (β-hCG) was used in the assay. With this strategy, it was possible to selectively measure hCG in samples containing both human pituitary luteinizing hormone (hLH) and hCG. The high levels of hLH observed in samples taken during the luteinizing phase of the menstrual cycle or from castrated patients had no effect on the specific detection of β-hCG by this radioimmunoassay (Figure 7A,B) [60].

Figure 7. Radioimmunoassay for pregnancy; (A) positive result (pregnancy) and (B) negative result (no pregnancy); an illustrative scheme.

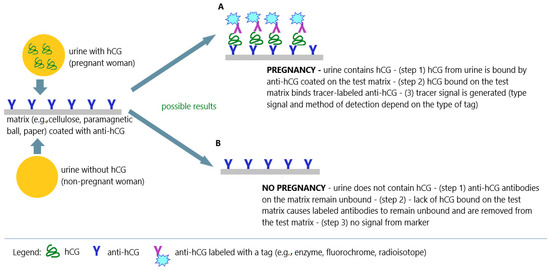

Figure 8. Immunochemical techniques in pregnancy tests; (A) positive result (pregnancy); (B) negative result (no pregnancy); an illustrative scheme.