
Please note this is a comparison between Version 2 by Rita Xu and Version 1 by Suhare Solaiman.

A framework for simultaneously tracking and recognizing drone targets using a low-cost, small millimeter-wave (mmWave) radar is presented. The radar collects the signals reflected by multiple targets in its field of view, including drone and non-drone targets. Because different targets produce different reflection patterns, analyzing the received signals allows multiple targets to be distinguished.

- mmWave radar

- cloud points

- target tracking

1. Introduction

In recent years, unmanned aerial vehicles (UAVs), such as drones, have received significant attention for performing tasks in different domains because of their low cost, wide coverage, and high mobility, as well as their ability to perform different operations using small-scale sensors [1]. Owing to technological advancements, drones can now be operated from smartphones instead of traditional remote controllers. In addition, drones can stream live video and capture images, as well as make autonomous decisions based on these data. Consequently, artificial intelligence techniques have been utilized in providing drone-based civilian and military services [2]. In this context, drones have been adopted for express shipping and delivery [3,4,5], natural disaster prevention [6,7], geographical mapping [8], search and rescue operations [9], aerial photography for journalism and filmmaking [10], providing essential materials [11], border control surveillance [12], and building safety inspection [13]. Although drone technology offers a multitude of benefits, it also raises concerns about how it will be used in the future. Drones pose many potential threats, including invasion of privacy, smuggling, espionage, flight disruption, human injury, and terrorist attacks. These threats compromise aviation operations and public safety. Therefore, it has become increasingly necessary to detect, track, and recognize drone targets and to make decisions in certain situations, such as detonating or jamming unwanted drones.

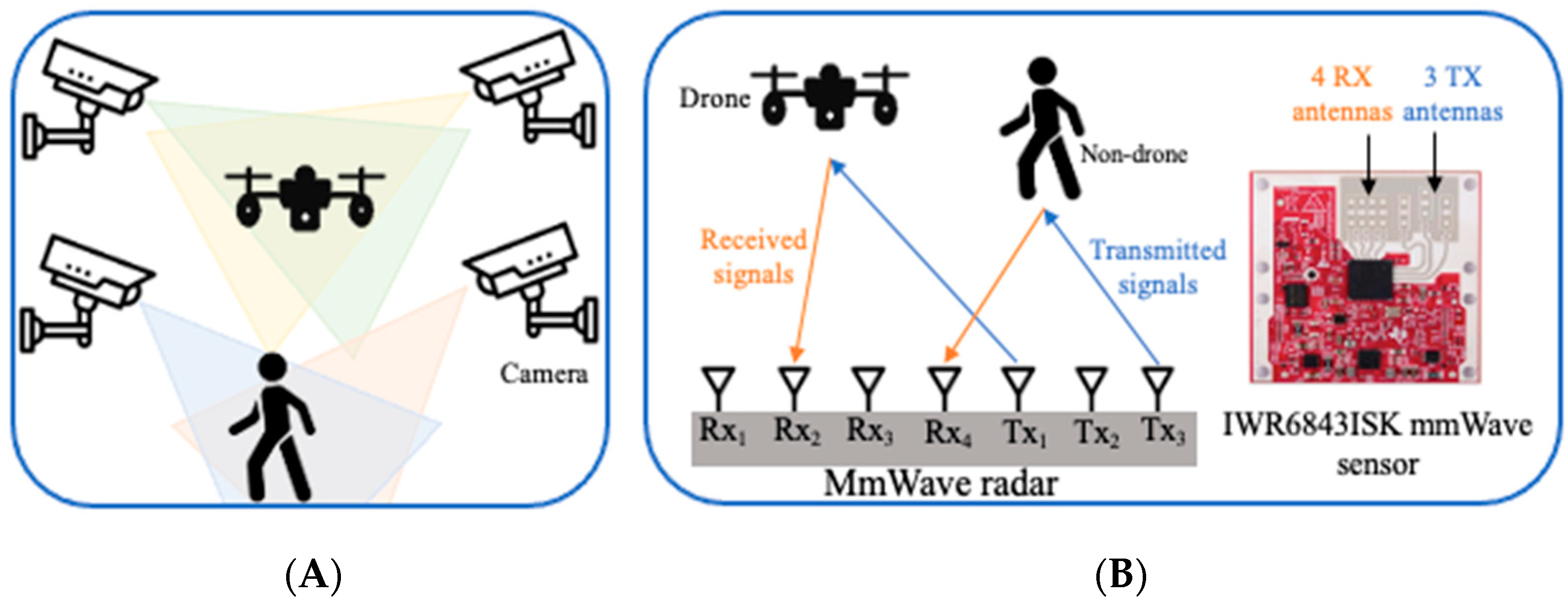

The detection of unwanted drones poses significant challenges to observation systems, especially in urban areas, because drones are small and move at different speeds and altitudes compared with other moving targets [2]. For target recognition, optic-based systems that rely on cameras provide more detailed information than radio-frequency (RF)-based systems, but they require a clear frontal view as well as good lighting and weather conditions [14,15], as shown in Figure 1A. Moreover, both residential and business environments are reluctant to accept cameras for target recognition because of their intrusive nature [16]. Although RF-based systems are less intrusive, RF signals are not as expressive or intuitive as images, and humans are often unable to interpret them directly. Preprocessing RF signals is therefore challenging, because the raw data must be translated into intuitive information for target recognition. RF-based systems such as WiFi, ultrasound sensors, and millimeter-wave (mmWave) radar have been shown to be useful for a variety of observation applications and are not affected by light or weather conditions [17]. However, WiFi sensing requires a delicate transmitter and receiver setup and is limited to situations in which targets move between the transmitter and the receiver [18]. Because ultrasound signals are short-range, they are usually used to detect close targets and are affected by blocking or by interference from other nearby transmitters [14].

Figure 1. (A) An optic-based system; (B) in contrast, the proposed framework is based on an mmWave radar with three transmitting antennas and four receiving antennas.
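As a rough illustration of why several transmitting and receiving antennas are useful, the sketch below assumes a co-located MIMO configuration in which every transmitter/receiver pair acts as one virtual receive element, so three transmitters and four receivers yield twelve virtual channels. The antenna positions (all on one horizontal line, half-wavelength receive spacing, two-wavelength transmit spacing) are hypothetical and do not necessarily match the radar in Figure 1; the angular-resolution expression 2/(N cos θ) is the usual approximation for a uniform array with half-wavelength spacing.

```python
import numpy as np


def virtual_array(tx_positions, rx_positions):
    """Virtual-element positions of a co-located MIMO array.

    Each transmitter/receiver pair behaves like a single virtual receive
    element located at the sum of the two antenna positions.
    """
    tx = np.asarray(tx_positions, dtype=float)
    rx = np.asarray(rx_positions, dtype=float)
    # Outer sum over all TX/RX combinations, flattened to a 1D array.
    return (tx[:, None] + rx[None, :]).ravel()


def angular_resolution_deg(n_elements, theta_deg=0.0):
    """Approximate angular resolution of a uniform lambda/2-spaced array.

    theta_res ~= 2 / (N * cos(theta)) radians, returned in degrees.
    """
    return np.rad2deg(2.0 / (n_elements * np.cos(np.deg2rad(theta_deg))))


# Hypothetical layout in units of lambda/2: 4 RX spaced lambda/2 apart and
# 3 TX spaced 2*lambda (4 units) apart, all along the horizontal axis.
rx = [0, 1, 2, 3]
tx = [0, 4, 8]
virt = virtual_array(tx, rx)
print(len(virt))  # number of virtual channels
print(np.array_equal(np.sort(virt), np.arange(12)))  # gap-free virtual array?
print(f"{angular_resolution_deg(len(virt)):.2f} deg at boresight")
```

With only the four physical receivers, the same approximation would give 2/4 rad (about 28.6°), so under these assumptions the virtual array improves the angular resolution by a factor of three.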

The large bandwidth of mmWave radar provides a high range resolution that is independent of distance, which facilitates not only the detection and tracking of moving targets but also their recognition [18]. Furthermore, an mmWave radar uses at least two antennas, at least one for transmitting and one for receiving signals, so the collected signals can serve multiple observation operations [18]. Rather than true-color images, mmWave signals can represent multiple targets as reflected three-dimensional (3D) cloud points, micro-Doppler signatures, RF-intensity-based spatial heat maps, or range-Doppler localizations [19].
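The distance-independent range resolution and the range-Doppler representation mentioned above can be illustrated with a minimal, noise-free simulation. This is a sketch under stated assumptions: the radar is assumed to be a frequency-modulated continuous-wave (FMCW) radar, and the carrier frequency (77 GHz), sweep bandwidth (4 GHz), chirp duration (50 µs), and frame size (64 chirps of 256 samples) are hypothetical values, not parameters reported for this framework. The range resolution c/(2B) depends only on the bandwidth B; the single target is placed exactly on a range bin and a Doppler bin so that the peak of the 2D FFT recovers its range and radial velocity.

```python
import numpy as np

C = 299_792_458.0  # speed of light in m/s


def range_resolution(bandwidth_hz):
    """FMCW range resolution c / (2B); it does not depend on target distance."""
    return C / (2.0 * bandwidth_hz)


def velocity_resolution(fc_hz, chirp_time_s, n_chirps):
    """Velocity resolution lambda / (2 * N_chirps * T_chirp)."""
    return (C / fc_hz) / (2.0 * n_chirps * chirp_time_s)


def simulate_frame(r_m, v_mps, fc_hz, bandwidth_hz, chirp_time_s, n_samples, n_chirps):
    """Noise-free complex beat signal of one point target, shape (chirps, samples)."""
    wavelength = C / fc_hz
    slope = bandwidth_hz / chirp_time_s      # chirp slope in Hz/s
    fs = n_samples / chirp_time_s            # ADC assumed to span the whole chirp
    f_beat = 2.0 * slope * r_m / C           # range maps to a beat frequency
    n = np.arange(n_samples)[None, :]        # fast-time sample index
    m = np.arange(n_chirps)[:, None]         # slow-time chirp index
    # Phase advance per chirp caused by radial motion, in cycles
    # (the sign convention for approaching/receding is arbitrary here).
    doppler_cycles = 2.0 * v_mps * chirp_time_s / wavelength
    return np.exp(2j * np.pi * (f_beat * n / fs + doppler_cycles * m))


def range_doppler_map(frame):
    """Range FFT along fast time, then Doppler FFT along slow time (zero velocity centred)."""
    range_fft = np.fft.fft(frame, axis=1)
    return np.abs(np.fft.fftshift(np.fft.fft(range_fft, axis=0), axes=0))


def locate_target(rd_map, bandwidth_hz, fc_hz, chirp_time_s):
    """Convert the strongest range-Doppler cell into range (m) and velocity (m/s)."""
    n_chirps = rd_map.shape[0]
    d_idx, r_idx = np.unravel_index(np.argmax(rd_map), rd_map.shape)
    r = r_idx * range_resolution(bandwidth_hz)
    v = (d_idx - n_chirps // 2) * velocity_resolution(fc_hz, chirp_time_s, n_chirps)
    return r, v


# Hypothetical radar parameters (not taken from the framework described above).
FC, BW, TC, NS, NC = 77e9, 4e9, 50e-6, 256, 64
r_true = 40 * range_resolution(BW)            # exactly on range bin 40
v_true = 3 * velocity_resolution(FC, TC, NC)  # exactly on Doppler bin +3
rd = range_doppler_map(simulate_frame(r_true, v_true, FC, BW, TC, NS, NC))
r_est, v_est = locate_target(rd, BW, FC, TC)
print(f"range resolution: {range_resolution(BW) * 100:.2f} cm")
print(f"estimated range {r_est:.3f} m, radial velocity {v_est:.3f} m/s")
```

Moving `r_true` to a larger distance shifts the peak to a higher range bin, but the bin spacing c/(2B) stays the same, which is what a distance-independent range resolution means in this idealized model.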

mmWave-based systems frequently use convolutional neural networks (CNNs) to extract representative features from micro-Doppler signatures to recognize objects [18,20,21]; however, analyzing micro-Doppler signatures is computationally complex because it involves processing images, and it distinguishes moving targets only on the basis of their translational motion. Employing a CNN to extract representative features from cloud points is becoming the tool of choice for modeling dynamic environments: it leverages the spatiotemporal information derived from range, velocity, and angle measurements, thereby improving robustness, reliability, and detection accuracy while reducing computational complexity, so that multiple mmWave radar operations can be performed simultaneously [22].
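One common way to feed cloud points to a CNN is to voxelize each radar frame into an occupancy grid and stack consecutive frames so that spatiotemporal information is retained. The sketch below assumes this approach purely for illustration; the grid extent and resolution (`GRID_RANGE`, `GRID_BINS`) and the helper names are hypothetical, and the framework above may encode its cloud points differently (for example, by also using per-point velocity or intensity).

```python
import numpy as np

# Hypothetical voxel grid: x (lateral) in [-2, 2] m, y (depth) in [0, 4] m,
# z (height) in [-1, 1] m, each axis split into 8 cells.
GRID_BINS = (8, 8, 8)
GRID_RANGE = [(-2.0, 2.0), (0.0, 4.0), (-1.0, 1.0)]


def voxelize(points_xyz):
    """Count radar cloud points per voxel; points outside GRID_RANGE are ignored."""
    pts = np.asarray(points_xyz, dtype=float).reshape(-1, 3)
    hist, _ = np.histogramdd(pts, bins=GRID_BINS, range=GRID_RANGE)
    return hist


def stack_frames(frames):
    """Stack per-frame voxel grids into a (T, X, Y, Z) spatiotemporal tensor."""
    return np.stack([voxelize(frame) for frame in frames], axis=0)


# Two hypothetical frames; the last point of frame_a lies outside the grid.
frame_a = [[0.0, 1.0, 0.0], [0.5, 2.0, 0.2], [1.0, 3.0, -0.5], [10.0, 1.0, 0.0]]
frame_b = [[-1.0, 0.5, 0.1], [1.5, 3.5, 0.9]]
tensor = stack_frames([frame_a, frame_b])
print(tensor.shape)                  # (2, 8, 8, 8)
print(tensor.sum(axis=(1, 2, 3)))    # points counted per frame
```

The resulting (T, X, Y, Z) array could then be passed to a 3D CNN or a CNN followed by a recurrent layer; that model is not shown here.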

2. Millimeter-Wave Radar and Convolutional Neural Network

Several techniques have been developed to detect and recognize drones, including visual [23], audio [24], WiFi [25,26], infrared-camera [27], and radar [28] approaches. Drone audio detection relies on detecting propeller sounds and separating them from background noise. A high-resolution daylight camera and a low-resolution infrared camera were used for visual assessment in [29]. Visual assessment still requires good weather conditions and a reasonable distance between the drone targets and the cameras, and fixed visual detection methods cannot track drones continuously. Infrared cameras detect heat sources on drones, such as batteries, motors, and motor driver boards. Airborne vehicles can be detected more easily by radar, which has long been the most popular detection method for military forces. However, traditional military radars are designed to recognize large targets and have trouble detecting small drones; furthermore, discriminating between targets may not be straightforward. The extremely short wavelength of mmWave radar systems makes them highly sensitive to the small features of drones, provides very precise velocity resolution, and allows them to penetrate certain materials to detect concealed hazardous targets [29]. This section reviews recent drone-classification approaches based on machine learning and deep learning models. The radar cross-section (RCS) signatures of different drones at different frequencies have been discussed in several studies, including [2,30]. The method proposed in [2] converted the RCS signatures into images and then used a CNN to classify drones, which required considerable computation. To reduce this computational overhead, the authors introduced a weight-optimization model that yielded improved long short-term memory (LSTM) networks.
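The difficulty of detecting small drones with radars designed for large targets follows from the standard monostatic radar range equation, in which the maximum detection range grows only with the fourth root of the RCS σ. The sketch below ignores system losses, clutter, and propagation effects; the transmit power, antenna gain, and receiver sensitivity are hypothetical, and the two RCS values (0.01 m² for a small drone, 10 m² for a larger target) are illustrative only.

```python
import math


def max_detection_range(pt_w, gain, wavelength_m, rcs_m2, p_min_w):
    """Monostatic radar range equation without losses.

    R_max = [Pt * G^2 * lambda^2 * sigma / ((4*pi)^3 * P_min)]^(1/4)
    """
    numerator = pt_w * gain**2 * wavelength_m**2 * rcs_m2
    return (numerator / ((4.0 * math.pi) ** 3 * p_min_w)) ** 0.25


# Hypothetical system parameters for illustration only.
wavelength = 299_792_458.0 / 77e9  # about 3.9 mm at 77 GHz
params = dict(pt_w=0.01, gain=1000.0, wavelength_m=wavelength, p_min_w=1e-13)

r_drone = max_detection_range(rcs_m2=0.01, **params)  # small drone
r_large = max_detection_range(rcs_m2=10.0, **params)  # larger target
ratio = r_drone / r_large  # equals (0.01 / 10) ** 0.25, about 0.178
print(f"small drone: {r_drone:.1f} m, larger target: {r_large:.1f} m, ratio {ratio:.3f}")
```

Under these assumptions, a target with a thousand times smaller RCS is detected at only about 18% of the range, regardless of the specific power and gain values chosen.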
In [30], the authors showed how a database of mmWave radar RCS signatures can be used to recognize and categorize drones. They presented RCS measurements at 28 GHz for a carbon-fiber drone model. The measurements were collected in an anechoic chamber and provided significant information about the drone's RCS signature. The authors complemented these measurements with simulations of RCS-based detection probability and range accuracy in metropolitan environments. The drones were placed at distances ranging from 30 m to 90 m, and the RCS signatures used for detection and classification were developed by trial and error. In [31], the authors proposed a novel drone-localization and activity-classification method that uses vertically oriented mmWave radar antennas to measure the elevation angle of the drone from the ground station. The measured radial distance and elevation angle were used to estimate the height of the drone and its horizontal distance from the radar station. A machine learning model then classified the drone's activity based on micro-Doppler signatures extracted from radar measurements taken in an outdoor environment. The system architecture and performance of the FAROS-E 77 GHz radar at the University of St Andrews for detecting and classifying drones were reported in [32]. The goal of the system was to demonstrate that a highly reliable drone-classification sensor for security surveillance could be built in a small, low-cost, and portable package. The radar's low phase noise and coherent architecture exploit the high Doppler sensitivity available at mmWave frequencies to enable robust micro-Doppler signature analysis and classification. The classification algorithm detected the presence of a drone even when it was hovering in place.
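The estimate of height and horizontal distance from the measured radial distance and elevation angle, as described for [31], follows from basic trigonometry. The sketch assumes flat ground and a known radar mounting height (`radar_height_m`, a hypothetical parameter defaulting to zero); it is not the processing chain of [31].

```python
import math


def drone_position(radial_distance_m, elevation_deg, radar_height_m=0.0):
    """Height above ground and horizontal distance from slant range and elevation.

    Assumes flat ground and a radar mounted radar_height_m above it.
    """
    el = math.radians(elevation_deg)
    height = radar_height_m + radial_distance_m * math.sin(el)
    horizontal = radial_distance_m * math.cos(el)
    return height, horizontal


# Hypothetical measurement: 50 m slant range at 30 degrees elevation.
h, d = drone_position(50.0, 30.0)
print(f"height {h:.2f} m, horizontal distance {d:.2f} m")
```

If the radar were mounted, say, 1.5 m above the ground, passing `radar_height_m=1.5` would add that offset to the estimated height while leaving the horizontal distance unchanged.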
In [33], the authors employed a vector network analyzer functioning as a continuous-wave radar with a carrier frequency of 6 GHz to gather Doppler patterns from test data, and then recognized the motions using a CNN. Furthermore, the authors of [34] proposed a method for registering light detection and ranging (LiDAR) point clouds and images collected by low-cost drones to integrate spectral and geometrical data.

References

1. Kanellakis, C.; Nikolakopoulos, G. Survey on computer vision for UAVs: Current developments and trends. J. Intell. Robot. Syst. 2017, 87, 141–168.

2. Fu, R.; Al-Absi, M.A.; Kim, K.-H.; Lee, Y.-S.; Al-Absi, A.A.; Lee, H.-J. Deep Learning-Based Drone Classification Using Radar Cross Section Signatures at mmWave Frequencies. IEEE Access 2021, 9, 161431–161444.

3. Doole, M.; Ellerbroek, J.; Hoekstra, J. Estimation of traffic density from drone-based delivery in very low level urban airspace. J. Air Transp. Manag. 2020, 88, 101862.

4. Brahim, I.B.; Addouche, S.-A.; El Mhamedi, A.; Boujelbene, Y. Cluster-based WSA method to elicit expert knowledge for Bayesian reasoning—Case of parcel delivery with drone. Expert Syst. Appl. 2022, 191, 116160.

5. Sinhababu, N.; Pramanik, P.K.D. An Efficient Obstacle Detection Scheme for Low-Altitude UAVs Using Google Maps. In Data Management, Analytics and Innovation; Springer: Berlin/Heidelberg, Germany, 2022; pp. 455–470.

6. Cheng, M.-L.; Matsuoka, M.; Liu, W.; Yamazaki, F. Near-real-time gradually expanding 3D land surface reconstruction in disaster areas by sequential drone imagery. Autom. Constr. 2022, 135, 104105.

7. Rizk, H.; Nishimur, Y.; Yamaguchi, H.; Higashino, T. Drone-Based Water Level Detection in Flood Disasters. Int. J. Environ. Res. Public Health 2022, 19, 237.

8. Nath, N.D.; Cheng, C.-S.; Behzadan, A.H. Drone mapping of damage information in GPS-Denied disaster sites. Adv. Eng. Inform. 2022, 51, 101450.

9. Mishra, B.; Garg, D.; Narang, P.; Mishra, V. Drone-surveillance for search and rescue in natural disaster. Comput. Commun. 2020, 156, 1–10.

10. Jacob, B.; Kaushik, A.; Velavan, P. Autonomous Navigation of Drones Using Reinforcement Learning. In Advances in Augmented Reality and Virtual Reality; Springer: Berlin/Heidelberg, Germany, 2022; pp. 159–176.

11. Chechushkov, I.V.; Ankusheva, P.S.; Ankushev, M.N.; Bazhenov, E.A.; Alaeva, I.P. Assessment of Excavated Volume and Labor Investment at the Novotemirsky Copper Ore Mining Site. In Geoarchaeology and Archaeological Mineralogy; Springer: Berlin/Heidelberg, Germany, 2022; pp. 199–205.

12. Molnar, P. Territorial and Digital Borders and Migrant Vulnerability Under a Pandemic Crisis. In Migration and Pandemics; Springer: Cham, Switzerland, 2022; pp. 45–64.

13. Sharma, N.; Saqib, M.; Scully-Power, P.; Blumenstein, M. SharkSpotter: Shark Detection with Drones for Human Safety and Environmental Protection. In Humanity Driven AI; Springer: Berlin/Heidelberg, Germany, 2022; pp. 223–237.

14. Bhatia, J.; Dayal, A.; Jha, A.; Vishvakarma, S.K.; Soumya, J.; Srinivas, M.B.; Yalavarthy, P.K.; Kumar, A.; Lalitha, V.; Koorapati, S. Object Classification Technique for mmWave FMCW Radars using Range-FFT Features. In Proceedings of the 2021 International Conference on COMmunication Systems & NETworkS (COMSNETS), Bangalore, India, 5–9 January 2021; pp. 111–115.

15. Huang, X.; Cheena, H.; Thomas, A.; Tsoi, J.K.P. Indoor Detection and Tracking of People Using mmWave Sensor. J. Sens. 2021, 2021, 6657709.

16. Beringer, R.; Sixsmith, A.; Campo, M.; Brown, J.; McCloskey, R. The “acceptance” of ambient assisted living: Developing an alternate methodology to this limited research lens. In Towards Useful Services for Elderly and People with Disabilities, Proceedings of the International Conference on Smart Homes and Health Telematics, Montreal, QC, Canada, 20–22 June 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 161–167.

17. Ferris, D.D., Jr.; Currie, N.C. Microwave and millimeter-wave systems for wall penetration. In Targets and Backgrounds: Characterization and Representation IV; International Society for Optics and Photonics: Bellingham, WA, USA, 1998; Volume 3375, pp. 269–279.

18. Zhao, P.; Lu, C.X.; Wang, J.; Chen, C.; Wang, W.; Trigoni, N.; Markham, A. mID: Tracking and identifying people with millimeter wave radar. In Proceedings of the 2019 15th International Conference on Distributed Computing in Sensor Systems (DCOSS), Santorini, Greece, 29–31 May 2019; pp. 33–40.

19. Sengupta, A.; Jin, F.; Zhang, R.; Cao, S. mm-Pose: Real-time human skeletal posture estimation using mmWave radars and CNNs. IEEE Sens. J. 2020, 20, 10032–10044.

20. Yang, Y.; Hou, C.; Lang, Y.; Yue, G.; He, Y.; Xiang, W. Person identification using micro-Doppler signatures of human motions and UWB radar. IEEE Microw. Wirel. Compon. Lett. 2019, 29, 366–368.

21. Tripathi, S.; Kang, B.; Dane, G.; Nguyen, T. Low-complexity object detection with deep convolutional neural network for embedded systems. In Applications of Digital Image Processing XL; International Society for Optics and Photonics: Bellingham, WA, USA, 2017; Volume 10396, p. 103961M.

22. Wang, S.; Song, J.; Lien, J.; Poupyrev, I.; Hilliges, O. Interacting with soli: Exploring fine-grained dynamic gesture recognition in the radio-frequency spectrum. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology, Tokyo, Japan, 16–19 October 2016; pp. 851–860.

23. Srigrarom, S.; Sie, N.J.L.; Cheng, H.; Chew, K.H.; Lee, M.; Ratsamee, P. Multi-camera Multi-drone Detection, Tracking and Localization with Trajectory-based Re-identification. In Proceedings of the 2021 Second International Symposium on Instrumentation, Control, Artificial Intelligence, and Robotics (ICA-SYMP), Bangkok, Thailand, 20–22 January 2021; pp. 1–6.

24. Al-Emadi, S.; Al-Ali, A.; Al-Ali, A. Audio-Based Drone Detection and Identification Using Deep Learning Techniques with Dataset Enhancement through Generative Adversarial Networks. Sensors 2021, 21, 4953.

25. Alsoliman, A.; Rigoni, G.; Levorato, M.; Pinotti, C.; Tippenhauer, N.O.; Conti, M. COTS Drone Detection using Video Streaming Characteristics. In Proceedings of the 2021 International Conference on Distributed Computing and Networking, Nara, Japan, 5–8 January 2021; pp. 166–175.

26. Rzewuski, S.; Kulpa, K.; Pachwicewicz, M.; Malanowski, M.; Salski, B. Drone Detectability Feasibility Study using Passive Radars Operating in WIFI and DVB-T Band; The Institute of Electronic Systems: Warsaw, Poland, 2021.

27. Svanström, F.; Englund, C.; Alonso-Fernandez, F. Real-Time Drone Detection and Tracking with Visible, Thermal and Acoustic Sensors. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 7265–7272.

28. Yoo, L.-S.; Lee, J.-H.; Lee, Y.-K.; Jung, S.-K.; Choi, Y. Application of a Drone Magnetometer System to Military Mine Detection in the Demilitarized Zone. Sensors 2021, 21, 3175.

29. Dogru, S.; Baptista, R.; Marques, L. Tracking drones with drones using millimeter wave radar. In Advances in Intelligent Systems and Computing, Proceedings of the Robot 2019: Fourth Iberian Robotics Conference, Porto, Portugal, 20–22 November 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 392–402.

30. Semkin, V.; Yin, M.; Hu, Y.; Mezzavilla, M.; Rangan, S. Drone detection and classification based on radar cross section signatures. In Proceedings of the 2020 International Symposium on Antennas and Propagation (ISAP), Osaka, Japan, 25–28 January 2021; pp. 223–224.

31. Rai, P.K.; Idsøe, H.; Yakkati, R.R.; Kumar, A.; Khan, M.Z.A.; Yalavarthy, P.K.; Cenkeramaddi, L.R. Localization and Activity Classification of Unmanned Aerial Vehicle using mmWave FMCW Radars. IEEE Sens. J. 2021, 21, 16043–16053.

32. Rahman, S.; Robertson, D.A. FAROS-E: A compact and low-cost millimeter wave surveillance radar for real time drone detection and classification. In Proceedings of the 2021 21st International Radar Symposium (IRS), Berlin, Germany, 21–22 June 2021; pp. 1–6.

33. Jokanovic, B.; Amin, M.G.; Ahmad, F. Effect of data representations on deep learning in fall detection. In Proceedings of the 2016 IEEE Sensor Array and Multichannel Signal Processing Workshop (SAM), Rio de Janeiro, Brazil, 10–13 July 2016; pp. 1–5.

34. Li, J.; Yang, B.; Chen, C.; Habib, A. NRLI-UAV: Non-rigid registration of sequential raw laser scans and images for low-cost UAV LiDAR point cloud quality improvement. ISPRS J. Photogramm. Remote Sens. 2019, 158, 123–145.
