
Please note this is a comparison between Version 1 by Luigi Barazzetti and Version 2 by Alfred Zheng.

The reconstruction of 3D scenes from images is a research topic in which scholars have been developing new methods since the beginning of the 20th century. The approach that has received the most attention so far is based on image geometry combined with image-matching techniques for radiometric consistency. Neural Radiance Fields (NeRFs) are an emerging technology that may provide novel outcomes in the heritage documentation field. NeRFs are still relatively new compared to traditional photogrammetric processing, and improvements are expected from future research.

- drone

- NeRFs

1. Introduction

Nowadays, 3D modeling from drone images has reached significant maturity and a high level of automation. Digital photogrammetry coupled with computer vision methods (e.g., Structure from Motion/SfM) can automate most phases of the reconstruction workflow [1]. In addition, flight planning software allows users to automate the data acquisition phase, which can be planned remotely before reaching the site [2].

Digital Recording of Ruins for Restoration and Conservation

The versatility of advanced digital recording tools proves to be invaluable in the field of conservation. Restoring and conserving historical buildings necessitates a precise understanding of their geometrical features. Digital recording techniques, such as laser scanning and digital photogrammetry, offer a very high level of detail, providing geometrically accurate information. This data can be utilized to analyze the historic building based on its geometrical proportions and to facilitate further investigations, including assessing the material composition and state of conservation. The successful preparation of various conservation actions relies on creating a comprehensive recording dossier, instrumental for analyzing the proportions and architectural heritage. This includes verticality deviations or other deformations, understanding the construction logic, identifying materials, and assessing their conservation conditions. Digital recording techniques offer the advantage of flexible 2D and 3D representations utilized throughout the conservation process, from preliminary studies and intervention identification to devising enhancement strategies to ensure ongoing use and accessibility of the buildings.

2. NeRFs for Processing Drone Images

2.1. Preliminary Considerations

The reconstruction of 3D scenes from images is a research topic in which scholars have been developing new methods since the beginning of the 20th century. The approach that has received the most attention so far is based on image geometry combined with image-matching techniques for radiometric consistency [1][3][4][5]. New approaches based on implicit representations are emerging in the field. In those methods, a reconstruction model is trained from a set of 2D images, and the trained model learns implicit 3D shapes and textures [6]. In particular, Neural Radiance Field (NeRF) models have recently been gaining popularity. NeRF models use volume rendering with an implicit neural scene representation, in which Multi-Layer Perceptrons (MLPs) learn the geometry and lighting of a 3D scene from 2D images. The first paper introducing the concept of NeRF was published by Mildenhall et al. [7] in 2020, and to date (June 2023) it has received more than 3000 citations (source: Google Scholar), which shows the increasing interest in this topic. A literature review of this topic is beyond the scope of this paper, and the reader is referred to recent review papers [8][9][10].

NeRF models have the following characteristics:

- NeRF models can be trained using only multi-view images of a scene. Unlike other neural representations, NeRF models require only images and poses to learn a scene;

- NeRF models are photo-realistic;

- NeRF models require low memory and perform computations faster.

A new synthetic image is rendered as follows:

- For each pixel to be created in the new synthetic image, camera rays and some sampling points are generated into the scene;

- Local color and density are computed for each sampling point by using the view direction, the sampling location, and the trained NeRF;

- The computed colors and densities are the input to produce the synthetic image using volume rendering.
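The rendering steps listed above can be illustrated for a single pixel with a minimal sketch. It uses only the Python standard library and the standard volume rendering quadrature (per-sample opacity `1 - exp(-density * spacing)`, weighted by the accumulated transmittance). The names `sample_ray`, `toy_field`, and `render_pixel` are hypothetical, and `toy_field` is an analytic stand-in for a trained NeRF, not a real network; it is not the implementation used by Nerfstudio or any other package mentioned here.

```python
import math


def sample_ray(origin, direction, near, far, n_samples):
    """Return evenly spaced sample points along a ray and their spacing."""
    delta = (far - near) / n_samples
    points = []
    for i in range(n_samples):
        t = near + (i + 0.5) * delta  # midpoint of the i-th interval
        points.append(tuple(o + t * d for o, d in zip(origin, direction)))
    return points, delta


def toy_field(point, view_dir):
    """Hypothetical stand-in for a trained NeRF.

    Returns (density, rgb) for a dense red unit sphere centered at the origin.
    A real model would be an MLP that also uses the view direction.
    """
    inside = sum(c * c for c in point) <= 1.0
    density = 50.0 if inside else 0.0
    return density, (1.0, 0.0, 0.0)


def render_pixel(origin, direction, field, near=0.0, far=6.0, n_samples=64):
    """Composite colors and densities along one camera ray."""
    points, delta = sample_ray(origin, direction, near, far, n_samples)
    transmittance = 1.0  # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    for p in points:
        sigma, rgb = field(p, direction)
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this sample
        weight = transmittance * alpha
        for k in range(3):
            pixel[k] += weight * rgb[k]
        transmittance *= 1.0 - alpha
    return pixel, transmittance


if __name__ == "__main__":
    # One ray through the sphere, one ray that misses it.
    print(render_pixel((0.0, 0.0, -3.0), (0.0, 0.0, 1.0), toy_field))
    print(render_pixel((5.0, 0.0, -3.0), (0.0, 0.0, 1.0), toy_field))
```

A full renderer repeats this for every pixel of the synthetic image. Practical implementations batch the rays on a GPU and typically use non-uniform sampling along each ray.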

2.2. Castel Mancapane

2.2.1. Processing with Nerfstudio

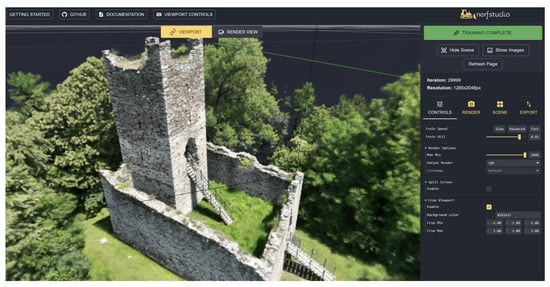

The first test with NeRFs was carried out in Nerfstudio (https://docs.nerf.studio/en/latest/ (accessed on 1 August 2023)) using the images oriented in Metashape. Exterior, interior, and calibration parameters were exported from Metashape and processed using Nerfacto. Processing was very rapid (a few minutes, faster than the generation of the point clouds and mesh with Metashape), even considering the significant number of images in the project (549). Default parameters were used in the processing. An image of the viewer after training is shown in Figure 1, whereas some frames of the rendered video were illustrated at the beginning of the manuscript.

Figure 1. Castel Mancapane processed using Nerfstudio.

Figure 2. Point clouds produced with Nerfstudio (left) and Metashape (right) using the same set of images.

2.2.2. A Few Considerations on (Some) Other NeRFs Implementations

The same dataset was processed using Luma AI (https://lumalabs.ai/ (accessed on 1 August 2023)) and Instant-ngp (https://github.com/NVlabs/instant-ngp (accessed on 1 August 2023)). Luma AI provides a fully automated workflow through a website to which images can be uploaded as a single zip file. As the maximum size is 5 gigabytes, the images were resized by 50%. Instant-ngp can be downloaded from the provided website, and we used COLMAP for image orientation. In this case as well, compressed images were used in the workflow, knowing that image resizing affects the final results. Rendering a video was straightforward with both solutions. The visual quality was excellent, especially for areas with vegetation, which is always difficult to model from a set of images with photogrammetric techniques. The background was also visible in the videos, including elements far from the castle. This interesting result could significantly improve traditional rendering from point clouds or meshes. A couple of sample frames from both videos are shown in Figure 3.

Figure 3. Two frames from the rendered videos using Luma AI (top) and Instant-ngp (bottom).

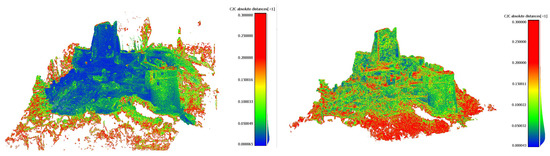

2.3. Rocca di Vogogna

A second test with NeRF was carried out for the Rocca di Vogogna dataset. This test aimed to compare the results obtained with traditional image-matching techniques and those obtained with NeRF against a common benchmark, represented by a terrestrial laser scanning point cloud. A complete survey of the area of the Rocca di Vogogna is available. It was carried out using a Faro Focus X130 and a Leica BLK360. For this case study, the comparison is between Metashape and Nerfstudio. The same images were used for both reconstructions: 390 oblique and convergent images. The set of normal images was discarded since it caused some issues with the NeRF training. Default parameters were used in the processing workflows of both software packages. Once the training in Nerfstudio was completed, the point cloud was exported, setting the target number of points to 20 million. The point cloud obtained with Metashape had about 35 million points and was subsampled to 20 million for the comparison. As in the previous case, a visual comparison for the Rocca di Vogogna dataset shows that the NeRF point cloud is noisier and less detailed than the one obtained with Metashape (Figure 4).

Figure 4. Point clouds produced with Nerfstudio (left) and Metashape (right) using the same set of images for the Vogogna dataset.

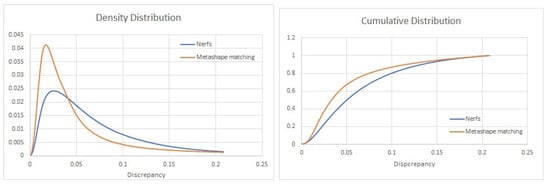

Figure 5. Comparison with TLS data: absolute distance. False-color point clouds for image matching with Metashape (left) and NeRF with Nerfstudio (right).

Figure 6. Density (left) and cumulative (right) distributions of the discrepancies for image matching with Metashape (orange) and the Nerfstudio solution (blue).
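The comparison against the TLS benchmark (subsampling to a common point count, absolute distance to the reference cloud, and density and cumulative distributions of the discrepancies) can be sketched as follows. This is a minimal illustration using only the Python standard library. The function names are hypothetical, random subsampling is an assumption (the text does not state which subsampling method was used), and the brute-force nearest-neighbor search is only practical for small examples, not for clouds of 20 million points.

```python
import math
import random


def subsample(points, target, seed=0):
    """Randomly keep at most `target` points (assumed subsampling strategy)."""
    if len(points) <= target:
        return list(points)
    return random.Random(seed).sample(points, target)


def nearest_distances(cloud, reference):
    """Absolute distance from each point of `cloud` to its nearest reference point.

    Brute force, O(len(cloud) * len(reference)); a spatial index such as a
    k-d tree or octree would be needed for real datasets.
    """
    return [min(math.dist(p, q) for q in reference) for p in cloud]


def histogram(distances, bin_width, n_bins):
    """Counts per distance bin; the last bin collects all larger values."""
    counts = [0] * n_bins
    for d in distances:
        counts[min(int(d / bin_width), n_bins - 1)] += 1
    return counts


def cumulative_fraction(distances, threshold):
    """Fraction of points whose discrepancy does not exceed `threshold`."""
    return sum(d <= threshold for d in distances) / len(distances)


if __name__ == "__main__":
    # Tiny synthetic example: a flat reference grid and a noisy copy of it.
    rng = random.Random(42)
    reference = [(x * 0.1, y * 0.1, 0.0) for x in range(20) for y in range(20)]
    noisy = [(x, y, z + rng.gauss(0.0, 0.02)) for x, y, z in reference]
    cloud = subsample(noisy, 200)
    dists = nearest_distances(cloud, reference)
    print(histogram(dists, bin_width=0.01, n_bins=6))
    print(cumulative_fraction(dists, threshold=0.03))
```

Running the same functions on the image-matching cloud and on the NeRF cloud, with the same reference, gives two sets of discrepancies that can be plotted side by side.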