Please note this is a comparison between Version 2 by Conner Chen and Version 1 by 子明 陈.

Surface electromyography (sEMG) signal analysis refers to the series of processing steps applied to human sEMG signals acquired with signal acquisition devices. The primary objective is to remove noise unrelated to the intended movements while retaining as many useful features as possible, so that the user’s intended movements can be identified accurately.

- myoelectric control

- intention recognition

- control strategy

1. sEMG Signal Processing

1.1. Pre-Processing

sEMG signals recorded from the human torso are affected by common-mode interference [1], such as 50 Hz power line interference, line voltage, and contamination from myocardial electrical activity [2]. The sEMG signals are also inherently unstable: they contain noise in the 0–20 Hz range, influenced by the firing rate of motor units (MUs), while their high-frequency components exhibit a lower power spectral density [3]. Therefore, to improve signal quality, filters are commonly applied to remove frequency components below 20 Hz and above 500 Hz, as well as interference at around 50 Hz.
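The filtering step described above can be sketched as follows. This is a minimal illustration, not the pipeline used in the cited studies: it uses a simple FFT mask in place of the Butterworth and notch filters often used in practice, and the sampling rate (2 kHz), the notch width (2 Hz), the function name `fft_filter`, and the synthetic test signal are all assumptions.

```python
import numpy as np

def fft_filter(signal, fs, low=20.0, high=500.0, notch=50.0, notch_width=2.0):
    """Keep only spectral content in [low, high] Hz, excluding a band around `notch` Hz."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    keep = (freqs >= low) & (freqs <= high)            # band-pass mask
    keep &= np.abs(freqs - notch) > notch_width / 2.0  # drop power line interference
    return np.fft.irfft(spectrum * keep, n=len(signal))

# Synthetic example (assumed values): slow drift + 50 Hz interference + a 120 Hz component
fs = 2000.0
t = np.arange(0, 1.0, 1.0 / fs)
drift = 0.5 * np.sin(2 * np.pi * 2 * t)
mains = 0.8 * np.sin(2 * np.pi * 50 * t)
emg_like = 0.3 * np.sin(2 * np.pi * 120 * t)
raw = drift + mains + emg_like

clean = fft_filter(raw, fs)  # only the 120 Hz component should remain
```

A hard spectral mask is easy to read but works on a whole recording at once. For streaming prosthesis control, a causal filter would be the more realistic choice.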

Feeding the raw signals into the decoding model after filtering alone retains as much useful information from the human signals as possible. This approach aims to enhance the performance and practicality of intent recognition for real-world myoelectric prosthetic hand systems. However, achieving high intent recognition accuracy from filtered sEMG signals alone requires a decoding model with feature learning capabilities, whose hierarchical structure transforms simple feature representations into more abstract and effective ones [4].

1.2. Feature Engineering

Traditional feature engineering for EMG signal analysis typically falls into three categories: time-domain, frequency-domain, and time–frequency-domain features [5]. Time-domain features extract information from the signal’s amplitude, frequency-domain features describe its power spectral density, and time–frequency-domain features represent the frequency content at different time positions [6]. Frequency-domain features have unique advantages in muscle fatigue recognition. However, a comparative experiment based on 37 different features showed that they are not well suited for EMG signal classification [7]. Time–frequency-domain features are likewise limited in application by their computational complexity. Time-domain features are currently the most popular feature type in the field of intent recognition.
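As an illustration of amplitude-based time-domain features, the sketch below computes four widely used ones for a single analysis window: mean absolute value, root mean square, waveform length, and zero crossings. The text above does not name specific features, so this selection, the function name `time_domain_features`, and the `zc_threshold` parameter are assumptions made for the example.

```python
import numpy as np

def time_domain_features(window, zc_threshold=0.0):
    """Compute common time-domain features for one single-channel window."""
    window = np.asarray(window, dtype=float)
    steps = np.abs(np.diff(window))             # absolute sample-to-sample change
    mav = np.mean(np.abs(window))               # mean absolute value
    rms = np.sqrt(np.mean(window ** 2))         # root mean square
    wl = np.sum(steps)                          # waveform length
    sign_change = window[:-1] * window[1:] < 0  # consecutive samples of opposite sign
    zc = int(np.sum(sign_change & (steps > zc_threshold)))  # zero crossings
    return {"mav": mav, "rms": rms, "wl": wl, "zc": zc}

features = time_domain_features([1.0, -1.0, 2.0, -2.0])
```

The threshold on zero crossings is a common guard against counting low-amplitude noise around zero. It is left at zero here for simplicity.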

Feature engineering maps high-dimensional sEMG signals into a lower-dimensional space. This significantly reduces the complexity of signal processing, retaining the useful and distinguishable portions of the signal while discarding unnecessary information. However, because sEMG signals are stochastic and muscles interfere with one another during movement, traditional feature engineering inevitably masks or overlooks some useful information, which limits the accuracy of intent recognition. In addition, since sEMG signals contain rich temporal and spectral information, relying on a specific, limited combination of features may not yield the optimal solution [8]. No universally effective feature has been identified so far, so features that contribute significantly to intent recognition performance still need to be explored.
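The dimensionality reduction described above can be made concrete with a sliding-window sketch. It assumes an 8-channel recording sampled at 2 kHz, a 200 ms window, and a 50 ms step; none of these values comes from the text, and RMS stands in for whatever feature set a given study uses.

```python
import numpy as np

def sliding_rms(emg, window, step):
    """Map (n_samples, n_channels) raw data to (n_windows, n_channels) RMS features."""
    emg = np.asarray(emg, dtype=float)
    starts = range(0, emg.shape[0] - window + 1, step)
    return np.array([np.sqrt(np.mean(emg[s:s + window] ** 2, axis=0)) for s in starts])

rng = np.random.default_rng(0)
emg = rng.standard_normal((2000, 8))            # 1 s of synthetic 8-channel data
feats = sliding_rms(emg, window=400, step=100)  # 200 ms windows, 50 ms step

print(emg.shape, "->", feats.shape)
```

Each window of 400 × 8 raw samples collapses to 8 numbers, which shows both the reduction in complexity and how information within the window can be lost.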

2. Decoding Model

Decoding models serve as a bridge between user motion intentions and myoelectric prosthetic hand control commands, and play a significant role in motion intention recognition schemes. The purpose of a decoding model is to represent the linear or nonlinear relationship between the inputs and outputs of the scheme, either by establishing an analytical relationship or by constructing a mapping function. The former are known as model-based methods, while the latter are referred to as model-free methods [9] and include traditional machine learning algorithms and deep learning algorithms.
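To illustrate what "constructing a mapping function" means in the model-free case, the sketch below fits the simplest possible decoder: a regularized linear map from feature vectors to a joint angle. The data are synthetic, and the function names `fit_linear_decoder` and `predict`, the ridge value, and the feature dimension are assumptions. Published decoders are generally nonlinear and more elaborate.

```python
import numpy as np

def fit_linear_decoder(X, y, ridge=1e-6):
    """Least-squares fit of y ~ X @ w + b with a small ridge term for stability."""
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])  # append a bias column
    A = Xb.T @ Xb + ridge * np.eye(Xb.shape[1])
    return np.linalg.solve(A, Xb.T @ y)

def predict(weights, X):
    """Apply the fitted mapping to new feature vectors."""
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])
    return Xb @ weights

rng = np.random.default_rng(1)
X = rng.random((200, 4))                     # synthetic feature vectors (4 channels)
true_w = np.array([30.0, -10.0, 5.0, 0.0])   # hypothetical ground-truth mapping
y = X @ true_w + 15.0                        # synthetic joint angle in degrees

w = fit_linear_decoder(X, y)
y_hat = predict(w, X)
```

No anatomical knowledge enters this fit, which is the defining property of a model-free method. A model-based method would instead fix the form of the relationship from muscle and joint mechanics.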

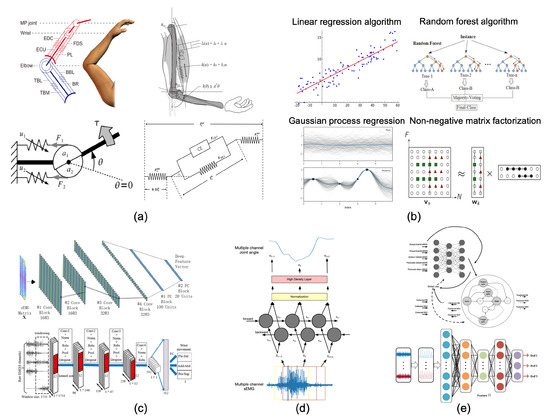

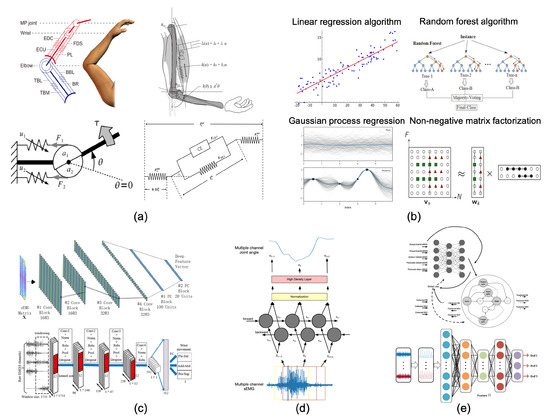

Figure 2. Application examples of decoding models: (a) Example of musculoskeletal model applications [10,11,12,13]. (b) Examples of traditional machine learning model applications. (c) Example of CNN-based model applications [14,15]. (d) Example of RNN-based model applications [16]. (e) Example of hybrid-structured model applications [17,18].

2.1. Musculoskeletal Models

Musculoskeletal models are the most commonly used model-based approaches for myoelectric prosthetic hand control. These models aim to explain how the neural commands responsible for human motion are transformed into actual physical movements by modeling muscle activation, kinematics, contraction dynamics, and joint mechanics [19]. Incorporating detailed musculoskeletal models in the study of human motion contributes to a deeper understanding of individual muscle and joint loads [20]. The earliest muscle model was proposed by A. V. Hill in 1938. It was a phenomenological lumped-parameter model that explained the input–output data obtained from controlled experiments [21]. The musculoskeletal models now commonly used in the field of EMG prosthetic hand control include the Mykin model [10] and simplified musculoskeletal models [22]. Because they explicitly encode the anatomical structure of the musculoskeletal system, musculoskeletal models can better simulate human physiological motion [23] and are therefore commonly applied in research on human motion intention recognition. However, EMG prosthetic hand human–machine interfaces based on musculoskeletal models need EMG signals from the specific muscles represented by the model. Acquiring them may require invasive electrodes, which inevitably harm the subject’s limb and require the involvement of expert physicians, leading to various inconveniences and obstacles. A promising alternative that could facilitate the wider application of musculoskeletal models is to localize specific muscles in the subject’s limb through high-density sEMG signal decomposition techniques.

2.2. Traditional Machine Learning Models

Machine learning algorithms typically establish mappings between inputs and desired target outputs using approximated numerical functions [9]. They learn from given data to perform classification or prediction tasks and are widely applied in the field of motion intention recognition. Gaussian processes are non-parametric Bayesian models commonly applied in research on human motion intention recognition. Non-negative matrix factorization (NMF) is one of the most popular algorithms in sEMG-based motion intention recognition. As the name suggests, it decomposes a large non-negative matrix into two smaller non-negative matrices. In sEMG-based motion intention recognition, the sEMG signal matrix is often decomposed by NMF into a muscle activation signal matrix and a muscle weight matrix, and the muscle weight matrix is considered to reflect muscle synergies [24]. Despite achieving certain results, motion intention decoding models based on traditional machine learning algorithms often rely on tedious manual feature engineering. Research has shown that methods using traditional machine learning algorithms still fail to meet the accuracy and real-time responsiveness requirements of current human–machine interaction scenarios such as EMG prosthetic hand control [25].

2.3. Deep Learning Models
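Looking back at the NMF decomposition described under traditional machine learning models, the following sketch factorizes a non-negative matrix into a muscle weight matrix `W` and an activation matrix `H` using the standard multiplicative update rules for the squared reconstruction error. The matrix sizes, the number of synergies (2), the iteration count, and the synthetic data are assumptions. Real inputs would be rectified and smoothed sEMG envelopes.

```python
import numpy as np

def nmf(V, rank, n_iter=500, eps=1e-9, seed=0):
    """Factorize non-negative V (channels x samples) as W @ H with W, H >= 0."""
    rng = np.random.default_rng(seed)
    n_channels, n_samples = V.shape
    W = rng.random((n_channels, rank)) + eps   # muscle weights (synergies)
    H = rng.random((rank, n_samples)) + eps    # activation signals
    for _ in range(n_iter):
        # Multiplicative updates keep every entry non-negative
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H

# Synthetic envelope-like data built from 2 hypothetical synergies over 8 channels
rng = np.random.default_rng(42)
true_W = rng.random((8, 2))
true_H = rng.random((2, 300))
V = true_W @ true_H

W, H = nmf(V, rank=2)
rel_err = np.linalg.norm(V - W @ H) / np.linalg.norm(V)
```

The factorization is not unique, so the recovered columns of `W` need not match `true_W` one to one. In practice the number of synergies is chosen by inspecting how the reconstruction error falls as `rank` increases.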

Deep learning algorithms can classify input data into corresponding types or regress them into continuous sequences in an end-to-end manner, without the need for manual feature extraction and selection [26]. The concept of deep learning originated in 2006 [27], and numerous distinctive new algorithm structures have been developed since then.

- CNN-based models: CNNs date back to the 1980s [28]. Thanks to their convolutional layers, they can learn general information from a large amount of data and provide multiple outputs. CNNs have been applied in various fields such as image processing, video classification, and robot control, and have also found extensive application in motion intention recognition.

- RNN-based models: RNNs were introduced to model the temporal information within sequences and to effectively extract the relationships between input sequences. However, due to vanishing or exploding gradients, RNNs struggle to remember long-term dependencies [26]. To address this inherent limitation, a variant of the RNN called long short-term memory (LSTM) was introduced, which has gained significant attention and has been widely studied in research on recognizing human motion intentions.

- Hybrid-structured models: Hybrid-structured deep learning algorithms typically combine two or more different types of deep learning networks. For sEMG-based motion intention recognition, they often outperform other approaches. One likely reason is that hybrid-structured algorithms extract more abstract features from sEMG signals, potentially capturing more hidden information.

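To show what end-to-end processing looks like at the level of data flow, the sketch below runs one filtered multi-channel window through a single 1-D convolutional layer, a ReLU, global average pooling, and a softmax classifier. It is a forward pass only, with random untrained weights. The layer sizes, the six gesture classes, and the window length are assumptions and do not reproduce any architecture from the cited studies.

```python
import numpy as np

def conv1d(x, kernels, bias):
    """'Valid' 1-D convolution layer. x: (channels, time); kernels: (filters, channels, width)."""
    n_filters, _, width = kernels.shape
    n_out = x.shape[1] - width + 1
    out = np.empty((n_filters, n_out))
    for t in range(n_out):
        patch = x[:, t:t + width]
        # Each filter is summed over all channels and kernel taps
        out[:, t] = np.tensordot(kernels, patch, axes=([1, 2], [0, 1])) + bias
    return out

def softmax(z):
    e = np.exp(z - np.max(z))   # subtract the max for numerical stability
    return e / e.sum()

def forward(x, params):
    h = np.maximum(conv1d(x, params["k"], params["b"]), 0.0)  # convolution + ReLU
    pooled = h.mean(axis=1)                                    # global average pooling
    return softmax(params["W"] @ pooled + params["c"])         # class probabilities

rng = np.random.default_rng(0)
params = {
    "k": rng.standard_normal((16, 8, 5)) * 0.1,  # 16 filters over 8 channels, width 5
    "b": np.zeros(16),
    "W": rng.standard_normal((6, 16)) * 0.1,     # 6 hypothetical gesture classes
    "c": np.zeros(6),
}
window = rng.standard_normal((8, 400))           # one synthetic 8-channel window
probs = forward(window, params)
```

The convolution kernels play the role that hand-crafted features play in traditional pipelines, except that training would learn them from data.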
Deep learning algorithms have been widely applied to the recognition of human motion intentions because of their end-to-end mapping approach. They remove the need for researchers to extract signal features manually and instead learn more abstract and effective features through the depth and breadth of their network structures. However, their lack of interpretability makes it difficult to integrate them with biological theories for convincing analysis. Moreover, the more complex networks associated with higher recognition accuracy impose significant computational demands, which is a challenge for tasks such as EMG prosthetic hand control that require a response within a specific time frame. Currently, most research in this area is based on offline tasks. Key technical research therefore focuses on how to incorporate human biological theories into the design of deep learning algorithms and how to achieve high accuracy and fast response in motion intention recognition using only lightweight network structures.

3. Mapping Parameters

Mapping parameters, the output of the motion intention recognition scheme, parameterize the user’s motion intention and serve as control commands for the myoelectric prosthetic hand system. Human hand motion is controlled by approximately 29 bones and 38 muscles, offering 20–25 degrees of freedom (DoF) [29] and enabling flexible and intricate movements. The movement of the human hand is achieved through the interaction and coordination of the neural, muscular, and skeletal systems [30]. This implies that the parameterization of motion intention should cover not only the range of motion of each finger but also the variations in joint forces caused by different muscle contractions. In myoelectric hand control, two control methods are commonly used [9]: (1) the decoding algorithm takes surface electromyography (sEMG) as input and outputs joint angles as commands for the low-level actuators; (2) the decoding algorithm takes sEMG as input and outputs joint torques, which are sent either to the low-level control loop or directly to the robot actuators (referred to as a force-/torque-based control algorithm).

3.1. Kinematic Parameters

Parameterizing human motion intention typically involves kinematic parameters such as joint angle, joint angular velocity, and joint angular acceleration:

- Joint angle: Analysis of biomechanical muscle models shows [8] that joint angles specifically define the direction of muscle fibers and most directly reflect the state of motion.

- Joint angular velocity: Joint angular velocity is more stable than other internal physical quantities, which helps generalization to new individuals. It is closely related to the extension/flexion movement commands of each joint [31].

- Joint angular acceleration: Joint angular acceleration describes how quickly the joint angular velocity changes. It relates to joint angular velocity in the same way that joint angular velocity relates to joint angle. Some studies suggest a significant correlation between joint angular acceleration and muscle activity [32].

3.2. Dynamics Parameters

When a myoelectric prosthetic hand is used for daily grasping tasks, an appropriate contact force is also crucial to the task success rate of individuals with limb loss. Among the dynamic parameters commonly used to parameterize human motion intention, joint torque has been shown to be closely related to muscle strength [21]. For laboratory-controlled prostheses operated through a host computer, suitable operating forces can be achieved using force/position hybrid control or impedance control. However, for myoelectric prosthetic hands driven by the user’s biological signals, effective force control must be achieved by decoding the user’s intention. The conversion of human motion intention into dynamic parameters is therefore necessary.

3.3. Other Parameters

A common approach to parameterizing human motion intention with specific indicators is to guide subjects through specific tasks to obtain target data and to use the task cues as labels [33]. Motion intention recognition is then achieved with supervised decoding models. Representing human motion intention with such alternative parameters reduces, to some extent, the complexity of the intention decoding task and makes it easier to apply motion intention recognition schemes to practical physical platforms. However, when the end-to-end mapping is established by a decoding model and the mapping variables lack meaningful biological interpretations, the persuasiveness and interpretability of the already poorly interpretable decoding model are reduced further. Therefore, when considering non-biological alternative parameter schemes, it is important to strike a balance between control performance and the interpretability of human physiological mechanisms.

References

1. Hermens, H.J.; Freriks, B.; Disselhorst-Klug, C.; Rau, G. Development of recommendations for SEMG sensors and sensor placement procedures. J. Electromyogr. Kinesiol. 2000, 10, 361–374.

2. Drake, J.D.; Callaghan, J.P. Elimination of electrocardiogram contamination from electromyogram signals: An evaluation of currently used removal techniques. J. Electromyogr. Kinesiol. 2006, 16, 175–187.

3. Reaz, M.B.I.; Hussain, M.S.; Mohd-Yasin, F. Techniques of EMG signal analysis: Detection, processing, classification and applications. Biol. Proced. Online 2006, 8, 11–35.

4. Bengio, Y.; Courville, A.; Vincent, P. Representation learning: A review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1798–1828.

5. Oskoei, M.A.; Hu, H. Myoelectric control systems—A survey. Biomed. Signal Process. Control 2007, 2, 275–294.

6. Turner, A.; Shieff, D.; Dwivedi, A.; Liarokapis, M. Comparing machine learning methods and feature extraction techniques for the emg based decoding of human intention. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Guadalajara, Mexico, 1–5 November 2021; pp. 4738–4743.

7. Phinyomark, A.; Phukpattaranont, P.; Limsakul, C. Feature reduction and selection for EMG signal classification. Expert Syst. Appl. 2012, 39, 7420–7431.

8. Nasr, A.; Bell, S.; He, J.; Whittaker, R.L.; Jiang, N.; Dickerson, C.R.; McPhee, J. MuscleNET: Mapping electromyography to kinematic and dynamic biomechanical variables by machine learning. J. Neural Eng. 2021, 18, 0460d3.

9. Bi, L.; Guan, C. A review on EMG-based motor intention prediction of continuous human upper limb motion for human-robot collaboration. Biomed. Signal Process. Control 2019, 51, 113–127.

10. Shin, D.; Kim, J.; Koike, Y. A myokinetic arm model for estimating joint torque and stiffness from EMG signals during maintained posture. J. Neurophysiol. 2009, 101, 387–401.

11. He, Z.; Qin, Z.; Koike, Y. Continuous estimation of finger and wrist joint angles using a muscle synergy based musculoskeletal model. Appl. Sci. 2022, 12, 3772.

12. Zhao, Y.; Zhang, Z.; Li, Z.; Yang, Z.; Dehghani-Sanij, A.A.; Xie, S. An EMG-driven musculoskeletal model for estimating continuous wrist motion. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 3113–3120.

13. Kawase, T.; Sakurada, T.; Koike, Y.; Kansaku, K. A hybrid BMI-based exoskeleton for paresis: EMG control for assisting arm movements. J. Neural Eng. 2017, 14, 016015.

14. Yu, Y.; Chen, C.; Zhao, J.; Sheng, X.; Zhu, X. Surface electromyography image-driven torque estimation of multi-DoF wrist movements. IEEE Trans. Ind. Electron. 2021, 69, 795–804.

15. Yang, D.; Liu, H. An EMG-based deep learning approach for multi-DOF wrist movement decoding. IEEE Trans. Ind. Electron. 2021, 69, 7099–7108.

16. Ma, C.; Lin, C.; Samuel, O.W.; Guo, W.; Zhang, H.; Greenwald, S.; Xu, L.; Li, G. A bi-directional LSTM network for estimating continuous upper limb movement from surface electromyography. IEEE Robot. Autom. Lett. 2021, 6, 7217–7224.

17. Bao, T.; Zaidi, S.A.R.; Xie, S.; Yang, P.; Zhang, Z.Q. A CNN-LSTM hybrid model for wrist kinematics estimation using surface electromyography. IEEE Trans. Instrum. Meas. 2020, 70, 2503809.

18. Ma, C.; Lin, C.; Samuel, O.W.; Xu, L.; Li, G. Continuous estimation of upper limb joint angle from sEMG signals based on SCA-LSTM deep learning approach. Biomed. Signal Process. Control 2020, 61, 102024.

19. Buchanan, T.S.; Lloyd, D.G.; Manal, K.; Besier, T.F. Neuromusculoskeletal modeling: Estimation of muscle forces and joint moments and movements from measurements of neural command. J. Appl. Biomech. 2004, 20, 367–395.

20. Inkol, K.A.; Brown, C.; McNally, W.; Jansen, C.; McPhee, J. Muscle torque generators in multibody dynamic simulations of optimal sports performance. Multibody Syst. Dyn. 2020, 50, 435–452.

21. Winters, J.M. Hill-based muscle models: A systems engineering perspective. In Multiple Muscle Systems: Biomechanics and Movement Organization; Springer: Berlin/Heidelberg, Germany, 1990; pp. 69–93.

22. Crouch, D.L.; Huang, H. Lumped-parameter electromyogram-driven musculoskeletal hand model: A potential platform for real-time prosthesis control. J. Biomech. 2016, 49, 3901–3907.

23. Pan, L.; Crouch, D.L.; Huang, H. Comparing EMG-based human-machine interfaces for estimating continuous, coordinated movements. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 2145–2154.

24. Jiang, N.; Englehart, K.B.; Parker, P.A. Extracting simultaneous and proportional neural control information for multiple-DOF prostheses from the surface electromyographic signal. IEEE Trans. Biomed. Eng. 2008, 56, 1070–1080.

25. Ahsan, M.R.; Ibrahimy, M.I.; Khalifa, O.O. EMG signal classification for human computer interaction: A review. Eur. J. Sci. Res. 2009, 33, 480–501.

26. Xiong, D.; Zhang, D.; Zhao, X.; Zhao, Y. Deep learning for EMG-based human-machine interaction: A review. IEEE/CAA J. Autom. Sin. 2021, 8, 512–533.

27. Hinton, G.E.; Osindero, S.; Teh, Y.W. A fast learning algorithm for deep belief nets. Neural Comput. 2006, 18, 1527–1554.

28. LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551.

29. Jarque-Bou, N.J.; Sancho-Bru, J.L.; Vergara, M. A systematic review of emg applications for the characterization of forearm and hand muscle activity during activities of daily living: Results, challenges, and open issues. Sensors 2021, 21, 3035.

30. Sartori, M.; Lloyd, D.G.; Farina, D. Neural data-driven musculoskeletal modeling for personalized neurorehabilitation technologies. IEEE Trans. Biomed. Eng. 2016, 63, 879–893.

31. Todorov, E.; Ghahramani, Z. Analysis of the synergies underlying complex hand manipulation. In IEEE Engineering in Medicine and Biology Magazine; IEEE: New York, NY, USA, 2004; Volume 2, pp. 4637–4640.

32. Suzuki, M.; Shiller, D.M.; Gribble, P.L.; Ostry, D.J. Relationship between cocontraction, movement kinematics and phasic muscle activity in single-joint arm movement. Exp. Brain Res. 2001, 140, 171–181.

33. Jiang, N.; Vujaklija, I.; Rehbaum, H.; Graimann, B.; Farina, D. Is accurate mapping of EMG signals on kinematics needed for precise online myoelectric control? IEEE Trans. Neural Syst. Rehabil. Eng. 2013, 22, 549–558.