| Version | Summary | Created by | Modification | Content Size | Created at | Operation |
|---|---|---|---|---|---|---|
| 1 | | Anna Wróblewska | -- | 2035 | 2024-02-22 02:48:42 | |


Data processing in robotics is currently challenged by the difficulty of building effective multimodal and common representations. Tremendous volumes of raw data are available, and managing them intelligently is the core concept of multimodal learning, a new paradigm for data fusion.

1. Introduction

2. Types of Modalities

- Tabular data: observations are stored as rows and their features as columns;
- Graphs: observations are vertices and their features are in the form of edges between individual vertices;
- Signals: observations are files of appropriate extension (images: .jpeg, audio: .wav, etc.) and their features are the numerical data provided within files;
- Sequences: observations are in the form of characters/words/documents, where the type of character/word corresponds to features.
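The four types above can be sketched as plain Python containers. This is only an illustration: every name and value below (`tabular`, `edges`, `scan_001.jpeg`, and so on) is made up for the example and does not come from any dataset discussed in this entry.

```python
# Toy containers for the four modality types (all names and values are hypothetical).

# Tabular: each row is an observation, each column a feature.
tabular = [
    {"age": 71, "education_years": 12},
    {"age": 64, "education_years": 16},
]

# Graph: vertices are observations; features live on the edges between them.
vertices = {"user_1", "movie_a", "movie_b"}
edges = {
    ("user_1", "movie_a"): "liked",
    ("user_1", "movie_b"): "disliked",
}

# Signals: each file is an observation; its numerical content holds the features.
# Here the "file" is a 2x2 grid of grayscale pixel intensities.
signals = {"scan_001.jpeg": [[0, 255], [128, 64]]}

# Sequences: ordered tokens; the type of each token corresponds to a feature.
sequences = ["what does the sign say".split()]

print(len(tabular), len(vertices), len(signals), len(sequences[0]))
```

In practice each container would typically be replaced by a dedicated structure (a data frame, an adjacency list, an array, a token-id list), but the mapping from observations to features stays the same.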

| Modalities | Article | Overview |
|---|---|---|
| Images, time series, and tabular | [9] | Prediction of Alzheimer’s disease based on magnetic resonance imaging and positron emission tomography (images) that are performed multiple times on one patient within specified periods of time (time series). Patient demographics and genetic data are also taken into account (tabular). |
| Audio, video, and event streams | [10] | Behavioral analysis and emotion and stress prediction. Analyzed data consist of 45-min recordings of students during the final exam period. They are recorded with the use of cameras (video), thermal physiological measurements of the heart, breathing rates (event streams), and lapel microphones (audio). |
| Text and images | [11] | Question answering based on images containing some textual data. |
| Images, text, and graphs | | Outfit/movie recommender systems. Movies are recommended based on plot (text), poster (image), liked and disliked movies, and cast (graphs). Outfits are chosen based on product features in images and text descriptions. |

3. Multimodal Representation

- Smoothness: a transformation should preserve object similarities, expressed mathematically as 𝑥≈𝑦⇒𝑓(𝑥)≈𝑓(𝑦). For instance, the words “book” and “read” are expected to have similar embeddings;
- Manifolds: probability mass is concentrated within regions of lower dimensionality than the original space, e.g., we can expect the words “Poland”, “USA”, and “France” to have embeddings within a certain region, and the words “jump”, “ride”, and “run” in another distinct region;
- Natural clustering: categorical values could be assigned to observations within the same manifold, e.g., a region with the words “Poland”, “USA”, and “France” can be described as “countries”;
- Sparsity: given an observation, only a subset of its numerical representation features should be relevant. Otherwise, we end up with complicated embeddings whose highly correlated features may lead to numerous ambiguities.
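Two of the properties above, smoothness and sparsity, can be checked numerically on a toy example. The sketch below assumes cosine similarity as the notion of "≈" between embeddings, which is a common choice but only one option. The four-dimensional vectors are hand-picked for illustration and are not the output of any real model; `cosine_similarity` and `sparsity` are hypothetical helper names.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def sparsity(vector, tol=1e-9):
    """Fraction of entries whose magnitude is at most `tol`."""
    return sum(abs(x) <= tol for x in vector) / len(vector)


# Hypothetical embeddings, chosen by hand: related words share active dimensions.
embeddings = {
    "book": [0.9, 0.8, 0.0, 0.0],
    "read": [0.8, 0.9, 0.0, 0.0],
    "Poland": [0.0, 0.0, 0.9, 0.1],
    "France": [0.0, 0.0, 0.8, 0.2],
}

# Smoothness: similar objects should map to similar embeddings.
print(cosine_similarity(embeddings["book"], embeddings["read"]))
# Unrelated objects should end up in a different region.
print(cosine_similarity(embeddings["book"], embeddings["Poland"]))
# Sparsity: only a subset of the features is active for a given observation.
print(sparsity(embeddings["book"]))
```

In this toy setup the "book"/"read" pair and the "Poland"/"France" pair occupy disjoint sets of dimensions, which also mimics the manifold and natural-clustering properties in a very crude way.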

4. Multimodal Data Fusion

- Intermodality: the combination of several modalities, which leads to better and more robust model predictions [2];
- Cross-modality: this aspect assumes inseparable interactions between modalities. No reasonable conclusions can be drawn from the data unless all their modalities are joined [19];
- Missing data: in some cases, particular modalities might not be available. An ideal multimodal data fusion algorithm is robust to missing modalities and uses the remaining ones to compensate for the information loss, a problem that is widespread in recommender systems.
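The missing-data aspect can be illustrated with a very simple fusion rule: average the embeddings of whichever modalities are present. This is a minimal sketch of one possible strategy, not the method of any work cited here. The function name `fuse`, the use of `None` to mark a missing modality, and the two-dimensional vectors are all assumptions made for the example.

```python
def fuse(modalities):
    """Average the embeddings of the modalities that are present.

    `modalities` maps a modality name to its embedding (a list of floats),
    or to None when that modality is missing for the observation.
    """
    available = [vec for vec in modalities.values() if vec is not None]
    if not available:
        raise ValueError("at least one modality must be available")
    dim = len(available[0])
    if any(len(vec) != dim for vec in available):
        raise ValueError("all embeddings must share the same dimension")
    # Element-wise mean over the available modalities only.
    return [sum(vec[i] for vec in available) / len(available) for i in range(dim)]


# Both modalities present: the fused vector mixes them.
full = fuse({"text": [1.0, 0.0], "image": [0.0, 1.0]})

# Image missing: the fused vector falls back to the text embedding alone.
partial = fuse({"text": [1.0, 0.0], "image": None})

print(full, partial)
```

Averaging keeps the output dimension fixed regardless of how many modalities are available, so a downstream model can consume it unchanged. It cannot capture cross-modal interactions, however, which is why learned fusion is usually preferred when all modalities must be joined to draw conclusions.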

4.1. Deep Learning Models

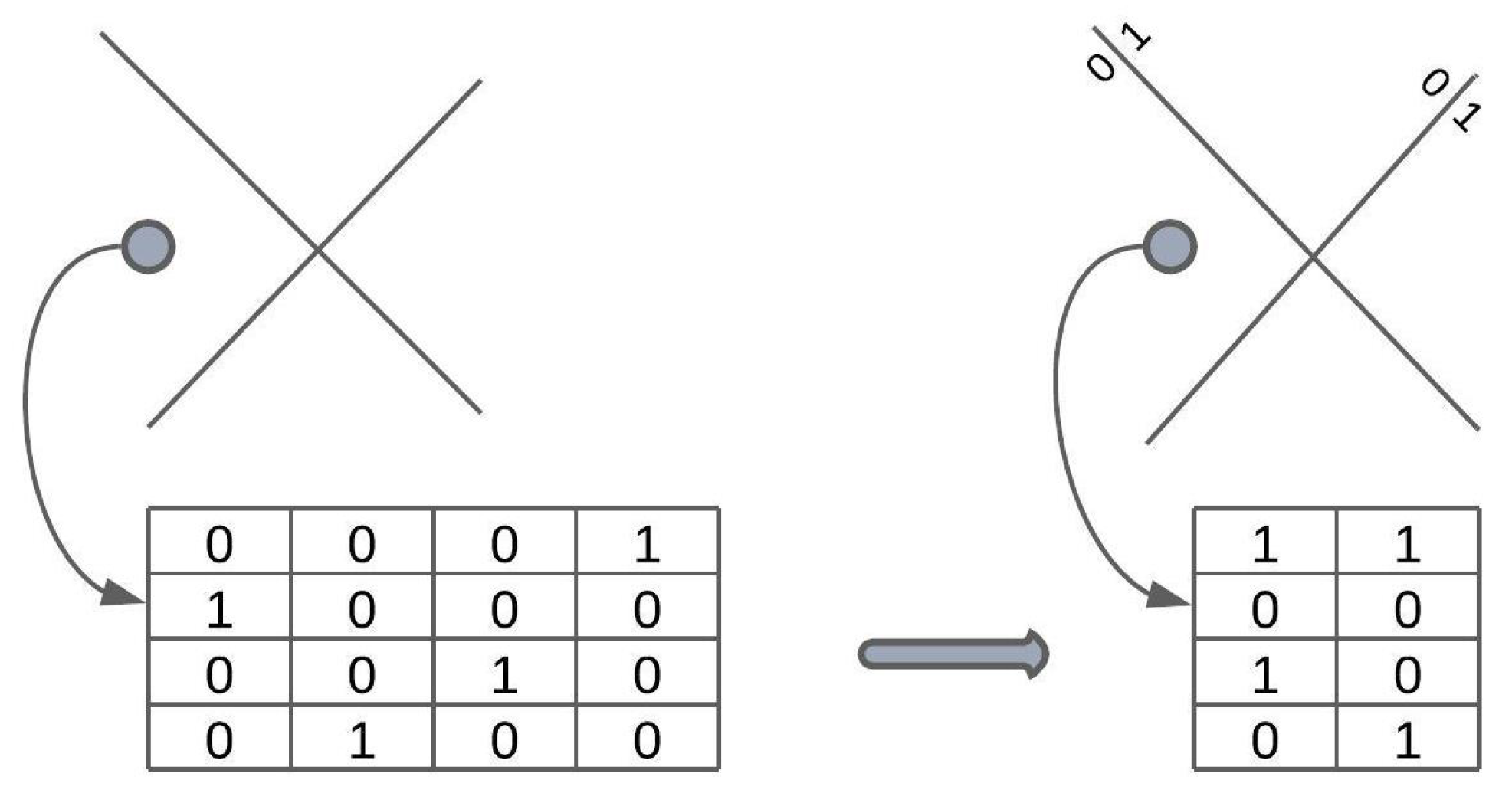

4.2. Hashing Ideas

4.3. Sketch Representation

5. Multimodal Model Evaluation

References

1. Yuhas, B.; Goldstein, M.; Sejnowski, T. Integration of acoustic and visual speech signals using neural networks. IEEE Commun. Mag. 1989, 27, 65–71.
2. Baltrusaitis, T.; Ahuja, C.; Morency, L.P. Multimodal Machine Learning: A Survey and Taxonomy. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 423–443.
3. Cao, W.; Feng, W.; Lin, Q.; Cao, G.; He, Z. A Review of Hashing Methods for Multimodal Retrieval. IEEE Access 2020, 8, 15377–15391.
4. Gao, J.; Li, P.; Chen, Z.; Zhang, J. A Survey on Deep Learning for Multimodal Data Fusion. Neural Comput. 2020, 32, 829–864.
5. Gandhi, A.; Adhvaryu, K.; Poria, S.; Cambria, E.; Hussain, A. Multimodal sentiment analysis: A systematic review of history, datasets, multimodal fusion methods, applications, challenges and future directions. Inf. Fusion 2023, 91, 424–444.
6. Tsanousa, A.; Bektsis, E.; Kyriakopoulos, C.; González, A.G.; Leturiondo, U.; Gialampoukidis, I.; Karakostas, A.; Vrochidis, S.; Kompatsiaris, I. A Review of Multisensor Data Fusion Solutions in Smart Manufacturing: Systems and Trends. Sensors 2022, 22, 1734.
7. Varshney, K. Trust in Machine Learning, Manning Publications, Shelter Island, Chapter 4 Data Sources and Biases, Section 4.1 Modalities. Available online: https://livebook.manning.com/book/trust-in-machine-learning/chapter-4/v-2/ (accessed on 23 March 2021).
8. Zhang, Y.; Sidibé, D.; Morel, O.; Mériaudeau, F. Deep multimodal fusion for semantic image segmentation: A survey. Image Vis. Comput. 2021, 105, 104042.
9. El-Sappagh, S.; Abuhmed, T.; Riazul Islam, S.; Kwak, K.S. Multimodal multitask deep learning model for Alzheimer’s disease progression detection based on time series data. Neurocomputing 2020, 412, 197–215.
10. Jaiswal, M.; Bara, C.P.; Luo, Y.; Burzo, M.; Mihalcea, R.; Provost, E.M. MuSE: A Multimodal Dataset of Stressed Emotion. In Proceedings of the Twelfth Language Resources and Evaluation Conference, Marseille, France, 11–16 May 2020; European Language Resources Association: Paris, France, 2020; pp. 1499–1510.
11. Singh, A.; Natarajan, V.; Shah, M.; Jiang, Y.; Chen, X.; Batra, D.; Parikh, D.; Rohrbach, M. Towards VQA Models That Can Read. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 8309–8318.
12. Rychalska, B.; Basaj, D.B.; Dabrowski, J.; Daniluk, M. I know why you like this movie: Interpretable Efficient Multimodal Recommender. arXiv 2020, arXiv:2006.09979.
13. Laenen, K.; Moens, M.F. A Comparative Study of Outfit Recommendation Methods with a Focus on Attention-based Fusion. Inf. Process. Manag. 2020, 57, 102316.
14. Salah, A.; Truong, Q.T.; Lauw, H.W. Cornac: A Comparative Framework for Multimodal Recommender Systems. J. Mach. Learn. Res. 2020, 21, 1–5.
15. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the NAACL, Minneapolis, MN, USA, 2–7 June 2019; Association for Computational Linguistics: Toronto, AB, Canada, 2019; pp. 4171–4186.
16. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 770–778.
17. Bengio, Y.; Courville, A.; Vincent, P. Representation Learning: A Review and New Perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1798–1828.
18. Srivastava, N.; Salakhutdinov, R. Multimodal learning with deep Boltzmann machines. J. Mach. Learn. Res. 2014, 15, 2949–2980.
19. Frank, S.; Bugliarello, E.; Elliott, D. Vision-and-Language or Vision-for-Language? On Cross-Modal Influence in Multimodal Transformers. arXiv 2021, arXiv:2109.04448.
20. Gallo, I.; Calefati, A.; Nawaz, S. Multimodal Classification Fusion in Real-World Scenarios. In Proceedings of the 2017 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), Kyoto, Japan, 9–15 November 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 36–41.
21. Kiela, D.; Grave, E.; Joulin, A.; Mikolov, T. Efficient Large-Scale Multi-Modal Classification. arXiv 2018, arXiv:1802.02892.
22. Bayoudh, K.; Knani, R.; Hamdaoui, F.; Mtibaa, A. A survey on deep multimodal learning for computer vision: Advances, trends, applications, and datasets. Vis. Comput. 2022, 38, 2939–2970.
23. Dabrowski, J.; Rychalska, B.; Daniluk, M.; Basaj, D.; Goluchowski, K.; Babel, P.; Michalowski, A.; Jakubowski, A. An efficient manifold density estimator for all recommendation systems. arXiv 2020, arXiv:2006.01894.