Zhang, X.; Song, Z.; Huang, Q.; Pan, Z.; Li, W.; Gong, R.; Zhao, B. Autonomous Wheelchair Navigation. Encyclopedia. Available online: https://encyclopedia.pub/entry/54051 (accessed on 07 February 2026).


Zhang, X., Song, Z., Huang, Q., Pan, Z., Li, W., Gong, R., & Zhao, B. (2024, January 18). Autonomous Wheelchair Navigation. In Encyclopedia. https://encyclopedia.pub/entry/54051


As automated driving system (ADS) technology is adopted in wheelchairs, clarity on the vehicle’s imminent path becomes essential for both users and pedestrians. For users, understanding the imminent path helps mitigate anxiety and facilitates real-time adjustments. For pedestrians, it helps them plan their own next move when they are near the wheelchair.

Keywords: autonomous wheelchairs; shared eHMI; human in the loop

1. Introduction

Wheelchairs are essential for people with mobility impairments, supporting their health and independence [1]. However, operating a wheelchair can be challenging for the elderly and those with upper limb injuries. Moreover, wheelchair users have a higher risk of upper limb injuries because of the repeated use of their arms [2]. Additionally, the joystick controllers of electric wheelchairs are difficult to operate for users with hand impairments [3]. If autonomous driving functionality is applied to electric wheelchairs, users could move to their desired destinations without the need to manually operate the wheelchair [2]. This would reduce their travel burden and enhance their independence in mobility.

The emergence of automated driving system (ADS) technology marks a significant shift in intelligent autonomous mobility, especially for personal devices such as electric wheelchairs [2][4][5]. Autonomous driving technology enhances the convenience and independence of wheelchair users; however, it also introduces new challenges for the human–machine interface [6]. In contrast to users of conventional manual joysticks, users of autonomous wheelchairs often feel apprehensive about machine-dictated trajectories, because the underlying decision-making algorithm can appear opaque [7][8]. Addressing this requires transparency, which refers to “the degree of shared intent and shared awareness between a human and a machine” [9]. Therefore, to achieve such transparency, the automated driving system needs to convey its capabilities, motion intentions, and anticipated actions to the user.

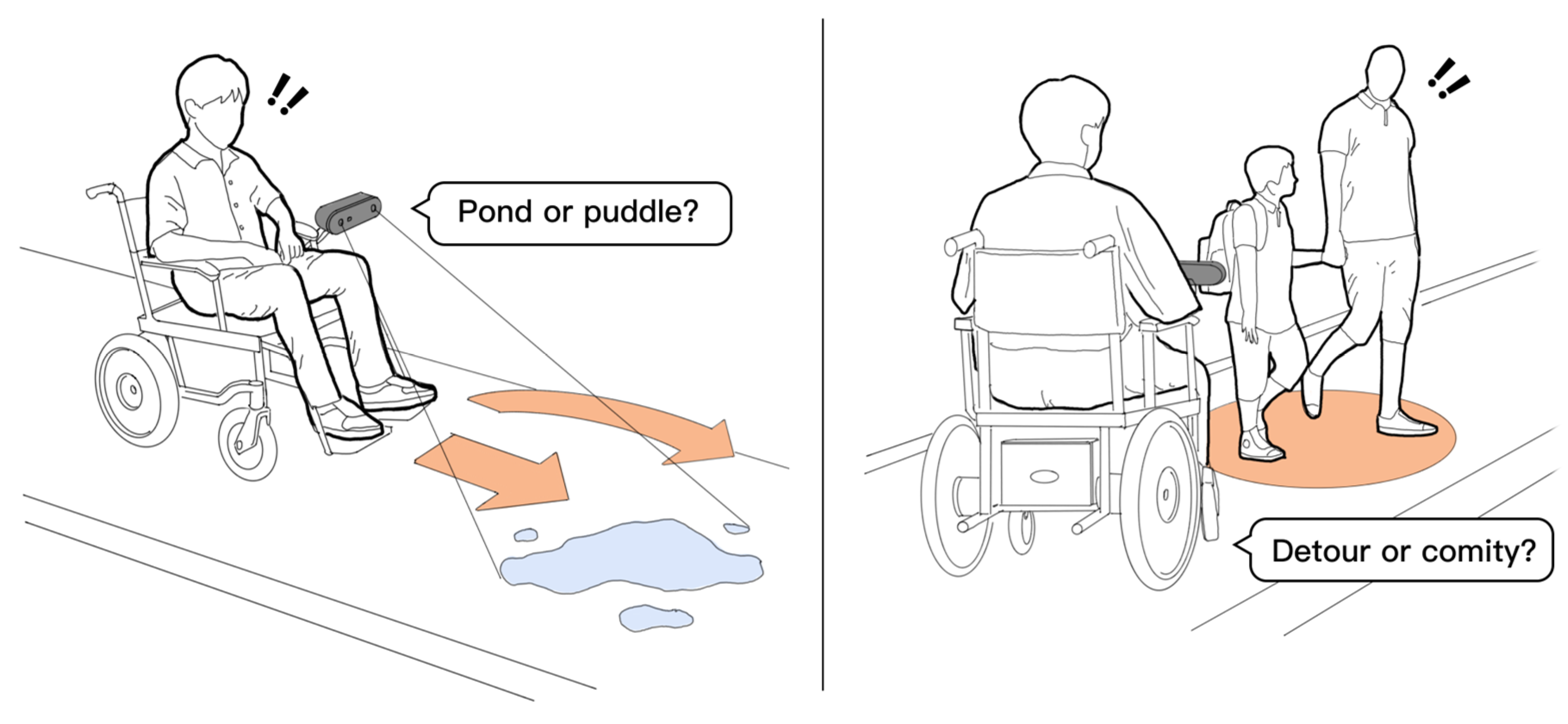

Notably, the terrains navigated by wheelchairs tend to be more complex than conventional vehicular roads [7][10]. The human visual system excels at interpreting these terrain nuances and often outperforms standard machine sensors. Combined with the need for real-time path decisions, such complexity occasionally requires human intervention, either through minor adjustments to the AI-planned route or by taking back control. As shown in Figure 1, although ADS technology performs well in many scenarios, it still faces inherent challenges in intricate road conditions and social interactions. This places a significant cognitive burden on users, who must comprehend the system’s anticipated behaviors. It also shows how transparent communication helps users make decisions and intervene in the actions of the autonomous driving system. Moreover, pedestrians need to be able to predict the autonomous wheelchair’s anticipated actions so that they can adjust their own behavior and avoid potential mishaps [11][12].

Figure 1. The limitations of autonomous driving sensors in discerning terrain nuances and social scenarios require user intervention for accurate distinction.

Thus, further study and exploration are required on how to effectively visualize the driving status and intentions of autonomous wheelchairs. This is crucial for enhancing the understanding of wheelchair users and other road users about the behaviors of autonomous wheelchairs and thus promoting transparent communication. Currently, there is a gap in the design and research specifically aimed at the visualization of driving status and intentions for autonomous wheelchairs. Additionally, there is a need to evaluate the impact of this human–machine interaction method on the attitudes and acceptance levels of both wheelchair users and other road users.

2. eHMI and Projection Interaction of Autonomous Vehicles

External human–machine interaction (eHMI) arises as a solution for the vehicle to transmit information to potentially dangerous agents in the vicinity, representing a newly developing direction of autonomous driving [13]. eHMI encompasses the interaction design between autonomous vehicles (AVs), pedestrians, and other road users, primarily involving the communication of the vehicle’s status, intentions, and interaction modalities with pedestrians [6].

As ADS technology advances, the clarity and comprehensibility of such interactions become pivotal for road safety. Papakostopoulos et al. conducted an experiment and reported that eHMI has a positive effect on other drivers’ ability to infer the AV’s motion intentions [14]. In addition, the congruence of eHMI with vehicle kinematics has been shown to be crucial. Rettenmaier et al. found that when eHMI signals matched vehicle movements, drivers experienced fewer crashes and passed more quickly than without eHMI [15]. Faas et al.’s study demonstrated that any eHMI contributes to a more positive feeling towards AVs compared to the baseline condition without eHMI [16]. These findings indicate that eHMI facilitates effective communication, reducing the uncertainty in intention estimation and thereby helping to prevent accidents.

The design of eHMI can take many forms and modalities. External interaction screens, matrix lights, and projection interfaces are widely used in eHMI design due to their versatile interaction capabilities with diverse road users [17]. Among these, common visual communication modalities encompass emulation of human driver communication cues, textual interfaces, graphical interfaces, lighting interfaces, and projection interfaces [18][19][20][21]. Dou et al. evaluated the multimodal eHMI of AVs in virtual reality. Participants, acting as pedestrians, reported an increased sense of safety and showed a preference for the visual features of arrows [22], offering insights for future design. Dietrich et al. explored the use of projection-based methods in virtual environments to facilitate interactions between AVs and pedestrians [23]. Additionally, on-market vehicles have experimented with headlight projections to convey information. These examples highlight the use of projection as a visual form of eHMI and suggest further potential for exploration in this area.

On lightweight vehicles such as wheelchairs and electric bicycles, eHMI in the form of on-ground projections is more reasonable. Utilizing on-ground projections, information can be directly exhibited on the roadway, offering explicit motion intentions or navigation paths. This approach of integrating interfaces with the environment through augmented reality offers communication that is intuitive and visibly clear, unaffected by the movement of vehicles [21][24]. Notably, augmented reality technology and multimodal interfaces are now considered effective means for ensuring smooth and comfortable interactions [25][26]. This provides insights for the exploration of more complex and engaging interfaces, especially through the method of on-ground projections.
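To make the idea of projecting a navigation path onto the ground more concrete, the following minimal sketch computes the points that such a projection might draw. It is an illustration only, not a method taken from the cited studies: the function name `preview_path`, the constant-velocity assumption, and the 3-second horizon are all hypothetical choices.

```python
import math
from typing import List, Tuple


def preview_path(
    linear: float,
    angular: float,
    horizon_s: float = 3.0,
    steps: int = 6,
) -> List[Tuple[float, float]]:
    """Predict (x, y) points ahead of the chair, in metres, in the chair's own frame.

    x points forward and y to the left. Assumes the forward speed (m/s) and
    turn rate (rad/s) stay constant over the horizon, which is a simplification.
    """
    points = []
    for i in range(1, steps + 1):
        t = horizon_s * i / steps
        if abs(angular) < 1e-6:
            # Driving straight: the chair simply advances along x.
            points.append((linear * t, 0.0))
        else:
            # Constant turn rate: the chair follows a circular arc.
            radius = linear / angular
            heading = angular * t
            points.append(
                (radius * math.sin(heading), radius * (1.0 - math.cos(heading)))
            )
    return points


# Hypothetical usage: a gentle left turn at walking pace.
curve = preview_path(linear=0.5, angular=0.3)
straight = preview_path(linear=1.0, angular=0.0, horizon_s=2.0, steps=2)
```

A real system would also need to map these points into the projector's image coordinates and account for slopes and obstacles, which this sketch leaves out.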

eHMI also has value in terms of social attributes and interaction design. According to the research of De Clercq et al. and Colley et al. [27][28], eHMI in the form of on-ground projections, particularly projections that convey autonomous driving functions such as parking and alerting pedestrians, is accepted by the majority of individuals. The experiment by Wang et al. [29] showed that this form of eHMI is safer, more helpful, and better at maintaining users’ attention on the route.

Interestingly, for wheelchairs with on-ground projection-based eHMIs, this system serves not only as an external interaction tool but also plays a pivotal role in the user–wheelchair interaction due to the visibility of the projection in public spaces. Wheelchair users can observe the system’s status through the projections and also interact with it, modifying its operational parameters, thus using it as a user interface for the wheelchairs. In the context of autonomous driving, ensuring the safety of autonomous wheelchairs on the road requires further exploration of their user and external interactions. There is also a need to develop specific and universally accepted UI system designs.

3. Shared-Control Wheelchair

Similar to the classification of autonomous driving, smart wheelchairs can be categorized into fully-autonomous and semi-autonomous control modes based on the degree of allocation of driving rights [30][31]. Different autonomous driving levels have distinct control strategies, influencing user interaction and eHMI designs; this impacts the communication of wheelchair information to users, user control mechanisms, and the information shared with other road users.

In a fully-autonomous control mode, there are three possible scenarios for allocating the driving rights of a wheelchair. Firstly, the driving right is completely handed over to the autonomous system: users simply indicate their destination, and the wheelchair navigates there without collisions [32]. Secondly, external collaborative control allows experienced individuals to control the wheelchair remotely or directly. Thirdly, the driving rights may be held by a centralized upper-level system, which uniformly allocates and manages the control of multiple wheelchairs [33][34]. This approach facilitates large-scale management and is well suited to settings such as hospitals and nursing homes that need centralized wheelchair oversight. However, fully-autonomous wheelchairs have been found to overlook user capabilities and intentions, whereas users of semi-autonomous wheelchairs tend to favor maintaining control during operation and high-level decision-making processes [35].

The shared-control wheelchair employs a semi-autonomous control mode in which the user and the autonomous system collaboratively determine the final motion of the wheelchair, aiming for a safer, more efficient, and more comfortable driving experience [36][37]. The shared-control wheelchair transfers part of the driving rights to the autonomous system. When the autonomous system is unable to reach a decision or the user has a particular preference, the driving rights are transferred back to the user for specific operations. Wang et al. discussed the human driver model and interaction strategy in driver–automation shared control; such strategies protect the user experience when the technology is limited [38]. Xi et al. [39] proposed a reinforcement learning-based control method that adjusts the control weights between the user and the wheelchair so that the user’s operational capabilities are fully utilized. A high degree of automation makes the wheelchair suitable for users who are unable to perform manual operations, such as those with high paraplegia or cognitive impairment.
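The notion of a control weight between user and wheelchair can be illustrated with a simple linear blend of two velocity commands. The sketch below is a simplification under stated assumptions: the weight is fixed by hand rather than learned, so it does not reproduce the reinforcement learning method of Xi et al., and the names `Command` and `blend` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    """A velocity command: forward speed (m/s) and turn rate (rad/s)."""

    linear: float
    angular: float


def blend(user: Command, planner: Command, user_weight: float) -> Command:
    """Linearly blend two commands; user_weight is clamped to [0, 1].

    A weight of 1 gives the user full control, 0 gives the planner full control.
    """
    w = min(1.0, max(0.0, user_weight))
    return Command(
        linear=w * user.linear + (1.0 - w) * planner.linear,
        angular=w * user.angular + (1.0 - w) * planner.angular,
    )


# Hypothetical situation: the user pushes straight ahead while the planner
# slows down and steers around an obstacle.
user_cmd = Command(linear=0.8, angular=0.0)
planner_cmd = Command(linear=0.4, angular=0.5)

# With a user weight of 0.25 the result sits closer to the planner's command:
# approximately 0.5 m/s forward and 0.375 rad/s of turn.
mixed = blend(user_cmd, planner_cmd, user_weight=0.25)
```

In an adaptive scheme, `user_weight` would be updated over time from the user's measured ability or the driving context instead of being a constant.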

Shared control encompasses two primary strategies. In the first, known as hierarchical shared control, users dictate high-level actions while the wheelchair autonomously handles collision avoidance [40]. The second strategy merges inputs from both the user and the motion planner, moving the wheelchair only when commands from both are present, thereby enhancing user control and collaboration [31][37][41][42]. Guided by these strategies, a more suitable form of human–machine collaboration for shared-control wheelchairs lets the user retain higher-level decision-making while the automated system handles simple driving tasks. The wheelchair moves autonomously only when the user activates the autonomous mode. It then handles basic navigation by planning and selecting optimal routes to user-specified destinations. In autonomous mode, users can influence driving settings and motion planning through high-level directives, such as adding temporary waypoints, yielding to pedestrians, or adjusting speed. Likewise, users have the authority to pause autonomous control to make more detailed adjustments to the wheelchair’s behavior. These control methods balance automation against user preferences in human–machine interaction, optimizing safety and user experience. Driving information is communicated to both users and pedestrians through internal and external interfaces, providing the clear and effective feedback on which the success of shared-control systems depends [43][44].
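The second strategy, in which the wheelchair moves only when both the user and the motion planner issue a command, together with the user's ability to pause autonomous control, can be sketched as a small gating function. This is an illustrative reading of the description above, not an implementation from the cited works: `gated_command`, the `paused` flag, and the equal-weight merge are all assumptions.

```python
from typing import Optional, Tuple

Velocity = Tuple[float, float]  # (forward speed in m/s, turn rate in rad/s)
STOP: Velocity = (0.0, 0.0)


def gated_command(
    user_cmd: Optional[Velocity],
    planner_cmd: Optional[Velocity],
    paused: bool = False,
) -> Velocity:
    """Return a motion command only when user and planner both supply one.

    If either input is missing, or the user has paused autonomous control,
    the wheelchair is told to stop.
    """
    if paused or user_cmd is None or planner_cmd is None:
        return STOP
    # Equal-weight averaging is an illustrative merge rule, not a recommendation.
    return (
        (user_cmd[0] + planner_cmd[0]) / 2.0,
        (user_cmd[1] + planner_cmd[1]) / 2.0,
    )


# Hypothetical usage.
moving = gated_command((0.6, 0.0), (0.4, 0.2))                   # both inputs present
no_user = gated_command(None, (0.4, 0.2))                        # user released the control
halted = gated_command((0.6, 0.0), (0.4, 0.2), paused=True)      # user paused autonomy
```

Stopping whenever an input is missing is one conservative design choice; a deployed system might instead decelerate smoothly or hand control fully to the user.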

4. Pedestrians’ Concerns or Opinions about the Uncertainty of Autonomous Vehicles

The majority of previous research has focused on user acceptance of automated vehicles (AVs). Kaye et al. examined the receptiveness of pedestrians to AVs, partially filling the gap in research on pedestrian acceptance of AVs [45]. Pedestrian behavior is challenging to predict because it is dynamic, pedestrians receive no formal training, and they tend to disobey rules in certain situations [13]. Pedestrians may feel unsafe interacting with autonomous personal mobility vehicles (APMVs) when uncertain about their driving intentions. A lack of clarity regarding the APMV’s intentions can lead to hesitation or even feelings of danger among pedestrians [46]. Pedestrians’ understanding of driving intentions is mediated by trust in human–machine interactions [47]. AVs are capable of reliably detecting pedestrians, but it is challenging for both AVs and pedestrians to predict each other’s intentions.

Epke et al. examined the effects of using explicit hand gestures and receptive eHMI in the interaction between pedestrians and AVs. The eHMI was shown to help participants predict the AV’s behaviors [48]. In exploring interactions between pedestrians and autonomous wheelchairs, both Watanabe et al. and Shrestha et al. found that explicitly conveying the wheelchair’s movement intentions, through methods such as ground-projected light paths or red projected arrows, notably enhanced the smoothness of interactions and increased pedestrians’ cooperation [49][50]. This underscores the significance of transparently communicating driving intentions in alleviating pedestrians’ concerns regarding the uncertainty of autonomous vehicles. However, interactions and scenarios between autonomous wheelchairs and pedestrians, such as yielding behaviors of the wheelchair, have not been extensively studied. Moreover, a systematic exploration of eHMI for autonomous wheelchairs is needed, along with research into pedestrian acceptance of these systems.

5. The Sociology of Wheelchair eHMI and the Image of Wheelchair Users

With the gradual development and popularization of the shared-control wheelchair, ADS technology will face more challenges and new design opportunities. In this context, eHMI holds potential to enhance public trust in such technology [13]. eHMI not only offers a clear interactive interface, but also helps pedestrians and other road users understand the intentions and behaviors of vehicles [51]. This can reduce anxiety and concerns about the transfer of control to machines or others within the context of shared control between drivers and the autonomous system. Because it is interactive in real time, eHMI could become an interface for communicating with society, enhancing societal trust and helping vehicle users convey their emotions and intentions.

Wheelchairs, as vital empowering tools, also represent a continual visible sign of disability, attracting unwanted attention and potentially leading to social stigma [52]. Wheelchair users, sensitive to this, often prefer visual over auditory communication in their daily interactions to avoid such issues [53]. As a vulnerable group, wheelchair users require enhanced driving support and comfortable, engaging external interactions for a valued and self-acknowledged image in society. The experience of users with on-ground projection interfaces is worth exploring to investigate how eHMI can enhance societal understanding and trust in autonomous wheelchairs, fostering more empathetic and friendly connections between users and society.

References

- Sivakanthan, S.; Candiotti, J.L.; Sundaram, S.A.; Duvall, J.A.; Sergeant, J.J.G.; Cooper, R.; Satpute, S.; Turner, R.L.; Cooper, R.A. Mini-Review: Robotic Wheelchair Taxonomy and Readiness. Neurosci. Lett. 2022, 772, 136482.

- Ryu, H.-Y.; Kwon, J.-S.; Lim, J.-H.; Kim, A.-H.; Baek, S.-J.; Kim, J.-W. Development of an Autonomous Driving Smart Wheelchair for the Physically Weak. Appl. Sci. 2021, 12, 377.

- Megalingam, R.K.; Rajendraprasad, A.; Raj, A.; Raghavan, D.; Teja, C.R.; Sreekanth, S.; Sankaran, R. Self-E: A Self-Driving Wheelchair for Elders and Physically Challenged. Int. J. Intell. Robot. Appl. 2021, 5, 477–493.

- Grewal, H.; Matthews, A.; Tea, R.; George, K. LIDAR-Based Autonomous Wheelchair. In Proceedings of the 2017 IEEE Sensors Applications Symposium (SAS), Glassboro, NJ, USA, 13–15 March 2017; pp. 1–6.

- Alkhatib, R.; Swaidan, A.; Marzouk, J.; Sabbah, M.; Berjaoui, S.; Diab, M.O. Smart Autonomous Wheelchair. In Proceedings of the 2019 3rd International Conference on Bio-engineering for Smart Technologies (BioSMART), Paris, France, 24–26 April 2019; pp. 1–5.

- Lim, D.; Kim, B. UI Design of eHMI of Autonomous Vehicles. Int. J. Hum. Comput. Interact. 2022, 38, 1944–1961.

- Jang, J.; Li, Y.; Carrington, P. “I Should Feel Like I’m In Control”: Understanding Expectations, Concerns, and Motivations for the Use of Autonomous Navigation on Wheelchairs. In Proceedings of the 24th International ACM SIGACCESS Conference on Computers and Accessibility, Athens, Greece, 23 October 2022; pp. 1–5.

- Li, J.; He, Y.; Yin, S.; Liu, L. Effects of Automation Transparency on Trust: Evaluating HMI in the Context of Fully Autonomous Driving. In Proceedings of the 15th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Ingolstadt, Germany, 18 September 2023; pp. 311–321.

- Lyons, J.B.; Havig, P.R. Transparency in a Human-Machine Context: Approaches for Fostering Shared Awareness/Intent. In Virtual, Augmented and Mixed Reality: Designing and Developing Virtual and Augmented Environments—Proceedings of the 6th International Conference, VAMR 2014, Heraklion, Greece, 22–27 June 2014; Shumaker, R., Lackey, S., Eds.; Springer: Cham, Switzerland, 2014; pp. 181–190.

- Utaminingrum, F.; Mayena, S.; Karim, C.; Wahyudi, S.; Huda, F.A.; Lin, C.-Y.; Shih, T.K.; Thaipisutikul, T. Road Surface Detection for Autonomous Smart Wheelchair. In Proceedings of the 2022 5th World Symposium on Communication Engineering (WSCE), Nagoya, Japan, 16 September 2022; pp. 69–73.

- Zang, G.; Azouigui, S.; Saudrais, S.; Hebert, M.; Goncalves, W. Evaluating the Understandability of Light Patterns and Pictograms for Autonomous Vehicle-to-Pedestrian Communication Functions. IEEE Trans. Intell. Transport. Syst. 2022, 23, 18668–18680.

- Wu, C.F.; Xu, D.D.; Lu, S.H.; Chen, W.C. Effect of Signal Design of Autonomous Vehicle Intention Presentation on Pedestrians’ Cognition. Behav. Sci. 2022, 12, 502.

- Carmona, J.; Guindel, C.; Garcia, F.; de la Escalera, A. eHMI: Review and Guidelines for Deployment on Autonomous Vehicles. Sensors 2021, 21, 2912.

- Papakostopoulos, V.; Nathanael, D.; Portouli, E.; Amditis, A. Effect of External HMI for Automated Vehicles (AVs) on Drivers’ Ability to Infer the AV Motion Intention: A Field Experiment. Transp. Res. Part F Traffic Psychol. Behav. 2021, 82, 32–42.

- Rettenmaier, M.; Albers, D.; Bengler, K. After You?!—Use of External Human-Machine Interfaces in Road Bottleneck Scenarios. Transp. Res. Part F Traffic Psychol. Behav. 2020, 70, 175–190.

- Faas, S.M.; Mathis, L.-A.; Baumann, M. External HMI for Self-Driving Vehicles: Which Information Shall Be Displayed? Transp. Res. Part F Traffic Psychol. Behav. 2020, 68, 171–186.

- Jiang, Q.; Zhuang, X.; Ma, G. Evaluation of external HMI in autonomous vehicles based on pedestrian road crossing decision-making model. Adv. Psychol. Sci. 2021, 29, 1979–1992.

- Eisma, Y.B.; Van Bergen, S.; Ter Brake, S.M.; Hensen, M.T.T.; Tempelaar, W.J.; De Winter, J.C.F. External Human–Machine Interfaces: The Effect of Display Location on Crossing Intentions and Eye Movements. Information 2019, 11, 13.

- Othersen, I.; Conti-Kufner, A.S.; Dietrich, A.; Maruhn, P.; Bengler, K. Designing for Automated Vehicle and Pedestrian Communication: Perspectives on eHMIs from Older and Younger Persons. In Proceedings of the HFES Europe Annual Meeting, Berlin, Germany, 3–8 October 2018.

- Bazilinskyy, P.; Dodou, D.; De Winter, J. Survey on eHMI Concepts: The Effect of Text, Color, and Perspective. Transp. Res. Part F Traffic Psychol. Behav. 2019, 67, 175–194.

- Löcken, A.; Golling, C.; Riener, A. How Should Automated Vehicles Interact with Pedestrians? A Comparative Analysis of Interaction Concepts in Virtual Reality. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Utrecht, The Netherlands, 21 September 2019; pp. 262–274.

- Dou, J.; Chen, S.; Tang, Z.; Xu, C.; Xue, C. Evaluation of Multimodal External Human–Machine Interface for Driverless Vehicles in Virtual Reality. Symmetry 2021, 13, 687.

- Dietrich, A.; Willrodt, J.-H.; Wagner, K.; Bengler, K. Projection-Based External Human Machine Interfaces—Enabling Interaction between Automated Vehicles and Pedestrians. In Proceedings of the Driving Simulation Conference 2018 Europe VR, Driving Simulation Association, Antibes, France, 5 September 2018; pp. 43–50.

- Tabone, W.; Lee, Y.M.; Merat, N.; Happee, R.; de Winter, J. Towards Future Pedestrian-Vehicle Interactions: Introducing Theoretically-Supported AR Prototypes. In Proceedings of the 13th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Association for Computing Machinery, New York, NY, USA, 20 September 2021; pp. 209–218.

- Zolotas, M.; Demiris, Y. Towards Explainable Shared Control Using Augmented Reality. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 4–8 November 2019; pp. 3020–3026.

- Zolotas, M.; Elsdon, J.; Demiris, Y. Head-Mounted Augmented Reality for Explainable Robotic Wheelchair Assistance. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1823–1829.

- De Clercq, K.; Dietrich, A.; Núñez Velasco, J.P.; De Winter, J.; Happee, R. External Human-Machine Interfaces on Automated Vehicles: Effects on Pedestrian Crossing Decisions. Hum. Factors 2019, 61, 1353–1370.

- Colley, A.; Häkkilä, J.; Forsman, M.-T.; Pfleging, B.; Alt, F. Car Exterior Surface Displays: Exploration in a Real-World Context. In Proceedings of the 7th ACM International Symposium on Pervasive Displays, Munich, Germany, 6 June 2018; pp. 1–8.

- Wang, B.J.; Yang, C.H.; Gu, Z.Y. Smart Flashlight: Navigation Support for Cyclists. In Design, User Experience, and Usability: Users, Contexts and Case Studies—Proceedings of the 7th International Conference, DUXU 2018, Las Vegas, NV, USA, 15–20 July 2018; Marcus, A., Wang, W., Eds.; Springer: Cham, Switzerland, 2018; pp. 406–414.

- Kim, B.; Pineau, J. Socially Adaptive Path Planning in Human Environments Using Inverse Reinforcement Learning. Int. J. Soc. Robot. 2016, 8, 51–66.

- Ezeh, C.; Trautman, P.; Devigne, L.; Bureau, V.; Babel, M.; Carlson, T. Probabilistic vs Linear Blending Approaches to Shared Control for Wheelchair Driving. In Proceedings of the 2017 International Conference on Rehabilitation Robotics (ICORR), London, UK, 17–20 July 2017; pp. 835–840.

- Simpson, R.; LoPresti, E.; Hayashi, S.; Nourbakhsh, I.; Miller, D. The Smart Wheelchair Component System. J. Rehabil. Res. Dev. 2004, 41, 429–442.

- Baltazar, A.R.; Petry, M.R.; Silva, M.F.; Moreira, A.P. Autonomous Wheelchair for Patient’s Transportation on Healthcare Institutions. SN Appl. Sci. 2021, 3, 354.

- Thuan Nguyen, V.; Sentouh, C.; Pudlo, P.; Popieul, J.-C. Joystick Haptic Force Feedback for Powered Wheelchair—A Model-Based Shared Control Approach. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11 October 2020; pp. 4453–4459.

- Viswanathan, P.; Zambalde, E.P.; Foley, G.; Graham, J.L.; Wang, R.H.; Adhikari, B.; Mackworth, A.K.; Mihailidis, A.; Miller, W.C.; Mitchell, I.M. Intelligent Wheelchair Control Strategies for Older Adults with Cognitive Impairment: User Attitudes, Needs, and Preferences. Auton. Robot. 2017, 41, 539–554.

- Wang, Y.; Hespanhol, L.; Tomitsch, M. How Can Autonomous Vehicles Convey Emotions to Pedestrians? A Review of Emotionally Expressive Non-Humanoid Robots. Multimodal. Technol. Interact. 2021, 5, 84.

- Carlson, T.; Demiris, Y. Human-Wheelchair Collaboration through Prediction of Intention and Adaptive Assistance. In Proceedings of the 2008 IEEE International Conference on Robotics and Automation, Pasadena, CA, USA, 19–23 May 2008; pp. 3926–3931.

- Wang, W.; Na, X.; Cao, D.; Gong, J.; Xi, J.; Xing, Y.; Wang, F.-Y. Decision-Making in Driver-Automation Shared Control: A Review and Perspectives. IEEE/CAA J. Autom. Sin. 2020, 7, 1289–1307.

- Xi, L.; Shino, M. Shared Control of an Electric Wheelchair Considering Physical Functions and Driving Motivation. Int. J. Environ. Res. Public Health 2020, 17, 5502.

- Escobedo, A.; Spalanzani, A.; Laugier, C. Multimodal Control of a Robotic Wheelchair: Using Contextual Information for Usability Improvement. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 4262–4267.

- Li, Q.; Chen, W.; Wang, J. Dynamic Shared Control for Human-Wheelchair Cooperation. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 4278–4283.

- Zhang, B.; Barbareschi, G.; Ramirez Herrera, R.; Carlson, T.; Holloway, C. Understanding Interactions for Smart Wheelchair Navigation in Crowds. In Proceedings of the CHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 29 April 2022; pp. 1–16.

- Anwer, S.; Waris, A.; Sultan, H.; Butt, S.I.; Zafar, M.H.; Sarwar, M.; Niazi, I.K.; Shafique, M.; Pujari, A.N. Eye and Voice-Controlled Human Machine Interface System for Wheelchairs Using Image Gradient Approach. Sensors 2020, 20, 5510.

- Barbareschi, G.; Daymond, S.; Honeywill, J.; Singh, A.; Noble, D.N.; Mbugua, N.; Harris, I.; Austin, V.; Holloway, C. Value beyond Function: Analyzing the Perception of Wheelchair Innovations in Kenya. In Proceedings of the 22nd International ACM SIGACCESS Conference on Computers and Accessibility, Virtual Event, 26 October 2020; pp. 1–14.

- Kaye, S.-A.; Li, X.; Oviedo-Trespalacios, O.; Pooyan Afghari, A. Getting in the Path of the Robot: Pedestrians Acceptance of Crossing Roads near Fully Automated Vehicles. Travel Behav. Soc. 2022, 26, 1–8.

- Liu, H.; Hirayama, T.; Morales Saiki, L.Y.; Murase, H. Implicit Interaction with an Autonomous Personal Mobility Vehicle: Relations of Pedestrians’ Gaze Behavior with Situation Awareness and Perceived Risks. Int. J. Hum. Comput. Interact. 2023, 39, 2016–2032.

- Kong, J.; Li, P. Path Planning of a Multifunctional Elderly Intelligent Wheelchair Based on the Sensor and Fuzzy Bayesian Network Algorithm. J. Sens. 2022, 2022, e8485644.

- Epke, M.R.; Kooijman, L.; de Winter, J.C.F. I See Your Gesture: A VR-Based Study of Bidirectional Communication between Pedestrians and Automated Vehicles. J. Adv. Transp. 2021, 2021, e5573560.

- Watanabe, A.; Ikeda, T.; Morales, Y.; Shinozawa, K.; Miyashita, T.; Hagita, N. Communicating Robotic Navigational Intentions. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 31 October 2015.

- Shrestha, M.C.; Onishi, T.; Kobayashi, A.; Kamezaki, M.; Sugano, S. Communicating Directional Intent in Robot Navigation Using Projection Indicators. In Proceedings of the 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing, China, 27–31 August 2018; pp. 746–751.

- Othman, K. Public Acceptance and Perception of Autonomous Vehicles: A Comprehensive Review. AI Ethics 2021, 1, 355–387.

- Barbareschi, G.; Carew, M.T.; Johnson, E.A.; Kopi, N.; Holloway, C. “When They See a Wheelchair, They’ve Not Even Seen Me”—Factors Shaping the Experience of Disability Stigma and Discrimination in Kenya. Int. J. Environ. Res. Public Health 2021, 18, 4272.

- Asha, A.Z.; Smith, C.; Freeman, G.; Crump, S.; Somanath, S.; Oehlberg, L.; Sharlin, E. Co-Designing Interactions between Pedestrians in Wheelchairs and Autonomous Vehicles. In Proceedings of the 2021 ACM Designing Interactive Systems Conference, Virtual Event, 28 June–2 July 2021; pp. 339–351.
