| Version | Summary | Created by | Modification | Content Size | Created at | Operation |
|---|---|---|---|---|---|---|
| 1 | | Bobak Mosadegh | -- | 5156 | 2024-01-09 19:10:37 | |
| 2 | | Mona Zou | Meta information modification | 5156 | 2024-01-10 09:25:11 | |

Personalized medicine transforms healthcare by adapting interventions to each individual's unique genetic, molecular, and clinical profile. To maximize diagnostic and therapeutic efficacy, it requires advanced imaging devices and sensors that can accurately assess and monitor a patient's condition and response to therapeutics. In biomedical optics, short-wave infrared (SWIR) techniques offer an array of capabilities that hold promise for significantly enhancing diagnostics, imaging, and therapeutic interventions. Because SWIR light penetrates biological tissue with reduced attenuation, these techniques provide in vivo information that was previously inaccessible, enabling researchers and clinicians to probe deeper into anatomical structures, physiological processes, and molecular interactions. Combining SWIR techniques with machine learning (ML), a powerful tool for analyzing information, has the potential to deliver unprecedented accuracy in disease detection, precision in treatment guidance, and correlation of complex biological features, opening the way for data-driven personalized medicine. Despite numerous biomedical demonstrations of cutting-edge SWIR techniques, the clinical potential of this approach remains significantly underexplored.

1. Common SWIR Imaging Technologies

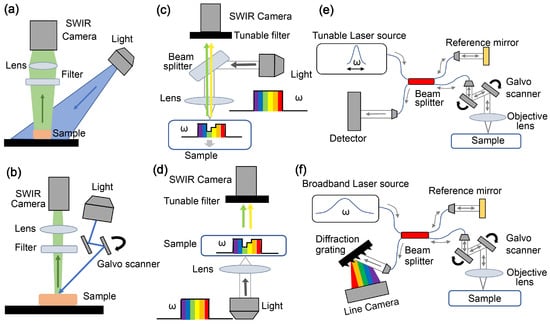

1.1. Fluorescent Imaging

1.2. Multispectral/Hyperspectral Imaging

1.3. Optical Coherence Tomography (OCT)

2. Biomedical Applications of ML-Assisted SWIR Techniques

2.1. Assistance in Diagnosis

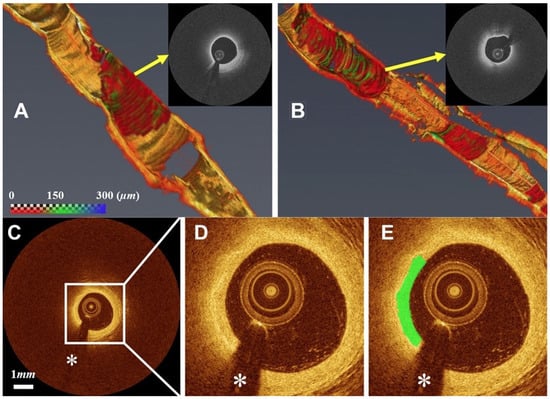

2.1.1. Cardiovascular Diseases (CVDs)

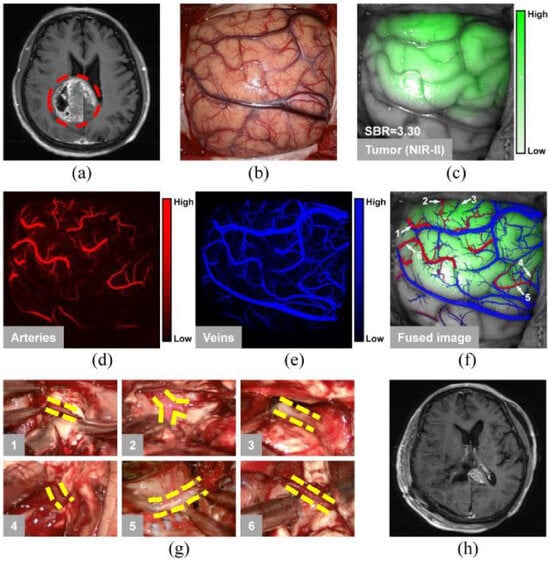

2.1.2. Cancer Diagnosis and Surgical Interventions

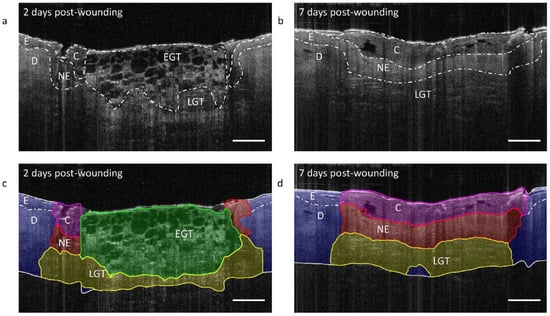

2.2. Quantitative Imaging and Prognosis

2.3. Overcoming Technological Limitations

References

- Stuker, F.; Ripoll, J.; Rudin, M. Fluorescence molecular tomography: Principles and potential for pharmaceutical research. Pharmaceutics 2011, 3, 229–274.

- Hernot, S.; van Manen, L.; Debie, P.; Mieog, J.S.D.; Vahrmeijer, A.L. Latest developments in molecular tracers for fluorescence image-guided cancer surgery. Lancet Oncol. 2019, 20, e354–e367.

- Qi, J.; Sun, C.; Zebibula, A.; Zhang, H.; Kwok, R.T.; Zhao, X.; Xi, W.; Lam, J.W.; Qian, J.; Tang, B.Z. Real-time and high-resolution bioimaging with bright aggregation-induced emission dots in short-wave infrared region. Adv. Mater. 2018, 30, 1706856.

- Zhang, Q.; Grabowska, A.M.; Clarke, P.A.; Morgan, S.P. Numerical Simulation of a Scanning Illumination System for Deep Tissue Fluorescence Imaging. J. Imaging 2019, 5, 83.

- Zhang, H.; Salo, D.; Kim, D.M.; Komarov, S.; Tai, Y.C.; Berezin, M.Y. Penetration depth of photons in biological tissues from hyperspectral imaging in shortwave infrared in transmission and reflection geometries. J. Biomed. Opt. 2016, 21, 126006.

- Randeberg, L.L. Hyperspectral characterization of tissue in the SWIR spectral range: A road to new insight? In Optical Biopsy XVII: Toward Real-Time Spectroscopic Imaging and Diagnosis; SPIE: Bellingham, WA, USA, 2019; Volume 10873, pp. 125–140.

- Wilson, R.H.; Nadeau, K.P.; Jaworski, F.B.; Tromberg, B.J.; Durkin, A.J. Review of short-wave infrared spectroscopy and imaging methods for biological tissue characterization. J. Biomed. Opt. 2015, 20, 030901.

- Nachabé, R.; Evers, D.J.; Hendriks, B.H.; Lucassen, G.W.; van der Voort, M.; Rutgers, E.J.; Peeters, M.J.V.; Van der Hage, J.A.; Oldenburg, H.S.; Wesseling, J.; et al. Diagnosis of breast cancer using diffuse optical spectroscopy from 500 to 1600 nm: Comparison of classification methods. J. Biomed. Opt. 2011, 16, 087010.

- Drexler, W.; Fujimoto, J.G. Optical Coherence Tomography: Technology and Applications; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2008.

- Salimi, M.H.; Villiger, M.; Tabatabaei, N. Three-dimensional opto-thermo-mechanical model for predicting photo-thermal optical coherence tomography responses in multilayer geometries. Biomed. Opt. Express 2022, 13, 3416–3433.

- Singh, M.; Zvietcovich, F.; Larin, K.V. Introduction to optical coherence elastography: Tutorial. J. Opt. Soc. Am. A 2022, 39, 418–430.

- Wang, S.; Cao, G.; Wang, Y.; Liao, S.; Wang, Q.; Shi, J.; Li, C.; Shen, D. Review and prospect: Artificial intelligence in advanced medical imaging. Front. Radiol. 2021, 1, 781868.

- Johnson, K.W.; Torres Soto, J.; Glicksberg, B.S.; Shameer, K.; Miotto, R.; Ali, M.; Ashley, E.; Dudley, J.T. Artificial intelligence in cardiology. J. Am. Coll. Cardiol. 2018, 71, 2668–2679.

- Xu, M.; Chen, Z.; Zheng, J.; Zhao, Q.; Yuan, Z. Artificial Intelligence-Aided Optical Imaging for Cancer Theranostics. Semin. Cancer Biol. 2023, 94, 62–80.

- World Health Organization. Cardiovascular Diseases. 2023. Available online: http://surl.li/kjhtr (accessed on 23 October 2023).

- Bui, Q.T.; Prempeh, M.; Wilensky, R.L. Atherosclerotic plaque development. Int. J. Biochem. Cell Biol. 2009, 41, 2109–2113.

- Virmani, R.; Burke, A.P.; Farb, A.; Kolodgie, F.D. Pathology of the vulnerable plaque. J. Am. Coll. Cardiol. 2006, 47, C13–C18.

- Tearney, G.J.; Regar, E.; Akasaka, T.; Adriaenssens, T.; Barlis, P.; Bezerra, H.G.; Bouma, B.; Bruining, N.; Cho, J.M.; Chowdhary, S.; et al. Consensus standards for acquisition, measurement, and reporting of intravascular optical coherence tomography studies: A report from the International Working Group for Intravascular Optical Coherence Tomography Standardization and Validation. J. Am. Coll. Cardiol. 2012, 59, 1058–1072.

- Abdolmanafi, A.; Duong, L.; Dahdah, N.; Adib, I.R.; Cheriet, F. Characterization of coronary artery pathological formations from OCT imaging using deep learning. Biomed. Opt. Express 2018, 9, 4936–4960.

- Abdolmanafi, A.; Duong, L.; Dahdah, N.; Cheriet, F. Deep feature learning for automatic tissue classification of coronary artery using optical coherence tomography. Biomed. Opt. Express 2017, 8, 1203–1220.

- Kolluru, C. Deep Neural Networks for A-Line Based Plaque Classification in Intravascular Optical Coherence Tomography Images. Ph.D. Thesis, Case Western Reserve University, Cleveland, OH, USA, 2018.

- Lee, J.; Pereira, G.T.; Gharaibeh, Y.; Kolluru, C.; Zimin, V.N.; Dallan, L.A.; Kim, J.N.; Hoori, A.; Al-Kindi, S.G.; Guagliumi, G.; et al. Automated analysis of fibrous cap in intravascular optical coherence tomography images of coronary arteries. Sci. Rep. 2022, 12, 21454.

- Lee, J.; Prabhu, D.; Kolluru, C.; Gharaibeh, Y.; Zimin, V.N.; Bezerra, H.G.; Wilson, D.L. Automated plaque characterization using deep learning on coronary intravascular optical coherence tomographic images. Biomed. Opt. Express 2019, 10, 6497–6515.

- Shi, P.; Xin, J.; Wu, J.; Deng, Y.; Cai, Z.; Du, S.; Zheng, N. Detection of thin-cap fibroatheroma in IVOCT images based on weakly supervised learning and domain knowledge. J. Biophotonics 2023, 16, e202200343.

- Johnson, K.W.; Glicksberg, B.S.; Shameer, K.; Vengrenyuk, Y.; Krittanawong, C.; Russak, A.J.; Sharma, S.K.; Narula, J.N.; Dudley, J.T.; Kini, A.S. A transcriptomic model to predict increase in fibrous cap thickness in response to high-dose statin treatment: Validation by serial intracoronary OCT imaging. EBioMedicine 2019, 44, 41–49.

- Kolluru, C.; Prabhu, D.; Gharaibeh, Y.; Wu, H.; Wilson, D.L. Voxel-based plaque classification in coronary intravascular optical coherence tomography images using decision trees. In Medical Imaging 2018: Computer-Aided Diagnosis; SPIE: Bellingham, WA, USA, 2018; Volume 10575, pp. 657–662.

- Yang, G.; Mehanna, E.; Li, C.; Zhu, H.; He, C.; Lu, F.; Zhao, K.; Gong, Y.; Wang, Z. Stent detection with very thick tissue coverage in intravascular OCT. Biomed. Opt. Express 2021, 12, 7500–7516.

- Wang, Z.; Jenkins, M.W.; Linderman, G.C.; Bezerra, H.G.; Fujino, Y.; Costa, M.A.; Wilson, D.L.; Rollins, A.M. 3-D stent detection in intravascular OCT using a Bayesian network and graph search. IEEE Trans. Med. Imaging 2015, 34, 1549–1561.

- Lu, H.; Gargesha, M.; Wang, Z.; Chamie, D.; Attizzani, G.F.; Kanaya, T.; Ray, S.; Costa, M.A.; Rollins, A.M.; Bezerra, H.G.; et al. Automatic stent detection in intravascular OCT images using bagged decision trees. Biomed. Opt. Express 2012, 3, 2809–2824.

- Lu, H.; Lee, J.; Ray, S.; Tanaka, K.; Bezerra, H.G.; Rollins, A.M.; Wilson, D.L. Automated stent coverage analysis in intravascular OCT (IVOCT) image volumes using a support vector machine and mesh growing. Biomed. Opt. Express 2019, 10, 2809–2828.

- Lee, J.; Kim, J.N.; Gharaibeh, Y.; Zimin, V.N.; Dallan, L.A.; Pereira, G.T.; Vergara-Martel, A.; Kolluru, C.; Hoori, A.; Bezerra, H.G.; et al. OCTOPUS–Optical coherence tomography plaque and stent analysis software. Heliyon 2023, 9, e13396.

- Smith, A.G.; Perez, R.; Thomas, A.; Stewart, S.; Samiei, A.; Bangalore, A.; Gomer, H.; Darr, M.B.; Schweitzer, R.C.; Vasudevan, S. Objective determination of peripheral edema in heart failure patients using short-wave infrared molecular chemical imaging. J. Biomed. Opt. 2021, 26, 105002.

- World Health Organization. Cancer. 2023. Available online: http://surl.li/cdgtc (accessed on 23 October 2023).

- Waterhouse, D.J.; Privitera, L.; Anderson, J.; Stoyanov, D.; Giuliani, S. Enhancing intraoperative tumor delineation with multispectral short-wave infrared fluorescence imaging and machine learning. J. Biomed. Opt. 2023, 28, 094804.

- Shen, B.; Zhang, Z.; Shi, X.; Cao, C.; Zhang, Z.; Hu, Z.; Ji, N.; Tian, J. Real-time intraoperative glioma diagnosis using fluorescence imaging and deep convolutional neural networks. Eur. J. Nucl. Med. Mol. Imaging 2021, 48, 3482–3492.

- Haifler, M.; Pence, I.; Sun, Y.; Kutikov, A.; Uzzo, R.G.; Mahadevan-Jansen, A.; Patil, C.A. Discrimination of malignant and normal kidney tissue with short wave infrared dispersive Raman spectroscopy. J. Biophotonics 2018, 11, e201700188.

- Cao, C.; Jin, Z.; Shi, X.; Zhang, Z.; Xiao, A.; Yang, J.; Ji, N.; Tian, J.; Hu, Z. First clinical investigation of near-infrared window IIa/IIb fluorescence imaging for precise surgical resection of gliomas. IEEE Trans. Biomed. Eng. 2022, 69, 2404–2413.

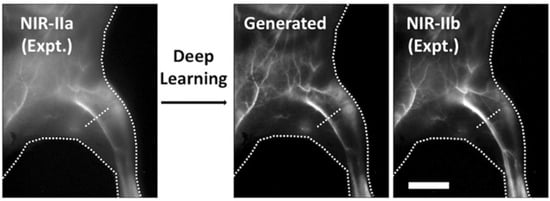

- Baulin, V.A.; Usson, Y.; Le Guével, X. Deep learning: Step forward to high-resolution in vivo shortwave infrared imaging. J. Biophotonics 2021, 14, e202100102.

- de Lucena, D.V.; da Silva Soares, A.; Coelho, C.J.; Wastowski, I.J.; Filho, A.R.G. Detection of tumoral epithelial lesions using hyperspectral imaging and deep learning. In Proceedings of the Computational Science—ICCS 2020: 20th International Conference, Amsterdam, The Netherlands, 3–5 June 2020; Proceedings, Part III 20. Springer: Cham, Switzerland, 2020; pp. 599–612.

- Butola, A.; Prasad, D.K.; Ahmad, A.; Dubey, V.; Qaiser, D.; Srivastava, A.; Senthilkumaran, P.; Ahluwalia, B.S.; Mehta, D.S. Deep learning architecture “LightOCT” for diagnostic decision support using optical coherence tomography images of biological samples. Biomed. Opt. Express 2020, 11, 5017–5031.

- Yao, X.; Gan, Y.; Chang, E.; Hibshoosh, H.; Feldman, S.; Hendon, C. Visualization and tissue classification of human breast cancer images using ultrahigh-resolution OCT. Lasers Surg. Med. 2017, 49, 258–269.

- Butola, A.; Ahmad, A.; Dubey, V.; Srivastava, V.; Qaiser, D.; Srivastava, A.; Senthilkumaran, P.; Mehta, D.S. Volumetric analysis of breast cancer tissues using machine learning and swept-source optical coherence tomography. Appl. Opt. 2019, 58, A135–A141.

- You, C.; Yi, J.Y.; Hsu, T.W.; Huang, S.L. Integration of cellular-resolution optical coherence tomography and Raman spectroscopy for discrimination of skin cancer cells with machine learning. J. Biomed. Opt. 2023, 28, 096005.

- Zhu, D.; Wang, J.; Marjanovic, M.; Chaney, E.J.; Cradock, K.A.; Higham, A.M.; Liu, Z.G.; Gao, Z.; Boppart, S.A. Differentiation of breast tissue types for surgical margin assessment using machine learning and polarization-sensitive optical coherence tomography. Biomed. Opt. Express 2021, 12, 3021–3036.

- Li, Z.; Huang, S.; He, Y.; van Wijnbergen, J.W.; Zhang, Y.; Cottrell, R.D.; Smith, S.G.; Hammond, P.T.; Chen, D.Z.; Padera, T.P.; et al. A new label-free optical imaging method for the lymphatic system enhanced by deep learning. bioRxiv 2023.

- Spink, S.S.; Pilvar, A.; Wei, L.L.; Frias, J.; Anders, K.; Franco, S.T.; Rose, O.C.; Freeman, M.; Bag, G.; Huang, H.; et al. Shortwave infrared diffuse optical wearable probe for quantification of water and lipid content in emulsion phantoms using deep learning. J. Biomed. Opt. 2023, 28, 094808.

- Zhao, Y.; Pilvar, A.; Tank, A.; Peterson, H.; Jiang, J.; Aster, J.C.; Dumas, J.P.; Pierce, M.C.; Roblyer, D. Shortwave-infrared meso-patterned imaging enables label-free mapping of tissue water and lipid content. Nat. Commun. 2020, 11, 5355.

- Vyas, S.; Banerjee, A.; Burlina, P. Estimating physiological skin parameters from hyperspectral signatures. J. Biomed. Opt. 2013, 18, 057008.

- Finn, A.V.; Nakano, M.; Narula, J.; Kolodgie, F.D.; Virmani, R. Concept of vulnerable/unstable plaque. Arterioscler. Thromb. Vasc. Biol. 2010, 30, 1282–1292.

- Fleming, C.P.; Eckert, J.; Halpern, E.F.; Gardecki, J.A.; Tearney, G.J. Depth resolved detection of lipid using spectroscopic optical coherence tomography. Biomed. Opt. Express 2013, 4, 1269–1284.

- Wang, Y.; Freeman, A.; Ajjan, R.; Del Galdo, F.; Tiganescu, A. Automated quantification of 3D wound morphology by machine learning and optical coherence tomography in type 2 diabetes. Ski. Health Dis. 2023, 3, e203.

- Zheng, G.; Jiang, Y.; Shi, C.; Miao, H.; Yu, X.; Wang, Y.; Chen, S.; Lin, Z.; Wang, W.; Lu, F.; et al. Deep learning algorithms to segment and quantify the choroidal thickness and vasculature in swept-source optical coherence tomography images. J. Innov. Opt. Health Sci. 2021, 14, 2140002.

- Takahashi, H.; Mao, Z.; Du, R.; Ohno-Matsui, K. Machine learning-based 3D modeling and volumetry of human posterior vitreous cavity of optical coherence tomographic images. Sci. Rep. 2022, 12, 13836.

- Teja, R.V.; Manne, S.R.; Goud, A.; Rasheed, M.A.; Dansingani, K.K.; Chhablani, J.; Vupparaboina, K.K.; Jana, S. Classification and quantification of retinal cysts in OCT B-scans: Efficacy of machine learning methods. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 48–51.

- Fujimoto, S.; Miki, A.; Maruyama, K.; Mei, S.; Mao, Z.; Wang, Z.; Chan, K.; Nishida, K. Three-Dimensional Volume Calculation of Intrachoroidal Cavitation Using Deep-Learning–Based Noise Reduction of Optical Coherence Tomography. Transl. Vis. Sci. Technol. 2022, 11, 1.

- Kulmaganbetov, M.; Bevan, R.J.; Anantrasirichai, N.; Achim, A.; Erchova, I.; White, N.; Albon, J.; Morgan, J.E. Textural feature analysis of optical coherence tomography phantoms. Electronics 2022, 11, 669.

- Seesan, T.; Abd El-Sadek, I.; Mukherjee, P.; Zhu, L.; Oikawa, K.; Miyazawa, A.; Shen, L.T.W.; Matsusaka, S.; Buranasiri, P.; Makita, S.; et al. Deep convolutional neural network-based scatterer density and resolution estimators in optical coherence tomography. Biomed. Opt. Express 2022, 13, 168–183.

- Salimi, M.; Villiger, M.; Tabatabaei, N. Effects of lipid composition on photothermal optical coherence tomography signals. J. Biomed. Opt. 2020, 25, 120501.

- Salimi, M.; Villiger, M.; Tabatabaei, N. Molecular-Specific Imaging of Tissue with Photo-Thermal Optical Coherence Tomography. Int. J. Thermophys. 2023, 44, 36.

- Neidhardt, M.; Bengs, M.; Latus, S.; Schlüter, M.; Saathoff, T.; Schlaefer, A. 4D deep learning for real-time volumetric optical coherence elastography. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 23–27.

- Carr, J.A.; Franke, D.; Caram, J.R.; Perkinson, C.F.; Saif, M.; Askoxylakis, V.; Datta, M.; Fukumura, D.; Jain, R.K.; Bawendi, M.G.; et al. Shortwave infrared fluorescence imaging with the clinically approved near-infrared dye indocyanine green. Proc. Natl. Acad. Sci. USA 2018, 115, 4465–4470.

- Ma, Z.; Wang, F.; Wang, W.; Zhong, Y.; Dai, H. Deep learning for in vivo near-infrared imaging. Proc. Natl. Acad. Sci. USA 2021, 118, e2021446118.

- Cao, C.; Xiao, A.; Cai, M.; Shen, B.; Guo, L.; Shi, X.; Tian, J.; Hu, Z. Excitation-based fully connected network for precise NIR-II fluorescence molecular tomography. Biomed. Opt. Express 2022, 13, 6284–6299.

- Chen, R.; Peng, S.; Zhu, L.; Meng, J.; Fan, X.; Feng, Z.; Zhang, H.; Qian, J. Enhancing Total Optical Throughput of Microscopy with Deep Learning for Intravital Observation. Small Methods 2023, 7, 2300172.

- Lee, W.; Nam, H.S.; Seok, J.Y.; Oh, W.Y.; Kim, J.W.; Yoo, H. Deep learning-based image enhancement in optical coherence tomography by exploiting interference fringe. Commun. Biol. 2023, 6, 464.

- Wu, R.; Huang, S.; Zhong, J.; Li, M.; Zheng, F.; Bo, E.; Liu, L.; Liu, Y.; Ge, X.; Ni, G. MAS-Net OCT: A deep-learning-based speckle-free multiple aperture synthetic optical coherence tomography. Biomed. Opt. Express 2023, 14, 2591–2607.

- Dong, Z.; Liu, G.; Ni, G.; Jerwick, J.; Duan, L.; Zhou, C. Optical coherence tomography image denoising using a generative adversarial network with speckle modulation. J. Biophotonics 2020, 13, e201960135.

- Shi, F.; Cai, N.; Gu, Y.; Hu, D.; Ma, Y.; Chen, Y.; Chen, X. DeSpecNet: A CNN-based method for speckle reduction in retinal optical coherence tomography images. Phys. Med. Biol. 2019, 64, 175010.

- Ma, Y.; Chen, X.; Zhu, W.; Cheng, X.; Xiang, D.; Shi, F. Speckle noise reduction in optical coherence tomography images based on edge-sensitive cGAN. Biomed. Opt. Express 2018, 9, 5129–5146.

- Yuan, Z.; Yang, D.; Yang, Z.; Zhao, J.; Liang, Y. Digital refocusing based on deep learning in optical coherence tomography. Biomed. Opt. Express 2022, 13, 3005–3020.

- Zhang, Z.; Li, H.; Lv, G.; Zhou, H.; Feng, H.; Xu, Z.; Li, Q.; Jiang, T.; Chen, Y. Deep learning-based image reconstruction for photonic integrated interferometric imaging. Opt. Express 2022, 30, 41359–41373.

- Zhang, Y.; Liu, T.; Singh, M.; Çetintaş, E.; Luo, Y.; Rivenson, Y.; Larin, K.V.; Ozcan, A. Neural network-based image reconstruction in swept-source optical coherence tomography using undersampled spectral data. Light. Sci. Appl. 2021, 10, 155.

- Ahmed, S.; Le, D.; Son, T.; Adejumo, T.; Ma, G.; Yao, X. ADC-net: An open-source deep learning network for automated dispersion compensation in optical coherence tomography. Front. Med. 2022, 9, 864879.

- Gao, M.; Guo, Y.; Hormel, T.T.; Sun, J.; Hwang, T.S.; Jia, Y. Reconstruction of high-resolution 6 × 6-mm OCT angiograms using deep learning. Biomed. Opt. Express 2020, 11, 3585–3600.

- Salimi, M. Advanced Photothermal Optical Coherence Tomography (PT-OCT) for Quantification of Tissue Composition. Ph.D. Thesis, York University, Toronto, ON, Canada, 2022.

- Sun, Y.; Wang, J.; Shi, J.; Boppart, S.A. Synthetic polarization-sensitive optical coherence tomography by deep learning. NPJ Digit. Med. 2021, 4, 105.

- Kashani, R.G.; Młyńczak, M.C.; Zarabanda, D.; Solis-Pazmino, P.; Huland, D.M.; Ahmad, I.N.; Singh, S.P.; Valdez, T.A. Shortwave infrared otoscopy for diagnosis of middle ear effusions: A machine-learning-based approach. Sci. Rep. 2021, 11, 12509.