
| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Xinzhi Wang | -- | 1680 | 2023-12-15 03:46:07 |
| 2 | Lindsay Dong | -2 word(s) | 1678 | 2023-12-18 07:41:10 |


Wang, X.; Li, M.; Liu, Q.; Chang, Y.; Zhang, H. Fire Segmentation. Encyclopedia. Available online: https://encyclopedia.pub/entry/52783 (accessed on 08 February 2026).


Fire segmentation detects fire pixels in fire images by separating the foreground of smoke or flame from the background. Rather than enclosing fire objects in bounding boxes, it delineates their precise boundaries. Fire segmentation methods fall into two categories: feature analysis-based methods and deep learning-based methods.

fire image segmentation

multi-scale feature fusion

flame situation detection

1. Introduction

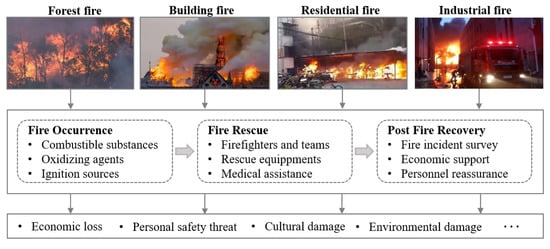

Fire is a pervasive hazard that poses significant risks to public safety and social progress. For example, fires in densely populated high-rise buildings can cause incalculable harm to residents' lives and property. Forest fires cause substantial economic losses, air pollution, and environmental degradation, and they endanger both humans and animals. As shown in Figure 1, typical fire incidents include the Australian forest fires, the Notre Dame Cathedral fire, the parking shed fire in Shanghai, China, and the industrial plant fire in Anyang, China. Between July 2019 and February 2020, Australia experienced devastating wildfires lasting several months, which claimed 33 lives and killed or displaced three billion animals. On 22 December 2022, a fire suddenly engulfed a parking shed in a residential area in Shanghai, destroying numerous vehicles. In residential areas, flames can appear within seconds of a vehicle short circuit or malfunction. Within approximately three minutes, the flame temperature can reach 1200 ℃. High-temperature toxic gases then rapidly spread through corridors and rooms and can suffocate occupants. A vehicle fire can take a person's life in as little as 100 s. Efficient fire prevention and control measures can therefore substantially reduce casualties and property losses.

Figure 1. Process of dealing with fire incidents.

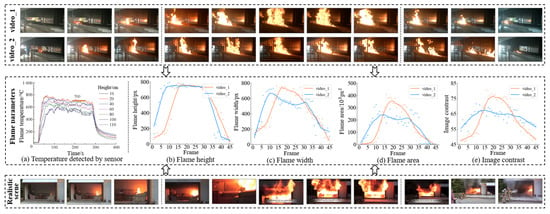

Fire situation information, such as the fire location, fire area, and fire intensity, plays a crucial role in guiding fire rescue operations. As the sensor-measured flame temperature data in Figure 2a show [1], the evolution of a fire typically comprises three distinct stages: the fire initiation stage, the violent burning stage, and the decay extinguishing stage. During the initiation stage, the flame burns locally and erratically, and the room temperature remains relatively low; this is the best opportunity to put out the fire. In the violent burning stage, the flame spreads throughout the entire room and burns steadily, causing the room temperature to rise rapidly to approximately 1000 ℃, and extinguishing the fire becomes difficult. In the decay stage, the combustible materials are consumed and fire suppression efforts take effect. Because the fire environment changes rapidly and dynamically across these stages, traditional sensor-based detectors are inadequate, primarily due to their limited detection distance and their susceptibility to false alarms triggered by factors such as light and dust. Moreover, these detectors cannot provide a visual representation of the fire scene, which hinders comprehensive situational awareness.

Figure 2. Flame parameter trends during combustion. Flame parameters were computed for two fire videos captured in the experimental scenario. As with the sensor-based approach, the flame parameters derived from visual data exhibit a three-stage combustion pattern. This suggests that the vision-based fire detection method can be extended to respond rapidly to real-world fire incidents.
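The three-stage pattern can be made concrete with a simple heuristic that works on any per-frame parameter series (temperature, flame area, and so on). The sketch below is a hypothetical illustration, not the procedure behind Figure 2: it smooths the series with a short moving average and treats the violent burning stage as the span between the first and last frames whose smoothed value reaches a fraction `frac` of the peak. The names `split_stages` and `moving_average`, the 3-frame window, and `frac = 0.8` are all assumptions.

```python
from typing import List, Sequence


def moving_average(values: Sequence[float], window: int = 3) -> List[float]:
    """Centered moving average; the window is truncated at both ends of the series."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed


def split_stages(values: Sequence[float], frac: float = 0.8, window: int = 3) -> List[str]:
    """Label each frame as 'initiation', 'violent burning', or 'decay'.

    Hypothetical heuristic (assumption): the violent burning stage runs from the
    first to the last frame whose smoothed value reaches `frac` of the smoothed peak.
    """
    if not values:
        return []
    smoothed = moving_average(values, window)  # damp frame-to-frame flame flicker
    threshold = frac * max(smoothed)
    # The peak frame always qualifies when 0 < frac <= 1 and values are non-negative.
    above = [i for i, v in enumerate(smoothed) if v >= threshold]
    first, last = above[0], above[-1]
    labels = []
    for i in range(len(values)):
        if i < first:
            labels.append("initiation")  # fire still growing toward the plateau
        elif i <= last:
            labels.append("violent burning")  # near-peak, roughly stable burning
        else:
            labels.append("decay")  # fuel consumed or suppression taking effect
    return labels
```

For a synthetic area series that rises, plateaus, and falls, such as `[1, 2, 4, 10, 30, 60, 62, 61, 63, 60, 40, 20, 8, 3]`, the first five frames are labeled initiation, the next five violent burning, and the last four decay. Smoothing before thresholding keeps a single flickering frame from splitting the plateau.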

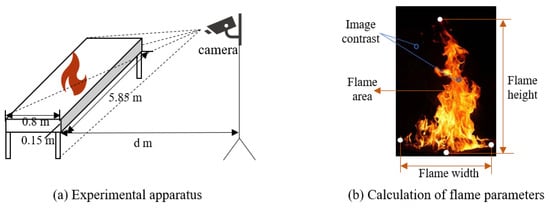

Fire detection technology based on visual images offers inherent advantages. On the one hand, the portability and interference resistance of cameras enhance the reliability of visual fire detection. On the other hand, fire situation parameters derived by visual methods, such as the flame height, width, and area, are important for fire detection and control [2]. Figure 2 illustrates how flame situation parameters are acquired through visual fire detection technology. In the upper part of Figure 2, video_1 and video_2 are two fire video sequences recorded in the experimental scene with the acquisition device shown in Figure 3a. From each video, 45 frames covering all three combustion stages were selected for flame parameter analysis. For each frame, the flame height, width, and area, as well as the image contrast, were computed following the procedure described in Figure 3b. The resulting flame parameter trends are shown in the middle of Figure 2. Initially, the fire region is small. As the flames spread, the flame parameters increase rapidly until they reach a stable state. After complete combustion, the flames gradually diminish and each parameter value decreases. Figure 2a shows the flame temperature data measured by Zhu et al. with sensors at different locations in their experimental scenario [1]; they observed that the fire process also exhibited three-stage characteristics. The five sub-figures show that the vision-based fire detection method captures flame motion patterns similar to those obtained with sensor-based methods. The visual fire detection method can be extended to realistic fire scenes, such as the home fire scene in the lower part of Figure 2.

With the rapid development of computer vision technology and intelligent monitoring systems, vision-based methods can offer faster response times and lower misjudgment rates than traditional sensors. This strength can significantly accelerate the intelligent development of urban fire safety.

Figure 3. Schematic diagram of experimental setup and flame parameters.
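A minimal sketch of per-frame parameter extraction, assuming a binary fire mask (1 = fire pixel) produced by any segmentation method and a grayscale frame of the same size. The exact procedure of Figure 3b is not reproduced here, so the definitions are assumptions: height and width are the vertical and horizontal extents of the fire pixels, area is the fire-pixel count, and contrast is RMS contrast (the standard deviation of grayscale intensities). `flame_parameters`, `rms_contrast`, and the `pixel_size` calibration factor are hypothetical names.

```python
from statistics import pstdev
from typing import Dict, Sequence

Mask = Sequence[Sequence[int]]  # 1 = fire pixel, 0 = background


def rms_contrast(gray: Sequence[Sequence[float]]) -> float:
    """RMS contrast: population standard deviation of grayscale intensities."""
    return pstdev([v for row in gray for v in row])


def flame_parameters(
    mask: Mask, gray: Sequence[Sequence[float]], pixel_size: float = 1.0
) -> Dict[str, float]:
    """Height, width, and area of the fire region in one frame, plus image contrast.

    `pixel_size` is a hypothetical calibration factor (length per pixel); with the
    default of 1.0, lengths are in pixels and the area is a pixel count.
    """
    fire_rows = [r for r, row in enumerate(mask) if any(row)]
    fire_cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    if not fire_rows:
        height = width = area = 0.0  # no flame detected in this frame
    else:
        # Extents are measured between the outermost fire pixels (inclusive).
        height = (fire_rows[-1] - fire_rows[0] + 1) * pixel_size
        width = (fire_cols[-1] - fire_cols[0] + 1) * pixel_size
        area = sum(map(sum, mask)) * pixel_size ** 2
    return {"height": height, "width": width, "area": area, "contrast": rms_contrast(gray)}
```

Applying `flame_parameters` to every selected frame of a video yields per-frame parameter series of the kind plotted in Figure 2.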

In recent years, researchers have extensively explored fire detection from the perspectives of image classification, object detection, and semantic segmentation. Image classification-based methods aim to determine whether smoke or flame is present in an image. Zhong et al. optimized a convolutional neural network (CNN) model and combined it with the RGB color space model to extract relevant fire features [3]. Dilshad et al. proposed E-FireNet, a real-time fire detection framework designed specifically for complex monitoring environments [4]. Object detection-based methods aim to annotate fire objects in images with rectangular bounding boxes. Avazov et al. employed an enhanced YOLOv4 network that detects fire areas while adapting to diverse weather conditions [5]. Fang et al. accelerated detection by extracting key frames from a video and then locating fires using superpixels [6]. Semantic segmentation-based methods detect the contours of fire objects by determining whether each pixel in a fire image is a fire pixel. This approach has the potential to extract fine-grained parameter information (such as flame height, width, and area) in complex fire scenarios. De Sousa et al. proposed a rule-based color model that employed the RGB and YCbCr color spaces to detect multiple fires simultaneously [7]. Wang et al. introduced an attention-guided optical satellite video smoke segmentation network that effectively suppresses the ground background and extracts multi-scale smoke features [8]. Ghali et al. used two vision Transformer variants (TransUNet and MedT) to explore the potential of visible-spectrum images for forest fire segmentation [9]. Many fire segmentation methods have achieved notable results. However, flames vary in shape and size and have blurred edges, which makes accurate segmentation challenging. As a result, little attention has been paid to further mining fire situation information through fire segmentation.
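To illustrate why pixel-level output is the most fine-grained of the three formulations, the sketch below derives a classification-style label and a detection-style bounding box from a segmentation mask, then measures how much of that box is actually fire. It assumes a binary mask stored as nested lists of 0/1 values; `image_label`, `bounding_box`, and `box_fill_ratio` are hypothetical helpers, not part of any cited method.

```python
from typing import Optional, Sequence, Tuple

Mask = Sequence[Sequence[int]]  # 1 = fire pixel, 0 = background
Box = Tuple[int, int, int, int]  # (top, left, bottom, right), inclusive


def image_label(mask: Mask) -> bool:
    """Classification-style output: does the image contain any fire pixel?"""
    return any(any(row) for row in mask)


def bounding_box(mask: Mask) -> Optional[Box]:
    """Detection-style output: the tightest axis-aligned box around all fire pixels."""
    coords = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return min(rows), min(cols), max(rows), max(cols)


def box_fill_ratio(mask: Mask) -> float:
    """Fraction of the box's pixels that are fire (1.0 means the box fits the shape exactly)."""
    box = bounding_box(mask)
    if box is None:
        return 0.0
    top, left, bottom, right = box
    box_area = (bottom - top + 1) * (right - left + 1)
    return sum(map(sum, mask)) / box_area
```

For an irregular, roughly triangular flame of 8 fire pixels whose tightest box spans 3 × 4 = 12 pixels, `box_fill_ratio` returns 2/3, so a third of the box is background. The mask itself carries the exact shape, from which both coarser outputs can be recovered, but not the other way round.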

2. Fire Segmentation

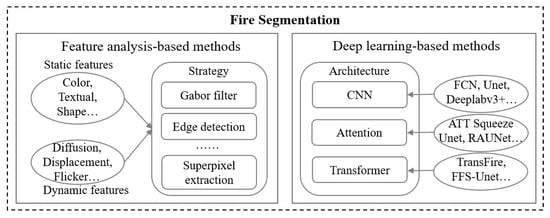

Fire segmentation detects fire pixels in fire images by separating the foreground of smoke or flame from the background. Rather than enclosing fire objects in bounding boxes, it delineates their precise boundaries. Fire segmentation methods fall into two categories, feature analysis-based methods and deep learning-based methods, as shown in Figure 4.

Figure 4. Overview of relevant research on fire segmentation.

Feature analysis-based methods: Fire objects exhibit rich visual characteristics, encompassing both static features, such as color, texture, and shape, and dynamic features, such as diffusion, displacement, and flicker [10]. Researchers have employed various methods to extract these features and construct comprehensive feature representations for effective recognition. Celik et al. used the YCbCr color space instead of RGB to construct a generic chrominance model for flame pixel classification [11]. Yang et al. fused the two-dimensional Otsu method with HSI color gamut growth to handle reflections and smoke areas, efficiently segmenting suspected fire regions [12]. Ajith et al. combined optical flow, scatter, and intensity features of infrared fire images to obtain discriminative features for segmentation [13]. Chen et al. presented a fire recognition method based on enhanced color segmentation and multi-feature description [14]; it uses the area coefficient of variation, centroid dispersion, and circularity as statistics to determine the optimal threshold for fine fire segmentation. Malbog et al. compared the Sobel and Canny edge detection techniques and found that Canny edge detection suppresses noisy edges better, achieving higher accuracy and facilitating fire growth detection [15].
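A simplified color-rule classifier in the spirit of the YCbCr chrominance model of Celik et al. [11] can be sketched as follows. The exact rules, the full-range ITU-R BT.601 conversion coefficients, and the threshold `tau = 40` are assumptions chosen for illustration, not a reproduction of the published model: a pixel is marked as flame when its luminance exceeds its blue-difference component, it is red-dominant (Cr > Cb), it lies above the image means of Y and Cr and below the mean of Cb, and the difference between Cr and Cb is large. `rgb_to_ycbcr` and `ycbcr_fire_mask` are hypothetical names.

```python
from statistics import fmean
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def rgb_to_ycbcr(r: float, g: float, b: float) -> Tuple[float, float, float]:
    """Full-range ITU-R BT.601 (JPEG-style) RGB -> YCbCr conversion."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def ycbcr_fire_mask(image: Sequence[Sequence[RGB]], tau: float = 40.0) -> List[List[int]]:
    """Return a 0/1 mask of candidate flame pixels using assumed YCbCr rules."""
    ycc = [[rgb_to_ycbcr(*px) for px in row] for row in image]
    flat = [p for row in ycc for p in row]
    # Image-wide channel means serve as adaptive reference levels.
    y_mean = fmean(p[0] for p in flat)
    cb_mean = fmean(p[1] for p in flat)
    cr_mean = fmean(p[2] for p in flat)
    mask = []
    for row in ycc:
        out = []
        for y, cb, cr in row:
            is_fire = (
                y > cb and cr > cb  # bright and red-dominant
                and y > y_mean and cb < cb_mean and cr > cr_mean  # stands out from the frame
                and abs(cb - cr) >= tau  # strong chrominance separation
            )
            out.append(int(is_fire))
        mask.append(out)
    return mask
```

Working in YCbCr separates luminance (Y) from chrominance (Cb, Cr), so the red-dominance tests depend less on overall brightness than comparable RGB thresholds; a bright white pixel, for instance, has Cb close to Cr and fails the last rule.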

Deep learning-based methods: Deep learning methods have gained prominence in computer vision across various application domains [16]. These methods use deep neural networks to learn feature representations automatically through end-to-end supervised training. Researchers have made substantial progress by improving classical CNNs for fire segmentation. Yuan et al. proposed a CNN-based smoke segmentation method that fuses information from two paths to generate a smoke mask [17]. Zhou et al. employed Mask R-CNN to identify and segment indoor combustible objects and predict the fire load [18]. Harkat et al. applied the DeepLabv3+ framework to segment flame areas in limited aerial images [19]. Perrolas et al. introduced a multi-resolution iterative tree search algorithm for flame and smoke region segmentation [20]. Wang et al. selected four classical semantic segmentation models (Unet, DeepLabv3+, FCN, and PSPNet) with two backbones (VGG16 and ResNet50) to analyze forest fires [21] and found that Unet with the ResNet50 backbone achieved the highest accuracy. Frizzi et al. introduced a novel CNN-based network for smoke and flame detection in forests [22]; it generates accurate flame and smoke masks from RGB images and reduces false alarms caused by clouds or haze. Attention mechanisms have also been employed to focus on salient fire features, as in ATT Squeeze Unet and Smoke-Unet [23][24]. Wang et al. introduced Smoke-Unet, an improved Unet model that combines an attention mechanism with residual blocks for smoke segmentation [24]; it uses highly sensitive spectral bands and multi-band remote sensing indices to detect forest fire smoke early. CNN-based models are effective for fire detection and segmentation, but because convolutions operate on local neighborhoods, they struggle to capture global image information. Transformer-based models, with their self-attention mechanism, excel at capturing global features. Ghali et al. employed two Transformer-based models (TransUNet and TransFire) and a CNN-based model (EfficientSeg) to identify precise fire regions in wildfires [25].
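The contrast between local convolution and global self-attention can be seen in a toy example. The sketch below treats a short sequence of 2-D vectors as stand-ins for flattened image-patch embeddings and compares a 3-tap convolution with single-head scaled dot-product self-attention. Identity query/key/value projections, the kernel weights, and the token values are assumptions for illustration; this is not the architecture of TransUNet, TransFire, or EfficientSeg.

```python
import math
from typing import List, Sequence

Vector = List[float]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: Sequence[Vector]) -> List[Vector]:
    """Single-head scaled dot-product self-attention with identity Q/K/V projections (assumed)."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        # Every token is scored against every other token: a global receptive field.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)])
    return out


def local_conv(tokens: Sequence[Vector], kernel: Sequence[float] = (0.25, 0.5, 0.25)) -> List[Vector]:
    """1-D convolution with zero padding: each output sees only its immediate neighbors."""
    d = len(tokens[0])
    half = len(kernel) // 2
    out = []
    for i in range(len(tokens)):
        acc = [0.0] * d
        for k, w in enumerate(kernel):
            j = i + k - half
            if 0 <= j < len(tokens):
                for c in range(d):
                    acc[c] += w * tokens[j][c]
        out.append(acc)
    return out
```

Changing only the last token of a six-token sequence leaves the convolution output at position 0 unchanged, because that output depends only on positions 0 and 1, whereas the attention output at position 0 changes, because every position contributes to it through the softmax weights.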

References

1. Zhu, P.; Luo, S.; Liu, Q.; Shao, Q.; Yang, R. Effectiveness of aviation kerosene pool fire suppression by water mist in a cargo compartment with low-pressure environment. J. Tsinghua Univ. (Sci. Technol.) 2022, 62, 21–32.

2. Chen, X.; Hopkins, B.; Wang, H.; O’Neill, L.; Afghah, F.; Razi, A.; Fulé, P.; Coen, J.; Rowell, E.; Watts, A. Wildland Fire Detection and Monitoring Using a Drone-Collected RGB/IR Image Dataset. IEEE Access 2022, 10, 121301–121317.

3. Zhong, Z.; Wang, M.; Shi, Y.; Gao, W. A convolutional neural network-based flame detection method in video sequence. Signal Image Video Process. 2018, 12, 1619–1627.

4. Dilshad, N.; Khan, T.; Song, J. Efficient Deep Learning Framework for Fire Detection in Complex Surveillance Environment. Comput. Syst. Sci. Eng. 2023, 46, 749–764.

5. Avazov, K.; Mukhiddinov, M.; Makhmudov, F.; Cho, Y.I. Fire detection method in smart city environments using a deep-learning-based approach. Electronics 2021, 11, 73.

6. Fang, Q.; Peng, Z.; Yan, P.; Huang, J. A fire detection and localisation method based on keyframes and superpixels for large-space buildings. Int. J. Intell. Inf. Database Syst. 2023, 16, 1–19.

7. De Sousa, J.V.R.; Gamboa, P.V. Aerial forest fire detection and monitoring using a small UAV. KnE Eng. 2020, 5, 242–256.

8. Wang, T.; Hong, J.; Han, Y.; Zhang, G.; Chen, S.; Dong, T.; Yang, Y.; Ruan, H. AOSVSSNet: Attention-guided optical satellite video smoke segmentation network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 8552–8566.

9. Ghali, R.; Akhloufi, M.A.; Jmal, M.; Souidene Mseddi, W.; Attia, R. Wildfire segmentation using deep vision transformers. Remote Sens. 2021, 13, 3527.

10. Chen, Y.; Xu, W.; Zuo, J.; Yang, K. The fire recognition algorithm using dynamic feature fusion and IV-SVM classifier. Clust. Comput. 2019, 22, 7665–7675.

11. Celik, T.; Demirel, H. Fire detection in video sequences using a generic color model. Fire Saf. J. 2009, 44, 147–158.

12. Yang, L.; Zhang, D.; Wang, Y.H. A new flame segmentation algorithm based color space model. In Proceedings of the 2017 29th Chinese Control And Decision Conference (CCDC), Chongqing, China, 28–30 May 2017; pp. 2708–2713.

13. Ajith, M.; Martínez-Ramón, M. Unsupervised segmentation of fire and smoke from infra-red videos. IEEE Access 2019, 7, 182381–182394.

14. Chen, X.; An, Q.; Yu, K.; Ban, Y. A novel fire identification algorithm based on improved color segmentation and enhanced feature data. IEEE Trans. Instrum. Meas. 2021, 70, 5009415.

15. Malbog, M.A.F.; Lacatan, L.L.; Dellosa, R.M.; Austria, Y.D.; Cunanan, C.F. Edge detection comparison of hybrid feature extraction for combustible fire segmentation: A Canny vs. Sobel performance analysis. In Proceedings of the 2020 11th IEEE Control and System Graduate Research Colloquium (ICSGRC), Shah Alam, Malaysia, 8 August 2020; pp. 318–322.

16. Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E. Deep learning for computer vision: A brief review. Comput. Intell. Neurosci. 2018, 2018, 7068349.

17. Yuan, F.; Zhang, L.; Xia, X.; Wan, B.; Huang, Q.; Li, X. Deep smoke segmentation. Neurocomputing 2019, 357, 248–260.

18. Zhou, Y.C.; Hu, Z.Z.; Yan, K.X.; Lin, J.R. Deep learning-based instance segmentation for indoor fire load recognition. IEEE Access 2021, 9, 148771–148782.

19. Harkat, H.; Nascimento, J.M.; Bernardino, A.; Thariq Ahmed, H.F. Assessing the impact of the loss function and encoder architecture for fire aerial images segmentation using deeplabv3+. Remote Sens. 2022, 14, 2023.

20. Perrolas, G.; Niknejad, M.; Ribeiro, R.; Bernardino, A. Scalable fire and smoke segmentation from aerial images using convolutional neural networks and quad-tree search. Sensors 2022, 22, 1701.

21. Wang, Z.; Peng, T.; Lu, Z. Comparative research on forest fire image segmentation algorithms based on fully convolutional neural networks. Forests 2022, 13, 1133.

22. Frizzi, S.; Bouchouicha, M.; Ginoux, J.M.; Moreau, E.; Sayadi, M. Convolutional neural network for smoke and fire semantic segmentation. IET Image Process. 2021, 15, 634–647.

23. Zhang, J.; Zhu, H.; Wang, P.; Ling, X. ATT squeeze U-Net: A lightweight network for forest fire detection and recognition. IEEE Access 2021, 9, 10858–10870.

24. Wang, Z.; Yang, P.; Liang, H.; Zheng, C.; Yin, J.; Tian, Y.; Cui, W. Semantic segmentation and analysis on sensitive parameters of forest fire smoke using smoke-unet and landsat-8 imagery. Remote Sens. 2022, 14, 45.

25. Ghali, R.; Akhloufi, M.A.; Mseddi, W.S. Deep learning and transformer approaches for UAV-based wildfire detection and segmentation. Sensors 2022, 22, 1977.


Subjects: Engineering, Electrical & Electronic


Update Date: 18 Dec 2023
