With the impacts of global climate change and habitat loss, wild animals are facing unprecedented threats to their survival. For instance, rising temperatures, more frequent extreme weather events, and rising sea levels all threaten wildlife. Global urbanization and agricultural expansion have led to large-scale habitat destruction and loss, depriving many wild animals of places to live and breed. Moreover, the decline of biodiversity has exacerbated human concerns about environmental sustainability. As a result, wildlife conservation has received extensive international attention. In this context, governments and international organizations have taken various initiatives to curb the decline of wildlife populations and to improve the efficiency of conservation through scientific and technological means. Drones are widely used for wildlife monitoring. Deep learning algorithms are key to the success of drone-based wildlife monitoring, although they face the problem of detecting small targets.

1. Introduction

In recent years, aerial imagery has been extensively utilized in wildlife conservation due to the fast-paced evolution of drones and other aerial platforms [1]. Moreover, Unmanned Aerial Vehicles (UAVs) offer several advantages in aerial imagery due to their high altitude and ability to carry high-resolution camera payloads. Aerial imagery can, therefore, provide humans with valuable information in complex and harsh natural environments in a relatively short period of time [2]. For instance, high-resolution aerial photographs make it possible to accurately identify wildlife categories and population numbers, facilitating wildlife population counts and assessments of their endangerment levels [3]. Similarly, aerial photographs with broader coverage can detect wildlife in remote or otherwise inaccessible areas [4]. The wide field of view is advantageous because a single aerial image can cover a large area of several square kilometers while simultaneously capturing individuals and groups of animals that are otherwise difficult to access [5]. Various methods have been explored for detecting wildlife in aerial images, including target detection algorithms, semantic segmentation algorithms, and deep learning methods [6][7][8][9][10]. For instance, Barbedo et al. [7] used convolutional neural networks to monitor herds of cattle with drones. Brown et al. [8] employed high-resolution UAV images to establish an accurate ground truth. They utilized a target detection-based method using YOLOv5 to achieve animal localization and counting on Australian farms. Similarly, Padubidri et al. [10] counted sea lions and African elephants in aerial images by utilizing a UNet model based on semantic segmentation. Furthermore, wildlife detection using deep learning techniques and aerial imagery can rapidly locate wildlife, aiding in the planning of effective protection and search activities [11]. Additionally, it can determine the living conditions of wildlife in dangerous and complex natural habitats such as swamps and forests [12]. In short, deep learning models such as YOLO have emerged as a significant approach to wildlife detection in UAV aerial imagery.

The success of deep learning in wildlife detection with drones relies on having a large amount of real-world data available for training algorithms [13]. For example, models are commonly pre-trained on large datasets such as ImageNet [14] and MSCOCO [15] and then adapted to the target task through transfer learning on real data. Unfortunately, the extremely high cost of acquiring high-quality aerial images makes it challenging to obtain real data even for transfer learning, not to mention constructing large-scale aerial wildlife datasets. That is to say, due to the lack of large-scale aerial wildlife image datasets, most current methods adapt object detection algorithms developed for natural scene images to aerial images, and these algorithms are not well suited to wildlife detection [16]. These challenges contribute to the current shortcomings of drone-based wildlife detection algorithms, including low accuracy, weak robustness, and unsatisfactory practical application outcomes [17][18].

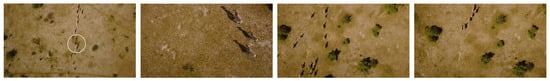

In addition, wildlife data captured by drones have unique characteristics. As can be seen from Figure 1, the individual animals in aerial images are small, and the proportion of the image they occupy varies greatly. This arises not only from differences in drone flight altitudes but also from inherent variations in object sizes within the same category. Therefore, it is necessary to overcome the small target problem when using drones to detect wildlife [19]. Researchers have invested substantial effort in enhancing the accuracy of algorithms for detecting these small targets, but few of them focus on wildlife aerial imagery applications [20]. For instance, Chen et al. [21] proposed an approach that combines contextual models and small region proposal generators to enhance the R-CNN algorithm, thereby improving the detection of small objects in images. Zhou et al. [22] introduced a scale-transferable detection network that maintains scale consistency between different detection scales through embedded super-resolution layers, enhancing the detection of multi-scale objects in images. However, although the above work has improved small target detection to a certain extent, challenges remain, including limited real-time performance, high computational demands, information redundancy, and complex network structures.

Figure 1. Example of aerial images of zebra migration at different flight heights.

On the one hand, to address real-time wildlife detection, researchers often employ one-stage YOLO methods [23]. For example, Zhong et al. [24] used the YOLO method to monitor marine animal populations, demonstrating that YOLO can rapidly and accurately identify marine animals within coral reefs. Roy et al. [25] proposed a high-performance YOLO-based automatic detection model for real-time detection of endangered wildlife. Although advanced versions like YOLOv7 [26] have started addressing multi-scale detection issues, they still struggle with the highly dynamic scenes encountered in drone aerial imagery [27].

On the other hand, researchers have also turned to attention mechanisms to address the small target detection problem. These mechanisms guide the model to focus on critical feature regions of the target, leading to more accurate localization of small objects and reduced computational resource consumption [28]. For instance, Wang et al. [29] introduced a pre-detection concept based on attention mechanisms into the model, which restricts the detection area in images based on the characteristics of small object detection. This reduces redundant information and improves the efficiency of small target detection. Zuo et al. [30] proposed an attention-fused feature pyramid network to address the issue of potential target loss in deep network structures. Their adaptive attention fusion module enhances the spatial positions and semantic information features of infrared small targets in shallow and deep layers, enhancing the network’s ability to represent target features. Zhu et al. [31] introduced a lightweight small target detection network with embedded attention mechanisms. This enhances the network’s ability to focus on regions of interest in images while maintaining a lightweight network structure, thereby improving the detection of small targets in complex backgrounds. These studies indicate that incorporating attention mechanisms into models is beneficial for effectively fusing features from different levels and for enhancing detection efficiency and capability for small targets. Notably, channel attention mechanisms hold advantages in small target detection [32][33]. Channel attention mechanisms better consider information from different channels, focusing on various dimensions of small targets and effectively capturing their features [34]. Moreover, since channel attention mechanisms are unaffected by spatial dimensions, they might be more stable when handling targets with different spatial distributions [35]. This can enhance robustness when dealing with targets of varying sizes, positions, and orientations.

2. Diverse Datasets for Wildlife Detection

Currently, there are numerous publicly available animal datasets based on ground images, as shown in Table 1. Song et al. [36] introduced the Animal10N dataset, containing 55,000 images from five pairs of animals: (cat, lynx), (tiger, cheetah), (wolf, coyote), (gorilla, chimpanzee), and (hamster, guinea pig). The training set comprises 50,000 images, whereas the test set has 5000 images. Ng et al. [37] presented the Animal Kingdom dataset, a vast and diverse collection offering annotated tasks to comprehensively understand natural animal behavior. It includes 50 h of annotated videos for locating relevant animal behavior segments in lengthy videos, 30 K video sequences for fine-grained multi-label action recognition tasks, and 33 K frames for pose estimation tasks across 850 species of six major animal phyla. Cao et al. [38] introduced the Animal-Pose dataset for animal pose estimation, covering five categories: dogs, cats, cows, horses, and sheep, with over 6000 instances from 4000+ images. Additionally, it provides bounding box annotations for seven animal categories: otter, lynx, rhinoceros, hippopotamus, gorilla, bear, and antelope. AnimalWeb [39] is a large-scale, hierarchically annotated animal face dataset, encompassing 22.4 K faces from 350 species and 21 animal families. These facial images were captured in the wild under field conditions and annotated with nine key facial landmarks consistently across the dataset. However, these existing datasets may not sufficiently support the demands of comprehensive aerial wildlife monitoring. For instance, the Animal10N dataset focuses on animal detection rather than wildlife detection in natural environments. The Animal Kingdom and Animal-Pose datasets were not specifically designed for wildlife detection in drone aerial images.

Table 1. A summary of the animal image datasets currently available.

| Dataset | Animal10N | Animal-Pose | AnimalWeb | Animal Kingdom | WAID (Ours) |
| --- | --- | --- | --- | --- | --- |
| Year | 2019 | 2019 | 2020 | 2022 | 2023 |
| Multicategory | √ | √ | √ | √ | √ |
| Wilderness | × | √ | √ | √ | √ |
| Multiscale | × | √ | × | √ | √ |
| Validation set | × | × | × | √ | √ |
| Aerial view | × | × | × | × | √ |

In addition, most publicly available aerial image datasets are concentrated in urban areas and primarily used for tasks like building detection, vehicle detection or segmentation, and road planning [40][41][42]. However, to the best of our knowledge, there are only a few public datasets available for animal detection in aerial images, such as the NOAA Arctic Seal dataset [43] and the Aerial Elephant Dataset (AED) [44]. The NOAA Arctic Seal dataset comprises approximately 80,000 color and infrared (thermal) images, collected during flights conducted by the NOAA Alaska Fisheries Science Center in Alaska in 2019. The images are annotated with around 28,000 ice seal bounding boxes (14,000 on color images and 14,000 on thermal images). The AED is a challenging dataset containing 2074 images, including a total of 15,581 African forest elephants in their natural habitat. The imaging was conducted with consistent methods across a range of background types, resolutions, and times of day. However, these datasets are annotated only for specific species. Beyond these, a number of small-scale aerial wildlife datasets (e.g., of elephants or camels) are available on data collection platforms (e.g., Roboflow). In summary, current aerial wildlife detection datasets have three limitations. First, they are small in size and not species-rich. Second, they often suffer from limited image quantity and inconsistent annotation quality. Third, there are no open-source, large-scale, high-quality wildlife aerial photography datasets yet. These limitations have restricted the applicability of current aerial wildlife datasets in diverse wildlife monitoring scenarios.

3. Object Detection Methods

3.1. Single-Stage Object Detection Method

Single-stage object detection methods are those that require only one feature extraction pass to achieve object detection. Compared to multi-stage detection methods, single-stage methods are faster, although they might have slightly lower accuracy. Typical single-stage object detection methods include the YOLO series, SSD, and RefineDet. The YOLO model was initially introduced by Redmon et al. [45] in 2015. Its core idea is to divide the image into an S × S grid, where each grid cell is responsible for predicting multiple bounding boxes for an object whose center point falls within that cell. However, during training, each bounding box is allowed to predict only one object, limiting its ability to detect multiple objects and thus affecting detection accuracy. Nonetheless, it significantly improves detection speed. Shortly after the publication of the YOLO model, the SSD model was also introduced [46]. SSD uses VGG16 [47] as its backbone network and does not generate candidate boxes. Instead, it performs feature extraction at multiple scales. SSD is trained with data augmentation and a weighted combination of localization loss and confidence loss, resulting in fast speeds. However, due to its challenging training process, its accuracy is relatively lower. In 2018, Ref. [48] proposed the RefineDet detection algorithm. This algorithm comprises the anchor refinement module (ARM), the transfer connection block (TCB), and the object detection module (ODM). The ARM focuses on refining anchor boxes, reducing the proportion of negative samples, and adjusting the size and position of anchor boxes to provide better initialization for regression and classification. The TCB transforms the output of the ARM into the input of the ODM, achieving the fusion of high-level semantic information and low-level feature information. RefineDet uses two-step regression to improve object detection accuracy and achieve end-to-end multi-task training.
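The grid-based assignment described for YOLO can be illustrated with a minimal sketch. The grid size `S=7`, the 448-pixel image size, the box coordinates, and the function names are illustrative assumptions rather than details taken from the cited works; the point is only to show how the center of a box selects the responsible grid cell, and how two nearby small objects can end up in the same cell.

```python
def responsible_cell(box, img_w, img_h, S=7):
    """Return (row, col) of the S x S grid cell that contains the box center.

    box is (x_min, y_min, x_max, y_max) in pixels. S is a hypothetical grid size.
    """
    x_min, y_min, x_max, y_max = box
    cx = (x_min + x_max) / 2.0
    cy = (y_min + y_max) / 2.0
    # Clamp so that a center lying exactly on the right/bottom edge stays in the grid.
    col = min(int(cx / img_w * S), S - 1)
    row = min(int(cy / img_h * S), S - 1)
    return row, col


def assign_objects(boxes, img_w, img_h, S=7):
    """Map each grid cell to the indices of the boxes whose center falls in it."""
    cells = {}
    for i, box in enumerate(boxes):
        cells.setdefault(responsible_cell(box, img_w, img_h, S), []).append(i)
    return cells


# Three made-up animal boxes; the first two are close together.
boxes = [(100, 100, 140, 130), (105, 105, 145, 135), (400, 300, 440, 330)]
cells = assign_objects(boxes, img_w=448, img_h=448, S=7)

# Cells holding more than one object center.
crowded = {cell: idx for cell, idx in cells.items() if len(idx) > 1}
print(cells)
print(crowded)
```

In this sketch the first two boxes share a cell, which hints at why densely packed small animals are difficult for a coarse grid: they compete for the same cell's predictions.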

3.2. Two-Stage Object Detection Method

Compared to single-stage detectors, two-stage object detection algorithms divide the detection process into two main steps: region proposal generation and object classification. The advantage of this approach lies in its ability to select multiple candidate boxes, effectively extracting feature information from targets, resulting in high-precision detection and accurate localization. However, due to the involvement of two independent steps, this can lead to slower detection speeds and more complex models. Girshick et al. [49] introduced the classic R-CNN algorithm, which employed a “Region Proposal + CNN” approach to feature extraction, opening the door for applying deep learning to object detection and laying the foundation for subsequent algorithms. Subsequently, He et al. [50] introduced the SPP-Net algorithm to address the time-consuming feature extraction process in R-CNN. To further improve upon the drawbacks of R-CNN and SPP-Net, Girshick [51] proposed the Fast R-CNN algorithm. Fast R-CNN combined the advantages of R-CNN and SPP-Net, but still did not achieve end-to-end object detection. In 2015, Ren et al. [52] introduced the Faster R-CNN algorithm, which was the first deep learning-based detection algorithm that approached real-time performance. Faster R-CNN introduced Region Proposal Networks (RPNs) to replace traditional Selective Search algorithms for generating proposals. Although Faster R-CNN surpassed the speed limitations of Fast R-CNN, there still remained issues of computational redundancy in the subsequent detection stage. The algorithm continued to use ROI Pooling layers, which could lead to reduced localization accuracy in object detection. Furthermore, Faster R-CNN’s performance in detecting small objects was subpar. Various improvements were subsequently proposed, such as R-FCN [53] and Light-Head R-CNN [54], which further enhanced the performance of Faster R-CNN.
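One commonly cited reason for the localization loss attributed to ROI Pooling is that proposal coordinates are snapped to the coarse feature-map grid. The sketch below is a simplified model of that quantization, not the implementation of any cited detector: the stride of 16, the round-to-nearest rule, the box coordinates, and the function names are all assumptions chosen for illustration. It compares how much a small and a large box overlap with their quantized versions.

```python
import math


def quantize_box(box, stride=16):
    """Snap box corners to the nearest feature-map cell boundary (assumed rule)."""
    return tuple(math.floor(v / stride + 0.5) * stride for v in box)


def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


small_box = (37, 41, 59, 62)     # roughly 22 x 21 pixels
large_box = (37, 41, 359, 362)   # roughly 322 x 321 pixels

iou_small = iou(small_box, quantize_box(small_box))
iou_large = iou(large_box, quantize_box(large_box))
print(f"small box IoU after quantization: {iou_small:.3f}")
print(f"large box IoU after quantization: {iou_large:.3f}")
```

Under these assumptions the same few pixels of rounding error cost the small box far more overlap than the large one, which is consistent with the observation that small objects suffer most from coarse pooling.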

4. Gated Channel Attention Mechanism

Attention mechanisms can be incorporated into neural networks in various ways. Taking convolutional neural networks (CNNs) as an example, attention can be introduced in the spatial dimension as well as in the channel dimension. For instance, in the Inception [55] network, the multi-scale approach assigns different weights to parallel convolutional layers, offering an attention-like mechanism. Attention can also be introduced in the channel dimension [56], and it is possible to combine both spatial and channel attention mechanisms [57]. The channel attention mechanism focuses on the differences in importance among channels within a convolutional layer, adjusting the weights of channels to enhance feature extraction. On the other hand, the spatial attention mechanism builds upon the channel attention concept, suggesting that the importance of each pixel in different channels varies. By adjusting the weights of all pixels across different channels, the network’s feature extraction capabilities are enhanced.
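The difference between the two kinds of attention can be seen in the shape of the weights they produce. The following NumPy sketch is deliberately parameter-free: real modules insert learned layers between the pooling step and the sigmoid, which are omitted here, and the spatial variant shown uses one mask shared by all channels, which is only one possible design. Function names and tensor sizes are illustrative assumptions.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(x):
    """x has shape (C, H, W). Produces one weight per channel."""
    squeezed = x.mean(axis=(1, 2))        # global average pool -> (C,)
    weights = sigmoid(squeezed)           # learned layers would go here
    return x * weights[:, None, None], weights


def spatial_attention(x):
    """x has shape (C, H, W). Produces one weight per pixel location."""
    pooled = x.mean(axis=0)               # average over channels -> (H, W)
    mask = sigmoid(pooled)                # learned layers would go here
    return x * mask[None, :, :], mask


rng = np.random.default_rng(0)
feat = rng.normal(size=(8, 4, 4))         # a made-up feature map

out_c, w = channel_attention(feat)
out_s, m = spatial_attention(feat)
print("channel weights shape:", w.shape)  # one scalar per channel
print("spatial mask shape:", m.shape)     # one scalar per location
```

Because the channel weights do not depend on where in the image a response occurs, rescaling by them treats every spatial position of a channel identically.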

When applied to small object detection in aerial images, the channel attention mechanism is considered more suitable and has been studied extensively as a way to enhance small object detection while also limiting model complexity. For example, Wang et al. [58] addressed the trade-off between model detection performance and complexity by proposing an efficient channel attention module (ECA), which introduces a small number of parameters but yields significant performance improvements. Tong et al. [59] introduced a channel attention-based DenseNet network that rapidly and accurately captures key features from images, thus improving the classification of remote sensing image scenes. To tackle the issue of low recognition rates and high miss rates in current object detection tasks, Yang et al. [60] proposed an improved YOLOv3 algorithm incorporating a gated channel attention mechanism (GCAM) and an adaptive upsampling module. Results showed that the improved approach adapts to multi-scale object detection tasks in complex scenes and reduces the omission rate of small objects. Hence, this research attempts to use a channel attention mechanism to enhance the performance of YOLO on drone imagery.
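To make the "small number of parameters" point concrete, here is a minimal NumPy sketch in the spirit of an ECA-style module: global average pooling, a one-dimensional convolution across neighbouring channels, and a sigmoid gate. It is a sketch under stated assumptions, not the reference implementation: the kernel values are fixed by hand instead of learned, zero padding is assumed at the channel boundaries, and the function name `eca_gate` is hypothetical.

```python
import numpy as np


def eca_gate(x, kernel):
    """Rescale channels of x (C, H, W) using a 1D convolution over channel descriptors.

    kernel is a 1D array of odd length k; these k values are the only weights used.
    """
    k = kernel.shape[0]
    if k % 2 != 1:
        raise ValueError("kernel length must be odd")
    desc = x.mean(axis=(1, 2))                       # (C,) channel descriptors
    padded = np.pad(desc, k // 2)                    # zero padding (assumption)
    # Each channel's logit mixes its descriptor with those of its k-1 neighbours.
    logits = np.array([padded[i:i + k] @ kernel for i in range(desc.shape[0])])
    gates = 1.0 / (1.0 + np.exp(-logits))            # values in (0, 1)
    return x * gates[:, None, None], gates


x = np.ones((4, 2, 2))                               # toy feature map
kernel = np.array([1.0, 1.0, 1.0])                   # hand-picked, k = 3
out, gates = eca_gate(x, kernel)
print("gates:", np.round(gates, 3))
print("number of attention weights:", kernel.size)
```

The number of weights here depends only on the kernel length and not on the number of channels, which is the property that keeps this kind of module lightweight.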

5. Drone-Based Wildlife Detection

In the field of wildlife detection using drones, UAVs have made wildlife conservation technology more accessible. The benefits of aerial imagery from drones have contributed to wildlife conservation.

Drones have automated the process of capturing high-altitude aerial photos of wildlife habitats, greatly simplifying the process of obtaining images and monitoring wildlife using automated techniques such as image recognition. This technology eliminates the necessity for capturing images manually and enables more efficient monitoring of wildlife populations. For example, Hodgson et al. [61] applied UAV technology to wildlife monitoring in tropical and polar environments and demonstrated through their study that UAV counts of nesting bird colonies were an order of magnitude more accurate than traditional ground counts. Sirmacek et al. [62] proposed a computer vision method for quantifying animals in aerial images, resulting in successful detection and counting. The approach holds promise for effective wildlife conservation management. In a study comparing the efficacy of object- and pixel-based classification methods, Ruwaimana et al. [63] established that UAV images were more informative than satellite images in mapping mangrove forests in Malaysian wetlands. This opens the possibility for automating the detection and analysis of wildlife in aerial images using computer vision techniques, hence rendering wildlife conservation studies based on these images more efficient and feasible. Raoult et al. [64] employed drones in examining marine animals, which enabled the study of numerous aspects of ecology, behavior, health, and movement patterns of these animals. Overall, UAVs and aerial imagery have significant research value and potential for development in wildlife conservation.

Moreover, aerial imagery obtained with drones is useful in various wildlife conservation applications due to its high level of accuracy, vast coverage, and broad field of view. Such imagery, particularly at 4K resolution or higher, allows animal details such as category and quantity to be identified accurately [65]. For instance, the distinctive stripes of zebras can easily be recognized [66], which is essential in counting the number of animals and assessing their level of endangerment. Aerial photos that cover a broad territory can be used to survey wildlife in remote or hard-to-reach locations, aiding in complete assessments of animal distribution and habitat utilization [67]. Thanks to this comprehensive coverage, UAVs can survey areas encompassing vast wetlands or dense forests to detect elusive wildlife populations and monitor their ranges. This helps develop effective conservation plans [68].

However, current algorithms for wildlife detection using UAVs still encounter various challenges. Firstly, the accuracy of detecting small targets is inadequate, and existing algorithms fail to satisfy the requirements for accurate detection of small targets. As UAVs capture images from far away, small targets often span only a few tens of pixels. For instance, the enhanced YOLOv4 model proposed by Tan et al. [69] for detection in UAV aerial images achieved a mean average precision of only 45%. Wang et al. [12] found that identifying small animals within obstructed jungle environments during UAV wildlife investigations is challenging. Secondly, meeting practical use standards requires better real-time performance, and current techniques struggle to achieve the response speed needed during flight. For instance, Benjdira et al. [70] utilized the Faster R-CNN method to detect vehicles in aerial images. The experimental results revealed a detection time of 1.39 s, which cannot satisfy real-time requirements. Fortunately, YOLO, a one-stage detection algorithm, exhibits outstanding real-time performance, making it a natural choice as the primary model for this study. Thirdly, publicly accessible training data remain limited, which inevitably leads to model overfitting and weak generalization in practical scenarios. These limitations indicate that a gap remains between existing algorithms and the precise, efficient identification of wildlife in aerial images, and that ongoing improvement is needed. Consequently, this study is motivated to develop a real-time detection technique (e.g., based on YOLO) for small-target wildlife in UAV imagery and to release a high-quality, open-source training dataset to advance research in the field.
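A rough calculation shows why animals occupy so few pixels and why a 1.39 s detection time falls short of real time. The sketch below uses the standard ground sampling distance relation for a straight-down view. The camera parameters, the 2.5 m animal length, the flight altitudes, and the 640-pixel network input width are hypothetical values chosen for illustration and do not come from the cited studies; the 1.39 s figure is read here as time per image, which is also an assumption.

```python
def ground_sampling_distance(sensor_width_mm, focal_length_mm, altitude_m, image_width_px):
    """Metres of ground covered by one pixel for a nadir (straight-down) view."""
    return (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)


def object_size_px(object_length_m, gsd_m_per_px):
    """Length of an object in pixels at a given ground sampling distance."""
    return object_length_m / gsd_m_per_px


def frames_per_second(seconds_per_image):
    """Throughput implied by a per-image processing time."""
    return 1.0 / seconds_per_image


# Hypothetical camera and animal (not taken from the source).
CAMERA = dict(sensor_width_mm=13.2, focal_length_mm=8.8, image_width_px=5472)
ANIMAL_LENGTH_M = 2.5
NETWORK_INPUT_WIDTH_PX = 640

for altitude_m in (100, 300):
    gsd = ground_sampling_distance(altitude_m=altitude_m, **CAMERA)
    native_px = object_size_px(ANIMAL_LENGTH_M, gsd)
    # Size after the full frame is downscaled to the network input width.
    resized_px = native_px * NETWORK_INPUT_WIDTH_PX / CAMERA["image_width_px"]
    print(f"{altitude_m} m: {native_px:.1f} px native, {resized_px:.1f} px after resize")

print(f"1.39 s per image is about {frames_per_second(1.39):.2f} frames per second")
```

With these assumed values, tripling the altitude shrinks the animal to a third of its pixel length, and downscaling the frame for the detector shrinks it further, which is the regime where small-target detection becomes the limiting factor.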