| Version | Summary | Created by | Modification | Content Size | Created at | Operation |
|---|---|---|---|---|---|---|
| 1 | | Yuya Hiruta | -- | 2600 | 2023-08-02 12:32:15 | |
| 2 | | Dean Liu | -3 word(s) | 2597 | 2023-08-03 02:45:47 | |
| 3 | | Dean Liu | -4 word(s) | 2593 | 2023-08-08 02:32:39 | |


This entry describes a novel approach for estimating the 3D information of an observed scene from a monocular image captured by a catadioptric imaging system consisting of an omnidirectional camera and a spherical mirror. The researchers aim to develop a method that does not depend on learning and that can capture a wide range of 3D information using a compact device.

1. Introduction

The growing demand for 3D information in various fields, such as understanding the traffic environment in autonomous driving or providing free viewpoints at sports events, has led to the rapid spread of technologies for acquiring and displaying 3D information. These technologies fall into two main types: active sensing, which irradiates objects with light for measurement, and passive sensing, which uses cameras as light-receiving sensors. Active sensing methods such as LiDAR (Light Detection and Ranging) [1] provide 3D information directly but require additional light-emitting devices. Passive sensing with cameras is necessary when visual information, such as texture, must be incorporated into the measured 3D information. However, camera-based approaches face challenges related to the scale of the observation system, the measurement range, and the need for prior knowledge.

The widely used Structure from Motion (SfM) technique [2] enables high-precision 3D reconstruction using multiple or moving cameras. However, it requires large-scale observation equipment or dynamic imaging. To achieve compactness, researchers have explored estimating 3D information from single-shot images captured by a single camera, but this restricts the observation range. Omnidirectional cameras can overcome this limitation.

Deep learning-based 3D estimation from monocular omnidirectional images [3] has attracted attention. However, because it relies on prior knowledge in the form of training data, estimating 3D information for unknown objects is difficult: accuracy may decrease for objects with insufficient training data, such as rare objects in a scene.

Mirrors offer one way to obtain additional viewpoints in a monocular image. A catadioptric system, commonly found in telescopes, combines mirrors and lenses; when a camera serves as the lens, the arrangement is called a catadioptric imaging system. With a curved mirror, such a system captures the light rays reflected on the mirror surface and can observe a wider scene than a conventional camera. However, most catadioptric imaging systems [4][5][6][7] fix the positional relationship between the mirror and the camera, which limits the observation range and the degree of freedom. Agrawal et al. [8] proposed using multiple curved mirrors to estimate 3D information when the positional relationship in the catadioptric imaging system is not fixed. These approaches require multiple mirrors [6][8] or multiple imaging systems [5][7], sacrificing compactness and single-shot imaging. The researchers therefore propose a 3D estimation method for a catadioptric imaging system that uses a single curved mirror whose 3D position is unknown. Before estimating the 3D information of the observed scene, the method estimates the 3D position of the mirror by analyzing the mirror image region in the captured image. The objective is to estimate a wide range of 3D information from a monocular image captured with a compact device, without relying on training data.

The proposed method is expected to be effective for dynamic tubular objects, because it estimates 3D information most effectively toward the sides of the system. For example, it can be applied to 3D estimation with an endoscope, a medical device that captures images inside the body. Sagawa et al. [9] used an endoscope with an attachment for omnidirectional observation, enabling wide-range observation inside the body. With such a compact device, the proposed method makes it possible to estimate a broad range of 3D information about the body, which changes over time, from a single-shot image. In addition, because the positional relationship within the system is estimated from the captured images, the system, unlike a stereo system with two cameras, requires no prior calibration and is robust to external vibration and long-term use. In a stereo system, the relative position of the cameras can deviate from its state at calibration time, reducing accuracy. In tunnel excavation, drill vibration creates exactly this situation, so the method is also expected to be effective there.

2. 3D Estimation Method Using an Omnidirectional Camera and a Spherical Mirror

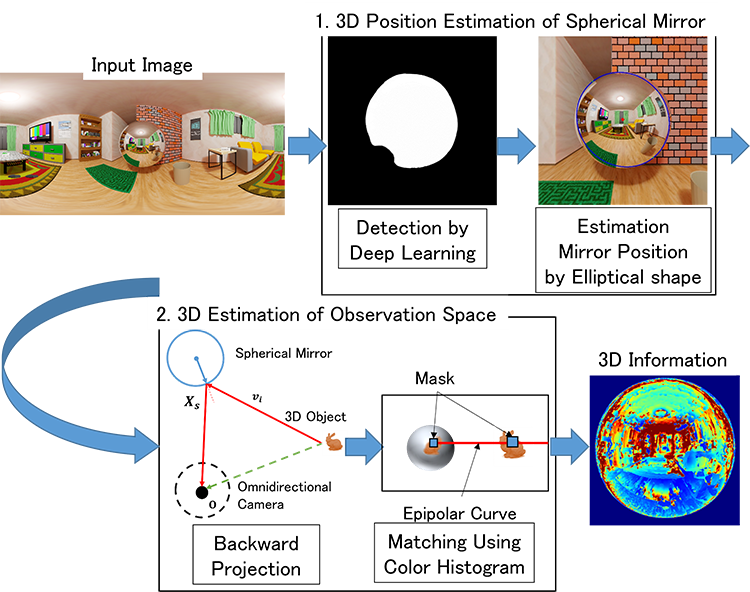

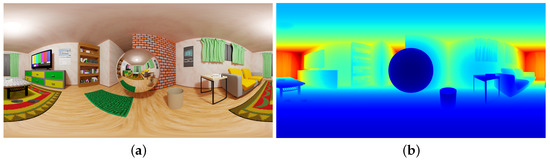

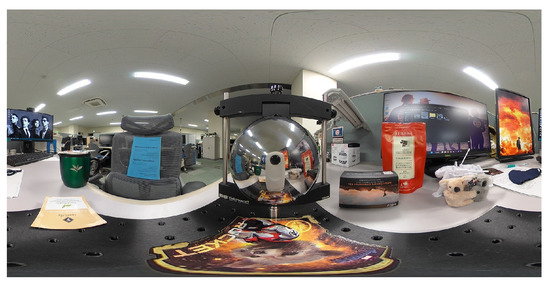

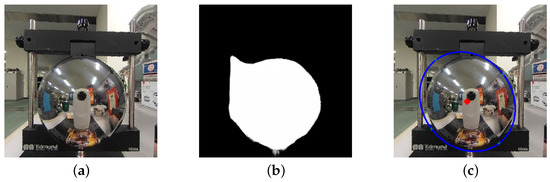

The proposed 3D estimation process follows the flow shown in Figure 1. A spherical mirror of known size is assumed to be placed in the scene, and images containing the mirror are captured with an omnidirectional camera.

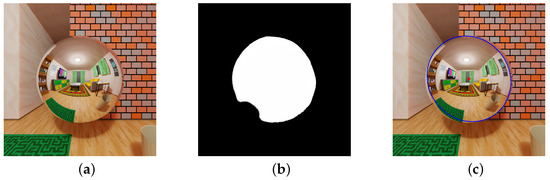

To estimate 3D information in the catadioptric imaging system, the 3D position of the spherical mirror must first be determined. The method estimates the mirror's position by analyzing the mirror image observed in the omnidirectional image. The mirror region is obtained by applying a mirror-region segmentation network to the omnidirectional image; an ellipse is then fitted to this region, and the 3D position of the spherical mirror is estimated from the shape of the ellipse.
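The position-estimation step can be illustrated with a minimal sketch. It rests on assumptions the source does not state: the omnidirectional image is stored in an equirectangular layout (the helper `equirect_to_ray` and its axis convention are hypothetical), and, instead of fitting an ellipse in the image as the described method does, the sketch fits the silhouette cone directly on the viewing sphere. For a central camera, every ray grazing a sphere of radius r whose center lies at distance d makes the same angle α with the direction to the center, with sin α = r/d, so the boundary rays of the mirror region determine both the direction and the distance of the mirror.

```python
import numpy as np


def equirect_to_ray(col, row, width, height):
    """Unit viewing ray for an equirectangular pixel.

    Assumed (hypothetical) convention: longitude spans [-pi, pi) from left
    to right, latitude spans [pi/2, -pi/2] from top to bottom, z points up.
    """
    lon = (col / width) * 2.0 * np.pi - np.pi
    lat = np.pi / 2.0 - (row / height) * np.pi
    return np.array([np.cos(lat) * np.cos(lon),
                     np.cos(lat) * np.sin(lon),
                     np.sin(lat)])


def sphere_from_silhouette(rays, radius):
    """Estimate the center of a sphere of known radius from boundary rays.

    rays: (N, 3) viewing directions (camera at the origin) through pixels on
          the boundary of the segmented mirror region, N >= 3.
    Each grazing ray makes the same angle alpha with the direction to the
    center, and sin(alpha) = radius / distance.
    """
    rays = np.asarray(rays, dtype=float)
    rays = rays / np.linalg.norm(rays, axis=1, keepdims=True)
    # Grazing rays satisfy axis . ray = cos(alpha): their tips lie on a plane.
    mean = rays.mean(axis=0)
    centered = rays - mean
    _, vecs = np.linalg.eigh(centered.T @ centered)  # ascending eigenvalues
    axis = vecs[:, 0]                                 # plane normal = cone axis
    cos_alpha = float(axis @ mean)
    if cos_alpha < 0.0:                               # point the axis toward the sphere
        axis, cos_alpha = -axis, -cos_alpha
    sin_alpha = np.sqrt(max(1.0 - cos_alpha ** 2, 1e-12))
    return (radius / sin_alpha) * axis                # center = distance * axis
```

In practice the boundary pixels would come from the contour of the segmentation mask; with noisy contours, the eigenvector-based plane fit acts as a least-squares estimate of the cone axis and opening angle.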

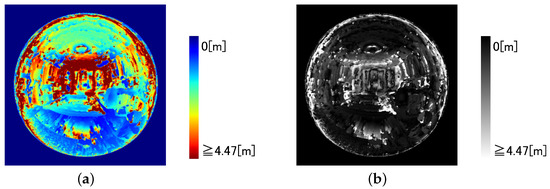

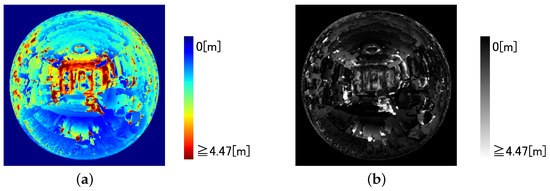

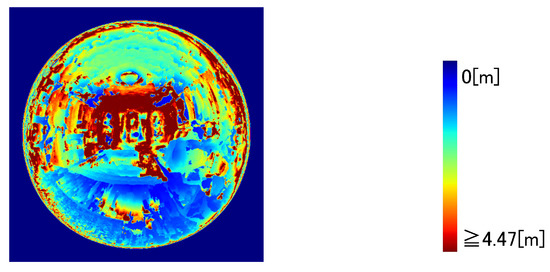

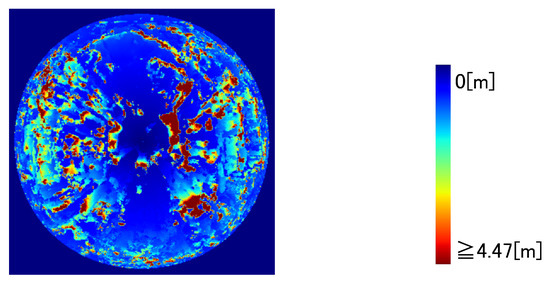

The 3D information of the captured scene is then estimated from the estimated 3D position of the spherical mirror. The process begins with backward projection, which determines the reflection points on the mirror surface and the directions of the light rays incident from objects in the scene. Next, to obtain 3D information observed from multiple viewpoints, the method searches the omnidirectional image for the area corresponding to the mirror image at each reflection point. Stereo matching is performed along the ray directions obtained by backward projection, the appearance similarity is computed for each candidate, and the 3D information with the highest similarity is selected. Because the spherical shape distorts the mirror image, this similarity is computed with color histograms. The process is applied to every pixel of the spherical mirror region in the omnidirectional image.
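Backward projection can be sketched as a ray-sphere intersection followed by the law of reflection. This minimal sketch assumes the camera center is at the origin, the mirror is an ideal sphere with the estimated center and known radius, and the camera ray for each mirror pixel is already available; the function name `backward_project` is hypothetical.

```python
import numpy as np


def backward_project(ray, center, radius):
    """Reflect a camera ray off the spherical mirror.

    ray: viewing direction from the camera center (origin) through a pixel
         inside the mirror region.
    center, radius: estimated mirror center and known mirror radius.
    Returns (reflection_point, reflected_direction); the scene point imaged
    at this pixel lies on reflection_point + s * reflected_direction, s > 0.
    Returns None if the ray misses the mirror.
    """
    v = np.asarray(ray, dtype=float)
    v = v / np.linalg.norm(v)
    c = np.asarray(center, dtype=float)
    # |t v - c|^2 = radius^2  ->  t^2 - 2 t (v . c) + |c|^2 - radius^2 = 0
    b = float(v @ c)
    disc = b * b - (float(c @ c) - radius ** 2)
    if disc < 0.0:
        return None
    t = b - np.sqrt(disc)                 # nearer root: the visible surface
    point = t * v
    normal = (point - c) / radius         # outward unit normal at the hit point
    reflected = v - 2.0 * float(v @ normal) * normal  # law of reflection
    return point, reflected
```

The reflected direction has unit length because reflection preserves the norm of `v`, so the depth parameter `s` along it is a metric distance from the reflection point.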

By following this flow, the proposed method enables the estimation of 3D information in the scene captured by the catadioptric imaging system, leveraging the 3D position estimation of the spherical mirror and the analysis of the mirror reflections.
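The stereo-matching step can be sketched as a one-dimensional search along each reflected ray: for each candidate depth s, the point P + s·d is projected into the omnidirectional image, and the color histogram of the patch there is compared with the histogram of the mirror-image patch. This sketch is illustrative only; the equirectangular projection, the joint RGB histogram with 8 bins per channel, histogram intersection as the similarity score, the fixed square patch, and all function names are assumptions rather than details given in the source.

```python
import numpy as np


def project_equirect(point, width, height):
    """Project a 3D point (camera at origin) to an equirectangular pixel.

    Assumed (hypothetical) convention: longitude atan2(y, x) across columns,
    latitude from z (pointing up) down the rows.
    """
    x, y, z = point
    lon = np.arctan2(y, x)                          # [-pi, pi]
    lat = np.arcsin(z / np.linalg.norm(point))      # [-pi/2, pi/2]
    col = int((lon + np.pi) / (2.0 * np.pi) * width) % width
    row = min(int((np.pi / 2.0 - lat) / np.pi * height), height - 1)
    return col, row


def color_histogram(patch, bins=8):
    """Joint RGB histogram of a uint8 patch, normalized to sum to 1."""
    q = patch.reshape(-1, 3).astype(np.int64) * bins // 256
    idx = (q[:, 0] * bins + q[:, 1]) * bins + q[:, 2]
    hist = np.bincount(idx, minlength=bins ** 3).astype(float)
    return hist / hist.sum()


def match_depth(image, mirror_patch, point, direction, depths, half=3, bins=8):
    """Return (depth, score) maximizing histogram intersection between the
    mirror patch and the direct-view patch at point + depth * direction."""
    h, w, _ = image.shape
    ref = color_histogram(mirror_patch, bins)
    best_depth, best_score = None, -1.0
    for s in depths:
        col, row = project_equirect(point + s * direction, w, h)
        rows = slice(max(row - half, 0), min(row + half + 1, h))
        cols = np.arange(col - half, col + half + 1) % w  # wrap around in longitude
        patch = image[rows][:, cols]
        score = float(np.minimum(ref, color_histogram(patch, bins)).sum())
        if score > best_score:
            best_depth, best_score = s, score
    return best_depth, best_score
```

Comparing histograms rather than raw pixels makes the score insensitive to where colors fall inside the patch, which is one way to tolerate the distortion that the spherical mirror introduces into the mirror image.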

Figure 1. Process of 3D estimation based on a catadioptric imaging system with an omnidirectional camera and a spherical mirror: 1. A network segments the mirror image region in the omnidirectional image, and the 3D position of the spherical mirror is estimated from the shape of the mirror image. 2. The 3D information of the scene is estimated from the 3D position of the spherical mirror: the incident light rays from objects, obtained from the mirror's 3D position, are used in stereo matching to find the part of the omnidirectional image that corresponds to the mirror image.

3. Simulation

3.1. Simulation with CG Model of a Room

3.2. Discussion of Room Model Simulation

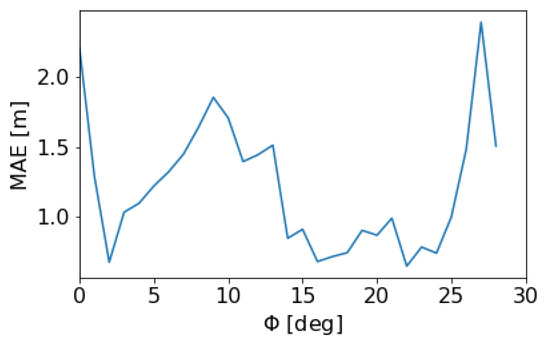

3.3. Verification of the Effect of 3D Position Estimation Error of a Spherical Mirror on 3D Information Estimation

3.4. Simulation with Real-World Images

References

- Behroozpour, B.; Sandborn, P.; Wu, M.; Boser, B. Lidar System Architectures and Circuits. IEEE Commun. Mag. 2017, 55, 135–142.

- Dhond, U.; Aggarwal, J. Structure from Stereo—A Review. IEEE Trans. Syst. Man Cybern. 1989, 19, 1489–1510.

- Zioulis, N.; Karakottas, A.; Zarpalas, D.; Daras, P. OmniDepth: Dense Depth Estimation for Indoors Spherical Panoramas. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.

- Yamazawa, K.; Yagi, Y.; Yachida, M. HyperOmni Vision: Visual Navigation with an Omnidirectional Image Sensor. Syst. Comput. Jpn. 1997, 28, 36–47.

- Chaen, A.; Yamazawa, K.; Yokoya, N.; Takemura, H. Acquisition of Three-Dimensional Information Using Omnidirectional Stereo Vision. In Proceedings of the Asian Conference on Computer Vision, Hong Kong, China, 8–10 January 1998.

- Sagawa, R.; Kurita, N.; Echigo, T.; Yagi, Y. Compound Catadioptric Stereo Sensor for Omnidirectional Object Detection. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Sendai, Japan, 28 September–2 October 2004.

- Micusik, B.; Pajdla, T. Autocalibration & 3D Reconstruction with Non-central Catadioptric Cameras. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004.

- Agrawal, A.; Taguchi, Y.; Ramalingam, S. Beyond Alhazen’s Problem: Analytical Projection Model for Non-central Catadioptric Cameras with Quadric Mirrors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 21–23 June 2011.

- Sagawa, R.; Sakai, T.; Echigo, T.; Yagi, K.; Shiba, M.; Higuchi, K.; Arakawa, T.; Yagi, Y. Omnidirectional Vision Attachment for Medical Endoscopes. In Proceedings of the IEEE Workshop on Omnidirectional Vision, Camera Networks and Non-classical Cameras, Marseille, France, 17 October 2008.