

| Version | Summary | Created by | Modification | Content Size | Created at | Operation |
|---|---|---|---|---|---|---|
| 1 | | Ovishake Sen | -- | 4062 | 2023-06-29 22:28:44 | |
| 2 | | Peter Tang | + 11 word(s) | 4073 | 2023-06-30 03:24:14 | |


Cite


Sen, O.; Sheehan, A.M.; Raman, P.R.; Khara, K.S.; Khalifa, A.; Chatterjee, B. Machine-Learning Methods for Speech and Handwriting Detection. Encyclopedia. Available online: https://encyclopedia.pub/entry/46242 (accessed on 08 February 2026).


Brain–Computer Interfaces (BCIs) have become increasingly popular due to their potential applications in diverse fields, ranging from the medical sector (people with motor and/or communication disabilities) to cognitive training, gaming, and Augmented Reality/Virtual Reality (AR/VR). A BCI that can decode and recognize the neural signals involved in speech and handwriting could greatly assist individuals with severe motor impairments in meeting their communication and interaction needs. Advances in this field could lead to a highly accessible and interactive communication platform for these individuals.

Keywords: neural signals; machine learning; speech recognition; handwriting recognition; signal processing

1. Introduction

Acquiring and analyzing neural signals can greatly benefit individuals with limited movement and communication abilities. Neurological disorders, such as Parkinson’s disease, multiple sclerosis, infectious diseases, stroke, injuries of the central nervous system, developmental disorders, locked-in syndrome [1], and cancer, often lead to impairments in physical activity [2]. The acquisition of neural signals, along with stimulation and/or neuromodulation using BCIs [3], aims to alleviate some of these conditions. In addition, neural signals have been utilized in various fields such as security and privacy, cognitive training, imagined or silent speech recognition [4][5], emotion recognition [6][7], mental state recognition [8], human identification [9][10], speech communication [11], synthesized speech communication [12], gaming [13], Internet of Things (IoT) applications [14], Brain–Machine Interface (BMI) applications [15][16][17], neuroscience research [18][19], and speech activity detection [20][21], among others. In assistive applications, neural signals are first collected from patients and then processed and analyzed; the processed signals are then used to operate assistive devices that help patients with movement and communication. Neural signals can also be used to gauge the mental state of the general population, detect brain injuries or sleep disorders, and identify individual emotions [22]. Speech-based BCIs have shown great potential for assisting patients who have experienced brainstem strokes or amyotrophic lateral sclerosis (ALS) and have consequently been diagnosed with locked-in syndrome (LIS). These patients can interact with others only through restricted movements, such as eye movements or blinking [23]. BCI technology can also facilitate high-performance communication with individuals who are paralyzed [24][25].
Additionally, speech BCIs can benefit individuals with aphasia, a language disorder caused by pathological changes in speech-related cortical regions [26]. Researchers are currently developing intracortical BCIs to help individuals with motor disabilities communicate and interact with their environment [27]. However, this technology relies on recordings from the primary motor cortex, which can exhibit day-to-day variability [28].

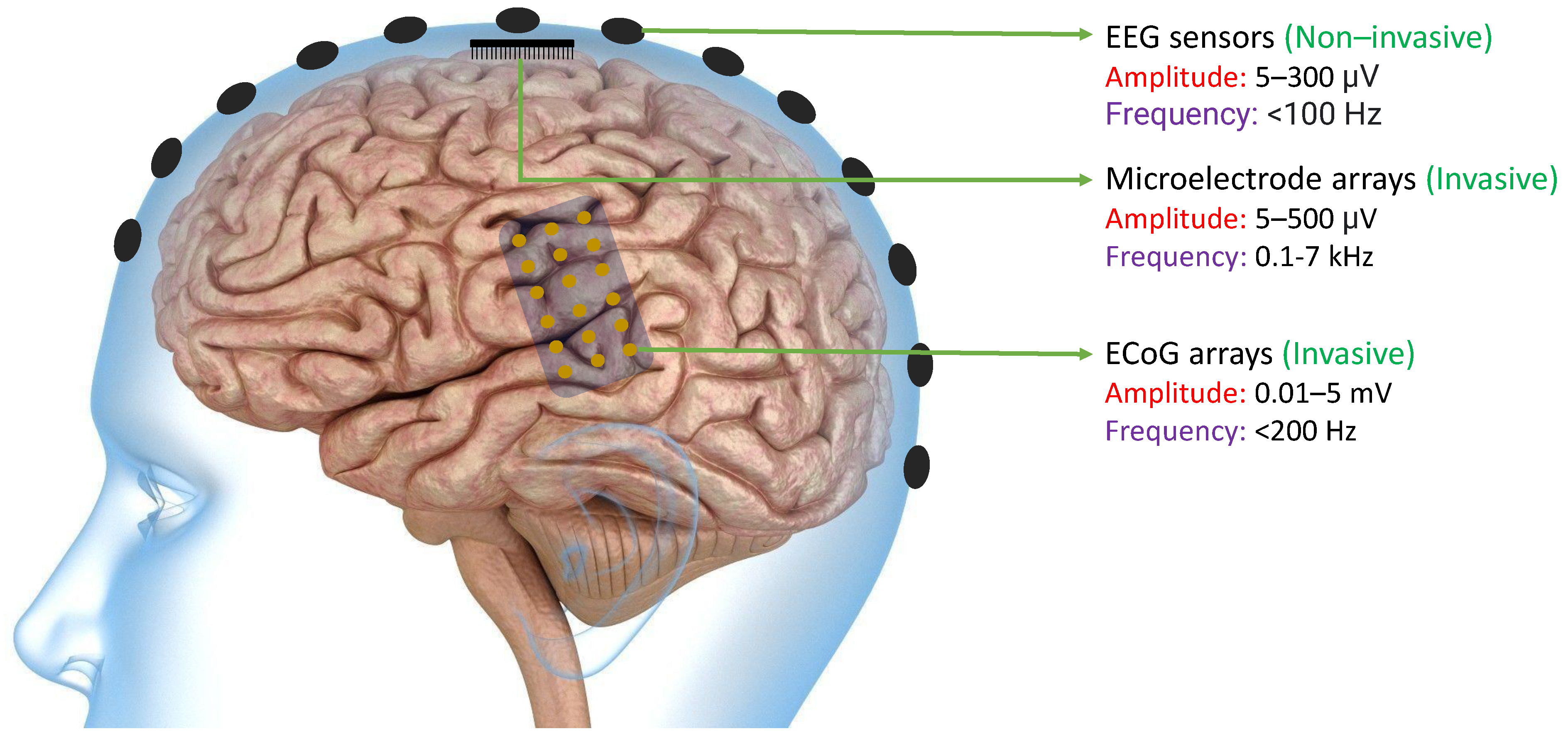

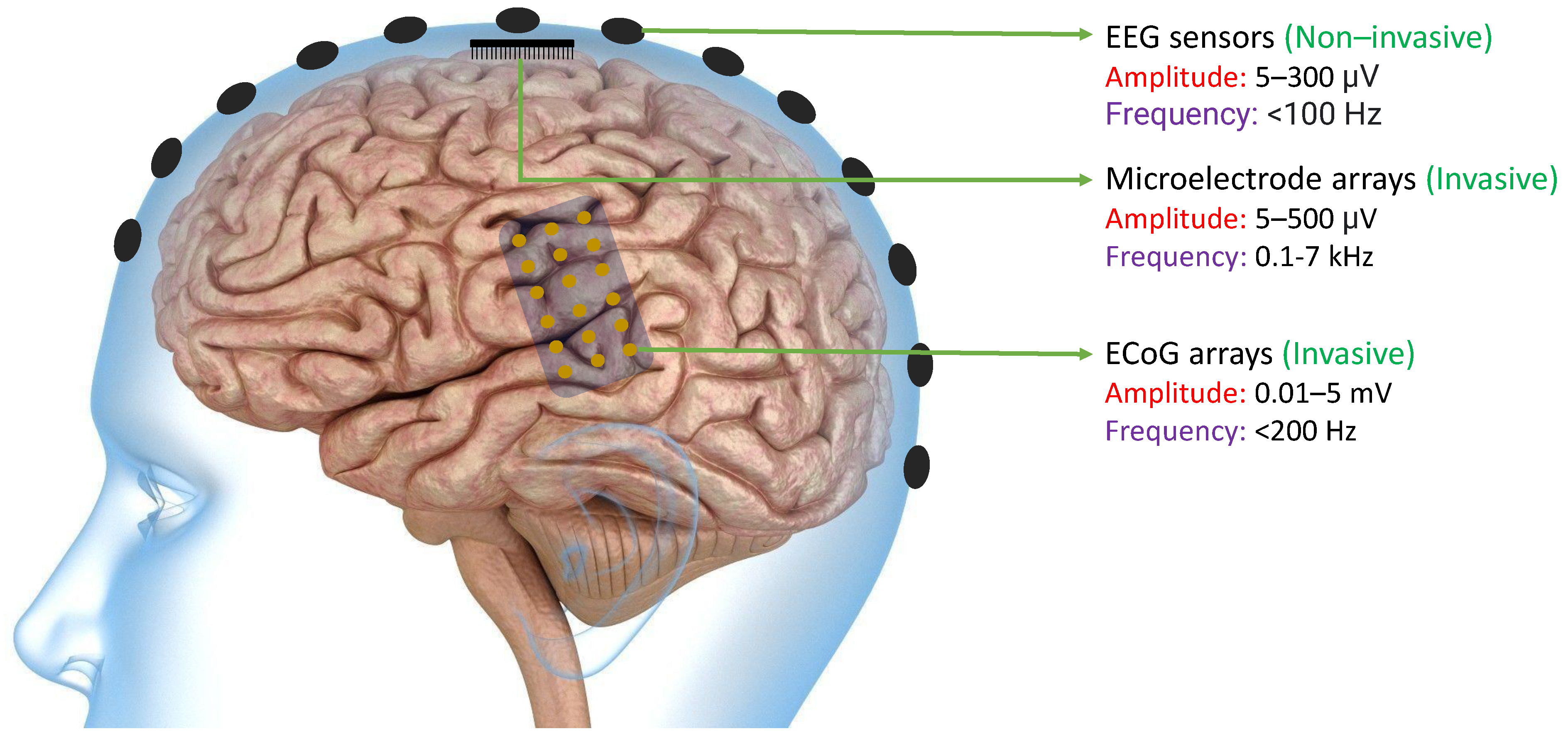

Neural signals can be collected using two primary methods: invasive and non-invasive. In invasive methods, signals (for example, the electrocorticogram (ECoG) [29][30]) are collected from inside the skull, which requires surgery. In non-invasive methods, signals such as the electroencephalogram (EEG) [31][32] are collected from the scalp without surgery. However, signals recorded non-invasively usually have smaller amplitudes than those recorded invasively. Nevertheless, non-invasive acquisition is easier and safer, so there is strong research interest in improving the signal-to-noise ratio (SNR) [33] of non-invasive recordings through dedicated signal processing techniques. Both invasive and non-invasive signals can be used to detect brain activity patterns associated with handwriting, speech [34], silent speech [35], emotions, and mental states.

Another neural signal widely used in BCI research is the steady-state visual-evoked potential (SSVEP): a measurable, objective oscillation in electrical brain activity elicited by visual stimuli flickering at a fixed frequency. SSVEPs provide a stable and consistent neural response, can be detected non-invasively (e.g., with EEG), and enable high information transfer rates. Because different visual targets elicit different SSVEP responses, SSVEPs are also used to implement EEG-based BCI spellers [36].
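To illustrate why SSVEPs suit spellers, the following minimal Python sketch identifies which flicker frequency a user is attending to by comparing the spectral power of a single EEG channel at each candidate stimulus frequency and its second harmonic. It assumes one pre-filtered channel, a known sampling rate, and a window containing a whole number of stimulus cycles. The function names and the synthetic 12 Hz signal are hypothetical, and practical SSVEP spellers typically use multichannel methods such as canonical correlation analysis instead.

```python
import math

def power_at(signal, fs, freq):
    """Spectral power of `signal` at `freq` Hz, computed as a single-bin DFT."""
    re = sum(x * math.cos(2 * math.pi * freq * n / fs) for n, x in enumerate(signal))
    im = -sum(x * math.sin(2 * math.pi * freq * n / fs) for n, x in enumerate(signal))
    return (re * re + im * im) / len(signal)

def detect_ssvep(signal, fs, stimulus_freqs, harmonics=2):
    """Return the stimulus frequency whose fundamental plus harmonics carry the most power."""
    def score(f):
        return sum(power_at(signal, fs, h * f) for h in range(1, harmonics + 1))
    return max(stimulus_freqs, key=score)

if __name__ == "__main__":
    fs = 250                              # Hz, assumed sampling rate
    t = [n / fs for n in range(2 * fs)]   # 2 s analysis window
    # Synthetic "EEG": a 12 Hz SSVEP response plus a weaker 10 Hz alpha component.
    eeg = [math.sin(2 * math.pi * 12 * ti) + 0.3 * math.sin(2 * math.pi * 10 * ti) for ti in t]
    print(detect_ssvep(eeg, fs, [8.0, 10.0, 12.0, 15.0]))  # 12.0
```

Summing harmonics is a design choice: SSVEP responses often carry power at multiples of the stimulus frequency, so scoring them together can make detection more robust than using the fundamental alone.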

BCI technology for detecting handwriting and speech from neural signals is a relatively new research area. Individuals with severe motor impairments can greatly benefit from this type of BCI technology, as it can significantly enhance their communication and interaction capabilities [37]. Consequently, there is a growing demand for research in this field. This entry aims to provide readers with an overview of the research conducted to date on recognizing handwriting and speech from neural signals.

2. Regions of the Brain Responsible for Handwriting and Speech Production

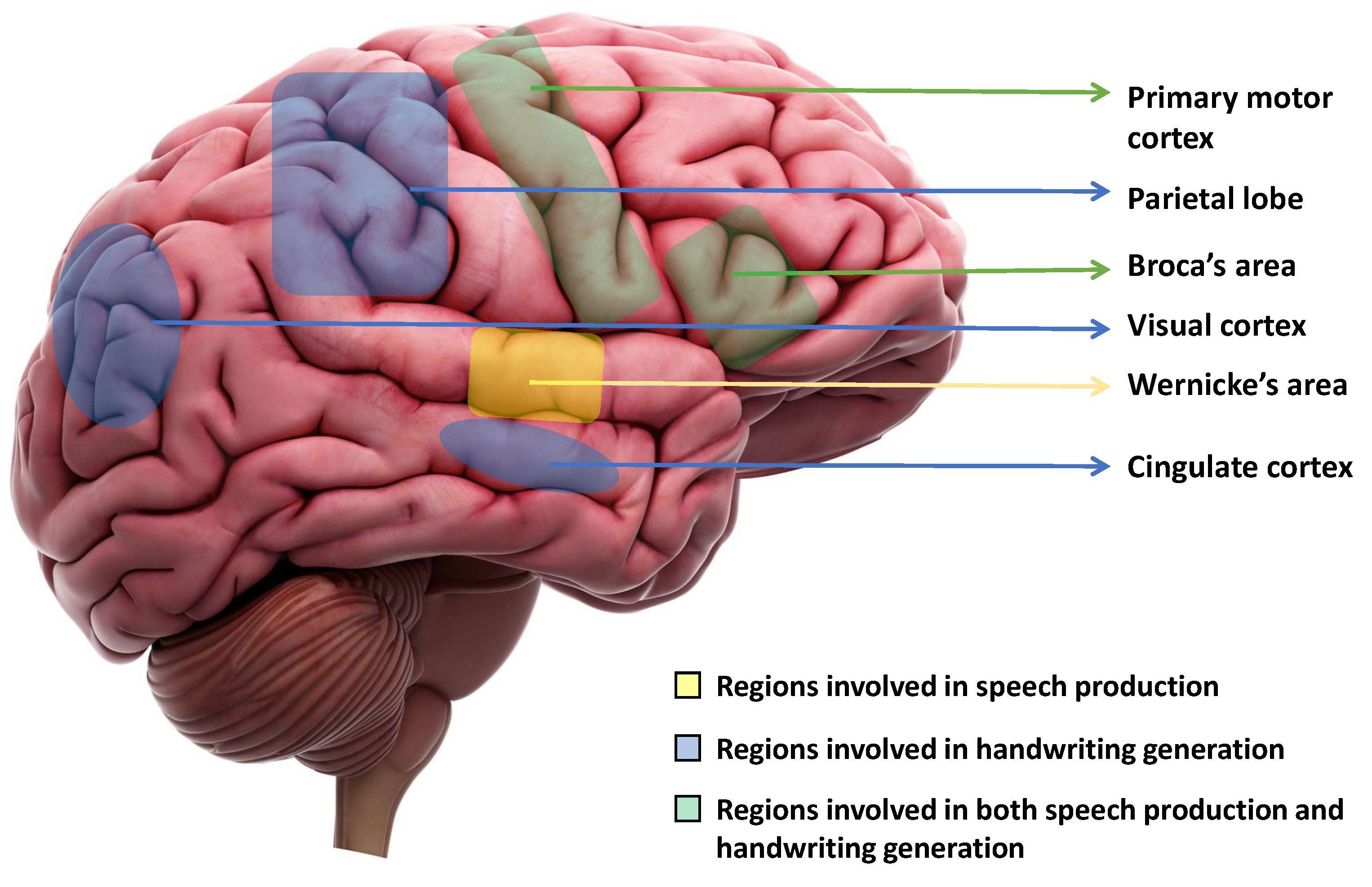

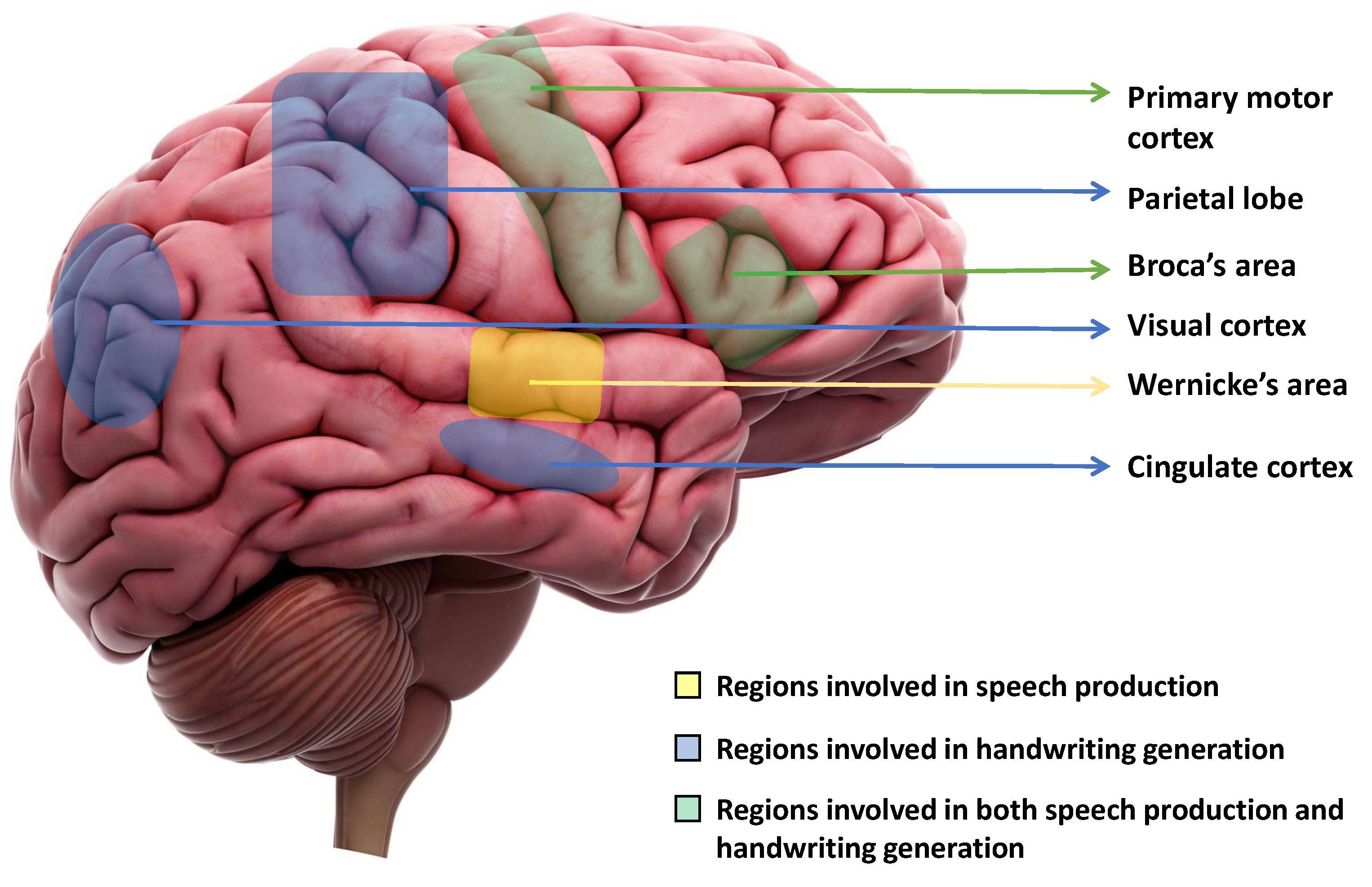

The production of speech involves several stages in the brain, including the translation of thoughts into words, the construction of sentences, and the physical articulation of sounds [38]. Three key areas of the brain are directly involved in speech production: the primary motor cortex, Broca’s area, and Wernicke’s area [39]. Wernicke’s area is primarily responsible for producing coherent speech that conveys meaningful information. Damage to Wernicke’s area causes Wernicke’s aphasia, also known as fluent aphasia, which can impair comprehension and lead to fluent but meaningless sentences [40]. Broca’s area helps generate smooth speech and construct sentences before speaking. Damage to Broca’s area results in Broca’s aphasia, or non-fluent aphasia, which can cause a person to lose the ability to produce speech sounds altogether or to speak only slowly and in short sentences [39]. Finally, the motor cortex plans and executes the muscle movements necessary for speech production, including movements of the mouth, lips, tongue, and vocal cords. Damage to the primary motor cortex can paralyze the muscles used for speaking; however, therapy and repetition can help reduce these impairments [41].

When writing is initiated, ideas are first organized in the mind, and the physical act of writing is then carried out by the brain, which controls the movements of the hand, arm, and fingers [42]. This process is initiated by the cingulate cortex. The visual cortex then creates an internal picture of what the writing will look like. Next, the left angular gyrus [43] converts the visual cortex signal into a comprehension of words, a process that also involves Wernicke’s area. Finally, the parietal lobe and the primary motor cortex work together to coordinate all of these signals and produce the motor commands that control the hand, arm, and finger movements required for writing [42]. Willett et al. [44] proposed a discrete BCI capable of accurately decoding movements of all four limbs from the hand knob area of the motor cortex [45][46]. Figure 1 shows the regions of the brain that are primarily responsible for speech production and for the motor movements of handwriting.

Figure 1. Key regions of the brain that are fundamentally responsible for speech production and for initiating the motor movements that generate handwriting. Wernicke’s area is responsible for speech production. The parietal lobe, visual cortex, and cingulate cortex are responsible for handwriting generation. The primary motor cortex and Broca’s area are responsible for both speech production and handwriting generation.

3. Methods of Collecting Data from Brain

The primary objective of many BCIs is to capture neural signals in a form that external software or devices can readily interpret. Neural signals can be obtained from the brain through various methods, such as EEG sensors, microelectrode arrays, or ECoG arrays. As shown in Figure 2, EEG signals are acquired non-invasively from the scalp, which is why they typically have lower amplitudes than other neural signals. ECoG arrays, in contrast, produce higher-amplitude signals because they are implanted invasively on the surface of the brain; however, because of the physical size of their electrodes, their spatial resolution is still limited. Finally, microelectrode arrays can acquire high-frequency spikes with much better spatial resolution [47]. In all of these methods, the signals must be processed so that BCI software or devices can effectively decipher them [48].

Figure 2. Existing technologies, namely EEG sensors, ECoG arrays, and microelectrode arrays, used to acquire neural signals, with the characteristics of the acquired signals, including amplitude and frequency bands [47]. Neural signals acquired with ECoG arrays typically have higher amplitudes, and those acquired with microelectrode arrays higher frequencies, than signals from other existing technologies.

4. Articles Related to Handwriting and Speech Recognition Using Neural Signals

4.1. Speech Recognition Using Non-Invasive Neural Datasets

In 2017, Kumar et al. [49] proposed a Random Forest (RF)-based silent speech recognition system using EEG signals. They introduced a coarse-to-fine envisioned speech recognition model, in which the coarse level predicts the category of the envisioned speech and the fine level predicts the actual class within that category. The model performed three types of classification: digits, characters, and images. The EEG dataset comprised 30 text and non-text objects imagined by multiple users. After coarse-level classification, a fine-level classification accuracy of 57.11% was achieved with the RF classifier. The study also examined the impact of aging and of the time elapsed since the EEG signal was recorded.
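The coarse-to-fine idea can be sketched as a two-stage classifier: a first model predicts the category (for example, digit, character, or image), and a second, category-specific model predicts the class within it. To keep this minimal Python sketch dependency-free, it substitutes a nearest-centroid classifier for the Random Forest used by Kumar et al.; the class and function names, the feature vectors, and the toy labels are all hypothetical.

```python
import math
from collections import defaultdict

def centroids(samples):
    """Mean feature vector per label; `samples` is a list of (features, label) pairs."""
    sums, counts = {}, defaultdict(int)
    for x, y in samples:
        if y not in sums:
            sums[y] = [0.0] * len(x)
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}

def nearest(cents, x):
    """Label whose centroid is closest (Euclidean distance) to feature vector x."""
    return min(cents, key=lambda y: math.dist(cents[y], x))

class CoarseToFine:
    """Hypothetical two-stage classifier: category first, then class within category."""

    def __init__(self, samples):
        # samples: list of (features, category, fine_class) triples
        self.coarse = centroids([(x, cat) for x, cat, _ in samples])
        self.fine = {
            cat: centroids([(x, cls) for x, c, cls in samples if c == cat])
            for cat in self.coarse
        }

    def predict(self, x):
        cat = nearest(self.coarse, x)             # coarse level: which category?
        return cat, nearest(self.fine[cat], x)    # fine level: which class within it?

if __name__ == "__main__":
    train = [([0.0, 0.0], "digit", "1"), ([0.0, 1.0], "digit", "2"),
             ([10.0, 0.0], "char", "A"), ([10.0, 1.0], "char", "B")]
    model = CoarseToFine(train)
    print(model.predict([9.8, 0.9]))  # ('char', 'B')
```

Replacing each centroid model with a Random Forest (for instance, scikit-learn's `RandomForestClassifier`) would keep the same two-stage structure; the benefit of the hierarchy is that each fine-level model only has to separate classes within one category.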

In 2017, Rosinová et al. [50] proposed a voice command recognition system using EEG signals. EEG data were collected from 20 participants aged 18 to 28 years (13 females and 7 males). EEG data for 50 voice commands were recorded 5 times during the training phase. The proposed model was tested on a 23-year-old participant whose EEG data were collected while speaking the 50 voice commands 30 times. Hidden Markov models (HMMs) and Gaussian mixture models (GMMs) were used to train and test the proposed model. The authors report that the highest classification accuracy was achieved in the alpha, beta, and theta frequency bands. However, the amount of recorded data was insufficient, and the accuracy was very low.

In 2019, Krishna et al. [51] presented a method for automatic speech recognition from EEG signals based on Gated Recurrent Units (GRUs). The method was trained on only four English words (“yes”, “no”, “left”, and “right”) spoken by four different individuals. It can detect speech in the presence of background noise; a noise level of 60 dB was used in the study. The paper reported a recognition accuracy of 99.38% even in the presence of this background noise.
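To show what a GRU computes at each time step, here is a minimal pure-Python sketch of a single GRU cell run over a sequence of EEG feature vectors; the final hidden state would then feed a classifier over the four words. It uses one common formulation of the gates (update gate z, reset gate r, candidate state n). The parameter dictionary `p` and its key names are hypothetical, the weights would come from training (not shown), and this is not Krishna et al.'s exact architecture.

```python
import math

def matvec(m, v):
    """Matrix (list of rows) times vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def gru_step(x, h, p):
    """One GRU update. `p` holds matrices W_*, U_* and bias vectors b_* (hypothetical keys)."""
    # Update gate: how much of the previous state to keep.
    z = [sigmoid(a + b + c) for a, b, c in zip(matvec(p["W_z"], x), matvec(p["U_z"], h), p["b_z"])]
    # Reset gate: how much of the previous state feeds the candidate.
    r = [sigmoid(a + b + c) for a, b, c in zip(matvec(p["W_r"], x), matvec(p["U_r"], h), p["b_r"])]
    rh = [ri * hi for ri, hi in zip(r, h)]
    # Candidate state from the current input and the reset-scaled previous state.
    n = [math.tanh(a + b + c) for a, b, c in zip(matvec(p["W_n"], x), matvec(p["U_n"], rh), p["b_n"])]
    # Interpolate between the candidate and the previous state.
    return [(1 - zi) * ni + zi * hi for zi, ni, hi in zip(z, n, h)]

def gru_encode(sequence, p, hidden_size):
    """Run the GRU over a sequence of feature vectors and return the final hidden state."""
    h = [0.0] * hidden_size
    for x in sequence:
        h = gru_step(x, h, p)
    return h
```

In practice, a framework layer such as PyTorch's `torch.nn.GRU` would replace this hand-written cell; the gating is what lets the network retain information across many EEG time steps.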

In 2020, Kapur et al. [35] proposed a silent speech recognition system based on a convolutional neural network (CNN) using neuromuscular signals. The authors describe it as the first non-invasive, real-time silent speech recognition system. The dataset comprised 10 trials of 15 sentences from three multiple sclerosis (MS) patients. The system achieved 81% accuracy and an information transfer rate of 203.73 bits per minute.

In 2021, Vorontsova et al. [2] proposed a silent speech recognition system based on ResNet-18 and GRU models using EEG signals. The researchers collected EEG data from 268 healthy participants of varying age, gender, education, and occupation. The study focused on classifying nine Russian words as silent speech. The dataset consists of 40-channel EEG recorded at a sampling rate of 500 Hz. The model achieved 85% accuracy in classifying the nine words. Interestingly, the authors found that a smaller dataset collected from a single participant can yield higher accuracy than a larger dataset collected from a group of people. However, the out-of-sample accuracy in this study is relatively low.

4.2. Speech Recognition Using Invasive Neural Datasets

In 2014, Mugler et al. [52] published the first study decoding the entire set of phonemes of American English. In linguistics, a phoneme is the smallest distinctive unit of sound in a language that can differentiate one word from another [53]. The authors used ECoG signals from four individuals, recorded with a high-density (1–2 mm) electrode array covering 4 cm of speech motor cortex. They achieved 36% accuracy in classifying phonemes from the ECoG signals using Linear Discriminant Analysis (LDA). However, word identification accuracy from phonemic analysis alone was only 18.8%, which is too low for practical communication.
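LDA assigns a trial to the class whose linear discriminant score is highest, assuming that all classes share one covariance matrix Σ. The minimal Python sketch below fits LDA on two features per trial (for example, the power at two ECoG electrodes, a hypothetical choice) and classifies with the score `δ_k(x) = xᵀ Σ⁻¹ μ_k − ½ μ_kᵀ Σ⁻¹ μ_k + log π_k`, where μ_k is the class mean and π_k the class prior. The two-feature restriction, the function names, and the toy phoneme labels are assumptions made to keep the matrix algebra short; Mugler et al.'s actual feature set is not reproduced here.

```python
import math

def lda_fit(samples):
    """Fit 2-feature LDA: per-class means, pooled 2x2 covariance inverse, log priors.

    `samples` is a list of (features, label) pairs with len(features) == 2.
    """
    by_class = {}
    for x, y in samples:
        by_class.setdefault(y, []).append(x)
    means = {y: [sum(v[i] for v in xs) / len(xs) for i in range(2)] for y, xs in by_class.items()}
    # Pooled within-class scatter, divided by (n_samples - n_classes).
    s = [[0.0, 0.0], [0.0, 0.0]]
    for y, xs in by_class.items():
        for v in xs:
            d = [v[0] - means[y][0], v[1] - means[y][1]]
            for i in range(2):
                for j in range(2):
                    s[i][j] += d[i] * d[j]
    dof = len(samples) - len(by_class)
    s = [[s[i][j] / dof for j in range(2)] for i in range(2)]
    det = s[0][0] * s[1][1] - s[0][1] * s[1][0]
    inv = [[s[1][1] / det, -s[0][1] / det], [-s[1][0] / det, s[0][0] / det]]
    log_priors = {y: math.log(len(xs) / len(samples)) for y, xs in by_class.items()}
    return means, inv, log_priors

def lda_predict(model, x):
    """Return the class with the largest linear discriminant score."""
    means, inv, log_priors = model

    def score(y):
        m = means[y]
        w = [inv[0][0] * m[0] + inv[0][1] * m[1],
             inv[1][0] * m[0] + inv[1][1] * m[1]]          # Σ⁻¹ μ_k
        return x[0] * w[0] + x[1] * w[1] - 0.5 * (m[0] * w[0] + m[1] * w[1]) + log_priors[y]

    return max(means, key=score)
```

Because the covariance is shared, the decision boundaries are linear, which keeps the number of parameters small; this is one reason LDA is a common baseline when only a few trials per phoneme are available.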

In 2019, Anumanchipalli et al. [54] proposed a speech restoration technique that converts brain activity into intelligible synthesized speech at the rate of a fluent speaker. A bidirectional long short-term memory (BLSTM) network was used to decode kinematic representations of articulation from high-density ECoG signals collected from five individuals.

In 2019, Moses et al. [55] proposed a real-time question-and-answer decoding method using ECoG recordings. High-gamma activity was extracted from the ECoG signals in real time, and decoding used the Viterbi algorithm, the standard method for finding the most likely sequence of hidden states in an HMM. The authors achieved 61% decoding accuracy for produced utterances and 76% for perceived utterances.
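The Viterbi algorithm finds the single most likely sequence of hidden states for a sequence of observations by keeping, for each state, only the best path that ends in it. The minimal Python sketch below works in log space to avoid numerical underflow on long sequences. The two hidden states (`rest`, `speak`), the discretized high-gamma observations (`low`, `high`), and all probabilities are hypothetical toy values, not parameters from Moses et al.

```python
import math

def viterbi(observations, states, log_start, log_trans, log_emit):
    """Most likely hidden-state path for an HMM, computed in log space.

    log_start[s], log_trans[s][t], and log_emit[s][o] are log-probabilities.
    Returns (path, log_probability_of_path).
    """
    # best[s]: log-prob of the best path ending in state s; paths[s]: that path.
    best = {s: log_start[s] + log_emit[s][observations[0]] for s in states}
    paths = {s: [s] for s in states}
    for obs in observations[1:]:
        new_best, new_paths = {}, {}
        for t in states:
            # Best predecessor state for arriving in state t.
            prev = max(states, key=lambda s: best[s] + log_trans[s][t])
            new_best[t] = best[prev] + log_trans[prev][t] + log_emit[t][obs]
            new_paths[t] = paths[prev] + [t]
        best, paths = new_best, new_paths
    last = max(states, key=lambda s: best[s])
    return paths[last], best[last]

if __name__ == "__main__":
    L = math.log
    states = ["rest", "speak"]
    log_start = {"rest": L(0.8), "speak": L(0.2)}
    log_trans = {"rest": {"rest": L(0.7), "speak": L(0.3)},
                 "speak": {"rest": L(0.3), "speak": L(0.7)}}
    log_emit = {"rest": {"low": L(0.9), "high": L(0.1)},
                "speak": {"low": L(0.2), "high": L(0.8)}}
    path, _ = viterbi(["low", "high", "high", "low"], states, log_start, log_trans, log_emit)
    print(path)  # ['rest', 'speak', 'speak', 'rest']
```

Keeping only one surviving path per state makes the cost linear in sequence length (times the square of the number of states), which is what makes this decoding practical in real time.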

In 2020, Makin et al. [56] published an article on machine translation of cortical activity to text using ECoG signals. The authors trained a Recurrent Neural Network (RNN) to encode each sentence-length sequence of neural activity. The encoder-decoder framework was employed for machine translation. The authors decoded cortical activity to text based on words, as they are more distinguishable than phonemes. For training purposes, 30–50 sentences of data were used.

In 2022, Metzger et al. [57] proposed an Artificial Neural Network (ANN)-based model, built mainly on GRU layers, for recognizing silently attempted speech. ECoG activity, together with a speech detection model, was used to spell sentences. Only code words from the North Atlantic Treaty Organization (NATO) phonetic alphabet [58] were used during spelling, to make the neural activity for different letters more discriminable. In online mode, a 1152-word vocabulary model achieved a 6.13% character error rate at 29.4 characters per minute. Beam search was used to find the most likely sentences. However, only one participant was involved in training and spelling.
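Character error rate (CER), the metric reported above, is usually computed as the Levenshtein edit distance between the decoded text and the reference text, divided by the number of reference characters. The minimal Python sketch below assumes that standard definition; how Metzger et al. aggregated CER across sentences is not specified here, and the function names are illustrative.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    prev = list(range(len(hyp) + 1))          # distances from the empty prefix of ref
    for i, r in enumerate(ref, start=1):
        cur = [i]
        for j, h in enumerate(hyp, start=1):
            cur.append(min(prev[j] + 1,               # delete r
                           cur[j - 1] + 1,            # insert h
                           prev[j - 1] + (r != h)))   # substitute (free if equal)
        prev = cur
    return prev[-1]

def character_error_rate(ref, hyp):
    """CER = edit distance / number of reference characters."""
    return edit_distance(ref, hyp) / len(ref)

if __name__ == "__main__":
    # Two dropped characters out of 11 reference characters.
    print(character_error_rate("hello world", "helo wrld"))  # 2/11, about 0.18
```

Only two rows of the dynamic-programming table are kept at a time, so memory grows with the hypothesis length rather than with the product of both lengths.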

4.3. Handwritten Character Recognition Using Non-Invasive Neural Datasets

In September 2015, Chen et al. [59] proposed an EEG-based BCI speller. The study implemented a Joint Frequency-Phase Modulation (JFPM)-based SSVEP speller to achieve high-speed spelling. Eighteen participants took part; the training data consisted of six blocks, each containing 40 trials (one per character) presented in random order. The speller reached a spelling rate of up to 60 characters per minute and an information transfer rate of up to 5.32 bits per second.
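Information transfer rates such as the ones above are commonly computed with the Wolpaw formula, which gives the bits conveyed per selection as `B = log2 N + P log2 P + (1 − P) log2((1 − P)/(N − 1))` for N targets and accuracy P; dividing by the time per selection gives a rate. The minimal Python sketch below implements that standard formula. It is not necessarily the exact procedure Chen et al. used, and the time per selection is an input the caller must supply.

```python
import math

def wolpaw_bits_per_selection(n_targets, accuracy):
    """Bits conveyed per selection under the Wolpaw ITR formula."""
    n, p = n_targets, accuracy
    if p >= 1.0:
        return math.log2(n)          # perfect accuracy: the full log2(N) bits
    if p <= 1.0 / n:
        return 0.0                   # at or below chance, commonly clipped to zero
    return math.log2(n) + p * math.log2(p) + (1 - p) * math.log2((1 - p) / (n - 1))

def itr_bits_per_minute(n_targets, accuracy, seconds_per_selection):
    """ITR in bits/min, given the (caller-supplied) time needed for one selection."""
    return wolpaw_bits_per_selection(n_targets, accuracy) * 60.0 / seconds_per_selection
```

For a 40-target speller, the per-selection maximum is log2 40 ≈ 5.32 bits, reached only at 100% accuracy; shortening the time per selection raises the rate but usually lowers accuracy, so the two must be traded off.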

In 2017, Saini et al. [60] presented a method for identifying and verifying individuals using their signatures and EEG signals. The study collected signatures and EEG signals from 70 individuals aged 15 to 55. Each participant provided 10 signature samples, and EEG signals were captured with an Emotiv EPOC+ neuro headset. The researchers used 1400 signature and EEG samples for user identification and an equal number for user verification. They evaluated the method with three types of tests: signatures only, EEG signals only, and signature-EEG fusion. Signature-EEG fusion achieved the highest personal identification accuracy, 98.24%. For user verification, the EEG-based model performed better than both the signature-based model and the signature-EEG fusion model. The authors also found that individuals aged 15 to 25 had higher identification accuracy than other age groups, and that males had higher identification accuracy than females.

In 2019, Kumar et al. [61] proposed a user authentication system that combines dynamic signatures and EEG signals. Signatures and EEG signals were collected simultaneously from 58 individuals while they signed on mobile phones. A total of 1980 samples of dynamic signatures and EEG signals were collected; EEG was recorded with an Emotiv EPOC+ device, and signatures were written on the mobile screen. A BLSTM neural network-based classifier was trained for both dynamic signatures and EEG signals. Fusing signature and EEG data with the Borda count technique achieved an accuracy of 98.78%. A Borda count decision-fusion model was also used for user verification, yielding a false acceptance rate of 3.75%.
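Borda count fusion combines several ranked candidate lists (here, one from a signature classifier and one from an EEG classifier) by awarding each candidate points according to its rank and summing the points across lists. The minimal Python sketch below shows the basic scheme; it assumes every classifier ranks the same set of candidates, the user IDs are hypothetical, and Kumar et al.'s exact scoring and tie-breaking may differ.

```python
def borda_fuse(rankings):
    """Fuse rankings (each ordered most to least likely) with the Borda count.

    In a ranking of length n, the candidate at position i receives n - 1 - i points.
    Returns all candidates sorted by total points, best first.
    """
    scores = {}
    for ranking in rankings:
        n = len(ranking)
        for i, candidate in enumerate(ranking):
            scores[candidate] = scores.get(candidate, 0) + (n - 1 - i)
    # Highest total first; ties broken alphabetically so the result is deterministic.
    return sorted(scores, key=lambda c: (-scores[c], c))

if __name__ == "__main__":
    signature_rank = ["user_a", "user_c", "user_b"]   # hypothetical classifier outputs
    eeg_rank = ["user_b", "user_a", "user_c"]
    print(borda_fuse([signature_rank, eeg_rank]))     # ['user_a', 'user_b', 'user_c']
```

In the example, neither modality alone agrees on the top identity, but `user_a` ranks consistently high in both and therefore wins after fusion; this robustness to one weak modality is the usual motivation for rank-level fusion.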

In 2021, Pei et al. [62] proposed a method for mapping scalp-recorded brain activities to handwritten character recognition using EEG signals. In the study, five participants provided their neural signal data while writing the phrase “HELLO, WORLD!” CNN-based classifiers were employed for the analysis. The accuracy of handwritten character recognition varied among participants, ranging from 76.8% to 97%. The accuracy of cross-participant recognition ranged from 11.1% to 60%.
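The gap between within-participant and cross-participant accuracy depends on how the data are split. A cross-participant evaluation typically holds out each participant in turn and trains on everyone else (leave-one-participant-out), so the model never sees the test participant's data. The minimal Python sketch below generates such splits; the tuple layout and participant IDs are hypothetical, and Pei et al.'s exact evaluation protocol is not reproduced here.

```python
def leave_one_participant_out(samples):
    """Yield (held_out_id, train, test) splits, one per participant.

    `samples` is a list of (participant_id, features, label) tuples.
    """
    participants = sorted({pid for pid, _, _ in samples})
    for held_out in participants:
        train = [s for s in samples if s[0] != held_out]
        test = [s for s in samples if s[0] == held_out]
        yield held_out, train, test

if __name__ == "__main__":
    data = [("p1", [0.1], "H"), ("p1", [0.2], "E"), ("p2", [0.3], "H"), ("p3", [0.4], "L")]
    for pid, train, test in leave_one_participant_out(data):
        print(pid, len(train), len(test))
```

Averaging accuracy over these splits estimates how well a model generalizes to a new user, which is typically much harder than generalizing to new trials from a user already seen in training.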

4.4. Handwritten Character Recognition Using Invasive Neural Datasets

In 2021, Willett et al. [63] proposed a brain-to-text communication method using neural signals from the motor cortex. The authors used an RNN to decode text from neural activity. The model decoded 90 characters per minute with 94.1% raw accuracy in real time, and with greater than 99% accuracy offline when a language model was added. Sentences were labeled using an HMM, and a Viterbi search was used for offline language modeling. The authors also demonstrated that the neural activity associated with handwritten letters is easier to distinguish than that associated with point-to-point movements.

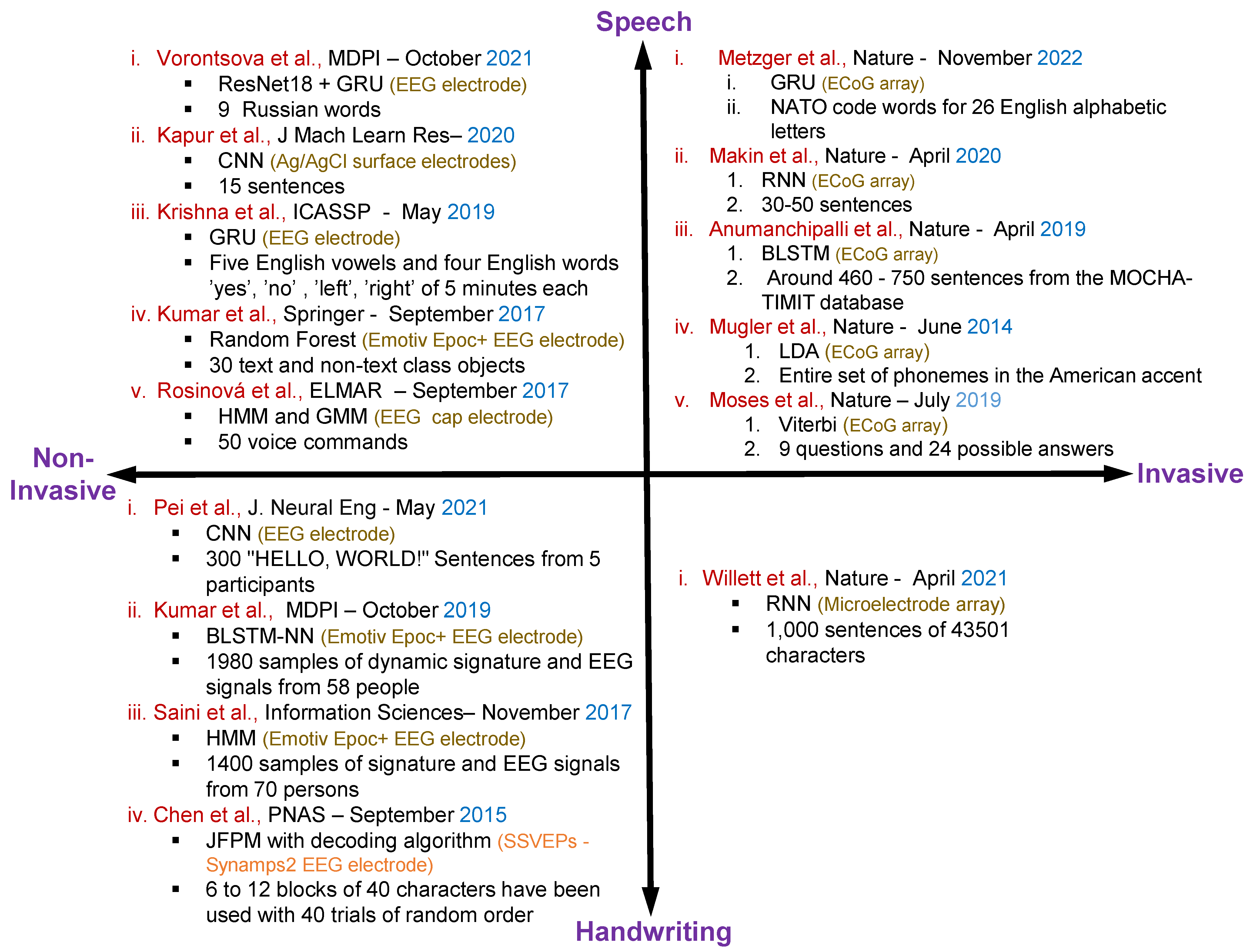

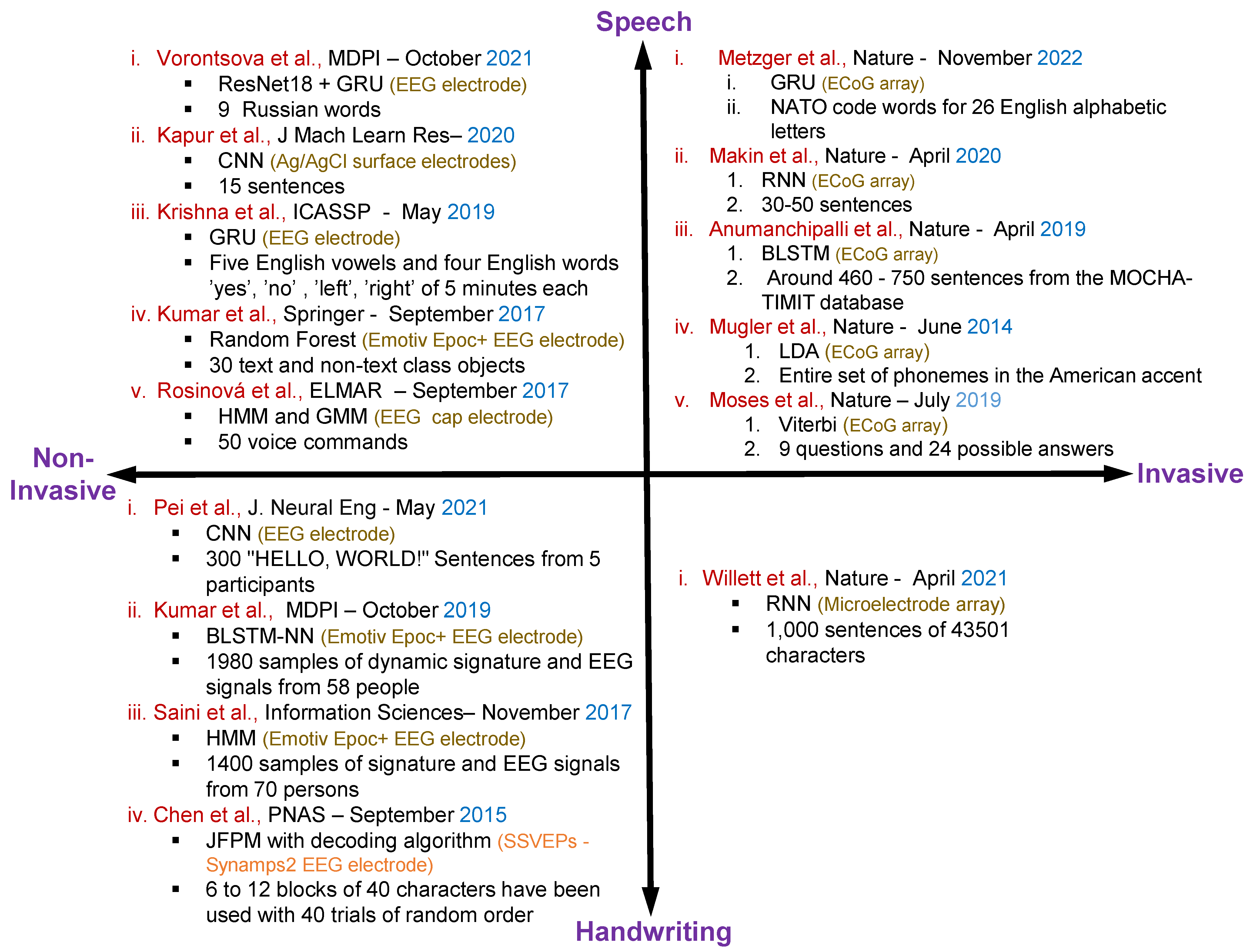

Figure 3 shows the overall summary for the speech and handwritten character recognition-based articles with invasive and non-invasive neural signal acquisition.

Figure 3. Summary of the existing articles on speech and handwritten character recognition with invasive and non-invasive neural signal acquisition, including methods, datasets, electrode specifications, and publication details of the individual articles [2][35][49][50][51][52][54][55][56][57][59][60][61][62][63].

5. General Principle of Using Machine Learning Methods for Neural Signals

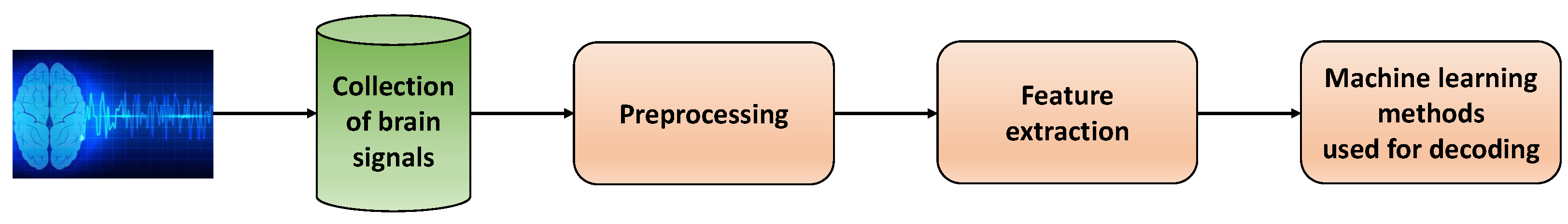

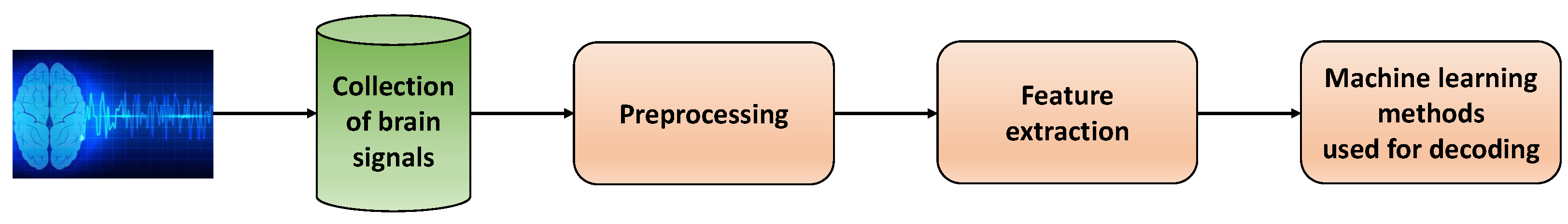

Research on neural signals typically follows a standard workflow that begins with the acquisition of neural signals and ends with their decoding by the best-performing methods. This entry focuses on research that uses machine learning and classical techniques.

Figure 4 depicts a step-by-step diagram commonly utilized in existing research articles that work with neural signals. To begin, invasive or non-invasive processes are used to collect, digitize and store neural signals. These signals then undergo a series of preprocessing techniques to enhance their quality. Next, meaningful features are extracted from the processed signals. Finally, machine learning methods are employed to accurately decode the signals.
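The steps in Figure 4 can be sketched end to end in a few small functions. In this minimal Python sketch, each trial is a list of channels (lists of samples); preprocessing removes each channel's mean and applies a moving average as a crude low-pass filter (a stand-in for the band-pass filters used in practice), features are per-channel log-variances, and a nearest-centroid rule decodes the trial. Every function name, the smoothing window, and the toy labels are hypothetical choices for illustration, not a method from the reviewed articles.

```python
import math

def preprocess(trial, window=5):
    """Per channel: remove the mean (DC offset), then smooth with a moving average."""
    out = []
    for ch in trial:
        mu = sum(ch) / len(ch)
        centered = [v - mu for v in ch]
        smoothed = []
        for i in range(len(centered)):
            start = max(0, i - window + 1)
            smoothed.append(sum(centered[start:i + 1]) / (i + 1 - start))
        out.append(smoothed)
    return out

def extract_features(trial):
    """Log-variance of each channel, a simple power-like feature."""
    feats = []
    for ch in trial:
        mu = sum(ch) / len(ch)
        var = sum((v - mu) ** 2 for v in ch) / len(ch)
        feats.append(math.log(var + 1e-12))   # small constant avoids log(0)
    return feats

def train(trials, labels):
    """Nearest-centroid 'model': the mean feature vector for each label."""
    groups = {}
    for trial, y in zip(trials, labels):
        groups.setdefault(y, []).append(extract_features(preprocess(trial)))
    return {y: [sum(col) / len(col) for col in zip(*fs)] for y, fs in groups.items()}

def decode(model, trial):
    """Preprocess, extract features, and return the label of the closest centroid."""
    f = extract_features(preprocess(trial))
    return min(model, key=lambda y: math.dist(model[y], f))

if __name__ == "__main__":
    def toy_trial(a0, a1):
        # Two channels of a slow sine with different amplitudes (synthetic data).
        wave = [math.sin(2 * math.pi * n / 50) for n in range(100)]
        return [[a0 * v for v in wave], [a1 * v for v in wave]]

    model = train([toy_trial(3, 0.5), toy_trial(0.5, 3)], ["yes", "no"])
    print(decode(model, toy_trial(2.5, 0.6)))  # yes
```

Keeping the stages as separate functions mirrors the block diagram: any stage can be swapped (for example, a proper band-pass filter or a neural network classifier) without touching the others.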

Figure 4. Diagram of data processing and machine learning methods used for decoding neural signals (each block corresponds to one step of the whole process).

6. Features of the Brain Signals Used in Existing Research

Different types of features have been used in existing research to detect handwriting and speech from neural signals. The choice of neural features depends strongly on how the signals are acquired, i.e., invasively or non-invasively. The most commonly used EEG frequency bands and their approximate spectral boundaries are delta (1–3 Hz), theta (4–7 Hz), alpha (8–12 Hz), beta (13–30 Hz), and gamma (30–100 Hz). For ECoG signals, the commonly used frequency bands and their approximate spectral boundaries are gamma (30–70 Hz) and high gamma (>80 Hz).
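Band power in these frequency ranges is one of the most common neural features. The minimal Python sketch below computes a direct DFT of a single-channel segment and sums the power of the bins that fall inside each EEG band, using the approximate boundaries listed above. It is an unscaled one-sided periodogram, suitable for comparing bands but not calibrated to physical units; the band dictionary and function name are hypothetical, and real pipelines would usually use an FFT with windowing (e.g., Welch's method).

```python
import math

# Approximate EEG band edges in Hz, as listed above. The edges are inclusive,
# so a bin at exactly 30 Hz is counted in both beta and gamma.
EEG_BANDS = {
    "delta": (1, 3), "theta": (4, 7), "alpha": (8, 12),
    "beta": (13, 30), "gamma": (30, 100),
}

def band_powers(signal, fs, bands=EEG_BANDS):
    """Sum of unscaled periodogram power in each band, via a direct DFT."""
    n = len(signal)
    powers = {name: 0.0 for name in bands}
    for k in range(1, n // 2 + 1):            # skip the DC bin (k = 0)
        freq = k * fs / n
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(signal))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(signal))
        p = (re * re + im * im) / n
        for name, (lo, hi) in bands.items():
            if lo <= freq <= hi:
                powers[name] += p
    return powers

if __name__ == "__main__":
    fs = 200                                  # Hz, assumed sampling rate
    alpha_wave = [math.sin(2 * math.pi * 10 * i / fs) for i in range(fs)]  # 1 s at 10 Hz
    p = band_powers(alpha_wave, fs)
    print(max(p, key=p.get))                  # alpha
```

With a 1 s window, the frequency resolution is 1 Hz, so each bin maps cleanly onto the integer band edges; shorter windows would blur power across neighboring bands.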

7. Chronological Analysis of Methods Used for Training Neural Signals

Figure 8 presents a chronological overview of the methods used to process neural signals. In the earliest studies (from 2014), researchers employed classical methods such as LDA, SSVEP-based approaches, and HMMs to train on neural signals. Over time, machine-learning classifiers such as random forests and CNNs became more popular for classifying neural signals. In recent years, with the rapid development of ANNs, researchers have found that recurrent architectures such as LSTM and GRU networks work well with time-series data such as neural signals. As a result, they have increasingly used such networks to train on neural signals from 2020 to the present day, achieving higher accuracy than with earlier methods.

8. Discussion

Previous studies have shown that neural signals can assist individuals with disabilities in communication and movement. Moreover, neural signals have been applied in a variety of fields, such as security and privacy, emotion recognition, mental state recognition, user verification, gaming, and IoT applications. As a result, research on neural signals is steadily increasing. Although classical methods were once widely used, machine-learning techniques have yielded promising results in recent years.

Collecting and processing neural signals can be one of the most challenging parts of working with them. As a result, much of the research in this field has been conducted using non-invasive neural signals, which are easier to collect and process, although some research has also used invasive neural signals. Nieto et al. proposed an EEG-based dataset for inner speech recognition. The use of neural signals to recognize a person’s handwriting and speech has received significant attention in recent years. According to Willett et al. [63], letters are easier to distinguish from neural activity than point-to-point movements. Inner speech recognition from neural signals is also becoming an increasingly popular research topic [64].

Most studies on speech-based BCIs have used acute or short-term ECoG recordings; future work could further explore the potential of long-term ECoG recordings and their applications. The development of high-speed BCI spellers is currently one of the most popular research directions. Ongoing innovations aim to increase electrode counts by at least an order of magnitude to improve the accuracy of neural signal extraction. Multimodal approaches that use simultaneous EEG or ECoG signals to identify individuals have also gained considerable attention in recent years [65]. BCI communication performance can be enhanced by applying modern machine learning models to large, accurate, and user-friendly datasets. In the future, more robust features may be extracted from EEG or ECoG signals to improve recognition performance.

EEG, which is used to monitor the electrical activity of the brain, is an invaluable tool for investigating disease pathologies. Such investigations involve analyzing the numerical distribution of the data and establishing connections between brain signals (EEG) and other biomedical signals. These include the electrical activity of the heart measured by electrocardiography (ECG), heart rate monitored with photoplethysmography (PPG), and the electrical activity of muscles recorded with electromyography (EMG). Integrating neural signals with other biomedical signals has led to diverse applications, such as emotion detection through eye tracking, video gaming, game research, epilepsy detection, and motion classification using surface EMG (sEMG) and EEG signals.

Another important consideration is the ability to detect and analyze neural signals in real time for the production of speech and handwriting. Several issues must be addressed to develop real-time BCI applications. Neural data collection must become faster and more accurate. Pre-processing techniques should be improved in terms of latency and efficiency, and the decoding and classification methods applied to the processed data must combine good accuracy with low latency. Moreover, the relevant features must be extracted from the processed data within a short time. Intraoperative mapping using high-resolution ECoG can produce results within minutes, but more work is needed to perform it in real time. Overall, signal amplitude should remain high and latency low.

Several features have been used or proposed for real-time detection. High-gamma activity has been used for real-time speech detection from ECoG signals, and kinematic features have been extracted from both ECoG and EEG data. Real-time functional cortical mapping may be used to detect handwriting and speech from ECoG recordings in real time. Pyramid Histogram of Oriented Gradients (PHOG) features extracted from signature images can support fast signature recognition in combination with EEG data. Event-related desynchronization/synchronization (ERD/ERS) features of the EEG may be used to detect handwriting when an individual thinks about writing a character.
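ERD/ERS is commonly quantified as the percentage change in band power during an event relative to a baseline (reference) interval: `ERD/ERS% = (A − R) / R × 100`, where R is the baseline power and A the event power, so negative values indicate desynchronization and positive values synchronization. The minimal Python sketch below assumes both segments have already been band-pass filtered (for example, to the mu or beta band, a hypothetical choice) and uses single trials for brevity; in practice power is usually averaged over many trials, and the function names are illustrative.

```python
def mean_power(samples):
    """Average power (mean squared amplitude) of a band-pass-filtered segment."""
    return sum(x * x for x in samples) / len(samples)

def erd_ers_percent(baseline, event):
    """Relative band-power change in percent: negative = ERD, positive = ERS."""
    r = mean_power(baseline)   # reference power before the event
    a = mean_power(event)      # power during imagined or attempted writing
    return (a - r) / r * 100.0

if __name__ == "__main__":
    baseline = [2.0, -2.0, 2.0, -2.0]    # toy filtered samples, power 4
    event = [1.0, -1.0, 1.0, -1.0]       # toy filtered samples, power 1
    print(erd_ers_percent(baseline, event))  # -75.0, i.e., desynchronization
```

Because the measure is relative to each user's own baseline, it partly compensates for differences in absolute signal amplitude between sessions and participants.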

Because these technologies provide access to signals generated by the brain, ethical issues have emerged regarding the use of BCIs to detect speech and handwriting from neural signals. Individuals’ freedom of thought must be considered in BCI communication, as modern BCI techniques raise concerns that private thoughts could be read. Key concerns include invasion of privacy and the risk of unauthorized access to one’s thoughts. Possible solutions include regulation, informed consent, and strong data protection measures. Furthermore, advances in encryption and anonymization techniques play a crucial role in ensuring individuals’ privacy and confidentiality. Ongoing research also focuses on improving BCI accuracy and dependability through better signal-processing algorithms and machine-learning models.

The future of BCI research on detecting handwriting and speech from neural signals holds immense potential. It offers the possibility of improving the lives of individuals with speech or motor impairments by providing alternative means of communication. However, challenges remain, including improving the accuracy and reliability of BCI systems, developing effective algorithms for decoding neural signals, and addressing ethical concerns such as privacy protection. Moving forward, new researchers in this field should focus on refining signal processing techniques, exploring novel approaches to recording neural activity, and advancing machine learning algorithms. Another direction of current research is the collection of signals using distributed implants, which can record simultaneously from multiple sites throughout the brain. Such technologies are highly promising because they can provide more information from different regions that may produce correlated neural activity during the generation of speech and handwriting.

References

- Kübler, A.; Furdea, A.; Halder, S.; Hammer, E.M.; Nijboer, F.; Kotchoubey, B. A brain–computer interface controlled auditory event-related potential (P300) spelling system for locked-in patients. Ann. N. Y. Acad. Sci. 2009, 1157, 90–100.

- Vorontsova, D.; Menshikov, I.; Zubov, A.; Orlov, K.; Rikunov, P.; Zvereva, E.; Flitman, L.; Lanikin, A.; Sokolova, A.; Markov, S.; et al. Silent EEG-speech recognition using convolutional and recurrent neural network with 85% accuracy of 9 words classification. Sensors 2021, 21, 6744.

- Santhanam, G.; Ryu, S.I.; Yu, B.M.; Afshar, A.; Shenoy, K.V. A high-performance brain–computer interface. Nature 2006, 442, 195–198.

- Rusnac, A.L.; Grigore, O. CNN Architectures and Feature Extraction Methods for EEG Imaginary Speech Recognition. Sensors 2022, 22, 4679.

- Herff, C.; Schultz, T. Automatic speech recognition from neural signals: A focused review. Front. Neurosci. 2016, 10, 429.

- Horlings, R.; Datcu, D.; Rothkrantz, L.J. Emotion recognition using brain activity. In Proceedings of the 9th International Conference on Computer Systems and Technologies and Workshop for PhD Students in Computing, Gabrovo, Bulgaria, 12–13 June 2008; p. II-1.

- Patil, A.; Deshmukh, C.; Panat, A. Feature extraction of EEG for emotion recognition using Hjorth features and higher order crossings. In Proceedings of the 2016 Conference on Advances in Signal Processing (CASP), Pune, India, 9–11 June 2016; pp. 429–434.

- Lotte, F. A tutorial on EEG signal-processing techniques for mental-state recognition in brain–computer interfaces. In Guide to Brain-Computer Music Interfacing; Springer: Berlin/Heidelberg, Germany, 2014; pp. 133–161.

- Brigham, K.; Kumar, B.V. Subject identification from electroencephalogram (EEG) signals during imagined speech. In Proceedings of the 2010 Fourth IEEE International Conference on Biometrics: Theory, Applications and Systems (BTAS), Washington, DC, USA, 27–29 September 2010; pp. 1–8.

- Mirkovic, B.; Bleichner, M.G.; De Vos, M.; Debener, S. Target speaker detection with concealed EEG around the ear. Front. Neurosci. 2016, 10, 349.

- Brumberg, J.S.; Nieto-Castanon, A.; Kennedy, P.R.; Guenther, F.H. Brain–computer interfaces for speech communication. Speech Commun. 2010, 52, 367–379.

- Soman, S.; Murthy, B. Using brain computer interface for synthesized speech communication for the physically disabled. Procedia Comput. Sci. 2015, 46, 292–298.

- Ahn, M.; Lee, M.; Choi, J.; Jun, S.C. A review of brain–computer interface games and an opinion survey from researchers, developers and users. Sensors 2014, 14, 14601–14633.

- Sadeghi, K.; Banerjee, A.; Sohankar, J.; Gupta, S.K. Optimization of brain mobile interface applications using IoT. In Proceedings of the 2016 IEEE 23rd International Conference on High Performance Computing (HiPC), Hyderabad, India, 19–22 December 2016; pp. 32–41.

- Eleryan, A.; Vaidya, M.; Southerland, J.; Badreldin, I.S.; Balasubramanian, K.; Fagg, A.H.; Hatsopoulos, N.; Oweiss, K. Tracking single units in chronic, large scale, neural recordings for brain machine interface applications. Front. Neuroeng. 2014, 7, 23.

- Sussillo, D.; Stavisky, S.D.; Kao, J.C.; Ryu, S.I.; Shenoy, K.V. Making brain–machine interfaces robust to future neural variability. Nat. Commun. 2016, 7, 13749.

- Lebedev, M.A.; Nicolelis, M.A. Brain–machine interfaces: Past, present and future. Trends Neurosci. 2006, 29, 536–546.

- Vázquez-Guardado, A.; Yang, Y.; Bandodkar, A.J.; Rogers, J.A. Recent advances in neurotechnologies with broad potential for neuroscience research. Nat. Neurosci. 2020, 23, 1522–1536.

- Illes, J.; Moser, M.A.; McCormick, J.B.; Racine, E.; Blakeslee, S.; Caplan, A.; Hayden, E.C.; Ingram, J.; Lohwater, T.; McKnight, P.; et al. Neurotalk: Improving the communication of neuroscience research. Nat. Rev. Neurosci. 2010, 11, 61–69.

- Koctúrová, M.; Juhár, J. Speech Activity Detection from EEG using a feed-forward neural network. In Proceedings of the 2019 10th IEEE International Conference on Cognitive Infocommunications (CogInfoCom), Naples, Italy, 23–25 October 2019; pp. 147–152.

- Koctúrová, M.; Juhár, J. A Novel approach to EEG speech activity detection with visual stimuli and mobile BCI. Appl. Sci. 2021, 11, 674.

- Gannouni, S.; Aledaily, A.; Belwafi, K.; Aboalsamh, H. Emotion detection using electroencephalography signals and a zero-time windowing-based epoch estimation and relevant electrode identification. Sci. Rep. 2021, 11, 7071.

- Luo, S.; Rabbani, Q.; Crone, N.E. Brain-computer interface: Applications to speech decoding and synthesis to augment communication. Neurotherapeutics 2022, 19, 263–273.

- Pandarinath, C.; Nuyujukian, P.; Blabe, C.H.; Sorice, B.L.; Saab, J.; Willett, F.R.; Hochberg, L.R.; Shenoy, K.V.; Henderson, J.M. High performance communication by people with paralysis using an intracortical brain–computer interface. eLife 2017, 6, e18554.

- Stavisky, S.D.; Willett, F.R.; Wilson, G.H.; Murphy, B.A.; Rezaii, P.; Avansino, D.T.; Memberg, W.D.; Miller, J.P.; Kirsch, R.F.; Hochberg, L.R.; et al. Neural ensemble dynamics in dorsal motor cortex during speech in people with paralysis. eLife 2019, 8, e46015.

- Gorno-Tempini, M.L.; Hillis, A.E.; Weintraub, S.; Kertesz, A.; Mendez, M.; Cappa, S.F.; Ogar, J.M.; Rohrer, J.D.; Black, S.; Boeve, B.F.; et al. Classification of primary progressive aphasia and its variants. Neurology 2011, 76, 1006–1014.

- Willett, F.R.; Murphy, B.A.; Memberg, W.D.; Blabe, C.H.; Pandarinath, C.; Walter, B.L.; Sweet, J.A.; Miller, J.P.; Henderson, J.M.; Shenoy, K.V.; et al. Signal-independent noise in intracortical brain–computer interfaces causes movement time properties inconsistent with Fitts’ law. J. Neural Eng. 2017, 14, 026010.

- Brumberg, J.S.; Wright, E.J.; Andreasen, D.S.; Guenther, F.H.; Kennedy, P.R. Classification of intended phoneme production from chronic intracortical microelectrode recordings in speech motor cortex. Front. Neurosci. 2011, 5, 65.

- Rabbani, Q.; Milsap, G.; Crone, N.E. The potential for a speech brain–computer interface using chronic electrocorticography. Neurotherapeutics 2019, 16, 144–165.

- Yang, T.; Hakimian, S.; Schwartz, T.H. Intraoperative ElectroCorticoGraphy (ECog): Indications, techniques, and utility in epilepsy surgery. Epileptic Disord. 2014, 16, 271–279.

- Kirschstein, T.; Köhling, R. What is the source of the EEG? Clin. EEG Neurosci. 2009, 40, 146–149.

- Casson, A.J.; Smith, S.; Duncan, J.S.; Rodriguez-Villegas, E. Wearable EEG: What is it, why is it needed and what does it entail? In Proceedings of the 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–24 August 2008; pp. 5867–5870.

- Tandra, R.; Sahai, A. SNR walls for signal detection. IEEE J. Sel. Top. Signal Process. 2008, 2, 4–17.

- Wilson, G.H.; Stavisky, S.D.; Willett, F.R.; Avansino, D.T.; Kelemen, J.N.; Hochberg, L.R.; Henderson, J.M.; Druckmann, S.; Shenoy, K.V. Decoding spoken English from intracortical electrode arrays in dorsal precentral gyrus. J. Neural Eng. 2020, 17, 066007.

- Kapur, A.; Sarawgi, U.; Wadkins, E.; Wu, M.; Hollenstein, N.; Maes, P. Non-invasive silent speech recognition in multiple sclerosis with dysphonia. In Proceedings of the Machine Learning for Health Workshop. PMLR, Virtual Event, 11 December 2020; pp. 25–38.

- Müller-Putz, G.R.; Scherer, R.; Brauneis, C.; Pfurtscheller, G. Steady-state visual evoked potential (SSVEP)-based communication: Impact of harmonic frequency components. J. Neural Eng. 2005, 2, 123.

- Chandler, J.A.; Van der Loos, K.I.; Boehnke, S.; Beaudry, J.S.; Buchman, D.Z.; Illes, J. Brain Computer Interfaces and Communication Disabilities: Ethical, legal, and social aspects of decoding speech from the brain. Front. Hum. Neurosci. 2022, 16, 841035.

- What Part of the Brain Controls Speech? Available online: https://www.healthline.com/health/what-part-of-the-brain-controls-speech (accessed on 21 March 2023).

- The Telltale Hand. Available online: https://www.dana.org/article/the-telltale-hand/#:~:text=The%20sequence%20that%20produces%20handwriting,content%20of%20the%20motor%20sequence (accessed on 3 April 2023).

- How Does Your Brain Control Speech? Available online: https://districtspeech.com/how-does-your-brain-control-speech/ (accessed on 24 March 2023).

- Obleser, J.; Wise, R.J.; Dresner, M.A.; Scott, S.K. Functional integration across brain regions improves speech perception under adverse listening conditions. J. Neurosci. 2007, 27, 2283–2289.

- What Part of the Brain Controls Speech? Brain Hemispheres Functions REGIONS of the Brain Brain Injury and Speech. Available online: https://psychcentral.com/health/what-part-of-the-brain-controls-speech (accessed on 21 March 2023).

- Chang, E.F.; Rieger, J.W.; Johnson, K.; Berger, M.S.; Barbaro, N.M.; Knight, R.T. Categorical speech representation in human superior temporal gyrus. Nat. Neurosci. 2010, 13, 1428–1432.

- Willett, F.R.; Deo, D.R.; Avansino, D.T.; Rezaii, P.; Hochberg, L.R.; Henderson, J.M.; Shenoy, K.V. Hand knob area of premotor cortex represents the whole body in a compositional way. Cell 2020, 181, 396–409.

- James, K.H.; Engelhardt, L. The effects of handwriting experience on functional brain development in pre-literate children. Trends Neurosci. Educ. 2012, 1, 32–42.

- Palmis, S.; Danna, J.; Velay, J.L.; Longcamp, M. Motor control of handwriting in the developing brain: A review. Cogn. Neuropsychol. 2017, 34, 187–204.

- Neural Prosthesis Uses Brain Activity to Decode Speech. Available online: https://medicalxpress.com/news/2023-01-neural-prosthesis-brain-decode-speech.html (accessed on 20 March 2023).

- Maas, A.I.; Harrison-Felix, C.L.; Menon, D.; Adelson, P.D.; Balkin, T.; Bullock, R.; Engel, D.C.; Gordon, W.; Langlois-Orman, J.; Lew, H.L.; et al. Standardizing data collection in traumatic brain injury. J. Neurotrauma 2011, 28, 177–187.

- Kumar, P.; Saini, R.; Roy, P.P.; Sahu, P.K.; Dogra, D.P. Envisioned speech recognition using EEG sensors. Pers. Ubiquitous Comput. 2018, 22, 185–199.

- Rosinová, M.; Lojka, M.; Staš, J.; Juhár, J. Voice command recognition using EEG signals. In Proceedings of the 2017 International Symposium ELMAR, Zadar, Croatia, 18–20 September 2017; pp. 153–156.

- Krishna, G.; Tran, C.; Yu, J.; Tewfik, A.H. Speech recognition with no speech or with noisy speech. In Proceedings of the ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 1090–1094.

- Mugler, E.M.; Patton, J.L.; Flint, R.D.; Wright, Z.A.; Schuele, S.U.; Rosenow, J.; Shih, J.J.; Krusienski, D.J.; Slutzky, M.W. Direct classification of all American English phonemes using signals from functional speech motor cortex. J. Neural Eng. 2014, 11, 035015.

- Moses, D.A.; Mesgarani, N.; Leonard, M.K.; Chang, E.F. Neural speech recognition: Continuous phoneme decoding using spatiotemporal representations of human cortical activity. J. Neural Eng. 2016, 13, 056004.

- Anumanchipalli, G.K.; Chartier, J.; Chang, E.F. Speech synthesis from neural decoding of spoken sentences. Nature 2019, 568, 493–498.

- Moses, D.A.; Leonard, M.K.; Makin, J.G.; Chang, E.F. Real-time decoding of question-and-answer speech dialogue using human cortical activity. Nat. Commun. 2019, 10, 3096.

- Makin, J.G.; Moses, D.A.; Chang, E.F. Machine translation of cortical activity to text with an encoder–decoder framework. Nat. Neurosci. 2020, 23, 575–582.

- Metzger, S.L.; Liu, J.R.; Moses, D.A.; Dougherty, M.E.; Seaton, M.P.; Littlejohn, K.T.; Chartier, J.; Anumanchipalli, G.K.; Tu-Chan, A.; Ganguly, K.; et al. Generalizable spelling using a speech neuroprosthesis in an individual with severe limb and vocal paralysis. Nat. Commun. 2022, 13, 6510.

- NATO Phonetic Alphabet. Available online: https://first10em.com/quick-reference/nato-phonetic-alphabet/#:~:text=Alpha%2C%20Bravo%2C%20Charlie%2C%20Delta,%2Dray%2C%20Yankee%2C%20Zulu (accessed on 24 May 2023).

- Chen, X.; Wang, Y.; Nakanishi, M.; Gao, X.; Jung, T.P.; Gao, S. High-speed spelling with a noninvasive brain–computer interface. Proc. Natl. Acad. Sci. USA 2015, 112, E6058–E6067.

- Saini, R.; Kaur, B.; Singh, P.; Kumar, P.; Roy, P.P.; Raman, B.; Singh, D. Don’t just sign use brain too: A novel multimodal approach for user identification and verification. Inf. Sci. 2018, 430, 163–178.

- Kumar, P.; Saini, R.; Kaur, B.; Roy, P.P.; Scheme, E. Fusion of neuro-signals and dynamic signatures for person authentication. Sensors 2019, 19, 4641.

- Pei, L.; Ouyang, G. Online recognition of handwritten characters from scalp-recorded brain activities during handwriting. J. Neural Eng. 2021, 18, 046070.

- Willett, F.R.; Avansino, D.T.; Hochberg, L.R.; Henderson, J.M.; Shenoy, K.V. High-performance brain-to-text communication via handwriting. Nature 2021, 593, 249–254.

- Nieto, N.; Peterson, V.; Rufiner, H.L.; Kamienkowski, J.E.; Spies, R. Thinking out loud, an open-access EEG-based BCI dataset for inner speech recognition. Sci. Data 2022, 9, 52.


Revisions: 2 times

Update Date: 30 Jun 2023
