Le, M.; Cheng, C.; Liu, D. Noise Removal Filter for LiDAR Point Clouds. Encyclopedia. Available online: https://encyclopedia.pub/entry/44702 (accessed on 08 February 2026).

Le, M., Cheng, C., & Liu, D. (2023, May 23). Noise Removal Filter for LiDAR Point Clouds. In Encyclopedia. https://encyclopedia.pub/entry/44702

Light Detection and Ranging (LiDAR) is a critical sensor for autonomous vehicle systems, providing high-resolution distance measurements in real time. However, adverse weather such as snow, rain, and fog, as well as sun glare, can degrade LiDAR performance, so the data must be preprocessed before use.

self-driving car

LiDAR point clouds

projection image from LiDAR

1. Introduction

The development of autonomous vehicle technology has recently attracted considerable attention and investment. A major challenge facing these vehicles is the ability to sense and maneuver through their environment without human intervention [1]. To overcome this challenge, autonomous vehicles use a combination of different sensors, such as cameras, LiDAR (Light Detection and Ranging), radar, and GPS, together with advanced algorithms and machine learning methods to make informed decisions and successfully navigate their environment [2][3][4]. Among these sensors, LiDAR is considered a critical component in enabling autonomous vehicles to effectively sense and understand their environment. In most cases, the fusion of these sensors provides impressive reliability and efficiency for autonomous vehicles [5][6][7][8][9][10][11].

LiDAR works by emitting a laser beam and measuring the time it takes for the beam to return after hitting an object. By measuring the distance to objects around the vehicle in this way, LiDAR can create a high-resolution 3D map of the vehicle’s surroundings [12]. This 3D map is used to detect and track objects such as other vehicles, pedestrians, and obstacles, and to help the vehicle navigate its environment. LiDAR can also be used to detect and measure the speed of other vehicles, which is important for determining safe distances and speeds for autonomous vehicles. One of the main benefits of LiDAR is that it can provide accurate measurements in a wide range of lighting and weather conditions, making it a reliable sensor for use in autonomous vehicles [13][14][15][16][17]. However, the resolution of LiDAR is limited, a weakness that can be offset by integrating it with a camera [18]. Combining the two sensors helps to address the weaknesses of LiDAR in object detection and recognition when using a deep learning approach [19][20][21][22].
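The time-of-flight principle described above can be illustrated with a short sketch. The function name and the 200 ns example value are assumptions made for illustration; real sensors also correct for internal delays and other effects that are not modeled here.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_time_s: float) -> float:
    """Return the one-way distance in metres for a measured round-trip time."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the target and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


if __name__ == "__main__":
    # Hypothetical echo received 200 ns after the pulse was emitted.
    print(f"{range_from_round_trip(200e-9):.2f} m")
```

Because the pulse covers the distance twice, a timing error of one nanosecond corresponds to roughly 15 cm of range error, which is why precise timing matters for this kind of sensor.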

While LiDAR is generally a reliable sensor for use in autonomous vehicles, certain weather conditions can affect its performance. The main problems with LiDAR in adverse weather are reduced visibility and increased noise. Heavy rain, snow, or fog can scatter the laser beams and reduce the sensor’s range or cause false readings [23][24][25][26][27]. In extreme cases, the sensor may not be able to detect any objects at all. In addition, glare from the sun or bright headlights can also affect the sensor’s ability to detect objects. As a result, current LiDAR sensors do not perform as well in adverse weather as they do in clear weather, which is one reason autonomous vehicles are still in the development and testing phase and not fully ready for commercial use. To mitigate these issues, LiDAR data can be filtered to detect and remove unwanted clutter caused by adverse weather, which improves the ability to detect objects in challenging conditions.

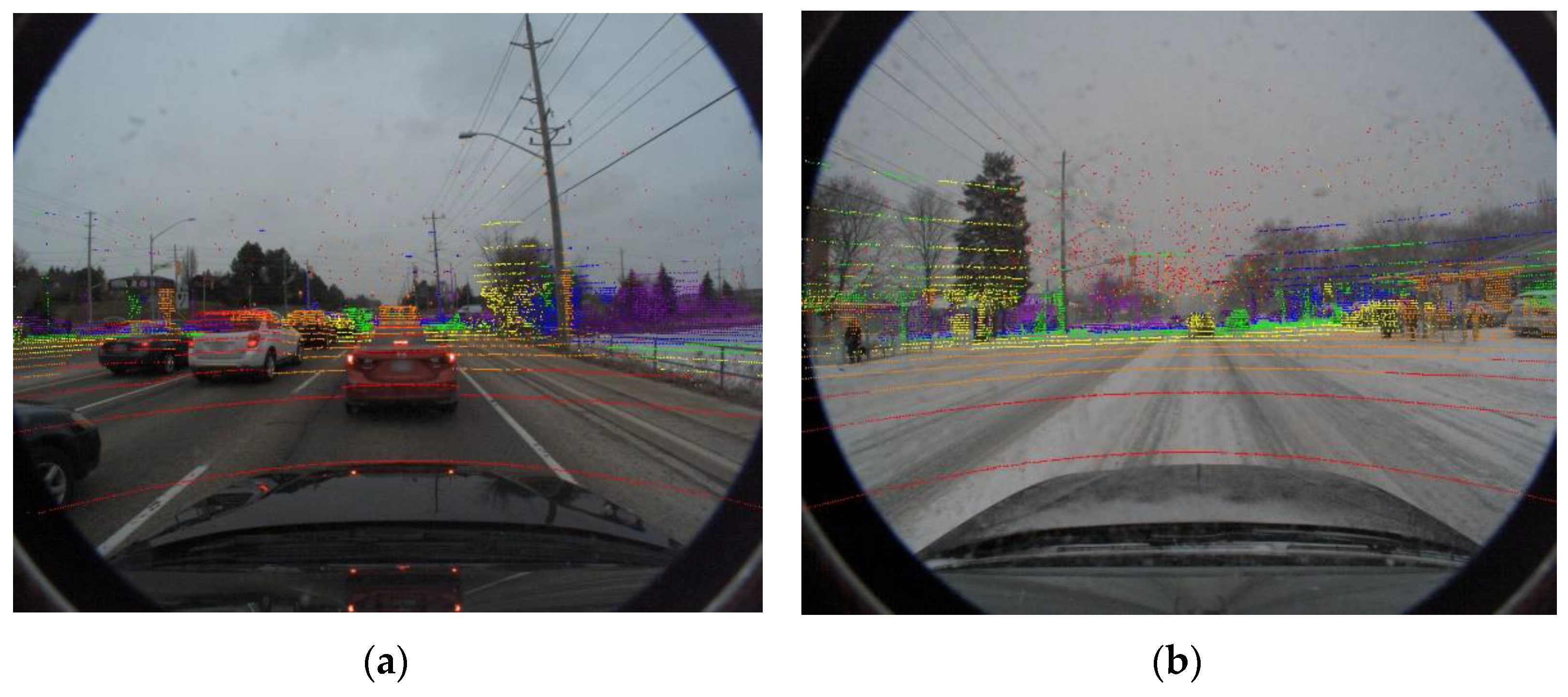

Recently, there has been a growing interest in processing LiDAR data. Figure 1 shows LiDAR and camera fusion images from the Canadian Adverse Driving Conditions Dataset [28]. Figure 1a shows a typical LiDAR frame under light snow. In ideal weather conditions, LiDAR can improve the visibility and reliability of automated vehicle systems. However, weather can have a negative impact on LiDAR performance [24][29][30][31]. Snow can limit the field of view of LiDAR and reduce its reliability, as shown in Figure 1b, where numerous snowflakes are captured around the vehicle. These random snow particles can also interfere with the vehicle’s warning systems. Snow returns become denser closer to the vehicle, which could lead to serious accidents in autonomous vehicles. Therefore, removing snow particles and filtering noise from LiDAR data is critical to improving its quality and reliability.

Figure 1. Raw LiDAR point clouds combined with camera images from the Canadian Adverse Driving Conditions Dataset: (a) vehicles under light snow conditions, and (b) the host vehicle under extreme snow conditions. The color of each point represents the distance of the reflecting site from the LiDAR on the host vehicle. In both pictures, many snowflakes appear as red spots close to the front of the car windows; they may interfere with the driver’s vision and limit the ability to recognize objects.

Processing data from LiDAR sensors in adverse weather conditions can be challenging because the weather increases noise and reduces the signal-to-noise ratio [29][32]. Traditional filters become significantly less effective on such data. Therefore, advanced filtering techniques have been proposed to remove noise from LiDAR data [32][33][34]. Previous designs for filtering snow points from LiDAR data have shown impressive results. However, these filters also remove points that belong to actual objects, leading to the loss of valuable information from the LiDAR data.

2. Noise Removal Filter for LiDAR Point Clouds

The process of filtering noise from a LiDAR point cloud involves the elimination or reduction of extraneous data points that are not representative of the actual environment. This step is crucial, as LiDAR sensors are prone to producing false readings, such as outliers, due to environmental conditions or inherent sensor noise. The ultimate objective is to attain a precise and unadulterated representation of the environment, which is essential for a range of applications including autonomous navigation, object detection and scene comprehension.

There are several commonly used traditional methods for filtering noise from LiDAR point clouds, including statistical outlier removal (SOR) and radius outlier removal (ROR) [35][36]. Both SOR and ROR eliminate points that differ significantly from the majority of the data, which reduces errors or noise in LiDAR point clouds and improves data quality. Noise is classified according to how the data cluster: errors or noise tend to occur randomly, so noise points are sparser than object points, and traditional filters classify points by this local density. The advantage of traditional methods is that they are fast. However, their accuracy and reliability are limited.
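The density-based idea behind ROR and SOR can be sketched as follows. This is a minimal brute-force illustration using only the Python standard library, not the Point Cloud Library implementation; the function names and parameter values are assumptions, and a practical filter would use a spatial index such as a k-d tree rather than comparing every pair of points.

```python
import math
import statistics


def radius_outlier_removal(points, radius, min_neighbors):
    """Keep points that have at least min_neighbors other points within radius."""
    kept = []
    for i, p in enumerate(points):
        neighbors = sum(
            1 for j, q in enumerate(points)
            if j != i and math.dist(p, q) <= radius
        )
        if neighbors >= min_neighbors:
            kept.append(p)
    return kept


def statistical_outlier_removal(points, k, std_multiplier):
    """Keep points whose mean distance to their k nearest neighbors does not
    exceed the cloud-wide mean of that quantity plus std_multiplier standard
    deviations."""
    if len(points) <= k:
        raise ValueError("need more than k points")
    mean_knn = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_knn.append(sum(dists[:k]) / k)
    threshold = (statistics.fmean(mean_knn)
                 + std_multiplier * statistics.pstdev(mean_knn))
    return [p for p, d in zip(points, mean_knn) if d <= threshold]


if __name__ == "__main__":
    # Hypothetical cloud: a dense 3 x 3 patch plus one isolated point.
    cluster = [(x * 0.1, y * 0.1, 0.0) for x in range(3) for y in range(3)]
    cloud = cluster + [(5.0, 5.0, 5.0)]
    print(len(radius_outlier_removal(cloud, radius=0.15, min_neighbors=2)))
    print(len(statistical_outlier_removal(cloud, k=3, std_multiplier=1.0)))
```

Both functions use fixed parameters for the whole cloud, which is the property that limits them on LiDAR data whose point density changes with distance.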

Traditional filters use fixed parameters, whereas the density of object points in LiDAR data varies with distance. Several adaptive filters have therefore been proposed, such as dynamic statistical outlier removal (DSOR) [37] and dynamic radius outlier removal (DROR) [29]. DROR uses an adaptive search radius that changes with the distance between the object and the LiDAR: the search radius is defined as the product of a multiplier constant and the distance to the object. DSOR follows a similar idea, varying its mean-distance threshold with the distance of each point in the LiDAR data. Both filters become more effective by accounting for the decrease in density of object point clouds with increasing distance from the LiDAR. Reported results for DSOR and DROR show more than 90% accuracy on LiDAR point clouds without removing environmental features.
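A distance-dependent search radius of the kind described above can be sketched like this. It is a simplified illustration rather than the published DROR algorithm: the function name, the use of the Euclidean range from a sensor placed at the origin, the `min_radius` floor, and all parameter values are assumptions.

```python
import math


def dynamic_radius_outlier_removal(points, multiplier, min_radius, min_neighbors):
    """Radius outlier removal whose search radius grows with sensor range.

    The sensor is assumed to sit at the origin. For each point the search
    radius is max(min_radius, multiplier * range).
    """
    origin = (0.0, 0.0, 0.0)
    kept = []
    for i, p in enumerate(points):
        search_radius = max(min_radius, multiplier * math.dist(p, origin))
        neighbors = sum(
            1 for j, q in enumerate(points)
            if j != i and math.dist(p, q) <= search_radius
        )
        if neighbors >= min_neighbors:
            kept.append(p)
    return kept


if __name__ == "__main__":
    # Hypothetical scene: a densely sampled near object, a sparsely sampled
    # far object, and one isolated return close to the sensor.
    near = [(2.0, y * 0.05, 0.0) for y in range(4)]
    far = [(40.0, y * 0.5, 0.0) for y in range(4)]
    cloud = near + far + [(1.0, -1.0, 0.5)]

    dynamic = dynamic_radius_outlier_removal(cloud, 0.06, 0.04, 2)
    fixed = dynamic_radius_outlier_removal(cloud, 0.0, 0.12, 2)
    print(len(dynamic), len(fixed))
```

Setting `multiplier` to zero reduces the sketch to a fixed-radius filter. In the example scene that fixed radius discards the sparsely sampled far object, while the distance-scaled radius keeps both objects and still rejects the isolated return.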

Alternatively, deep learning models offer an opportunity to address this challenge, utilizing techniques such as denoising algorithms, adaptive filtering, and temporal filtering to remove noise from the data [38]. As these models continue to evolve, they are likely to play a significant role in improving the accuracy and reliability of sensor data in many fields, including autonomous vehicles, robotics, and healthcare. Researchers have applied computer vision to noise classification in LiDAR data: the LiDAR data are converted to an image format, and the resulting images are fed into a model that is trained to detect the features that identify noise. WeatherNet [39] and 4DenoiseNet [40] have been shown to be effective in improving LiDAR data quality with this approach. In certain scenarios, deep learning methods may outperform traditional approaches. However, traditional supervised learning algorithms rely heavily on large, labeled datasets to train models, making them less effective when labeled data are limited. Unsupervised learning, on the other hand, allows for the exploration and discovery of hidden patterns and structures within the data without the need for explicit labeling [41]. This enables the model to generalize better and achieve higher accuracy even when limited labeled data are available.
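One common way to convert a point cloud to an image format is a spherical projection into a range image, sketched below. The source does not specify which projection the cited networks use, so the function name, image size, field-of-view values, and the rule of keeping the nearest return per pixel are all assumptions.

```python
import math


def project_to_range_image(points, width, height, fov_up_deg, fov_down_deg):
    """Rasterize (x, y, z) points into a height x width grid of ranges.

    Columns index azimuth and rows index elevation. Cells with no return
    hold 0.0; when several points fall in one cell the nearest is kept.
    """
    fov_up = math.radians(fov_up_deg)
    fov_down = math.radians(fov_down_deg)
    fov = fov_up - fov_down
    image = [[0.0] * width for _ in range(height)]
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0:
            continue
        yaw = math.atan2(y, x)
        pitch = math.asin(max(-1.0, min(1.0, z / r)))
        if not fov_down <= pitch <= fov_up:
            continue  # outside the assumed vertical field of view
        col = int(0.5 * (1.0 - yaw / math.pi) * width)
        row = int((fov_up - pitch) / fov * height)
        col = min(width - 1, max(0, col))
        row = min(height - 1, max(0, row))
        if image[row][col] == 0.0 or r < image[row][col]:
            image[row][col] = r
    return image


if __name__ == "__main__":
    # Two returns along the same ray and one above the field of view.
    cloud = [(10.0, 0.0, 0.0), (20.0, 0.0, 0.0), (1.0, 0.0, 1.0)]
    for row in project_to_range_image(cloud, 8, 4, 10.0, -10.0):
        print(row)
```

Once the cloud is in this grid form, each pixel can carry a label such as noise or object, which is what allows image-style convolutional models to be trained on it.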

Intensity-based approaches have also received much attention in recent studies. Low-intensity outlier removal (LIOR) [42] pioneered the use of point intensities in LiDAR data sets in conjunction with traditional filtering for noise detection. Points with high intensity are treated as object points and removed from the set of noise candidates, which significantly improves filter performance. However, the filtering performance still leaves room for improvement. Some combined adaptive filtering methods using intensity have been proposed to overcome these limitations, such as Dynamic Distance–Intensity Outlier Removal (DDIOR) [43] and Adaptive Group of Density Outlier Removal (AGDOR) [44]. The main advantage of these filters is that they can handle point clouds with different densities more effectively. In areas of high point density, the search radius is small, resulting in more aggressive outlier removal, while in areas of low point density, the search radius is larger, resulting in more lenient outlier removal. Dynamic Light–Intensity Outlier Removal (DIOR) [45] adds an FPGA-based hardware implementation that can improve both accuracy and performance.
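The combination of an intensity test with a neighbor test can be sketched as a two-stage filter. This is a simplified illustration in the spirit of the intensity-based filters above, not a reproduction of LIOR or its successors; the function name, the tuple layout `(x, y, z, intensity)`, and all threshold values are assumptions.

```python
import math


def intensity_radius_filter(points, intensity_threshold, radius, min_neighbors):
    """Two-stage filter over (x, y, z, intensity) tuples.

    Stage 1: points at or above intensity_threshold are kept as object points.
    Stage 2: remaining low-intensity points are kept only if they have at
    least min_neighbors other points within radius.
    """
    kept = []
    for i, p in enumerate(points):
        if p[3] >= intensity_threshold:
            kept.append(p)
            continue
        neighbors = sum(
            1 for j, q in enumerate(points)
            if j != i and math.dist(p[:3], q[:3]) <= radius
        )
        if neighbors >= min_neighbors:
            kept.append(p)
    return kept


if __name__ == "__main__":
    # Hypothetical scene with made-up intensity values.
    wall = [(5.0, y * 0.1, 0.0, 40.0) for y in range(3)]         # bright surface
    dark_object = [(8.0, y * 0.1, 0.0, 2.0) for y in range(3)]   # dim but dense
    sign = [(20.0, 0.0, 0.0, 50.0)]                              # bright, isolated
    snow = [(1.0, 1.0, 0.5, 1.0)]                                # dim, isolated
    cloud = wall + dark_object + sign + snow
    print(len(intensity_radius_filter(cloud, 10.0, 0.25, 2)))
```

The isolated bright return survives because it never reaches the neighbor test, whereas a purely density-based filter would discard it. Only the dim, isolated return is rejected.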

References

- Martínez-Díaz, M.; Soriguera, F. Autonomous Vehicles: Theoretical and Practical Challenges. Transp. Res. Procedia 2018, 33, 275–282.

- Yeong, D.J.; Velasco-Hernandez, G.; Barry, J.; Walsh, J. Sensor and Sensor Fusion Technology in Autonomous Vehicles: A Review. Sensors 2021, 21, 2140.

- Hu, J.W.; Zheng, B.Y.; Wang, C.; Zhao, C.H.; Hou, X.L.; Pan, Q.; Xu, Z. A Survey on Multi-Sensor Fusion Based Obstacle Detection for Intelligent Ground Vehicles in off-Road Environments. Front. Inf. Technol. Electron. Eng. 2020, 21, 675–692.

- Fayyad, J.; Jaradat, M.A.; Gruyer, D.; Najjaran, H. Deep Learning Sensor Fusion for Autonomous Vehicle Perception and Localization: A Review. Sensors 2020, 20, 4220.

- Xu, D.; Anguelov, D.; Jain, A. PointFusion: Deep Sensor Fusion for 3D Bounding Box Estimation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 244–253.

- Shahian Jahromi, B.; Tulabandhula, T.; Cetin, S. Real-Time Hybrid Multi-Sensor Fusion Framework for Perception in Autonomous Vehicles. Sensors 2019, 19, 4357.

- Norbye, H.G. Camera-Lidar Sensor Fusion in Real Time for Autonomous Surface Vehicles. Norwegian University of Science and Technology, Norway. 2019. Available online: https://folk.ntnu.no/edmundfo/msc2019-2020/norbye-lidar-camera-reduced.pdf/ (accessed on 12 March 2023).

- Yang, J.; Liu, S.; Su, H.; Tian, Y. Driving Assistance System Based on Data Fusion of Multisource Sensors for Autonomous Unmanned Ground Vehicles. Comput. Netw. 2021, 192, 108053.

- Kang, D.; Kum, D. Camera and Radar Sensor Fusion for Robust Vehicle Localization via Vehicle Part Localization. IEEE Access 2020, 8, 75223–75236.

- Wei, P.; Cagle, L.; Reza, T.; Ball, J.; Gafford, J. LiDAR and Camera Detection Fusion in a Real-Time Industrial Multi-Sensor Collision Avoidance System. Electronics 2018, 7, 84.

- De Silva, V.; Roche, J.; Kondoz, A. Robust Fusion of LiDAR and Wide-Angle Camera Data for Autonomous Mobile Robots. Sensors 2018, 18, 2730.

- Li, Y.; Ibanez-Guzman, J. Lidar for Autonomous Driving: The Principles, Challenges, and Trends for Automotive Lidar and Perception Systems. IEEE Signal Process. Mag. 2020, 37, 50–61.

- Wolcott, R.W.; Eustice, R.M. Visual Localization within LIDAR Maps for Automated Urban Driving. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 176–183.

- Murcia, H.F.; Monroy, M.F.; Mora, L.F. 3D Scene Reconstruction Based on a 2D Moving LiDAR. In Applied Informatics, Proceedings of the First International Conference, ICAI 2018, Bogotá, Colombia, 1–3 November 2018; Communications in Computer and Information Science; Springer: Cham, Switzerland, 2018; Volume 942.

- Li, H.; Liping, D.; Huang, X.; Li, D. Laser Intensity Used in Classification of Lidar Point Cloud Data. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS), Boston, MA, USA, 6–11 July 2008; Volume 2.

- Akai, N.; Morales, L.Y.; Yamaguchi, T.; Takeuchi, E.; Yoshihara, Y.; Okuda, H.; Suzuki, T.; Ninomiya, Y. Autonomous Driving Based on Accurate Localization Using Multilayer LiDAR and Dead Reckoning. In Proceedings of the IEEE Conference on Intelligent Transportation Systems, Yokohama, Japan, 16–19 October 2017; pp. 1–6.

- Jang, C.H.; Kim, C.S.; Jo, K.C.; Sunwoo, M. Design Factor Optimization of 3D Flash Lidar Sensor Based on Geometrical Model for Automated Vehicle and Advanced Driver Assistance System Applications. Int. J. Automot. Technol. 2017, 18, 147–156.

- Ahmed, S.; Huda, M.N.; Rajbhandari, S.; Saha, C.; Elshaw, M.; Kanarachos, S. Pedestrian and Cyclist Detection and Intent Estimation for Autonomous Vehicles: A Survey. Appl. Sci. 2019, 9, 2335.

- Wen, L.H.; Jo, K.H. Fast and Accurate 3D Object Detection for Lidar-Camera-Based Autonomous Vehicles Using One Shared Voxel-Based Backbone. IEEE Access 2021, 9, 1.

- Zhen, W.; Hu, Y.; Liu, J.; Scherer, S. A Joint Optimization Approach of LiDAR-Camera Fusion for Accurate Dense 3-D Reconstructions. IEEE Robot. Autom. Lett. 2019, 4, 3585–3592.

- Zhong, H.; Wang, H.; Wu, Z.; Zhang, C.; Zheng, Y.; Tang, T. A Survey of LiDAR and Camera Fusion Enhancement. Procedia Comput. Sci. 2021, 183, 579–588.

- Zhang, F.; Clarke, D.; Knoll, A. Vehicle Detection Based on LiDAR and Camera Fusion. In Proceedings of the 2014 17th IEEE International Conference on Intelligent Transportation Systems, ITSC, Qingdao, China, 8–11 October 2014; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 14 November 2014; pp. 1620–1625.

- Kutila, M.; Pyykonen, P.; Holzhuter, H.; Colomb, M.; Duthon, P. Automotive LiDAR Performance Verification in Fog and Rain. In Proceedings of the IEEE Conference on Intelligent Transportation Systems, ITSC, Maui, HI, USA, 4–7 November 2018; Volume 2018-November.

- Heinzler, R.; Schindler, P.; Seekircher, J.; Ritter, W.; Stork, W. Weather Influence and Classification with Automotive Lidar Sensors. In Proceedings of the IEEE Intelligent Vehicles Symposium, Paris, France, 9–12 June 2019; Volume 2019-June.

- Filgueira, A.; González-Jorge, H.; Lagüela, S.; Díaz-Vilariño, L.; Arias, P. Quantifying the Influence of Rain in LiDAR Performance. Measurement 2017, 95, 143–148.

- Reymann, C.; Lacroix, S. Improving LiDAR Point Cloud Classification Using Intensities and Multiple Echoes. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Hamburg, Germany, 28 September–3 October 2015; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 11 December 2015; Volume 2015-December, pp. 5122–5128.

- Bijelic, M.; Gruber, T.; Ritter, W. A Benchmark for Lidar Sensors in Fog: Is Detection Breaking Down? In Proceedings of the IEEE Intelligent Vehicles Symposium, 26–30 June 2018, Changshu, China; Volume 2018-June.

- Pitropov, M.; Garcia, D.E.; Rebello, J.; Smart, M.; Wang, C.; Czarnecki, K.; Waslander, S. Canadian Adverse Driving Conditions Dataset. Int. J. Robot. Res. 2021, 40, 681–690.

- Charron, N.; Phillips, S.; Waslander, S.L. De-Noising of Lidar Point Clouds Corrupted by Snowfall. In Proceedings of the 2018 15th Conference on Computer and Robot Vision, CRV, Toronto, ON, Canada, 8–10 May 2018.

- Jokela, M.; Kutila, M.; Pyykönen, P. Testing and Validation of Automotive Point-Cloud Sensors in Adverse Weather Conditions. Appl. Sci. 2019, 9, 2341.

- Rasshofer, R.H.; Spies, M.; Spies, H. Influences of Weather Phenomena on Automotive Laser Radar Systems. Adv. Radio Sci. 2011, 9, 49–60.

- Duan, Y.; Yang, C.; Li, H. Low-Complexity Adaptive Radius Outlier Removal Filter Based on PCA for Lidar Point Cloud Denoising. Appl. Opt. 2021, 60, E1–E7.

- Shi, C.; Wang, C.; Liu, X.; Sun, S.; Xiao, B.; Li, X.; Li, G. Three-Dimensional Point Cloud Denoising via a Gravitational Feature Function. Appl. Opt. 2022, 61, 1331–1343.

- Shan, Y.-H.; Zhang, X.; Niu, X.; Yang, G.; Zhang, J.-K. Denoising Algorithm of Airborne LIDAR Point Cloud Based on 3D Grid. Int. J. Signal Process. Image Process. Pattern Recognit. 2017, 10, 85–92.

- Aldoma, A.; Marton, Z.C.; Tombari, F.; Wohlkinger, W.; Potthast, C.; Zeisl, B.; Rusu, R.B.; Gedikli, S.; Vincze, M. Tutorial: Point Cloud Library: Three-Dimensional Object Recognition and 6 DOF Pose Estimation. IEEE Robot. Autom. Mag. 2012, 19, 80–91.

- Rusu, R.B.; Cousins, S. 3D Is Here: Point Cloud Library (PCL). In Proceedings of the IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011.

- Kurup, A.; Bos, J. DSOR: A Scalable Statistical Filter for Removing Falling Snow from LiDAR Point Clouds in Severe Winter Weather. arXiv 2021, arXiv:2109.07078.

- Zhang, L.; Chang, X.; Liu, J.; Luo, M.; Li, Z.; Yao, L.; Hauptmann, A. TN-ZSTAD: Transferable Network for Zero-Shot Temporal Activity Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 3848–3861.

- Heinzler, R.; Piewak, F.; Schindler, P.; Stork, W. CNN-Based Lidar Point Cloud De-Noising in Adverse Weather. IEEE Robot. Autom. Lett. 2020, 5, 2514–2521.

- Seppänen, A.; Ojala, R.; Tammi, K. 4DenoiseNet: Adverse Weather Denoising from Adjacent Point Clouds. IEEE Robot. Autom. Lett. 2022, 8, 456–463.

- Li, M.; Huang, P.-Y.; Chang, X.; Hu, J.; Yang, Y.; Hauptmann, A. Video Pivoting Unsupervised Multi-Modal Machine Translation. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 3918–3932.

- Park, J.-I.; Park, J.; Kim, K.S. Fast and Accurate Desnowing Algorithm for LiDAR Point Clouds. IEEE Access 2020, 8, 160202–160212.

- Wang, W.; You, X.; Chen, L.; Tian, J.; Tang, F.; Zhang, L. A Scalable and Accurate De-Snowing Algorithm for LiDAR Point Clouds in Winter. Remote Sens. 2022, 14, 1468.

- Le, M.-H.; Cheng, C.-H.; Liu, D.-G.; Nguyen, T.-T. An Adaptive Group of Density Outlier Removal Filter: Snow Particle Removal from LiDAR Data. Electronics 2022, 11, 2993.

- Roriz, R.; Campos, A.; Pinto, S.; Gomes, T. DIOR: A Hardware-Assisted Weather Denoising Solution for LiDAR Point Clouds. IEEE Sens. J. 2022, 22, 1621–1628.
