| Version | Created by | Modification | Content Size | Created at |
|---|---|---|---|---|
| 1 | Esma Dilek | -- | 3523 | 2023-03-20 15:01:45 |
| 2 | Peter Tang | Meta information modification | 3523 | 2023-03-21 02:29:28 |

As technology continues to develop, computer vision (CV) applications are becoming increasingly widespread in the intelligent transportation systems (ITS) context. These applications are developed to improve the efficiency of transportation systems, increase their level of intelligence, and enhance traffic safety. Advances in CV play an important role in solving problems in the fields of traffic monitoring and control, incident detection and management, road usage pricing, and road condition monitoring, among many others, by providing more effective methods.

1. Introduction

- CV applications in the field of ITS, along with the methods used, datasets, performance evaluation criteria, and success rates, are examined in a holistic and comprehensive way.
- The problems and application areas addressed by CV applications in ITS are investigated.
- The potential effects of CV studies on the transportation sector are evaluated.
- The applicability, contributions, shortcomings, challenges, future research areas, and trends of CV applications in ITS are summarized.
- Suggestions are made that will aid in improving the efficiency and effectiveness of transportation systems, increasing their safety levels, and making them smarter through CV studies in the future.
- This research surveys over 300 studies that shed light on the development of CV techniques in the field of ITS. These studies have been published in journals listed in top electronic libraries and presented at leading conferences. The survey further presents recent academic papers and review articles that can be consulted by researchers aiming to conduct detailed analysis of the categories of CV applications.
- It is believed that this survey can provide useful insights for researchers working on the potential effects of CV techniques, the automation of transportation systems, and the improvement of the efficiency and safety of ITS.

2. Computer Vision Studies in the Field of ITS

2.1. Evolution of Computer Vision Studies

2.1.1. Handcrafted Techniques

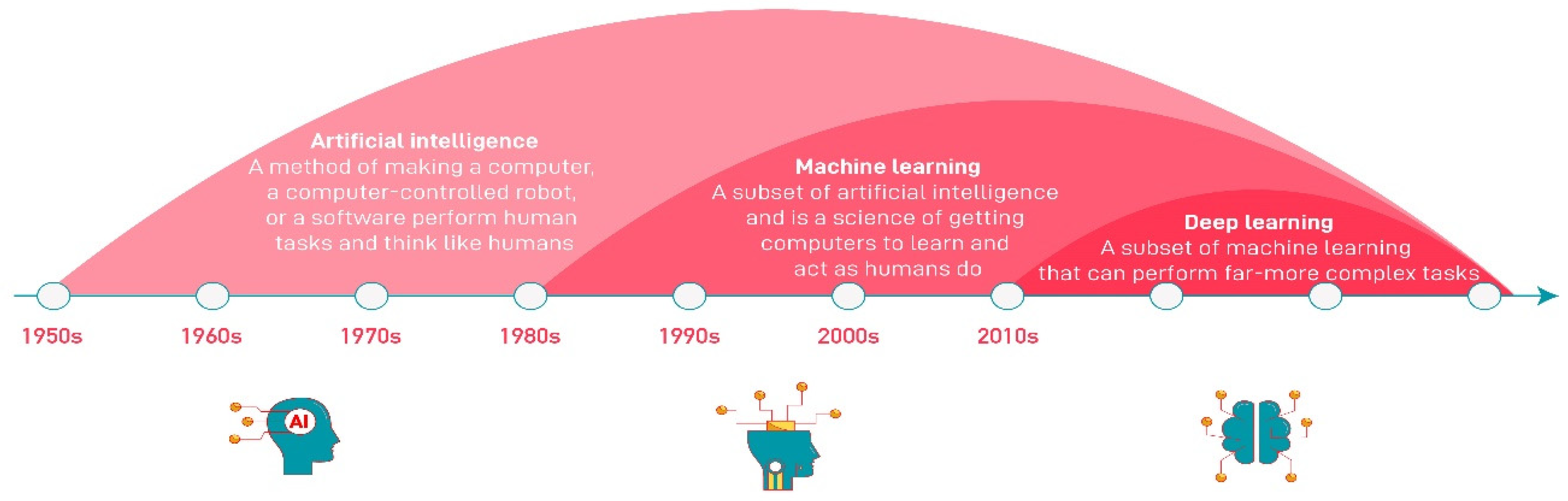

2.1.2. Machine Learning and Deep Learning Methods

2.1.3. Deep Neural Networks (DNNs)

2.1.4. Convolutional Neural Networks (CNNs)

2.1.5. Recurrent Neural Networks (RNNs)

2.1.6. Generative Adversarial Networks (GANs)

2.1.7. Other Methods

2.2. Computer Vision Functions

| Computer Vision Function | Application Areas | Sample Datasets | Performance Metrics |
|---|---|---|---|
| Object Detection | Problem: Drawing bounding boxes around the objects in an image or video and finding their coordinates | COCO, Cityscapes, ImageNet, LISA, GTSDB (German Traffic Sign Detection Benchmark), Pascal VOC, CIFAR-10/CIFAR-100 | mAP (mean average precision), Accuracy, Precision, Recall, AP (average precision), RMSE (root mean squared error) |
| Object Segmentation | Problem: Classifying the pixels of the objects in the image and thus obtaining an individual mask for each object | COCO, Cityscapes, BDD100K, KITTI, LISA | mAP |
| Image Enhancement | Problem: Restoring images that have been degraded by low lighting, haze, rain, and fog | REDS | PSNR (peak signal-to-noise ratio) |
| Object Tracking | Problem: Tracking objects in video | MOT19 | MOTA (multiple object tracking accuracy) |
| Event Identification/Prediction | Problem: Making sense of what happened in the video | UCF101, Kinetics-600 | Accuracy, mAP |
| Anomaly Detection | Problem: Detecting abnormal behavior in transportation systems | UCSD Ped1, UCSD Ped2, Avenue, UMN, UCF Real World, Street Scene, CIFAR-10/CIFAR-100, ShanghaiTech | AUC (area under curve), Accuracy, mAP |
| Density Analysis | Problem: Determining the density of pedestrians, passengers, or vehicles | Oxford 5K, UCSD, Mall, UCF_CC_50, ShanghaiTech, WorldExpo’10 | MAE (mean absolute error), MSE (mean squared error) |
| Image/Event Search | Problem: Retrieving certain vehicles, pedestrians, or license plates from existing visual archives | Oxford 5K, Pascal VOC | Accuracy |
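
Several of the performance metrics listed above have short closed-form definitions. The following is a minimal Python sketch of a few of them, using their commonly cited textbook forms rather than any implementation from the surveyed studies. The function names, the `(x1, y1, x2, y2)` box format, and the default peak value of 255 for 8-bit images are assumptions made for illustration. Intersection over union (IoU) does not appear in the table; it is included because mAP for object detection is usually computed by matching predicted and ground-truth boxes at an IoU threshold.

```python
import math


def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(tp, fp, fn):
    """Precision and recall from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def mae(pred, truth):
    """Mean absolute error, e.g. between predicted and true crowd counts."""
    return sum(abs(p - t) for p, t in zip(pred, truth)) / len(pred)


def mse(pred, truth):
    """Mean squared error over paired values."""
    return sum((p - t) ** 2 for p, t in zip(pred, truth)) / len(pred)


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB over flattened pixel values."""
    err = mse(restored, reference)
    if err == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / err)


def mota(false_negatives, false_positives, id_switches, num_gt):
    """Multiple object tracking accuracy: 1 - (FN + FP + IDSW) / GT,
    with all counts summed over every frame of the sequence."""
    return 1.0 - (false_negatives + false_positives + id_switches) / num_gt
```

For example, `precision_recall(8, 2, 4)` describes a detector with 8 correct detections, 2 false alarms, and 4 missed objects. Note that MOTA can be negative when the number of errors exceeds the number of ground-truth objects.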

3. Computer Vision Applications in Intelligent Transportation Systems
